International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 10 | Oct 2025 www.irjet.net p-ISSN: 2395-0072


Sindhiya R¹, Akash A², Eswar M S³

¹Assistant Professor, Dept. of Computer Science and Engineering, K.L.N. College of Engineering, Tamil Nadu, India

²Student, Dept. of Computer Science and Engineering, K.L.N. College of Engineering, Tamil Nadu, India

³Student, Dept. of Computer Science and Engineering, K.L.N. College of Engineering, Tamil Nadu, India

Abstract - Facial expressions play a crucial role in nonverbal communication and are an essential component of social interaction. Recognizing emotions through facial expressions has become a significant research area with applications in self-driving cars, entertainment, healthcare, and security systems. Emotion recognition systems (ERS) serve as a vital part of human–machine interaction, enabling computers to understand and respond to human feelings effectively. Recognizing emotions is a challenging task for machines due to variations in facial features, lighting conditions, and individual expressions. To address these challenges, the proposed Smart Emotion-Based Activity Recommendation System Using Convolutional Neural Network (CNN) employs deep learning for precise emotion classification. The system performs four main processes: face detection, preprocessing, feature extraction, and classification. A single CNN model is trained to detect emotions such as happiness, sadness, anger, fear, surprise, and neutral states from real-time camera feeds or uploaded images. Once the emotion is identified, Gemini AI provides personalized activity recommendations such as songs, movies, hobbies, or motivational quotes that align with the detected mood. This integration of CNN-based emotion detection and AI-driven activity suggestion enhances user engagement and promotes emotional well-being. The proposed system demonstrates high accuracy and reliability, making it suitable for real-world applications in mental health support, intelligent user interfaces, and adaptive entertainment systems.

Key Words: Emotion Recognition, Convolutional Neural Network, Gemini AI, Activity Recommendation, Real-Time Detection, Mental Health Support, Human-Computer Interaction.

Facial expressions play a crucial role in non-verbal communication and form an important aspect of social interaction. The ability to recognize human emotions through facial cues has become an emerging research area in the field of computer vision and artificial intelligence. Emotion recognition systems (ERS) help machines understand human feelings and react accordingly, enabling a more natural and effective form of Human-Machine Interaction (HMI). Applications of emotion recognition can be found in various domains such as autonomous vehicles, healthcare monitoring, entertainment, education, and security systems.

Emotion recognition through facial expressions is a challenging task because human emotions vary depending on environmental factors, lighting, pose, and cultural differences. Traditional machine learning approaches rely on handcrafted feature extraction techniques, which often limit accuracy and adaptability. With the evolution of deep learning, Convolutional Neural Networks (CNNs) have proven highly effective for image-based emotion classification due to their ability to automatically learn spatial features from facial images.

The proposed Smart Emotion-Based Activity Recommendation System uses a single CNN model for emotion recognition. The system captures facial expressions through a real-time camera feed or uploaded image, preprocesses the data, extracts features, and classifies the emotion into predefined categories such as happiness, sadness, anger, fear, surprise, and neutral. Once the emotion is identified, Gemini AI is used to recommend suitable activities such as songs, movies, hobbies, or motivational quotes to enhance the user’s emotional state. The combination of CNN-based emotion recognition and Gemini AI-driven recommendations creates a dynamic and interactive environment that supports mental well-being and personalized engagement. This research aims to contribute to the development of intelligent systems that understand human emotions and respond in a context-aware manner.

The main objective of this project is to design and implement a Smart Emotion-Based Activity Recommendation System capable of detecting human emotions in real time and suggesting suitable activities to improve user engagement and mental well-being. The system utilizes a single Convolutional Neural Network (CNN) model for facial emotion recognition using live webcam feeds or uploaded images, accurately classifying emotions such as happiness, sadness, anger, fear, surprise, and neutral. Once the emotion is identified, Gemini AI is integrated to provide personalized recommendations such as songs, movies, hobbies, or motivational quotes that correspond to the detected emotional state. The scope of this project extends to creating an intelligent and interactive human–computer interface that combines deep learning and generative AI for emotion-aware personalization. The system can be deployed as a web or desktop application and has potential applications in mental health monitoring, entertainment, education, and user experience enhancement. It also provides a foundation for future development in affective computing, where multimodal emotion recognition and adaptive AI-based recommendation systems can be integrated to build emotionally intelligent digital environments.

1. Hybrid Deep Learning Model for Emotion Recognition Using Facial Expressions

Author: Garima Verma, Hemraj Verma – Springer, 2020

Summary: This study proposed a two-stage CNN model for facial emotion recognition. The primary CNN classified emotions into Happy and Sad, and the secondary CNN refined them into Surprise, Neutral, Anger, and Fear. The model was evaluated on FER2013 and JAFFE datasets with preprocessing and dropout regularization.

Merits: Achieved very high accuracy (97.07% on FER2013 and 94.12% on JAFFE), demonstrating the model’s strength in distinguishing complex facial expressions.

Demerits: Requires higher computation due to two CNN stages, making it less efficient for lightweight real-time deployment.

2. Activity Recommendation System Based on Facial and Speech Emotion Recognition Using CNN

Author: Rohit Bari, Vaishnavi Belsare et al. – IJSREM, 2022

Summary: The study developed a CNN for facial emotion recognition combined with speech emotion detection. The multimodal approach improves prediction accuracy and is used to recommend real-time activities based on detected emotions.

Merits: Provides richer and more accurate activity suggestions by integrating both facial and speech signals.

Demerits: System performance is sensitive to background noise, reducing accuracy in real-world environments.

3. Emotion-Based Music Recommendation System Using VGG16-CNN Architecture

Author: B. Kranthi Kiran et al. – IJRASET, 2024

Summary: This work used transfer learning with VGG16-CNN for facial emotion detection. Detected emotions were mapped to playlists for personalized music recommendations.

Merits: Transfer learning improves accuracy and reduces training time, enabling reliable emotion recognition.

Demerits: The system is limited to music recommendations and lacks versatility for suggesting other activities.

4. Facial Emotion Detection Using CNN and OpenCV

Author: R. M. R. Raut, S. N. Deshmukh – IJERT, 2020

Summary: A lightweight CNN model integrated with OpenCV for real-time webcam-based emotion detection was proposed. The model is trained on the FER2013 dataset and designed for fast deployment.

Merits: Lightweight, fast, and easily integrable with applications, suitable for real-time systems.

Demerits: Limited accuracy (~65–75% on FER2013) and reduced robustness under poor lighting or occluded faces.

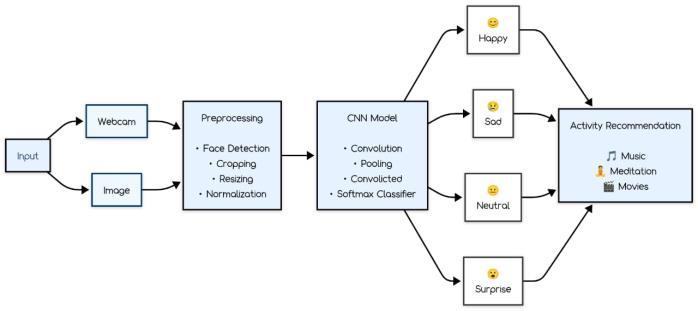

3. METHODOLOGY

The proposed Smart Emotion-Based Activity Recommendation System combines deep learning-based facial emotion recognition with Gemini AI to provide personalized activity suggestions. The system is implemented as a web-based application that supports real-time webcam input as well as image uploads. The methodology is divided into the following key steps:

i. Image Acquisition:

The system captures input through a live webcam feed or an uploaded image. For real-time emotion detection, the webcam continuously streams frames that are processed by the system for face detection.

ii. Face Detection and Preprocessing:

Faces are detected using the Haar Cascade classifier. Detected facial regions are converted to grayscale and resized to a standardized dimension to ensure consistency for the CNN model. Preprocessing also includes normalization and noise reduction to improve model accuracy.
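The preprocessing described in this step can be sketched in a few lines. The following is a minimal, dependency-free illustration and not the authors' implementation: the 48x48 target size is an assumption (the paper does not state the dimension; 48x48 matches the FER2013 image size), all function names are hypothetical, and noise reduction is omitted. In a real pipeline these operations would typically be delegated to OpenCV.

```python
def to_grayscale(rgb_rows):
    """Convert rows of (R, G, B) pixels to luminance values (BT.601 weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_rows]


def resize_nearest(gray_rows, size):
    """Nearest-neighbour resize of a 2-D grid to size x size."""
    height, width = len(gray_rows), len(gray_rows[0])
    return [[gray_rows[y * height // size][x * width // size]
             for x in range(size)]
            for y in range(size)]


def normalize(gray_rows):
    """Scale 0..255 intensities to the range 0.0..1.0."""
    return [[value / 255.0 for value in row] for row in gray_rows]


def preprocess_face(rgb_rows, size=48):
    """Grayscale -> resize -> normalize (size=48 is an assumed CNN input size)."""
    return normalize(resize_nearest(to_grayscale(rgb_rows), size))


# Usage: a dummy 96x96 mid-gray face crop standing in for a detected face region.
face_crop = [[(128, 128, 128)] * 96 for _ in range(96)]
cnn_input = preprocess_face(face_crop)
print(len(cnn_input), len(cnn_input[0]))
```

Keeping each stage as a separate function makes it straightforward to swap in a library routine for any one of them without touching the others.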

iii. Emotion Classification Using CNN:

A single Convolutional Neural Network (CNN) model is employed for emotion recognition. The CNN comprises convolutional layers with ReLU activations, max-pooling layers for dimensionality reduction, dropout layers to prevent overfitting, and fully connected layers for classification. The model is trained on standard datasets and optimized for detecting six basic emotions: happiness, sadness, anger, fear, surprise, and neutral.
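To see how convolution and max-pooling layers reduce dimensionality, recall that a layer with kernel size k, stride s, and padding p maps an input of spatial size n to floor((n + 2p - k) / s) + 1. The sketch below traces that formula through a hypothetical layer stack. The 48x48 input, the kernel sizes, and the number of layers are illustrative assumptions, since the paper does not list the exact architecture.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_feature_map(input_size, layers):
    """Return the spatial size after each (kernel, stride, padding) layer."""
    sizes = [input_size]
    for kernel, stride, padding in layers:
        sizes.append(conv_output_size(sizes[-1], kernel, stride, padding))
    return sizes


# Hypothetical stack: conv 3x3 -> max-pool 2x2 -> conv 3x3 -> max-pool 2x2.
hypothetical_layers = [(3, 1, 0), (2, 2, 0), (3, 1, 0), (2, 2, 0)]
sizes = trace_feature_map(48, hypothetical_layers)
print(sizes)  # [48, 46, 23, 21, 10]
```

Under these assumptions the fully connected layers would receive a 10x10 feature map per channel, which is what makes the pooling stages an effective reduction step before classification.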

iv. Attribute Extraction:

In addition to emotion, the system extracts other facial attributes such as age, gender, and confidence scores using DeepFace. These attributes enhance the context for personalized recommendations.

v. Activity Recommendation Using Gemini AI:

The extracted emotion and attributes are passed to Gemini AI. Using a generative prompt, Gemini AI provides personalized suggestions including activities, music, movies, and motivational quotes tailored to the detected emotional state.
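The paper does not reproduce the generative prompt itself, so the following is only a sketch of how such a prompt might be assembled from the detected emotion and attributes. The function name `build_prompt`, the wording of the prompt, and the four output labels are assumptions made for illustration; no Gemini API call is shown.

```python
def build_prompt(emotion, gender=None, age=None):
    """Compose a text prompt from the detected emotion and optional attributes."""
    details = [f"detected emotion: {emotion}"]
    if gender is not None:
        details.append(f"gender: {gender}")
    if age is not None:
        details.append(f"approximate age: {age}")
    return (
        "A user was analysed by a facial emotion recognition system ("
        + ", ".join(details)
        + "). Suggest one item for each of the following, "
        "one per line, using exactly these labels:\n"
        "Activity:\nMusic:\nMovie:\nQuote:"
    )


# Usage with hypothetical detection results.
prompt = build_prompt("sad", gender="Woman", age=27)
print(prompt)
```

Asking for fixed labels is a design choice that makes the free-text reply easier to split into the four fields shown in the interface.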

vi. Display and Feedback:

The front-end interface displays the detected emotion and the corresponding activity recommendations in real time. This provides an interactive experience that adapts dynamically to changes in the user’s facial expressions.

vii. Deployment:

The system is implemented using Flask, OpenCV, DeepFace, and Gemini AI. It supports a seamless pipeline from image acquisition to emotion detection and activity recommendation, ensuring real-time responsiveness and accurate user engagement.

4. SYSTEM DESIGN

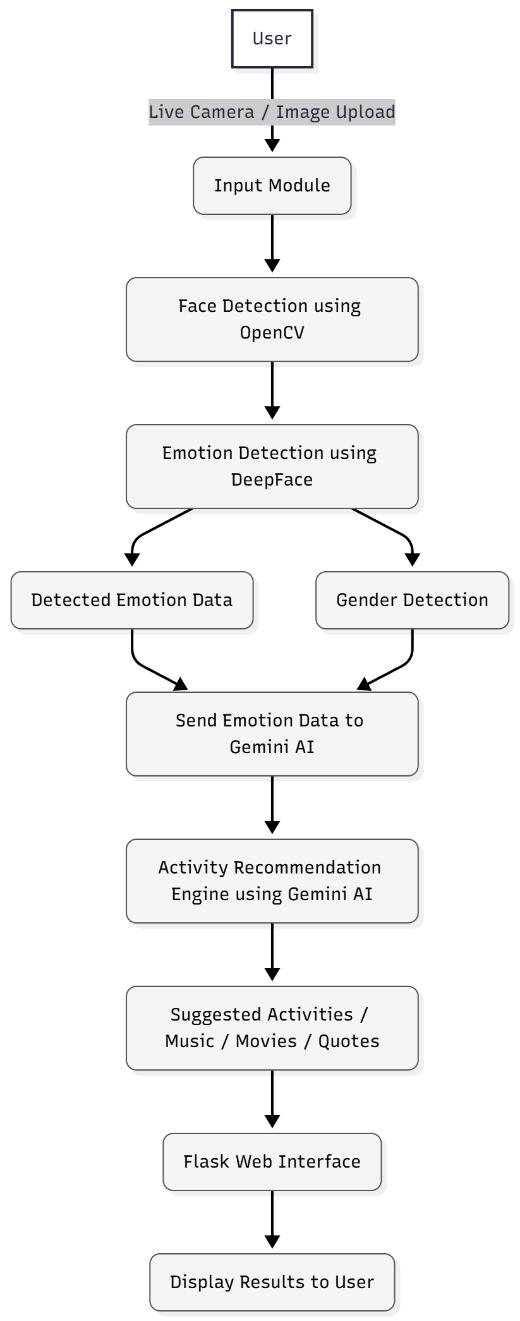

The proposed system is a Smart Emotion-Based Activity Recommendation System that detects a user's emotion through facial expressions and suggests personalized activities, music, movies, and motivational quotes. The system is implemented as a web-based application using Python (Flask framework), OpenCV, DeepFace, and Google Gemini AI. The design emphasizes real-time emotion detection via webcam and an image upload mode for flexibility.

4.1. System Architecture Overview

The system consists of the following major modules:

a) User Interface Module (Front-End)

Provides two modes for the user:

o Camera Mode: Live webcam feed for real-time emotion detection.

o Upload Mode: Allows users to upload an image for emotion analysis.

Built using HTML, CSS, and JavaScript templates in Flask.

Displays detected emotion, gender, and recommended activities to the user.

b) Web Server Module (Back-End)

Built using Flask.

Handles HTTP requests from the front-end, including mode selection, video feed, and image upload.

Initializes and manages the webcam for live detection.

Returns JSON responses containing detected emotions and suggested activities.

c) Emotion Detection Module

Uses OpenCV Haar Cascade Classifier for face detection.

Uses DeepFace library to analyze the detected face for:

o Dominant Emotion (e.g., happy, sad, angry)

o Gender

o Age

o Confidence level of detection

Handles errors if no face is detected or frame capture fails.
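As an illustration of how the fields listed for this module might be reduced to a single summary, the sketch below works on a plain dictionary of per-emotion scores. The dictionary layout, the function name `summarize_face`, and the choice to report the top emotion's score as the confidence level are all assumptions; the actual output format of DeepFace depends on the library version and is not reproduced here.

```python
def summarize_face(analysis):
    """Pick the dominant emotion and attach attributes, or report a missing face."""
    if not analysis or not analysis.get("emotion"):
        # Covers both "no face detected" and a failed frame capture.
        return {"error": "No face detected"}
    scores = analysis["emotion"]
    dominant = max(scores, key=scores.get)
    return {
        "emotion": dominant,
        "confidence": round(scores[dominant], 1),
        "gender": analysis.get("gender"),
        "age": analysis.get("age"),
    }


# Usage with a hypothetical analysis result (scores in percent).
sample = {
    "emotion": {"happy": 91.4, "neutral": 6.1, "sad": 2.5},
    "gender": "Man",
    "age": 24,
}
print(summarize_face(sample))
print(summarize_face(None))
```

Returning an error dictionary instead of raising keeps the calling code simple when frames without a face arrive from a live feed.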

d) Activity Recommendation Module

Integrates Google Gemini AI (Gemini 1.5) through a custom Python module (bard.py).

Sends detected emotion, gender, and other face attributes to Gemini.

Gemini generates personalized suggestions, including:

o Recommended activity

o Music suggestion

o Movie suggestion

o Motivational quote

Fig -1: Architecture Diagram
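The four suggestion types produced by the Activity Recommendation Module have to be separated before they can be shown individually. The sketch below assumes, purely for illustration, that the model replies with one labelled line per field ("Activity:", "Music:", "Movie:", "Quote:"); the paper does not specify the reply format, and `parse_suggestions` is a hypothetical helper, not part of bard.py.

```python
FIELDS = ("activity", "music", "movie", "quote")


def parse_suggestions(reply):
    """Split a labelled text reply into the four suggestion fields."""
    suggestions = {field: None for field in FIELDS}
    for line in reply.splitlines():
        # Split on the first colon only, so colons inside a quote are preserved.
        label, separator, value = line.partition(":")
        key = label.strip().lower()
        if separator and key in suggestions and value.strip():
            suggestions[key] = value.strip()
    return suggestions


# Usage with a hypothetical reply for a "happy" user.
reply = (
    "Activity: Go for a walk in the park to maintain positivity.\n"
    "Music: Upbeat pop songs.\n"
    "Movie: Light-hearted comedy movies.\n"
    "Quote: Happiness is not something ready-made. It comes from your actions.\n"
)
print(parse_suggestions(reply))
```

Fields that are missing from the reply stay `None`, so the front-end can decide whether to hide them or request a new suggestion.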

4.2. Data Flow

i. The user accesses the web interface and selects a mode (Camera or Upload).

ii. The server either initializes the webcam (Camera Mode) or processes the uploaded image (Upload Mode).

iii. The Emotion Detection Module detects faces and extracts emotions, gender, age, and confidence.


iv. The Activity Recommendation Module sends this data to Gemini AI, which returns personalized suggestions.

v. The server sends JSON data to the front-end, which displays:

Detected emotion and gender

Suggested activity, music, movie, and motivational quote
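The data flow above can be summarised as a single request handler. The sketch below is an assumption-laden outline, not the deployed Flask code: `handle_request`, `fake_detect`, and `fake_recommend` are hypothetical names, the detection and recommendation modules are replaced by stubs, and the JSON keys are chosen for illustration.

```python
import json


def handle_request(mode, image, detect, recommend):
    """Run detection and recommendation, returning a JSON string for the front-end."""
    if mode not in ("camera", "upload"):
        return json.dumps({"error": f"Unknown mode: {mode}"})
    face = detect(image)            # step iii: emotion, gender, age, confidence
    if face is None:
        return json.dumps({"error": "No face detected"})
    suggestions = recommend(face)   # step iv: suggestions from the generative model
    payload = {"emotion": face["emotion"], "gender": face["gender"]}
    payload.update(suggestions)     # step v: merged payload sent to the front-end
    return json.dumps(payload)


def fake_detect(image):
    """Stub standing in for the Emotion Detection Module."""
    if not image:
        return None
    return {"emotion": "happy", "gender": "Man", "age": 24, "confidence": 91.4}


def fake_recommend(face):
    """Stub standing in for the Activity Recommendation Module."""
    return {
        "activity": "Go for a walk in the park.",
        "music": "Upbeat pop songs.",
        "movie": "Light-hearted comedy movies.",
        "quote": "Happiness is not something ready-made.",
    }


print(handle_request("upload", b"image-bytes", fake_detect, fake_recommend))
```

Passing the two modules in as functions keeps the handler testable without a webcam or network access.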

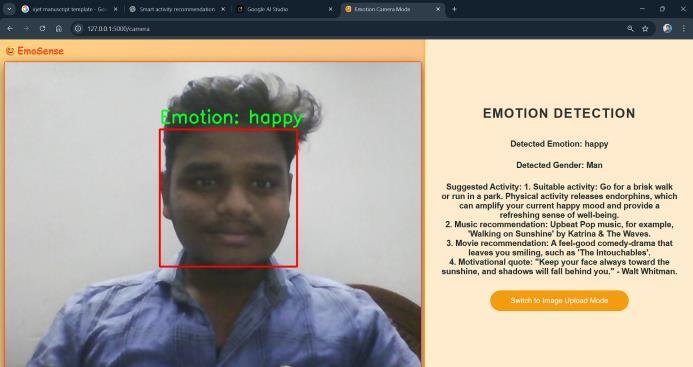

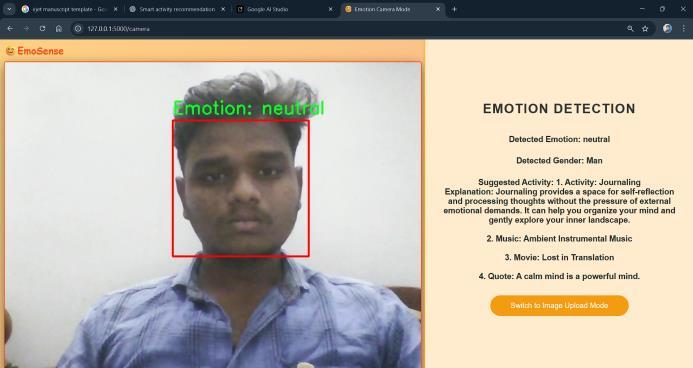

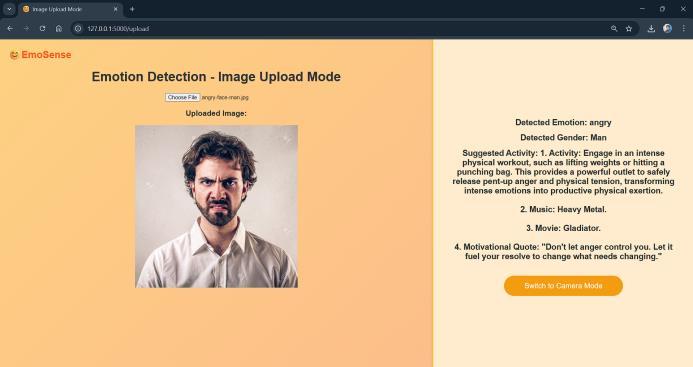

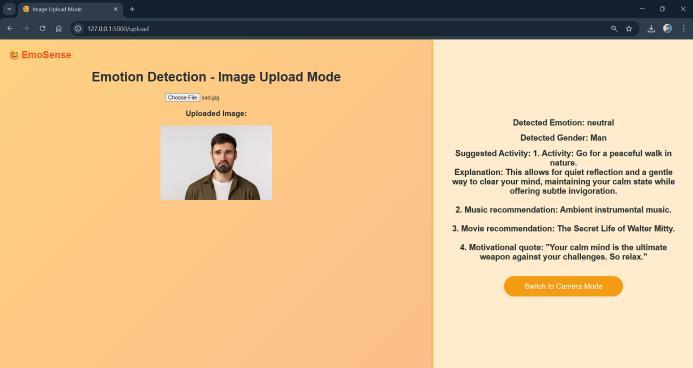

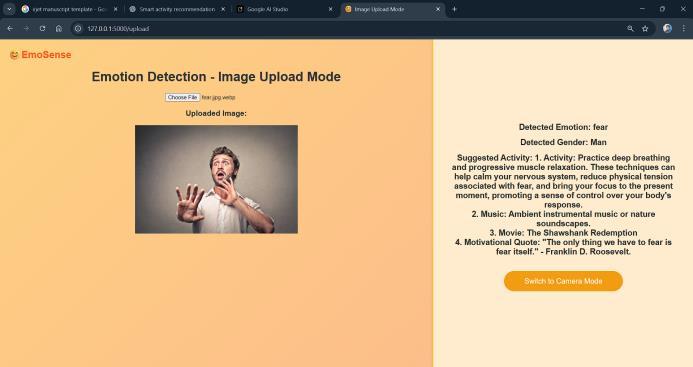

The system was tested on multiple subjects under varying lighting conditions. The key observations include:

Emotion Detection Accuracy: DeepFace accurately recognized basic emotions such as happiness, sadness, anger, surprise, fear, disgust, and neutral states with high reliability.

Gender Recognition: Gender identification was performed accurately for both live and uploaded images.

Activity Recommendations: Gemini AI provided relevant activity suggestions, music, movie recommendations, and motivational quotes based on the detected emotion. For example:

o Emotion Detected: Happy

o Suggested Activity: Go for a walk in the park to maintain positivity.

o Music Recommendation: Upbeat pop songs.

o Movie Recommendation: Light-hearted comedy movies.

o Motivational Quote: “Happiness is not something ready-made. It comes from your actions.”

The system successfully displayed the live video feed with bounding boxes around detected faces and real-time updates of detected emotions and suggested activities. Uploaded images also provided accurate detection and recommendations.

Performance Metrics:

Real-time camera mode latency: ~0.5–1.5 seconds per frame.

Image upload processing time: ~1–2 seconds per image.

User-friendly interface allowed seamless switching between camera and upload modes.
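The paper does not describe how the latency figures were obtained. One plausible way to collect per-frame timings is sketched below; `measure_latency` is a hypothetical helper, and the `time.sleep` call merely stands in for the real detection and recommendation work.

```python
import statistics
import time


def measure_latency(process, frames):
    """Time process(frame) for each frame and summarise the results in seconds."""
    timings = []
    for frame in frames:
        start = time.perf_counter()
        process(frame)
        timings.append(time.perf_counter() - start)
    if not timings:
        raise ValueError("no frames were processed")
    return {
        "frames": len(timings),
        "min": min(timings),
        "mean": statistics.mean(timings),
        "max": max(timings),
    }


# Usage: a placeholder workload standing in for the per-frame pipeline.
stats = measure_latency(lambda frame: time.sleep(0.002), range(5))
print(stats)
```

Reporting the minimum, mean, and maximum together gives a range comparable to the "~0.5–1.5 seconds per frame" style of figure quoted above.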

In Camera Mode:


Image Upload Mode:

The proposed Smart Emotion-Based Activity Recommendation System effectively combines deep learning-based emotion detection and AI-powered activity recommendation to provide personalized suggestions. The system demonstrates:

High Accuracy: Reliable emotion and gender detection through DeepFace.

Personalization: Gemini AI generates tailored suggestions including activities, music, movies, and quotes.

Versatility: Both live camera and image upload modes are supported.

Practical Utility: Can be extended to mental health monitoring, mood-based content recommendation, and interactive human-computer interfaces.

Future work may focus on integrating multi-modal inputs such as voice or text-based emotion detection, improving latency, and expanding the recommendation database to include lifestyle, fitness, and educational activities.

[1] Garima Verma and Hemraj Verma, “Hybrid Deep Learning Model for Emotion Recognition Using Facial Expressions,” Springer Journal, 2020. DOI: 10.1007/s12626-020-00061-6.

[2] S. Agrawal and S. Choudhary, “Real-Time Emotion Detection using Deep Learning Techniques,” 2021 International Conference on Computer, Communication, and Electronics (ICCES), pp. 17–22, 2021. DOI: 10.1109/ICCES54031.2021.96861082.

[3] S. Minooe, A. Abdar, et al., “Deep Facial Expression Recognition: A Survey,” IEEE Transactions on Affective Computing, Vol. 12, No. 3, 2021. DOI: 10.1109/TAFFC.2021.3075993.

[4] A. B. Hassan, “Emotion Recognition Using CNN and RNN with SVM Classifier,” International Journal of Advanced Computer Science and Applications (IJACSA), Vol. 11, No. 5, 2020.

[5] R. M. Raut and S. N. Deshmukh, “Facial Emotion Detection using CNN and OpenCV,” International Journal of Engineering Research & Technology (IJERT), Vol. 9, Issue 06, June 2020.

[6] Agung E. S., “Image-based Facial Emotion Recognition Using Deep Learning Models,” Scientific Reports, 2024.

[7] Mellouk W., “Facial Emotion Recognition Using Deep Learning,” Procedia Computer Science, 2020.

[8] Ganesan P., “Facial Expression Recognition Using SVM With CNN and Handcrafted Features,” International Journal of Recent Technology and Engineering (IJRTE), Vol. 8, Issue 4, 2019.

[9] Kim J. C., “Hybrid Approach for Facial Expression Recognition Using CNN and SVM,” Applied Sciences, 2022.

[10] Singh R., “Facial Expression Recognition in Videos Using Hybrid CNN and ConvLSTM,” Frontiers in Computer Science, 2023.