International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 06 | Jun 2025 www.irjet.net p-ISSN: 2395-0072


Prof. Lakshminarayana P¹, Ms. Chandana²

¹Associate Professor, BMS College of Engineering, Dept of Computer Applications, Bengaluru
²Student, BMS College of Engineering, Dept of Computer Applications, Bengaluru

Abstract - Skin cancer is one of the most prevalent and potentially fatal cancers worldwide. Early and accurate diagnosis plays a critical role in effective treatment and improved survival rates. In this research, we present a deep learning-based skin cancer detection system that uses dermatoscopic images from the publicly available ISIC dataset. The images were preprocessed using grayscale conversion, CLAHE (Contrast Limited Adaptive Histogram Equalization) for contrast enhancement, Gaussian blurring for noise reduction, and Canny edge detection with edge overlays to highlight critical features. To balance the classes and improve model generalization, data augmentation techniques such as rotation, horizontal flipping, and zooming were applied. The dataset was then split into training (80%), validation (10%), and test (10%) sets.

We implemented and trained three deep learning models, EfficientNet, MobileNet, and ResNet50, using TensorFlow and Keras, with hyperparameter tuning and the Adam optimizer. EfficientNet achieved the highest validation accuracy (92.44%), followed by MobileNet (88.89%) and ResNet50 (84.22%). The corresponding F1-scores indicate strong class-wise performance. The system also incorporates Explainable AI (XAI) techniques to visualize model decisions, which increases interpretability and trust. A user-friendly web application was developed with HTML, CSS, and JavaScript on the frontend and Flask on the backend. Users can upload skin lesion images and receive instant predictions of the skin cancer type, along with treatment guidelines, precautionary measures, and helpful resources. This project demonstrates the effectiveness of deep learning for automated skin cancer diagnosis and provides a practical tool for early screening, especially in remote or underserved areas.

Key Words: Skin Cancer, Explainable AI, Deep Learning, Web Technologies

1. INTRODUCTION

Skin cancer is one of the most prevalent cancers globally, with millions of new cases diagnosed each year. It arises from the uncontrolled growth of abnormal skin cells, often triggered by excessive exposure to ultraviolet (UV) radiation from the sun or from artificial sources such as tanning beds. Early detection and accurate classification are crucial for effective treatment and significantly improve the chances of patient survival. However, traditional diagnostic methods depend heavily on expert dermatologists and histopathological analysis. These methods can be time-consuming and subjective, and they are not always accessible, especially in rural or underdeveloped regions.

With the advancement of Artificial Intelligence (AI) and Deep Learning (DL), computer-aided diagnosis (CAD) systems have gained popularity as tools that support medical professionals in early skin cancer detection. In this project, we propose an automated deep learning-based system that classifies various types of skin cancer from dermatoscopic images in the ISIC dataset. The dataset was enhanced through balancing and augmentation to address class imbalance and improve model performance. Preprocessing included grayscale conversion, CLAHE for contrast enhancement, Gaussian blur for noise reduction, and Canny edge detection with a red overlay to emphasize lesion boundaries. We trained and evaluated three state-of-the-art convolutional neural network (CNN) architectures, EfficientNet, MobileNet, and ResNet50, using TensorFlow and Keras. The models were optimized with the Adam optimizer and trained for 30 epochs.

To make the model's decision-making process more transparent, Explainable AI (XAI) techniques were integrated, enabling visualization of the regions that most influenced each prediction. Finally, a fully functional web application was developed using Flask (backend) and HTML, CSS, and JavaScript (frontend). It allows users to upload skin lesion images and receive instant predictions along with treatment suggestions, precautions, and useful medical resources. The system aims to support rapid, reliable skin cancer screening and to serve as a diagnostic aid for healthcare professionals and individuals alike.


Skin cancer, one of the most common and deadly malignancies in the world, must be detected early to be treated effectively and to improve patient outcomes. However, access to diagnostic facilities and dermatological expertise remains limited, especially in impoverished and remote areas. Manual identification is time-consuming and labor-intensive, and it suffers from inter-observer variability. An automated, accurate, and widely accessible diagnostic tool is therefore increasingly needed to support the early identification and categorization of skin cancer.

Recent developments in deep learning and artificial intelligence have greatly improved the accuracy and reliability of computer-aided diagnostic (CAD) systems for skin cancer detection. Several studies have investigated different deep learning architectures, datasets, and optimization strategies to improve classification performance. Three studies that are particularly relevant to building a deep learning-based skin cancer detection system are reviewed below.

One study [1] combines Deep Convolutional Neural Networks (DCNNs) and transfer learning to classify three types of skin lesions: melanoma, common nevus, and atypical nevus. It used 200 dermatoscopic images from the PH2 dataset (40 melanoma, 80 common nevus, and 80 atypical nevus). Because the dataset is small, the authors applied a comprehensive augmentation scheme that rotated each image at several different angles, increasing the volume of data tenfold.

To further enhance classification performance, transfer learning was applied using a pre-trained AlexNet model. The final softmax classification layer was retrained on the target classes, while the convolutional layers retained their pre-trained weights. Experiments with and without augmentation showed a significant improvement when augmentation was applied. On the augmented dataset, the model achieved 98.61% accuracy, 98.33% sensitivity, 98.93% specificity, and 97.73% precision, outperforming previous methods. This research demonstrated the benefit of data augmentation and transfer learning for skin cancer classification.
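All four figures reported for [1] are derived from the counts in a confusion matrix. The short Python sketch below gives the standard definitions for a single positive class. The counts in the usage line are made-up values for illustration, not results from [1]. For a three-class problem such as [1], these quantities are typically computed one-vs-rest for each class and then averaged. How [1] aggregated them is not stated here.

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard metrics from a binary confusion matrix (positive = class of interest)."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),  # recall, true-positive rate
        "specificity": tn / (tn + fp),  # true-negative rate
        "precision": tp / (tp + fp),    # positive predictive value
    }


# Hypothetical counts, for illustration only.
print(binary_metrics(tp=45, fp=5, tn=40, fn=10))
```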

In another study [2], researchers used 2,357 dermoscopic images provided by the International Skin Imaging Collaboration (ISIC) to differentiate between malignant (melanoma) and benign (moles) skin tumors. They proposed a CNN architecture consisting of three convolutional blocks with ReLU activations and max pooling, followed by two fully connected layers with softmax activation for final classification.

The input images were resized to 64×64 pixels. The model was trained with the Adam optimizer using a learning rate of 2e-4, a batch size of 32, a dropout rate of 0.5, and 100 epochs. The proposed CNN distinguished reliably between malignant and benign lesions. Because it extracts deep features through its convolutional layers, the model offered a relatively lightweight yet effective structure for image classification.

This work emphasizes the utility of tailored CNN architectures in skin lesion analysis and shows how even simplified models can yield competent performance with proper training and optimization.
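The Keras sketch below illustrates the kind of compact architecture described in [2]. It builds three convolution and max-pooling blocks followed by two fully connected layers, and it uses the reported settings: 64×64 input, a dropout rate of 0.5, and Adam with a learning rate of 2e-4. Some details are not given in the description, so they are assumptions: the filter counts (32, 64, 128), the 3×3 kernels, and the 128-unit hidden layer. The function name is also hypothetical.

```python
import tensorflow as tf


def build_compact_cnn(input_size: int = 64, num_classes: int = 2) -> tf.keras.Model:
    """Three conv blocks + two dense layers, in the spirit of [2].

    Filter counts, kernel size and the hidden width are illustrative assumptions.
    """
    model = tf.keras.Sequential([tf.keras.Input(shape=(input_size, input_size, 3))])
    for filters in (32, 64, 128):  # assumed filter counts, one conv block each
        model.add(tf.keras.layers.Conv2D(filters, 3, padding="same", activation="relu"))
        model.add(tf.keras.layers.MaxPooling2D(2))
    model.add(tf.keras.layers.Flatten())
    model.add(tf.keras.layers.Dense(128, activation="relu"))  # first FC layer (assumed width)
    model.add(tf.keras.layers.Dropout(0.5))                   # dropout rate reported in [2]
    model.add(tf.keras.layers.Dense(num_classes, activation="softmax"))  # second FC layer
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=2e-4),  # reported learning rate
        loss="categorical_crossentropy",  # expects one-hot labels
        metrics=["accuracy"],
    )
    return model


model = build_compact_cnn()
model.summary()
```

Training would then call `model.fit(...)` with `batch_size=32` and `epochs=100`, matching the reported settings.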

Another study [3] presents a systematic literature review that categorizes and evaluates deep learning approaches for skin cancer detection. The review followed a structured framework encompassing planning, selection, evaluation, and results generation. From an initial pool of 1,483 research papers, a final set of 51 high-quality papers was selected based on relevance, quality, and adequacy in addressing key research questions.

The review highlighted the prominent role of Convolutional Neural Networks (CNNs) and Artificial Neural Networks (ANNs) in skin lesion classification. It also explored the effectiveness of other machine learning models, such as K-Nearest Neighbors (KNN) and Generative Adversarial Networks (GANs). Prior works cited several specific techniques as impactful tools: the Mumford–Shah segmentation algorithm, the Gray-Level Co-occurrence Matrix (GLCM) for feature extraction, and the Levenberg–Marquardt optimization algorithm.

The review reported that ANN-based approaches could achieve high specificity (up to 95.1%) and classification accuracy (over 91% in some studies). The study underscored the importance of robust datasets, well-structured neural architectures, and explainability in developing reliable skin cancer detection systems.

The collective insights from these studies highlight several critical components for building an effective skin cancer detection system:

Data Augmentation is essential when dealing with limited datasets, as it significantly boosts model generalization and accuracy.

Transfer Learning using pre-trained models such as AlexNet, ResNet, or EfficientNet can accelerate training and improve performance on small datasets.


Custom CNN Architectures with appropriate hyperparameter tuning (learning rate, dropout, batch size) can deliver strong classification outcomes.

Systematic Reviews provide valuable benchmarks and help identify the most promising techniques and common pitfalls in the field.

These findings informed the methodology and design choices of our proposed system, which integrates state-of-the-art CNN models, robust preprocessing, and explainable AI (XAI) components for accurate and interpretable skin cancer classification.


Table -1: Summary of Key Drawbacks across Existing Systems

| Issue | Description |
|---|---|
| Limited Dataset | Most studies rely on small datasets (like PH2), increasing the risk of overfitting and poor generalization. |
| Simple/Outdated Models | Some models (e.g., AlexNet, shallow CNNs) are outdated compared to modern architectures. |
| Lack of Multiclass Classification | Several systems only perform binary classification rather than distinguishing between multiple cancer types. |
| Inconsistent Evaluation | Performance comparison is difficult due to varying datasets, architectures, and evaluation metrics. |
| Minimal Focus on Real-time Deployment | Very few studies discuss deployment challenges, latency, or user interfaces for real-world usage. |

The skin cancer detection system is built on a deep learning-based image classification approach. It classifies skin lesion images into benign or malignant categories (or into multiple classes, if extended).

Fig-2: Relationship between different approaches in ML

The methodology comprises the following phases:

4.1

Dataset Source: Kaggle or ISIC archive

Data Format: RGB images of various skin conditions

Classes: Benign, Malignant (can be extended to include Melanoma, Nevus, Seborrheic Keratosis, etc.)

Image Dimensions: Resized to 224×224 pixels for compatibility with deep learning models.

Resizing: All images are resized to 224×224 to match the input dimensions of pretrained models such as ResNet or EfficientNet.

Grayscale Conversion (Optional): Used to reduce computational complexity (if needed).

CLAHE (Contrast Limited Adaptive Histogram Equalization):

Enhances contrast in dermoscopic images


Helps highlight lesion borders and patterns

Noise Removal:

A Gaussian filter is used to smooth the image and suppress noise and artifacts.

Edge Detection:

Canny edge detection is used to identify lesion boundaries

Normalization:

All pixel values are scaled to [0, 1] for faster and more stable model convergence

To prevent overfitting and improve generalization, the following transformations are applied during training:

Horizontal and vertical flipping, rotation (0–360°), zoom in/out, width and height shifting, and brightness and contrast adjustment.
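The transformations listed above map onto Keras preprocessing layers. In the sketch below, the factor values are assumptions chosen for illustration. `RandomRotation(0.5)` samples angles in [-180°, 180°], which covers the full 0–360° range. Brightness is expressed relative to inputs already scaled to [0, 1].

```python
import tensorflow as tf

# Training-time augmentation; every factor here is an illustrative assumption.
augment = tf.keras.Sequential(
    [
        tf.keras.layers.RandomFlip("horizontal_and_vertical"),
        tf.keras.layers.RandomRotation(0.5),          # fraction of 2*pi -> [-180 deg, 180 deg]
        tf.keras.layers.RandomZoom(0.2),              # zoom in or out by up to 20%
        tf.keras.layers.RandomTranslation(0.1, 0.1),  # height/width shift up to 10%
        tf.keras.layers.RandomBrightness(0.2, value_range=(0.0, 1.0)),
        tf.keras.layers.RandomContrast(0.2),
    ],
    name="augmentation",
)

batch = tf.random.uniform((2, 64, 64, 3))  # stand-in for a batch of normalized images
augmented = augment(batch, training=True)
```

These layers are active only when called with `training=True`. When placed at the front of a model, they leave validation and test images unchanged.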

4.4 Model Design and Training

Base Models Used:

EfficientNetB0: Lightweight yet powerful architecture; input size 224×224

ResNet50: Deeper model whose skip connections mitigate the vanishing gradient problem

Hybrid Model (ResNet + EfficientNet): Feature maps are extracted from both networks and concatenated before being fed into fully connected layers for classification

Training Parameters:

Optimizer: Adam; Loss Function: categorical cross-entropy (multiclass) or binary cross-entropy (binary); Epochs: 50–100; Batch Size: 32; Learning Rate: 0.0001; Validation Split: 20%
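The two loss functions listed above differ mainly in how the probabilities are produced: a softmax over K classes, or a single sigmoid output. The NumPy sketch below spells out their standard definitions. Clipping with a small epsilon mirrors what deep learning frameworks do to avoid log(0). The function names are illustrative.

```python
import numpy as np


def categorical_cross_entropy(y_true, y_prob, eps=1e-7):
    """Batch mean of -sum_k y_k * log(p_k) for one-hot targets and softmax outputs."""
    y_true = np.asarray(y_true, dtype=float)
    y_prob = np.clip(np.asarray(y_prob, dtype=float), eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_prob), axis=-1)))


def binary_cross_entropy(y_true, p, eps=1e-7):
    """Batch mean of -[y * log(p) + (1 - y) * log(1 - p)] for 0/1 targets and sigmoid outputs."""
    y_true = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)
    return float(np.mean(-(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p))))


# One three-class sample whose true class receives probability 0.8:
print(round(categorical_cross_entropy([[0, 1, 0]], [[0.1, 0.8, 0.1]]), 4))  # 0.2231
```

Only the probability assigned to the true class contributes. A confident correct prediction therefore yields a small loss, while a confident wrong one is penalized heavily.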

4.5 Model Evaluation

Confusion Matrix; Classification Report: Precision, Recall, F1-Score, Accuracy

Visualization Tools: Training vs. Validation Loss/Accuracy Curves

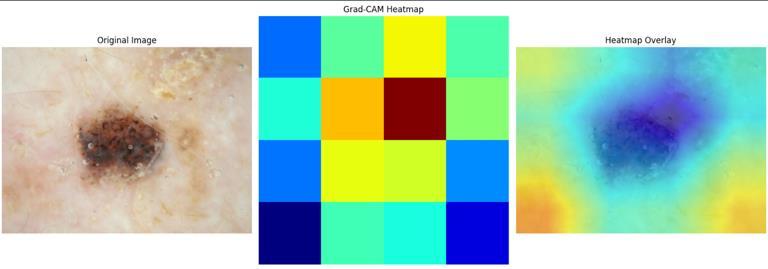

Grad-CAM or heatmaps to visualize model attention (Explainable AI)

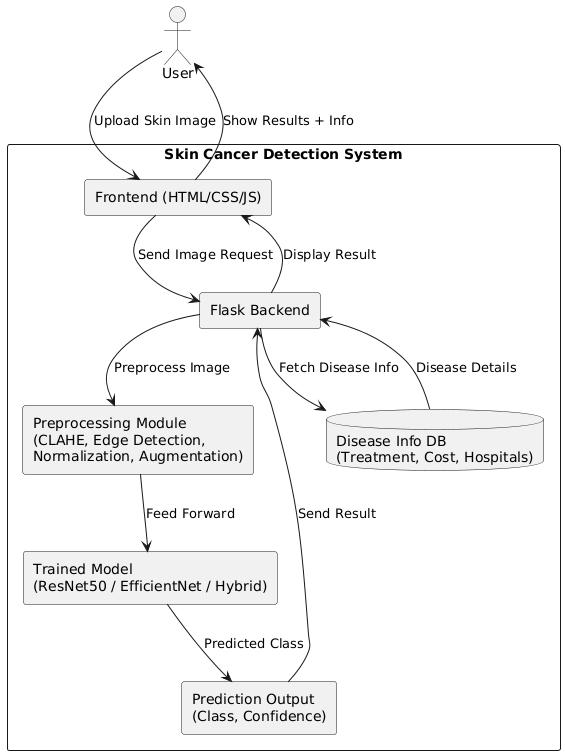
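Grad-CAM (gradient-weighted class activation mapping) weights each channel of a late convolutional feature map. Each weight is the average gradient of the class score with respect to that channel. The minimal TensorFlow sketch below follows the widely used Keras Grad-CAM recipe. `grad_cam` is a hypothetical helper. It assumes the chosen convolutional layer can be reached directly with `model.get_layer()`. If a Keras application backbone is wrapped as a nested sub-model, the heatmap would instead be computed on the backbone itself.

```python
import numpy as np
import tensorflow as tf


def grad_cam(model, image, last_conv_layer_name, class_index=None):
    """Return a Grad-CAM heatmap scaled to [0, 1] for one image of shape (H, W, C).

    Assumes `last_conv_layer_name` is reachable via model.get_layer().
    """
    grad_model = tf.keras.Model(
        model.input, [model.get_layer(last_conv_layer_name).output, model.output]
    )
    batch = tf.convert_to_tensor(image[np.newaxis, ...], dtype=tf.float32)
    with tf.GradientTape() as tape:
        conv_out, preds = grad_model(batch)
        if class_index is None:
            class_index = int(tf.argmax(preds[0]))      # explain the top prediction
        class_score = preds[:, class_index]
    grads = tape.gradient(class_score, conv_out)          # d(score) / d(feature map)
    weights = tf.reduce_mean(grads, axis=(0, 1, 2))       # one weight per channel
    cam = tf.reduce_sum(conv_out[0] * weights, axis=-1)   # weighted sum of channels
    cam = tf.nn.relu(cam)                                 # keep positive evidence only
    cam = cam / (tf.reduce_max(cam) + 1e-8)               # scale to [0, 1]
    return cam.numpy()
```

The returned map has the spatial size of the chosen feature map, for example 7×7 for the last convolutional block of EfficientNetB0 at 224×224 input. It is usually upsampled with `cv2.resize` and blended over the lesion image.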

4.6 Web Application Development

Frontend: HTML, CSS, and JavaScript, which allow the user to upload a skin lesion image

Backend: Flask (Python web framework), which receives the image, preprocesses it, and sends it to the trained model

Output: Predicted class and confidence score

Disease description, precautions, treatment cost, and expert recommendations

Components:

1. User Interface (image upload)

2. Flask Backend

3. Preprocessing Module

4. Trained Deep Learning Model

5. Result Interpretation Module

6. Database (optional) for disease details, precautions, and hospitals
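The Result Interpretation Module can be as simple as ranking the softmax probabilities and attaching the stored information for the top class. The sketch below uses a hypothetical `EXAMPLE_INFO` dictionary as a stand-in for the optional database. Its keys, fields, and placeholder text are illustrative only. The class-name order must match the label order used during training.

```python
from typing import Dict, List, Sequence

# Hypothetical stand-in for the optional database; contents are placeholders.
EXAMPLE_INFO: Dict[str, Dict[str, str]] = {
    "melanoma": {
        "description": "placeholder text",
        "precautions": "placeholder text",
    },
}


def interpret_prediction(probs: Sequence[float], class_names: List[str],
                         info: Dict[str, Dict[str, str]], top_k: int = 3) -> dict:
    """Turn a softmax vector into the payload shown to the user."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    best = ranked[0]
    return {
        "predicted_class": class_names[best],
        "confidence": round(float(probs[best]), 4),
        "top_k": [(class_names[i], round(float(probs[i]), 4)) for i in ranked[:top_k]],
        "details": info.get(class_names[best], {}),  # empty if no entry is stored
    }


result = interpret_prediction([0.1, 0.7, 0.2], ["nevus", "melanoma", "dermatofibroma"], EXAMPLE_INFO)
```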

Use datasets such as PH2, ISIC, or a custom-labeled skin lesion dataset. Organize the dataset into train, validation, and test directories, with a subfolder for each class (e.g., Melanoma, Nevus, Keratosis).
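This directory layout can be produced with a short script. The sketch below copies a `source/<class>/` tree into `train`, `val`, and `test` folders using the 80/10/10 proportions stated in the abstract. Each class is split separately so that class proportions are preserved. The function name, folder names, and fixed random seed are assumptions.

```python
import random
import shutil
from pathlib import Path


def split_dataset(source, dest, ratios=(0.8, 0.1, 0.1), seed=42):
    """Copy source/<class>/* into dest/{train,val,test}/<class>/ (stratified per class)."""
    rng = random.Random(seed)
    counts = {}
    for class_dir in sorted(p for p in Path(source).iterdir() if p.is_dir()):
        files = sorted(f for f in class_dir.iterdir() if f.is_file())
        rng.shuffle(files)
        n_train = int(len(files) * ratios[0])
        n_val = int(len(files) * ratios[1])
        splits = {
            "train": files[:n_train],
            "val": files[n_train:n_train + n_val],
            "test": files[n_train + n_val:],  # remainder goes to test
        }
        for split, split_files in splits.items():
            target = Path(dest) / split / class_dir.name
            target.mkdir(parents=True, exist_ok=True)
            for f in split_files:
                shutil.copy2(f, target / f.name)
            counts[(split, class_dir.name)] = len(split_files)
    return counts
```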


Fig-3: System Architecture of the Proposed Model

5.2 Data Pre-processing

Resize images to a standard input size (e.g., 224×224).

Normalize pixel values (rescale to between 0 and 1). Enhance the dataset with data augmentation techniques: rotation, zoom, width/height shift, and horizontal/vertical flip.

Apply edge enhancement techniques such as CLAHE or Canny edge detection (optional but useful for segmentation tasks).

5.3 Model Building

5.3.1 ResNet50

Load a pretrained ResNet50 with ImageNet weights.

Remove the top layer and add:

Global Average Pooling, a Dense layer with ReLU, and an output layer with Softmax (for classification)

5.3.2 EfficientNetB0

Load a pretrained EfficientNetB0 with ImageNet weights.

Add custom top layers similar to those used for ResNet50.
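Sections 5.3.1 and 5.3.2 describe the same recipe for both backbones, sketched below in Keras. Two settings are assumptions, not values reported here: the 256-unit Dense layer and freezing the backbone initially. Both `tf.keras.applications` models expect raw pixels in the [0, 255] range. EfficientNetB0 rescales internally, and its `preprocess_input` is a pass-through. For that reason, each backbone's `preprocess_input` is applied inside the model instead of feeding it [0, 1] inputs.

```python
import tensorflow as tf

# backbone name -> (constructor, matching preprocess_input)
BACKBONES = {
    "resnet50": (tf.keras.applications.ResNet50,
                 tf.keras.applications.resnet50.preprocess_input),
    "efficientnetb0": (tf.keras.applications.EfficientNetB0,
                       tf.keras.applications.efficientnet.preprocess_input),
}


def build_transfer_model(num_classes, backbone="efficientnetb0", weights="imagenet",
                         hidden_units=256, freeze_backbone=True, input_size=224):
    """Pretrained backbone (no top) + GAP + Dense(ReLU) + Softmax; inputs are [0, 255] RGB."""
    constructor, preprocess = BACKBONES[backbone]
    shape = (input_size, input_size, 3)
    base = constructor(include_top=False, weights=weights, input_shape=shape)
    base.trainable = not freeze_backbone       # assumed: train only the new head first
    inputs = tf.keras.Input(shape=shape)
    x = preprocess(inputs)                     # backbone-specific input scaling
    x = base(x, training=False)                # keep BatchNorm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dense(hidden_units, activation="relu")(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs, name=f"{backbone}_classifier")
```

To fine-tune once the new head has converged, set the backbone's `trainable` attribute to `True` and recompile with a smaller learning rate. Passing `weights=None` builds the same architecture without downloading ImageNet weights.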

5.3.3 Hybrid Model

Use both ResNet50 and EfficientNetB0 as feature extractors, concatenate the features from both models, and add dense layers after the concatenation for final classification.
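The Keras sketch below shows the feature-level fusion just described, under several assumptions. Both backbones share one input of raw [0, 255] RGB pixels, and each backbone applies its own `preprocess_input`. Global average pooling is applied inside each backbone, which gives 2048 + 1280 = 3328 concatenated features. The 512- and 256-unit dense layers and the 0.3 dropout rate are illustrative choices, and the function name is hypothetical.

```python
import tensorflow as tf


def build_hybrid_model(num_classes, weights="imagenet", input_size=224, freeze_backbones=True):
    """ResNet50 + EfficientNetB0 feature fusion by concatenation (illustrative head sizes)."""
    shape = (input_size, input_size, 3)
    inputs = tf.keras.Input(shape=shape)  # raw RGB pixels in [0, 255]

    resnet = tf.keras.applications.ResNet50(include_top=False, weights=weights,
                                            input_shape=shape, pooling="avg")
    effnet = tf.keras.applications.EfficientNetB0(include_top=False, weights=weights,
                                                  input_shape=shape, pooling="avg")
    resnet.trainable = not freeze_backbones
    effnet.trainable = not freeze_backbones

    # Each backbone receives the input scaled the way it was trained.
    r = resnet(tf.keras.applications.resnet50.preprocess_input(inputs), training=False)
    e = effnet(tf.keras.applications.efficientnet.preprocess_input(inputs), training=False)

    x = tf.keras.layers.Concatenate()([r, e])  # 2048 + 1280 fused features
    x = tf.keras.layers.Dense(512, activation="relu")(x)
    x = tf.keras.layers.Dropout(0.3)(x)
    x = tf.keras.layers.Dense(256, activation="relu")(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs, name="resnet50_efficientnetb0_hybrid")
```

Concatenating pooled vectors keeps the fusion step cheap. The cost is that every input image passes through both backbones at inference time.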

5.4 Model Compilation

Use the Adam optimizer with a suitable learning rate (e.g., 0.0001).

Use categorical cross-entropy as the loss function (multiclass classification).

Metrics: Accuracy, Precision, Recall, and F1-score.

5.5 Model Training

Train the models using training and validation data generators.

Apply EarlyStopping and ModelCheckpoint callbacks to avoid overfitting and to save the best model based on validation accuracy.
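Sections 5.4 and 5.5 together amount to a compile step followed by a `fit` call with two callbacks, sketched below in Keras. The helper names, the patience of 10 epochs, and the checkpoint file name are assumptions. `image_dataset_from_directory` reads the train/validation/test layout described earlier and yields float pixels in [0, 255] with one-hot labels. Keras' built-in `Precision` and `Recall` metrics threshold each softmax output at 0.5. Per-class precision, recall, and F1 are therefore better computed from the confusion matrix after training.

```python
import tensorflow as tf


def load_split(directory, image_size=224, batch_size=32, shuffle=True):
    """Read one split laid out as <directory>/<class>/<image>, with one-hot labels."""
    return tf.keras.utils.image_dataset_from_directory(
        directory,
        label_mode="categorical",
        image_size=(image_size, image_size),
        batch_size=batch_size,
        shuffle=shuffle,
    )


def compile_and_train(model, train_ds, val_ds, epochs=50,
                      checkpoint_path="best_model.keras", learning_rate=1e-4, patience=10):
    """Adam + categorical cross-entropy, early stopping and best-model checkpointing."""
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",
        metrics=["accuracy",
                 tf.keras.metrics.Precision(name="precision"),
                 tf.keras.metrics.Recall(name="recall")],
    )
    callbacks = [
        # Stop when val_accuracy stalls and roll back to the best epoch's weights.
        tf.keras.callbacks.EarlyStopping(monitor="val_accuracy", mode="max",
                                         patience=patience, restore_best_weights=True),
        # Write the model to disk only when val_accuracy improves.
        tf.keras.callbacks.ModelCheckpoint(checkpoint_path, monitor="val_accuracy",
                                           mode="max", save_best_only=True),
    ]
    return model.fit(train_ds, validation_data=val_ds, epochs=epochs, callbacks=callbacks)
```

A typical call would be `compile_and_train(model, load_split("data/train"), load_split("data/val", shuffle=False))`, where the directory paths are placeholders.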

5.6. Model Evaluation

Evaluate the trained model on the test dataset and generate the accuracy and the confusion matrix.

Classification report (precision, recall, and F1-score for each class)
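The per-class report can be derived directly from the confusion matrix. The NumPy sketch below makes the formulas explicit; scikit-learn's `confusion_matrix` and `classification_report` provide the same quantities. Class labels are assumed to be integers from 0 to K-1, and the function names are illustrative.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def classification_report(cm):
    """Per-class precision/recall/F1 plus accuracy, macro F1 and weighted F1."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    support = cm.sum(axis=1)    # row sums: true samples per class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, support, out=np.zeros_like(tp), where=support > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": float(tp.sum() / cm.sum()),
        "macro_f1": float(f1.mean()),
        "weighted_f1": float(np.sum(f1 * support) / support.sum()),
    }


cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
report = classification_report(cm)
```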

5.7 Web Application Integration

Build a Flask backend that:
- Accepts image uploads from users.
- Loads the best-trained model.
- Preprocesses the uploaded image.
- Predicts the class and returns the result to the frontend.

Build a frontend using HTML/CSS/JavaScript that allows users to upload skin lesion images.

The frontend displays the prediction result and additional information (e.g., disease description, treatment, cost estimate, and hospital/doctor lists).
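A minimal Flask sketch of the backend steps listed above is shown below. The route name `/predict`, the form field `image`, and the JSON fields are assumptions. `predict_fn` is a hypothetical hook that would decode the uploaded bytes, apply the same preprocessing used during training, and run the model loaded once at startup (for example with `tf.keras.models.load_model`). The hook is injected so that the web layer can be tested without TensorFlow.

```python
from flask import Flask, jsonify, request


def create_app(predict_fn, class_names):
    """Build the app; predict_fn(image_bytes) -> list of class probabilities (hypothetical hook)."""
    app = Flask(__name__)

    @app.route("/predict", methods=["POST"])
    def predict():
        upload = request.files.get("image")
        if upload is None or upload.filename == "":
            return jsonify(error="no image uploaded under form field 'image'"), 400
        probs = predict_fn(upload.read())              # decode + preprocess + model.predict
        best = max(range(len(probs)), key=lambda i: probs[i])
        return jsonify(predicted_class=class_names[best], confidence=float(probs[best]))

    return app
```

In a real `predict_fn`, the bytes could be decoded with `cv2.imdecode(np.frombuffer(data, np.uint8), cv2.IMREAD_COLOR)`. The decoded image would then be resized and passed to `model.predict`.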


5.8 Result Analysis

Compare the individual models and the hybrid model. Analyze performance in terms of:

Accuracy and generalization

Robustness to different skin tones, lighting conditions, and image resolutions.

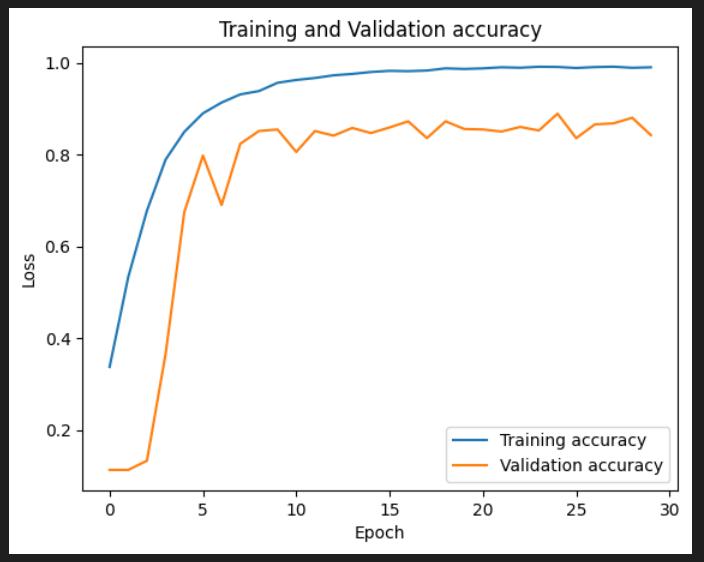

This section presents the experimental results, evaluation metrics, and comparative performance of the proposed models (EfficientNetB0, MobileNetV2, and ResNet50) for multiclass skin cancer classification. Each model was assessed on the same validation set of 900 images using accuracy, precision, recall, and F1-score.

6.1 Validation Accuracy Comparison

Table -2: Comparison of validation accuracy

| Model | Final Validation Accuracy | Best Epoch Accuracy |
|---|---|---|
| EfficientNetB0 | 92.44% | … (train), … (val) |
| MobileNetV2 | 88.89% | … (train), … (val) |
| ResNet50 | 84.22% | 98.99% (train), 88.00% (val) |

6.2 Classification Report Summary

EfficientNetB0 Highlights:

Highest overall validation accuracy (92.44%)

Consistently high F1-scores for critical classes such as:

Melanoma: F1 = 0.8558

Basal Cell Carcinoma: F1 = 0.9794

Pigmented Benign Keratosis: F1 = 0.9637

Best macro-average and weighted-average metrics:

Macro F1: 0.9057

Weighted F1: 0.9024

MobileNetV2 Highlights:

Lightweight model with faster training times

Strong performance in:

Dermatofibroma (F1 = 0.9827)

Basal Cell Carcinoma (F1 = 0.9238)

Lower performance in Melanoma (F1 = 0.7489)

ResNet50 Highlights:

Performs well for: Vascular Lesion (F1 = 0.9851)

Dermatofibroma (F1 = 0.9714)

Lower performance in: Squamous Cell Carcinoma (Recall = 0.6604)

Melanoma (F1 = 0.7750)

Validation accuracy dropped to 84.22%, likely due to overfitting (98.99% training accuracy versus 88.00% validation accuracy at the best epoch).
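The EfficientNetB0 report lists both a macro-averaged F1 (0.9057) and a weighted F1 (0.9024). Let there be K classes, per-class precision P_k and recall R_k, and n_k validation images in class k, with N = Σ n_k = 900 here. The two averages are then defined as:

```latex
F1_k = \frac{2\,P_k R_k}{P_k + R_k}, \qquad
\text{Macro-}F1 = \frac{1}{K}\sum_{k=1}^{K} F1_k, \qquad
\text{Weighted-}F1 = \sum_{k=1}^{K} \frac{n_k}{N}\,F1_k
```

Macro F1 treats every class equally, whereas weighted F1 weights each class by its support. A weighted F1 slightly below the macro F1 therefore means that the more frequent classes in the validation set had, on average, somewhat lower F1-scores than the rarer ones.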

6.3 Model-wise Strengths and Weaknesses

Table -3: Comparison of strengths and weaknesses

| Aspect | EfficientNetB0 | MobileNetV2 | ResNet50 |
|---|---|---|---|
| Final Validation Accuracy | 92.44% | 88.89% | 84.22% |
| Speed (Training Time) | Moderate | Fastest | Slowest |
| Melanoma Detection | Good (F1: 0.85) | Fair (F1: 0.74) | Poor (F1: 0.77) |
| Generalization | Best | Good | Overfitting observed |
| Model Size | Moderate | Smallest | Largest |

6.4. Why EfficientNetB0 Performs Better Than Existing Systems

Table -4: Comparison of performance

| Comparison Factor | Previous/Existing Systems | EfficientNetB0-based System |
|---|---|---|
| Feature Learning Depth | Shallow or single feature extraction | Compound scaling (depth, width, resolution) |
| Image Preprocessing | Basic resizing | CLAHE + Edge Detection |
| Model Optimization | Standard optimizers | Adam with tuned learning rate & early stopping |
| Class Confusion (Melanoma vs Nevus) | High overlap | Significant separation with high recall/precision |
| Real-time Readiness | Limited | Optimized for deployment via Flask app |

6.5. Conclusion

EfficientNetB0 proved to be the most accurate and reliable model for skin cancer classification in this study, outperforming MobileNetV2 and ResNet50 on most metrics.

It shows robust generalization, particularly for challenging cases such as Melanoma vs. Nevus, which traditional systems often misclassify. MobileNetV2 is lightweight and suitable for mobile deployment, but it lags slightly in critical-class detection. ResNet50, though powerful, showed signs of overfitting and achieved lower multiclass classification accuracy than EfficientNetB0.

Chart -1: Accuracy Graph

7. CONCLUSIONS

Based on our results, EfficientNet emerges as the most effective deep learning architecture for skin disease classification in our experiments. It outperformed both MobileNet and ResNet50 in overall classification metrics and class-specific performance.

However, MobileNet remains a strong candidate for lightweight and real-time applications due to its faster training and lower computational demands.

For future work, we recommend:

Further developing the explainable AI (XAI) techniques that highlight decision regions, to improve medical interpretability.

Applying ensemble models to further enhance performance.

Addressing class imbalance using advanced sampling or augmentation strategies.

[1] K. M. Hosny, M. A. Kassem, and M. M. Foaud, "Skin Cancer Classification using Deep Learning and Transfer Learning," 2018 9th Cairo International Biomedical Engineering Conference (CIBEC), IEEE, 2018, doi: 10.1109/CIBEC.2018.8641762.

[2] D. Santos, "Melanoma Skin Cancer Detection using Deep Learning," medRxiv, 2022, doi: 10.1101/2022.02.02.22269505.

[3] M. Dildar, S. Akram, M. Irfan, H. U. Khan, M. Ramzan, A. R. Mahmood, S. A. Alsaiari, A. H. M. Saeed, M. O. Alraddadi, and M. H. Mahnashi, "Skin Cancer Detection: A Review Using Deep Learning Techniques," Int. J. Environ. Res. Public Health, vol. 18, no. 10, Art. no. 5479, May 2021, doi: 10.3390/ijerph18105479. PMID: 34065430; PMCID: PMC8160886.

[4] K. M. Hosny, M. A. Kassem, and M. M. Foaud, "Skin Cancer Classification using Deep Learning and Transfer Learning," IEEE CIBEC, 2018.

[5] Y. Kumar, S. Gupta, R. Singla, and Y. C. Hu, "A Systematic Review of Artificial Intelligence Techniques in Cancer Prediction and Diagnosis," Archives of Computational Methods in Engineering, vol. 29, no. 4, pp. 2043–2070, 2022, doi: 10.1007/s11831-021-09648-w. PMID: 34602811; PMCID: PMC8475374.

[6] M. Zafar, M. I. Sharif, S. Kadry, S. A. C. Bukhari, and H. T. Rauf, "Skin Lesion Analysis and Cancer Detection Based on Machine/Deep Learning Techniques: A Comprehensive Survey," Life, vol. 13, no. 1, Art. no. 146, 2023, doi: 10.3390/life13010146.

[7] M. Naqvi, S. Q. Gilani, T. Syed, O. Marques, and H. C. Kim, "Skin Cancer Detection Using Deep Learning: A Review," Diagnostics, vol. 13, no. 11, Art. no. 1911, 2023, doi: 10.3390/diagnostics13111911.

[8] M. Azeem, K. Kiani, T. Mansouri, and N. Topping, "SkinLesNet: Classification of Skin Lesions and Detection of Melanoma Cancer Using a Novel Multi-Layer Deep Convolutional Neural Network," Cancers, vol. 16, no. 1, Art. no. 108, 2024, doi: 10.3390/cancers16010108.

[9] P. Zhang and D. Chaudhary, "Hybrid Deep Learning Framework for Enhanced Melanoma Detection," arXiv preprint arXiv:2408.00772, 2024. https://arxiv.org/abs/2408.00772

[10] M. Akter, R. Khatun, M. A. Talukder, M. M. Islam, and M. A. Uddin, "An Integrated Deep Learning Model for Skin Cancer Detection Using Hybrid Feature Fusion Technique," arXiv preprint arXiv:2410.14489, 2024. https://arxiv.org/abs/2410.14489

Volume: 12 Issue: 06 | Jun 2025 www.irjet.net p-ISSN: 2395-0072 © 2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008