International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 06 | Jun 2025 www.irjet.net p-ISSN: 2395-0072

Namarata Kumari1, Deepshikha2

1Master of Technology, Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

2Assistant Professor, Department of Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

Abstract - Coping with noisy and evolving data streams has become a major concern as real-time data spreads through areas such as IoT monitoring, healthcare diagnostics, and cybersecurity. Traditional online learners, however, fail in high-noise scenarios that involve corrupted features, mislabeled instances, and adversarial interference. This work proposes a framework called the Robust Anomaly Detector (RAD), which incorporates noise-aware components into the online learning pipeline to improve robustness to many types of noise with little loss of predictive accuracy or model stability. The RAD architecture has two parts: a label quality prediction model, which dynamically filters out data whose labels are judged unreliable, and an online classifier, which is updated only on the instances that pass this filter. To increase flexibility further, three extended variants, namely RAD Voting, RAD Active Learning, and RAD Slim, are presented to support ensemble disagreement, human-in-the-loop feedback, and resource-efficient computation, respectively. Tests on synthetic and real-world datasets (including data on IoT intrusion, cloud task failure, and facial recognition) indicate that the RAD framework improves robustness over standard online learners by up to 30 percent, with no reduction in computational efficiency. The results make RAD an adaptable and scalable tool for building dependable online learning systems in noisy real-time environments.

Key Words: Robust Online Learning, Label Noise, Streaming Data, Noise Reduction, Ensemble Learning, Anomaly Detection, Real-Time Learning, Machine Learning Robustness.

1. INTRODUCTION

1.1 Background

The exponential rise in the amount of data produced by real-time systems such as Internet of Things (IoT) systems, health monitors, and cyber-protection systems has brought about a paradigm change in machine learning research. Data is no longer static and batch-based; it arrives as a high-velocity, continuous stream and requires real-time processing and learning. Online learning has become the most popular way to meet these requirements because the model learns incrementally from every new data sample and makes predictions in real time, without retraining on all the data seen so far. This is especially important in adaptive systems that must operate continuously, such as network intrusion detection, wearable health monitoring devices, and automated equity trading systems.

Although online learning has several benefits in the real world, noise very often undermines its quality. Sensor data streams, logs, and user interactions can contain corrupted features caused by hardware failures or transmission errors, and mislabeled instances caused by human error during annotation or by a malicious attack from an adversary. In highly dynamic environments such as IoT or cybersecurity, noise is significant and common, and it severely harms the accuracy and stability of learning systems. There is hence an urgent need to equip online learning models with strong noise-control mechanisms in order to guarantee reliability and resilience in such unpredictable, high-noise settings.

Basic online learning algorithms are effective at processing sequential data, but they are also vulnerable to corrupted input. These models tend to expect clean and trustworthy data, which is rarely available in real-life deployments. Noise may impair the model as feature noise, which causes misleading internal updates (e.g. volatile sensor measurements or corrupted packet data), or as label noise, which provides fundamentally flawed supervision (e.g. incorrect labels in a supervised task). The effect is decreased model performance, slow convergence, unstable predictions, and an increased risk of catastrophic forgetting, in which prior knowledge is overwritten by corrupt examples.

This problem is more severe in real-time deployment, where models must be not only reliable but also fast and responsive. Conventional offline noise-handling methods, e.g. batch data cleansing or complex adversarial training, cannot be applied online because they are computationally expensive. The central question of this study is therefore how to design and develop robust online learning models that can handle heavy noise without losing stability, flexibility, speed, or efficient use of the available resources.

The main goal of this research is to create a comprehensive approach to robust online learning that suppresses the effects of noisy data in real-time settings as effectively as possible. This is done through the design of the Robust Anomaly Detector (RAD) architecture, a two-layer design comprising a label quality prediction model and an online classification model. The label quality predictor identifies unreliable or corrupted labels in an online manner, so that the classifier is trained only on high-confidence, filtered instances.

The framework is extended with strategic variants to make it versatile and scalable. RAD Voting improves decision reliability through ensemble consensus; RAD Active Learning adds expert feedback to resolve ambiguous labels; and RAD Slim is a resource-efficient variant for edge computing environments. A variety of experimental configurations with synthetic and real-life data streams is considered, and in each of them noise is systematically added at varying levels of severity. The framework is also evaluated empirically under several kinds of noise, including symmetric, asymmetric, and adversarial label corruption.

The study makes a number of contributions to research on robust online learning. First, it presents a new dual-layer framework (RAD) that addresses real-time detection of label noise together with adaptive classification, one of the most intractable tasks in noisy stream learning. Second, it proposes three extended strategies, RAD Voting, RAD Active Learning, and RAD Slim, which broaden the options and performance of the base model across working scenarios ranging from high-accuracy enterprise deployments to constrained edge devices.

Third, the framework is compared extensively with traditional and state-of-the-art online learners under different noise conditions. The analysis covers symmetric label-flipping noise, different levels of asymmetric class-dependent noise, and adversarial corruption, reflecting the complicated reality of deployment in areas such as IoT, cloud systems, and face recognition. The evaluation uses measures such as predictive accuracy, model stability, recovery time, and resistance to catastrophic forgetting to assess performance thoroughly. The findings indicate that RAD and its variants generalize better and are more robust than the baseline models, without imposing extra computational load. Together, these contributions are of significant value for the design of online learning systems that can be used in noisy, real-time environments.

Online learning has become the anchor point of real-time data processing: models adapt to arriving data streams in real time, modifying their parameters step by step as each new data point arrives. Classical algorithms such as the Perceptron, Passive-Aggressive (PA) algorithms, and Online Support Vector Machines (Online SVMs) are simple, fast, and, more importantly, have low computational overhead. The Perceptron learns from errors: its weights are changed only when it misclassifies an instance, which makes learning fast. Passive-Aggressive algorithms extend this by adding a confidence margin, so that the model not only fixes errors but also makes its decision boundary robust to later misclassifications. Online SVMs, in turn, provide a kernel-based method for non-linear classification problems by maintaining a collection of support vectors and updating them with the incoming data.
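The difference between the mistake-driven Perceptron update and the margin-based Passive-Aggressive update can be illustrated with a minimal sketch. It assumes binary labels in {-1, +1}, plain Python lists as feature vectors, and the PA-I step-size rule with an aggressiveness parameter `C`; the function names are illustrative and are not taken from the paper.

```python
def dot(w, x):
    """Inner product of two equal-length lists."""
    return sum(wi * xi for wi, xi in zip(w, x))

def perceptron_update(w, x, y):
    """Mistake-driven update: w changes only when the prediction is wrong (y in {-1, +1})."""
    if y * dot(w, x) <= 0:
        return [wi + y * xi for wi, xi in zip(w, x)]
    return list(w)

def pa_update(w, x, y, C=1.0):
    """PA-I update: also acts on correct predictions whose margin is below 1."""
    loss = max(0.0, 1.0 - y * dot(w, x))   # hinge loss on this instance
    sq_norm = dot(x, x)
    if loss == 0.0 or sq_norm == 0.0:
        return list(w)                     # passive: margin already satisfied
    tau = min(C, loss / sq_norm)           # aggressive step, capped by C
    return [wi + tau * y * xi for wi, xi in zip(w, x)]
```

With weights `[0.5, 0.0]` and a positive instance `[1.0, 0.0]`, the Perceptron leaves the weights unchanged because the prediction is already correct, whereas the PA update moves them to `[1.0, 0.0]` to restore a unit margin. This also shows why a single mislabeled instance can shift either model noticeably.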

Despite being very useful, these algorithms have several serious limitations in the presence of noisy data streams. Their updates are sensitive to individual data entries, so even a comparatively small percentage of distorted labels or features can cause a profound divergence in model behavior. Noise may mislead the direction of gradients and contribute to instability, poor convergence, or overfitting to incorrect patterns. These algorithms can perform disastrously in the presence of high noise, and there is a clear need for algorithms that are more robust and deal explicitly with the noise problem.

To deal with data corruption in conventional learning scenarios, a rich literature on robust machine learning has proposed several solutions, especially for offline or batch learning. Among the most prominent methods are robust loss functions that tolerate outliers, such as Huber loss, which combines Mean Squared Error and Mean Absolute Error to reduce the effect of outliers, and Tukey's biweight loss, which down-weights extreme values on the loss surface. Label cleansing, another popular approach, tries to discover and fix mislabeled data by using confidence levels, ensemble consensus, or a small validation set. Ensemble learning algorithms, e.g. Bagging, Boosting, and Random Forests, have proved to be inherently robust, since combining the predictions of different models reduces the effect of noisy samples.

These offline methods, however, cannot be applied directly to the streaming environment. Their performance normally depends on having the complete data, the ability to resample the data repeatedly, and substantial computing resources for batch processing, none of which is available in the online learning context. Furthermore, data streams are highly dynamic and demand real-time prediction and adaptation, so a noise-robust algorithm must also be computationally simple, relatively lightweight, and adaptive over time. Hence, although offline robust techniques can be informative, the drawbacks of applying them in the online learning setting call for the design of more specialized methods.

Noise in streaming data takes many complicated forms, most of which complicate the learning process. A comprehensive taxonomy of noise consists of three main categories, feature noise, label noise, and adversarial noise, plus a hybrid category comprising noise-concept drift interactions. Feature noise is corruption of the input attributes, which can be caused by faulty sensors, communication errors, or signal noise. It appears in patterns such as additive Gaussian noise, impulse noise, and missing values. Label noise, in turn, occurs when a wrong target output is attached to a data instance. It may be symmetric (labels are flipped randomly across all classes) or asymmetric (mislabeling is more common between particular pairs of classes, usually because of annotation bias or systematic defects in data collection). Adversarial noise consists of malicious perturbations crafted with the intention of deceiving learning algorithms, usually to take advantage of a known model vulnerability.

Noise in the data stream is a major problem for learning. It disrupts convergence by injecting spurious gradients into otherwise valid model updates, it harms generalization (the model tends to fit the most recent noise rather than the underlying truth), and it makes the model far more likely to forget old, correct information as that information is overwritten by new, noisy data. Noise may also resemble real distributional changes or hide them completely, which makes adaptive learning under concept drift more difficult. These effects compound, making robust learning in noisy environments a very difficult problem that needs innovation at both the theoretical and the practical level.

To address the problems that noise causes in sequential data applications, recent advances in robust online learning have proposed several new techniques. One line of work incorporates robust loss functions into online update rules. Huber loss and generalized cross-entropy loss have been adapted to streaming settings, making models less sensitive to noisy examples and badly labeled samples with only a minimal increase in computational expense. Such loss functions limit excessive gradient updates caused by anomalous inputs, which leads to more stable and progressive learning in the presence of noise.

Ensemble-based methods have also been adapted to the online context. Methods such as Online Bagging, Online Boosting, and Adaptive Random Forests (ARF) exploit the redundancy and diversity of a number of learners to be more robust. These ensembles can absorb the effect of individual noisy instances by averaging predictions over many ensemble members or by taking a majority vote over the member models. In addition, ensemble members can be dynamically weighted or selected according to their recent performance, so that the system responds to changing data conditions and concentrates on the most reliable components.

Another major area of development is online label noise detection and filtering. Approaches have been proposed to estimate the reliability of incoming labeled instances and to re-weight them, or drop them if they appear unreliable. Disagreement-based filtering, in which samples with large variance in their ensemble-predicted labels are viewed as suspicious, and instance-level trust scores based on past prediction accuracy have been promising. These methods are incremental in nature and do not incur high overhead, hence they can be used in real time.

Taken together, these advances are a big step toward robust online learning systems. Still, they can hardly deal with high-intensity noise or complicated noise-drift interactions. This shortcoming explains why more integrated and adaptive architectures, such as the RAD framework studied here, need to be developed: architectures that holistically combine noise detection, selective learning, and ensemble-based learning in order to remain resilient even in the most severe streaming settings.

3.1

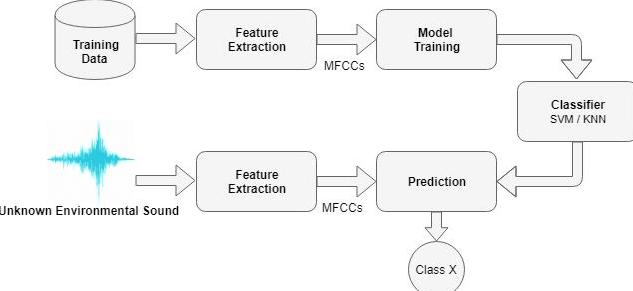

The main architectural design proposed in this research is a robust, dual-model online learning system built specifically for high-noise data streams. The architecture is modular and pipeline-driven; it is named the RAD (Robust Anomaly Detector) framework and is composed of two main modules, the Label Quality Predictor and the Online Classifier. The two models are complementary: they play different yet interconnected roles, reducing the impact of noisy labels and noisy features while allowing continuous updates and real-time prediction.

The Label Quality Predictor acts as a gateway for incoming data. It examines the accuracy of the label attached to every data instance and determines whether the sample and its label should be used to update the classifier. The predictor is trained online on historical prediction behavior, classification confidence, inter-model agreement (in ensemble settings), and patterns in data quality over time. It seeks to ensure that only high-confidence, trusted information is fed to the downstream classifier, thereby minimizing the effect of noise on learning.

After this filtering, the Online Classifier carries out real-time prediction in addition to incremental learning. It updates its parameters only when the incoming instance has been judged reliable by the label quality predictor. Depending on the situation, the classifier can be realized as a Perceptron, a Passive-Aggressive algorithm, an Online SVM, or a Stochastic Gradient Descent learner, all optimized for streaming updates.
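The gating relationship between the two modules can be sketched as a predict-then-filter-then-update loop. The sketch below is an illustration under stated assumptions, not the paper's implementation: the classifier is a plain Perceptron with labels in {-1, +1}, and the label quality rule (`label_is_reliable`, with a hypothetical `reject_margin` parameter) simply distrusts a label when the classifier contradicts it by a wide margin. The paper's predictor is described as a learned model using richer signals.

```python
class OnlinePerceptron:
    """Minimal online classifier with labels in {-1, +1}."""

    def __init__(self, n_features):
        self.w = [0.0] * n_features

    def score(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def predict(self, x):
        return 1 if self.score(x) >= 0 else -1

    def update(self, x, y):
        if y * self.score(x) <= 0:          # learn only from mistakes
            self.w = [wi + y * xi for wi, xi in zip(self.w, x)]

def label_is_reliable(clf, x, y, reject_margin=2.0):
    """Hypothetical quality rule: reject a label only when the classifier
    contradicts it with a margin of at least reject_margin."""
    return y * clf.score(x) > -reject_margin

def rad_step(clf, x, y):
    """Predict first, then learn from (x, y) only if the label passes the filter."""
    y_hat = clf.predict(x)
    accepted = label_is_reliable(clf, x, y)
    if accepted:
        clf.update(x, y)
    return y_hat, accepted
```

Predicting before filtering keeps real-time prediction independent of the label, while the filter decides only whether the instance may influence the weights.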

To increase fault tolerance and the stability of predictions, integration into an ensemble is also provided. The system may instantiate several classifiers concurrently, combine their predictions into a single output using a majority voting scheme, and extract disagreement information to update the label quality estimate. The modularity of this architecture allows variant extensions that customize the system to the constraints of different environments in terms of available resources and accuracy requirements, e.g. environments that need computational efficiency or manual approval by human experts.

Table-1: Components of the RAD System Architecture.

| Component | Function |
| --- | --- |
| Label Quality Predictor | Detects and filters noisy or unreliable labeled data |
| Online Classifier | Performs real-time classification and incremental model updates |
| Ensemble Layer | Aggregates predictions from multiple learners to enhance robustness |
| Noise Injection Control | Simulates noisy environments during training and benchmarking |
| Modular Extensions | Enables variant implementations like RAD Voting, RAD Active, and RAD Slim |

This architecture ensures a robust learning pipeline in which model updates are noise-aware, adaptive, and computationally efficient, meeting the requirements of real-time applications in noisy and dynamic environments.

To increase the applicability of RAD across a wider range of operational environments, three strategic variants are developed. Each variant is designed to address a particular practical constraint: disagreement between models, the cost of annotation, and limited resources.

RAD Voting is an ensemble version that uses multi-model consensus to enhance robustness. Instead of relying on a single classifier, RAD Voting keeps a number of base learners trained simultaneously on the data stream. For each new instance, the label quality predictor evaluates reliability on the basis of disagreement within the ensemble. If the models largely agree, the label is treated as clean; otherwise it is marked noisy. Majority voting then determines the final prediction. This method exploits diversity among the models to cancel out the influence of particular noisy observations and to improve confidence in predictions.
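A minimal sketch of the voting variant follows. It assumes each base learner exposes `predict(x)` and `update(x, y)` (an assumed interface), and it interprets "agreement" as the fraction of learners whose prediction matches the incoming label; the `min_agreement` threshold is a hypothetical parameter, and the paper does not specify the exact rule.

```python
from collections import Counter

def majority_vote(votes):
    """Return the most common predicted label and the share of learners behind it."""
    label, count = Counter(votes).most_common(1)[0]
    return label, count / len(votes)

def rad_voting_step(learners, x, y, min_agreement=0.5):
    """Predict by majority vote; treat label y as clean when at least
    min_agreement of the learners predict it, and update only in that case."""
    votes = [m.predict(x) for m in learners]
    y_hat, _ = majority_vote(votes)
    support = votes.count(y) / len(votes)   # share of learners agreeing with y
    clean = support >= min_agreement
    if clean:
        for m in learners:
            m.update(x, y)
    return y_hat, clean
```

An untrained ensemble would reject most labels under this rule, so in practice a warm-up phase that accepts all labels for the first instances would be needed; that detail is omitted here.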

To handle labels of ambiguous quality, RAD Active Learning builds on a human-in-the-loop feedback module. When the label quality predictor identifies uncertain or very questionable labels, a query is made to an external oracle (e.g., a human expert or a verified database). This mechanism ensures that serious mislabelings are corrected on the spot, which greatly improves the stability of learning in long-term use. Although this approach adds annotation cost, it provides a significant level of confidence in settings such as healthcare or financial fraud detection, where accuracy is paramount.
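The query logic can be sketched as follows. This is an illustrative sketch only: the quality score in [0, 1], the `trust_threshold`, and the fixed query `budget` that stands in for annotation cost are all assumptions, and `oracle` is any callable that returns a trusted label for `x`.

```python
class ActiveLabelResolver:
    """Decides which label to train on, querying an oracle for doubtful labels."""

    def __init__(self, oracle, trust_threshold=0.7, budget=100):
        self.oracle = oracle                  # callable: x -> trusted label
        self.trust_threshold = trust_threshold
        self.budget = budget                  # maximum number of oracle queries
        self.queries = 0

    def resolve(self, x, y, quality):
        """Return the label to train on, or None to skip the instance."""
        if quality >= self.trust_threshold:
            return y                          # label trusted as given
        if self.queries < self.budget:
            self.queries += 1
            return self.oracle(x)             # doubtful label: ask the oracle
        return None                           # budget exhausted: drop the instance
```

Capping the number of queries makes the trade-off between annotation cost and label quality explicit: once the budget is spent, doubtful instances are dropped rather than learned from.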

RAD Slim is a lightweight version designed for deployment in resource-limited environments such as edge devices, IoT sensors, or mobile devices. It reduces the memory footprint and computational overhead by simplifying the label quality prediction mechanism (i.e. using a single-classifier confidence threshold instead of the discrepancy between deep learners) and by replacing deep models with shallow ones. RAD Slim gives up a small amount of robustness to gain considerable speed and efficiency, and is therefore appropriate for latency-sensitive applications.

The noise-handling strategies built into the RAD framework work on two levels, the loss function level and the learning process level, in order to achieve robustness on noisy data streams.

One such element is the use of robust loss functions, which are less easily disturbed by outliers and poorly labeled samples than traditional ones such as cross-entropy or mean squared error. This work includes Huber loss, in which squared loss for small errors blends smoothly into absolute loss for larger errors, and which is thus well suited to noisy regression or classification signals. Another option, Generalized Cross Entropy (GCE) loss, offers a compelling trade-off between mean absolute error and cross-entropy and has excellent robustness to label noise. In addition, symmetric loss functions are used; these treat all incorrect class predictions in a similar way, which is particularly effective in classification tasks involving symmetric label noise.
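The two named losses can be written down in a few lines using their standard textbook definitions. The threshold `delta` and the exponent `q` are tuning parameters whose values the paper does not state, so the defaults below are only illustrative.

```python
import math

def huber_loss(residual, delta=1.0):
    """Quadratic for |residual| <= delta, linear beyond it."""
    a = abs(residual)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)

def cross_entropy(p_true):
    """Standard cross-entropy on the probability given to the labeled class."""
    return -math.log(p_true)

def gce_loss(p_true, q=0.7):
    """Generalized cross-entropy, 0 < q <= 1, on the probability of the labeled class.
    q = 1 gives 1 - p_true (an MAE-style loss); q -> 0 approaches cross-entropy."""
    return (1.0 - p_true ** q) / q
```

For a confidently contradicted label (small `p_true`), cross-entropy grows without bound while the GCE loss is capped at `1 / q`. This bound is what limits the influence of a mislabeled instance on the update.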

Combined with these are confidence-weighted learning strategies, in which the model's learning rate or the strength of its updates is adjusted according to its confidence in the prediction. For example, when the model is not sure about its classification, it gives a smaller weight to the gradient update, which minimizes the effect of possibly noisy examples. This balance increases model stability and the quality of learning over time.

Further robustness is gained from instance-level filtering, in which each data point is assessed against predefined or learned measures such as ensemble disagreement or prediction confidence. Samples that fail to reach a quality threshold are either discarded or down-weighted during training. In combination with adaptive learning rate schedules, this ensures that noisy data points do not upset the learning process and that the model can tolerate transient drops in performance.

Collectively, these defensive measures form a layered defense against the varied and unruly forms of noise found in real-world data streams. They help the RAD framework not only to survive but to perform well in tough environments in which conventional models tend to fail.

4.1

A range of datasets was used to test the effectiveness, stability, and robustness of the proposed RAD framework and its variants in high-noise online learning environments. Both synthetic and real-world data were included in the experimental design to balance benchmark experimentation against real-world application.

The synthetic data was created using well-known stream generators that simulate evolving environments with controlled noise and concept drift. Three datasets formed the main set: SEA, Hyperplane, and Random RBF. The SEA dataset is a common benchmark for binary classification in data streams. It exhibits sudden concept drift, in which the decision boundary changes abruptly over time. The Hyperplane dataset mimics gradual drift by varying the coefficients that define a separating hyperplane in high-dimensional space, so it is suitable for testing drift-resistant learning. The Random RBF dataset samples points from drifting Gaussian clusters to model the complexity of evolving concepts and shifting distributions. These synthetic datasets made it easy to control drift patterns and noise levels precisely, which provided an ideal testbed for controlled experiments.

A noise injection procedure was devised to examine the noise robustness of the RAD framework and its variants properly. It simulated two major forms of label corruption, symmetric and asymmetric, and also added feature noise using several forms of corruption.

Symmetric label flipping was done by randomly reassigning the class labels of a set percentage of the incoming instances to any of the other classes with equal probability. Such noise resembles random annotation errors and is commonly used in robustness testing. Asymmetric label flipping, by contrast, modeled more realistic circumstances by introducing class-dependent mislabeling: labels were more likely to be flipped to classes that are semantically or statistically close to the true one, e.g. confusion between celebrity face images in the FaceScrub dataset.
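Both label corruption schemes can be sketched per instance, which suits online injection. The sketch assumes a seeded `random.Random` generator for reproducibility and, for the asymmetric case, a hypothetical `confusable` mapping from each class to the single class it is most often mistaken for. The paper does not give its exact transition probabilities.

```python
import random

def flip_symmetric(y, classes, rate, rng):
    """With probability rate, replace y by a different class chosen uniformly."""
    if rng.random() < rate:
        return rng.choice([c for c in classes if c != y])
    return y

def flip_asymmetric(y, confusable, rate, rng):
    """With probability rate, replace y by its designated look-alike class.
    Classes without an entry in confusable are never flipped."""
    if y in confusable and rng.random() < rate:
        return confusable[y]
    return y

# Example: corrupt roughly 30% of a stream of labels, one instance at a time.
rng = random.Random(0)
stream = ["cat", "dog", "bird"] * 4
noisy = [flip_symmetric(y, ["cat", "dog", "bird"], 0.3, rng) for y in stream]
```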

For feature-level corruption, two types of noise were applied: Gaussian noise, which adds normally distributed random numbers to numeric features, and salt-and-pepper noise, which randomly sets a feature value to either its minimum or its maximum. The latter type is commonly found in image and sensor data as a result of bit errors or sensor malfunction.
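The two feature corruptions can be sketched as follows. The noise scale `sigma`, the corruption `rate`, and the feature bounds `lo` and `hi` are assumed parameters chosen by the experimenter; the paper does not report the values it used.

```python
import random

def add_gaussian_noise(x, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to every feature."""
    return [xi + rng.gauss(0.0, sigma) for xi in x]

def add_salt_and_pepper(x, rate, lo, hi, rng):
    """Independently set each feature to lo or hi with probability rate."""
    return [rng.choice((lo, hi)) if rng.random() < rate else xi for xi in x]

# Example: corrupt one feature vector whose values lie in [0, 1].
rng = random.Random(42)
x = [0.2, 0.5, 0.9]
x_noisy = add_salt_and_pepper(add_gaussian_noise(x, 0.05, rng), 0.1, 0.0, 1.0, rng)
```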

The experiments covered a wide range of noise intensities, including 10%, 50%, and 90%, to test the integrity of the model under both light and severe noise. This gradient allowed degradation curves, recovery times, and model stability to be recorded as the noise level increased. Noise was injected online, that is, gradually and in real time as data reached the model, which preserved the streaming context.

To provide a thorough assessment of the proposed RAD architecture and its variants, several evaluation metrics were used. These metrics were selected to measure not only predictive accuracy but also robustness, stability of learning behavior, and efficiency, all of which are important properties of robust online learning systems.

Accuracy and F1-score were the two most important performance measures in this study, as they indicate how well the model classifies on balanced and imbalanced data. Accuracy served as the measure of overall correctness, whereas F1-score was especially useful for measuring robustness to label imbalance and noise.

The degradation slope and the recovery time were the two fundamental indicators of robustness. The former indicates the speed at which model performance declined as the noise level increased; the latter is the number of iterations the model needed to return to the performance level it had reached before being disturbed by a burst of noise. A less steep degradation slope and a shorter recovery time were observed, which illustrates the good resilience of the proposed approach to noise.
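One way to compute these two indicators is sketched below. The paper does not give formulas, so both definitions are assumptions: the degradation slope is taken as the least-squares slope of accuracy against noise level, and recovery time as the number of steps after a noise burst until accuracy is back within a `tolerance` of its pre-burst value.

```python
def degradation_slope(noise_levels, accuracies):
    """Least-squares slope of accuracy versus noise level (more negative = faster decay)."""
    n = len(noise_levels)
    mx = sum(noise_levels) / n
    my = sum(accuracies) / n
    sxx = sum((x - mx) ** 2 for x in noise_levels)
    sxy = sum((x - mx) * (y - my) for x, y in zip(noise_levels, accuracies))
    return sxy / sxx

def recovery_time(accuracy_trace, burst_start, tolerance=0.01):
    """Steps after burst_start (>= 1) until accuracy returns to within tolerance
    of the value just before the burst; None if it never does in the trace."""
    target = accuracy_trace[burst_start - 1] - tolerance
    for t in range(burst_start, len(accuracy_trace)):
        if accuracy_trace[t] >= target:
            return t - burst_start
    return None
```

A model whose accuracy falls from 0.9 to 0.5 as noise rises from 10% to 90% has a slope of -0.5 under this definition; a more robust model would show a value closer to zero.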

Variance in performance over time windows was used to measure stability. This metric was obtained by measuring the standard deviation of accuracy or F1-score across sliding windows, which summarizes how erratic or how stable the model is over the stream.
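The stability metric can be sketched on a stream of per-instance correctness flags (1 for a correct prediction, 0 otherwise). The window length and step are assumed parameters, and the population standard deviation is used here as one reasonable choice.

```python
from statistics import pstdev

def window_accuracies(correct, window, step=1):
    """Accuracy in each sliding window over a 0/1 stream of prediction correctness."""
    return [sum(correct[i:i + window]) / window
            for i in range(0, len(correct) - window + 1, step)]

def stability(correct, window, step=1):
    """Standard deviation of windowed accuracy; lower values mean steadier behavior."""
    return pstdev(window_accuracies(correct, window, step))
```

A model that is always right (or always wrong) scores 0.0, while one whose accuracy swings between windows scores higher, so the metric captures consistency rather than correctness and is meant to be read alongside accuracy.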

Because streaming efficiency was a priority, metrics such as average time per update, memory footprint, and inference latency were monitored. These measurements ensured that the improvement in robustness did not entail computationally unsustainable costs, particularly in the resource-constrained environments in which RAD Slim is meant to operate.

Experiments were carried out to evaluate the performance of the proposed RAD framework against baseline online classifiers: Online Perceptron, Stochastic Gradient Descent (SGD), and Online Support Vector Machines (Online SVMs). These models were chosen for their broad usage in online learning and for the diversity of their learning strategies, which include mistake-driven updates, margin-based learning, and gradient-based optimization.

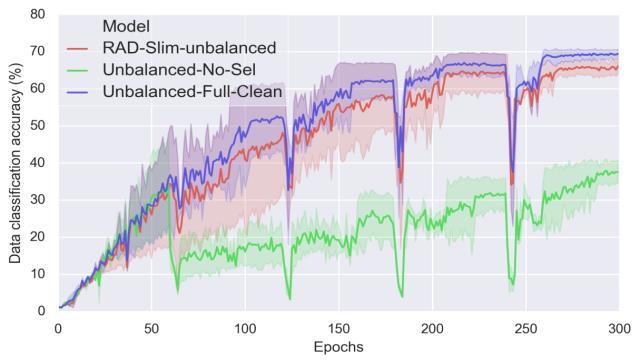

In the clean-data condition, all models, including RAD, performed similarly in accuracy and convergence. The shortcomings of the classical models became clear, however, as the noise level rose. Accuracy degraded quickly for the Online Perceptron and SGD, because these were highly sensitive to label and feature noise. Online SVMs were somewhat more stable but could not maintain performance under asymmetric label flipping and high-intensity feature corruption. RAD, by contrast, performed better than all baselines on all test data. The dual-model architecture, and especially the label quality predictor, allowed RAD to filter out erroneous instances, leading to large gains in accuracy and F1-score even under extreme noise.

In addition, the ensemble mechanisms in RAD yielded a significant increase in model stability and robustness. By combining the outputs of different learners and using disagreement information to improve the evaluation of labels, the ensemble-enhanced variants of RAD showed lower variance of predictions over time and a shallower degradation slope under noise.

Table-2: Average Accuracy and F1-Score at 30% Noise Level.

Further, the stability of RAD under concurrent concept drift and noise was examined, using the Hyperplane and Random RBF datasets as test subjects. Under noisy changes, standard learners were likely to misread noise as concept drift and to reset wrongly or shift weights too often. RAD, by contrast, with the help of its label quality filter and adaptive ensemble techniques, could better differentiate between real drift and transient noise. This capability enabled it to retain what it had learned before while it settled on the new patterns, thus reducing catastrophic forgetting and improving the long-term consistency of learning.

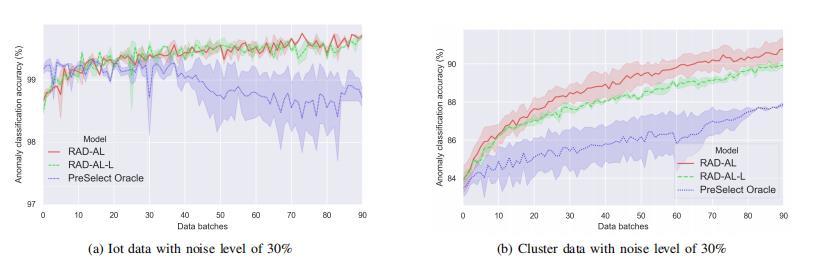

To examine the flexibility and practical adaptability of the RAD architecture, its three variants, RAD Voting, RAD Active Learning, and RAD Slim, were tested under a range of working conditions and application environments.

The table illustrates that RAD not only delivers superior predictive performance but also ensures more consistent behavior under noisy conditions, a critical advantage in real-time, high-risk applications.

To assess the resilience of the RAD framework across a wider range of noise intensities, experiments were conducted with noise on both labels and features increased in 10-percent increments from 10% to 90%. The accuracy of the baseline models decayed sharply and mostly non-linearly as noise increased, especially once noise exceeded the 40% mark. RAD's decline, in contrast, was much more gradual: accuracy was still fairly high at 60% noise and remained above trivial performance even in the extremely noisy 90% case, where the other models all degenerated to near-random behavior.
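
A noise sweep of this kind could be set up roughly as shown below. The text does not specify how noise was injected, so this sketch assumes symmetric label flipping on binary labels and additive Gaussian feature noise; the function names `flip_labels` and `corrupt_features` and the parameter `sigma` are hypothetical.

```python
import random

# Noise intensities from 10% to 90% in 10-percent steps.
NOISE_LEVELS = [k / 10 for k in range(1, 10)]


def flip_labels(labels, noise_rate, rng):
    """Symmetric label noise (assumed): flip each label in {-1, +1}
    independently with probability `noise_rate`."""
    return [-y if rng.random() < noise_rate else y for y in labels]


def corrupt_features(rows, noise_rate, sigma, rng):
    """Feature noise (assumed): with probability `noise_rate`, add
    zero-mean Gaussian noise of standard deviation `sigma` to a value."""
    noisy = []
    for row in rows:
        noisy.append([
            v + rng.gauss(0.0, sigma) if rng.random() < noise_rate else v
            for v in row
        ])
    return noisy
```

A sweep would then loop over `NOISE_LEVELS`, corrupt a copy of the stream at each level with a seeded `random.Random`, and record accuracy per level for each learner so that the decay curves can be compared.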

RAD Voting proved to be the most generalizable and fault-tolerant of all the models tested. Using prediction consensus over an ensemble of classifiers, it obtained the best results on real-world datasets, including IoT intrusion detection and cloud failure detection. The voting mechanism helped suppress the effects of noise-induced misclassifications and gave a robust decision boundary. The trade-off was higher computational overhead, since many learners had to be kept active at the same time.
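
The consensus step can be illustrated with a small sketch. The functions `vote` and `label_is_suspect` and the `max_disagreement` threshold are assumptions for illustration; the text does not give the exact rule used by RAD Voting.

```python
from collections import Counter


def vote(predictions):
    """Return (majority_label, disagreement) for one sample, where
    disagreement is the fraction of learners outside the majority."""
    counts = Counter(predictions)
    label, top = counts.most_common(1)[0]
    return label, 1.0 - top / len(predictions)


def label_is_suspect(predictions, given_label, max_disagreement=0.34):
    """Flag a label as suspect when a sufficiently united ensemble
    contradicts it. A split ensemble is not treated as evidence."""
    label, disagreement = vote(predictions)
    return label != given_label and disagreement <= max_disagreement
```

For example, `vote([1, 1, -1])` yields the majority label `1` with one third of the learners disagreeing. Requiring low disagreement before overriding a label is one way to avoid discarding samples near a genuinely uncertain region.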

RAD Active Learning introduced a human-in-the-loop feedback loop in which the system was allowed to query an expert about doubtful samples. Selectively routing 5–10% of all samples to an expert resulted in near-perfect accuracy, close to that obtained with clean labels. This variant is particularly valuable in medical diagnostics, for example the diagnosis of multiple system atrophy, where annotation budgets are smaller than in low-stakes areas even though precision is critical.
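
A budgeted query rule of this kind might look like the sketch below. The class name `BudgetedQuerier`, the uncertainty measure (distance of the predicted probability from 0.5), and the `threshold` parameter are hypothetical; only the idea of sending a small fraction of uncertain samples to an expert comes from the text.

```python
class BudgetedQuerier:
    """Assumed uncertainty-based query rule with a hard budget."""

    def __init__(self, budget=0.10, threshold=0.2):
        self.budget = budget        # max fraction of samples sent to the expert
        self.threshold = threshold  # query when |p - 0.5| is below this value
        self.seen = 0
        self.queried = 0

    def should_query(self, p_positive):
        """Decide whether to ask the expert about the current sample,
        given the model's predicted probability of the positive class."""
        self.seen += 1
        uncertain = abs(p_positive - 0.5) < self.threshold
        within_budget = (self.queried + 1) <= self.budget * self.seen
        if uncertain and within_budget:
            self.queried += 1
            return True
        return False
```

With `budget=0.10`, the fraction of queried samples never exceeds 10% of the stream seen so far, regardless of how many samples look uncertain.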

Figure-3: Comparison of RAD Active Learning Limited (RAD-AL-L) and Pre-Select Oracle, showing the power of selection.

RAD Slim was designed as a minimal alternative for edge and resource-limited environments. It employed a simplified version of the label quality predictor and a smaller number of learners, which greatly reduced both memory and compute requirements. Although its accuracy was slightly below that of full RAD, it was superior to all of the conventional baselines, which makes it a suitable choice for real-time inference in IoT-based systems and mobile apps.

Table-3: Variant Evaluation Summary.

To gain insight into the role of the components that make up the RAD architecture, a number of ablation studies were conducted. The first examined the effect of removing the label quality model, which turns RAD into a regular online learner. Without this noise-filtering mechanism, the classifier became sensitive to corrupted labels and its performance dropped drastically, which confirmed the importance of label assessment for robustness.

In a second experiment, the performance of single-learner and multi-learner ensembles was compared. The ensemble-enhanced variants consistently provided the best stability and accuracy, especially in non-stationary and non-reversed settings. Ensembles also minimized the impact of model drift and gave more balanced updates during periods of uncertainty.

Finally, the effect of different loss functions was studied. Models trained with conventional cross-entropy loss were found to be extremely vulnerable to label noise, whereas those using Huber loss, generalized cross-entropy (GCE), and symmetric loss functions preserved decent performance. This further supports the view that streaming environments require loss functions designed with robustness as the primary goal.
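
To make the contrast concrete, the sketch below implements standard textbook forms of three of these losses. The parameter defaults (`q=0.7`, `delta=1.0`) are illustrative assumptions, not values reported in this study, and `p_true` denotes the predicted probability of the given (possibly noisy) label.

```python
import math


def cross_entropy(p_true):
    """Standard cross-entropy for one sample; unbounded as p_true -> 0."""
    return -math.log(p_true)


def generalized_cross_entropy(p_true, q=0.7):
    """GCE loss (1 - p^q) / q for 0 < q <= 1. It approaches cross-entropy
    as q -> 0 and equals 1 - p at q = 1; it is bounded above by 1 / q."""
    return (1.0 - p_true ** q) / q


def huber(residual, delta=1.0):
    """Huber loss: quadratic for small residuals, linear for large ones."""
    a = abs(residual)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)
```

The key difference is boundedness: a confidently contradicted label (small `p_true`) produces an arbitrarily large cross-entropy, while the GCE value stays below `1 / q`, so a single mislabeled sample contributes a limited penalty to the update.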

All these ablation outcomes confirm that the advantage of the RAD architecture does not lie in any single feature but rather in the thoughtful combination of several mutually complementary ones: each of them improves a different facet of the robustness needed to survive online learning in high-noise regimes.

6.1 Conclusion

This study has introduced the Robust Anomaly Detector (RAD), a framework that addresses the pressing problem of online learning on high-noise data streams. RAD is a dual-model architecture that combines a label quality prediction module with an online classification model, allowing noisy labels to be filtered while the model is updated in real time. The framework was further extended into three variants, RAD Voting, RAD Active Learning, and RAD Slim, to cover a range of operating scenarios, from high-assurance prediction systems to resource-limited edge use cases.

Large-scale empirical testing on both synthetic and real-world datasets showed that RAD and its variants consistently outperformed common online learning models such as the Perceptron, Stochastic Gradient Descent (SGD), and Online Support Vector Machines, particularly when label and feature noise was high. Notably, RAD's accuracy degraded more slowly, it recovered quickly from noise bursts, and it remained stable even under concept drift. Moreover, the integration of ensembles and adaptive learning techniques improved generalization and robustness, so that RAD performed well even in settings where conventional learners failed. The findings indicate that RAD is effective at noise filtering and can also provide scalable, reliable predictions on continuous and uncertain data sources.

6.2

Although the proposed RAD framework proved successful, the current research has several limitations, which bound the scope of its application and point to directions for further research. To begin with, the research is limited to classification tasks in supervised or semi-supervised settings. Other important learning paradigms, such as regression and reinforcement learning (RL), have not been addressed, which limits the applicability of the framework to broader uses such as time-series prediction or online decision-making in dynamic environments.

Secondly, although the evaluation included synthetic and real-world datasets with concept drift, the degree of drift was moderate and controlled. Extreme or rapid drift, particularly in combination with noise, was not explored exhaustively. In such unstable settings even robust models may be unable to tell whether a true change has occurred or whether noise is corrupting the stream, and RAD's existing mechanism might have to evolve.

Also, the framework has not been thoroughly evaluated on very high-dimensional data streams (e.g. genomics datasets, natural language, or fine-grained image sequences), where feature sparsity and noise may interact in more complex ways. Similarly, structured noise, i.e. errors such as label flips that are temporally correlated or context-dependent, fell outside the experimental range even though it is frequent in settings such as video surveillance or sensor fusion systems.

Building on these findings and in light of the limitations above, several research directions are foreseen to extend the scope and depth of the RAD framework. An obvious direction is bringing RAD to unsupervised and reinforcement learning scenarios. In unsupervised online learning, anomalous patterns must be found without label supervision, so new approaches to instance selection and filtering are needed. In reinforcement learning, particularly in partially observable environments or when the reward signal is noisy, label quality estimation methods may be adapted to evaluate policy updates and reward credibility.

A further direction is the development of adaptive architectures that can deal with rapid or adversarial concept drift. By incorporating ideas from meta-learning or online Bayesian methods, RAD might learn not just from the current data but also from its own past adaptations, so that it could react better to new changes in the data distribution. Related methods such as continual learning and memory-based networks might also be used to improve long-term knowledge retention under changing conditions.

Last but not least, the most important route to real-world impact is to deploy RAD on edge and embedded systems in areas such as autonomous vehicles, wearable health sensors, and smart manufacturing platforms. This would require additional optimization of the computational footprint, energy usage, and latency of RAD components. Incorporating RAD into real-time data acquisition pipelines with user-feedback capabilities would make it more operational and reliable in mission-critical settings.
