International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 12 Issue: 07 | Jul 2025 www.irjet.net p-ISSN:2395-0072


Anshul Saxena*, Prof. Neha Khare**

*Research Scholar Department of CSE, Takshshila Institute of Engineering & Technology, Jabalpur, M.P.

**Prof., Department of CSE, Takshshila Institute of Engineering & Technology, Jabalpur, M.P.

Abstract- The core objective is to develop a reliable and accurate system capable of identifying depressed and non-depressed users through natural language processing (NLP) techniques. To this end, we experimented with a range of sequence modelling approaches, including Simple RNN, unidirectional and bidirectional LSTM, Gated Recurrent Units (GRU), and their bidirectional counterparts. Furthermore, we integrated a transfer learning approach using BERT (Bidirectional Encoder Representations from Transformers) to analyse complex sentence structures and contextual relationships in user posts. All models were trained and evaluated on a labelled dataset of social media text entries annotated for depression. The evaluation metric of choice was recall, given the critical importance of minimizing false negatives in mental health detection. The BERT model achieved the highest recall score of 98.10%, followed closely by the Bidirectional LSTM and Bidirectional GRU models, both scoring 97.76%. These results demonstrate the superiority of bidirectional architectures in capturing contextual semantics and underline the importance of transfer-based models like BERT in improving mental health classification performance. Our study confirms that bidirectionality and contextual embeddings significantly boost detection capabilities in NLP-based mental health applications. This work contributes to building intelligent systems for early diagnosis of depression, supporting mental health professionals with enhanced screening tools in online environments.

Keywords: Depression Detection, Social Media Analysis, Natural Language Processing (NLP), Recurrent Neural Networks (RNN), Long Short-Term Memory (LSTM), Gated Recurrent Unit (GRU), Bidirectional Models, BERT.

An uncontrolled growth of brain tissue is called a brain tumor. It produces pressure in the skull and interferes with the brain's natural functioning. Brain tumors come in two types: benign (non-cancerous) and malignant (cancerous). Malignant tumors grow quickly, damage normal tissue, and may spread to other parts of the body [1], [2], [3]. Brain tumors are graded into four categories:

Grade I: These tumors develop slowly and do not spread quickly. They are associated with a better prognosis and can often be removed almost entirely by surgery. One such tumor is the pilocytic astrocytoma.

Grade II: These tumors also grow slowly, but they may infiltrate surrounding tissue and can progress to higher grades over time.

Grade III: These tumors grow faster than grade II tumors and spread to adjoining tissue.

Grade IV: The most dangerous malignant tumors, and those most likely to spread, fall into this category. They may even recruit blood vessels to speed up their growth.

Xu et al. [4] developed a 3D-stacked multistage inertial microfluidic sorting device for circulating tumor cells (CTCs) that enables efficient downstream analysis. The first stage uses a trapezoidal cross-section for maximum separation, followed by symmetrical square serpentine channels for further enrichment. This design achieves over 80% efficiency and over 90% purity, allowing quick and integrated CTC analysis.

Recent advancements in vision models, such as vision-language models (VLMs), and other emerging technologies have the potential to significantly enhance the performance of brain tumor detection methods. VLMs, for instance, combine vision and language processing, allowing a more nuanced understanding of both visual data and the associated medical narratives. Incorporating these models could improve our system's ability to interpret complex MRI scans in the context of clinical documentation, patient history, or radiology reports. Furthermore, other emerging techniques, such as self-supervised learning and attention mechanisms, show promise in enhancing the ability of models to learn from limited data, which would be particularly beneficial for improving performance on rare tumor types.

Detecting brain tumors at an early stage can help prevent tumor growth and the dissemination of cancerous growths to other brain regions. Traditionally, radiologists analyse brain tumors using medical imaging techniques to spot abnormalities. Magnetic resonance imaging (MRI), computed tomography (CT), and positron emission tomography (PET) are the modalities preferred by medical practitioners. Owing to its non-invasive nature and its exceptional depiction of soft tissue and metabolite characteristics, MRI is the imaging technique most sought after by medical professionals for tumor detection. MRI plays a fundamental role in characterizing the type of tumor, which in turn guides physicians in devising treatment plans and monitoring the progression of the disease. Structural MRI images, specifically T1-weighted, contrast-enhanced T1-weighted, and T2-weighted images, are instrumental in delineating both healthy anatomical structures and the extent of tumor involvement [5]. Consequently, MRI has attracted significant attention from researchers and scientists. Recently, there has been a notable rise in interest in MRI-based medical image analysis for brain tumor studies, driven by an increasing need for efficient and unbiased analysis of large amounts of medical data. Earlier, 2D segmentation models were developed specifically to assist radiologists; however, achieving high accuracy in tumor boundary segmentation remains a challenge. Identifying, segmenting, and classifying brain tumors is vital but often time-consuming, and conventional methods are laborious and susceptible to human error.

Tumor segmentation and classification techniques can be categorized into registration-based, atlas-based, supervised, unsupervised, and hybrid algorithms. Atlas-based segmentation is applied in Pipitone et al.'s work [6], and registration techniques are outlined by Bauer et al. [7]. For unlabelled data, unsupervised techniques are employed to capture inherent characteristics, as discussed in Chander et al.'s work [8], using various clustering methods [9], [10], Gaussian modelling, or other approaches. For labelled data, supervised techniques are used, such as decision forests, in which multiple decision trees are combined to make predictions.

High-dimensional and effective algorithms such as support vector machines (SVM) are employed for classification tasks. Additionally, extremely randomized trees are used for classification, and self-organizing maps for exploratory data analysis and visualization. All of the mentioned methods rely on features obtained through feature engineering, which encompasses extracting and selecting relevant features. The advent of computerized algorithms has significantly eased the segmentation and classification of brain tumors. Deep learning, in particular, has had a remarkable impact on tumor detection, segmentation, and classification, and in recent years it has surpassed traditional machine learning methods in various applications. Specifically, to classify brain tumor grades, several researchers have fine-tuned pre-trained convolutional neural network (CNN) architectures to achieve superior performance, while others have advocated modern CNN architectures trained from scratch. Although the majority of CNNs used for this purpose have been 2D, some researchers have applied CNN architectures in a 3D context. In medical imaging, computerized systems primarily perform two critical tasks: classification and segmentation. Malignant and benign tumors exhibit distinct shape characteristics: malignant tumors often have irregular and spiculated shapes, while benign tumors tend to have smooth, oval, or round boundaries. Radiologists rely on these shape features for clinical diagnosis to categorize tumors as benign or malignant, and these properties play a vital role in both tumor classification and segmentation. Consequently, performing both tasks with a single neural network that shares relevant features between them holds significant promise and merits further exploration. This work introduces an approach to disease detection focused on simultaneously addressing the challenges of brain tumor detection and classification.

A tumor is an uncontrolled proliferation of cancer cells in any region of the body. Tumors vary widely in type, characteristics, and treatment approaches, and brain tumors are currently categorized into several distinct types [11]. Before delving into brain tumor segmentation methods, it is essential to introduce the MRI pre-processing operations, as they directly influence the quality of the segmentation results. Additionally, there are numerous challenges in engineering solutions for brain tumor treatment, as highlighted by Lyon et al. [12]. Malignant brain tumors are among the most devastating forms of cancer, with dismal survival rates that have remained largely unchanged over the past six decades. This stagnation is primarily attributed to the scarcity of therapies capable of navigating anatomical barriers without harming delicate neuronal tissue. Recent progress in cancer immunotherapies offers a promising frontier for treating these otherwise inoperable brain tumors; however, despite promising outcomes in other types of cancer, limited headway has been made for brain tumors. The human brain is a vital organ with exceptional anatomical, physiological, and immunological complexities that impede successful treatment. These unparalleled anatomical and physiological restrictions give rise to inherent technical challenges when designing therapeutic approaches that must function efficiently within the brain and its tumor immune microenvironment. To date, engineering strategies for implementing immunotherapies in the brain, beyond merely adapting existing extraneural immunotherapies, are lacking. Nevertheless, there are ample opportunities for innovation. Approaching therapeutic strategies from an engineering standpoint may allow a wider array of technologies to be harnessed for tailored treatments that address the specific biological constraints of brain cancer, ultimately facilitating improved brain cancer immunotherapies.

Pre-processing of raw MRI images is a fundamental step in achieving accurate brain tumor segmentation. Pre-processing operations such as de-noising, skull stripping, and intensity normalization directly influence the quality of brain tumor segmentation results [13]. Annotating medical images requires significant time and expertise, which makes labelling a large number of images particularly challenging. Several efforts have been undertaken to address these challenges; transfer learning models have proven to be a valuable choice, especially when labelled training data are limited [14]. Reducing human error and lowering mortality rates relies on the development of accurate, automated classification methods. To this end, automated tumor identification systems based on deep learning have emerged, aimed at reducing the time and effort radiologists spend on brain tumor analysis using MRI. Recent studies indicate that automated image analysis, complemented by clinical assessment, holds promise as a way to save time and deliver dependable results. Furthermore, these automated assessments can play a pivotal role in the therapeutic management of brain tumors by relieving doctors of the burden of manually describing tumor growth. In essence, computer algorithms can offer robust, quantitative estimates of tumor characteristics [15], [16]. Brain tumors are a noteworthy medical issue, ranked as the tenth most common cause of death in the United States. It is estimated that approximately 700,000 individuals are afflicted by brain tumors, with 80 percent categorized as benign and 20 percent as malignant [17].

The challenges in this domain arise from substantial variation in brain tumor attributes, including size, shape, and intensity, even within the same tumor category, along with resemblances to manifestations of other diseases. Misclassifying a brain tumor can have severe consequences, diminishing a patient's chances of survival. As a result, there has been growing interest in automated image processing technologies that address the limitations of manual diagnosis [18], [19]. Many researchers have explored algorithms for the identification and categorization of brain tumors, with a strong emphasis on achieving high performance and minimizing errors.
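To make the intensity-normalization step mentioned above concrete, the following is a minimal, library-free Python sketch, not the pre-processing pipeline used in this work. It assumes a 2D grayscale slice stored as a list of rows and, when no mask is given, treats non-zero pixels as brain tissue (as is typical after skull stripping); the function names are hypothetical.

```python
from statistics import mean, pstdev


def minmax_normalize(image):
    """Rescale a 2D grayscale slice (list of rows) to the [0, 1] range."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant slice: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    return [[(v - lo) / (hi - lo) for v in row] for row in image]


def zscore_normalize(image, mask=None):
    """Z-score normalize using statistics of foreground (brain) pixels only.

    `mask` is an optional same-shaped boolean map (True = brain tissue),
    e.g. the output of a skull-stripping step. If omitted, non-zero pixels
    are assumed to be foreground. Background pixels are set to 0.0.
    """
    if mask is None:
        mask = [[v != 0 for v in row] for row in image]
    fg = [v for row, mrow in zip(image, mask) for v, m in zip(row, mrow) if m]
    mu = mean(fg)
    sigma = pstdev(fg) or 1.0  # guard against a constant foreground
    return [[(v - mu) / sigma if m else 0.0 for v, m in zip(row, mrow)]
            for row, mrow in zip(image, mask)]


slice_ = [[0, 10, 20], [30, 40, 0]]
print(minmax_normalize(slice_))  # [[0.0, 0.25, 0.5], [0.75, 1.0, 0.0]]
print(zscore_normalize(slice_))
```

Computing the z-score statistics over brain pixels only keeps the large zero-valued background from dominating the mean and standard deviation.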

The work in [20] proposes an enhanced model for classifying meningioma, glioma, and pituitary tumors, thereby improving precision in brain tumor detection. Trained on a dataset of 5,712 images, the model achieves an accuracy of 99% on both the training and validation datasets, with a focus on precision. Techniques such as data augmentation, transfer learning with ResNet50, and regularization ensure stability and generalizability.

The works in [21] and [22] address three distinct classes: meningioma, glioma, and pituitary tumors. A mask alignment technique is used for precise segmentation of brain tumor regions.

The work in [23] addresses the problem of identifying and categorizing brain tumors in difficult cases using deep learning (DL) methods. A Dilated Convolution and YOLOv8 Feature Extraction Network (DC-YOLOv8FEN) is proposed to improve tumor detection accuracy. In the reported experiments, the method achieved a precision of 95.2%, a recall of 90.5%, an accuracy of 99.5%, and an F1-score of 96.5%.

The paper in [24] uses different traditional and hybrid ML models to classify brain tumor images without any human intervention. In addition, 16 different transfer learning models were analysed to identify the best neural-network-based transfer learning model for brain tumor classification. Finally, using different state-of-the-art techniques, a stacked classifier was proposed that outperforms all the other developed models.

In [25], a deep convolutional neural network (CNN) based on EfficientNet-B0 is fine-tuned with additional proposed layers to efficiently classify and detect brain tumor images. Image enhancement techniques apply various filters to improve image quality, and data augmentation is used to increase the number of training samples. The fine-tuned EfficientNet-B0 outperforms other CNN models, achieving the highest classification and detection accuracy of 98.87%.


The work in [26] proposes a dual-module method to improve the accuracy of brain tumor detection. The first module, named the Image Enhancement Technique, combines machine learning and imaging strategies, including adaptive Wiener filtering and neural networks. The second module uses Support Vector Machines (SVM) to perform tumor segmentation and classification. Applied to various types of brain tumors, including meningiomas and pituitary tumors, the model exhibited significant improvements in contrast and classification efficiency, achieving an average sensitivity and specificity of 0.991, an accuracy of 0.989, and a Dice similarity coefficient (DSC) of 0.981.

The authors of [27] present a novel brain tumor detection framework, the Patch-Based Vision Transformer (PBVit). The work in [60] proposes a novel framework that integrates fuzzy-logic-based segmentation with deep learning (DL) techniques to enhance brain tumor detection and classification in magnetic resonance imaging (MRI) scans.

| Ref. | Algorithm Used | Augmentation Techniques |
|---|---|---|
| [20] | ResNet50 | A range of transformations, including rotation, shifting, shearing, zooming, and horizontal flipping. |
| [21] | YOLOv7 | Converting .mat files to .png files; extracting polygonal segmentation coordinates. |
| [22] | 3D-CNN | Pre-processing techniques, including filtering, intensity correction, and skull stripping, used to maintain the original visual characteristics. |
| [23] | YOLOv8 | A vision transformer block maximizes the feature map, and Self Shuffle Attention (SSA) improves the feature extraction network (FEN). |
| [24] | CNN, SVM, RF, DT, NB, and KNN | Black portions of each image are removed; contours are identified in the top, bottom, left, and right directions based on the presence of black regions. |
| [25] | EfficientNet-B0 | Albumentations is used to enlarge the dataset by creating new images via transformations such as random rotation (90°, 180°, 270°), horizontal and vertical flips, and transposition. |
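The geometric augmentations listed in the table (90°, 180°, and 270° rotations, horizontal and vertical flips, and transposition) are all label-preserving symmetries of a square image. The plain-Python sketch below illustrates them on a 2D list; it is not the Albumentations or Keras API used by the cited works, and the helper names are our own.

```python
import random


def rot90(img):
    """Rotate a 2D image (list of rows) 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*img)][::-1]


def hflip(img):
    """Mirror left-right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror top-bottom."""
    return [list(row) for row in img[::-1]]


def transpose(img):
    """Swap rows and columns (reflection about the main diagonal)."""
    return [list(row) for row in zip(*img)]


AUGMENTATIONS = {
    "rot90": rot90,
    "rot180": lambda img: rot90(rot90(img)),
    "rot270": lambda img: rot90(rot90(rot90(img))),
    "hflip": hflip,
    "vflip": vflip,
    "transpose": transpose,
}


def random_augment(img, rng):
    """Apply one randomly chosen transform; the class label is unchanged."""
    name = rng.choice(sorted(AUGMENTATIONS))
    return name, AUGMENTATIONS[name](img)


img = [[1, 2], [3, 4]]
print(random_augment(img, random.Random(0)))
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible across runs.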

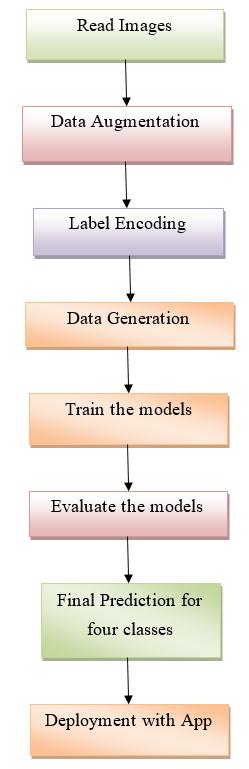

The architecture of the proposed model is shown in Figure 3.1.

Figure 3.1: Proposed work steps.

After data augmentation, we train the model using pre-trained CNN models such as VGG16, ResNet50, InceptionV3, DenseNet121, DenseNet201, and ViT. All of these models are pre-trained on the large ImageNet dataset. Each model is described below.

A. VGG16

VGG16 is one of the earliest deep convolutional networks to demonstrate the power of depth in CNNs. It consists of 13 convolutional layers followed by 3 fully connected layers, for a total of 16 weight layers.

Architecture Highlights:

Uses small (3×3) convolution filters throughout the network

Stacks multiple convolution layers before pooling to capture complex patterns

Simple and uniform design, which makes it easy to understand and fine-tune

Strengths:

Performs well on datasets with clean, well-aligned images

Easy to modify and interpret layer-wise

Limitations:

Very large model (138 million parameters), making it memory-intensive

Training from scratch is impractical for small datasets
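The 138 million figure can be checked by counting weights and biases layer by layer. The sketch below assumes the standard ImageNet configuration (224×224×3 input, 3×3 convolutions, and a 1000-class head); it shows that most of the parameters sit in the three fully connected layers, which is why transfer-learning setups typically discard that head and attach a small classifier.

```python
def conv_params(c_in, c_out, k=3):
    """Weights plus biases of a k x k convolution."""
    return k * k * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


# (input, output) channels of the 13 convolutional layers, block by block
VGG16_CONVS = [(3, 64), (64, 64),
               (64, 128), (128, 128),
               (128, 256), (256, 256), (256, 256),
               (256, 512), (512, 512), (512, 512),
               (512, 512), (512, 512), (512, 512)]
# Five 2x2 poolings shrink a 224x224 input to a 7x7x512 map before the head
VGG16_FCS = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]

conv_total = sum(conv_params(i, o) for i, o in VGG16_CONVS)
fc_total = sum(dense_params(i, o) for i, o in VGG16_FCS)
total = conv_total + fc_total
print(f"conv: {conv_total:,}  fc: {fc_total:,}  total: {total:,}")
# conv: 14,714,688  fc: 123,642,856  total: 138,357,544
print(f"share in fully connected layers: {fc_total / total:.1%}")  # 89.4%
```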

B. ResNet50 (Residual Networks)

ResNet50 relies on residual learning, the idea introduced with the ResNet family: instead of learning the target mapping directly, the model learns the residual (difference) between the input and the output. This helps in training much deeper networks while mitigating the vanishing-gradient problem.

Architecture Highlights:

50 layers deep, using residual blocks

Each block uses skip connections that bypass a few layers

Facilitates very deep network construction without degradation

Strengths:

Performs very well on large-scale tasks

Avoids the accuracy degradation seen in very deep plain networks.

Limitations:

Slightly more complex than models like VGG

Can underperform on smaller datasets if not carefully regularized.
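Why identity shortcuts help can be illustrated with a deliberately simplified scalar model (an assumption for illustration only: each layer is linear with a single weight w, ignoring ReLU and batch normalization). By the chain rule, the gradient through a stack of plain layers is the product of the per-layer derivatives w, whereas each residual block y = x + F(x) contributes 1 + w, so the signal does not collapse toward zero.

```python
def plain_gradient(w, depth):
    """d(output)/d(input) for `depth` stacked plain layers y = w * x."""
    grad = 1.0
    for _ in range(depth):
        grad *= w  # chain rule: multiply by each layer's derivative
    return grad


def residual_gradient(w, depth):
    """Same layers wrapped as residual blocks y = x + w * x."""
    grad = 1.0
    for _ in range(depth):
        grad *= 1.0 + w  # identity shortcut contributes the constant 1
    return grad


for depth in (10, 50):
    print(depth, plain_gradient(0.01, depth), residual_gradient(0.01, depth))
```

With w = 0.01 and 50 layers, the plain stack's gradient is about 1e-100, while the residual stack's stays close to 1.6.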

C. EfficientNetB0

EfficientNet introduces a scaling approach that uniformly balances network depth, width, and input resolution using a compound coefficient. EfficientNetB0 is the smallest variant and was created using neural architecture search (NAS).

Architecture Highlights:

Built using Mobile Inverted Bottleneck Convolution (MBConv) blocks

Optimized for both performance and efficiency

Uses Swish activation instead of ReLU.

Strengths:

Very lightweight (faster and smaller)

High accuracy-to-parameter ratio

Energy-efficient, well suited to mobile and edge devices.

Limitations:

Lower layers may not generalize well if medical images differ significantly from ImageNet

Might require fine-tuning more layers for best performance on medical tasks.
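The compound scaling rule can be written as depth d = α^φ, width w = β^φ, and resolution r = γ^φ, with α·β²·γ² ≈ 2 so that each unit increase of φ roughly doubles FLOPs. The sketch below uses the constants α = 1.2, β = 1.1, γ = 1.15 reported for EfficientNet-B0 by Tan and Le; the released B1 to B7 models use hand-rounded resolutions (e.g., 240 for B1), so these values are idealized.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution bases


def compound_scale(phi, base_resolution=224):
    """Idealized multipliers for compound coefficient `phi` (B0 is phi = 0)."""
    depth_mult = ALPHA ** phi
    width_mult = BETA ** phi
    resolution = round(base_resolution * GAMMA ** phi)
    return depth_mult, width_mult, resolution


# FLOPs scale roughly with depth * width^2 * resolution^2
flops_growth_per_step = ALPHA * BETA ** 2 * GAMMA ** 2

for phi in range(4):
    print(phi, compound_scale(phi))
print(f"FLOPs growth per unit phi: {flops_growth_per_step:.3f}")  # 1.920
```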

D. InceptionV3

InceptionV3 builds on the original Inception architecture, which introduced the idea of multi-scale processing within the same layer. It uses several types of filters in parallel (1×1, 3×3, 5×5) to capture both local and global features.

Architecture Highlights:

Incorporates factorized convolutions and auxiliary classifiers

Reduces computation using asymmetric convolutions (e.g., 1×7 followed by 7×1)

Deeper and more efficient than earlier versions

Strengths:

Excels at extracting features at multiple scales

Performs well on varied or complex medical images

Limitations:

Complex structure makes it harder to modify

Requires more careful preprocessing
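The savings from factorized and asymmetric convolutions follow directly from counting kernel weights. The sketch below assumes equal input and output channel counts (C = 192 is an arbitrary illustrative value) and ignores biases; in both comparisons the receptive field is preserved.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Number of kernel weights in a kh x kw convolution (biases ignored)."""
    return kh * kw * c_in * c_out


C = 192  # illustrative channel count, same for input and output

full_7x7 = conv_weights(7, 7, C, C)
asym_1x7_7x1 = conv_weights(1, 7, C, C) + conv_weights(7, 1, C, C)

full_5x5 = conv_weights(5, 5, C, C)
two_3x3 = 2 * conv_weights(3, 3, C, C)  # same 5x5 receptive field

print(f"1x7 + 7x1 uses {asym_1x7_7x1 / full_7x7:.0%} of a 7x7 conv's weights")  # 29%
print(f"two 3x3 use {two_3x3 / full_5x5:.0%} of a 5x5 conv's weights")  # 72%
```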

E. DenseNet121

DenseNet (Dense Convolutional Network) connects each layer to every other layer in a feed-forward fashion within a dense block. That is, the l-th layer receives the feature maps of all l − 1 preceding layers, enhancing information flow and gradient propagation.

Architecture Highlights:

121 layers with dense blocks and transition layers

Efficient feature reuse, with no need to relearn redundant features

Smaller model size than ResNet for similar accuracy

Strengths:

Reduces vanishing gradients and improves feature propagation

Uses fewer parameters than similar deep architectures

Limitations:

Concatenation of many layers can increase GPU memory usage

Complex skip connections may be harder to debug.
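Within one dense block, the l-th layer sees the block input (k0 channels) concatenated with the k feature maps produced by each of the l − 1 earlier layers, so its input width is k0 + k(l − 1), where k is the growth rate; a block of L layers therefore has L(L + 1)/2 direct connections. The sketch below tracks these channel counts; k0 = 64 and k = 32 match the first block of DenseNet121, and the function names are illustrative.

```python
def dense_block_channels(k0, growth_rate, num_layers):
    """Input channels seen by each layer of a dense block, plus the block output."""
    inputs = [k0 + growth_rate * (l - 1) for l in range(1, num_layers + 1)]
    output = k0 + growth_rate * num_layers  # block input + all new maps, concatenated
    return inputs, output


def direct_connections(num_layers):
    """Layer l has l incoming links (block input + l - 1 earlier layers)."""
    return num_layers * (num_layers + 1) // 2


inputs, output = dense_block_channels(k0=64, growth_rate=32, num_layers=6)
print(inputs, output)         # [64, 96, 128, 160, 192, 224] 256
print(direct_connections(6))  # 21
```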

F. DenseNet201

DenseNet201 is a deeper and more powerful version of DenseNet121, featuring 201 layers. It maintains the same philosophy of densely connected blocks but captures even more nuanced features from input images.

Architecture Highlights:

Deepened version of DenseNet121

Maintains dense connections and transition layers

Extracts high-level features while retaining fine-grained spatial information

Strengths:

Achieves very high accuracy with moderate model size

Encourages feature reuse at all levels

Learns complex relationships even from limited training data

Limitations:

Training and inference may take longer due to model depth

High memory consumption compared to smaller DenseNet variants.

4.2 Proposed Algorithm

Step 1: Input Processing

Input Shape

Accepts input images, typically resized to 224×224×3.

Preprocessing

Normalize pixel values to the 0–1 range or apply the model-specific preprocessing function (e.g., tf.keras.applications.densenet.preprocess_input).
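To our understanding, Keras' DenseNet preprocess_input uses its "torch" mode: pixels are scaled to [0, 1] and then standardized per RGB channel with the ImageNet mean and standard deviation. The plain-Python sketch below mirrors that arithmetic so the two options in Step 1 can be compared; it is an illustration, not the Keras implementation, and the helper names are hypothetical.

```python
IMAGENET_MEAN = (0.485, 0.456, 0.406)  # per-channel RGB mean
IMAGENET_STD = (0.229, 0.224, 0.225)   # per-channel RGB standard deviation


def scale_01(pixel):
    """Option 1: plain rescaling of an 8-bit RGB pixel to [0, 1]."""
    return tuple(c / 255.0 for c in pixel)


def torch_style_normalize(pixel):
    """Option 2: rescale to [0, 1], then standardize with ImageNet statistics."""
    return tuple((c / 255.0 - m) / s
                 for c, m, s in zip(pixel, IMAGENET_MEAN, IMAGENET_STD))


def preprocess_image(image, fn):
    """Apply a per-pixel function to an image stored as rows of RGB tuples."""
    return [[fn(px) for px in row] for row in image]


img = [[(255, 0, 51), (124, 116, 104)]]
print(preprocess_image(img, scale_01))
print(preprocess_image(img, torch_style_normalize))
```

Whichever option is chosen, the same function must be applied at training and inference time, since a pre-trained backbone expects inputs on the scale it was trained with.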

Step 2: Initial Convolution and Pooling Layer

Apply a 7×7 convolution with stride 2 and padding to reduce spatial resolution.

Follow with a 3×3 max pooling operation (stride 2) to further downsample the feature map.
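The spatial sizes produced by Step 2 follow from the standard output-size formula floor((n + 2p − k)/s) + 1 for input size n, kernel k, stride s, and padding p. The sketch below assumes padding of 3 for the 7×7 convolution and 1 for the 3×3 pooling, as in the reference DenseNet design, and reproduces the 224 → 112 → 56 reduction.

```python
def conv_out(size, kernel, stride, padding=0):
    """Output size of a convolution or pooling window: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


n = 224
n = conv_out(n, kernel=7, stride=2, padding=3)  # 7x7 conv, stride 2
print("after 7x7 conv:", n)                      # 112
n = conv_out(n, kernel=3, stride=2, padding=1)  # 3x3 max pooling, stride 2
print("after max pooling:", n)                   # 56
```

The same formula gives the halving performed by each 2×2, stride-2 average pooling in the transition layers (56 → 28 → 14 → 7).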

Step 3: Enter Dense Block 1

Stack layers such that:

o Each new layer receives all previous feature maps as input via concatenation.

o Each layer contains:

Batch Normalization → ReLU Activation → 1×1 Convolution (bottleneck layer)

Batch Normalization → ReLU Activation → 3×3 Convolution (composite function)

Dense Block 1 consists of 6 such composite layers.

Step 4: Transition Layer 1

Reduce the feature map size and number of channels:

o Batch Normalization → 1×1 Convolution

o 2×2 Average Pooling (stride 2).

Step 5: Dense Block 2

A larger block with 12 composite layers.

Each layer takes input from all preceding layers within this block (dense connectivity).

Step 6: Transition Layer 2

Another compression step:

o Batch Normalization → 1×1 Convolution

o 2×2 Average Pooling.

Step 7: Dense Block 3

Even deeper: contains 48 layers.

Reuses features heavily via concatenation, which improves gradient flow and representation.

Step 8: Transition Layer 3

Same pattern:

o Batch Normalization → 1×1 Convolution

o 2×2 Average Pooling.

Step 9: Dense Block 4

Final dense block with 32 layers.

Maintains dense connections and continues expanding feature richness.
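Steps 2 to 9 fix the shape of the whole network. The following sketch traces the channel count and spatial size through the four dense blocks, assuming a growth rate of k = 32 and a transition compression factor of 0.5 (the values used by the reference DenseNet-BC models; the steps above do not state them). It also counts weight layers: one stem convolution, two convolutions per composite layer, one per transition layer, and the final dense layer, which yields 201 for the 6/12/48/32 configuration.

```python
GROWTH_RATE = 32   # k: feature maps added by each composite layer (assumed)
COMPRESSION = 0.5  # transition layers keep half of the channels (assumed)
CONFIGS = {"DenseNet121": (6, 12, 24, 16), "DenseNet201": (6, 12, 48, 32)}


def trace(block_config, stem_channels=64, spatial=56):
    """Return (stage, spatial size, channels) after each block and transition."""
    channels, stages = stem_channels, []
    for i, n_layers in enumerate(block_config, start=1):
        channels += n_layers * GROWTH_RATE  # dense concatenation
        stages.append((f"dense block {i}", spatial, channels))
        if i < len(block_config):           # no transition after the last block
            channels = int(channels * COMPRESSION)
            spatial //= 2                   # 2x2 average pooling, stride 2
            stages.append((f"transition {i}", spatial, channels))
    return stages


def weight_layers(block_config):
    """Stem conv + 2 convs per composite layer + transition convs + final dense."""
    return 1 + 2 * sum(block_config) + (len(block_config) - 1) + 1


for stage in trace(CONFIGS["DenseNet201"]):
    print(stage)
print({name: weight_layers(cfg) for name, cfg in CONFIGS.items()})
# {'DenseNet121': 121, 'DenseNet201': 201}
```

For DenseNet201 the final dense block outputs a 7×7 map with 1920 channels, which is what global average pooling in Step 10 reduces to a 1920-element vector.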

Step 10: Global Average Pooling

Instead of flattening, apply global average pooling to reduce each feature map to 1×1.

The output becomes a 1D vector representing the entire image.

Step 11: Fully Connected (Dense) Layer

Add a Dense layer with as many neurons as there are classes (e.g., 4 for the tumor classes).

Use Softmax activation to output class probabilities.
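Steps 10 and 11 reduce each feature map to its mean, apply a linear layer, and turn the resulting logits into probabilities. The sketch below spells out that arithmetic in plain Python with two tiny hypothetical feature maps and made-up weights for four classes; in the actual model the weights are learned and the pooled vector is much longer.

```python
import math


def global_average_pool(feature_maps):
    """Average each H x W feature map (list of rows) down to one number."""
    return [sum(map(sum, fmap)) / (len(fmap) * len(fmap[0])) for fmap in feature_maps]


def dense(x, weights, bias):
    """Fully connected layer: one weight row per output class."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits):
    """Convert logits to probabilities (max subtracted for numerical stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


maps = [[[1, 3], [5, 7]], [[0, 0], [0, 4]]]           # 2 channels, 2x2 each
pooled = global_average_pool(maps)                     # [4.0, 1.0]
W = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, 0.0]]  # hypothetical 4-class weights
probs = softmax(dense(pooled, W, bias=[0.0] * 4))
print(pooled, [round(p, 3) for p in probs])
```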

Step 12: Model Compilation and Training

Compile with:

o Loss function: categorical_crossentropy (one-hot labels) or sparse_categorical_crossentropy (integer labels)

o Optimizer: Adam or SGD

o Metrics: accuracy

Train on labeled MRI data using a generator or dataset pipeline.
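The two loss options in Step 12 compute the same quantity and differ only in the label format: categorical_crossentropy expects one-hot vectors, while sparse_categorical_crossentropy expects integer class indices. The sketch below shows this for a single sample with a hypothetical softmax output; it omits any numerical safeguards (such as clipping) that a framework implementation may apply.

```python
import math


def categorical_crossentropy(y_true_onehot, y_pred):
    """Cross-entropy with a one-hot target vector."""
    return -sum(t * math.log(p) for t, p in zip(y_true_onehot, y_pred) if t > 0)


def sparse_categorical_crossentropy(y_true_index, y_pred):
    """Same loss with the target given as an integer class index."""
    return -math.log(y_pred[y_true_index])


probs = [0.7, 0.1, 0.1, 0.1]  # hypothetical softmax output for 4 classes
print(categorical_crossentropy([1, 0, 0, 0], probs))  # about 0.357
print(sparse_categorical_crossentropy(0, probs))      # about 0.357
```

The sparse form avoids materializing one-hot vectors, which is convenient when labels are read directly from class-folder indices.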

Table 5.1 below shows the accuracy comparison of all models implemented in this work.

Table 5.1: Accuracy Score.

Figure 5.29: Accuracy Comparisons.

We evaluated multiple deep learning models for brain tumor classification and obtained high accuracy scores. DenseNet (Densely Connected Convolutional Network) is a deep learning architecture in which, within each dense block, every layer receives inputs from all previous layers and passes its output to all subsequent layers. It was introduced by Gao Huang et al. in the paper "Densely Connected Convolutional Networks" (CVPR 2017).

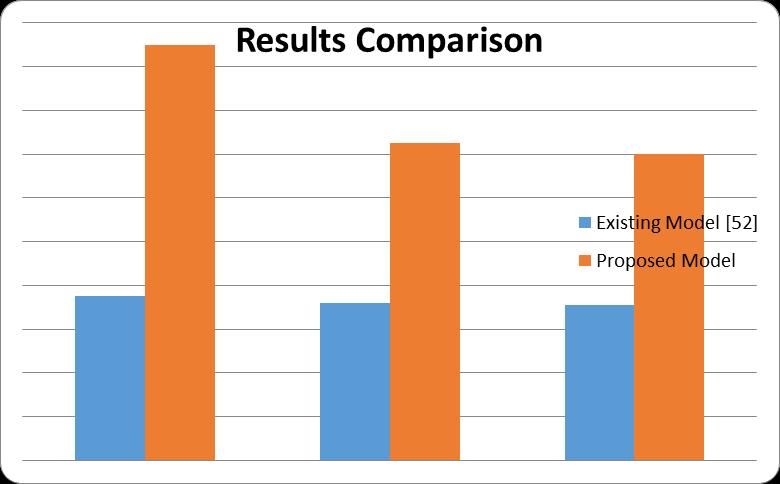

Finally, Table 5.2 compares the results of the existing work [52] with those of the proposed work on additional parameters.

Table 5.2: Accuracy Score with existing and proposed work.

Figure 5.30: Results Comparisons.

The proposed DenseNet201 outperforms ResNet50 [52] on all parameters.

We explored and implemented a comprehensive deep learning pipeline to detect brain tumors in MRI images using various pre-trained convolutional neural networks (CNNs). The key work completed is summarized below:

We successfully trained and evaluated multiple pretrained CNN models including:

o VGG16

o ResNet50

o InceptionV3

o EfficientNetB0

o DenseNet121

o DenseNet201

Among these, DenseNet201 achieved the highest accuracy of 99.24%, making it the most effective model for this classification task.

5.1

We structured the dataset with separate Training and Testing folders.

We used ImageDataGenerator for real-time data augmentation (rotation, zoom, flips), which helped improve model robustness.

Image paths and labels were manually loaded, shuffled, and preprocessed.

Models were evaluated using:

o Accuracy

o Confusion Matrix

o Classification Report

A bar chart comparing model accuracies was created to visualize performance across architectures.
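The evaluation quantities listed above can all be derived from the confusion matrix. The following sketch computes it, along with overall accuracy and per-class precision, recall, and F1 (the columns of a typical classification report), from integer class labels; it is a standalone illustration rather than the scikit-learn functions, and the labels in the example are made up.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracy(cm):
    """Fraction of samples on the diagonal (correct predictions)."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))


def per_class_report(cm):
    """Per-class (precision, recall, F1, support), as in a classification report."""
    n = len(cm)
    report = {}
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, but wrong
        fn = sum(cm[c]) - tp                       # truly c, but missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[c] = (precision, recall, f1, sum(cm[c]))
    return report


# Made-up labels for three hypothetical classes (0, 1, 2)
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(cm)            # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(accuracy(cm))  # 0.6666666666666666
for c, (p, r, f1, support) in per_class_report(cm).items():
    print(f"class {c}: precision={p:.2f} recall={r:.2f} f1={f1:.2f} support={support}")
```

Per-class recall is worth reporting alongside accuracy, because a missed tumor (a false negative) is usually costlier than a false alarm.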

[1] M. J. Lakshmi and S. N. Rao, "Brain tumor magnetic resonance image classification: A deep learning approach," Soft Comput., vol. 26, no. 13, pp. 6245–6253, Jul. 2022, doi: 10.1007/s00500-022-07163-z.

[2] W. Jun and Z. Liyuan, "Brain tumor classification based on attention guided deep learning model," Int. J. Comput. Intell. Syst., vol. 15, no. 1, p. 35, Dec. 2022, doi: 10.1007/s44196-022-00090-9.

[3] A. Rehman, S. Naz, M. I. Razzak, F. Akram, and M. Imran, "A deep learning-based framework for automatic brain tumors classification using transfer learning," Circuits, Syst., Signal Process., vol. 39, no. 2, pp. 757–775, Feb. 2020, doi: 10.1007/s00034-019-01246-3.

[4] X. Xu, X. Huang, J. Sun, J. Chen, G. Wu, Y. Yao, N. Zhou, S. Wang, and L. Sun, "3D-stacked multistage inertial microfluidic chip for high throughput enrichment of circulating tumor cells," Cyborg Bionic Syst., vol. 2022, Jan. 2022, Art. no. 9829287.

[5] M. Soltaninejad, G. Yang, T. Lambrou, N. Allinson, T. L. Jones, T. R. Barrick, F. A. Howe, and X. Ye, "Supervised learning based multimodal MRI brain tumour segmentation using texture features from supervoxels," Comput. Methods Programs Biomed., vol. 157, pp. 69–84, Apr. 2018.


[6] J. Pipitone, M. T. M. Park, J. Winterburn, T. A. Lett, J. P. Lerch, J. C. Pruessner, M. Lepage, A. N. Voineskos, and M. M. Chakravarty, "Multi-atlas segmentation of the whole hippocampus and subfields using multiple automatically generated templates," NeuroImage, vol. 101, pp. 494–512, Nov. 2014.

[7] S. Bauer, C. Seiler, T. Bardyn, P. Buechler, and M. Reyes, "Atlas-based segmentation of brain tumor images using a Markov random field-based tumor growth model and non-rigid registration," in Proc. Annu. Int. Conf. IEEE Eng. Med. Biol., Aug. 2010, pp. 4080–4083.

[8] A. Chander, A. Chatterjee, and P. Siarry, "A new social and momentum component adaptive PSO algorithm for image segmentation," Expert Syst. Appl., vol. 38, no. 5, pp. 4998–5004, May 2011.

[9] S. Ji, B. Wei, Z. Yu, G. Yang, and Y. Yin, "A new multistage medical segmentation method based on superpixel and fuzzy clustering," Comput. Math. Methods Med., vol. 2014, no. 1, 2014.

[10] S. Saha and S. Bandyopadhyay, "MRI brain image segmentation by fuzzy symmetry-based genetic clustering technique," in Proc. IEEE Congr. Evol. Comput., Sep. 2007, pp. 4417–4424.

[11] P. Gupta, M. Shringirishi, and D. Singh, "Implementation of brain tumor segmentation in brain MR images using K-means clustering and fuzzy C-means algorithm," Int. J. Comput. Technol., vol. 5, no. 1, pp. 54–59, 2006. [Online]. Available: https://www.cirworld.com.

[12] J. G. Lyon, N. Mokarram, T. Saxena, S. L. Carroll, and R. V. Bellamkonda, "Engineering challenges for brain tumor immunotherapy," Adv. Drug Del. Rev., vol. 114, pp. 19–32, May 15, 2017, doi: 10.1016/j.addr.2017.06.006.

[13] J. Liu, M. Li, J. Wang, F. Wu, T. Liu, and Y. Pan, "A survey of MRI-based brain tumor segmentation methods," Tsinghua Sci. Technol., vol. 19, no. 6, pp. 578–595, Dec. 2014. [Online]. Available: https://www.abta.org.

[14] R. Tr, U. K. Lilhore, P. M, S. Simaiya, A. Kaur, and M. Hamdi, "Predictive analysis of heart diseases with machine learning approaches," Malaysian J. Comput. Sci., pp. 132–148, Mar. 2022, doi: 10.22452/mjcs.sp2022no1.10.

[15] U. Kosare, L. Bitla, S. Sahare, P. Dongre, S. Jogi, and S. Wasnik, "Automatic brain tumor detection and classification on MRI images using deep learning techniques," in Proc. IEEE 8th Int. Conf. Converg. Technol. (I2CT), Apr. 2023, pp. 1–3, doi: 10.1109/I2CT57861.2023.10126412.

[16] S. Simaiya, U. K. Lilhore, R. Walia, S. Chauhan, and A. Vajpayee, "An efficient brain tumour detection from MR images based on deep learning and transfer learning model," in Proc. Int. Conf. IoT, Commun. Autom. Technol. (ICICAT), Jun. 2023, doi: 10.1109/icicat57735.2023.10263702.

[17] M. L. Rahman, A. W. Reza, and S. I. Shabuj, "An Internet of Things-based automatic brain tumor detection system," Indonesian J. Electr. Eng. Comput. Sci., vol. 25, no. 1, p. 214, Jan. 2022, doi: 10.11591/ijeecs.v25.i1.pp214-222.

[18] W. Ayadi, W. Elhamzi, I. Charfi, and M. Atri, "Deep CNN for brain tumor classification," Neural Process. Lett., vol. 53, no. 1, pp. 671–700, Feb. 2021, doi: 10.1007/s11063-020-10398-2.

[19] M. Nazir, S. Shakil, and K. Khurshid, "Role of deep learning in brain tumor detection and classification (2015 to 2020): A review," Computerized Med. Imag. Graph., vol. 91, Jul. 2021, Art. no. 101940, doi: 10.1016/j.compmedimag.2021.101940.

[20] A. Younis et al., "Abnormal Brain Tumors Classification Using ResNet50 and Its Comprehensive Evaluation," IEEE Access, vol. 12, pp. 78843–78853, 2024, doi: 10.1109/ACCESS.2024.3403902.

[21] M. F. Almufareh, M. Imran, A. Khan, M. Humayun, and M. Asim, "Automated Brain Tumor Segmentation and Classification in MRI Using YOLO-Based Deep Learning," IEEE Access, vol. 12, pp. 16189–16207, 2024, doi: 10.1109/ACCESS.2024.3359418.

[22] S. Solanki, U. P. Singh, S. S. Chouhan, and S. Jain, "Brain Tumor Detection and Classification Using Intelligence Techniques: An Overview," IEEE Access, vol. 11, pp. 12870–12886, 2023, doi: 10.1109/ACCESS.2023.3242666.

[23] L. Annet Abraham, G. Palanisamy, and V. Goutham, "Dilated Convolution and YOLOv8 Feature Extraction Network: An Improved Method for MRI-Based Brain Tumor Detection," IEEE Access, vol. 13, pp. 27238–27256, 2025, doi: 10.1109/ACCESS.2025.3539924.

[24] M. S. Majib, M. M. Rahman, T. M. S. Sazzad, N. I. Khan, and S. K. Dey, "VGG-SCNet: A VGG Net-Based Deep Learning Framework for Brain Tumor Detection on MRI Images," IEEE Access, vol. 9, pp. 116942–116952, 2021, doi: 10.1109/ACCESS.2021.3105874.


[25] H. A. Shah, F. Saeed, S. Yun, J.-H. Park, A. Paul, and J.-M. Kang, "A Robust Approach for Brain Tumor Detection in Magnetic Resonance Images Using Finetuned EfficientNet," IEEE Access, vol. 10, pp. 65426–65438, 2022, doi: 10.1109/ACCESS.2022.3184113.

[26] A. A. Asiri, T. A. Soomro, A. A. Shah, G. Pogrebna, M. Irfan, and S. Alqahtani, "Optimized Brain Tumor Detection: A Dual-Module Approach for MRI Image Enhancement and Tumor Classification," IEEE Access, vol. 12, pp. 42868–42887, 2024, doi: 10.1109/ACCESS.2024.3379136.

[27] P. Chauhan et al., "PBVit: A Patch-Based Vision Transformer for Enhanced Brain Tumor Detection," IEEE Access, vol. 13, pp. 13015–13029, 2025, doi: 10.1109/ACCESS.2024.3521002.

2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008