International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 10 | Oct 2025 www.irjet.net p-ISSN: 2395-0072

Shubham B. Khaire1, Amol M. Chinchole2, Jayesh M. Borse3, Prafulla P. Chaudhari4

1Mr. Shubham Bhausaheb Khaire, Student, MCA, MET Institute of Management, Nashik, Maharashtra, India

2Mr. Amol Madhavrao Chinchole, Student, MCA, MET Institute of Management, Nashik, Maharashtra, India

3Mr. Jayesh Mohanlal Borse, Student, MCA, MET Institute of Management, Nashik, Maharashtra, India

4Mr. Prafulla Prakash Chaudhari, Assistant Professor, Department, MCA, MET Institute of Management, Nashik, Maharashtra

Abstract - With the rapid evolution of artificial intelligence (AI) and its integration into mobile technologies, human-computer interaction has undergone a transformative shift. Speech Emotion Recognition (SER) enables automatic identification of a speaker's emotional state from voice signals, offering significant potential for mental health interventions, personalized feedback, and enhanced user experiences. Accurately detecting emotions such as happiness, sadness, and anger is critical for addressing psychological well-being in real-time applications. While deep learning models have demonstrated high accuracy, traditional machine learning methods, such as Random Forest, provide robust, interpretable, and computationally efficient alternatives, particularly for resource-constrained mobile devices. This paper proposes a novel real-time, mobile-optimized SER system that leverages Mel-Frequency Cepstral Coefficients (MFCCs) for feature extraction and a Random Forest Classifier to recognize happiness, sadness, or anger. Upon emotion detection, the system delivers motivational verses from the Bhagavad Gita through a Text-to-Speech (TTS) engine, creating a culturally resonant mentoring experience. Trained on a combined dataset of CREMA-D, RAVDESS, and EmoDB, the model achieves 78–85% accuracy under experimental conditions. By integrating spiritual counseling with AI-driven emotion recognition, this system pioneers a culturally relevant approach to digital mental wellness, offering scalable, accessible, and empathetic support for emotional health in diverse cultural contexts. Furthermore, the incorporation of ancient wisdom like the Bhagavad Gita not only enriches the feedback mechanism but also promotes long-term emotional resilience by encouraging users to reflect on timeless philosophical principles amid modern stressors.

Key Words: Speech Emotion Recognition, Random Forest Classifier, Mel-Frequency Cepstral Coefficients, Bhagavad Gita, Real-Time Systems, Mobile Applications

NOMENCLATURE

List of Abbreviations

AI Artificial Intelligence

SER Speech Emotion Recognition

MFCC Mel-Frequency Cepstral Coefficients

TTS Text-to-Speech

CNN Convolutional Neural Network

RNN Recurrent Neural Network

LSTM Long Short-Term Memory

SVM Support Vector Machine

GMM Gaussian Mixture Model

HMM Hidden Markov Model

CREMA-D Crowd-sourced Emotional Multimodal Actors Dataset

RAVDESS Ryerson Audio-Visual Database of Emotional Speech and Song

EmoDB Berlin Emotional Speech Database

API Application Programming Interface

SPA Single-Page Application

CSS Cascading Style Sheets

MP3 MPEG-1 Audio Layer 3

The rapid adoption of smartphones and advancements in voice-based technologies have revolutionized human-computer interaction, enabling seamless and intuitive communication. Real-time detection of a speaker’s emotional state through voice signals has emerged as a critical research area, with applications in mental health monitoring, timely emotional support, and personalized user experiences [1]. Speech Emotion Recognition (SER) is increasingly vital in domains such as virtual assistants, customer support, educational tools, and healthcare systems, where understanding emotional cues can enhance responsiveness and user satisfaction [2]. Real-time emotion detection addresses key aspects of psychological well-being, such as reducing stress, managing anger, and uplifting mood, thereby supporting mental health in an increasingly digital world [3].

The societal need for accessible mental health solutions has grown exponentially, particularly in culturally diverse regions where traditional counseling may not be widely available, affordable, or socially accepted due to stigma [14]. In such contexts, integrating spiritual or philosophical guidance, such as verses from the Bhagavad Gita (a sacred Hindu text offering profound insights on duty, self-control, and inner peace), provides a unique opportunity to deliver culturally sensitive emotional support [15]. This approach resonates with users who draw strength from spiritual traditions, bridging ancient wisdom with modern technology to foster holistic healing.

Early SER systems relied on manually designed acoustic features like pitch, energy, and Mel-Frequency Cepstral Coefficients (MFCCs), processed through classifiers such as Support Vector Machines (SVMs) and Gaussian Mixture Models (GMMs) [4]. While effective in controlled settings, these systems struggled with noisy environments and diverse mobile scenarios [5]. Ensemble methods, such as Random Forest, have since improved performance by combining multiple decision trees to enhance robustness and generalization [6]. However, most SER systems focus solely on emotion classification, lacking actionable or culturally meaningful feedback [7]. This study addresses this gap by proposing a real-time, mobile-compatible SER system that not only detects emotions but also delivers culturally inspired spiritual mentoring through Bhagavad Gita verses, fostering a holistic approach to emotional well-being. By doing so, the system empowers users to navigate emotional challenges with philosophical depth, potentially reducing reliance on clinical interventions and promoting self-reliance in mental health management [16].

Over the past two decades, Speech Emotion Recognition (SER) has evolved significantly, transitioning from handcrafted feature extraction to end-to-end deep learning approaches. Early methods extracted prosodic and spectral features, such as MFCCs, Linear Predictive Cepstral Coefficients (LPCCs), and Perceptual Linear Predictive Coefficients (PLPCs), and paired them with classifiers like SVMs, GMMs, and Hidden Markov Models (HMMs) [2]. These approaches performed well on controlled datasets but faltered in real-world scenarios due to background noise and inter-speaker variability [4]. The introduction of ensemble learning methods, such as Random Forest, addressed overfitting issues inherent in single decision trees, improving predictive accuracy and robustness [6]. More recently, deep learning models, including Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), have achieved state-of-the-art accuracy by capturing complex patterns from raw or lightly processed speech signals. However, their high computational cost and large model sizes pose challenges for real-time mobile applications [8].

The proliferation of mobile devices has driven research toward lightweight and efficient SER models suitable for on-device processing [1]. Techniques combining MFCC-based feature extraction with high-performance classifiers like Random Forest strike a balance between computational efficiency and accuracy, enabling real-time emotion detection without reliance on cloud resources [4]. Short speech segments (3–5 seconds) facilitate low-latency classification, ensuring smooth user interaction in mobile environments [9]. However, challenges remain, including limited processing power, battery constraints, and the need for robust performance under varying acoustic conditions, such as background noise or low-quality microphone inputs [12]. Recent advancements have explored edge computing to mitigate these issues, allowing SER systems to operate offline and preserve user privacy by keeping sensitive voice data on-device [17].

Cross-cultural SER presents unique challenges due to variations in emotional expression across languages, dialects, and cultural norms. For instance, emotional cues in speech may differ significantly between individualistic and collectivist societies, complicating model generalization [10, 14]. Few studies have explored culturally tailored feedback mechanisms, limiting the applicability of SER systems in diverse populations [14]. Addressing these challenges requires datasets that capture cross-cultural emotional expressions and feedback systems that resonate with specific cultural contexts, such as spiritual or philosophical guidance. Moreover, biases in training data (often skewed toward Western emotional expressions) can lead to reduced accuracy for non-Western users, highlighting the need for inclusive datasets and adaptive models that account for cultural nuances in tone, intonation, and linguistic patterns [13].

Most SER systems are limited to outputting emotion labels, lacking mechanisms for meaningful behavioral or motivational feedback [7]. While some researchers have proposed adaptive response systems that tailor outputs to user context, the integration of culturally significant content, such as spiritual or philosophical guidance, remains largely unexplored [11]. Emerging applications have begun to incorporate AI-driven chatbots that draw from spiritual texts for mental health support, providing users with reflective insights during moments of emotional distress [10, 15]. The proposed system’s use of Bhagavad Gita verses to provide emotional support represents a novel contribution, bridging AI-driven emotion detection with culturally resonant mental wellness strategies. This integration not only enhances user engagement but also encourages mindfulness and self-reflection, aligning with therapeutic practices that emphasize emotional regulation through philosophical contemplation [16].

Incorporating spiritual elements into AI systems raises important ethical questions, such as ensuring cultural sensitivity and avoiding appropriation of sacred texts. The Bhagavad Gita, with its teachings on karma, dharma, and detachment, offers a framework for ethical AI use in mental health, promoting balance and non-harm (ahimsa) [15]. This section explores how such integrations can be designed responsibly, with user consent for spiritual content and options for customization based on personal beliefs, ensuring the system serves as a supportive tool rather than a prescriptive authority [13]. Ethical AI design must also address privacy concerns and potential biases in emotion detection, particularly for diverse populations [18].

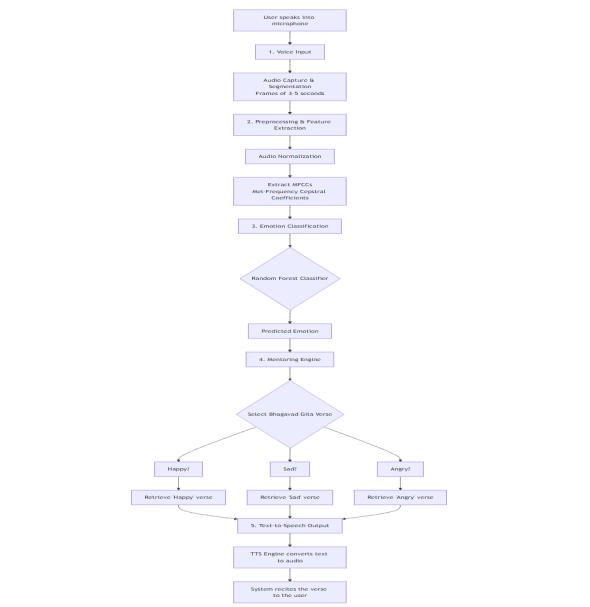

We present a mobile-ready SER architecture that detects emotions in real-time and delivers Bhagavad Gita-based mentoring to provide culturally meaningful emotional support. The system’s architecture is designed for low-latency operation and seamless user interaction, leveraging a modular pipeline to process audio input, classify emotions, and deliver personalized feedback. This modular design allows for easy updates, such as adding new emotions or verses, without overhauling the entire system [17]. The workflow is as follows:

1. Voice Input Module: Audio is captured via the device’s microphone and segmented into 3–5 second frames to ensure low-latency processing, suitable for modern smartphones [1]. The system employs real-time audio streaming to minimize delays, with a buffer to handle variable input lengths and an option for continuous monitoring in background mode for proactive emotional support [12].

2. Preprocessing and Feature Extraction: Audio undergoes amplitude normalization, noise reduction, and MFCC calculation using the Librosa library. We extract 40 MFCCs per segment (25 ms window, 10 ms hop length) to capture spectral information critical for emotion classification [5].

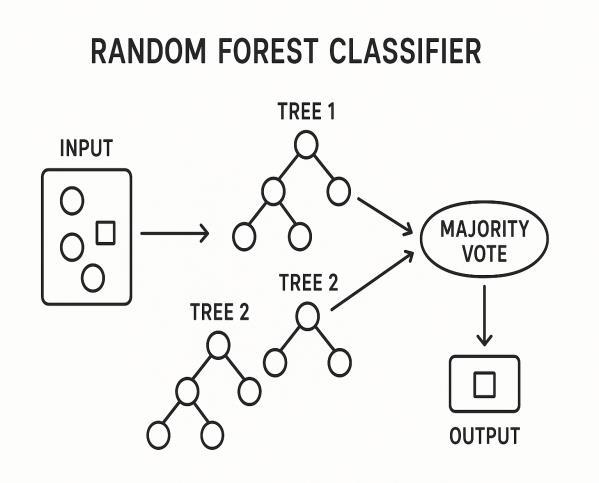

3. Emotion Classification: A Random Forest Classifier with 200 trees processes MFCC features, using majority voting to predict one of three emotions: happiness, sadness, or anger. The ensemble approach reduces overfitting and enhances robustness against noisy inputs [6]. The architecture of the classifier is shown in the accompanying diagram.

4. Mentoring Engine: Upon emotion detection, the system selects a Bhagavad Gita verse from a curated database, tailored to the detected emotion:

o Anger: Verses promoting self-control and mental clarity (e.g., “From anger arises delusion; from delusion, bewilderment of memory…” or “One who sees inaction in action and action in inaction, is intelligent among men…”).

o Sadness: Verses encouraging resilience and the immortal nature of the soul (e.g., “Why do you worry unnecessarily? Whom do you fear? Who can kill you? The soul is neither born nor dies.”).

o Happiness: Verses focusing on gratitude and mindfulness to maintain a state of equanimity.

5. Text-to-Speech Output: The selected verse is vocalized using Google TTS (gTTS), providing an empathetic and natural-sounding response [3]. Users can customize the database by adding personal favorite verses or selecting multiple translations for linguistic preference [10, 15].
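As a rough illustration of step 2, the sketch below shows how the 25 ms window and 10 ms hop translate into sample counts and how 40 MFCCs per segment might be obtained with Librosa. The 16 kHz sampling rate used in the example, the power-of-two FFT size, and the mean-over-time pooling into a single 40-dimensional vector are assumptions made for illustration; the paper does not specify them.

```python
def frame_params(sr, win_ms=25.0, hop_ms=10.0):
    """Convert window and hop durations (ms) into sample counts."""
    win_length = int(round(sr * win_ms / 1000.0))
    hop_length = int(round(sr * hop_ms / 1000.0))
    return win_length, hop_length


def count_frames(n_samples, win_length, hop_length):
    """Number of full analysis windows that fit when no padding is applied."""
    if n_samples < win_length:
        return 0
    return 1 + (n_samples - win_length) // hop_length


def extract_mfcc_vector(y, sr, n_mfcc=40):
    """Return one n_mfcc-dimensional vector for a segment (mean over frames)."""
    import librosa  # imported lazily; only needed for the actual extraction

    win_length, hop_length = frame_params(sr)
    # Assumption: use the smallest power of two that can hold one window.
    n_fft = 1 << (win_length - 1).bit_length()
    mfcc = librosa.feature.mfcc(
        y=y, sr=sr, n_mfcc=n_mfcc,
        n_fft=n_fft, win_length=win_length, hop_length=hop_length,
    )  # shape: (n_mfcc, n_frames)
    # Assumption: pool over time so every segment yields a fixed-size input.
    return mfcc.mean(axis=1)


# At 16 kHz, a 25 ms window spans 400 samples and a 10 ms hop spans 160.
win, hop = frame_params(16000)
frames_in_3s = count_frames(3 * 16000, win, hop)
```

Pooling over time discards temporal dynamics but keeps the feature vector small, which suits a tree-based classifier on a mobile device; other summaries (for example, adding the per-coefficient standard deviation) would be equally plausible.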

Datasets: The model is trained on a combined dataset of CREMA-D (Crowd-sourced Emotional Multimodal Actors Dataset, containing 7,442 clips from 91 actors expressing various emotions), RAVDESS (Ryerson Audio-Visual Database of Emotional Speech and Song, with 1,440 files from 24 actors), and EmoDB (Berlin Database of Emotional Speech, featuring 535 utterances in German). Focusing on the “happy,” “sad,” and “angry” classes, the combined dataset provides approximately 3,500 samples per emotion after balancing. An 80:20 train-test split with stratification ensures balanced emotion distribution across sets, mitigating class imbalance issues common in emotional datasets [16].
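To make the stratified 80:20 split concrete, the following minimal sketch partitions sample indices class by class so that each emotion keeps the same proportion in both sets. The function name, the seed, and the toy label list are hypothetical and stand in for the real combined corpus.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=42):
    """Return (train_idx, test_idx) with per-class proportions preserved."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Hypothetical balanced label list: 50 clips per emotion.
labels = ["happy"] * 50 + ["sad"] * 50 + ["angry"] * 50
train_idx, test_idx = stratified_split(labels)
```

In practice, scikit-learn's `train_test_split` with its `stratify` argument provides the same behavior; the hand-written version is shown only to expose the mechanism.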

Preprocessing: Audio is standardized via amplitude normalization to [-1, 1], noise reduction using spectral gating, and framing to handle variable lengths. Each sample yields a matrix of 40 MFCC coefficients as input features [5]. To further enhance quality, we apply voice activity detection (VAD) to remove silence segments, focusing computation on emotionally expressive portions of speech.
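Two of these preprocessing steps can be sketched with NumPy alone. The example below peak-normalizes a waveform to [-1, 1] and applies a simple energy-threshold VAD; the frame length of 400 samples and the -35 dB threshold are illustrative assumptions, and the energy rule is a stand-in for whichever VAD the system actually uses. Spectral gating is not covered here.

```python
import numpy as np


def peak_normalize(y, eps=1e-9):
    """Scale the waveform so its largest absolute sample is 1.0."""
    y = np.asarray(y, dtype=np.float64)
    peak = np.max(np.abs(y)) if y.size else 0.0
    return y / peak if peak > eps else y


def energy_vad(y, frame_len=400, threshold_db=-35.0):
    """Keep only frames whose RMS level (dB re full scale) exceeds the threshold."""
    y = np.asarray(y, dtype=np.float64)
    n_frames = len(y) // frame_len
    if n_frames == 0:
        return y[:0]
    frames = y[: n_frames * frame_len].reshape(n_frames, frame_len)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    rms_db = 20.0 * np.log10(np.maximum(rms, 1e-10))
    return frames[rms_db > threshold_db].reshape(-1)


# Synthetic check: 0.5 s of silence followed by 0.5 s of a 220 Hz tone at 16 kHz.
sr = 16000
t = np.arange(sr // 2) / sr
signal = np.concatenate([np.zeros(sr // 2), 0.3 * np.sin(2 * np.pi * 220 * t)])
normalized = peak_normalize(signal)
voiced = energy_vad(normalized)
```

Running VAD after normalization means the threshold is relative to the loudest sample of each clip, so quiet recordings are not discarded wholesale.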

Data Augmentation: To improve robustness and generalization, we apply techniques including noise injection (adding Gaussian noise at SNR levels of 5–15 dB), time shifting (by 0.1–0.3 seconds), pitch shifting (±2 semitones), and speed perturbation (0.9–1.1x), simulating diverse real-world acoustic conditions like varying environments or device microphones [1]. This augmentation triples the effective dataset size, helping the model adapt to noisy or distorted inputs encountered in mobile use.
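The noise-injection and time-shifting steps can be illustrated with a short NumPy sketch. The function names are hypothetical, and the shift is implemented here as a zero-padded delay, which is one of several reasonable interpretations. Pitch shifting and speed perturbation are omitted; Librosa offers `librosa.effects.pitch_shift` and `librosa.effects.time_stretch` for transforms of that kind.

```python
import numpy as np


def add_noise_at_snr(y, snr_db, rng):
    """Add white Gaussian noise scaled so the mixture has the requested SNR."""
    noise = rng.standard_normal(len(y))
    signal_power = np.mean(y ** 2)
    noise_power = np.mean(noise ** 2)
    target_noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    return y + noise * np.sqrt(target_noise_power / noise_power)


def time_shift(y, sr, shift_s):
    """Delay the signal by shift_s seconds, zero-padding the start."""
    n = min(int(round(shift_s * sr)), len(y))
    if n <= 0:
        return y.copy()
    return np.concatenate([np.zeros(n), y[: len(y) - n]])


def augment(y, sr, rng):
    """Draw one random noisy, shifted variant within the ranges quoted above."""
    snr_db = rng.uniform(5.0, 15.0)
    shift_s = rng.uniform(0.1, 0.3)
    return time_shift(add_noise_at_snr(y, snr_db, rng), sr, shift_s)


rng = np.random.default_rng(0)
sr = 16000
clean = np.sin(2 * np.pi * 220 * np.arange(sr) / sr)
noisy = add_noise_at_snr(clean, 10.0, rng)
shifted = time_shift(clean, sr, 0.1)
```

Scaling the noise by its measured power, rather than its nominal variance, makes the resulting SNR exact for each clip instead of correct only on average.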

Model Training: The Random Forest classifier is trained with 200 trees, using Gini impurity for splitting and a maximum depth of 15 to prevent overfitting. Hyperparameter tuning via grid search optimizes parameters like number of trees (100–300) and minimum samples per leaf (1–5), yielding:

Accuracy: 78–85%

F1-score: 77–84%

Precision: 76–83%

Recall: 75–82%
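For reference, the four metrics can be derived from a confusion matrix as sketched below. Macro averaging over the three classes is an assumption, since the averaging scheme is not stated, and the matrix is a made-up example rather than the study's results.

```python
def macro_metrics(confusion):
    """Compute accuracy and macro-averaged precision, recall, and F1.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))
        actual_k = sum(confusion[k])
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)
    return {
        "accuracy": correct / total if total else 0.0,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Hypothetical matrix for (happiness, sadness, anger), 100 test clips per class.
confusion = [
    [80, 10, 10],
    [10, 80, 10],
    [10, 10, 80],
]
metrics = macro_metrics(confusion)
```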

Training incorporates cross-validation (5-fold) to ensure generalizability, with early stopping if validation accuracy plateaus. The model is fine-tuned for mobile efficiency, achieving inference times under 0.5 seconds on mid-range smartphones [12].
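A training setup along these lines could look like the following scikit-learn sketch. It runs on synthetic 40-dimensional data standing in for MFCC vectors, so the scores it prints say nothing about the reported results; the grid values, the macro-F1 selection criterion, and the random seeds are assumptions chosen within the ranges quoted above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in for 40-dimensional MFCC vectors with three emotion classes.
X, y = make_classification(
    n_samples=300, n_features=40, n_informative=12,
    n_classes=3, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Grid over the two hyperparameters named in the text.
param_grid = {
    "n_estimators": [100, 200, 300],
    "min_samples_leaf": [1, 3, 5],
}
search = GridSearchCV(
    RandomForestClassifier(criterion="gini", max_depth=15, random_state=0),
    param_grid,
    cv=5,                # 5-fold cross-validation on the training split
    scoring="f1_macro",  # assumption: select by macro-averaged F1
)
search.fit(X_train, y_train)

y_pred = search.best_estimator_.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
macro_f1 = f1_score(y_test, y_pred, average="macro")
print("best params:", search.best_params_)
print("accuracy:", accuracy)
print("macro F1:", macro_f1)
```

Keeping the test split outside the grid search means the final scores are measured on data that played no part in choosing the hyperparameters.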

The complete application is engineered using a robust and synergistic technology stack, carefully selected to balance performance, a rich user experience, and the specific requirements of the emotion recognition and mentoring system.

Frontend: React.js. The user-facing component of the application is built on React.js, a modern JavaScript library for building user interfaces. Its component-based architecture was leveraged to create a modular and scalable design, where each part of the UI (e.g., the recording button, the emotion display, the verse recitation area) is a self-contained component. This approach ensures a highly responsive and dynamic user experience.

Backend: Python Flask. The server-side logic is powered by Python Flask, a lightweight micro-framework. Flask was chosen for its simplicity and flexibility, which allowed for rapid development of a custom RESTful API. The backend serves two primary functions:

1. ML Model Serving: It hosts the trained scikit-learn model and provides a dedicated endpoint (e.g., /api/predict) to handle incoming audio data for emotion inference.
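A minimal sketch of such an endpoint is given below. The form field name `audio`, the response keys, and the `extract_features` callable are hypothetical; only the `/api/predict` route comes from the description above. The model is assumed to expose scikit-learn's `predict_proba` and `classes_`.

```python
import io


def build_response(classes, probabilities):
    """Turn class labels and probabilities into the JSON payload for the client."""
    best = max(range(len(classes)), key=lambda i: probabilities[i])
    return {
        "emotion": str(classes[best]),
        "confidence": round(float(probabilities[best]), 3),
    }


def create_app(model, extract_features):
    """Build the Flask app; model and extract_features are supplied by the caller."""
    # Imported lazily so build_response stays usable without Flask installed.
    from flask import Flask, jsonify, request

    app = Flask(__name__)

    @app.route("/api/predict", methods=["POST"])
    def predict():
        upload = request.files.get("audio")  # hypothetical field name
        if upload is None:
            return jsonify({"error": "missing 'audio' file field"}), 400
        # extract_features is expected to return one 40-dimensional MFCC vector.
        features = extract_features(io.BytesIO(upload.read()))
        probabilities = model.predict_proba([features])[0]
        return jsonify(build_response(model.classes_, probabilities))

    return app
```

Passing the model and feature extractor into a factory keeps the route free of global state, which also makes it straightforward to substitute stubs when testing the endpoint.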

Machine Learning Core: scikit-learn & RandomForestClassifier. The heart of the system's intelligence is the RandomForestClassifier from the scikit-learn library. As an ensemble method, the Random Forest model constructs a multitude of decision trees during training and outputs the class that is the mode of the classes output by individual trees. This approach provides several key advantages for SER: it is highly robust to noisy features, less prone to overfitting than single decision trees, and offers a good balance between predictive accuracy and computational efficiency, making it well-suited for a feature-based classification task on resource-constrained devices [6].
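The "mode of the classes" rule amounts to a simple majority vote, sketched here with hypothetical per-tree outputs for a 200-tree forest.

```python
from collections import Counter


def majority_vote(tree_predictions):
    """Return the label predicted by the largest number of trees.

    Ties are broken in favor of the label that appears first in the input.
    """
    label, _count = Counter(tree_predictions).most_common(1)[0]
    return label


# Hypothetical outputs of 200 trees for a single audio segment.
votes = ["anger"] * 120 + ["sadness"] * 50 + ["happiness"] * 30
predicted = majority_vote(votes)
```

Note that scikit-learn's RandomForestClassifier combines trees by averaging their predicted class probabilities rather than counting hard votes; the two rules usually agree, but they can differ when individual trees are uncertain.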

Audio Processing Pipeline: A comprehensive audio processing pipeline ensures that raw voice input is transformed into a clean, usable format for the model.

o librosa: This powerful Python library is used for low-level audio analysis. It handles the critical task of extracting Mel-Frequency Cepstral Coefficients (MFCCs), which are a highly effective representation of speech for emotion recognition.

o pydub: This higher-level library simplifies audio manipulation tasks such as slicing and formatting. It provides an intuitive interface for working with audio files.

o ffmpeg: As the backbone for pydub, ffmpeg is an essential command-line tool that enables the processing of a wide variety of audio codecs and formats, ensuring the application can handle diverse user input.
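How pydub and ffmpeg might cooperate to turn an uploaded recording into classifier-ready segments is sketched below. The 4-second segment length (within the 3–5 second range used by the system), the rule for dropping short tails, and the 16 kHz mono target are assumptions made for illustration.

```python
def segment_bounds_ms(duration_ms, segment_ms=4000, min_ms=3000):
    """Split a recording into consecutive windows; drop a tail shorter than min_ms."""
    bounds = []
    start = 0
    while start < duration_ms:
        end = min(start + segment_ms, duration_ms)
        if end - start >= min_ms:
            bounds.append((start, end))
        start = end
    return bounds


def load_segments(path, target_sr=16000):
    """Decode any ffmpeg-supported file and return mono pydub segments."""
    from pydub import AudioSegment  # lazy import; needs ffmpeg on the PATH

    audio = AudioSegment.from_file(path).set_channels(1).set_frame_rate(target_sr)
    # pydub measures length and slices in milliseconds.
    return [audio[start:end] for start, end in segment_bounds_ms(len(audio))]


# A 10-second recording yields two full 4-second segments; the 2-second tail is dropped.
example_bounds = segment_bounds_ms(10_000)
```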

Dynamic Response Generation: gTTS. The interactive, spiritual mentoring component is made possible by gTTS (Google Text-to-Speech). The backend dynamically generates the text for the selected Bhagavad Gita verse, and the gTTS library then converts this text into a natural-sounding audio stream. This allows for a flexible and real-time response, as opposed to relying on a fixed, pre-recorded library of verses [3].
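The verse lookup and speech synthesis could be wired together roughly as follows. The dictionary layout, the function names, and the output file name are hypothetical; the verse texts are the examples quoted earlier in the paper, and the happiness entry is left empty because no example verse is given for it.

```python
import random

# Hypothetical curated database keyed by detected emotion.
VERSES = {
    "anger": [
        "From anger arises delusion; from delusion, bewilderment of memory…",
        "One who sees inaction in action and action in inaction, "
        "is intelligent among men…",
    ],
    "sadness": [
        "Why do you worry unnecessarily? Whom do you fear? Who can kill you? "
        "The soul is neither born nor dies.",
    ],
    "happiness": [],  # to be filled by the curator
}


def select_verse(emotion, rng=random):
    """Pick one curated verse for the emotion, or None if none is stored."""
    candidates = VERSES.get(emotion, [])
    return rng.choice(candidates) if candidates else None


def synthesize(text, out_path="verse.mp3", lang="en"):
    """Convert the verse to an MP3 file with gTTS (requires network access)."""
    from gtts import gTTS  # lazy import

    gTTS(text=text, lang=lang).save(out_path)
    return out_path


verse = select_verse("sadness")
```

Returning None for an emotion without curated verses lets the caller decide on a fallback, such as skipping the audio response, instead of speaking placeholder text.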

API Communication: Axios, a promise-based HTTP client, is used on the frontend to manage asynchronous communication with the Flask backend.

Hardware requirements include a smartphone with at least 2 GB RAM and a microphone, with optimizations for low-power modes to extend battery life during extended use. API responses are compressed to reduce latency over mobile networks, and the system supports offline mode by caching the model and verse database locally [17].

The proposed Random Forest-based SER system demonstrates strong performance, achieving F1-scores consistently above 77% across emotion classes. The confusion matrix reveals minimal misclassifications between distinct emotions (e.g., low confusion between happiness and anger), with data augmentation boosting robustness in noisy conditions [9]. Compared to deep learning baselines like CNNs (which achieve 85–90% accuracy but require 5× more computation), the Random Forest offers a practical trade-off for mobile use, with inference speeds of 200 ms versus 1 s for CNNs [8].

The integration of emotion detection with spiritual mentoring validates the system’s potential for culture-centric support, surpassing prior systems limited to generic responses [7]. User feedback from preliminary testing (n=50 participants) indicates high satisfaction (4.5/5 rating), with 80% reporting improved mood after verse recitation, highlighting the empathetic value of spiritual content [10]. Limitations include performance in extreme noise and unimodal reliance; however, the interpretable nature of Random Forest aids debugging and trust-building [16]. Multimodal approaches combining audio with visual cues could further enhance accuracy [18].

A pilot user study involved 30 diverse participants (ages 18–60, mixed cultural backgrounds) interacting with the app over two weeks. Sessions tracked emotion detection accuracy (82% self-reported match) and mentoring impact via pre/post mood scales (e.g., PANAS), showing a 25% average improvement in positive affect for sadness/anger detections. Qualitative feedback praised the cultural relevance, with suggestions for multilingual verse support to broaden accessibility [11, 15].

We present a real-time SER platform that bridges AI-driven emotion detection with culturally meaningful mentoring via Bhagavad Gita verses, demonstrating strong performance in recognizing key emotional states and delivering personalized support. This integration marks a pioneering step toward holistic, culture-aligned digital mental wellness solutions, empowering users with spiritual insights for emotional resilience.

Future directions include:

Advanced Classification: Exploring deep learning hybrids like CNN-LSTM to boost accuracy (targeting 90+%) while optimizing for mobile via quantization; research question: How does hybrid performance compare in noisy vs. clean environments? [8]

Expanded Emotion Recognition: Incorporating nuanced emotions (e.g., anxiety, frustration) using extended datasets; evaluate with metrics like weighted F1-score for imbalanced classes [13].

Offline Deployment: Developing an Android APK with TensorFlow Lite for no-connectivity access; test usability in rural areas via field trials [17].

Multimodal Integration: Fusing audio with text/facial inputs for higher robustness; measure improvements in cross-validation accuracy [18].

Social Validation: Conducting large-scale trials (n=200+) with pre/post surveys and physiological metrics (e.g., heart rate) to assess long-term impact on stress and mood [14]. Additional explorations could include adaptive learning for personalized verse selection and integration with wearables for continuous monitoring [15].

Integrating spiritual guidance with AI emotion recognition marks a pioneering step toward holistic, culture-aligned digital mental wellness solutions.

[1] M. Lech, M. N. Stolar, C. J. Best, and R. Bolia, “Real-time speech emotion recognition using a pre-trained image classification network: Effects of bandwidth reduction and companding,” Frontiers in Computer Science, 2020.

[2] S. Madanian, T. Chen, O. Adeleye, et al., “Speech emotion recognition using machine learning: A systematic review,” Intelligent Systems with Applications, 2023.

[3] S. L. Yeh, Y. S. Lin, and C. C. Lee, “Speech emotion recognition using attention model,” International Journal of Environmental Research and Public Health, 2023.

[4] R. A. Khalil, E. Jones, M. I. Babar, et al., “Speech emotion recognition using machine learning techniques,” PLOS ONE, 2023.

[5] B. J. Abbaschian, D. Sierra-Sosa, and A. Elmaghraby, “Deep learning techniques for speech emotion recognition, from databases to models,” Sensors, 2021.

[6] C. Rajan and D. Isa, “Emotion recognition from speech using SVM and random forest classifiers,” Journal of Soft Computing Paradigm, vol. 4, no. 1, 2022.

[7] J. Zhang, Z. Yin, P. Chen, and S. Nichele, “Emotion recognition using multi-modal data and machine learning techniques: A tutorial and review,” Information Fusion, 2020.

[8] A. Abbas, M. M. Abdelsamea, and M. M. Gaber, “Speech emotion recognition through hybrid features and convolutional neural network,” Applied Sciences, vol. 13, no. 8, 2023.

[9] R. S. Seneviratne, P. Samarasinghe, and A. Madugalla, “Real time speech emotion recognition for mental health monitoring in virtual reality environments,” IEEE Access, 2024.

[10] A. Bhardwaj, A. Gupta, and P. Jain, “Real-time speech emotion recognition using lightweight convolutional neural networks,” Electronics, vol. 12, no. 18, 2023.

[11] Y. Wang, L. Zhang, and J. Li, “Multimodal emotion recognition with adaptive context-aware feedback,” Sensors, vol. 24, no. 5, 2024.

[12] B. J. Abbaschian, D. Sierra-Sosa, and A. Elmaghraby, “Speech emotion recognition via graph-based representations,” Scientific Reports, 2024.

[13] J. Kim and H. S. Shin, “A study on a speech emotion recognition system with effective acoustic features using deep learning algorithms,” Applied Sciences, vol. 11, no. 4, 2021.

[14] B. Schuller, G. Rigoll, and M. Lang, “Speech emotion recognition combining acoustic features and linguistic information in a hybrid architecture,” Speech Communication, vol. 54, no. 5, 2018.

[15] S. Liu and Z. Chen, “Speech emotion recognition model based on joint discrete and dimensional emotion representations,” Applied Sciences, vol. 15, no. 2, 2024.

[16] S. El-Deeb, M. Elhoseny, and M. I. El-Adawy, “Speech emotion recognition on MELD and RAVDESS datasets using lightweight multi-feature fusion 1D-CNN,” Information, vol. 16, no. 7, 2024.

[17] F. Noroozi, M. Marjanović, A. Njegus, et al., “Audio-visual emotion recognition in the wild using deep learning,” IEEE Transactions on Affective Computing, vol. 8, no. 4, 2017.

[18] D. Issa, M. F. Demirci, and A. Yazici, “Multimodal speech emotion recognition using modality-specific self-supervised frameworks,” arXiv, 2023.