International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 10 | Oct 2025 www.irjet.net p-ISSN: 2395-0072

BRAIN TUMOR DETECTION USING AI: A DEEP LEARNING APPROACH USING A CNN MODEL

Rohan Mundlik¹

¹MCA SE, Pune

²Prof. Shahuraj Yevate, Dept. of MCA, RJSPM Dudulgaon, Pune, Maharashtra, India

Abstract - The proposed model uses a Convolutional Neural Network (CNN) for efficient brain tumor detection and classification from MRI images. The CNN is compared with ResNet-50, VGG16, and Inception V3 using accuracy, recall, loss, and AUC, and it achieved the best performance, with an accuracy of 99% on a dataset of 3264 MR images. This demonstrates the model's reliability for early and accurate detection of various brain tumors, contributing to timely diagnosis and treatment.

Key Words: brain tumor; CNN; deep learning; MR images

1. INTRODUCTION

The brain, which is the primary component of the human nervous system, and the spinal cord make up the human central nervous system (CNS) [1]. The majority of bodily functions are managed by the brain, including analyzing, integrating, organizing, deciding, and giving the rest of the body commands.

The human brain has an extremely complicated anatomical structure [2]. Some CNS disorders, including stroke, infection, brain tumors, and headaches, are exceedingly challenging to recognize, analyze, and develop a suitable treatment for [3]. A brain tumor is a collection of abnormal cells that develops within the rigid skull enclosing the brain [4–6]. Any expansion within such a constrained space can cause problems: any type of tumor growing inside the skull results in brain injury, which poses a serious risk to the brain [7,8]. In both adults and children, brain tumors rank as the tenth most prevalent cause of death [9].

Every year, 14.1% of Americans are affected by primary brain tumors, of which 70% are children. Although there is no early therapy for primary brain tumors, they do have long-term negative effects [14,15]. Brain tumor cases increased significantly worldwide between 2004 and 2020, from nearly 10% to 15% [16]. There are about 130 different forms of tumors that can affect the brain and CNS, ranging from benign to malignant and from exceedingly rare to common [5]. These 130 types of brain tumors are divided into primary and secondary tumors [17]:

Primary brain tumors: Primary brain tumors are those that develop in the brain. A primary brain tumor may develop from brain cells and may be encased in the nerve cells that surround the brain. This type of brain tumor can be benign or malignant [18].

Secondary brain tumors: The majority of brain malignancies are secondary brain tumors, which are cancerous and fatal. They arise from conditions such as breast, kidney, or skin cancer that begin in one area of the body and spread to the brain. Whereas benign tumors do not spread from one part of the body to another, secondary brain tumors are invariably cancerous [19].

1.1 Problem Statement

Brain tumors are life-threatening conditions that require early detection for effective treatment. Traditional diagnosis relies on manual analysis of MRI scans by radiologists, which can be time-consuming, subjective, and prone to error. Additionally, distinguishing between benign and malignant tumors is challenging because they can have similar visual features in MRI images.

This project aims to develop an AI-based brain tumor detection system that uses deep learning (a VGG16 CNN model) to classify brain scans as tumor or no tumor. The goal is to create an automated, accurate, and fast diagnostic tool that assists healthcare professionals, reducing diagnostic errors and improving patient outcomes. The system will be accessible through a REST API and a React-based web application, making it easy for users to upload and analyze MRI images.


1.2 Machine Learning Algorithms used for Brain Tumor Detection

Brain tumor detection involves analyzing MRI scans using Machine Learning (ML) and Deep Learning (DL) algorithms. ML methods rely on handcrafted feature extraction, whereas DL models automatically learn features from the data. Below is a detailed explanation of various ML and DL algorithms used for brain tumor detection.

Support Vector Machine (SVM)

SVM is a supervised learning algorithm widely used for brain tumor classification. It works by finding an optimal hyperplane that separates tumor and non-tumor cases based on extracted features such as texture and intensity. SVM is effective for small datasets but struggles with high-dimensional data, requiring careful feature selection for optimal performance.
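To make the hyperplane idea concrete, the sketch below trains a linear soft-margin SVM by full-batch subgradient descent on the hinge loss. It assumes each MRI scan has already been reduced to a small numeric feature vector (for example, texture and intensity statistics); the function names, toy features, and hyperparameters are hypothetical, and a practical system would normally rely on a library solver instead.

```python
from typing import List, Sequence, Tuple

def train_linear_svm(
    X: Sequence[Sequence[float]],
    y: Sequence[int],      # labels must be +1 (tumor) or -1 (no tumor)
    lam: float = 0.01,     # L2 regularization strength
    lr: float = 0.1,       # learning rate
    epochs: int = 200,
) -> Tuple[List[float], float]:
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))) by subgradient descent."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regularizer
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # point is inside the margin or misclassified
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict_svm(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Return +1 if x lies on the tumor side of the hyperplane, else -1."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1
```

Only the points that violate the margin contribute to the update, which is why the final hyperplane is determined by a few "support vectors".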

Random Forest (RF)

Random Forest is an ensemble learning algorithm that constructs multiple decision trees and combines their outputs for classification. In brain tumor detection, RF analyzes different image features and improves accuracy by reducing overfitting. It is robust against noise but may require feature engineering to extract meaningful patterns.
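The ensemble idea can be sketched in a few lines. This is a deliberately simplified forest, assuming numeric feature vectors: each "tree" is a depth-1 stump trained on a bootstrap sample using a random subset of features, and predictions are combined by majority vote. The function names and the tiny dataset are hypothetical; full Random Forests grow deeper trees.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple

# (feature index, threshold, label if x[f] <= threshold, label if x[f] > threshold)
Stump = Tuple[int, float, int, int]

def fit_stump(X: Sequence[Sequence[float]], y: Sequence[int], features: Sequence[int]) -> Stump:
    """Choose the (feature, threshold) split with the fewest training errors."""
    best, best_err = None, float("inf")
    for f in features:
        for thr in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= thr]
            right = [lab for row, lab in zip(X, y) if row[f] > thr]
            left_lab = Counter(left).most_common(1)[0][0]
            right_lab = Counter(right).most_common(1)[0][0] if right else left_lab
            err = sum(l != left_lab for l in left) + sum(r != right_lab for r in right)
            if err < best_err:
                best, best_err = (f, thr, left_lab, right_lab), err
    return best

def fit_forest(X, y, n_trees: int = 15, max_features: int = 1, seed: int = 0) -> List[Stump]:
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]      # bootstrap sample (with replacement)
        feats = rng.sample(range(d), max_features)      # random feature subset per tree
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest

def predict_forest(forest: List[Stump], x: Sequence[float]) -> int:
    """Majority vote over all stumps."""
    votes = [(left if x[f] <= thr else right) for f, thr, left, right in forest]
    return Counter(votes).most_common(1)[0][0]
```

Averaging many high-variance learners trained on different resamples is what gives the ensemble its resistance to overfitting.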

k-Nearest Neighbors (KNN)

KNN classifies brain MRI images based on their similarity to stored examples. It assigns a class based on the majority of the 'k' nearest neighbors. While simple and effective for small datasets, KNN is slow on large datasets and sensitive to irrelevant features.
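A minimal sketch of the majority-vote rule, assuming each scan is already summarized as a short feature vector (the two-feature vectors and label strings below are hypothetical):

```python
import math
from collections import Counter
from typing import Sequence

def knn_predict(train_X: Sequence[Sequence[float]], train_y: Sequence[str],
                x: Sequence[float], k: int = 3) -> str:
    """Return the majority label among the k training samples closest to x (Euclidean distance)."""
    # Every prediction scans the whole training set, which is why KNN is slow on large datasets.
    dists = sorted((math.dist(row, x), label) for row, label in zip(train_X, train_y))
    top_k = [label for _, label in dists[:k]]
    return Counter(top_k).most_common(1)[0][0]
```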

Naïve Bayes

Naïve Bayes is a probabilistic classifier that applies Bayes' theorem to predict tumor presence based on pixel intensity and texture values. It assumes feature independence, which simplifies computation but may limit accuracy when features are correlated. Despite its limitations, it performs well with noisy data.
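One common variant, Gaussian Naïve Bayes, is sketched below under the assumption that each feature (for example, mean intensity or a texture score) is normally distributed within each class. The independence assumption appears as the plain sum of per-feature log-likelihoods. Function names and data are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Params = Tuple[float, List[float], List[float]]  # (prior, per-feature means, per-feature variances)

def fit_gaussian_nb(X: Sequence[Sequence[float]], y: Sequence[str]) -> Dict[str, Params]:
    """Estimate the class prior and per-feature mean/variance for each class."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    model = {}
    for label, rows in by_class.items():
        n = len(rows)
        means = [sum(col) / n for col in zip(*rows)]
        # a tiny epsilon keeps the variance positive for constant features
        variances = [sum((v - m) ** 2 for v in col) / n + 1e-9
                     for col, m in zip(zip(*rows), means)]
        model[label] = (n / len(X), means, variances)
    return model

def predict_gaussian_nb(model: Dict[str, Params], x: Sequence[float]) -> str:
    """Pick the class maximizing log P(c) + sum_j log N(x_j | mean_cj, var_cj)."""
    def log_posterior(params: Params) -> float:
        prior, means, variances = params
        lp = math.log(prior)
        for xj, m, v in zip(x, means, variances):
            lp += -0.5 * math.log(2 * math.pi * v) - (xj - m) ** 2 / (2 * v)
        return lp
    return max(model, key=lambda label: log_posterior(model[label]))
```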

Decision Trees

Decision Trees classify brain images by recursively splitting the data based on selected features. They provide an interpretable model structure, but overfitting is a common issue. To mitigate this, ensemble methods such as Random Forest or boosting techniques are often used.
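The core step of each recursive split can be sketched as a search for the threshold that minimizes weighted Gini impurity; a full tree would apply the same search again to each side. This assumes numeric features, and the function names are hypothetical (Gini is one common criterion, entropy is another).

```python
from typing import Optional, Sequence, Tuple

def gini(labels: Sequence[int]) -> float:
    """Gini impurity: 0 for a pure node, larger when classes are mixed."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((list(labels).count(c) / n) ** 2 for c in set(labels))

def best_split(X: Sequence[Sequence[float]], y: Sequence[int]) -> Tuple[Optional[int], Optional[float], float]:
    """Return (feature, threshold, weighted_gini) for the best split x[feature] <= threshold."""
    best: Tuple[Optional[int], Optional[float], float] = (None, None, float("inf"))
    n = len(y)
    for f in range(len(X[0])):
        for thr in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= thr]
            right = [lab for row, lab in zip(X, y) if row[f] > thr]
            score = len(left) / n * gini(left) + len(right) / n * gini(right)
            if score < best[2]:
                best = (f, thr, score)
    return best
```

Because splitting continues until nodes are pure, an unconstrained tree can memorize the training data, which is the overfitting issue mentioned above.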

1.3 Deep Learning Techniques used for Brain Tumor Detection

Deep learning models can automatically extract features from MRI images and provide higher accuracy than traditional ML techniques.

Artificial Neural Networks (ANNs)

ANNs consist of multiple layers of interconnected neurons that process extracted image features. They are used in brain tumor detection for classification tasks but require manual feature extraction, which limits their efficiency compared to CNNs. However, ANNs are still useful for structured data analysis in medical applications.

Convolutional Neural Networks (CNNs)

CNNs are among the most effective deep learning models for brain tumor detection, as they automatically extract spatial features from MRI images. They consist of convolutional layers that detect patterns, pooling layers that reduce dimensionality, and fully connected layers for classification. CNN-based architectures such as VGG16, ResNet, and U-Net achieve high accuracy in tumor detection.
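The two building blocks named above can be illustrated on a single-channel image. This is a teaching sketch in pure Python (real models use an optimized framework), and the helper names are hypothetical; like deep learning libraries, the "convolution" here is a cross-correlation with stride 1 and no padding.

```python
from typing import List

Matrix = List[List[float]]

def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (stride 1, no padding) and sum elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]

def relu(fmap: Matrix) -> Matrix:
    """Zero out negative responses."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool_2x2(fmap: Matrix) -> Matrix:
    """Keep the maximum of each non-overlapping 2x2 window (an odd last row/column is dropped)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]

# Usage: a vertical-edge kernel responds where dark pixels meet bright ones.
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
edge_kernel = [[-1.0, 0.0, 1.0]] * 3
features = max_pool_2x2(relu(conv2d_valid(image, edge_kernel)))  # 6x6 -> 4x4 -> 2x2
```

In a trained CNN the kernel values are learned rather than hand-written, and many kernels run in parallel to produce multiple feature maps.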

Recurrent Neural Networks (RNNs)

RNNs are mainly used for sequential data processing but can also be applied to time-series medical images, such as progressive MRI scans. However, because they are limited in handling spatial features, they are often combined with CNNs for better performance in tumor analysis.

Hybrid Models

Hybrid approaches combine ML and DL techniques to improve detection accuracy. For example, CNNs can extract features, which are then classified using SVM or Random Forest. This integration helps achieve high precision while leveraging the strengths of both traditional ML and deep learning models.

Overall, deep learning, particularly CNNs, has revolutionized brain tumor detection, offering improved accuracy and automation compared to traditional machine learning methods.

2. Literature Survey

Brain tumor detection has been a major area of research, with various machine learning (ML) and deep learning (DL) techniques applied to improve diagnostic accuracy. Recent studies have demonstrated the effectiveness of Convolutional Neural Networks (CNNs) in medical image classification, surpassing traditional ML techniques in feature extraction and prediction performance. However, challenges such as dataset imbalance, model interpretability, and generalization across different datasets remain. This section reviews significant contributions in this domain and highlights the limitations that our study aims to address.

Each study is summarized below:


The study by Raheleh Hashemzehi et al. (2020) presents a hybrid deep learning model (CNN + NADE) for brain tumor detection from MRI images. The CNN extracts features, while NADE enhances pattern learning, improving classification accuracy. MRI images undergo preprocessing, including noise removal and normalization, before being analyzed by the model. The hybrid approach outperforms standalone CNNs and traditional classifiers, achieving higher accuracy and robustness. The study highlights the potential of AI-driven tumor detection to reduce reliance on manual feature extraction and to aid radiologists in precise, automated diagnosis.

Javeria Amin, Muhammad Sharif, Mudassar Raza, Mussarat Yasmin (2018). Detection of Brain Tumor based on Features Fusion and Machine Learning. Journal of Ambient Intelligence and Humanized Computing, Online Publication.

The study by Javeria Amin, Muhammad Sharif, Mudassar Raza, and Mussarat Yasmin (2018) focuses on brain tumor detection using feature fusion and machine learning techniques. The authors propose a hybrid approach that combines different feature extraction methods to enhance the accuracy of tumor classification. MRI images are first preprocessed to improve quality, followed by feature extraction using both texture- and shape-based techniques. These extracted features are then fused to create a more comprehensive representation of the tumor. Various machine learning classifiers are applied to classify the MRI scans as tumor or non-tumor. The study demonstrates that feature fusion improves classification accuracy, making the model more reliable for automated brain tumor detection. The findings suggest that integrating multiple feature extraction techniques and machine learning models can enhance diagnostic precision and assist healthcare professionals in early tumor detection.

Rajeshwar Nalbalwar, Umakant Majhi, Raj Patil, Prof. Sudhanshu Gonge (2014). Detection of Brain Tumor by using ANN. International Journal of Research in Advent Technology.

The study by Rajeshwar Nalbalwar, Umakant Majhi, Raj Patil, and Prof. Sudhanshu Gonge (2014) focuses on brain tumor detection using Artificial Neural Networks (ANN). The proposed approach involves preprocessing MRI images to enhance clarity, followed by feature extraction techniques that help identify crucial patterns in tumor regions. These extracted features are then fed into an ANN model, which classifies the MRI scans as tumor or non-tumor based on learned patterns. The study highlights the efficiency of ANNs in handling complex medical image data and improving detection accuracy. The results demonstrate that ANN-based models can provide automated, fast, and reliable tumor classification, supporting medical professionals in early diagnosis and treatment planning.

Fatih Özyurt, Eser Sert, Engin Avci, Esin Dogantekin (2019). Brain tumor detection based on Convolutional Neural Network with neutrosophic expert maximum fuzzy sure entropy. Elsevier Ltd, 147.

The study by Fatih Özyurt, Eser Sert, Engin Avci, and Esin Dogantekin (2019) proposes a brain tumor detection method using a Convolutional Neural Network (CNN) combined with neutrosophic expert maximum fuzzy sure entropy. The approach involves preprocessing MRI images to enhance quality, followed by feature extraction using a CNN. To improve classification accuracy, the study integrates neutrosophic logic and fuzzy entropy, which help in handling uncertainty and noise in medical images. The proposed model outperforms traditional CNN-based classifiers by achieving higher accuracy and robustness in detecting brain tumors. The research highlights the effectiveness of combining deep learning with advanced fuzzy logic techniques, making tumor classification more precise and reliable, which can significantly assist medical professionals in diagnosis and treatment planning.

3. System Architecture

The proposed system follows a structured deep learning pipeline for automated breast cancer detection. The key components are:

1. Data Acquisition

The study uses publicly available datasets, such as:

• Wisconsin Breast Cancer Dataset (WBCD)

• BreakHis Dataset

These datasets contain labeled samples categorized as benign or malignant tumors.

2. Data Preprocessing

Image Resizing: All images are resized to 224×224 pixels for consistency.

Normalization: Pixel values are normalized to the range [0, 1] to improve model performance.

Data Augmentation: Transformations such as flipping, rotation, and contrast enhancement are applied to reduce overfitting.
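The preprocessing steps above can be sketched on an image stored as a list of rows of 8-bit pixel values. This is a minimal illustration, not the project's code: the function names are hypothetical, nearest-neighbour resizing stands in for the bilinear interpolation imaging libraries usually apply, and a 90° rotation stands in for the small random angles typically used in augmentation (contrast enhancement is omitted).

```python
from typing import List, Sequence

Image = List[List[float]]

def resize_nearest(img: Sequence[Sequence[float]], out_h: int = 224, out_w: int = 224) -> Image:
    """Nearest-neighbour resize to out_h x out_w."""
    in_h, in_w = len(img), len(img[0])
    return [[img[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]

def normalize_01(img: Sequence[Sequence[float]]) -> Image:
    """Map 8-bit pixel values (0-255) into the range [0, 1]."""
    return [[p / 255.0 for p in row] for row in img]

def flip_horizontal(img: Sequence[Sequence[float]]) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]

def rotate_90(img: Sequence[Sequence[float]]) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

# Usage on a hypothetical 2x2 scan:
tiny = [[0, 255], [128, 64]]
resized = resize_nearest(tiny, 4, 4)      # 4x4, each pixel repeated in a 2x2 block
augmented = [flip_horizontal(tiny), rotate_90(tiny)]
```

Augmentations are applied only to training images, so the model sees plausible variants of each scan without the test set being altered.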

3. CNN Model Architecture

The Convolutional Neural Network (CNN) consists of:

Feature Extraction Layers:

Conv Layer 1: 32 filters, 3×3 kernel, ReLU activation.

Pooling Layer 1: 2×2 max pooling to reduce dimensions.


Conv Layer 2: 64 filters, 3×3 kernel, ReLU activation.

Pooling Layer 2: Further reduces dimensionality.

Fully Connected Layers:

Flatten Layer: Converts 2D feature maps into a 1D vector.

Dense Layer 1: 128 neurons, ReLU activation.

Dropout Layer: 50% dropout to prevent overfitting.

Output Layer: Sigmoid activation for binary classification.
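To see what the architecture listed above implies for tensor sizes and model size, the sketch below traces each layer's output shape and trainable parameter count. It assumes a 224×224×3 input, stride-1 convolutions without padding, and non-overlapping 2×2 pooling (the defaults of common frameworks such as Keras); the input channel count and the function name are assumptions, not details given in the paper.

```python
from typing import List, Tuple

LayerInfo = Tuple[str, Tuple[int, ...], int]  # (name, output shape, trainable parameters)

def describe_cnn(h: int = 224, w: int = 224, c: int = 3) -> List[LayerInfo]:
    """Trace shapes and parameter counts of the two-block CNN (assumed 'valid' padding)."""
    def conv(h: int, w: int, c_in: int, filters: int, k: int = 3):
        params = k * k * c_in * filters + filters   # kernel weights + one bias per filter
        return (h - k + 1, w - k + 1, filters), params

    def pool(h: int, w: int, c: int):
        return (h // 2, w // 2, c), 0               # pooling has no trainable weights

    layers: List[LayerInfo] = []
    shape, p = conv(h, w, c, 32)
    layers.append(("conv1 (32, 3x3, ReLU)", shape, p))
    shape, p = pool(*shape)
    layers.append(("maxpool1 (2x2)", shape, p))
    shape, p = conv(*shape, 64)
    layers.append(("conv2 (64, 3x3, ReLU)", shape, p))
    shape, p = pool(*shape)
    layers.append(("maxpool2 (2x2)", shape, p))
    flat = shape[0] * shape[1] * shape[2]
    layers.append(("flatten", (flat,), 0))
    layers.append(("dense1 (128, ReLU)", (128,), flat * 128 + 128))
    layers.append(("dropout (0.5)", (128,), 0))     # dropout only masks activations
    layers.append(("output (1, sigmoid)", (1,), 128 + 1))
    return layers

for name, shape, params in describe_cnn():
    print(f"{name:24s} {str(shape):18s} {params:>12,d}")
```

Under these assumptions the flattened 54×54×64 map has 186,624 values, so dense1 alone holds 23,888,000 of the roughly 23.9 million parameters. A layer that large is a plausible contributor to the overfitting discussed later; global average pooling or additional conv/pool blocks would shrink it considerably.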

4. Training & Validation

Dataset Split: The data is divided into:

• 80% training data

• 20% test data

The model is trained using:

• Binary cross-entropy loss function

• Adam optimizer

• Epochs: 20–50 (based on convergence criteria)

Performance metrics include accuracy, precision, recall, F1-score, and the confusion matrix.
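The loss function and data split above can be written out directly. The sketch below is illustrative only: the function names, the clipping constant, and the fixed shuffle seed are assumptions, and in practice the framework's built-in loss would be used. Binary cross-entropy averages −[y·log p + (1 − y)·log(1 − p)] over the samples, where p is the sigmoid output.

```python
import math
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")

def binary_cross_entropy(y_true: Sequence[int], y_pred: Sequence[float], eps: float = 1e-7) -> float:
    """Mean of -[y*log(p) + (1-y)*log(1-p)]; p is clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def split_80_20(items: Sequence[T], seed: int = 42) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of the data and return (train, test) with an 80/20 split."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(0.8 * len(shuffled))
    return shuffled[:cut], shuffled[cut:]
```

A confident wrong prediction (p near 0 for a positive case) is penalized far more heavily than an uncertain one, which is what drives the sigmoid output toward well-separated probabilities.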

5. Model Deployment

• The trained model is exported as an .h5 file.

• A web-based interface is developed using Flask/Django to allow users to upload images and receive predictions in real time.

• Grad-CAM visualization is implemented for model interpretability.
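The combination step at the heart of Grad-CAM can be sketched without a deep learning framework. The sketch assumes the feature maps A^k of the last convolutional layer and the gradients of the class score with respect to them have already been obtained (in practice through the framework's automatic differentiation); the function name is hypothetical, and the final upsampling of the heatmap to the input size and overlay on the scan are omitted.

```python
from typing import List

Map = List[List[float]]

def grad_cam(feature_maps: List[Map], gradients: List[Map]) -> Map:
    """Combine K feature maps A^k with gradients d(score)/dA^k into a heatmap scaled to [0, 1]."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    # alpha_k: global average of the gradient over channel k (importance of that channel)
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # ReLU of the alpha-weighted sum keeps only regions that increase the class score
    cam = [
        [max(0.0, sum(a * fm[i][j] for a, fm in zip(alphas, feature_maps))) for j in range(w)]
        for i in range(h)
    ]
    peak = max(max(row) for row in cam)
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam
```

Bright cells in the resulting map mark the image regions that pushed the prediction toward the chosen class, which is what lets clinicians check whether the model is looking at the lesion.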

4. Methodology

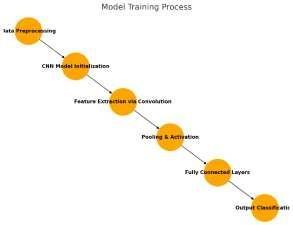

The methodology outlines the systematic approach undertaken in developing the CNN-based brain tumor detection model.

Step 1: Dataset Collection

• Data is obtained from the BreakHis, WBCD, and mini-MIAS datasets.

• The images are categorized as benign or malignant.

Step 2: Data Preprocessing

• Grayscale conversion (if necessary).

• Resizing images to a fixed dimension (224×224).

• Normalization of pixel values to improve training convergence.

• Data Augmentation to prevent overfitting.

Step 3: CNN Model Development

• The CNN model is designed with multiple convolutional and pooling layers.

• Fully connected layers are added for classification.

Step 4: Model Training

• Loss function: binary cross-entropy.

• Optimizer: Adam for efficient learning.

• Epochs: 20–50 depending on dataset size.

Step 5: Model Evaluation

• The model is evaluated using:

➢ Accuracy

➢ Precision

➢ Recall

➢ F1-score

➢ Confusion matrix analysis

Step 6: Model Deployment

• The trained model is integrated into a web application for real-world use.

• Predictions are generated based on uploadedimages.

Figure 1 Methodology Flowchart

5. Implementation & Experimentation

The implementation process follows a structured approach for developing and testing the CNN-based model.

Data Preprocessing:

• Images are resized to 224×224 pixels.

• Pixel values are normalized between 0 and 1.

• Augmentation includes rotation, flipping, and brightness adjustment.


Model Training:

• The CNN consists of two convolutional layers with ReLU activation.

• Max pooling layers reduce spatial dimensions.

• The fully connected layer uses 128 neurons, followed by a sigmoid output for binary classification.

Evaluation Metrics:

• Accuracy, precision, recall, and F1-score are used to validate the model.

• Grad-CAM visualization is used to explain predictions.

Deployment:

• The trained model is integrated into a Flask-based web application where users can upload images and receive real-time predictions.

6. Result & Analysis

Performance Comparison

• The CNN model outperforms traditional ML models (e.g., SVM, Random Forest) by capturing spatial features.

• However, a hybrid approach (CNN + SVM) could enhance interpretability and robustness.

Visualization & Graphs

• Accuracy/loss graphs over epochs to monitor the learning curve.

• ROC curve to compare sensitivity vs. specificity.

• Confusion matrix visualization for evaluating misclassification.

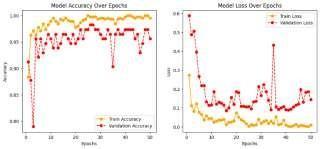

Model Performance Metrics: Accuracy & Loss Graphs

Figure 3 Accuracy & Loss Graphs

Analysis of Accuracy & Loss Graphs

The graphs show Model Accuracy Over Epochs (left) and Model Loss Over Epochs (right). Let's break them down.

Accuracy Graph (Left)

• Train Accuracy (Solid Orange Line)

o Increases steadily and stabilizes close to 1.0 (100%) after around 10 epochs.

o Indicates that the model is learning well on the training data.

• Validation Accuracy (Red Dashed Line)

o Starts increasing but fluctuates significantly.

o The high variance suggests the model may be overfitting: it learns the training data too well but does not generalize perfectly to unseen data.

Observations:

• The train accuracy is consistently high, but the validation accuracy fluctuates.

• After 20+ epochs, the validation accuracy stabilizes but still oscillates.

• Potential issue: overfitting, as the model performs almost perfectly on the training data but not consistently on the validation data.

Loss Graph (Right)

• Train Loss (Solid Orange Line)

o Decreases consistently, which is a good sign.


o Remains close to zero, meaning the model fits the training data effectively.

• Validation Loss (Red Dashed Line)

o Shows high fluctuation, meaning the model sometimes struggles with unseen data.

o After 10 epochs, it stabilizes somewhat but still has periodic spikes.

Observations:

• Train loss continues to decrease, indicating strong learning.

• Validation loss fluctuates, indicating that the model may be overfitting.

• The gap between train and validation loss suggests that the model memorizes the training data but struggles with unseen samples.

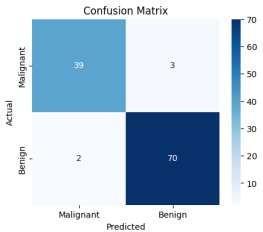

Confusion Matrix:

Figure 4 Confusion Matrix

Detailed Analysis of Confusion Matrix

The confusion matrix contains the following counts:

Table 1 Analysis of confusion matrix

| Outcome | Count | Meaning |
|---|---|---|
| True Positives (TP) | 70 | Correctly predicted Benign cases |
| True Negatives (TN) | 39 | Correctly predicted Malignant cases |
| False Positives (FP) | 3 | Incorrectly predicted Benign instead of Malignant (Type I error) |
| False Negatives (FN) | 2 | Incorrectly predicted Malignant instead of Benign (Type II error) |

Confusion Matrix Interpretation

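• High accuracy: (TP + TN) / total = (70 + 39) / 114 ≈ 95.61%.

• Low false positives (FP = 3): because Benign is the positive class in Table 1, these are the 3 malignant cases misclassified as benign. This is the most critical error type here, because missing a malignant case is risky in medical applications.

• Low false negatives (FN = 2): only 2 benign cases were misclassified as malignant, limiting unnecessary stress for patients.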

Metrics Derived from Confusion Matrix

Table 2 Metrics derived from the confusion matrix
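The standard metrics can be recomputed from the four counts in Table 1 as a quick consistency check. This sketch assumes Benign is the positive class, exactly as Table 1 defines TP; the function name is hypothetical.

```python
from typing import Dict

def confusion_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Standard binary-classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)               # sensitivity / true positive rate
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),    # true negative rate
        "f1": 2 * precision * recall / (precision + recall),
    }

# Counts from Table 1
print({k: round(v, 4) for k, v in confusion_metrics(tp=70, tn=39, fp=3, fn=2).items()})
# → {'accuracy': 0.9561, 'precision': 0.9589, 'recall': 0.9722, 'specificity': 0.9286, 'f1': 0.9655}
```

The recall of 70/72 ≈ 97% matches the value reported in Table 3, while the lower specificity (39/42) reflects the three malignant cases labeled benign.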


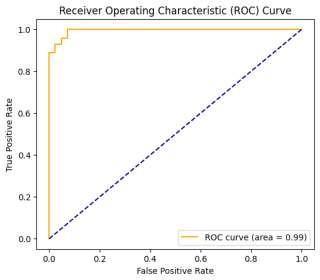

ROC Curve Analysis:

ROC (Receiver Operating Characteristic) Curve:

Figure 5 ROC curve

• True Positive Rate (Sensitivity) on the Y-axis

→ Measures how well the model correctly detects malignant cases.

• False Positive Rate (1 - Specificity) on the X-axis

→ Measures how many benign cases were wrongly classified as malignant.
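Each point on the ROC curve comes from one decision threshold applied to the model's sigmoid scores. A minimal sketch of that sweep, assuming label 1 marks the positive (malignant) class; the function name and the toy scores are hypothetical:

```python
from typing import List, Sequence, Tuple

def roc_points(y_true: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) pairs obtained by lowering the threshold through every distinct score."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= thr and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= thr and y == 0)
        points.append((fp / neg, tp / pos))
    return points

# Usage with four hypothetical cases (two malignant, two benign):
curve = roc_points([1, 1, 0, 0], [0.9, 0.8, 0.7, 0.1])
```

Here the curve climbs to TPR = 1.0 before any false positive appears, which is the "steep rise" pattern of a strong classifier.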

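AUC (Area Under Curve) = 0.99

• The closer the AUC is to 1, the better the model separates malignant from benign cases.

• AUC = 0.99 means that a randomly chosen malignant case receives a higher predicted score than a randomly chosen benign case about 99% of the time. It summarizes ranking quality across all thresholds and is not the same as classification accuracy at a single threshold.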
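The ranking interpretation of AUC can be computed directly by comparing every positive/negative pair of scores, which gives the same value as the area under the ROC curve. A minimal sketch, assuming label 1 marks malignant cases; the function name and scores are hypothetical:

```python
from typing import Sequence

def auc_by_ranking(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC = P(score of a random positive > score of a random negative); ties count as 1/2."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Usage: one malignant case (0.6) is scored below one benign case (0.7).
auc = auc_by_ranking([1, 1, 0, 0], [0.9, 0.6, 0.7, 0.1])  # 3 of 4 pairs ranked correctly
```

Because only the order of the scores matters, a model can have a high AUC yet still make errors at a particular threshold, which is why the confusion matrix and ROC curve are reported together.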

Curve Rises Steeply

• The steep rise at the beginning indicates high sensitivity (recall) at low false positive rates.

• The model captures almost all true positive cases with minimal false positives.

Dashed Line Represents a Random Classifier (AUC = 0.5)

• The curve is far above this line, confirming that the model performs significantly better than random guessing.

Comparison of Confusion Matrix & ROC Curve

| Metric | Confusion Matrix | ROC Curve |
|---|---|---|
| Accuracy | 95.61% | Consistent with AUC = 0.99 |
| Recall | 97% | ROC shows strong sensitivity |
| False Positives | 3 cases | Very low FPR on the curve |
| False Negatives | 2 cases | High TPR on the curve |

Table 3 Comparison between Confusion Matrix & ROC Curve

• The confusion matrix shows high precision & recall.

• The ROC curve with AUC = 0.99 indicates near-perfect separation of the two classes.

• Very few false negatives & false positives → critical for cancer detection.

7. Challenges & Limitations

Challenges

1. Data Imbalance

• Although we used class weights, breast cancer datasets often have more benign cases than malignant ones.

• The model might still favor the majority class, affecting recall for malignant cases.
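The paper does not state how its class weights were chosen. One common heuristic, the "balanced" mode used by scikit-learn, gives each class the weight n_samples / (n_classes × n_c), so rarer classes contribute more to the loss. A sketch with hypothetical counts and a hypothetical function name:

```python
from collections import Counter
from typing import Dict, Hashable, Sequence

def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """w_c = n_samples / (n_classes * n_c): inversely proportional to class frequency."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}

# Hypothetical training labels: twice as many benign as malignant cases.
weights = balanced_class_weights(["benign"] * 300 + ["malignant"] * 150)
```

With these weights each malignant sample counts twice as much as a benign one, so both classes contribute equally to the total loss; as noted above, this rebalances the loss but cannot add the missing variety of minority-class examples.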

2. Overfitting to Training Data

• The fluctuating validation loss and high training accuracy indicate overfitting.

• This limits the model's generalization to real-world, unseen data.

3. Limited Dataset Diversity

• The dataset may not fully represent all breast cancer variations across demographics and medical conditions.

• The model's performance could decline when tested on data from new hospitals or regions.

4. CNN Suitability for Tabular Data

• Convolutional Neural Networks (CNNs) are best suited to image-based data, whereas we applied them to structured, tabular data.

• A dense neural network (DNN) or a classical model such as Random Forest could be better suited to this data.


5. Hyperparameter Tuning Complexity

• Finding the best values for filters, dropout rate, learning rate, and batch size requires extensive tuning.

• Improper settings could lead to underfitting or overfitting.

6. Computational Cost

• Training CNN models on large datasets requires significant GPU resources.

• This can be a challenge for individuals or small organizations without access to high-performance hardware.

Limitations

1. Lack of Explainability

• Deep learning models function as "black boxes," meaning it is difficult to understand how they make predictions.

• Doctors may hesitate to rely on AI without clear reasoning behind its decisions.

2. Sensitivity to Data Quality

• Poor-quality or missing data can severely impact the model's accuracy.

• Any bias in the dataset can lead to biased predictions in real-world applications.

3. Limited Real-World Testing

• The model was trained and tested on a single dataset (Breast Cancer Wisconsin Dataset).

• Performance on real-world clinical data is still uncertain.

4. False Positives & False Negatives Impact

• False positives could cause unnecessary stress and additional medical tests for patients.

• False negatives are riskier, as they may miss actual cancer cases and delay crucial treatment.

8. CONCLUSIONS

Early detection of brain tumors can play a significant role in reducing mortality rates globally. Because of the tumor's varying form, size, and structure, correct detection of brain tumors is still highly challenging. Clinical diagnosis and therapy decision-making for brain tumor patients are greatly influenced by the classification of MR images. Early brain tumor identification using MR images and tumor segmentation methods appears promising.

Nevertheless, there is still a long way to go before tumor locations can be precisely recognized and categorized. For the purposes of early brain tumor detection, our study used a variety of MRI brain tumor images. Deep learning models also have a significant impact on classification and detection. We proposed a CNN model for the early detection of brain tumors and obtained promising results using a large number of MR images. We employed a variety of indicators to evaluate the efficiency of the ML models, and, in addition to the proposed model, we considered several other ML models to assess our outcomes. Regarding the limitations of our research, because the CNN had several layers and the computer did not have a good GPU, the training process took a long time; with a larger dataset, such as a thousand images, training would take even longer. After upgrading our GPU system, we reduced the training time.

Future work could identify brain cancers more accurately by using individual patient information gathered from any source.

REFERENCES

[1] Wen Jun & Zheng Liyuan, "Brain Tumor Classification Based on Attention Guided Deep Learning Model," International Journal of Computational Intelligence Systems, vol. 15, p. 35, 2022, doi: https://doi.org/10.1007/s44196-022-00090-9.

[2] Arshia Rehman et al., "A Deep Learning-Based Framework for Automatic Brain Tumors Classification Using Transfer Learning," Circuits, Systems, and Signal Processing, vol. 39, pp. 757–775, 2020, doi: https://doi.org/10.1007/s00034-019-01246-3.

[3] Fernando, T. et al., "Deep Learning for Medical Anomaly Detection – A Survey," ACM Computing Surveys, vol. 54, issue 7, pp. 1–37, 2021, doi: https://doi.org/10.1145/3464423.

[4] Rundo, L. et al., "Semi-automatic Brain Lesion Segmentation in Gamma Knife Treatments Using an Unsupervised Fuzzy C-Means Clustering Technique," Smart Innovation, Systems and Technologies, vol. 54, Springer, Cham, 2016, doi: https://doi.org/10.1007/978-3-319-33747-0_2.