International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN:2395-0072

Utkarsh Tripathi, Priyanka Thapa, Aditi Bais, Ram Gopal Sharma

Abstract

The rise of remote communication has changed how individuals work together in personal, professional, and educational settings. However, traditional video conferencing solutions often lack smart features that increase accessibility and engagement. This study presents MeetSync, a web-based video conferencing platform with AI integration. With features including voice control for essential operations, air canvas drawing with hand gestures, speech-to-text transcription, and automated attendance tracking, MeetSync improves virtual collaboration. MeetSync was built with HTML, CSS, JavaScript, Python, and Django, and integrates APIs such as MediaPipe, ZegoCloud, and the Web Speech API, with the goal of transforming real-time digital communication.

Key Words: Video Conferencing, Artificial Intelligence (AI), Gesture Recognition, Voice Commands, Real-time Transcription, Web Application, Smart Meeting System

1. Introduction

In the current digital era, communication is more widespread than ever before, and virtual platforms are now essential in social, professional, and educational settings. Organizations, educational institutions, and individuals were forced to adopt video conferencing technologies quickly during the COVID-19 pandemic, which greatly increased the need for remote communication. Programs such as Zoom, Google Meet, Microsoft Teams, and Cisco Webex laid the foundation for international communication by providing essential functions including screen sharing, video calling, and messaging.

Although these traditional platforms are widely used, they mostly provide standardized features and frequently lack more advanced levels of automation, intelligence, and user interaction. As users grew increasingly dependent on virtual meetings, the need grew for more intelligent, AI-powered features that could improve accessibility, user engagement, and meeting efficiency. Many users find it difficult and technically demanding to access advanced capabilities such as real-time transcription, gesture control, or voice-activated commands through existing systems, which frequently require additional plugins or third-party integrations.

Given the shortcomings of the available video conferencing tools, there was a clear need for a more user-friendly, interactive, and AI-enabled platform that could help users without adding complexity. This led to the idea and creation of MeetSync, an intelligent web application for video conferencing that incorporates AI. By integrating artificial intelligence directly into the platform's basic features, MeetSync aims to improve the conventional meeting experience. Its features include voice command execution for important meeting controls, real-time speech-to-text transcription via the Web Speech API, automated attendance tracking stored in Excel workbooks using Python's openpyxl library, and air canvas drawing using hand gesture recognition powered by MediaPipe.

Combined, these elements create a platform where users can engage and communicate in dynamic and intelligent ways that were previously impossible or difficult to achieve without complicated setups. MeetSync seeks to improve and democratize virtual collaboration, whether for a teacher using air drawing to illustrate concepts, a business team keeping automated attendance logs, or a user with a disability using voice commands to operate the session.

MeetSync is built with modern web technologies such as HTML, CSS, and JavaScript, and is designed to be scalable and efficient. It is supported by a Django backend and relies on third-party APIs such as ZegoCloud for streaming audio and video. The AI modules are integrated directly into its architecture to support real-time performance.

As a result, MeetSync is not just a substitute for current platforms; rather, it is a next-generation development of virtual meetings that can be more intelligent, accessible, and engaging, opening the door to the future of digital communication.

The increasing need for remote collaboration tools that are more effective, accessible, and interactive has encouraged the development of digital communication platforms. A number of popular video conferencing programs have been created and improved over time to satisfy these user requirements:

Google Meet: Provides reliable audio and video communication along with smooth integration into the Google Workspace ecosystem. Breakout rooms, live captioning, and simple meeting controls are among its features. Nevertheless, it lacks sophisticated AI-powered features such as intelligent session management and gesture-based controls.

Microsoft Teams: Offers excellent video conferencing along with project management, chat, and collaborative document sharing features. Although its AI capabilities have improved recently with features such as automated meeting recaps and noise reduction, real-time gesture control and automated attendance tracking are still limited.

Zoom: Well known for its intuitive user interface, Zoom supports webinars, breakout rooms, and transcription services. Although it recently released AI-powered meeting summaries through its AI Companion, gesture-driven interactivity and air canvas drawing features are still not fully integrated.

Cisco Webex: Intended for business customers, Webex has AI-powered capabilities including noise suppression, meeting highlights, and real-time translation. Despite these advancements, the platform does not yet have fully integrated smart automation features or personalized gesture-based controls.

At the same time, several new platforms and research prototypes are investigating more sophisticated AI integrations in video conferencing systems:

Spatial: Concentrates on using VR and AR technologies to create 3D virtual meeting spaces for engaging teamwork. However, its practical adoption by regular video conferencing users is limited by its significant reliance on specialized VR equipment.

Verizon’s BlueJeans: Uses AI to provide performance analytics, highlights, and meeting transcripts. However, it has not made considerable use of interactive drawing tools such as an air canvas or gesture-based control systems.

Prototypes for Academic Research: Several research projects have used frameworks such as MediaPipe and OpenCV to study gesture-controlled communication. AI-powered note-taking, participant behavior analysis, and touchless interactions have also been integrated into experimental systems. Many of these prototypes, however, are still in the early stages of development and have not yet been widely adopted by the public.

Comparative Summary:

Although some AI elements have been included in current platforms, a genuinely unified platform that integrates gesture control, real-time voice transcription, voice-command-driven meeting control, interactive air canvas drawing, and automated attendance tracking is conspicuously lacking.

MeetSync fills this gap by providing a comprehensive, AI-powered video conferencing solution that enhances user engagement, accessibility, and remote collaboration.

3. Proposed Methodology

3.1 Objectives

• To develop a sophisticated online communication tool.

• To incorporate AI technologies for improved user engagement.

• To incorporate intelligent elements to increase meeting productivity.

3.2 Technologies Used

Frontend:

o HTML, CSS, and JavaScript

o ZegoCloud UIKit Prebuilt SDK

o Web Speech API (SpeechRecognition)

Backend:

o Python

o Django Framework

o OpenCV + MediaPipe (Air Canvas)

o openpyxl (Excel Attendance Logging)

External APIs/Libraries:

o ZegoCloud SDK for audio and video calls

o Web Speech API for voice commands and transcription

3.3 Key Features

1. Video Conferencing (ZegoCloud SDK Integration)

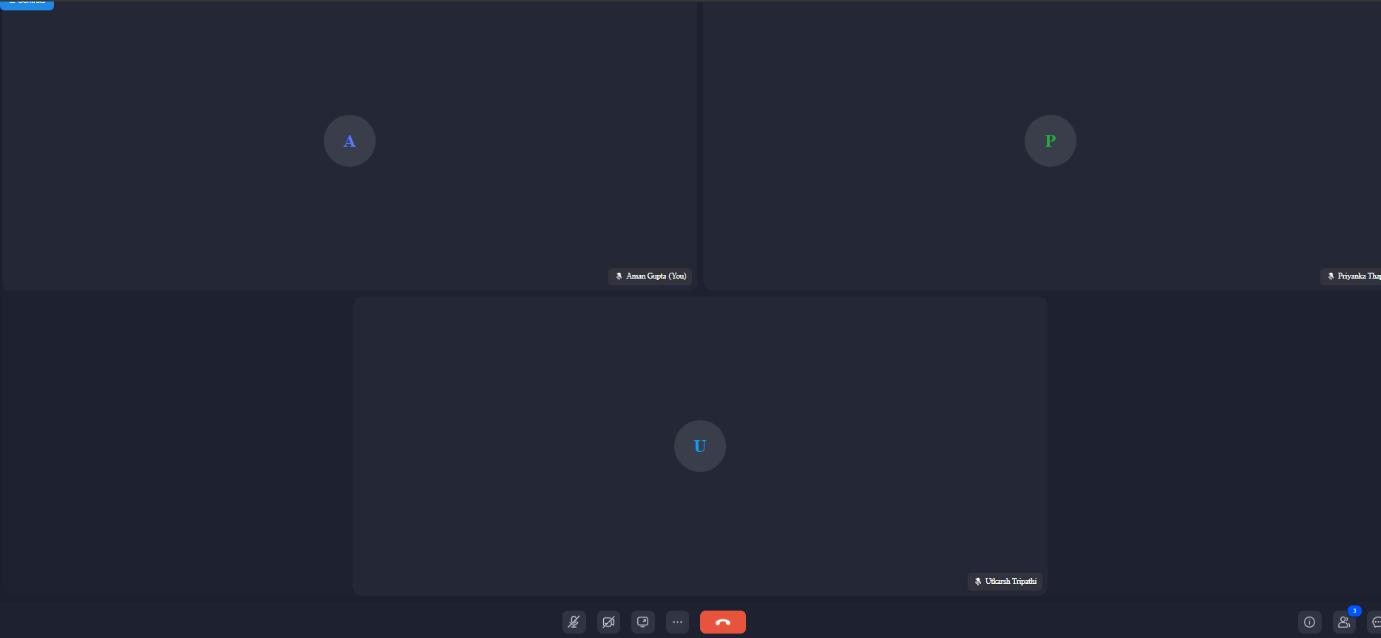

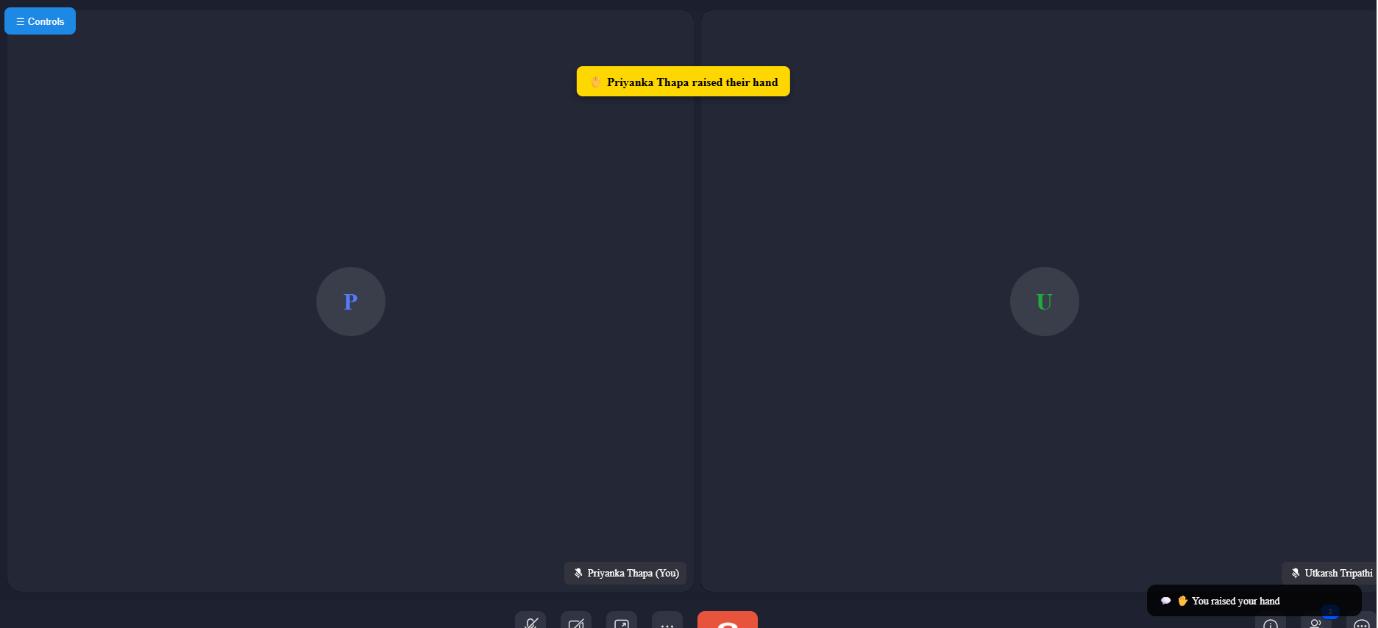

MeetSync provides reliable, low-latency, real-time video conferencing through the ZegoCloud SDK. When a user enters a valid Room ID, a secure signaling system establishes a connection to ZegoCloud's servers. Audio and video data transfer is supported by WebRTC (Web Real-Time Communication) protocols, which provide encrypted peer-to-peer streaming when feasible and rely on TURN servers for NAT traversal. To provide a smooth user experience, the ZegoCloud SDK continuously adjusts audio and video quality in response to network fluctuations. Additional features include in-room text chat for side conversations and screen sharing, which captures and broadcasts a user's screen. As illustrated in Figure 1, participants have fine-grained control over their microphones and cameras, and can leave meetings without disturbing other participants.

Working Flow:

Join Meeting → Access Media Devices → Stream to Server → Distribute Streams to Other Participants

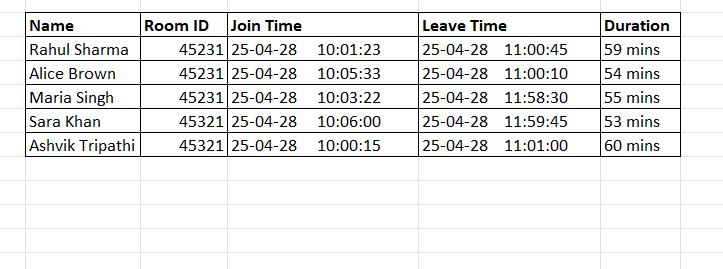

2. Attendance Management (Automated Logging with openpyxl)

MeetSync's backend includes an automated attendance recording mechanism. A user's name, timestamp, and Room ID are recorded using Django session data as soon as they enter a meeting. A Python script then uses the openpyxl module to append this data automatically to an Excel workbook.

For every meeting, a distinct file is created with a name of the form Attendance_<RoomID>_<Date>.xlsx. To provide more thorough session logs, each participant's join and exit times are also recorded. As Figure 2 illustrates, this system preserves data for future audits, reports, or certification procedures while eliminating the need for manual roll calls.

Working Flow:

User Joins → Django Captures Details → openpyxl Writes to Excel → Save File
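The logging flow above can be sketched in a few lines of Python. This is only an illustrative sketch, not the project's actual script: the function names, the column layout in HEADER, and the ISO date format used in the file name are assumptions, since the paper specifies only the Attendance_<RoomID>_<Date>.xlsx pattern.

```python
from datetime import datetime
from pathlib import Path

HEADER = ["Name", "Room ID", "Joined At"]  # assumed column layout


def attendance_filename(room_id: str, when: datetime) -> str:
    """Build the per-meeting workbook name Attendance_<RoomID>_<Date>.xlsx."""
    # The YYYY-MM-DD date format is an assumption.
    return f"Attendance_{room_id}_{when:%Y-%m-%d}.xlsx"


def log_join(directory: Path, name: str, room_id: str, when: datetime) -> Path:
    """Append one join record, creating the workbook on first use."""
    # Imported lazily so the filename helper works without openpyxl installed.
    from openpyxl import Workbook, load_workbook

    path = directory / attendance_filename(room_id, when)
    if path.exists():
        workbook = load_workbook(str(path))
        sheet = workbook.active
    else:
        workbook = Workbook()
        sheet = workbook.active
        sheet.append(HEADER)  # write the header row once per file
    sheet.append([name, room_id, when.strftime("%H:%M:%S")])
    workbook.save(str(path))
    return path
```

In a Django view, a helper like log_join would be called with the name and Room ID read from the session when the user enters the meeting room.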

Figure 2: Screenshot of the auto-generated Excel attendance sheet created using Django sessions and the openpyxl library.

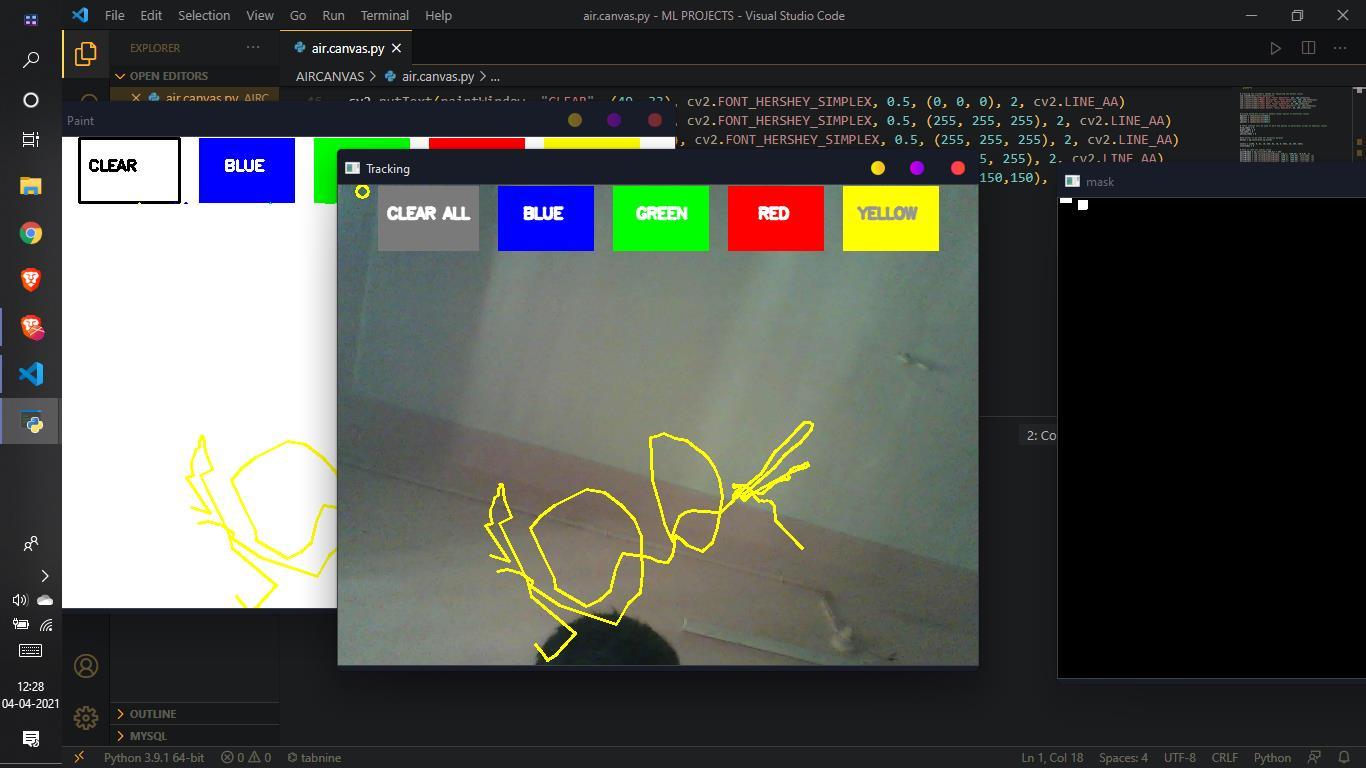

3. Air Canvas Module (Hand Gesture-based Drawing System)

With the Air Canvas, users can sketch with hand motions on an easy-to-use virtual whiteboard without ever touching a device. OpenCV is used to process the camera feed continuously, frame by frame. Key points (landmarks) on the user's hand, particularly the index fingertip, are detected by MediaPipe Hands.

The system interprets an extended index finger with the remaining fingers closed as "drawing mode." The coordinates (x, y) of the fingertip are recorded and plotted as lines on the canvas. Other hand gestures change the pen color, undo strokes, and clear the canvas. This creates a lively, participatory environment that is particularly helpful for technical diagrams, brainstorming sessions, and lectures.

Working Flow:

Capture Frame → Detect Hand Landmarks → Map Finger Movements → Draw on Canvas
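The "drawing mode" test can be illustrated with a simple heuristic over the 21 hand landmarks that MediaPipe Hands reports (index fingertip is landmark 8, and each fingertip sits two indices above its PIP joint). The sketch below is an assumption about how such a check might look, not the authors' implementation: it compares fingertip and joint heights for an upright hand, ignores the thumb, and takes landmarks as plain (x, y) pairs in normalized image coordinates rather than MediaPipe result objects.

```python
# MediaPipe Hands landmark indices: fingertip and the PIP joint below it.
INDEX_TIP, INDEX_PIP = 8, 6
OTHER_FINGERS = [(12, 10), (16, 14), (20, 18)]  # middle, ring, pinky (tip, PIP)


def is_drawing_pose(landmarks):
    """Return True when only the index finger is extended.

    landmarks: sequence of 21 (x, y) pairs in normalized image coordinates.
    Image y grows downward, so an extended finger has tip y < PIP y.
    Assumes an upright hand; the thumb is ignored for simplicity.
    """
    index_up = landmarks[INDEX_TIP][1] < landmarks[INDEX_PIP][1]
    others_down = all(
        landmarks[tip][1] > landmarks[pip][1] for tip, pip in OTHER_FINGERS
    )
    return index_up and others_down


def extend_stroke(stroke, landmarks, width, height):
    """Append the fingertip pixel position to the stroke while in drawing mode."""
    if is_drawing_pose(landmarks):
        x, y = landmarks[INDEX_TIP]
        stroke.append((int(x * width), int(y * height)))
    return stroke
```

Consecutive points in the stroke list can then be joined with line segments (for example with OpenCV's cv2.line) to render the drawing on the canvas.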

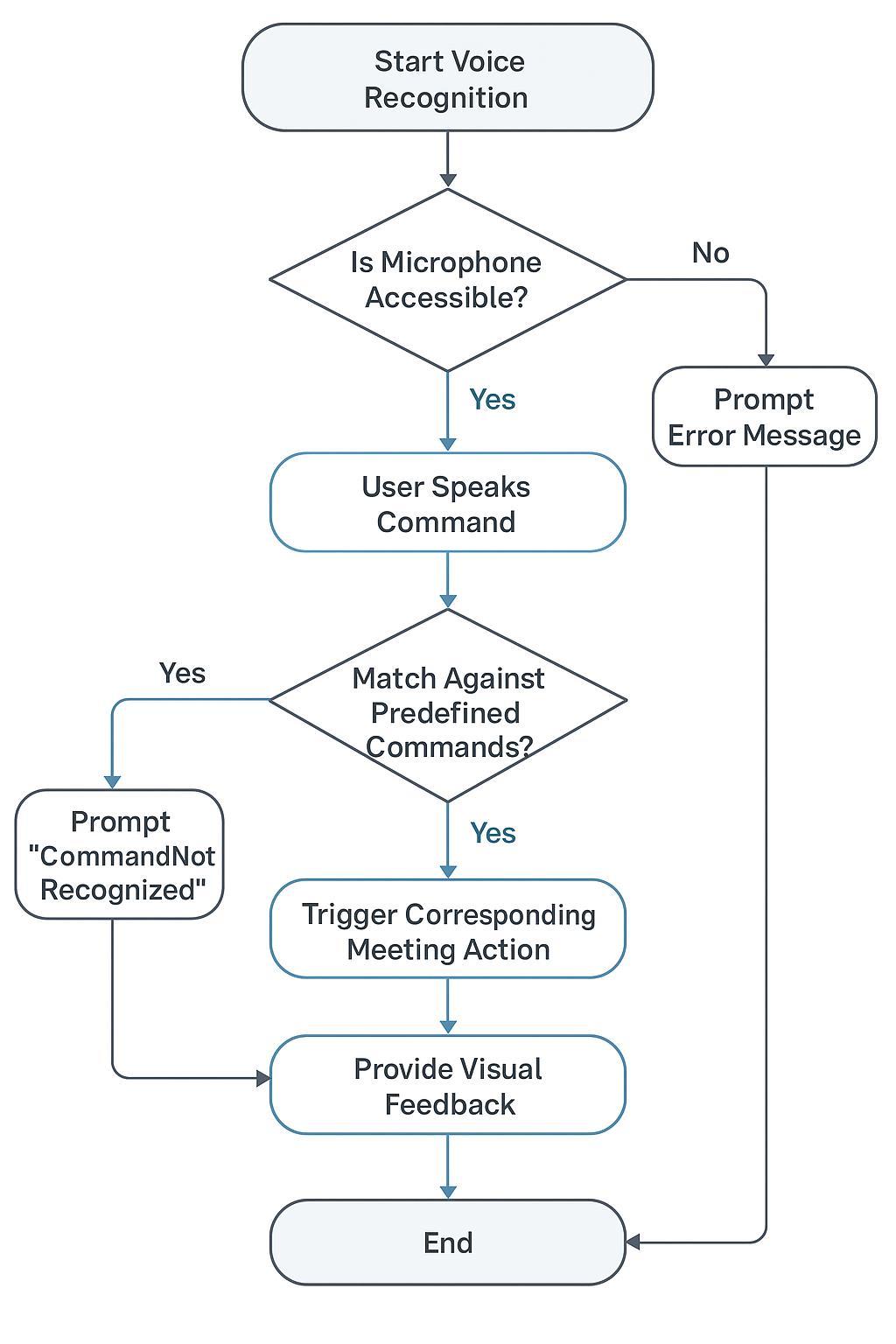

4. Voice Command System (Hands-free Meeting Control)

A voice command recognition mechanism is also included in MeetSync to further improve accessibility. Once activated, the Web Speech API listens continuously for particular words or phrases. Recognized utterances trigger JavaScript routines linked to the matching meeting actions (such as mute mic, leave meeting, and open Air Canvas). The system uses voice activity detection (VAD) to minimize battery and data usage and to optimize listening intervals. For ease of use, commands are simple, everyday language expressions. As shown in Figure 3, an error-handling procedure gives feedback that prompts users to retry commands that are not successfully detected.

Working Flow:

Activate Voice Recognition → Detect Command → Execute Meeting Action
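The detect-and-execute step amounts to mapping a recognized transcript onto a known action, with a retry prompt when nothing matches. In MeetSync this logic runs in browser JavaScript; the Python sketch below only illustrates the matching idea. The phrases are those mentioned in this paper, while the action names and the feedback message are hypothetical.

```python
# Spoken phrase -> action identifier (action names are hypothetical).
COMMANDS = {
    "mute mic": "mute_microphone",
    "turn off camera": "disable_camera",
    "start transcription": "start_transcription",
    "leave meeting": "leave_meeting",
    "open air canvas": "open_air_canvas",
}


def match_command(transcript):
    """Return the action for the first known phrase found in the transcript, else None."""
    # Lowercase and collapse whitespace so matching is tolerant of spacing and case.
    text = " ".join(transcript.lower().split())
    for phrase, action in COMMANDS.items():
        if phrase in text:
            return action
    return None


def handle_utterance(transcript):
    """Return user feedback: the action to run, or a prompt to retry."""
    action = match_command(transcript)
    if action is None:
        return "Command not recognized, please try again."
    return f"Executing {action}"
```

Substring matching keeps the commands forgiving of filler words ("please mute mic now"), at the cost of occasional false triggers during ordinary conversation.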

Figure 3: Flowchart illustrating the process of voice input recognition and corresponding meeting action execution.

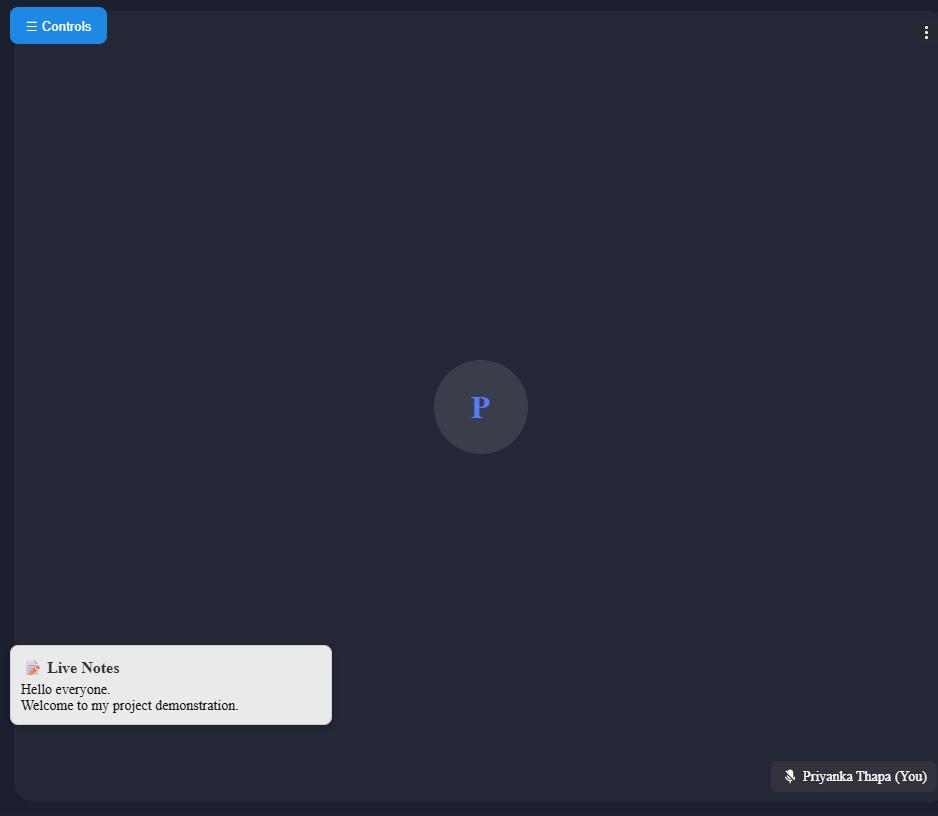

5. Speech-to-Text Notes (Real-time Meeting Transcription)

With real-time transcription, users can record conversations, decisions, and ideas without taking notes by hand. Once enabled, the Web Speech API captures audio from the user's microphone. To convert the recorded speech into text, pauses, intonation, and simple punctuation are handled.

On the MeetSync interface, the live transcription is displayed in a text box. The transcription is available for download as a .txt file at the conclusion of the meeting, which makes it portable and easy to share. As Figure 4 illustrates, this function is important for minute-taking, for hearing-impaired users, and for producing legal meeting documentation.

Working Flow:

Enable Transcription → Capture Microphone Input → Convert to Text → Display & Save Notes
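The display-and-save step can be pictured as a buffer that collects finalized speech segments and writes them out as plain text. The sketch below is illustrative only and written in Python for consistency with the backend; the Transcript class and its method names are hypothetical, and in the browser the same role would be played by JavaScript handling the recognizer's final results.

```python
from pathlib import Path


class Transcript:
    """Collects finalized speech segments in the order they were spoken."""

    def __init__(self):
        self.lines = []

    def add_final(self, text):
        """Store one finalized segment, skipping empty results."""
        cleaned = text.strip()
        if cleaned:
            self.lines.append(cleaned)

    def as_text(self):
        """Return the notes as newline-terminated plain text."""
        if not self.lines:
            return ""
        return "\n".join(self.lines) + "\n"

    def save(self, path):
        """Write the notes to a .txt file for download or sharing."""
        Path(path).write_text(self.as_text(), encoding="utf-8")
```

Keeping only finalized segments avoids storing the interim guesses a speech recognizer emits while a sentence is still being spoken.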

6. Raise Hand Feature (Structured Communication Assistance)

With its Raise Hand function, MeetSync promotes orderly conversation, which is essential in larger meetings. Participants can click a "Raise Hand" button to identify themselves visually and send an event notification to all users. The presenter or host can order the speakers according to the sequence in which they raised their hands. After a participant has finished speaking, either the host or the participant can select "Lower Hand," which removes the notification. As seen in Figure 5, this maintains a disciplined discussion flow, avoids interruptions, and ensures inclusion for more reserved participants.

Working Flow:

User Clicks Raise Hand → Broadcast Event → Host Manages Speaker Queue
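The host-side speaker queue can be modeled as an ordered set of participants: hands are served in the order they were raised, and lowering a hand removes the entry. The following is a minimal sketch under that assumption; the SpeakerQueue class and its method names are hypothetical and do not come from the MeetSync code base.

```python
class SpeakerQueue:
    """Tracks raised hands in first-come, first-served order."""

    def __init__(self):
        # A dict preserves insertion order, giving an ordered set of users.
        self._raised = {}

    def raise_hand(self, user):
        """Add the user to the queue; raising twice keeps the original position."""
        self._raised.setdefault(user, True)

    def lower_hand(self, user):
        """Remove the user, whether triggered by the host or the participant."""
        self._raised.pop(user, None)

    def queue(self):
        """Return the waiting speakers, earliest raised hand first."""
        return list(self._raised)

    def next_speaker(self):
        """Return who should speak next, or None if no hands are raised."""
        return next(iter(self._raised), None)
```

For example, if Asha and then Ben raise their hands, next_speaker() returns Asha until her hand is lowered, after which it returns Ben.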

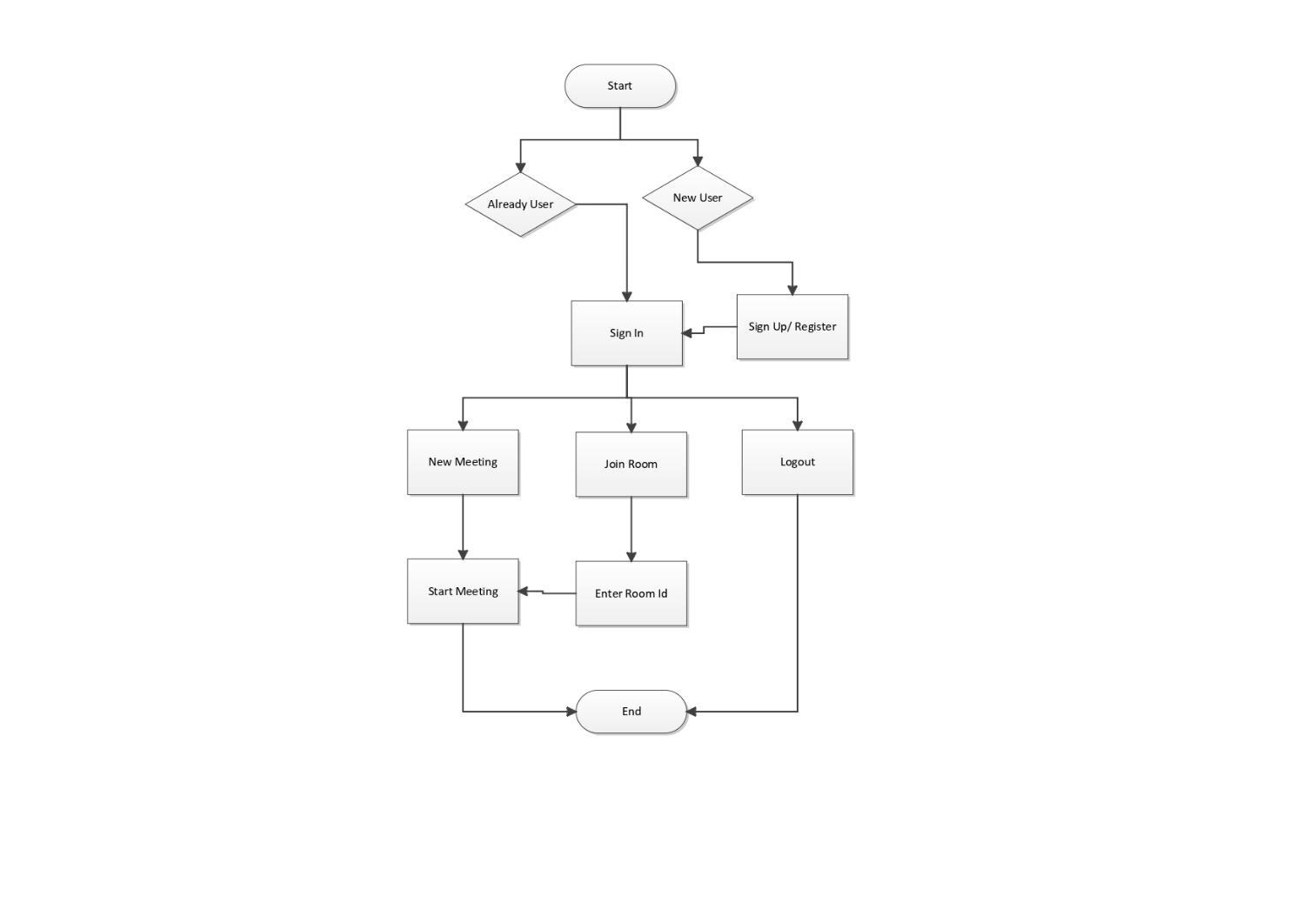

The MeetSync workflow is shown in Figure 6. Users can create or join a meeting following Django session authentication. Features such as voice commands, air canvas, raise hand, and transcription are available during the session. Each session's attendance and notes are recorded.

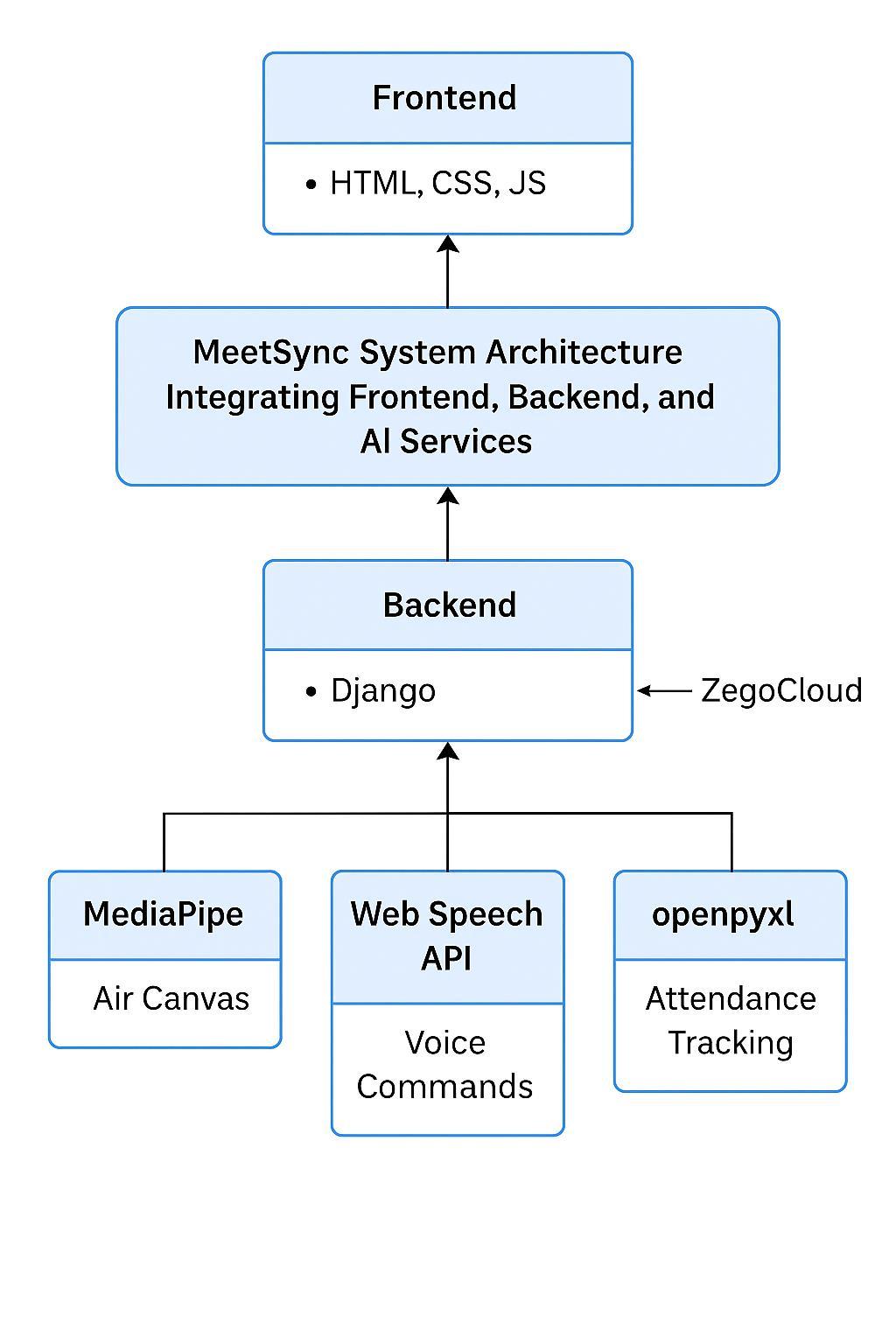

3.5 System Architecture

The MeetSync architectural layout is shown in Figure 7. It comprises a Django backend linked to a frontend interface built with HTML, CSS, and JavaScript. ZegoCloud handles media streaming. Data storage is managed both locally and on the server side, and AI features are provided by MediaPipe (air canvas) and the Web Speech API (speech recognition).

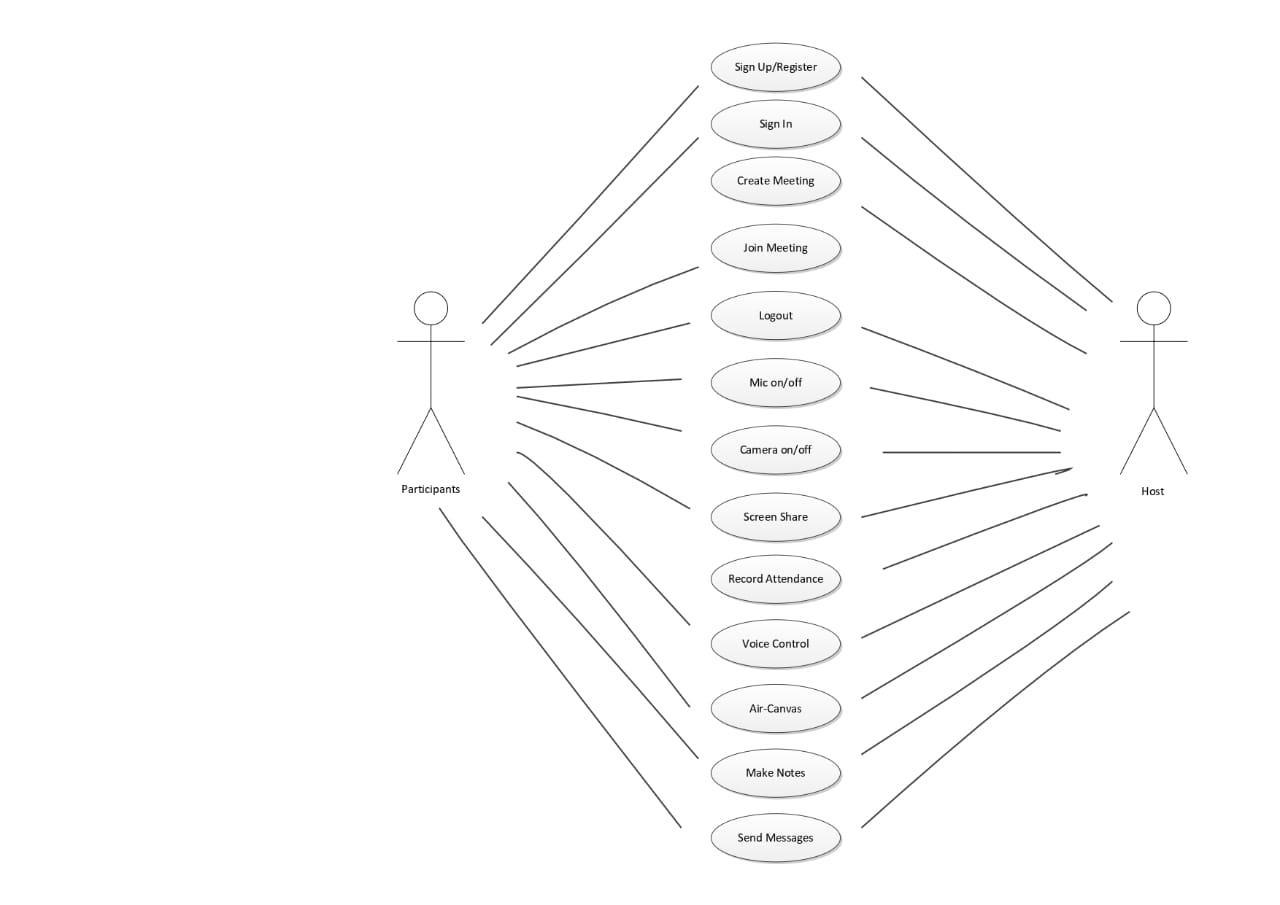

3.6 User Interaction and Functional Use Cases

The user interactions within the MeetSync system are depicted in Figure 8. It highlights actions such as raising a hand, joining a meeting, turning on Air Canvas, using speech-to-text, and giving voice commands. The host additionally controls the management of the meeting.

4. Results & Discussion

MeetSync has been tested in a number of live meeting settings. The findings support its efficiency and usefulness across major features.

4.1 Air Canvas Performance

Using MediaPipe, the air canvas demonstrated high-accuracy real-time gesture recognition. No noticeable lag or misrecognition occurred when users drew.

4.2 Voice Command Accuracy

Voice commands had low latency and were correctly interpreted. Commands such as "mute mic," "turn off camera," and "start transcription" were tested and were all successfully executed via the Web Speech API.

4.3 Attendance Logging

A well-organized attendance log in Excel format was automatically created for each meeting. The approach proved helpful in professional and educational contexts where participant tracking is crucial.

4.4 User Experience

MeetSync's smart features were easy for users to understand. AI tools improved engagement and made meeting management easier. The raise hand and speech-to-text functions were especially well liked.

5. Conclusion

MeetSync is an innovative, intelligent platform for collaboration that effectively combines AI-powered capabilities with conventional video conferencing. Accessibility and productivity are increased through automated tracking, gesture control, and speech recognition. Future iterations might include calendar integration, collaborative whiteboards, large language model-powered meeting summaries, and improved backend communication modules.
