International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 06 | Jun 2025 www.irjet.net p-ISSN: 2395-0072

Madhuri Maruti Mangutkar1, Prof. A. S. Garande2

1 PG Student, SPPU

2 Prof., Zeal College of Engineering & Research, Narhe, Pune - 411041

Abstract – The Food Recognition System (FRS) is a technical advancement that blends artificial intelligence and computer vision. In a world with a great deal of culinary diversity, the FRS is an effective tool for automating the identification and classification of different food products using image analysis. We suggest using the very accurate deep learning model VGG30 for food recognition. Convolutional neural networks (CNNs) like VGG30 are made especially for classifying images. The model can accurately identify a variety of food items because it is trained on a dataset of food photos. To use VGG30 for food recognition, a collection of food photos must first be produced, with each image labelled with the type of food it depicts. The preprocessed dataset can then be used to train the VGG30 model. First, because of its deep architecture, the model can capture the fine details of many food products and perform well even in difficult situations with changing backgrounds, lighting, and angles. Second, because of its scalability, VGG30 can manage big datasets with ease, which makes it appropriate for applications where there may be a significant volume and variety of food photos. Furthermore, the model gains from transfer learning, which minimizes the requirement for labelled food data and expedites development. Its interpretability makes it easier to comprehend how it identifies food items, which improves openness and confidence. VGG30 is also a dependable option for precise food recognition across a variety of domains because of its broad community support and cutting-edge performance. Lastly, because of its versatility, it can be adjusted to particular jobs or preferences, which makes it a good fit for the Food Recognition System.

Key Words: Continual Learning, Long-Tailed Distribution, Food Recognition

1. INTRODUCTION

A. Continual Learning

In the domains of artificial intelligence and machine learning, the concept of continual learning, also referred to as lifelong learning or incremental learning, presents a paradigm shift. Its main goal is to tackle the challenge of adapting models to changing data distributions over time. Unlike conventional machine learning methods that assume a fixed dataset, continual learning aims to empower models to continuously learn from new information while retaining knowledge gained from past experiences. This dynamic framework is especially vital in practical applications where data is non-stationary, as it enables models to stay pertinent and efficient in the midst of evolving circumstances.

B. Long-Tailed Distribution

A long-tailed distribution is a statistical concept that shows a notable accumulation of events in the tail section of the distribution. In the tail, unusual events or extreme values are more common than they would be in a standard distribution. Unlike a normal distribution, where data points are concentrated around the mean and fall off rapidly away from it, a long-tailed distribution displays an extended and frequently dense tail, signifying a greater occurrence of rare events. This distribution pattern is widespread in different practical situations, such as income distribution, web traffic, and the prevalence of uncommon diseases.
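As a rough illustration of what a long-tailed class distribution looks like for an image dataset, the sketch below generates per-class sample counts that decay exponentially from a head class to a tail class. The function name `long_tailed_counts` and the parameters `max_count` and `imbalance_ratio` are assumptions chosen for this example; the paper does not specify how its class imbalance is produced.

```python
def long_tailed_counts(num_classes, max_count, imbalance_ratio):
    """Return per-class sample counts decaying exponentially.

    The first (head) class gets `max_count` samples and the last (tail)
    class gets roughly `max_count / imbalance_ratio` samples.
    All names here are illustrative assumptions.
    """
    if num_classes < 1:
        raise ValueError("num_classes must be at least 1")
    if num_classes == 1:
        return [max_count]
    counts = []
    for k in range(num_classes):
        position = k / (num_classes - 1)          # 0.0 for head, 1.0 for tail
        count = max_count * imbalance_ratio ** (-position)
        counts.append(max(1, round(count)))       # keep at least one sample
    return counts


counts = long_tailed_counts(num_classes=5, max_count=1000, imbalance_ratio=100)
print(counts)
```

With an imbalance ratio of 100, the head class holds about one hundred times as many images as the tail class, which is the kind of skew that makes rare food categories hard to learn.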

C. Food Recognition

Within computer vision and artificial intelligence, food recognition is a fascinating and quickly developing field. It entails creating models and algorithms that can recognize and classify different foods that are portrayed in images or videos. Because social media and smartphones are so widely used, there is an increasing need for food-related material to be shared, which makes automatic food recognition both a technical challenge and a practical need. The field aims to mimic human visual perception and allow computers to discriminate between different dishes, ingredients, and culinary traditions through the use of state-of-the-art machine learning techniques such as deep neural networks.

Research by Jiangpeng He et al. [1] showed the efficacy of deep learning methods in a range of image-based nutrition assessment applications. Food portion size estimation and food categorization are two examples of these uses. However, current approaches focus on single tasks, which causes problems when numerous tasks need to be completed in parallel in real-world circumstances. The authors used a multi-task learning strategy to address this issue, training both the classification and regression tasks at the same time through the use of soft parameter sharing based on the L2-norm. To improve food portion size estimation accuracy, the authors also suggested combining cross-domain feature adaptation with normalization. The authors' results outperform the existing approaches for portion assessment in terms of mean absolute error and classification accuracy, showing great promise for the advancement of image-based nutritional evaluation.

According to Xinyue et al. [2], food item categorization is critical to image-based dietary assessment since it allows for the measurement of nutrient intake from photographed food. Existing food classification research, however, is more concerned with defining "food types" than with offering precise nutritional content information. This restriction results from differences in nutrition databases, which are in charge of connecting every "food item" to the pertinent data. Thus, categorizing food products using the nutrition database is the study's goal. To do this, the authors present the VFN-nutrient dataset, which contains information on the nutritional makeup of every food image in VFN. Since food items are classified more finely than food categories, the dataset has a hierarchical structure as a result.

An approach that uses the teacher network's softmax output as additional guidance for training the student network during the early phases of Knowledge Distillation (KD) was introduced by Guo-Hua Wang and colleagues [3]. On the other hand, it has been noted that the output of a high-capacity network does not always match the ground truth labels. Moreover, the performance of the student model is negatively impacted by the classifier layer, since the softmax output is less informative than the representation in the penultimate layer. KD also has problems when it comes to distilling teacher models obtained from unsupervised or self-directed learning. Lately, there has been a greater emphasis on feature distillation, with less attention paid to directly replicating the teacher's features in the penultimate layer. The problem with class-imbalanced data, according to Seulki Park et al. [4], is that the lack of data from minority classes reduces the prediction accuracy of the classifier.
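The idea of training a student on the teacher's softmax output can be sketched as a soft-target loss. The example below is a minimal illustration, assuming a temperature-scaled softmax and a Kullback-Leibler divergence between teacher and student distributions; the function names, the temperature value, and the logits are hypothetical and are not taken from [3].

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature gives softer targets."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)                      # subtract max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the teacher's soft targets to the student's output."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return sum(t * (math.log(t) - math.log(s))
               for t, s in zip(teacher, student) if t > 0)


# Hypothetical logits for a three-class problem.
matched = distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
mismatched = distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0])
print(matched, mismatched)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge. Feature-mimicking variants instead compare penultimate-layer representations, which this sketch does not cover.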

To address this issue, the authors put forth a minority oversampling methodology that diversifies minority samples by using background images that provide abundant context supplied by majority classes. Using rich-context images from a majority class as background images overlaid with images from a minority class is the primary method for diversifying the minority samples. This approach is simple to use and may be combined with other well-known recognition methods without any problems. The proposed oversampling technique is empirically validated by ablation studies and large-scale experiments. Jiangpeng He and his associates [5] have put forward a paradigm in which the first step towards image-based dietary assessment is food classification.
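The overlay idea can be sketched with plain nested lists standing in for images. This is a simplified illustration, not the procedure of [4]: the helper `paste_patch`, the fixed patch location, and the use of the pasted area fraction as a label weight (a CutMix-style assumption) are all choices made for this example.

```python
def paste_patch(background, foreground, top, left, height, width):
    """Overlay a rectangular patch of `foreground` onto a copy of `background`.

    Both images are equally sized 2-D lists of pixel values. Returns the mixed
    image and the fraction of its area that comes from `foreground`.
    """
    rows, cols = len(background), len(background[0])
    inside = 0 <= top and top + height <= rows and 0 <= left and left + width <= cols
    if not inside:
        raise ValueError("patch must lie inside the image")
    mixed = [row[:] for row in background]        # copy so the input is untouched
    for r in range(top, top + height):
        for c in range(left, left + width):
            mixed[r][c] = foreground[r][c]
    return mixed, (height * width) / (rows * cols)


majority_image = [[0] * 4 for _ in range(4)]      # stands in for a context-rich majority sample
minority_image = [[1] * 4 for _ in range(4)]      # stands in for a minority-class sample
mixed, minority_weight = paste_patch(
    majority_image, minority_image, top=1, left=1, height=2, width=2
)
print(mixed, minority_weight)
```

In practice the patch position and size would be sampled at random for each training example, so that the same minority image appears in many different contexts.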

Predicting the kinds of food in each input image is the aim. In real-world situations, on the other hand, food types are usually investigated over long periods of time to determine which types are consumed more frequently than others. This leads to a serious issue of class imbalance, which undermines the framework's overall efficacy. Furthermore, since none of the current long-tailed classification algorithms are specifically designed to handle food data, there are other issues arising from the similarities between different food classes and the variability within each class. This research study presents Food101-LT and VFN-LT, two new benchmark datasets for long-tailed food classification, in an effort to address these issues. The VFN-LT dataset is very important since it shows that a real long-tailed food distribution exists. A two-phase strategy is also put forward to deal with the problem of class imbalance.

The use of meal photos has greatly enhanced the capabilities of deep learning-based food recognition in distinguishing various food types. However, two main obstacles prevent its real-world implementation. First, a trained model needs to be able to learn new foods while retaining its memory of previously recognized ones in order to prevent catastrophic forgetting. Second, the uneven distribution of food images in real-world scenarios, with certain popular food types being more prevalent, poses a challenge. It is imperative to learn from imbalanced data to improve the system's ability to recognize less common food classes. Our project aims to tackle these challenges through long-tailed continual learning.

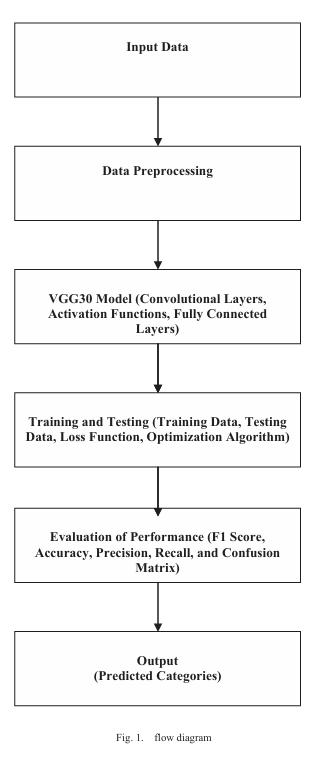

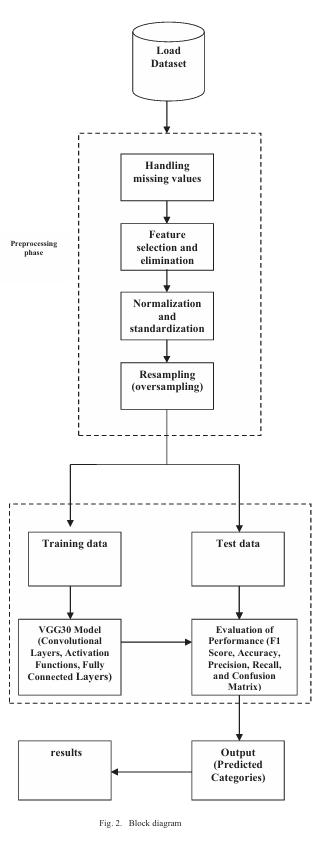

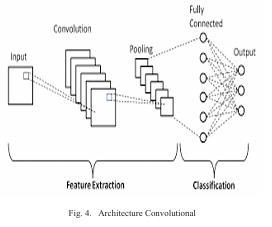

Food Recognition Systems (FRS) represent a cutting-edge solution to the complex and widespread issues associated with food identification, marking a significant advancement in the realm of technological innovation. At a time when visual content dominates the digital world, the FRS harnesses the power of computer vision and artificial intelligence to interpret and classify a vast array of culinary options. The convolutional neural network (CNN) used in the proposed food recognition system is based on VGG30 and is trained on a dataset of food images. The CNN is able to recognize foods in new images by applying its understanding of the distinctive qualities of many dishes. The initial stage of the system's process involves preparing the input image, which may involve resizing it, standardizing its pixel values, and converting it to a format compatible with VGG30. The preprocessed image is then fed into the VGG30 model. The model predicts the type of food depicted in the image by using features that it has extracted from the image. The output of the model is a prediction of the kind of food seen in the picture. This prediction can be used to recognize the food or to perform other tasks, such as recommending recipes or providing additional information about the food.
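The final step of this pipeline, turning the network's raw class scores into a food label, can be sketched as follows. The scores and class names are hypothetical, and the functions `softmax` and `predict_food` are illustrative helpers rather than part of any particular framework.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)                      # subtract max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_food(logits, class_names):
    """Return the most probable class name and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


# Hypothetical scores for three illustrative classes.
label, confidence = predict_food([1.2, 3.4, 0.3], ["pizza", "salad", "soup"])
print(label, round(confidence, 3))
```

The returned label could then be passed on to downstream tasks such as recipe recommendation, while the probability gives a rough indication of how confident the model is.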

A. Load Input

During the initial phase of developing a food recognition system with VGG30, the input data is collected. This dataset consists of various images showing different food items, which will be used for training and testing the neural network. The foundation for the categorization procedure is laid by the fact that every image in the collection is associated with a certain food group. The preparation of the input data involves organizing and preparing the dataset to be fed into the neural network for the upcoming training stage.
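One common way to associate every image with a food group is to store images in one folder per class and derive the label from the folder name. The sketch below assumes that layout; the paths and class names are made up for illustration and nothing here reflects the actual dataset used in this work.

```python
from pathlib import PurePosixPath


def label_from_path(path):
    """Use the name of the parent folder as the class label (assumed layout)."""
    return PurePosixPath(path).parent.name


def build_index(paths):
    """Map each image path to an integer class id.

    Returns a list of (path, class_id) pairs and the class-name-to-id mapping.
    """
    class_names = sorted({label_from_path(p) for p in paths})
    class_to_id = {name: i for i, name in enumerate(class_names)}
    samples = [(p, class_to_id[label_from_path(p)]) for p in paths]
    return samples, class_to_id


# Hypothetical file listing.
paths = ["data/pizza/001.jpg", "data/salad/001.jpg", "data/pizza/002.jpg"]
samples, class_to_id = build_index(paths)
print(class_to_id)
print(samples)
```

Sorting the class names before assigning ids keeps the mapping stable between runs, which matters when a trained model is later reloaded for prediction.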

B. Data Preprocessing

Upon loading, the data is preprocessed through various stages to ensure its suitability for training. These steps include uniformly scaling the images, normalizing the pixel values to a standard range, and potentially augmenting the data by rotating or flipping it. Data preparation considerably boosts the model's capacity to generalize patterns from the training set to new, unseen cases.
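The three preprocessing steps named above can be sketched on a tiny grayscale image stored as nested lists. This is only an illustration: nearest-neighbour resizing, the [0, 1] target range, and horizontal flipping are assumed choices, and a real pipeline would normally rely on an image library.

```python
def resize_nearest(image, out_h, out_w):
    """Resize a 2-D image to (out_h, out_w) with nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    return [[image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def normalize(image, max_value=255.0):
    """Scale pixel values from [0, max_value] to [0, 1]."""
    return [[pixel / max_value for pixel in row] for row in image]


def horizontal_flip(image):
    """Mirror the image left to right (a simple augmentation)."""
    return [row[::-1] for row in image]


raw = [[0, 255], [128, 64]]                 # hypothetical 2x2 grayscale image
resized = resize_nearest(raw, 4, 4)         # uniform size for every input
prepared = normalize(resized)               # standard value range
augmented = horizontal_flip(prepared)       # extra training variant
print(augmented)
```

Augmented copies are typically generated only for the training set, so that the test set continues to reflect unmodified images.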

C. Feature Selection

In traditional machine learning approaches, "feature selection" is applied explicitly to identify the important components within images. Deep learning models such as VGG, however, learn significant features autonomously during the training process, which eliminates the need for explicit feature selection. The convolutional layers of VGG are specifically engineered to automatically recognize hierarchical features within images.

D. Training and Testing

Once the data has been preprocessed, the VGG30 model is trained using a specific portion of the data referred to as the training set. During this training phase, the model learns to identify traits and patterns in the photos that correlate to various food groups. The testing set is a distinct subset of the dataset that is used to assess how well the model generalizes to new and unseen examples. It is important to remember that this testing set is not available to the model during the training process. The training process involves adjusting the model's weights based on the calculated error or loss at each iteration.
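Two ideas in this subsection, keeping the test set disjoint from the training set and adjusting weights from the loss at each iteration, can be sketched in a few lines. The split fraction, the seed, the learning rate, and the one-parameter quadratic loss are illustrative assumptions standing in for a real network and dataset.

```python
import random


def train_test_split(samples, test_fraction=0.2, seed=0):
    """Shuffle a copy of `samples` and split it into disjoint train/test lists."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def sgd_step(weights, gradients, learning_rate):
    """Move each weight a small step against its loss gradient."""
    return [w - learning_rate * g for w, g in zip(weights, gradients)]


train_set, test_set = train_test_split(range(10), test_fraction=0.2)

# Toy training loop: minimise the loss (w - 3)^2, whose gradient is 2 * (w - 3).
weights = [0.0]
for _ in range(100):
    gradients = [2.0 * (weights[0] - 3.0)]
    weights = sgd_step(weights, gradients, learning_rate=0.1)
print(len(train_set), len(test_set), weights)
```

In a real network the gradients come from backpropagation over a batch of training images, but the update rule has the same shape.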

E. Performance Evaluation

After training and testing, the efficacy of the VGG30 food recognition system is assessed using a variety of metrics, including accuracy, precision, recall, and F1 score. These metrics provide important information about how well the system classifies food items. A high accuracy level indicates the system's proficiency in categorizing most cases, while precision and recall shed light, respectively, on its ability to make correct positive predictions and to identify all positive instances. Performance evaluation plays a critical role in assessing the feasibility of the food identification system and identifying potential areas for enhancement.
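The four metrics can be computed directly from predicted and true labels, as in the sketch below. It treats one class as "positive" and uses the standard definitions; the label lists are made-up examples and are not results from this work.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0   # correct share of positive predictions
    recall = tp / (tp + fn) if tp + fn else 0.0      # share of actual positives found
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)            # harmonic mean of the two
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical labels for eight test images.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

For a multi-class food dataset, the same function can be called once per class and the results averaged, which corresponds to the macro average commonly shown in classification reports.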

The abstract introduces the Food Recognition System (FRS), a state-of-the-art technological advancement that merges computer vision and artificial intelligence to change the way individuals engage with and comprehend food. In a global landscape rich in culinary variety, the FRS emerges as a robust mechanism for streamlining the identification and classification of numerous food items through image analysis. The proposal advocates the use of VGG30, an exceptionally precise deep learning model, for food recognition tasks. VGG30 is a convolutional neural network (CNN) designed for image categorization. To ensure diversity among a wide array of food products, an assortment of food images is initially compiled, with each image categorized based on its respective food group. Subsequently, the dataset undergoes preprocessing by resizing all images to a standardized dimension and normalizing pixel values to facilitate model training. Subsets of the dataset are then created for training and testing in order to assess the model's efficacy. The VGG30 model is trained by feeding training images into it and using backpropagation to adjust its parameters on the training dataset. This iterative process is carried out until convergence or until a predefined number of epochs is reached. Lastly, the model's performance is assessed using the testing dataset, which yields measures such as F1-score, precision, and recall that provide insight into the model's ability to classify food images.

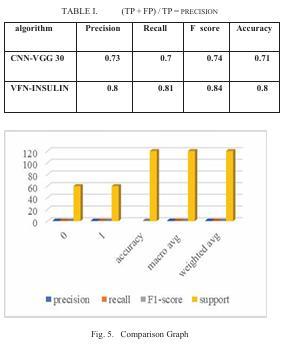

Upon training the Food Recognition System (FRS) using the VGG30 model on a dataset of food images and evaluating its performance on a distinct test set, the results reveal its high accuracy in identifying various food items. The assessment criteria, which include accuracy, recall, and F1-score, show how well the FRS can classify food items using picture analysis. Strong performance in terms of precision, recall, and F1-score is shown by the Convolutional Neural Network (CNN) with the Inception architecture for both classes. The model classifies each class with high precision, scoring 0.87 for class 0 and 0.9 for class 1. Furthermore, the model's capacity to capture the majority of cases within each class is indicated by recall scores of 0.87 and 0.9. The F1-scores for classes 0 and 1 are also high at 0.89 and 0.88, respectively, striking a balance between recall and precision. The model's overall accuracy of 0.88 signifies its capability to classify examples in both classes. This well-rounded performance is further supported by the macro and weighted average metrics, which also yield favorable outcomes in recall, precision, and F1-score. Consequently, our findings underscore the effectiveness of the CNN with Inception architecture in distinguishing between the two classes, hinting at its potential application in real-world scenarios requiring food recognition.

Accuracy is one commonly used metric for evaluating the effectiveness of classification. It is the proportion of correctly classified samples relative to the total number of samples. Precision, in contrast, quantifies the proportion of positive-class predictions that actually belong to the positive class: Precision = TP / (TP + FP), where TP and FP are the numbers of true-positive and false-positive predictions.

Ultimately, the suggested system establishes a strong food recognition system through the combination of the reliable and precise InceptionNet architecture with the user-friendly Streamlit interface. The process commences with a range of data collection and preparation techniques, guaranteeing a substantial dataset for training purposes. The system's capacity to differentiate between different food categories and display styles is improved through iterative model training alongside the use of InceptionNet for feature extraction. The incorporation of Streamlit enables smooth user interaction, simplifying the process of uploading images and obtaining immediate predictions.

Food Recognition Systems (FRSs) can change the way people perceive and interact with food. These systems play a crucial role in cultural and culinary education, aiding individuals with dietary restrictions, automating meal tracking, providing nutritional information, and supporting those with food allergies. Among the various benefits of FRSs, one of the most promising areas is assisting individuals with dietary limitations in identifying safe food options. The accuracy and reliability of the system can be enhanced by using a broader range of food image datasets, implementing advanced feature extraction techniques, and employing more sophisticated machine learning models. System scalability and efficiency can be improved by optimizing the code, using lightweight models, and incorporating distributed computing methods. To enhance the user experience, designing user-friendly interfaces and integrating the system with existing applications can make the system more accessible.

[1] Jiangpeng He, Zeman Shao, Janine Wright, Deborah Kerr, Carol Boushey, and Fengqing Zhu, "Multi-task image-based dietary assessment for food recognition and portion size estimation," in Proc. IEEE Conference on Multimedia Information Processing and Retrieval, 2020, pp. 49-54.

[2] Runyu Mao, Jiangpeng He, Zeman Shao, Sri Kalyan Yarlagadda, and Fengqing Zhu, "Visual aware hierarchy based food recognition," in Proc. International Conference on Pattern Recognition Workshop, pp. 571-598.

[3] Guo-Hua Wang et al., "Distilling knowledge by mimicking features," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 11, pp. 8183-8195.

[4] Seulki Park, Youngkyu Hong, Byeongho Heo, Sangdoo Yun, and Jin Young Choi, "The majority can help the minority: Context-rich minority oversampling for long-tailed classification," in Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 6887-6896.

[5] Zeman Shao, Yue Han, Jiangpeng He, Runyu Mao, Janine Wright, Deborah Kerr, Carol Jo Boushey, and Fengqing Zhu, "An integrated system for mobile image-based dietary assessment," in Proc. 3rd Workshop on AIxFood, 2021, pp. 19-23.

[6] Chao and Hass, "Choice-based user interface design of a smart healthy food recommender system for nudging eating behavior of older adult patients with newly diagnosed type II diabetes," in Proc. Int. Conf. Hum.-Comput. Interact. Cham, Switzerland: Springer, 2020, pp. 201-234.

[7] Vairale and Shukla, "Recommendation framework for diet and exercise based on clinical data: A systematic study," in Data Science and Big Data Analytics. Singapore: Springer, 2019, pp. 346–333.

[8] M. Ge, F. Ricci, and D. Massimo, "Health-aware food recommender system," in Proc. 9th ACM Conf. Recommender Syst., Sep. 2020, pp. 333–334.

[9] Luotao Lin, Fengqing Zhu, Edward J. Delp, and Heather A. Eicher-Miller, "Differences in dietary intake exist among US adults by diabetic status using NHANES 2009-2016."

[10] M. Melchiori, N. De Franceschi, V. De Antonellis, and D. Bianchini, "PREFer: A prescription-based food recommender system," Computer Standards and Interfaces, vol. 54, pp. 64-75, November 2019.

[11] Tran et al., "Hospital recommender systems: Current and future directions," Journal of Intell. Inf. Syst., vol. 57, no. 1, pp. 171-201, August 2021.

[12] Runyu Mao, Jiangpeng He, Luotao Lin, Zeman Shao, Heather A. Eicher-Miller, and Fengqing Zhu, "Improving dietary assessment via integrated hierarchy food classification," in IEEE 23rd International Workshop on Multimedia Signal Processing (MMSP), 2021, pp. 1–6.

[13] Jiangpeng He and Fengqing Zhu, "Online continual learning for visual food classification," in Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, October 2021, pp. 2337–2346.

[14] Ghalib Ahmed Tahir and Chu Kiong Loo, "An open-ended continual learning for food recognition using class incremental extreme learning machines," IEEE Access, vol. 8, pp. 82328–82346, 2020.

[15] Shuicheng Yan, Jiashi Feng, Bryan Hooi, Yifan Zhang, and Bingyi Kang, "A comprehensive analysis of deep long-tailed learning," arXiv preprint arXiv:2110.04596, 2021.

[16] Barko-Sherif, Elsweiler, and Harvey, "Conversational agents for recipe recommendation," in Proc. Conf. Hum. Inf. Interact. Retr., Mar. 2020, pp. 73-82.

[17] M. Melchiori, N. De Franceschi, V. De Antonellis, and D. Bianchini, "PREFer: A prescription-based food recommender system," Computer Standards and Interfaces, vol. 54, pp. 64-75, November 2019.

[18] Slokom, Hanjalic, and Larson, "Towards user-oriented privacy for recommender system data: A personalized approach to gender obfuscation for user profiles," Inf. Process. Manage., vol. 58, no. 6, Art. no. 102722, Nov. 2021.

[19] Yu et al., "A cross-domain collaborative filtering algorithm with growing user and item features via the latent factor space of auxiliary domains," Pattern Recognition, vol. 94, pp. 96-109, October 2019.

[20] A. Kale and N. Auti, "Automated menu planning algorithm for children: Dietary management system employing ID3 for Indian food database," Proc. Comput. Sci., vol. 50, pp. 197-202, January 2020.