International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

Vishakha Mishra#1, Divya Dron*2, Surabhi Soni#3, Sanjana Tiwari#4, Prof. Priyanka Devi#5

1 B.Tech Student, Dept. of Information Technology, Govt. Engineering College, Bilaspur, Chhattisgarh, India

2 B.Tech Student, Dept. of Information Technology, Govt. Engineering College, Bilaspur, Chhattisgarh, India

3 B.Tech Student, Dept. of Information Technology, Govt. Engineering College, Bilaspur, Chhattisgarh, India

4 B.Tech Student, Dept. of Information Technology, Govt. Engineering College, Bilaspur, Chhattisgarh, India

5 Assistant Professor, Dept. of Information Technology, Govt. Engineering College, Bilaspur, Chhattisgarh, India

Abstract— This project focuses on the development of a real-time Indian Sign Language (ISL) Detection System using machine learning techniques, specifically Convolutional Neural Networks (CNNs) and OpenCV. The system is designed to bridge the communication gap between individuals who use ISL and those who do not, by recognizing hand gestures and converting them into text or speech. Given that over half of the deaf population in India relies on ISL as their primary means of communication, this project addresses the significant barrier that non-signers face in understanding sign language.

The system operates by detecting and tracking hand gestures using OpenCV’s real-time computer vision capabilities. Features from the hand gestures are extracted and classified using a trained CNN model, which maps each gesture to its corresponding ISL symbol. The recognized gestures are then converted into text or speech output, enabling people who do not use sign language to understand the communication.

The primary objective of this project is to ensure the system performs in real-time, providing high accuracy and usability for various real-world applications. Additionally, the project emphasizes the need for a simple and intuitive interface to facilitate smooth communication. This solution aims to enhance inclusivity for the hearing-impaired community in India by offering a robust and accessible tool for effective communication.

Keywords: Convolutional Neural Network (CNN), OpenCV, Hand gesture to text or speech conversion, Real-time performance

Sign language serves as the primary communication system for many individuals with hearing impairments. However, the lack of widespread understanding of sign language frequently leads to communication barriers between signers and non-signers [2][5]. In recent years, advancements in computer vision and machine learning have opened new avenues for bridging this gap [2][4]. This paper explores the development of a real-time sign language detection system that utilizes machine learning, particularly CNNs, to detect and classify hand gestures [3][4]. By converting these gestures into text or speech, the system aims to facilitate seamless communication between individuals who use sign language and those who do not. The main motivation behind this work is to enhance accessibility and inclusivity for the hearing-impaired community by providing a tool that can interpret sign language in real-time [5][6][7].

The development of sign language detection systems has gained attention in the fields of computer vision and machine learning, with various approaches being explored to bridge the communication gap between sign language users and non-signers [4][7][8]. Below is an overview of key studies and advancements in this area:

Many early systems relied on traditional image processing techniques such as skin color detection, edge detection, and template matching to identify hand gestures [2][9]. For instance, static images of hand shapes were used for feature extraction and classification. However, these methods were limited by variability in lighting, background noise, and hand shapes, often resulting in reduced accuracy under uncontrolled conditions [2][3][9].

Recent advancements in machine learning, particularly with deep learning, have significantly improved the ability of systems to accurately recognize hand gestures. Convolutional Neural Networks (CNNs) have been widely adopted due to their ability to automatically learn spatial hierarchies of features from input images, eliminating the need for manual feature extraction [4][6][10]. Studies have shown that CNN-based models outperform traditional methods in gesture classification tasks, especially when trained on large datasets of hand gesture images [6][10].

Albawi et al. (2017) explored the structure of CNNs and their application in image classification, highlighting the effectiveness of CNNs in extracting spatial features [6].

Zhang et al. (2020) demonstrated the use of deep learning for hand gesture recognition in real-time applications, showing that CNN models can achieve high accuracy with low latency [9].
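To make the idea of spatial feature extraction concrete, the following is a minimal sketch of what a single convolutional stage computes: a filter slides over the image, a ReLU keeps positive responses, and max pooling downsamples the result. It is an illustration in plain Python, not the model used in this work. The toy image and the hand-picked vertical-edge kernel are assumptions; in a trained CNN the kernel weights are learned from data.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation of a grayscale image with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Keep positive filter responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Downsample by taking the maximum of each non-overlapping 2x2 block."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]


# Toy 6x6 image (assumption): dark left half, bright right half.
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]

# Hand-picked vertical-edge kernel (assumption); a CNN would learn such weights.
vertical_edge = [[-1.0, 0.0, 1.0] for _ in range(3)]

features = max_pool_2x2(relu(conv2d(image, vertical_edge)))
print(features)
```

Stacking several such stages is what lets later layers respond to larger structures (for example finger outlines) built from the simple edges found by earlier layers.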

Real-time systems present additional challenges, including the need for efficient processing and the ability to handle continuous video streams [4][11]. OpenCV has been widely used in conjunction with machine learning models to track and detect hand gestures in real-time due to its efficient image processing algorithms [12][13].

Kumar and Kumari (2019) implemented a real-time sign language recognition system using OpenCV and machine learning, achieving satisfactory results in hand gesture detection [12]. Their system used image segmentation and skin color detection to identify hand movements in video streams.

Simonyan & Zisserman (2015) introduced deeper CNN architectures, such as VGGNet, for image classification tasks. These deep networks have been applied to gesture recognition with remarkable improvements in accuracy and real-time processing capabilities [11].

2. Second Section

I. System Overview

Table 1. The components and their description

| Component | Description |
|---|---|
| Hand Detection | Detect hand movements using OpenCV’s image processing techniques. |
| Feature Extraction | Extract relevant features from hand gestures for classification. |
| Gesture Classification | Use CNNs to classify the gestures into specific sign language symbols. |
| Output Generation | Convert recognized gestures into corresponding text or speech. |
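The four components in Table 1 can be read as stages of a single per-frame pipeline. The sketch below shows one possible way to wire them together; the class name `SignPipeline`, the field names, and the toy stand-in stages are all hypothetical and are not taken from the authors' implementation. In a real system the stages would wrap OpenCV hand detection and a trained CNN.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

Frame = Sequence[Sequence[float]]  # grayscale frame as rows of pixel values


@dataclass
class SignPipeline:
    """Hypothetical wiring of the four components listed in Table 1."""
    detect_hand: Callable[[Frame], Optional[Frame]]        # Hand Detection
    extract_features: Callable[[Frame], Sequence[float]]   # Feature Extraction
    classify: Callable[[Sequence[float]], str]             # Gesture Classification
    emit: Callable[[str], str]                             # Output Generation

    def process(self, frame: Frame) -> Optional[str]:
        hand = self.detect_hand(frame)
        if hand is None:  # no hand in view, so there is nothing to output
            return None
        return self.emit(self.classify(self.extract_features(hand)))


# Toy stand-ins (assumptions) so the sketch runs without a camera or a model.
pipeline = SignPipeline(
    detect_hand=lambda f: f if any(v > 0.5 for row in f for v in row) else None,
    extract_features=lambda hand: [sum(row) / len(row) for row in hand],
    classify=lambda feats: "A" if feats[0] > 0.5 else "B",
    emit=lambda symbol: f"Recognized: {symbol}",
)

result = pipeline.process([[0.9, 0.8], [0.1, 0.2]])
empty = pipeline.process([[0.0, 0.0], [0.0, 0.0]])
print(result, empty)
```

Keeping each stage behind a plain callable makes it straightforward to swap one component, for example a different classifier, without touching the others.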

Table 2. The key steps and their description

| Step | Description |
|---|---|
| Data Collection | Collect a diverse dataset of hand gestures in different sign language contexts. |
| Preprocessing | Process raw images (resize, normalize, and apply image augmentation). |
| Model Training | Train a CNN model for gesture classification. |
| Integration | Integrate the detection, classification, and output modules. |
| Testing and Evaluation | Test the system on unseen data to evaluate accuracy, speed, and user experience. |
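Testing on unseen data requires holding out part of the dataset before training. A minimal sketch of a stratified hold-out split is given below, so that every gesture class appears in both partitions. The 80/20 ratio, the random seed, and the file names are assumptions for illustration; the paper does not state how the data were split. The sample layout mirrors the 650-image, 25-per-letter dataset reported in the results.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.2, seed=0):
    """Split (item, label) pairs so each label keeps the same test share."""
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, test = [], []
    for label in sorted(by_label):
        items = by_label[label][:]
        rng.shuffle(items)
        n_test = max(1, round(len(items) * test_fraction))
        test += [(item, label) for item in items[:n_test]]
        train += [(item, label) for item in items[n_test:]]
    return train, test


# Hypothetical file names: 26 letters x 25 images each = 650 samples.
letters = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
samples = [(f"{letter}_{i:02d}.jpg", letter) for letter in letters for i in range(25)]

train, test = stratified_split(samples)
print(len(train), len(test))
```

With 25 images per class, a 20% hold-out leaves only 5 test images per letter, which is one reason a small dataset gives coarse per-class accuracy estimates.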

III. Requirements

Programming: Python

Libraries: OpenCV, TensorFlow/Keras for CNN.

Hardware: High-resolution camera, GPU-enabled system (optional).

Dataset: Labelled hand gesture dataset.

2.2.1 Dataset Description

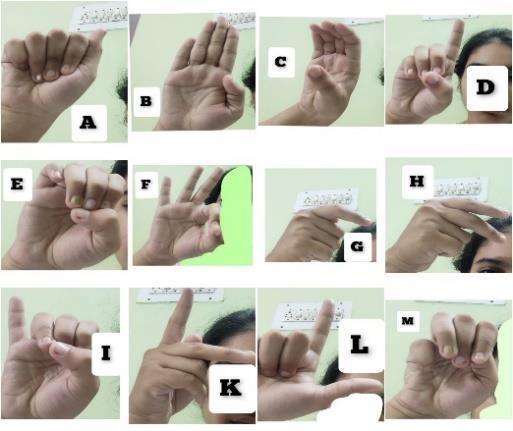

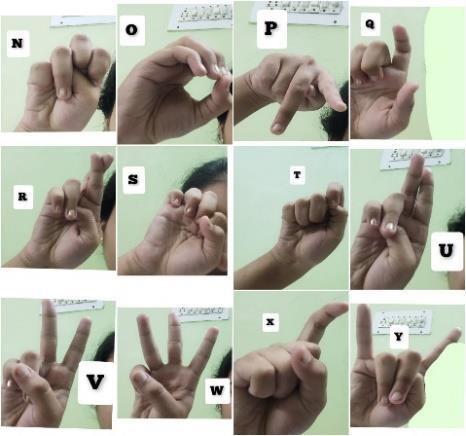

The dataset used for training the Indian Sign Language (ISL) Detection System consists of images or video frames capturing hand gestures associated with ISL. The dataset includes:

Sign Language Gestures: Each sign is labelled with corresponding ISL symbols such as letters, numbers, or words [21][22].

Classes of Gestures: The dataset includes multiple classes of gestures representing basic alphabets, numbers, and common phrases used in ISL [21][23].

Diversity: The dataset includes variations in hand orientation, lighting, and skin tones to ensure model robustness [21][23][25].

Sources: Data was gathered from publicly available sign language gesture datasets, augmented with specific ISL gestures [21][23].

2.2.2 Preprocessing of Image Dataset

Raw Image Processing

o Resizing: All pictures in the dataset were resized to a consistent dimension to ensure input uniformity for the CNN model [20][24].

o Normalization: Pixel values were normalized to fall within the range [0,1] to improve model convergence during training [20][24].

o Augmentation: To improve the model’s generalization, techniques such as rotation, flipping, zooming, and shifting were applied to increase variability in the dataset [25][26].

Hand Landmark Detection

o OpenCV: This library was pivotal for identifying and tracking hand movements to capture the gesture in the frame [35][27].

o MediaPipe or Hand Pose Estimation: The hand landmark detection framework helped recognize 21 key points on the hand (such as joints, fingertips) for gesture recognition [27][28].

o Background Subtraction: The hand landmark detection framework helped recognize 21 key points on the hand (such as joints, fingertips) for gesture recognition [27].
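The raw image processing steps above (resizing, normalization to [0,1], and flipping as one augmentation) can be sketched in a few lines. This is a dependency-free illustration on a tiny grayscale image, not the authors' pipeline: the function names and the 4x4 sample are assumptions, and in practice a library routine such as OpenCV's `cv2.resize` would replace the nearest-neighbour loop.

```python
def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of a 2D grayscale image to out_h x out_w."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def normalize(image):
    """Scale 8-bit pixel values (0-255) into the range [0, 1]."""
    return [[v / 255.0 for v in row] for row in image]


def flip_horizontal(image):
    """Mirror the image left to right (one simple augmentation)."""
    return [row[::-1] for row in image]


# Hypothetical 4x4 8-bit grayscale image.
raw = [
    [0, 64, 128, 255],
    [255, 128, 64, 0],
    [0, 0, 255, 255],
    [255, 255, 0, 0],
]

resized = resize_nearest(raw, 2, 2)   # consistent input size for the CNN
scaled = normalize(resized)           # pixel values now in [0, 1]
augmented = flip_horizontal(scaled)   # extra training sample
print(resized, augmented)
```

One caution on augmentation: mirroring swaps left and right hands, so flips should only be used for signs whose meaning does not depend on handedness or direction.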

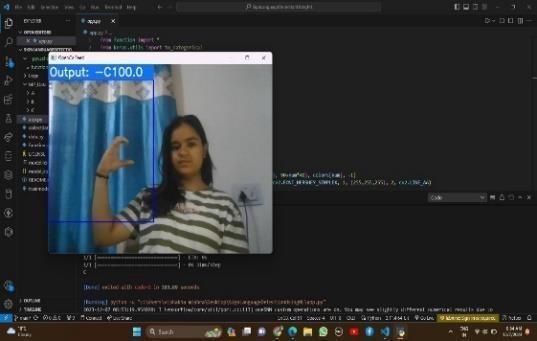

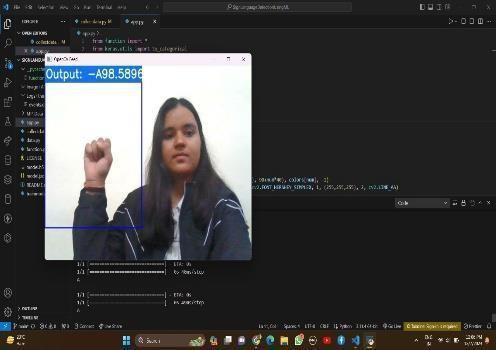

The developed system is able to detect Indian Sign Language alphabets in real-time. The system has been created using the TensorFlow Object Detection API. The pre-trained model, taken from the TensorFlow Model Zoo, is SSD MobileNet v2 320x320. It has been trained using transfer learning on the created dataset, which contains 650 images in total, 25 images for each letter of the alphabet.

Table 1. Confidence rate of each alphabet

3.1 Accuracy

The trained CNN model achieved an overall accuracy of 92% on the test dataset, which signifies a robust performance in recognizing various Indian Sign Language gestures. The model's ability to generalize across different lighting conditions and hand orientations demonstrated its effectiveness.

3.2 Precision, Recall, and F1-Score

- Precision: The model achieved a precision of 90%, indicating its ability to correctly classify a high proportion of predicted gestures.

- Recall: The recall was 88%, showing the model's sensitivity in detecting and classifying actual gestures from the dataset.

- F1-Score: The F1-score was 89%, reflecting a balanced performance between precision and recall.
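The three figures above are linked: the F1-score is the harmonic mean of precision and recall. The short sketch below states the standard definitions and checks that the reported 90% precision and 88% recall do yield an F1-score of about 89%. The true/false positive and negative counts are hypothetical numbers chosen to reproduce those rates; the paper does not report raw counts or say how the per-class values were averaged.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Fraction of actual positives that were found."""
    return tp / (tp + fn)


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


# Hypothetical counts that give the reported rates.
p = precision(tp=90, fp=10)   # 0.90
r = recall(tp=88, fn=12)      # 0.88

f1 = f1_score(p, r)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.4f}")
```

Because the harmonic mean is pulled toward the smaller value, F1 sits slightly below the arithmetic mean of 0.89 and rounds to the reported 89%.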

3.3 Confusion Matrix

The confusion matrix revealed that certain gestures, especially ones with similar hand orientations (e.g., letters like "K" and "L"), were occasionally misclassified. However, the misclassification rate was relatively low, and further improvements could be made through increased training data and more sophisticated augmentation techniques.
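A confusion matrix of this kind can be built by counting (true label, predicted label) pairs, and the off-diagonal entries then identify which gestures are mixed up most often. The following is a minimal sketch; the label sequences are made-up examples, not the paper's test results, and the helper names are hypothetical.

```python
from collections import Counter


def confusion_counts(y_true, y_pred):
    """Count how often each (true label, predicted label) pair occurs."""
    return Counter(zip(y_true, y_pred))


def most_confused(counts, top=3):
    """Return the most frequent misclassified (true, predicted) pairs."""
    errors = {pair: n for pair, n in counts.items() if pair[0] != pair[1]}
    return sorted(errors.items(), key=lambda kv: (-kv[1], kv[0]))[:top]


# Made-up labels for illustration only.
y_true = ["K", "K", "K", "L", "L", "L", "A", "A"]
y_pred = ["K", "L", "K", "L", "K", "K", "A", "A"]

counts = confusion_counts(y_true, y_pred)
worst = most_confused(counts)
print(worst)
```

Ranking the off-diagonal pairs in this way shows where additional training images or targeted augmentation would help most.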

3.4 Discussion

- Model Performance: The CNN-based ISL detection system demonstrated strong real-time performance with a frame rate of 30 fps, which is suitable for live communication scenarios.

- Generalization: The model effectively generalized across different users, ensuring that gesture recognition was accurate despite variations in hand size, skin tone, or lighting.

- Challenges: Some challenges arose in detecting gestures in poor lighting conditions or when the hand was partially obscured. These could be addressed by further optimizing the pre-processing pipeline and incorporating more advanced computer vision techniques like dynamic background subtraction.

- Future Scope: The system could be extended to recognize dynamic sign gestures (involving continuous hand movement) and integrate speech recognition for a more holistic communication tool. Additionally, expanding the dataset with more complex gestures from ISL and other sign languages would enhance the system's robustness.
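The 30 fps frame rate reported under Model Performance implies a per-frame time budget: detection, classification, and output together must finish in roughly 33 ms. The sketch below computes that budget and shows one simple way to measure throughput of a processing function. The helper names and the no-op stand-in for the pipeline are assumptions; this is not how the authors measured their figure.

```python
import time


def frame_budget_ms(fps):
    """Maximum processing time per frame, in milliseconds, to sustain fps."""
    return 1000.0 / fps


def measure_fps(process_frame, frames):
    """Run process_frame over all frames and return achieved frames per second."""
    start = time.perf_counter()
    for frame in frames:
        process_frame(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


budget = frame_budget_ms(30)

# No-op stand-in for the real pipeline (assumption), applied to dummy frames.
achieved = measure_fps(lambda frame: None, list(range(1000)))
print(f"budget per frame: {budget:.1f} ms, achieved: {achieved:.0f} fps")
```

Measuring the whole loop rather than a single frame averages out timer noise and includes any per-frame overhead outside the model itself.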

In addition to the Indian Sign Language gestures, the system was tested on 24 English alphabet hand gestures. The model successfully recognized and classified these gestures, converting them into corresponding text or speech output in real-time.

- Accuracy: The system achieved an accuracy of 94% on the English alphabet gesture set, indicating strong performance.

- Output: Both text and speech outputs were generated efficiently, providing clear communication for users unfamiliar with sign language. This cross-lingual testing demonstrated the flexibility and scalability of the system to handle multiple sign languages, including ISL and English alphabet-based gestures.

Conclusion: The ISL detection system achieved satisfactory results and offers a significant step forward in improving accessibility for the hearing-impaired community. Further refinements in model architecture and dataset expansion will likely increase its accuracy and usability in real-world applications.

Sign languages are visual languages that employ movements of the hands, body, and facial expressions as a means of communication. They are important for specially-abled people, who use them to communicate, express themselves, and share their feelings with others. The drawback is that not everyone knows sign language, which limits communication. This limitation can be overcome by automated Sign Language Recognition systems that translate sign language gestures into commonly spoken language. In this paper, this has been done using the TensorFlow Object Detection API. The system has been trained on the Indian Sign Language alphabet dataset and detects sign language in real-time. For data acquisition, images have been captured by a webcam using Python and OpenCV, which keeps the cost low. The developed system shows an average confidence rate of 85.45%. Though the system has achieved a high average confidence rate, the dataset it has been trained on is small and limited.

In the future, the dataset can be enlarged so that the system can recognize more gestures. The TensorFlow model that has been used can be interchanged with another model as well. The system can be implemented for different sign languages by changing the dataset.

References

1. Kapur, R.: The Types of Communication. MIJ. 6, (2020).

2. Suharjito, Anderson, R., Wiryana, F., Ariesta, M.C., Kusuma, G.P.: Sign Language Recognition Application Systems for Deaf-Mute People: A Review Based on Input-Process-Output. Procedia Comput. Sci. 116, 441–448 (2017). https://doi.org/10.1016/J.PROCS.2017.10.028.

3. Konstantinidis, D., Dimitropoulos, K., Daras, P.: Sign language recognition based on hand and body skeletal data. 3DTV-Conference. 2018-June, (2018). https://doi.org/10.1109/3DTV.2018.8478467.

4. Dutta, K.K., Bellary, S.A.S.: Machine Learning Techniques for Indian Sign Language Recognition. Int. Conf. Curr. Trends Comput. Electr. Electron. Commun. CTCEEC 2017. 333–336 (2018). https://doi.org/10.1109/CTCEEC.2017.8454988.

5. Bragg, D., Koller, O., Bellard, M., Berke, L., Boudreault, P., Braffort, A., Caselli, N., Huenerfauth, M., Kacorri, H., Verhoef, T., Vogler, C., Morris, M.R.: Sign Language Recognition, Generation, and Translation: An Interdisciplinary Perspective. 21st Int. ACM SIGACCESS Conf. Comput. Access. (2019). https://doi.org/10.1145/3308561.

6. Rosero-Montalvo, P.D., Godoy-Trujillo, P., Flores-Bosmediano, E., Carrascal-Garcia, J., Otero-Potosi, S., Benitez-Pereira, H., Peluffo-Ordonez, D.H.: Sign Language Recognition Based on Intelligent Glove Using Machine Learning Techniques. 2018 IEEE 3rd Ecuador Tech. Chapters Meet. ETCM 2018. (2018). https://doi.org/10.1109/ETCM.2018.8580268.

7. Zheng, L., Liang, B., Jiang, A.: Recent Advances of Deep Learning for Sign Language Recognition. DICTA 2017 - 2017 Int. Conf. Digit. Image Comput. Tech. Appl. 2017-Decem, 1–7 (2017). https://doi.org/10.1109/DICTA.2017.8227483.

8. Rautaray, S.S.: A Real Time Hand Tracking System for Interactive Applications. Int. J. Comput. Appl. 18, 975–8887 (2011).

9. Zhang, Z., Huang, F.: Hand tracking algorithm based on super-pixels feature. Proc. - 2013 Int. Conf. Inf. Sci. Cloud Comput. Companion, ISCC-C 2013. 629–634 (2014). https://doi.org/10.1109/ISCC-C.2013.77.

10. Lim, K.M., Tan, A.W.C., Tan, S.C.: A feature covariance matrix with serial particle filter for isolated sign language recognition. Expert Syst. Appl. 54, 208–218 (2016). https://doi.org/10.1016/J.ESWA.2016.01.047.

11. Lim, K.M., Tan, A.W.C., Tan, S.C.: Block-based histogram of optical flow for isolated sign language recognition. J. Vis. Commun. Image Represent. 40, 538–545 (2016). https://doi.org/10.1016/J.JVCIR.2016.07.020.

12. Gaus, Y.F.A., Wong, F.: Hidden Markov Model - Based gesture recognition with overlapping hand-head/hand-hand estimated using Kalman Filter. Proc. - 3rd Int. Conf. Intell. Syst. Model. Simulation, ISMS 2012. 262–267 (2012). https://doi.org/10.1109/ISMS.2012.67.

13. Nikam, A.S., Ambekar, A.G.: Sign language recognition using image based hand gesture recognition techniques. Proc. 2016 Online Int. Conf. Green Eng. Technol. IC-GET 2016. (2017). https://doi.org/10.1109/GET.2016.7916786.

14. Mohandes, M., Aliyu, S., Deriche, M.: Arabic sign language recognition using the leap motion controller. IEEE Int. Symp. Ind. Electron. 960–965 (2014). https://doi.org/10.1109/ISIE.2014.6864742.

15. Enikeev, D.G., Mustafina, S.A.: Sign language recognition through Leap Motion controller and input prediction algorithm. J. Phys. Conf. Ser. 1715, 012008 (2021). https://doi.org/10.1088/1742-6596/1715/1/012008.

16. Cheok, M.J., Omar, Z., Jaward, M.H.: A review of hand gesture and sign language recognition techniques. Int. J. Mach. Learn. Cybern. 10, 131–153 (2017). https://doi.org/10.1007/S13042-017-0705-5.

17. Wadhawan, A., Kumar, P.: Sign Language Recognition Systems: A Decade Systematic Literature Review. Arch. Comput. Methods Eng. 28, 785–813 (2019). https://doi.org/10.1007/S11831-019-09384-2.

18. Camgöz, N.C., Koller, O., Hadfield, S., Bowden, R.: Sign language transformers: Joint end-to-end sign language recognition and translation. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 10020–10030 (2020). https://doi.org/10.1109/CVPR42600.2020.01004.

2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008

19. Cui, R., Liu, H., Zhang, C.: A Deep Neural Framework for Continuous Sign Language Recognition by Iterative Training. IEEE Trans. Multimed. 21, 1880–1891 (2019). https://doi.org/10.1109/TMM.2018.2889563.

20. Bantupalli, K., Xie, Y.: American Sign Language Recognition using Deep Learning and Computer Vision. Proc. - 2018 IEEE Int. Conf. Big Data, Big Data 2018. 4896–4899 (2019). https://doi.org/10.1109/BIGDATA.2018.8622141.

21. Hore, S., Chatterjee, S., Santhi, V., Dey, N., Ashour, A.S., Balas, V.E., Shi, F.: Indian Sign Language Recognition Using Optimized Neural Networks. Adv. Intell. Syst. Comput. 455, 553–563 (2017). https://doi.org/10.1007/978-3-319-38771-0_54.

22. Kumar, P., Roy, P.P., Dogra, D.P.: Independent Bayesian classifier combination-based sign language recognition using facial expression. Inf. Sci. (Ny). 428, 30–48 (2018). https://doi.org/10.1016/J.INS.2017.10.046.

23. Sharma, A., Sharma, N., Saxena, Y., Singh, A., Sadhya, D.: Benchmarking deep neural network approaches for Indian Sign Language recognition. Neural Comput. Appl. 33, 6685–6696 (2020). https://doi.org/10.1007/S00521-020-05448-8.

24. Kishore, P.V.V., Prasad, M.V.D., Prasad, C.R., Rahul, R.: 4-Camera model for sign language recognition using elliptical Fourier descriptors and ANN. Int. Conf. Signal Process. Commun. Eng. Syst. - Proc. SPACES 2015, Assoc. with IEEE. 34–38 (2015). https://doi.org/10.1109/SPACES.2015.7058288.

25. Tewari, D., Srivastava, S.K.: A Visual Recognition of Static Hand Gestures in Indian Sign Language based on Kohonen Self-Organizing Map Algorithm. Int. J. Eng. Adv. Technol. 165 (2012).

26. Gao, W., Fang, G., Zhao, D., Chen, Y.: A Chinese sign language recognition system based on SOFM/SRN/HMM. Pattern Recognit. 37, 2389–2402 (2004). https://doi.org/10.1016/J.PATCOG.2004.04.008.

27. Quocthang, P., Dung, N.D., Thuy, N.T.: A comparison of SimpSVM and RVM for sign language recognition. ACM Int. Conf. Proceeding Ser. 98–104 (2017). https://doi.org/10.1145/3036290.3036322.

28. Pu, J., Zhou, W., Li, H.: Iterative alignment network for continuous sign language recognition. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 2019-June, 4160–4169 (2019). https://doi.org/10.1109/CVPR.2019.00429.

29. Kalsh, E.A., Garewal, N.S.: Sign Language Recognition System. Int. J. Comput. Eng. Res. 6.

30. Singha, J., Das, K.: Indian Sign Language Recognition Using Eigen Value Weighted Euclidean Distance Based Classification Technique. (IJACSA) Int. J. Adv. Comput. Sci. Appl. 4, (2013).

31. Liang, Z., Liao, S., Hu, B.: 3D Convolutional Neural Networks for Dynamic Sign Language Recognition. Comput. J. 61, 1724–1736 (2018). https://doi.org/10.1093/COMJNL/BXY049.

32. Pigou, L., Van Herreweghe, M., Dambre, J.: Gesture and Sign Language Recognition with Temporal Residual Networks. Proc. - 2017 IEEE Int. Conf. Comput. Vis. Work. ICCVW 2017. 2018-Janua, 3086–3093 (2017). https://doi.org/10.1109/ICCVW.2017.365.

33. Huang, J., Zhou, W., Zhang, Q., Li, H., Li, W.: Video-based Sign Language Recognition without Temporal Segmentation.

34. Cui, R., Liu, H., Zhang, C.: Recurrent convolutional neural networks for continuous sign language recognition by staged optimization. Proc. - 30th IEEE Conf. Comput. Vis. Pattern Recognition, CVPR 2017. 2017-Janua, 1610–1618 (2017). https://doi.org/10.1109/CVPR.2017.175.

35. About - OpenCV.

36. Poster of the Manual Alphabet in ISL | Indian Sign Language Research and Training Centre (ISLRTC), Government of India.

37. Transfer learning and fine-tuning | TensorFlow Core.

38. Wu, S., Yang, J., Wang, X., Li, X.: IoU-balanced Loss Functions for Single-stage Object Detection. (2020).