International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 07 | July 2025 www.irjet.net p-ISSN: 2395-0072

Vaibhav Kewat¹, Sanket Kamble², Snehal Bandal³, Dr. Mrs. A. P. Laturkar⁴

¹Electronics & Tele. Dept., PES Modern College of Engineering, Shivajinagar, Pune

²Electronics & Tele. Dept., PES Modern College of Engineering, Shivajinagar, Pune

³Electronics & Tele. Dept., PES Modern College of Engineering, Shivajinagar, Pune

⁴Electronics & Tele. Dept., PES Modern College of Engineering, Shivajinagar, Pune

Abstract - This paper presents the design and development of a prosthetic hand system that translates keyboard inputs into American Sign Language (ASL) gestures to facilitate communication for deaf and mute individuals. The system uses an Arduino Uno microcontroller, MG995 servo motors, and a mechanical keyboard to reproduce selected letters of the ASL alphabet. The project addresses challenges such as gesture complexity and hardware limitations, and it proposes solutions through structured design and calibration. This work contributes to improving accessibility for the specially-abled community and opens avenues for future innovation using AI and wireless control.

Key Words: Prosthetic Hand, Arduino, Servo Motors, Sign Language, American Sign Language (ASL), Assistive Technology, Robotics

Communication is a fundamental part of human life, yet individuals with hearing or speech impairments often face significant challenges when interacting with people who do not understand sign language. American Sign Language (ASL) is a primary mode of communication for many individuals who are deaf or mute. However, because ASL is not widely understood, a significant communication barrier exists between signers and non-signers, often resulting in feelings of isolation, misunderstanding, and frustration. Advances in assistive technology are enabling new ways to bridge this gap and improve accessibility.

One such development is the creation of prosthetic hands capable of translating typed text into ASL gestures. These devices allow users to input characters using a keyboard, which are then converted into hand signs using servo motors and a microcontroller-based control system. Our project focuses on designing such a cost-effective prosthetic hand using an Arduino Uno, MG995 servo motors, and a mechanical keyboard. The gestures are displayed through a 3D-printed hand, allowing real-time letter representation. While the full ASL alphabet includes dynamic letters and complex finger configurations, our prototype currently supports 15 static letters: A, B, C, D, E, F, I, L, O, S, U, V, W, X, and Y. These letters were chosen because they involve straightforward finger positioning without movement or intricate crossing, making them feasible with our limited hardware setup of six servo motors.

Letters such as J and Z involve continuous motion, while H, K, and T require finger overlaps or hand orientations that our hardware cannot currently replicate. Despite these limitations, our system can form simple yet meaningful words such as COOL, YES, FOOD, SOUL, WIFI, LIFE, and YOU. These words allow users to express basic needs, emotions, or affirmations, supporting daily communication for deaf and mute individuals. This project demonstrates a step forward in inclusive technology and lays the foundation for future enhancements such as AI integration, wireless input, and an expanded gesture vocabulary.
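Which words the 15-letter set can express follows from checking each character against the supported list. The sketch below is a portable C++ illustration, not the project's firmware; the name `canSpell` is hypothetical.

```cpp
#include <cassert>
#include <cctype>
#include <string>

// The 15 static letters the prototype supports, as listed in the text.
const std::string kSupportedLetters = "ABCDEFILOSUVWXY";

// Returns true if `word` is non-empty and every character, ignoring case,
// is a supported static letter, i.e. the hand can fingerspell it.
bool canSpell(const std::string& word) {
    if (word.empty()) return false;
    for (char c : word) {
        const char upper = static_cast<char>(std::toupper(static_cast<unsigned char>(c)));
        if (kSupportedLetters.find(upper) == std::string::npos) return false;
    }
    return true;
}
```

For example, `canSpell("SOUL")` is true, while `canSpell("HAT")` is false because H is not in the supported set.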

The development of Arduino-based sign-language-to-speech conversion systems has gained traction as a means of improving communication for individuals with hearing impairments. Ishak et al. explored the use of flex sensors for gesture recognition, achieving commendable accuracy while highlighting the importance of user training [3]. This groundwork set the stage for further advancements, such as the real-time gesture recognition system presented by Ankit and Rajesh, which demonstrated the efficiency of multiple sensors in translating hand movements into speech [4]. Kumari et al. also contributed by analyzing various recognition techniques, reinforcing Arduino's role in these systems while pointing out vocabulary limitations [6].

Karthiga et al. developed a flex sensor-based system that successfully converted gestures into speech, though its use was limited by its restricted gesture set [2]. Similarly, Sudhakar and Sadeghi implemented smart gloves utilizing flex sensors to improve accessibility for deaf and mute individuals [5], [6]. Collectively, these works highlight Arduino's effectiveness in assistive communication while also identifying limitations in gesture recognition and vocabulary breadth.

Overall, the findings emphasize the promise of Arduino technology in bridging communication gaps and suggest that future research should focus on expanding gesture databases and integrating machine learning to enhance recognition accuracy. Innovative solutions addressing these challenges will be essential for developing more effective communication aids for individuals who use sign language.

The Arduino Uno is widely used because of its affordability, ease of programming, and large community support [2], [3]. It is well suited to handling simple inputs, such as characters typed on a keyboard, and to controlling multiple servo motors that mimic finger movements. More powerful platforms, such as the Raspberry Pi single-board computer, offer greater computational power and can handle more complex tasks, such as processing real-time sensor data [8]. However, the Raspberry Pi is more expensive and may be excessive for simple ASL translation projects that do not require advanced functionality.

Servo motors are critical components in prosthetic hands, as they control the movement of the fingers. The SG90 servo motor is commonly used in low-cost systems because it is small, affordable, and capable of 180-degree rotation, which is sufficient for forming basic ASL gestures [3]. However, more advanced systems may require stronger and more precise motors, such as MG90S metal-gear servos, which offer higher torque and durability [6].

The accuracy of the servo motors is crucial for ensuring that the fingers form the correct ASL gestures. Misaligned motor calibration can result in incorrect gestures, which reduces the usability of the device. Proper calibration and control algorithms are required to ensure the system's accuracy.

C. Input Devices (Keyboards)

Text-to-sign prosthetic hand systems typically use a simple keyboard as the input device. The user types a letter, which the microcontroller processes to control the servo motors, translating the input into the corresponding ASL gesture [7]. Some advanced systems use gesture-recognition gloves or touch-sensitive input devices, allowing more intuitive and natural input, though these are more expensive and less practical for everyday use.

4. METHODOLOGY

The system works on simple input-output logic. A user presses a key on a mechanical keyboard connected to the Arduino Uno via a USB Host Shield. The Arduino reads this key and translates it into a predefined servo motor pattern, which controls a 3D-printed prosthetic hand. Each servo is pre-calibrated to move a specific finger or the thumb to replicate the ASL gesture.

Components Used:

Arduino Uno

MG995 Servo Motors

USB Host Shield

Mechanical Keyboard

12 V Power Supply

Custom 3D-printed Hand
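The key-to-pattern step described above amounts to a lookup table from each supported letter to six servo angles. The following is a minimal sketch in portable C++17 so it can be compiled and tested on a PC. The channel order, the angle convention (0° extended, 180° curled), every angle value, and the names `kGestures` and `poseForKey` are assumptions for illustration, and only five of the 15 letters are filled in. On the Uno itself, whose AVR toolchain does not provide standard containers such as `std::map`, the same table would more typically be a plain `const` array.

```cpp
#include <array>
#include <cassert>
#include <cctype>
#include <map>
#include <optional>

// Hypothetical channel order; the paper does not state which of the six
// servos drives which joint.
enum Channel { kThumbFlex, kThumbRotate, kIndex, kMiddle, kRing, kLittle, kNumChannels };

using Pose = std::array<int, kNumChannels>;  // one target angle (degrees) per servo

// Placeholder angles: 0 = digit extended, 180 = digit fully curled.
// Real values must come from calibrating the printed hand.
const std::map<char, Pose> kGestures = {
    //     thumbF thumbR index middle ring little
    {'A', {  20,    0,   180,   180, 180,  180}},  // fist, thumb beside index
    {'B', { 180,   90,     0,     0,   0,    0}},  // flat hand, thumb across palm
    {'L', {   0,    0,     0,   180, 180,  180}},  // index up, thumb out
    {'V', { 180,   90,     0,     0, 180,  180}},  // index and middle up
    {'Y', {   0,    0,   180,   180, 180,    0}},  // thumb and little finger out
};

// Looks up the pose for a typed key (case-insensitive); returns std::nullopt
// for keys with no stored gesture, such as J, Z, digits, or punctuation.
std::optional<Pose> poseForKey(char key) {
    const char upper = static_cast<char>(std::toupper(static_cast<unsigned char>(key)));
    const auto it = kGestures.find(upper);
    if (it == kGestures.end()) return std::nullopt;
    return it->second;
}
```

Keeping every gesture as data rather than as per-letter code means adding a letter is a one-line table change.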

5. CHALLENGES IN DEVELOPING PROSTHETIC HANDS FOR ASL TRANSLATION

I. Motor Calibration and Accuracy

Servo motor calibration is one of the most significant challenges in developing prosthetic hands for ASL. Precise calibration is necessary to ensure that the fingers form the correct gestures, since misaligned motors can lead to incorrect or incomplete gestures.

II. Gesture Complexity

Translating static alphabetic gestures into ASL is relatively straightforward, but handling more complex gestures, including entire phrases, is much more challenging. Dynamic gestures that involve continuous hand and finger movements, such as the letters J and Z, are particularly difficult to implement with current hardware. Future advancements in motor control and machine learning could help address this issue.

III. Power Consumption and Portability

The power requirements of multiple servo motors can limit the portability of prosthetic hands. Battery-powered systems often have limited operating times, and managing the trade-off between power consumption and motor performance remains an ongoing challenge.

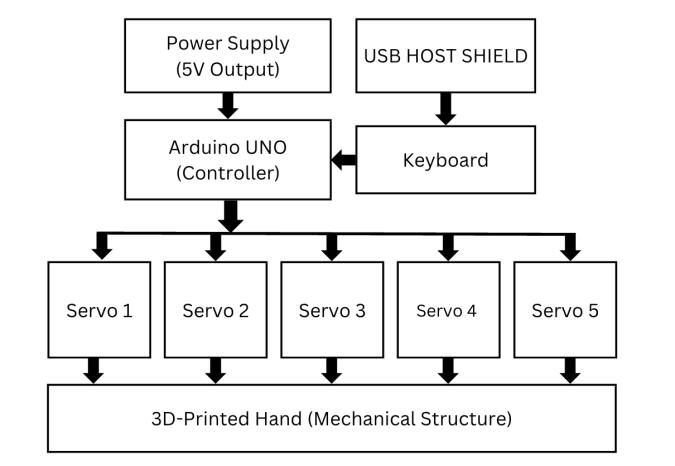

Fig -1: Block Diagram of Prosthetic Hand

The block diagram (Fig. 1) illustrates the complete working of the prosthetic hand system designed for American Sign Language (ASL) translation. The system is powered by a 12 V DC supply, stepped down and regulated to meet the voltage requirements of the Arduino Uno and the servo motors. A mechanical keyboard is connected to the Arduino Uno via a USB Host Shield, enabling it to accept input characters directly from the user. When a key is pressed, the Arduino reads the character and processes it according to the pre-programmed gesture mappings stored in its memory.

Upon identifying the corresponding ASL gesture, the Arduino sends the appropriate control signals to six MG995 servo motors through its PWM output pins. Each motor is assigned to move one finger or the thumb of the 3D-printed prosthetic hand. The servo motors rotate to specific angles calibrated for each gesture, allowing the hand to form the desired ASL letter. The system relies on fixed, predefined static finger positions to replicate gestures accurately and repeatably. This structure provides a direct data flow from keyboard input to physical hand gesture, offering an effective solution for aiding communication among deaf and mute individuals.
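Each servo's target angle is conveyed as the width of a periodic control pulse. The sketch below shows that conversion, assuming the default limits of the Arduino Servo library (544 µs at 0° and 2400 µs at 180°), which `Servo::write()` applies through essentially the same clamp-and-`map()` step. The MG995's true end points are not given in the paper and would be found during calibration; `angleToPulseUs` and `mapRange` are hypothetical names.

```cpp
#include <cassert>

// Default pulse limits of the Arduino Servo library when attach(pin) is
// called without explicit min/max; the MG995's real end points may differ.
constexpr long kMinPulseUs = 544;
constexpr long kMaxPulseUs = 2400;

// Same integer arithmetic as Arduino's map(): linear rescale, truncating.
long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
    assert(inMax != inMin);  // avoid division by zero
    return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Converts a commanded angle, clamped to 0..180 degrees, into the high time
// of the servo control pulse in microseconds.
long angleToPulseUs(int angleDeg) {
    if (angleDeg < 0) angleDeg = 0;
    if (angleDeg > 180) angleDeg = 180;
    return mapRange(angleDeg, 0, 180, kMinPulseUs, kMaxPulseUs);
}
```

With these defaults, 90° maps to 1472 µs; the Servo library repeats each pulse roughly every 20 ms.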

The development of a prosthetic hand system capable of translating text input into American Sign Language (ASL) gestures involves multiple technical and practical challenges. Despite the promising outcomes, several limitations remain that must be addressed in future improvements.

One of the major challenges lies in achieving precise motor calibration. Each servo motor must rotate to a specific angle to form accurate ASL gestures. Even a slight deviation in the servo angle can lead to an incorrect or ambiguous gesture, reducing the effectiveness of communication. Mechanical tolerances in 3D-printed parts, backlash in gears, and slight inconsistencies in servo performance further complicate this calibration.

Moreover, repeated use can lead to wear and tear, causing servos to lose their calibrated positions over time. This requires periodic recalibration, which may not be feasible for all users without technical support.
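One common way to absorb mounting misalignment and gear backlash is a per-servo trim plus hard angle limits, applied to every pose before it is sent to the motors. The sketch below assumes that approach; the paper does not describe its calibration procedure, and the `ServoCal` fields and function names are hypothetical. Recalibration then means re-measuring only the small offset table, which could be stored in the Uno's EEPROM so it survives power cycles.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

constexpr int kNumServos = 6;

// Hypothetical per-servo calibration record, filled in by hand while
// adjusting each finger on the assembled hand.
struct ServoCal {
    int offsetDeg;  // trim correcting mounting or backlash misalignment
    int minDeg;     // mechanical limit: finger fully extended
    int maxDeg;     // mechanical limit: finger fully curled
};

// Applies the trim, then clamps to the joint's safe range so a bad table
// entry cannot drive a finger into its end stop.
int calibratedAngle(int nominalDeg, const ServoCal& cal) {
    assert(cal.minDeg <= cal.maxDeg);
    return std::clamp(nominalDeg + cal.offsetDeg, cal.minDeg, cal.maxDeg);
}

// Applies each servo's calibration to a whole six-servo pose.
std::array<int, kNumServos> calibratePose(const std::array<int, kNumServos>& pose,
                                          const std::array<ServoCal, kNumServos>& cals) {
    std::array<int, kNumServos> out{};
    for (int i = 0; i < kNumServos; ++i) out[i] = calibratedAngle(pose[i], cals[i]);
    return out;
}
```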

The current prototype supports only 15 static letters (A, B, C, D, E, F, I, L, O, S, U, V, W, X, Y), excluding dynamic letters such as J and Z that require continuous motion. Additionally, complex gestures involving overlapping fingers, two-handed signs, or expressive movements cannot be replicated with the current setup. This restricts the vocabulary and the richness of communication.

For real-world use, a full ASL system should include numbers, dynamic gestures, common phrases, and even facial expressions, which are essential components of sign language grammar and context.

Operating six high-torque MG995 servo motors simultaneously demands significant power. The continuous current draw leads to high power consumption, making battery-powered operation impractical for longer durations. Furthermore, prolonged operation of multiple servos may generate heat, potentially affecting component lifespan and safety.

Effective thermal management solutions, such as heat sinks or ventilated enclosures, are not implemented in the current design, which is a potential limitation for continuous use.
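The power concern can be made concrete with a back-of-the-envelope budget. The following sketch adds an assumed per-servo current to an assumed logic current and divides a battery capacity by the total. Every current value here is a placeholder, not a measured MG995 figure, and real runtime would be shorter because of regulator losses and the higher peak draw when several servos start moving at once. `PowerBudget` and the function names are hypothetical.

```cpp
#include <cassert>

// Rough power budget. All currents are hypothetical placeholders: measure
// the real draw on the built hand rather than trusting these numbers.
struct PowerBudget {
    int servoCount;
    double currentPerServoA;  // assumed average draw while holding a gesture
    double logicCurrentA;     // assumed draw of Arduino, USB Host Shield, keyboard
};

// Total steady-state current in amperes.
double totalCurrentA(const PowerBudget& b) {
    assert(b.servoCount >= 0);
    return b.servoCount * b.currentPerServoA + b.logicCurrentA;
}

// Ideal runtime in hours for a battery of the given capacity (mAh), ignoring
// regulator losses, voltage sag, and usable-capacity derating.
double runtimeHours(double capacityMah, const PowerBudget& b) {
    return (capacityMah / 1000.0) / totalCurrentA(b);
}
```

With the placeholder values `PowerBudget{6, 0.5, 0.2}`, the total is 3.2 A, so an ideal 2000 mAh pack would last only about 0.6 h (roughly 38 minutes).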

The prosthetic hand's fingers are designed for gesture formation only and lack the structural strength to grip or hold objects. Unlike advanced robotic hands used in prosthetics for physical assistance, this system cannot exert force, limiting its functionality to visual communication alone.

Currently, the system lacks any form of tactile or haptic feedback. In real-life scenarios, providing feedback to the user (such as confirming successful gesture formation) could greatly enhance usability. The absence of such features makes the system less interactive and limits user confidence.

The system, including the Arduino, power supply, servos, and keyboard, is relatively bulky. It is not easily wearable or portable in its current state, reducing its practical applicability for mobile or on-the-go communication.

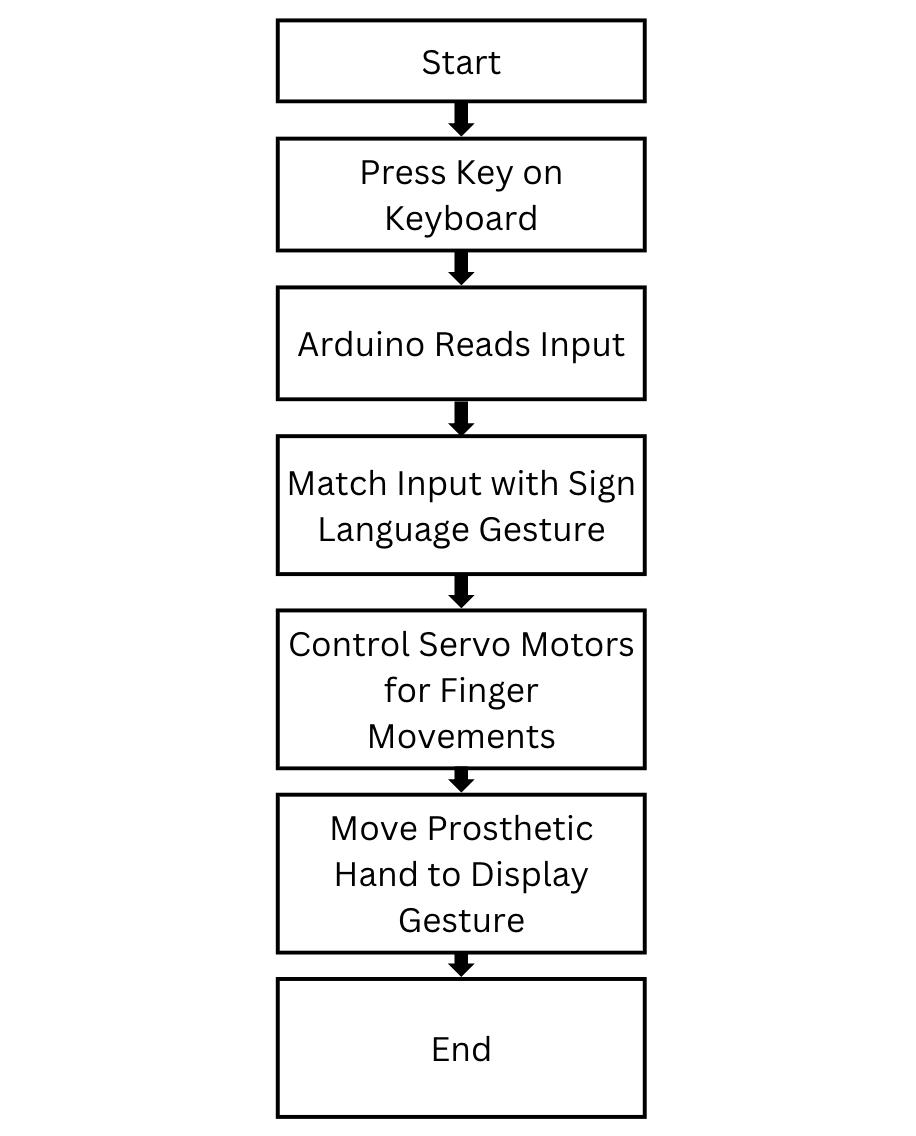

The flowchart outlines the operational sequence of the prosthetic hand system for translating keyboard inputs into American Sign Language (ASL) gestures. The process begins with the Start step, where the user is prompted to press a key on the keyboard. Once a key is pressed, the Arduino reads the input from the keyboard. The system then matches the input with the corresponding sign language gesture stored in its memory. Next, the Arduino controls the servo motors to produce the necessary finger movements. Finally, the prosthetic hand moves to display the selected gesture. The flow concludes with the End step, indicating the completion of the operation.
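The flowchart's sequence can be simulated off-board in a few lines. In this sketch, `processKeys` and `hasGesture` (hypothetical names) walk through a string of key presses, normalize each to upper case, skip keys with no stored gesture, and record the letters the hand would display; actual servo output is replaced by that record so the logic can be tested on a PC. On the Arduino, `loop()` repeats indefinitely, so after the End step the program would simply wait for the next key.

```cpp
#include <cassert>
#include <cctype>
#include <string>
#include <vector>

// Stand-in for the gesture table: the 15 static letters from the text.
bool hasGesture(char upper) {
    static const std::string kLetters = "ABCDEFILOSUVWXY";
    return kLetters.find(upper) != std::string::npos;
}

// One pass of the flowchart per key: read key -> match gesture ->
// drive servos -> display. "Driving the servos" is simulated by recording
// which letter the hand would show; keys without a gesture are ignored.
std::vector<char> processKeys(const std::string& keys) {
    std::vector<char> shown;
    for (char key : keys) {                 // Press a key / Read input
        const char upper = static_cast<char>(std::toupper(static_cast<unsigned char>(key)));
        if (!hasGesture(upper)) continue;   // no stored gesture: skip key
        shown.push_back(upper);             // Control servos / Display gesture
    }
    return shown;
}
```

For instance, typing "you!" would display Y, O, and U, while the exclamation mark is ignored.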

Despite the challenges, the proposed prosthetic hand system offers multiple advantages and promising applicationareas.

The most significant advantage of this system is its potential to improve accessibility for individuals who are deaf or mute. By providing a visual medium to express letters and form basic words, it helps bridge the communication gap with non-signers, promoting inclusivity in social, educational, and professional settings.

The use of widely available components such as the Arduino Uno, MG995 servos, and 3D-printed structures makes the system highly cost-effective. This affordability enables broader adoption, especially in developing regions or educational institutions with limited budgets.

C. Educational Tool

The prosthetic hand can serve as an effective educational tool for teaching ASL letters to students, teachers, and family members of deaf individuals. Its visual and interactive nature helps learners understand finger positions better than static images or illustrations.

D. Demonstration and Research Platform

This system provides a flexible platform for further research in robotics, human-computer interaction, and assistive technology. Researchers and students can use it as a base model to explore enhancements such as dynamic gesture integration, AI-based gesture recognition, or wireless control.

E. Customizability and Expandability

The modular design of the system allows easy modification. Additional gestures or expressions can be incorporated by reprogramming the Arduino and adding more servos if needed. This flexibility makes the system adaptable to evolving requirements.

F. Low Maintenance and Repairability

As the components are widely available and the Arduino platform is open source, maintenance and repairs can be carried out without specialized support. Parts such as the servos, the Arduino board, and the 3D-printed fingers can be replaced individually, keeping long-term costs low.

G. Awareness and Outreach

Deploying such systems at public events, workshops, or exhibitions can raise awareness of the challenges faced by the deaf and mute community and promote empathy and understanding. This helps spread knowledge about sign language and its importance.

Integrating AI and machine learning (ML) into prosthetic hand systems could significantly enhance their performance. AI could improve the recognition of more complex ASL gestures and enable the system to adapt to the user's signing style. Additionally, machine learning algorithms could help the system predict gestures, thereby increasing the speed and accuracy of the translation process.

The adoption of wireless input devices, such as Bluetooth keyboards or gesture-recognition gloves, can provide greater flexibility in controlling prosthetic hands. These wireless systems would eliminate the need for physical connections between input devices and the prosthetic hand, enhancing portability and user-friendliness. This shift toward wireless technology could make the devices more accessible and convenient for users in various settings.

Prosthetic hands designed for ASL translation are an exciting development in the field of assistive technology. While current systems are effective at translating basic alphabetic gestures, significant challenges remain, including motor calibration, gesture complexity, and power consumption. Emerging technologies such as AI, tactile feedback, and wireless input systems offer promising solutions to these challenges. By integrating these advancements, future prosthetic hands could become more accurate, more user-friendly, and capable of handling the full range of ASL gestures.

[1] Vinod J. Thomas, Diljo Thomas. "A Motion Based Sign Language Recognition Method." International Journal of Engineering Technology, vol. 3, no. 4, Apr. 2015.

[2] Sameera Fathimal M, E. Karthiga, N. Gowthami, P. Madura. "Sign Language to Speech Using Arduino Uno." International Journal of Scientific Progress and Research, Jan. 2018.

[3] M. Nisha, M. Preethi, R. Anushya. "Gesture Recognition Using Flex Sensors and Arduino." International Journal of Advanced Research in Computer Engineering & Technology, vol. 5, no. 2, Feb. 2016.

[4] A. Thirugnanam, V. A. Vennila, S. K. Aishwarya. "Sign Language to Speech Conversion Using Embedded System." International Journal of Engineering Science and Computing, vol. 7, no. 1, Jan. 2017.

[5] P. R. Usha, P. M. Lavanya, P. V. Srinivasa. "Smart Glove for Sign Language Translation Using Arduino." International Journal of Innovative Research in Computer and Communication Engineering, vol. 6, no. 4, Apr. 2018.

[6] Aarthi M., Vijayalakshmi P. "Sign Language to Speech Conversion." Fifth International Conference on Recent Trends in Information Technology, 2016.

[7] Y. Tan, Y. R. Zhang, M. M. Ouhbi. "Design and Development of a Smart Glove for Real-Time Sign Language Translation." IEEE Access, vol. 7, pp. 108292-108302, 2019.

[8] R. Liu, L. Li, T. Zhang. "Intelligent Gesture Recognition Based on Motion Sensors." Journal of Ambient Intelligence and Humanized Computing, vol. 10, no. 3, pp. 1167-1175, 2019.