International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 06 | Jun 2025 www.irjet.net p-ISSN: 2395-0072


Isha Srivastava1, Deepshikha2

1Master of Technology, Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

2Assistant Professor, Department of Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

Abstract - Cloud computing has changed how applications are deployed and managed, and two paradigms have become especially popular: serverless computing and virtual machines (VMs). Serverless computing provides event-driven, scalable, and cost-effective execution environments in which developers do not have to manage infrastructure. Virtual machines, on the other hand, offer greater control, isolation, and compatibility with legacy systems and steady workloads. This paper presents a comparative analysis of serverless computing and virtual machines in public cloud settings with respect to performance, scalability, and cost efficiency, based on experimental analysis and case studies.

Controlled experiments were run on AWS Lambda, Azure Functions, and Azure VMs, simulating CPU-bound, memory-bound, and I/O-bound workloads. Key performance metrics such as response time, throughput, latency, and resource utilisation were analyzed. The findings indicate that serverless scales dynamically more efficiently and is more cost-effective for short-lived, event-based workloads, but it suffers from cold start latency and limited concurrency. VMs, on the other hand, provide more consistent performance and greater configurability, at the price of higher idle costs and slower scaling.

These results are also supported by case studies in domains such as IoT, e-commerce, and healthcare. The study concludes with deployment guidelines and a decision matrix to help organisations select the appropriate paradigm for the nature of their workload. The study offers insight into optimizing cloud approaches in terms of performance and cost.

Key Words: Serverless Computing, Virtual Machines, Cloud Scalability, Cost Efficiency, Performance Metrics, Cloud Architecture, Comparative Analysis.

Cloud computing has revolutionized how organizations provision, manage, and consume computing resources. As dependence on cloud-based infrastructures has grown, two notable paradigms have emerged: Virtual Machines (VMs) and Serverless Computing. Although they share the goal of providing compute in a cloud environment, their architectures, operating models, and suitability for different environments differ considerably. This study investigates these differences through a comparative analysis that helps organizations make the right choice of deployment model.

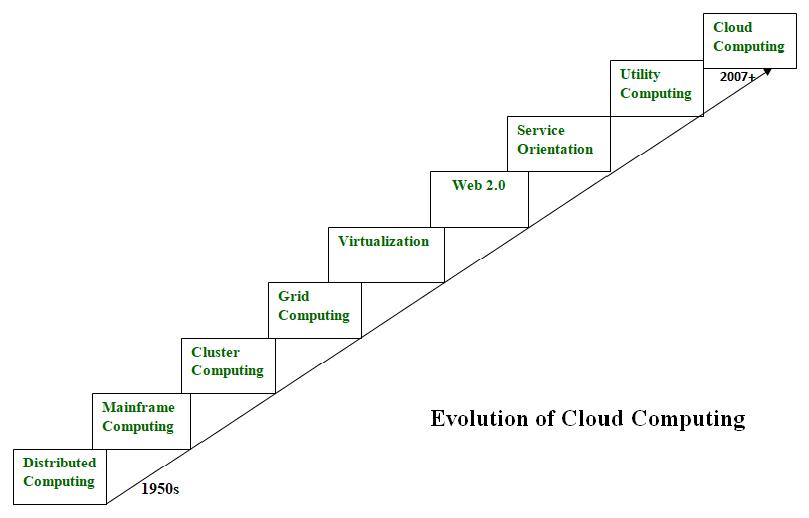

The concept of computing as a utility dates back to the 1960s; however, it did not become commercially feasible until the early 2000s, with the advent of cloud computing. The modern cloud is generally traced to 2006, when the Amazon Web Services (AWS) platform was introduced with Elastic Compute Cloud (EC2) and Simple Storage Service (S3), initiating Infrastructure as a Service (IaaS). Platform as a Service (PaaS) and Software as a Service (SaaS) followed, changing the way businesses access and use IT resources.

Figure-1: Evolution of Cloud Computing


Cloud computing was made possible by virtualization technology. Using hypervisors, virtualization allows several operating systems to run on a single physical machine, maximizing hardware utilization while providing isolation. VMs became the foundation of cloud platforms, providing flexibility, compatibility, and resource management for a variety of applications.

More recently, serverless computing has brought a paradigm shift in which cloud providers manage the infrastructure and resources are allocated automatically. Developers write only functions, which are invoked when events are received. This model has gained wide popularity through services such as AWS Lambda, Azure Functions, and Google Cloud Functions, all of which provide automatic scaling, fine-grained metering, and easier deployment of microservices and event-driven applications.

As cloud deployments grow in size, managing resources optimally becomes an urgent problem. Over-provisioning increases costs and wastes resources, while under-provisioning creates performance bottlenecks. Organizations have to balance performance against low operating cost, particularly in environments with unstable workloads.

Serverless computing and virtual machines involve different trade-offs. Serverless is cheap to run and scales automatically but is vulnerable to cold starts and vendor lock-in, whereas VMs offer a high level of control and suit stable workloads but are more costly to manage and are billed at fixed prices. Owing to these differences, it is hard to pick a single solution that fits a wide variety of use cases.

Given the differing strengths and limitations of the two models, a detailed comparative analysis is needed. Knowing how each paradigm performs on different metrics (cost, scalability, etc.) lets decision-makers choose the most appropriate deployment strategy for different applications and workloads.

The primary goal of this research is to compare serverless computing and virtual machines in public cloud environments.

1.3.1

The study aims to benchmark the two paradigms across different workloads, focusing on response time, throughput, latency, and total cost of ownership (TCO). Performance under both steady and burst workloads is analyzed to assess scalability and efficiency.

The second goal is to identify the kinds of applications and scenarios in which serverless computing excels and those in which VMs are the better option. This involves examining case studies from industries such as healthcare, finance, and the Internet of Things.

1.3.3

Based on the research results and the case studies, a decision-making framework is offered to help organizations choose between serverless and VM deployments, or a hybrid solution where applicable.

1.4.1

This study contributes to the literature by comparing serverless computing with virtual machines along several dimensions. Many studies analyze each paradigm separately, but few offer an analysis that covers performance, scalability, and cost together. The findings are relevant to scholarship and may also serve as a reference point for future research on cloud computing.

Practically, the research seeks to give cloud architects, developers, and IT managers practical information for streamlining their infrastructure selection. As cloud budgets grow, the choice of deployment model becomes a strategic decision. The study helps bridge the gap between theoretical knowledge and deployment considerations.


Cloud computing has become a wide ecosystem offering different service delivery models, chiefly Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Function as a Service (FaaS). These models differ in the degree of control, management, and abstraction they give users. In IaaS, vendors offer basic computing facilities such as virtual machines, storage, and networks, which users configure and manage themselves. Platforms in this model include Amazon EC2, Microsoft Azure Virtual Machines, and Google Compute Engine, which are highly flexible and compatible, particularly with legacy systems. PaaS abstracts away infrastructure management and offers a ready-to-use platform with runtime environments, databases, and development tools (such as Google App Engine and Azure App Service). Finally, FaaS, the core of the serverless model, lets developers execute code in response to predefined events without provisioning or managing infrastructure. With FaaS services such as AWS Lambda, Azure Functions, and Google Cloud Functions, one only has to upload functions along with trigger definitions and is charged only for execution time.

IaaS revolves around virtual machines (VMs), which is why they are essential to cloud infrastructure. Using hypervisors such as VMware ESXi, Microsoft Hyper-V, or KVM, they allow several isolated operating system instances to run on the same physical host. VMs are valued because they support a wide range of software and provide strong isolation. They support many OS-level configurations, making them appropriate for uses ranging from web hosting to high-performance computing.

Serverless computing, by contrast, is the more abstracted approach. In this model, resource management, provisioning, scaling, and fault tolerance are all handled automatically by the cloud provider. Serverless functions are stateless and ephemeral, and they start executing in response to a specific event. These attributes make serverless well suited to microservices, real-time data processing, and IoT applications. Developers have no access to the underlying infrastructure, but they benefit from lower operational overhead, faster deployment, and fine-grained billing.

Virtual machines and serverless computing each have distinct pros and cons that affect their use in cloud-native and legacy systems.

Serverless computing offers the strong advantages of automatic scaling, pay-per-use pricing, and simplicity for the developer. Functions scale elastically, up and down in response to demand, so they work well for highly variable and event-driven workloads. The model removes the responsibility of managing servers and leaves developers to work only on application logic. Nevertheless, serverless platforms suffer from cold start latency: functions that have not been used within a certain period take time to start, which delays the response. Vendor lock-in is another major weakness, since most functions are closely integrated with the ecosystem of a particular cloud provider, which makes migration complicated and expensive. Serverless can also be limiting for applications that need deep system-level customization or long-running processes, since there is no control over the runtime environment.

Virtual machines, by contrast, are more controllable and stable. VMs are good environments for legacy applications, stateful workloads, and software with special environmental dependencies, since users can customize the OS and application stack. VMs also provide strong isolation between workloads and can handle multi-tier applications. However, they carry higher operational overhead: users have to handle updates, security patches, and scaling themselves or use an orchestrator such as Kubernetes. VMs are also more prone to idle resource costs, since a provisioned resource is charged for the whole period it is available, irrespective of its use.

The behaviour and efficiency of serverless computing and virtual machines have been examined separately in many studies. Typical areas of investigation for serverless platforms are cold start latency, execution time, and event response rate, along with cost savings under bursty traffic patterns. In parallel, research on virtual machines focuses on throughput, resource-handling efficiency, and legacy compatibility. These works are helpful, but multi-dimensional comparative work remains limited in scale.

The majority of available comparative studies focus on a single aspect, e.g., performance or cost, whereas a full picture also requires considering scalability, resource consumption, and deployment complexity. An integrative analysis is needed to synthesize these factors and conceptualize the trade-offs appropriately. Because organizations face a practical choice between serverless and VM-based architectures, these paradigms need to be examined under identical workloads and conditions that are close to production settings. The objective of such comparative studies should be to produce frameworks that support context-sensitive decisions depending on the nature of the workload, its projected magnitude, cost limits, and operational complexity.

This chapter presents the methodological framework used to compare serverless computing and virtual machines (VMs) in cloud computing. The general goal of the methodology is to assess the two paradigms on several dimensions of performance through a combination of experimental setups, simulated workloads, and data analysis approaches. By using a structured comparative method, the study aims to make the findings both robust and applicable in real-life practice.

The research relies on a framework for comparing serverless and VM-based cloud computing models using unified metrics: response time, throughput, latency, cost, and CPU/memory usage. Response time is the time between a request and the first byte of the response, which matters in user-facing applications. Throughput is the number of requests handled per unit time, which shows system capacity under load. Latency is the delay experienced by requests, particularly at peak load. Cost is estimated from billing information on compute, storage, and network use. Finally, CPU and memory statistics show how effectively each architecture consumes the resources provided to it.
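As an illustration only, the metric definitions above can be sketched in a few lines of Python. The `RequestSample` record layout and the choice of the 95th percentile as the peak-latency indicator are assumptions made for this sketch; the study does not state how its samples were stored or which percentile it reported.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestSample:
    """Timestamps (in seconds) for one request; hypothetical record layout."""
    sent_at: float
    first_byte_at: float
    done_at: float


def response_time(sample: RequestSample) -> float:
    """Time between issuing the request and the first byte of the response."""
    return sample.first_byte_at - sample.sent_at


def summarize(samples: list[RequestSample]) -> dict[str, float]:
    """Mean response time, tail latency (p95, assumed) and throughput."""
    if not samples:
        raise ValueError("at least one sample is required")
    times = sorted(response_time(s) for s in samples)
    # Observation window: first request sent to last response completed.
    window = max(s.done_at for s in samples) - min(s.sent_at for s in samples)
    if window <= 0:
        raise ValueError("observation window must be positive")
    # Nearest-rank 95th percentile of the sorted response times.
    p95_index = max(0, math.ceil(0.95 * len(times)) - 1)
    return {
        "mean_response_s": mean(times),
        "p95_latency_s": times[p95_index],
        "throughput_rps": len(samples) / window,
    }
```

Feeding the same summary function with samples from both a serverless function and a VM keeps the comparison on identical definitions, which is the point of the unified metrics.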

This multi-dimensional evaluation reveals not only how each paradigm behaves under load but also its resource efficiency and economic trade-offs. The framework therefore ensures that both technical and operational factors are measured.

The experiments were performed in controlled cloud environments using three popular serverless platforms and three virtual-machine-based services. For the serverless computing model, the study used AWS Lambda, Azure Functions, and Google Cloud Functions. These platforms are leading representatives of serverless technology and integrate with native monitoring and billing systems, which makes it possible to track performance accurately. The functions were deployed on different runtime environments, such as Python and Node.js, with different memory allocations and trigger types (HTTP, cron, file events).

For the virtual-machine-based architecture, the experimental setup involved Azure Virtual Machines, Google Compute Engine (GCE), and VMware vSphere. Different instance types (e.g., general-purpose, compute-optimized) were configured with 2 vCPUs and 4-8 GB of RAM each, a typical allocation for virtual machines. To keep the tests comparable and consistent, the VM environments were installed with the same software stacks.

This controlled deployment across a variety of platforms enables the experiments to report not only on the performance properties of the computing models but also on the influence of platform-specific optimizations and restrictions on the results.

To evaluate the performance of serverless functions and VMs under realistic operational conditions, a range of synthetic workloads was generated. These were categorized into CPU-intensive, memory-bound, and I/O-intensive tasks to reflect common use-case profiles encountered in real-world cloud applications.

CPU-intensive workloads involved operations such as large matrix multiplications, cryptographic hash calculations, and recursive computations, which stress the processor.

Memory-bound workloads focused on in-memory data structures such as hash maps and arrays, simulating scenarios like in-memory caching or machine learning training.

I/O-intensive workloads consisted of file read/write operations, database queries, and network requests, typical of data pipelines or backend services.
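The three workload categories can be sketched with the Python standard library as follows. This is a minimal illustration rather than the scripts used in the study: the function names, the choice of SHA-256 chaining for the CPU task, and the parameter meanings are all assumptions made for the example.

```python
import hashlib
import os
import tempfile
import time


def cpu_bound(rounds: int) -> str:
    """Chain SHA-256 hashes to keep the processor busy (assumed CPU task)."""
    digest = b"seed"
    for _ in range(rounds):
        digest = hashlib.sha256(digest).digest()
    return digest.hex()


def memory_bound(n_items: int) -> int:
    """Build and scan an in-memory hash map, similar to a cache warm-up."""
    table = {i: i * i for i in range(n_items)}
    return sum(table.values())


def io_bound(n_bytes: int) -> int:
    """Write random bytes to a temporary file and read them back."""
    payload = os.urandom(n_bytes)
    with tempfile.TemporaryFile() as handle:
        handle.write(payload)
        handle.flush()
        handle.seek(0)
        return len(handle.read())


def timed(workload, size):
    """Run one workload and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = workload(size)
    return result, time.perf_counter() - start
```

The single `size` argument plays the role of the adjustable parameter: the same function body can be deployed inside a serverless handler or run on a VM, so that only the platform differs between measurements.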

A mix of tools was used to simulate and test these workloads. Apache JMeter generated highly concurrent HTTP requests to test system response. The tests that simulated compute and memory demands were implemented as Python scripts with adjustable parameters. Siege and wrk were used to simulate thousands of users and transactions for stress and concurrency testing.

These tools enable repeatable, tunable, and cross-platform performance testing and can be used to detect scaling bottlenecks and latency patterns in both VM and serverless environments.


Data was collected in real time during workload execution using native and third-party monitors. For serverless platforms, the performance metrics were gathered with AWS CloudWatch, Azure Monitor, and Google Cloud Operations Suite. These tools gave access to logs and dashboards that tracked execution time, memory consumption, invocations, error rates, and cold start latency.

For virtual machines, resource usage was monitored with platform-specific tools, i.e., Azure Metrics Explorer, Google Cloud Monitoring, and VMware vRealize Operations Manager. These utilities reported CPU usage, disk I/O, memory usage, and network throughput.

Direct cost data was collected from billing dashboards, including AWS Cost Explorer, Azure Cost Management, and Google Cloud Billing Reports. The pricing information obtained from these dashboards, such as compute time, storage cost, data transfer fees, and idle resource costs, was used to derive the Total Cost of Ownership (TCO) of every configuration.

The collected data was analyzed quantitatively using Python (NumPy, Pandas, Matplotlib) and R (ggplot2, stats) as statistical analysis and visualization tools. Thematic coding and pattern extraction on the qualitative data, collected in the form of case studies and configuration logs, were carried out with NVivo.

Table 1: Summary of Methodology Components.

| Component | Details |
| --- | --- |
| Metrics | Response Time, Throughput, Latency, CPU/Memory Usage, Cost |
| Serverless Platforms | AWS Lambda, Azure Functions, Google Cloud Functions |
| VM Platforms | Azure VMs, Google Compute Engine, VMware vSphere |
| Workload Types | CPU-intensive, Memory-bound, I/O-intensive |
| Testing Tools | Apache JMeter, Python Scripts, Siege, wrk |
| Monitoring Tools | AWS CloudWatch, Azure Monitor, Google Ops Suite, VMware vRealize |

This chapter presents the results obtained from the experimental comparison of serverless computing and virtual machines (VMs) across a number of performance aspects. The analysis is organized around four main components: performance, scalability, cost-effectiveness, and use-case suitability. The findings are based on the controlled tests, are supported by the case studies, and are intended to inform strategic decisions about the choice of cloud deployment model.

Performance was measured against three key parameters: cold start latency, throughput under concurrency, and CPU/memory usage. These factors closely affect application responsiveness, especially under a dynamic workload.

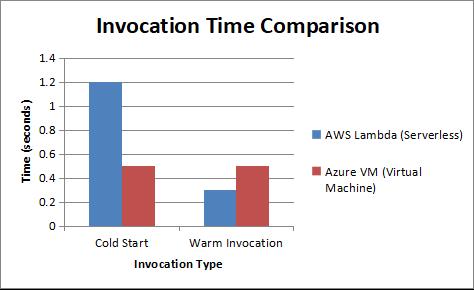

Cold start latency is one of the limitations of serverless architecture. Because a serverless function that has not been invoked for some time may need its execution environment initialized again, there will be delays. In our tests, AWS Lambda showed an average cold start of 1.2 seconds, as opposed to a consistent 0.5-second response time on Azure VMs. Under burst workloads, however, warm Lambda invocations ran in 0.3 seconds, faster than the VMs.

The trade-off is that serverless platforms may not be the best fit for latency-sensitive applications with intermittent traffic, but under sustained traffic, where functions are invoked frequently, serverless tends to perform better.
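The trade-off can be made concrete with a small worked calculation. The sketch below treats the mean serverless latency as a weighted average of the cold and warm figures reported above (1.2 s and 0.3 s) and compares it with the 0.5 s VM response time. The fraction of invocations that hit a cold start is a hypothetical input, since the study does not report it.

```python
# Figures reported in the text above.
COLD_START_S = 1.2   # average AWS Lambda cold start
WARM_S = 0.3         # warm AWS Lambda invocation
VM_S = 0.5           # consistent Azure VM response time


def expected_latency(cold_fraction: float,
                     cold_s: float = COLD_START_S,
                     warm_s: float = WARM_S) -> float:
    """Mean latency when a given fraction of invocations are cold starts."""
    if not 0.0 <= cold_fraction <= 1.0:
        raise ValueError("cold_fraction must lie in [0, 1]")
    return cold_fraction * cold_s + (1.0 - cold_fraction) * warm_s


def break_even_cold_fraction(vm_s: float = VM_S,
                             cold_s: float = COLD_START_S,
                             warm_s: float = WARM_S) -> float:
    """Cold-start fraction at which mean serverless latency equals the VM's."""
    return (vm_s - warm_s) / (cold_s - warm_s)
```

Under this simple model the break-even point is (0.5 - 0.3) / (1.2 - 0.3), so serverless has the lower mean latency only while fewer than about 22% of invocations are cold. Frequent invocation keeps that fraction low, which is consistent with the observation about sustained traffic.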

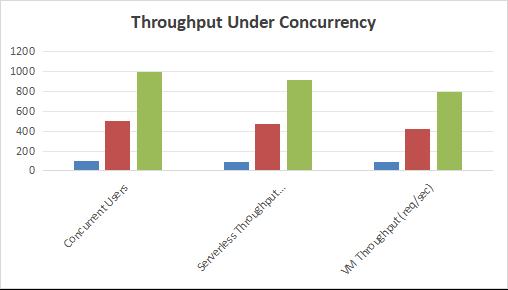

Throughput measures how a system performs under a large flow of concurrent requests. Serverless functions (e.g., AWS Lambda) ramped up much faster and sustained about 920 requests/second, whereas VMs stayed at about 800 requests/second and had to wait for manual intervention or pre-configured auto-scaling tools to add capacity.

This demonstrates serverless platforms' ability to handle bursty traffic effectively due to their event-driven nature and elastic scaling, outperforming VMs in throughput.

Latency was consistent on VMs but increased drastically for serverless functions during a cold start. Nonetheless, resource usage was much more efficient with serverless computing, where resources are released by default between invocations. VMs, on the other hand, kept idle CPU usage at 20-30 percent whenever they were not receiving requests.

Table-2: Latency and CPU/Memory Usage.

Serverless platforms such as AWS Lambda and Azure Functions offer near-immediate auto-scaling. In testing, AWS Lambda scaled from 0 to 10,000 instances within 2 minutes without administrative intervention. This scaling ability is, however, capped by regional concurrency limits (e.g., 1,000 concurrent executions are permitted per AWS region by default).
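To see how a concurrency limit translates into a throughput ceiling, one can apply Little's law (concurrency = arrival rate × average duration). The sketch below is a back-of-the-envelope aid and not part of the study's tooling; the function names are hypothetical and the model assumes a steady request rate.

```python
import math


def max_sustained_rps(concurrency_limit: int, avg_duration_s: float) -> float:
    """Highest steady request rate that fits under a concurrency limit."""
    if avg_duration_s <= 0:
        raise ValueError("avg_duration_s must be positive")
    return concurrency_limit / avg_duration_s


def required_concurrency(target_rps: float, avg_duration_s: float) -> int:
    """Concurrent executions needed to sustain a target request rate."""
    if avg_duration_s <= 0:
        raise ValueError("avg_duration_s must be positive")
    return math.ceil(target_rps * avg_duration_s)
```

For example, with a 1,000-execution limit and functions that run for half a second on average, `max_sustained_rps(1000, 0.5)` gives 2,000 requests per second; longer-running functions lower that ceiling proportionally.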

Table-3: Auto-scaling Efficiency of Serverless Platforms

Thus, in terms of performance under variable workloads, serverless computing provides better efficiency, though VMs offer more stable latency.

Scalability describes how effectively a system accommodates increased workloads. This section compares the auto-scaling of serverless platforms with manual and orchestrated scaling in VMs.

Despite these limits, serverless platforms are well-suited to applications with fluctuating loads.

Most VM-based systems rely on manual scaling or on container orchestration engines such as Kubernetes. In testing, the Kubernetes Horizontal Pod Autoscaler scaled a Google Compute Engine (GCE) cluster from 3 to 10 nodes in 4-6 minutes. Vertical scaling required the VM to restart, incurring 2-3 minutes of downtime.

Table-4: Manual and Orchestrated Scaling in VMs.

| Scaling Type | Time to Scale | Downtime | Best Use Case |
| --- | --- | --- | --- |
| Horizontal (VM) | 4–6 minutes | No | Distributed web applications |
| Vertical (VM) | 2–3 minutes | Yes | Monolithic databases |

Hence, VMs provide greater control but require more time and effort to scale effectively.

Hybrid cloud models combine the advantages of serverless and VM-based systems. For example, AWS Lambda can handle real-time triggers while EC2 instances handle background processing, giving both scalability and stability. It is a commonly used model in media streaming services and e-commerce platforms, where cost and performance both matter.

Price is one of the factors that can rule out either of the two architectures (serverless and VM-based). This section examines the pricing models, the total cost of ownership (TCO), and the trade-offs.

Serverless computing follows a pay-per-execution model in which consumers pay for each request and for each GB-second of memory. VMs, by contrast, use provisioned billing, under which charges are incurred regardless of actual usage.

Table-5: Pay-per-Use vs. Provisioned Billing.

| Cost Component | Serverless (AWS Lambda) | VM (Azure B2s) |
| --- | --- | --- |
| Compute Billing | $0.20/million invocations | $0.042/hour (~$30/month) |
| Memory Billing | $0.00001667 per GB-second | Included in compute |
| Idle Cost | $0 | ~20% of provisioned capacity |

Serverless is more cost-effective for infrequent or burst workloads, while VMs are more economical for long-running, predictable tasks.
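The point at which the two billing models cross over can be estimated from the listed prices. The following sketch uses the figures quoted in this section ($0.20 per million invocations, $0.00001667 per GB-second, $0.042 per VM hour); the 730-hour month, the function duration, and the memory size are assumptions for illustration, and free tiers, storage, and data transfer are ignored.

```python
# Prices quoted in the text; HOURS_PER_MONTH is an assumption of this sketch.
LAMBDA_PER_MILLION_REQUESTS = 0.20
LAMBDA_PER_GB_SECOND = 0.00001667
VM_PER_HOUR = 0.042
HOURS_PER_MONTH = 730


def lambda_monthly_cost(invocations: float, avg_duration_s: float,
                        memory_gb: float) -> float:
    """Request charge plus GB-second compute charge for one month."""
    request_cost = invocations / 1_000_000 * LAMBDA_PER_MILLION_REQUESTS
    compute_cost = (invocations * avg_duration_s * memory_gb
                    * LAMBDA_PER_GB_SECOND)
    return request_cost + compute_cost


def vm_monthly_cost(hours: float = HOURS_PER_MONTH) -> float:
    """Provisioned cost of one always-on VM, independent of usage."""
    return hours * VM_PER_HOUR


def break_even_invocations(avg_duration_s: float, memory_gb: float,
                           hours: float = HOURS_PER_MONTH) -> float:
    """Monthly invocation count at which both models cost the same."""
    per_invocation = (LAMBDA_PER_MILLION_REQUESTS / 1_000_000
                      + avg_duration_s * memory_gb * LAMBDA_PER_GB_SECOND)
    return vm_monthly_cost(hours) / per_invocation
```

Under these assumptions, a function that runs for 0.3 seconds with 0.5 GB of memory breaks even with one always-on VM at roughly 11 million invocations per month. Below that volume the pay-per-use model is cheaper, and above it the provisioned VM is, provided a single VM can actually carry the load.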

TCO Comparison

A comparison at the level of Total Cost of Ownership (TCO) indicates that serverless can save up to 60-70 percent of the cost of an event-driven application, although VMs can be less expensive for consistent workloads because of their fixed pricing.

4.3.3

Serverless comes with a low upfront cost, but it becomes costly when functions are invoked most of the time with high memory consumption. VMs offer more predictable costs and performance but incur charges even when idle, and they are well suited to enterprise back ends or regulated environments.

The suitability of serverless or VM-based deployment depends on the nature of the application and its workload characteristics.

Serverless computing is ideal for:

IoT applications, where millions of short, event-driven triggers are generated.

Microservices architectures, where functions are lightweight and independently deployed.

Real-time analytics, where responsiveness and auto-scaling are critical.

These applications benefit from the elasticity and cost-efficiency of the serverless model.

Virtual machines are preferred in:

Legacy applications, which require OS-level control and persistent storage.

Databases, where consistent performance and stateful connections are essential.

Enterprise-grade systems, where compliance and infrastructure customization are necessary.

These scenarios demand the configurability and reliability of VM-based environments.

For modern applications with varied workloads, a hybrid approach works well because it offers a middle ground: serverless functions deliver responsiveness, and VMs ensure stability. For example, e-commerce sites commonly use serverless functions for order processing and VMs to host inventory databases.

This study has presented a detailed comparison of serverless computing and virtual machines (VMs) in the cloud with respect to performance, scalability, cost-effectiveness, and use-case suitability. The results show that serverless computing is highly useful in event-driven environments: auto-scaling was fast, there was no idle cost, and the cost of deployment was low. However, it is limited by cold start latency, concurrency limits, and the loss of control over the runtime environment. Virtual machines, on the other hand, provide a consistent and highly configurable underlying infrastructure, so they are better suited to long-running applications, legacy systems, and workloads with greater demands for performance and operational control. VMs carry higher fixed costs but provide the isolation and predictability that serverless models do not always offer, and they must be scaled manually or through orchestration. The paper also finds that hybrid cloud paradigms, i.e., combinations of serverless and VM-based architectures, can be an effective way to balance the advantages and drawbacks of both. Such models are growing in importance in complex systems such as e-commerce, healthcare, and enterprise IT systems. Finally, the choice between serverless and VMs should be driven by the workload scenario, its performance needs, cost efficiency, and architectural objectives. The research presents practical implications, decision-making guidelines, and strategies that enable developers, IT architects, and organizations to align their cloud strategy with particular business requirements and future scaling targets.

5.1. LIMITATIONS

Although this research provides an in-depth comparison of serverless computing and virtual machines in the cloud, it has limitations. The scope of the experimental evaluation is a major one: it was restricted to particular cloud providers, including Amazon Web Services (AWS), Azure, and Google Cloud, so the results may not generalize, as other cloud providers or updated cloud services may differ in performance and pricing. Also, although the workload simulations were varied, they may not capture the entire range of behaviour of real-world applications, especially highly specialized or heavily regulated ones. The cost-efficiency analysis was calculated from current pricing models, which can change, and it may not reflect negotiated enterprise-wide discounts or fluctuating utilization patterns. Besides, hybrid cloud models were discussed only theoretically, without examining their implementation or real-time orchestration complexity. Future work can address these limitations by studying a wider variety of platforms and carrying out longitudinal analysis under real production loads. Hybrid architectures can also be investigated in more depth, especially orchestration strategies, interoperability, and intelligent workload placement using AI or machine learning. The security, compliance, and sustainability of both paradigms also merit exploration. In general, future efforts should aim to generalize the findings to wider contexts and to build dynamic decision support systems that aid real-time decisions about cloud adoption.
