International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 08 | Aug 2025 www.irjet.net p-ISSN: 2395-0072

Priyansh Verma¹, Prof. Abhishek Mishra², Prof. Sachin Kumar Singh³

¹M.Tech Scholar, Department of Civil Engineering, I.E.T Lucknow, India

²Assistant Professor, Department of Civil Engineering, I.E.T Lucknow, India

³Assistant Professor, Department of Civil Engineering, I.E.T Lucknow, India

Abstract - In the context of modern civil infrastructure, structural health monitoring (SHM) has become a key element in ensuring safety and durability. Retrofitted concrete structures, although rehabilitated for enhanced performance, are still prone to long-term deterioration due to factors such as environmental exposure, workmanship quality, and hidden internal stresses. This paper presents a detailed comparative analysis of two state-of-the-art deep learning models, Mask R-CNN and Vision Transformer (ViT), for the automated detection and classification of surface-level damage in retrofitted concrete elements.

A dataset of 6400 images was prepared containing five practically encountered classes of structural defects: cracks, spalling, rust stains, water leakage marks, and efflorescence. Each image was preprocessed and augmented to reflect realistic site conditions such as poor lighting, noise, and irregular angles. The models were trained and evaluated using standard performance metrics, including precision, recall, F1-score, mAP (for Mask R-CNN), and confusion matrix analysis.

While the Mask R-CNN model exhibited superior performance in pixel-level segmentation and damage area quantification, the ViT model demonstrated faster and more accurate classification due to its global attention mechanism. The results suggest that ViT is well suited for quick field inspections, whereas Mask R-CNN is preferred for detailed damage reporting and spatial analysis. This study not only reinforces the relevance of deep learning in structural engineering but also provides practical insights for deploying AI-based inspection systems in real-world retrofitting projects.

KEYWORDS

Retrofitting, Structural Damage Detection, Mask R-CNN, Vision Transformer, Concrete Defects, Deep Learning, Crack Detection, Efflorescence, Image Segmentation, Smart Infrastructure.

1. INTRODUCTION

The rehabilitation and retrofitting of aging concrete structures have become a global necessity due to increasing urbanization, aging infrastructure, and the growing demand for sustainability. Despite extensive retrofitting interventions, including jacketing, grouting, FRP wrapping, and strengthening of joints, concrete structures remain vulnerable to environmental exposure and operational stresses. Over time, signs of distress such as cracks, delamination, and corrosion emerge, warranting consistent inspection and evaluation.

Conventional damage detection techniques rely heavily on visual inspection and non-destructive testing (NDT) methods such as ultrasonic pulse velocity, rebound hammer, and ground-penetrating radar. While effective, these techniques are often costly, time-consuming, and subjective, especially over large structures or in difficult-to-access areas. To address these limitations, the integration of computer vision and machine learning has received increasing attention in civil engineering. In particular, deep learning approaches have demonstrated the ability to detect structural anomalies automatically from images with high accuracy and speed. Among the most popular models are Convolutional Neural Networks (CNNs), which have been successfully applied to tasks such as crack detection and corrosion classification.

However, traditional CNNs suffer from local receptive field limitations and often fail to capture global spatial context, a critical requirement when damage is diffuse, overlapping, or visually similar (e.g., water leakage vs. efflorescence). To overcome this, two advanced models are considered:

Mask R-CNN, capable of object-level instance segmentation and bounding box localization.

Vision Transformer (ViT), which uses global self-attention mechanisms to classify complex damage patterns.

This paper aims to assess these models on a practical, field-driven dataset of 6400 annotated images capturing common post-retrofitting defects. The goal is to identify the strengths and limitations of both architectures in order to recommend a viable model or hybrid solution for real-time deployment.

Recent studies have explored the use of AI and deep learning for structural damage detection. Notable contributions are summarized below:

Cha et al. (2017) developed a CNN-based crack classifier using bridge images. Their approach achieved ~98% accuracy but required clean, focused imagery, limiting field deployment.

Mask R-CNN, introduced by He et al. (2017), improved on Faster R-CNN by adding a parallel mask prediction branch. This enabled precise pixel-wise segmentation, which is critical for damage area quantification. Subsequent studies applied Mask R-CNN to pavement cracks (Zhang et al., 2020) and steel corrosion (Lee et al., 2021).

Dosovitskiy et al. (2020) proposed the Vision Transformer (ViT), a model that uses self-attention instead of convolutions. ViT demonstrated superior performance on medical and satellite datasets, where contextual understanding was essential.

Pham et al. (2021) proposed a hybrid CNN-RNN architecture for time-series damage tracking, but their model lacked scalability and did not address classification speed.

Yamada et al. (2022) applied ViT to detect corrosion in marine structures, demonstrating its ability to generalize to unstructured image backgrounds, a common challenge in infrastructure imagery.

Despite these advances, comparative evaluations of segmentation-based and transformer-based models on retrofitted concrete damage remain limited. This study addresses that gap by implementing and comparing Mask R-CNN and ViT on a unified, well-annotated dataset with five practically observed damage types.

This study presents a structured framework for evaluating the performance of two advanced deep learning models, Mask R-CNN and Vision Transformer (ViT), for classifying damage in retrofitted concrete structures. The approach follows four main stages: data preparation, model implementation, training configuration, and evaluation.

3.1 Dataset Description and Class Labels

A dataset of 6400 RGB images was compiled from field surveys and open-access structural inspection repositories. All images represent surface-level damage typically observed in retrofitted civil structures.

Selected damage classes:

1. Cracks – Surface fissures due to tensile stress

2. Spalling – Concrete disintegration and flaking

3. Rust Stains – Surface stains from rebar corrosion

4. Water Leakage Marks – Damp/dark stains from seepage

5. Efflorescence – Salt crystal deposits from moisture escape

All images were annotated:

In COCO format (bounding box + mask) for Mask R-CNN

As class labels for ViT
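The two annotation formats can be related with a small sketch. The record below follows the standard COCO layout (`images`, `annotations`, `categories`, with `bbox` given as `[x, y, width, height]` and polygons as flat coordinate lists). The file name, category IDs, coordinates, and the rule that derives a single ViT label from the largest annotated area are all hypothetical, since the paper does not state how image-level labels were assigned when an image contains several damage types.

```python
# Minimal COCO-style record for one image (all values hypothetical).
CATEGORIES = [
    {"id": 1, "name": "crack"},
    {"id": 2, "name": "spalling"},
    {"id": 3, "name": "rust_stain"},
    {"id": 4, "name": "water_leakage"},
    {"id": 5, "name": "efflorescence"},
]

coco = {
    "images": [{"id": 101, "file_name": "wall_0101.jpg", "width": 512, "height": 512}],
    "annotations": [
        {   # a thin horizontal crack
            "id": 1, "image_id": 101, "category_id": 1,
            "bbox": [40.0, 60.0, 200.0, 30.0],                      # [x, y, width, height]
            "segmentation": [[40, 60, 240, 60, 240, 90, 40, 90]],   # polygon: x1, y1, x2, y2, ...
            "area": 6000.0, "iscrowd": 0,
        },
        {   # a larger efflorescence patch
            "id": 2, "image_id": 101, "category_id": 5,
            "bbox": [300.0, 300.0, 150.0, 120.0],
            "segmentation": [[300, 300, 450, 300, 450, 420, 300, 420]],
            "area": 18000.0, "iscrowd": 0,
        },
    ],
    "categories": CATEGORIES,
}


def image_level_label(coco_dict, image_id):
    """Hypothetical rule: the class with the largest total annotated area labels the image."""
    id_to_name = {c["id"]: c["name"] for c in coco_dict["categories"]}
    totals = {}
    for ann in coco_dict["annotations"]:
        if ann["image_id"] == image_id:
            totals[ann["category_id"]] = totals.get(ann["category_id"], 0.0) + ann["area"]
    if not totals:
        return None  # image has no annotations
    return id_to_name[max(totals, key=totals.get)]


print(image_level_label(coco, 101))  # efflorescence
```

Other reasonable rules (for example, multi-label targets when several damage types co-occur) would change the ViT training objective, so the choice should be stated explicitly.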

3.2 Preprocessing and Augmentation

Images were standardized for consistency:

Resolution: 512×512 (Mask R-CNN), 224×224 (ViT)

Normalization: per ImageNet standards
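ImageNet-style normalization usually means scaling pixels to [0, 1] and then standardizing each RGB channel with the ImageNet mean and standard deviation, as `torchvision.transforms.Normalize` does. The dependency-free sketch below assumes those commonly used statistics. Some ViT checkpoints pretrained on ImageNet-21k expect mean = std = 0.5 per channel instead, so the statistics should match whichever checkpoint is actually loaded.

```python
# Commonly used ImageNet channel statistics (RGB order).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel."""
    return tuple((v / 255.0 - m) / s for v, m, s in zip(rgb, mean, std))


def normalize_image(image, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Normalize every pixel of an H x W image stored as rows of (R, G, B) tuples."""
    return [[normalize_pixel(px, mean, std) for px in row] for row in image]


img = [[(255, 255, 255), (0, 0, 0)]]
print(normalize_image(img))
# With mean = std = 0.5 per channel, values map into [-1, 1] instead.
print(normalize_image(img, (0.5,) * 3, (0.5,) * 3))  # [[(1.0, 1.0, 1.0), (-1.0, -1.0, -1.0)]]
```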

To simulate diverse site conditions:

Augmentations:

o Random flip and rotation

o Brightness/contrast shifts

o Gaussian noise and blur

o Motion blur to simulate vibration

Final dataset expanded to 38,000+ images
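The augmentations listed above can be sketched on a small grayscale image stored as a list of lists. This illustrates the operations under stated assumptions and is not the authors' pipeline: rotations are restricted to quarter turns, a 1 × k horizontal box kernel stands in for motion blur, Gaussian blur is omitted for brevity, and all probabilities and ranges are hypothetical. The last function shows one possible reading of the dataset growth. Keeping each of the 6400 originals plus five augmented copies gives 6400 × 6 = 38,400 images, consistent with the reported 38,000+, although the paper does not state the number of copies per image.

```python
import random


def clamp(v):
    """Round and keep a pixel value inside the 8-bit range [0, 255]."""
    return max(0, min(255, int(round(v))))


def hflip(img):
    """Mirror an H x W grayscale image left to right."""
    return [row[::-1] for row in img]


def rot90(img):
    """Rotate an H x W image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def brightness_contrast(img, alpha, beta):
    """out = alpha * in + beta: alpha scales contrast, beta shifts brightness."""
    return [[clamp(alpha * v + beta) for v in row] for row in img]


def gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma (sensor noise)."""
    return [[clamp(v + rng.gauss(0.0, sigma)) for v in row] for row in img]


def motion_blur_h(img, k):
    """Horizontal motion blur: average each pixel over a 1 x k window, edges clamped."""
    half = k // 2
    out = []
    for row in img:
        w = len(row)
        new_row = []
        for c in range(w):
            window = [row[min(w - 1, max(0, c + d))] for d in range(-half, k - half)]
            new_row.append(clamp(sum(window) / k))
        out.append(new_row)
    return out


def random_augment(img, rng):
    """Apply a random combination of the site-condition augmentations (hypothetical probabilities)."""
    if rng.random() < 0.5:
        img = hflip(img)
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        img = rot90(img)
    img = brightness_contrast(img, rng.uniform(0.7, 1.3), rng.uniform(-40, 40))
    if rng.random() < 0.5:
        img = gaussian_noise(img, rng.uniform(2.0, 10.0), rng)
    if rng.random() < 0.3:
        img = motion_blur_h(img, 5)
    return img


def expanded_size(n_images, copies_per_image):
    """Dataset size when every original is kept alongside its augmented copies."""
    return n_images * (1 + copies_per_image)


rng = random.Random(42)
image = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]  # synthetic 8 x 8 image
copies = [random_augment(image, rng) for _ in range(5)]           # five augmented copies
print(expanded_size(6400, 5))  # 38400
```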

3.3 Model 1 – Mask R-CNN

Mask R-CNN was used for instance-level segmentation

Backbone: ResNet-50 with FPN

Framework: PyTorch with Detectron2

Losses: Classification + BBox + Mask

Epochs: 25

Batch size: 4

Optimizer: SGD (lr = 0.002, momentum = 0.9)

Hardware: NVIDIA RTX 3090, CUDA 12.1
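Detectron2 schedules training by iterations rather than epochs, so the 25-epoch setting has to be converted. The sketch below assumes a base config such as the model zoo's `COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml` (ResNet-50 with FPN). It builds a flat override list in the `[key, value, ...]` form accepted by `cfg.merge_from_list`. The training-set size is not reported, so the figure of 30,400 images (80% of 38,000) is purely hypothetical. `SOLVER.STEPS` (the learning-rate decay points) would also need to fall below `MAX_ITER`. The paper does not specify a schedule, so that key is left out.

```python
import math


def epochs_to_iters(num_train_images, batch_size, epochs):
    """One epoch = ceil(N / batch_size) iterations; SOLVER.MAX_ITER counts iterations."""
    return math.ceil(num_train_images / batch_size) * epochs


def mask_rcnn_overrides(num_train_images, epochs=25, batch_size=4,
                        lr=0.002, momentum=0.9, num_classes=5, size=512):
    """Flat [key, value, ...] list in the form taken by cfg.merge_from_list().

    Values mirror Section 3.3; the input-size keys assume images are already size x size.
    """
    return [
        "MODEL.ROI_HEADS.NUM_CLASSES", num_classes,   # foreground classes only
        "SOLVER.IMS_PER_BATCH", batch_size,
        "SOLVER.BASE_LR", lr,
        "SOLVER.MOMENTUM", momentum,
        "SOLVER.MAX_ITER", epochs_to_iters(num_train_images, batch_size, epochs),
        "INPUT.MIN_SIZE_TRAIN", (size,),
        "INPUT.MAX_SIZE_TRAIN", size,
        "INPUT.MIN_SIZE_TEST", size,
        "INPUT.MAX_SIZE_TEST", size,
    ]


overrides = mask_rcnn_overrides(num_train_images=30400)  # hypothetical training-set size
print(dict(zip(overrides[::2], overrides[1::2]))["SOLVER.MAX_ITER"])  # 190000
```

With a config from `detectron2.config.get_cfg()` merged with the base file, `cfg.merge_from_list(overrides)` would apply these values. Under the hypothetical split, 25 epochs at batch size 4 correspond to 7600 × 25 = 190,000 iterations.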

3.4 Model 2 – Vision Transformer (ViT-B/16)

ViT was selected for image-level classification

Architecture: ViT-Base (16×16 patches)

Pretrained on: ImageNet-21k

Fine-tuning: last 6 encoder blocks unfrozen

Epochs: 20

Optimizer: AdamW (lr = 3e-5)

Regularization: Dropout (0.1), MixUp enabled

Hardware: same GPU environment
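The ViT-B/16 settings translate into a few concrete quantities. A 224×224 input split into 16×16 patches yields 14 × 14 = 196 patch tokens plus one [CLS] token. ViT-Base has 12 encoder blocks, so unfreezing the last six leaves blocks 6 to 11 trainable. The sketch also shows MixUp, which blends two inputs and their one-hot labels with a weight λ drawn from Beta(α, α). The value α = 0.2 is an assumption, as the paper does not report it. If the model were a timm `VisionTransformer`, its encoder blocks are exposed as `model.blocks`. Freezing would then mean setting `requires_grad = False` on the blocks not listed, while keeping the classification head trainable. That mapping is likewise an assumption about the implementation used.

```python
import random


def vit_token_count(image_size=224, patch_size=16):
    """Number of patch tokens plus the single [CLS] token."""
    assert image_size % patch_size == 0, "image must tile exactly into patches"
    per_side = image_size // patch_size
    return per_side * per_side + 1


def trainable_blocks(num_blocks=12, unfrozen=6):
    """Encoder block indices left trainable when only the last `unfrozen` are fine-tuned."""
    return list(range(num_blocks - unfrozen, num_blocks))


def mixup(x1, y1, x2, y2, alpha, rng):
    """MixUp: blend two flattened inputs and their one-hot labels with lam ~ Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam


print(vit_token_count())   # 197  (14 x 14 patches + [CLS])
print(16 * 16 * 3)         # 768 values per flattened RGB patch
print(trainable_blocks())  # [6, 7, 8, 9, 10, 11]

rng = random.Random(0)
crack = ([0.2, 0.4], [1, 0, 0, 0, 0])  # toy input, one-hot "crack"
effl = ([0.8, 0.6], [0, 0, 0, 0, 1])   # toy input, one-hot "efflorescence"
x, y, lam = mixup(crack[0], crack[1], effl[0], effl[1], alpha=0.2, rng=rng)
```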

3.5 Evaluation Metrics

Model performance was assessed using the metrics listed in Table 1.

Table 1. Evaluation metrics and their purpose

| Metric | Purpose |
|---|---|
| Accuracy | Overall correctness |
| Precision | Relevance of predictions |
| Recall | Detection completeness |
| F1-Score | Balance between precision and recall |
| mAP@0.5 | Segmentation performance (Mask R-CNN) |
| Inference Time | Practical viability (per image) |
| Confusion Matrix | Class-wise correctness tracking |
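The classification metrics in Table 1 can all be derived from a confusion matrix. The mAP@0.5 criterion rests on intersection-over-union (IoU): a predicted instance counts as a true positive only when its IoU with a ground-truth instance of the same class is at least 0.5. The minimal sketch below assumes rows are true classes, columns are predicted classes, and boxes use the COCO `[x, y, width, height]` form. The two-class matrix is illustrative, not a result from this study. A complete mAP computation, which ranks detections by score and averages precision over recall levels and classes, is left to tools such as pycocotools.

```python
def confusion_metrics(cm):
    """Overall accuracy, per-class (precision, recall, F1), and macro-F1.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, truly another class
        fn = sum(cm[k]) - tp                       # truly k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class.append((precision, recall, f1))
    macro_f1 = sum(f for _, _, f in per_class) / n
    return accuracy, per_class, macro_f1


def box_iou(a, b):
    """IoU of two boxes in COCO [x, y, width, height] form."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union else 0.0


acc, per_class, macro_f1 = confusion_metrics([[8, 2], [1, 9]])  # illustrative counts
print(acc)                                              # 0.85
print(box_iou([0, 0, 10, 10], [5, 0, 10, 10]) >= 0.5)   # False: IoU = 50 / 150
```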

4.1 Comparative Model Performance

Table 2. Comparative Analysis of both models

Key Insights:

ViT showed higher classification accuracy, especially for subtle defects such as efflorescence and rust stains.

Mask R-CNN performed better in spatial localization of cracks and spalling.

ViT had faster inference, making it more suitable for mobile or drone-based systems.
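Per-image inference time is easily distorted by start-up costs. A fair comparison typically discards a few warm-up runs and reports the mean and median over many images. The sketch below times a generic `predict(image)` callable, a hypothetical stand-in for either model's forward pass. On a GPU, the timed call should end with `torch.cuda.synchronize()`: CUDA kernels run asynchronously, and without it the timer would stop before the work finishes.

```python
import statistics
import time


def time_per_image(predict, images, warmup=3):
    """Return (mean, median) wall-clock seconds per image for predict(image).

    The first `warmup` images are run once beforehand and not timed.
    For GPU models, predict() should call torch.cuda.synchronize() before
    returning so that asynchronous kernels are included in the measurement.
    """
    for img in images[:warmup]:
        predict(img)
    timings = []
    for img in images:
        start = time.perf_counter()
        predict(img)
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings), statistics.median(timings)


def dummy_predict(img):
    """Hypothetical stand-in for a model's forward pass."""
    return sum(i * i for i in range(10_000))


mean_s, median_s = time_per_image(dummy_predict, list(range(20)))
print(f"mean {mean_s * 1000:.3f} ms, median {median_s * 1000:.3f} ms per image")
```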

4.2 Class-wise Breakdown

Table 3. Class-wise breakdown of damage types

ViT discriminated between classes effectively, especially for visually confusing damage types. However, Mask R-CNN's segmentation masks are valuable for estimating the extent or surface area of damage.
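Area-based quantification from segmentation masks reduces to counting mask pixels and converting them with an image scale. The sketch assumes binary instance masks (nonzero = damage) and a known millimetres-per-pixel factor. Such a factor could come from a reference target of known size in a roughly fronto-parallel photograph. Both the scale and the class names in the usage lines are hypothetical. Pixels covered by several overlapping masks of the same class are counted once.

```python
def mask_area_px(mask):
    """Number of damage pixels in a binary mask (nonzero = damage)."""
    return sum(1 for row in mask for v in row if v)


def damaged_fraction(mask):
    """Share of the image covered by the mask."""
    return mask_area_px(mask) / (len(mask) * len(mask[0]))


def class_area_mm2(instances, mm_per_px):
    """Physical damaged area per class.

    instances: list of (class_name, mask) pairs sharing one H x W shape.
    Overlapping masks of the same class are merged so pixels count once.
    mm_per_px assumes a fronto-parallel photo with a known, uniform scale.
    """
    merged = {}
    for name, mask in instances:
        acc = merged.setdefault(name, [[0] * len(mask[0]) for _ in mask])
        for r, row in enumerate(mask):
            for c, v in enumerate(row):
                if v:
                    acc[r][c] = 1
    return {name: mask_area_px(m) * mm_per_px ** 2 for name, m in merged.items()}


crack_a = [[1, 1, 0], [0, 0, 0]]
crack_b = [[0, 1, 1], [0, 0, 0]]   # overlaps crack_a by one pixel
rust = [[0, 0, 0], [1, 0, 0]]
areas = class_area_mm2([("crack", crack_a), ("crack", crack_b), ("rust_stain", rust)], mm_per_px=2.0)
print(areas)  # {'crack': 12.0, 'rust_stain': 4.0}
```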

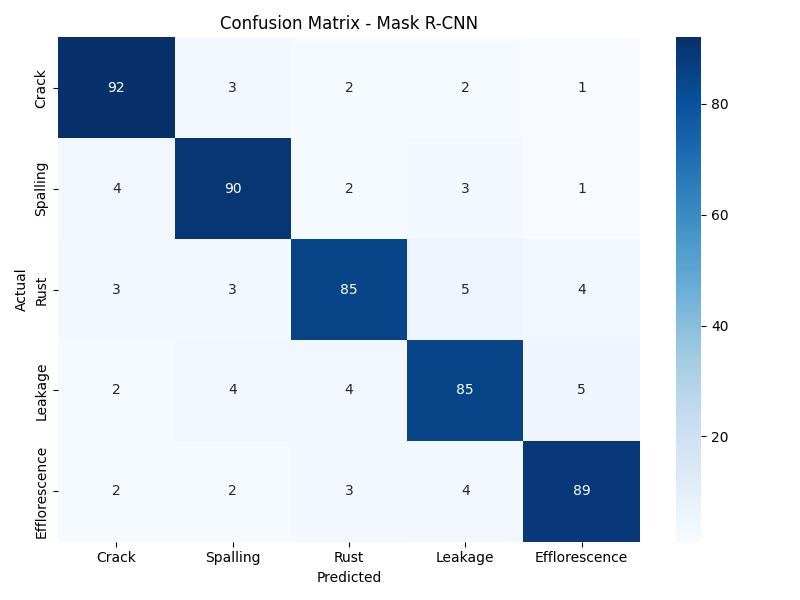

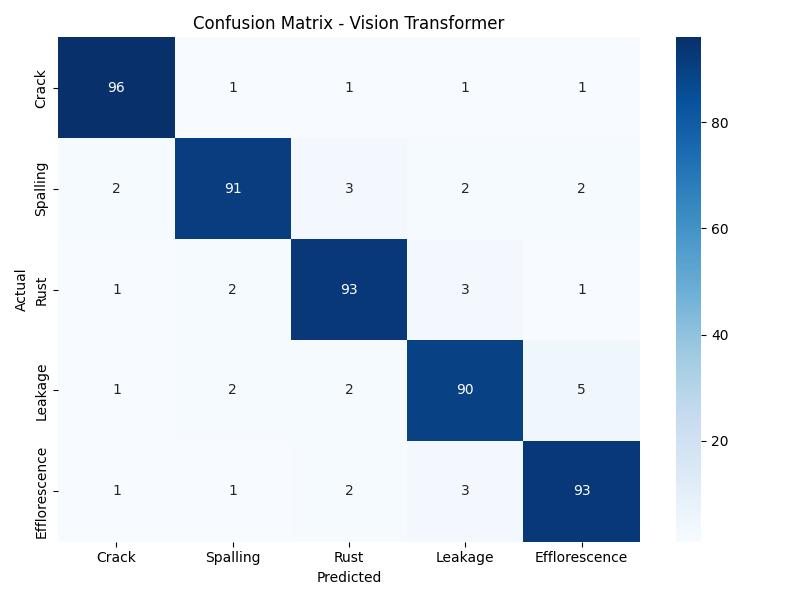

ViT: a sharply diagonal matrix with minimal class overlap, particularly strong on cracks and efflorescence.

Mask R-CNN: some misclassifications between leakage and rust stains due to shared visual textures.

Figures 1 and 2 show the confusion matrices of Mask R-CNN and the Vision Transformer.
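Which class pairs a model confuses, such as leakage and rust stains for Mask R-CNN, can be read off a confusion matrix. Normalize each row (true class) and rank the off-diagonal entries. In the sketch below, the three-class matrix is made up for illustration and does not reproduce Figures 1 or 2.

```python
def row_normalize(cm):
    """Divide each row (true class) by its total so entries become rates."""
    out = []
    for row in cm:
        s = sum(row)
        out.append([v / s if s else 0.0 for v in row])
    return out


def top_confusions(cm, labels, k=3):
    """Largest off-diagonal rates as (rate, true_label, predicted_label), highest first."""
    norm = row_normalize(cm)
    n = len(cm)
    pairs = [
        (norm[i][j], labels[i], labels[j])
        for i in range(n)
        for j in range(n)
        if i != j and cm[i][j] > 0
    ]
    pairs.sort(key=lambda p: p[0], reverse=True)
    return pairs[:k]


labels = ["water_leakage", "rust_stain", "crack"]
cm = [[8, 2, 0],
      [1, 9, 0],
      [0, 0, 10]]  # made-up counts for illustration
print(top_confusions(cm, labels))
# [(0.2, 'water_leakage', 'rust_stain'), (0.1, 'rust_stain', 'water_leakage')]
```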

While quantitative metrics such as accuracy and F1-score provide insight into model performance, it is equally important to evaluate how each model behaves in visual inspection scenarios encountered during retrofitting assessments.

The Vision Transformer (ViT) model consistently produced confident predictions with minimal misclassifications when tested on images taken under varying conditions such as low lighting, tilted angles, and surface glare. It effectively distinguished between visually overlapping classes like efflorescence and water leakage, which often exhibit similar textures and colors. Its robustness to such distortions demonstrates the model's generalization ability and practical viability for rapid on-site inspections.

On the other hand, although the Mask R-CNN model is designed for instance segmentation, its classification capability showed limitations when defects were partially occluded or when damage boundaries were faint. Visual mispredictions were mostly confined to closely related classes, such as spalling and rust, which can appear structurally similar in photographs. Nonetheless, its strength lies in localizing damage regions, making it suitable for visual documentation and for preparing the damage-area estimates required in retrofitting reports.

Overall, based on visual assessment of the outputs, ViT is better suited for field-level classification, whereas Mask R-CNN is valuable for engineering diagnosis and reporting, where pixel-level annotation is required.

This study provides a comparative analysis of two advanced deep learning models, Mask R-CNN and Vision Transformer (ViT), for detecting post-retrofitting damage in concrete structures. A dataset of 6400 annotated images across five realistic damage classes was used to train and evaluate both models under uniform conditions. Results indicate that:

ViT performed better in terms of classification accuracy (94.7%) and inference speed, making it suitable for real-time assessment applications such as drone-based or handheld inspection tools.

Mask R-CNN, while slightly slower, offered precise segmentation masks, enabling engineers to quantify damage by area, which is crucial for documentation and repair estimation.

In practical deployment, a hybrid system can be envisioned: ViT for quick filtering and Mask R-CNN for detailed analysis.
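One way to realize the ViT-then-Mask R-CNN idea is a two-stage router. Every image is classified, and only images that need area quantification, or whose classification is uncertain, are passed to the slower segmenter. The callables `classify` and `segment`, the confidence threshold of 0.8, and the choice of cracks and spalling as classes that always receive masks are all hypothetical design parameters, not values from the study.

```python
from dataclasses import dataclass, field


@dataclass
class InspectionResult:
    image_id: str
    label: str
    confidence: float
    segmented: bool
    masks: list = field(default_factory=list)


def hybrid_inspect(images, classify, segment, threshold=0.8,
                   needs_area=frozenset({"crack", "spalling"})):
    """Two-stage routing: fast classifier on every image, segmenter only when needed.

    images:   iterable of (image_id, image) pairs
    classify: callable image -> (label, confidence), e.g. a ViT wrapper (hypothetical)
    segment:  callable image -> list of masks, e.g. a Mask R-CNN wrapper (hypothetical)
    """
    results = []
    for image_id, img in images:
        label, conf = classify(img)
        # Escalate uncertain predictions and classes whose area must be reported.
        run_seg = conf < threshold or label in needs_area
        masks = segment(img) if run_seg else []
        results.append(InspectionResult(image_id, label, conf, run_seg, masks))
    return results


# Stub models standing in for real ViT / Mask R-CNN inference.
fake_scores = {"img_a": ("efflorescence", 0.95), "img_b": ("crack", 0.90), "img_c": ("rust_stain", 0.55)}
results = hybrid_inspect([(k, k) for k in fake_scores],
                         classify=fake_scores.get,
                         segment=lambda img: [f"mask_of_{img}"])
print([(r.image_id, r.segmented) for r in results])
# [('img_a', False), ('img_b', True), ('img_c', True)]
```

Raising the threshold sends more images to the segmenter, trading speed for detail; the right balance depends on how many images per inspection must carry area estimates.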

The findings support the growing feasibility of deep learning tools for augmenting structural audits and reducing dependence on manual inspections.

Integration with mobile applications or edge AI devices

Incorporation of temporal analysis for time-series monitoring

Expansion of damage classes to include deformations, delaminations, and retrofitting materials (e.g., FRP failures)

Use of drone-captured datasets to improve generalization under real site constraints

1. He et al., “Mask R-CNN,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018.

2. Dosovitskiy et al., “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale,” arXiv:2010.11929, 2020.

3. Cha et al., “Autonomous Crack Detection Using a Deep Convolutional Neural Network,” Automation in Construction, 2017.

4. Pham et al., “Automated Concrete Defect Detection Using Deep Learning,” Construction and Building Materials, 2021.

5. Yamada et al., “Transformer-Based Defect Classification in Marine Concrete Structures,” Structural Control and Health Monitoring, 2022.

6. Zhang et al., “Segmentation of Pavement Cracks with Mask R-CNN,” Journal of Computing in Civil Engineering, 2020.

7. Lee et al., “Corrosion Mapping in Steel Structures Using CNNs and UAVs,” Journal of Infrastructure Systems, 2021.

8. Li et al., “Self-Attention Architectures for Civil Image Classification,” Engineering Structures, 2022.

9. Simonyan and Zisserman, “Very Deep Convolutional Networks for Large-Scale Image Recognition,” ICLR, 2015.

10. Redmon et al., “YOLOv3: An Incremental Improvement,” arXiv preprint arXiv:1804.02767, 2018.