International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

REAL-TIME EEG-BASED EMOTION RECOGNITION USING DEEP LEARNING AND STACKED META-LEARNING

Mayur G N1, Kumar Swamy S2

1 Student, M.Tech IT, Dept. of Computer Science, University of Visvesvaraya College of Engineering, Bengaluru, India

2 Dr. Kumar Swamy S, Associate Professor, Dept. of Computer Science, University of Visvesvaraya College of Engineering, Bengaluru, India

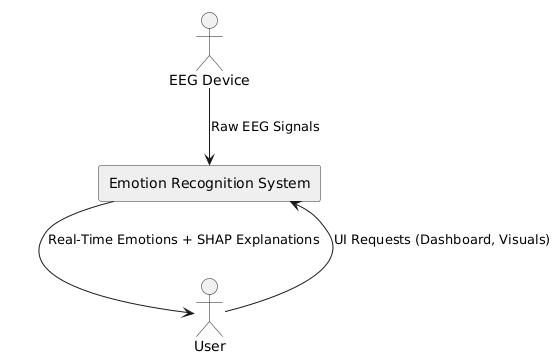

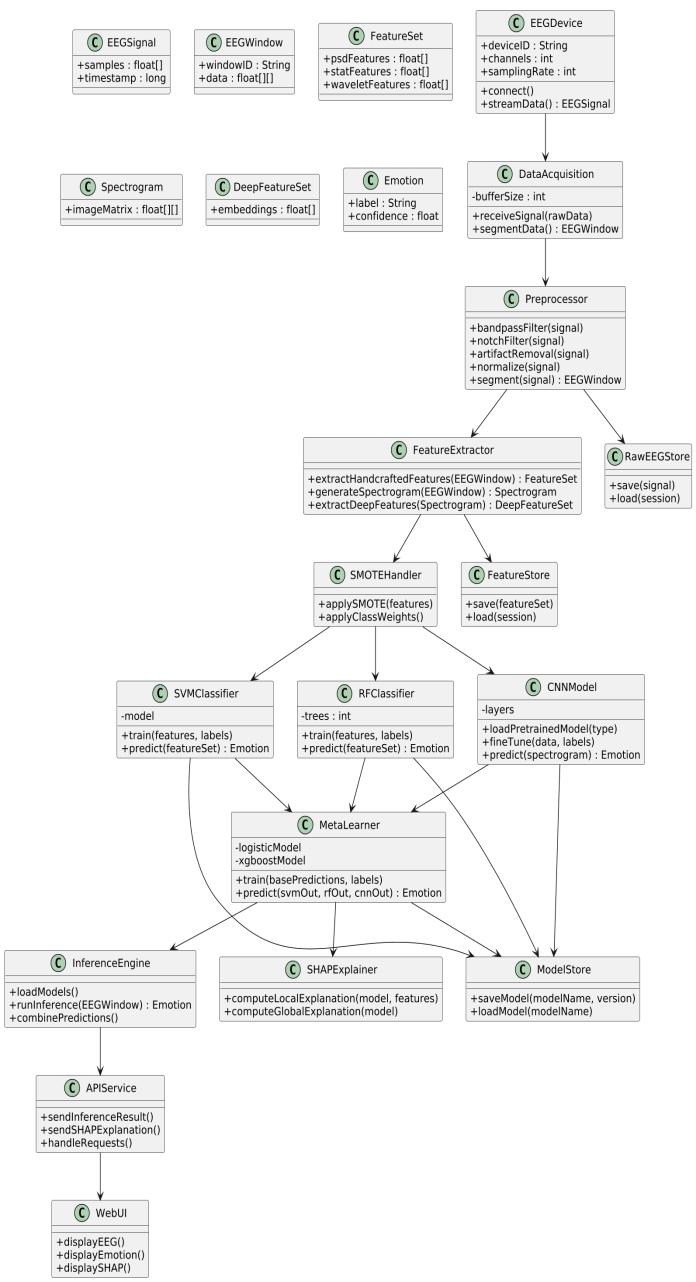

Abstract - This work presents a machine learning–based framework for EEG-based emotion recognition using a structured pipeline of preprocessing, feature extraction, and classification. EEG signals are processed through band-pass filtering and artifact reduction, followed by the extraction of statistical features (mean, variance, skewness, kurtosis), temporal features (Hjorth activity, mobility, complexity), and frequency-domain measures (delta, theta, alpha, beta, and gamma band power). These features are utilized by classical classifiers such as Support Vector Machines (SVM) and Random Forest (RF), along with deep learning models based on Convolutional Neural Networks (CNNs).

To enhance classification performance, the framework incorporates transfer learning using pretrained CNN architectures such as VGG and ResNet, along with a stacked meta-learning approach combining Logistic Regression and XGBoost for effective fusion of multiple model outputs. Class imbalance is addressed using SMOTE and class-weighted learning to ensure fair emotion classification. A SHAP-based explainability module is integrated to provide transparent interpretation of model predictions. The system is deployed through a Flask-based web interface supporting EEG uploads, real-time inference, visualization, and historical emotional analysis. The proposed architecture demonstrates accurate, robust, and interpretable emotion recognition suitable for mental health and cognitive applications.

Key Words: EEG Signals, Emotion Recognition, Deep Learning, Stacked Meta-Learning, Transfer Learning, Feature Extraction, SHAP Explainability, Real-Time EEG Analysis, Affective Computing

1. INTRODUCTION

Emotion recognition using physiological signals has attracted considerable research interest due to its importance in mental health monitoring, cognitive analysis, and human–computer interaction. Among various biosignals, electroencephalogram (EEG) signals offer a non-invasive and reliable means of capturing brain activity, enabling the identification of emotional states through underlying neural patterns. Unlike external behavioral cues, EEG reflects unconscious brain responses, making it particularly suitable for accurate and objective emotion analysis. However, conventional EEG evaluation often relies on manual inspection and handcrafted analysis, which is time-consuming and demands significant domain expertise.

To overcome these limitations, this work presents an intelligent machine learning–driven framework for automated and real-time EEG-based emotion recognition. The proposed system integrates signal preprocessing, feature extraction, multi-model classification, and visualization within a unified and user-friendly web-based platform. Advanced learning strategies, including deep learning and model fusion, are employed to improve classification robustness and generalization across emotional states. In addition, the framework emphasizes interpretability and real-time usability, enabling transparent emotional assessment suitable for practical applications in mental health monitoring and affective computing.

1.1 Motivation

Early detection of emotional patterns is essential for understanding mental well-being and preventing the escalation of stress-related conditions. Traditional evaluation methods rely heavily on subjective feedback or clinical observation, which can result in inconsistency and limited insight into rapid or subtle emotional changes. With recent advancements in machine learning and deep learning, there is a strong opportunity to improve emotional assessment through automated, objective, and data-driven analysis of EEG signals.

EEG-based feature extraction and classification techniques are well suited for identifying emotional states with high precision, as they capture direct neural responses that are difficult to manipulate consciously. However, many existing systems lack real-time processing capability, robustness across diverse users, transparent decision-making, and intuitive visualization for practical deployment. This project is motivated by the need to develop a unified and intelligent framework that combines reliable preprocessing, advanced emotion classification, and interpretable outputs. By addressing these challenges, the system aims to support users, researchers, and practitioners with accurate, real-time emotional insights and improved overall emotional awareness.

1.2 Scope

The scope of this project includes the development of an automated and intelligent system capable of detecting and classifying emotional states using EEG signal analysis and advanced machine learning techniques. It focuses on building a structured pipeline for real-time processing, ensuring fast and accurate identification of neural patterns associated with different emotional responses. The project also incorporates visual interpretation modules to present EEG waveforms, feature representations, and emotion probabilities in a clear and intuitive manner.

The system is designed to support multiple EEG data formats and is deployed through a Flask-based web interface for seamless user interaction and real-time inference. In addition to classical machine learning models, the scope extends to the use of deep learning and model fusion strategies to improve robustness and generalization. Performance evaluation using standard metrics is integrated to validate accuracy, reliability, and consistency across emotional classes.

The project aims to assist researchers and mental-health practitioners by providing an efficient and interpretable decision-support tool, with the potential for extension to larger datasets and advanced learning models. Furthermore, it lays the foundation for integration into remote monitoring platforms, emotion-aware applications, and real-time affective computing systems.

1.3 Problem Statement

Current emotion recognition methods often lack accuracy, consistency, and real-time capability, as they rely heavily on subjective reporting or manual interpretation. These limitations can result in delayed or imprecise assessment of mental states. Although EEG signals provide an objective source for emotion analysis, effective and reliable extraction of emotional patterns remains challenging due to signal noise and variability.

Therefore, there is a need for an automated EEG-based emotion recognition system that can accurately and efficiently classify emotional states. This project aims to develop a machine learning–based framework to improve the speed, reliability, and precision of emotion detection for practical cognitive and mental health applications.

2. RELATED WORKS

Automated emotion recognition has received increasing research attention as mental health assessment moves toward objective, data-driven, and technology-assisted solutions. Early investigations into workplace stress and emotional well-being highlighted the limitations of observation-based and self-reported assessment methods, emphasizing the need for continuous and reliable monitoring systems. As awareness grew regarding the impact of psychological stress on productivity and long-term health, researchers began exploring machine learning–based models capable of identifying emotional imbalance with greater consistency using physiological signals.

Subsequent survey studies and global health reports emphasized the growing prevalence of mental health disorders and the importance of early detection through scalable and automated frameworks. Digital and e-health systems demonstrated the potential of computational approaches for emotional risk assessment and timely intervention; however, many of these systems struggled to capture real-time emotional transitions due to reliance on subjective inputs and infrequent clinical evaluations. This limitation strengthened the motivation for physiological signal–based emotion recognition, particularly using EEG, which provides continuous and objective neural measurements.

In parallel, research on emotion and stress detection expanded toward hybrid and multimodal frameworks that integrated IoT devices, behavioral cues, and machine learning models. While such systems achieved improved classification performance, they often required complex hardware setups, multimodal synchronization, and extensive calibration, limiting their practicality. Ensemble learning approaches further demonstrated that combining multiple models or feature representations could enhance robustness and stability, though increased system complexity remained a challenge.

Within this evolving landscape, EEG-based emotion recognition emerged as a promising and efficient alternative due to its ability to directly capture neural responses associated with emotional states. Early EEG-based studies primarily employed classical machine learning techniques such as Support Vector Machines and Random Forests using handcrafted statistical and frequency-domain features. Although these methods achieved reasonable performance, they were limited in handling complex nonlinear patterns, class imbalance, and cross-subject variability, and frequently lacked real-time interfaces and interpretability.

More recent research introduced deep learning models, particularly Convolutional Neural Networks, to automatically learn spatial and spectral representations from EEG data. Transfer learning using pretrained architectures further improved performance in scenarios with limited labeled data. Additionally, techniques such as SMOTE were proposed to address class imbalance, while explainable artificial intelligence methods, including SHAP, were incorporated to enhance transparency and trust in model predictions. Despite these advancements, many existing systems addressed these challenges in isolation rather than within a unified framework.

Building upon these research directions, the present work focuses on developing an integrated EEG-based emotion recognition system that combines classical machine learning, deep learning, model fusion, data balancing, and explainability within a real-time web-enabled platform. By consolidating these advancements into a single, interpretable, and deployable framework, the proposed approach aims to overcome key limitations identified in existing literature related to usability, robustness, and continuous emotional monitoring.

3. IMPLEMENTATION AND WORKING

3.1

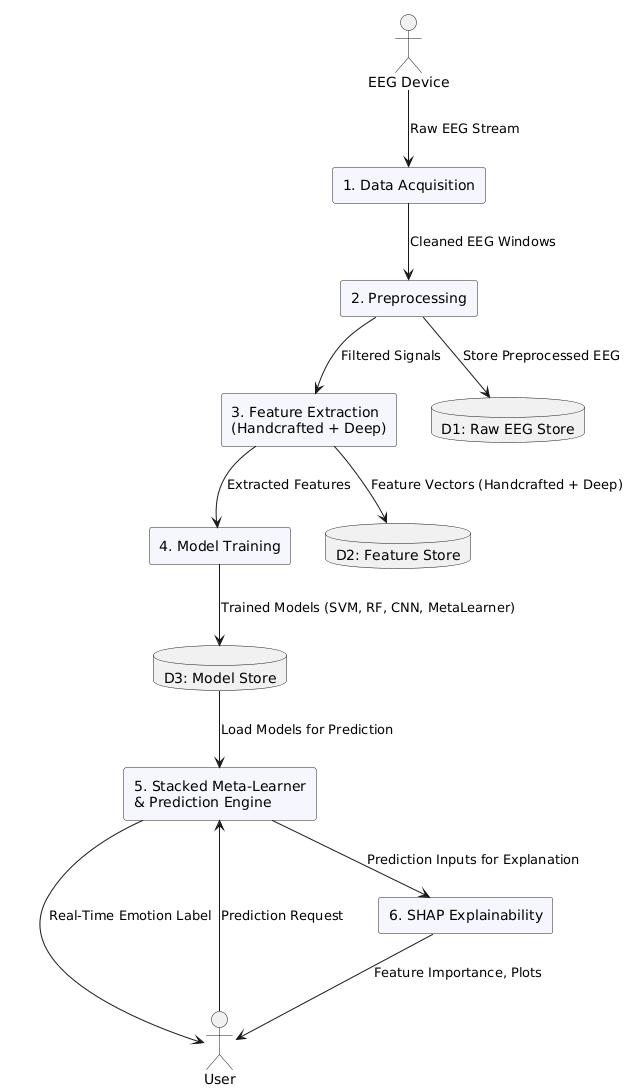

Data Collection

The system begins by collecting EEG recordings from publicly available datasets as well as controlled experimental sessions. These recordings correspond to multiple emotional states such as happiness, calmness, stress, and sadness. To improve generalization, the dataset includes signals from different subjects, recording environments, and noise conditions. This diversity enables the learning models to capture generalized neural patterns rather than overfitting to individual recordings, thereby improving robustness across users and experimental conditions.

3.2 Data Preprocessing

Before model training and inference, the EEG signals undergo a series of preprocessing steps to enhance signal quality and consistency. These steps include band-pass filtering to remove irrelevant frequency components, normalization of signal amplitudes, and elimination of corrupted or noisy segments. Artifact removal techniques are applied to reduce the effects of eye blinks and muscle movements. In addition, window-based segmentation with overlapping frames is used to augment the dataset and support real-time analysis of EEG activity under practical conditions.
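The filtering, normalization, and overlapping-window steps described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the 1–45 Hz pass band, 128 Hz sampling rate, 2-second window, 50% overlap, and the function names `bandpass`, `zscore`, and `sliding_windows` are all assumptions chosen for the example, and artifact removal (for instance for eye blinks) is omitted.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass(eeg, fs, low=1.0, high=45.0, order=4):
    """Zero-phase Butterworth band-pass along the last (time) axis."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


def zscore(eeg, eps=1e-8):
    """Normalize each channel to zero mean and unit variance."""
    mean = eeg.mean(axis=-1, keepdims=True)
    std = eeg.std(axis=-1, keepdims=True)
    return (eeg - mean) / (std + eps)


def sliding_windows(eeg, fs, win_sec=2.0, overlap=0.5):
    """Split (channels, samples) into (n_windows, channels, win_len) frames."""
    win_len = int(win_sec * fs)
    step = max(1, int(win_len * (1.0 - overlap)))
    starts = range(0, eeg.shape[-1] - win_len + 1, step)
    return np.stack([eeg[..., s:s + win_len] for s in starts])


# Synthetic 4-channel, 10-second recording standing in for real EEG (assumed data).
fs = 128
rng = np.random.default_rng(0)
raw = rng.standard_normal((4, 10 * fs))
segments = sliding_windows(zscore(bandpass(raw, fs)), fs)
print(segments.shape)  # (9, 4, 256)
```

With 50% overlap, each sample (apart from those near the edges) appears in two windows, which is how overlapping segmentation enlarges the training set without new recordings.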


3.3 Feature Extraction and Labeling

After preprocessing, meaningful features are extracted from the EEG segments to represent emotional characteristics. Statistical descriptors, Hjorth parameters, and frequency-band energies are computed to capture temporal and spectral information. In parallel, EEG segments may be transformed into representations suitable for deep learning models to enable automated feature learning. Each EEG segment is assigned an emotion label based on dataset annotations or experimental conditions, forming labeled feature sets that serve as ground truth for supervised learning.
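As an illustration of the handcrafted features named above, the sketch below computes the four statistical descriptors, the three Hjorth parameters, and the average power in five frequency bands for a single-channel window. The band edges in `BANDS`, the simple periodogram used for the power estimate, and all function names are assumptions for this example; the paper does not specify them.

```python
import numpy as np

# Conventional band edges in Hz; exact limits vary by study (assumed here).
BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 45)}


def statistical_features(x):
    """Mean, variance, skewness, and excess kurtosis of a 1-D signal."""
    mean, var = x.mean(), x.var()
    z = (x - mean) / np.sqrt(var)
    return {"mean": mean, "variance": var,
            "skewness": np.mean(z ** 3), "kurtosis": np.mean(z ** 4) - 3.0}


def hjorth_parameters(x):
    """Hjorth activity, mobility, and complexity (per-sample differences)."""
    dx = np.diff(x)
    ddx = np.diff(dx)
    activity = np.var(x)
    mobility = np.sqrt(np.var(dx) / activity)
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility
    return {"activity": activity, "mobility": mobility, "complexity": complexity}


def band_powers(x, fs):
    """Mean periodogram power inside each band of BANDS."""
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * x.size)
    return {name: psd[(freqs >= lo) & (freqs < hi)].mean()
            for name, (lo, hi) in BANDS.items()}


def extract_features(x, fs):
    """Concatenate all feature groups into one dictionary."""
    return {**statistical_features(x), **hjorth_parameters(x), **band_powers(x, fs)}


# A pure 10 Hz sine should put almost all of its power in the alpha band.
fs = 128
t = np.arange(2 * fs) / fs
window = np.sin(2 * np.pi * 10 * t)
feats = extract_features(window, fs)
print(max(BANDS, key=lambda b: feats[b]))  # alpha
```

In practice a smoothed spectral estimate such as Welch's method is often preferred over a raw periodogram for noisy EEG; the periodogram is used here only to keep the example dependency-light.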

3.4 Machine Learning Classification Module

The core emotion classification module employs multiple learning algorithms to analyze extracted EEG features. Classical machine learning models such as Support Vector Machines and Random Forest classifiers are used to model structured decision boundaries, while deep learning models based on Convolutional Neural Networks learn complex spatial and spectral patterns. To improve prediction reliability, outputs from multiple classifiers are combined using a stacked meta-learning approach, enabling more accurate and stable emotion classification across diverse emotional states.
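The stacking idea can be sketched with scikit-learn's `StackingClassifier`. This is a simplified stand-in under stated assumptions: the features are synthetic, only SVM and Random Forest serve as base learners (the CNN branch is left out), and Logistic Regression alone acts as the meta-learner (the XGBoost meta-learner mentioned in the abstract is omitted to avoid an extra dependency). Class weighting is shown through `class_weight="balanced"`; SMOTE is not included.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for EEG feature vectors with 4 emotion classes (assumed data).
X, y = make_classification(n_samples=600, n_features=12, n_informative=8,
                           n_classes=4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

# Base learners: their out-of-fold class probabilities feed the meta-learner.
base_learners = [
    ("svm", make_pipeline(
        StandardScaler(),
        SVC(probability=True, class_weight="balanced", random_state=0))),
    ("rf", RandomForestClassifier(
        n_estimators=100, class_weight="balanced", random_state=0)),
]

stack = StackingClassifier(
    estimators=base_learners,
    final_estimator=LogisticRegression(max_iter=1000),
    stack_method="predict_proba",
    cv=5,  # internal cross-validation keeps the meta-learner from seeing leaked fits
)
stack.fit(X_train, y_train)

proba = stack.predict_proba(X_test)
print(proba.shape)  # (150, 4)
print("held-out accuracy:", round(stack.score(X_test, y_test), 3))
```

Training the meta-learner on cross-validated base predictions, rather than on predictions for data the base models have already fit, is what separates stacking from simply averaging model outputs.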

3.5 Visualization Module

While classification models generate emotion predictions, visualization plays a crucial role in interpretability and user understanding. The visualization module produces EEG waveform plots, frequency-band power graphs, and emotion probability distributions. These visual representations help users observe temporal variations in brain activity and understand how specific signal characteristics contribute to emotion predictions, thereby supporting validation and interpretability of system behavior.

3.6 Combined Processing and Web Development

In practical operation, all system components are integrated through a Flask-based web interface. Users upload EEG files, which are automatically preprocessed and converted into feature segments. The classification pipeline evaluates these segments and predicts the dominant emotional state, while the visualization module simultaneously generates graphical outputs. The final results are displayed in a unified interface, combining automated prediction with intuitive visualization to ensure accurate analysis, reduced misinterpretation, and enhanced usability for research and mental-health applications.
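The upload-and-predict flow described above could be wired up roughly as follows. This is a hypothetical sketch, not the deployed application: the `/predict` route, the `eeg` form field, the CSV input format, the `EMOTIONS` label list, and the placeholder `predict_emotion` function (which returns uniform probabilities instead of calling a trained model) are all assumptions made for illustration.

```python
import io

import numpy as np
from flask import Flask, jsonify, request

app = Flask(__name__)
EMOTIONS = ["happy", "calm", "stressed", "sad"]  # illustrative label set


def predict_emotion(eeg):
    """Placeholder for the real pipeline: returns uniform class probabilities."""
    return np.full(len(EMOTIONS), 1.0 / len(EMOTIONS))


@app.route("/predict", methods=["POST"])
def predict():
    upload = request.files.get("eeg")
    if upload is None:
        return jsonify(error="missing 'eeg' file field"), 400
    try:
        text = upload.read().decode("utf-8")
        eeg = np.loadtxt(io.StringIO(text), delimiter=",", ndmin=2)
    except (ValueError, UnicodeDecodeError):
        return jsonify(error="expected a numeric CSV file"), 400
    probs = predict_emotion(eeg)
    return jsonify(
        emotion=EMOTIONS[int(np.argmax(probs))],
        probabilities=dict(zip(EMOTIONS, probs.tolist())),
        shape=list(eeg.shape),
    )


# Exercise the route in-process with Flask's test client instead of a live server.
client = app.test_client()
csv_bytes = b"0.1,0.2,0.3\n0.4,0.5,0.6\n"
resp = client.post(
    "/predict",
    data={"eeg": (io.BytesIO(csv_bytes), "sample.csv")},
    content_type="multipart/form-data",
)
print(resp.status_code, resp.get_json()["shape"])  # 200 [2, 3]
```

In a fuller version, `predict_emotion` would run the preprocessing, feature extraction, and stacked classifier on the uploaded signal, and the response would also carry the data needed for the plots.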

3.7 Evaluation and Validation

System performance is evaluated using standard classification metrics such as accuracy, precision, recall, and F1-score. Confusion matrices are used to analyze misclassification patterns across emotional classes. To ensure reliability and generalization, testing is performed on unseen EEG data, and cross-validation techniques are applied across multiple subjects and recording conditions. These evaluation procedures verify the robustness, consistency, and practical applicability of the proposed framework.
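One way to realize subject-wise cross-validation with these metrics is scikit-learn's `GroupKFold`, which keeps all windows from a given subject in the same fold. The sketch below runs on synthetic data; the number of subjects, the class structure, and the choice of a Random Forest are assumptions for illustration and do not reproduce the paper's experiments.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import GroupKFold, cross_val_predict

rng = np.random.default_rng(0)
n_subjects, per_subject, n_classes = 6, 40, 4

# Labels cycle through the classes; each subject contributes 10 windows per class.
y = np.tile(np.arange(n_classes), n_subjects * per_subject // n_classes)
groups = np.repeat(np.arange(n_subjects), per_subject)
# Synthetic features: a class-dependent offset plus Gaussian noise (assumed data).
X = rng.standard_normal((y.size, 12)) + y[:, None]

clf = RandomForestClassifier(n_estimators=100, random_state=0)
# Every prediction comes from a model that never saw that subject during training.
y_pred = cross_val_predict(clf, X, y, groups=groups, cv=GroupKFold(n_splits=3))

cm = confusion_matrix(y, y_pred, labels=np.arange(n_classes))
print(cm)  # rows: true class, columns: predicted class
print(classification_report(y, y_pred, digits=3))
```

Splitting by subject rather than by window matters because overlapping windows from one recording are highly correlated; a random split would let near-duplicates of test windows into the training set and inflate the scores.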

3.8 Real-Time Working

During deployment, the system processes EEG data immediately after upload or acquisition. Preprocessing, feature extraction, and classification are executed in a streamlined pipeline, enabling near-instant emotion prediction. This real-time capability is particularly useful for live monitoring and experimental sessions, allowing researchers and clinicians to obtain automated emotional assessments with minimal delay through the interactive web interface.

3.9 Output Generation

The final output consists of both numerical and visual summaries of the detected emotional state. This includes EEG waveforms, extracted feature representations, and probability distributions indicating the likelihood of each emotion. These outputs provide clear insight into how emotional states are inferred from neural signals and support faster understanding, improved interpretation, and informed decision-making in mental-health assessment, stress analysis, and cognitive research.


4. FUTURE SCOPE

The proposed EEG-based emotion recognition system offers several opportunities for further enhancement and real-world deployment. One important direction involves extending the framework to multimodal emotion recognition by integrating EEG data with additional physiological and behavioral signals such as ECG, GSR, facial expressions, or speech, which can improve emotional understanding in complex scenarios.

Future work may also focus on developing personalized emotion models that adapt to individual users, addressing cross-subject variability in EEG signals. The deployment of lightweight and optimized deep learning models on mobile, wearable, and edge devices can further support continuous real-time monitoring in daily-life and clinical environments.

Additional extensions include expanding the range of detectable emotional and cognitive states, incorporating advanced explainability techniques beyond SHAP, and enabling long-term emotional trend analysis for preventive mental health care. Ensuring ethical compliance, data privacy, fairness, and security will remain essential as the system evolves toward broader adoption. With further validation through large-scale studies and interdisciplinary collaboration, the proposed framework has the potential to support next-generation intelligent and trustworthy emotion-aware systems.

Further research can explore the integration of domain adaptation and transfer learning strategies to improve cross-subject and cross-dataset generalization, reducing the need for subject-specific calibration. This would enhance the scalability of EEG-based emotion recognition systems in real-world deployments where user variability is significant.

Another promising direction involves incorporating temporal modeling techniques, such as recurrent neural networks or attention-based architectures, to better capture dynamic emotional transitions over time. Modeling temporal dependencies in EEG signals can improve recognition accuracy for rapidly changing or mixed emotional states, particularly in real-time monitoring scenarios.

The system can also be extended toward cloud-enabled and distributed architectures that support large-scale data collection, remote emotion monitoring, and centralized analytics. Integration with telemedicine platforms and mental health support systems would enable continuous emotional assessment and timely intervention, especially in remote or underserved regions.

In addition, future work may investigate adaptive feedback mechanisms that respond to detected emotional states in real time. Such mechanisms could enable emotion-aware human–computer interaction, neurofeedback applications, and assistive technologies that dynamically adjust system behavior based on the user’s emotional condition.

Finally, rigorous clinical validation, longitudinal studies, and collaboration with psychologists and neuroscientists will be essential to establish the clinical relevance and long-term reliability of EEG-based emotion recognition systems. These efforts can facilitate regulatory approval and promote responsible adoption of emotion-aware technologies in healthcare, education, and workplace well-being applications.


5. CONCLUSION

This project successfully designed and implemented an intelligent EEG-based emotion recognition system capable of accurate and real-time emotion detection. By leveraging EEG signals, the proposed system overcomes key limitations of traditional emotion recognition approaches that rely on facial expressions, speech, or text-based cues, which are often subjective and susceptible to external influences.

The integration of classical machine learning models such as Support Vector Machines and Random Forests with deep learning techniques, including Convolutional Neural Networks and transfer learning, enabled robust feature extraction and reliable emotion classification. The stacked meta-learning framework further enhanced prediction accuracy and generalization by effectively combining multiple model outputs. In addition, the use of SMOTE and class-weighted learning addressed data imbalance, while SHAP-based explainability improved transparency and trust in model decisions.

Experimental evaluation demonstrates that the proposed framework is effective, reliable, and suitable for real-time operation. The system’s ability to provide interpretable emotional insights through a user-friendly web interface makes it applicable to domains such as mental health monitoring, adaptive learning systems, human–computer interaction, and smart environments. Overall, this work contributes a scalable and interpretable EEG-based emotion recognition solution and establishes a strong foundation for future research in affective computing.

6. ACKNOWLEDGEMENT

I extend my sincere gratitude to Professor Subhasish Tripathy (Principal), Dr. Thriveni J (HOD, CSE Department), Dr. Kumar Swamy S (Associate Professor and Project Guide), and the teaching and non-teaching staff of University of Visvesvaraya College of Engineering, Bengaluru-560001.
