International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 06 | Jun 2025 www.irjet.net p-ISSN: 2395-0072


Namrata Deshmukh¹, Anuradha Purohit²

¹M Tech Scholar, Dept. of Computer Engineering, SGSITS, M.P., India

²Professor, Dept. of Computer Engineering, SGSITS, M.P., India

Abstract - Object detection under unfavorable weather conditions, particularly in foggy environments, presents significant challenges due to reduced visibility and image degradation. Fog and clouds frequently hide objects in imagery captured by drones, especially in the early morning, making accurate detection difficult. Despite rapid advances in object detection algorithms across domains including autonomous vehicles, healthcare, and surveillance, achieving high performance in foggy conditions remains a complex task. This paper presents a survey of object detection techniques for foggy images, systematically investigating the workflow and covering critical stages such as data collection, pre-processing, image enhancement, feature extraction, object detection, and evaluation. Special emphasis is placed on advanced image enhancement and feature extraction techniques to improve object visibility. Furthermore, a comparative analysis of different methodologies, datasets, and performance metrics is presented through detailed tables and visualizations. This research not only highlights current challenges but also identifies potential directions for future advancements in object detection under foggy conditions, providing valuable insights for researchers and practitioners alike.

Key Words: Object Detection, Foggy Images, Image Enhancement, Feature Extraction, Deep Learning, CNN.

Object detection plays a pivotal role in a wide range of computer vision applications. It involves correctly categorizing and locating objects in a given image using rectangular bounding boxes [1]. It finds broad applicability across domains such as autonomous vehicles, surveillance systems, aviation safety, industrial automation, robotics, and other autonomous technologies. Object detection approaches are changing quickly with advances in convolutional neural networks, deep learning algorithms, and GPU computing power [2]. While standard models can identify objects effectively, doing so in foggy environments presents significant challenges [3]. Adverse weather conditions such as rain, fog, snow, and cloud degrade the functioning of camera sensors and reduce visibility. Tasks such as classification, detection, and segmentation work well in daylight because of high visibility, but their performance gradually decreases under adverse weather, and real-world scenarios often involve rapid weather changes [4]. In foggy weather, airborne particles can reduce visual quality by concealing details, decreasing contrast, and producing color distortion. This poses problems for object detection algorithms and highlights the need for further investigation and solutions. Fog affects visibility and image quality, making images hazy, dull, and obscured, which reduces the accuracy and efficiency of object detection models [5], [6]. Generally, to identify objects in hazy weather, dehazing methods and object detectors are combined [7].

Researchers working in this domain have come up with various innovative detection methods, which have in turn raised new problems. In this survey paper, we try to answer the call for more robust object detection in these environments. While general object detection has been studied extensively, the specific challenge of adverse conditions such as fog is less well studied [4]. This paper describes the full flow of object detection in foggy images, from pre-processing and image enhancement to feature extraction and the detection model. It extends previous work by providing a detailed account of the work done on object detection, especially in foggy images [7].

This study’s main contributions include the following:

1) Pre-processing and improvement approaches, including an overview, algorithm descriptions, and practical implementation under hazy conditions.

2) In-depth analysis of image enhancement and feature extraction techniques and their adaptations for foggy environments.

3) A list of areas for improvement, with possible research directions to inspire other studies.

This survey aims to be a fundamental reference for researchers, students, and practitioners by providing an extensive roadmap of well-established methods and highlighting potential opportunities for innovation. This work aims to foster the development of effective object identification algorithms for adverse weather, opening up new opportunities for innovation in the space. In this survey paper, we studied and analyzed research papers published between 2006 and 2024 by reputable publishers such as IEEE, ACM, and Springer, and in other renowned journals and conferences. The remainder of this paper is organized as follows: Section II reviews the background and related work; Section III outlines the challenges posed by fog; Section IV describes the datasets used; Section V details the proposed methodology; Section VI defines the evaluation criteria; Section VII explores real-world applications; Section VIII discusses future research directions; and Section IX concludes the paper.

Object detection in foggy images has received much attention from researchers. The main challenge posed by foggy weather is a reduction in image quality: contrast and visibility drop, and color aberration appears due to the scattering and attenuation of sunlight. These issues have been addressed by applying specific pre-processing and augmentation methods to images before they are passed to object detection algorithms. This section reviews some of the most significant contributions of researchers and discusses their methodology.

Nguyen et al. [6] address the scarcity of benchmark datasets for aerial object detection in fog by presenting Foggy-DOTA, a synthetic fog-augmented version of the DOTA dataset. The authors use the imgaug library to create realistic fog overlays and conduct a thorough evaluation of cutting-edge oriented object detection models (S2ANet, ReDet, RoI Transformer) in foggy situations. When models trained on clear photos are tested on Foggy-DOTA, the results reveal considerable mAP loss, highlighting the difficulty of, and the need for, domain adaptation in foggy aerial scenes.

Hu et al. [8] introduce HLNet, a novel multi-task learning framework that jointly optimizes image dehazing and object detection to increase performance in foggy conditions. Instead of typical pixel-wise dehazing, HLNet employs feature-level restoration to reduce the deleterious effects of Batch Normalization on low-level feature learning. The architecture includes a shared ResNet-based encoder and a dehazing decoder for fast end-to-end training. The model significantly improves mean Average Precision (mAP) across several detection backbones (RetinaNet, YOLOv3, and YOLOv5s) while eliminating the additional inference time generally associated with pre-processing steps.

Hahner et al. [9] propose Foggy Synscapes, a fully synthetic dataset that includes 25,000 photo-realistic foggy driving scenes created with physically-based rendering and correct depth maps. When tested against real fog benchmarks, the authors show that models trained purely on synthetic fog data outperform those trained on partially synthetic datasets (such as Foggy Cityscapes). They further demonstrate that integrating both datasets results in the best semantic segmentation performance, emphasizing the complementary merits of purely synthetic and real-domain adaptive data for fog scene interpretation.

T. Singh et al. [10] focus on improving foggy driving videos and recognizing nearby objects using traditional image processing methods. The suggested approach improves contrast through adaptive histogram equalization and uses the extended maximum transform to highlight and identify possible objects of interest. In addition, an extraneous feature removal algorithm eliminates irrelevant items such as road markers and small debris. The technique supports real-time driving assistance by increasing visual clarity and enabling automated alerts, particularly in low-contrast foggy settings.

Shit et al. [11] propose a transformer-based architecture for real-time object recognition in deep fog, which combines an encoder-decoder transformer pipeline with a High Resolution Network (HRNet) backbone to extract rich spatial features. The method tries to overcome the constraints of existing detectors that rely on anchor-based post-processing and non-maximal suppression by allowing direct set-based prediction via a bipartite matching loss. Comparative evaluations against Mask R-CNN on the Foggy Cityscapes dataset show that transformer attention methods perform better in fog-heavy settings, indicating their robustness in low-visibility conditions.

Fog is composed of minuscule water droplets in the air that scatter light and cause a form of visual obstruction. The major impacts of fog on image quality are:

1) Reduced Contrast and Brightness: Fog lowers contrast and reduces the brightness of objects, making it hard for ordinary detection models to distinguish them from the background [8], [13], [37].

2) Noise Introduction and Edge Blurring: Fog introduces noise and blurs edges, causing false detections and many spurious features [7], [11], [14].

3) Variability in Fog Density: The density of fog determines the visibility level. It ranges from light fog, in which visibility is only slightly degraded, to dense fog, which can prevent accurate detection entirely [4], [56].

4) Limited Real-World Datasets: Although artificial datasets like Foggy Cityscapes and Foggy-DOTA offer some useful insights, the scarcity of large-scale annotated real-world datasets of foggy scenes remains a major bottleneck [6].

5) Computational Complexity: GAN-based detectors [44] and transformer-type architectures [45] impose heavy computational requirements and therefore cannot easily be deployed in low-resource environments.

These issues require advanced pre-processing methodologies and powerful object detection networks that account for low-visibility conditions. This survey seeks to provide a complete examination of different strategies, emphasizing their advantages and disadvantages.


Object detection begins by collecting a representative set of images with objects of interest in the scene. In the context of foggy image detection, the dataset needs to comprise both foggy and non-foggy images in order to train robust models. As foggy datasets are limited, synthetic approaches for generating fog can be applied to existing datasets to produce foggy images.

This section covers the most commonly utilized datasets for foggy object detection and their properties. Table -1 summarizes the datasets used for object detection in foggy conditions.

1) Foggy Cityscape Dataset: A dataset for scene understanding in foggy urban environments. It contains labeled urban images with simulated real-world fog conditions and object classes such as cars, pedestrians, and traffic signs [57]. The fog density is categorized into three levels: Small Fog, Medium Fog, and Large Fog, as shown in Fig -2 and Fig -3.

2) Foggy Driving Dataset: A dataset for object detection under foggy driving conditions, with a focus on vehicles, road markings, and other objects relevant to driving [58].

3) Synthetic Foggy Dataset: A synthetically generated dataset with variable fog conditions and object types, providing controlled scenarios for experimentation [59].

4) Real-World Foggy Dataset: Real images of scenes under foggy conditions, collected from various sources and considered challenging for object detection tasks [60].

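Table -1: Datasets used for object detection in foggy conditions

| Dataset Name | Images | Object Classes | Train | Valid | Test |
|---|---|---|---|---|---|
| Foggy Cityscape Dataset [57] | | | | | |
| Foggy Driving Dataset [58] | | | | | |
| Synthetic Foggy Dataset [59] | | | | | |
| Real-World Foggy Dataset [60] | | | | | |

0 20

20,00 0 10,20 0

5. METHODOLOGY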

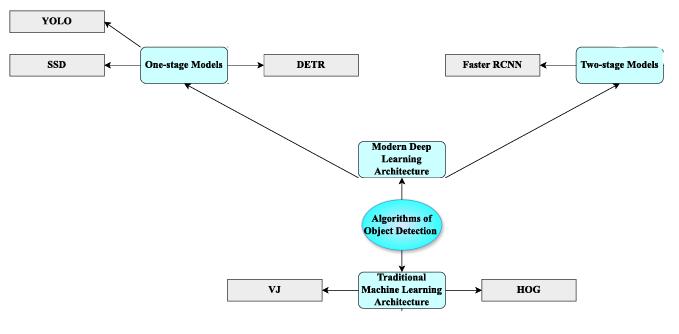

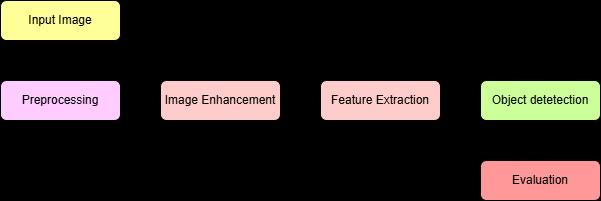

Object detection in foggy conditions is a sequence of critical steps taken to reduce the challenges caused by low visibility, low contrast, and image distortion. Object detection algorithms are broadly classified into traditional machine learning and newer deep learning architectures, as shown in Fig -1. The steps are broadly categorized as data collection, preprocessing, image enhancement, feature extraction, and object detection models, as shown in Fig -4.

1) Preprocessing: Preprocessing is the initial step in analyzing foggy images. It includes resizing, normalization, and noise reduction. For foggy images specifically, techniques such as dehazing algorithms are used to reduce the impact of fog and enhance the visibility and clarity of the image.

2) Image Enhancement: Image enhancement aims to improve the quality of foggy images so that the objects in them are more identifiable. Techniques applied to increase visibility and detail include contrast enhancement, fog removal algorithms, and brightness adjustment.


3) Feature Extraction: Feature extraction recognizes and gathers information from images. Here the key features of objects, such as edges, textures, or shapes, are detected.

4) Object Detection Models: An object detection model evaluates features from images to identify objects. For foggy images, the model is trained to address the challenges caused by fog, so that objects are accurately identified even in such adverse conditions.

The work done by various researchers to improve the performance of object detection under hazy conditions is discussed in the following sections.

5.1 Preprocessing

Preprocessing is the most fundamental step in object detection for foggy images. This section covers several preprocessing procedures, including traditional and modern methods. Preprocessing prepares input images for the subsequent stages of enhancement, feature extraction, and object detection.

5.1.1 Traditional Preprocessing Techniques:

i. Image Resizing and Scaling: Resizing and scaling change the size of the images to fit the input requirements of object detection models. This ensures that all data is treated equally and that differing image sizes do not introduce discrepancies [33]. Some common resizing techniques include:

Nearest Neighbor Interpolation: Assigns the nearest pixel value to the new grid.

Bilinear Interpolation: Computes the pixel value as a weighted average of surrounding pixels.

ii. Normalization and Standardization: Normalization maps pixel intensity values to a fixed range, such as [0, 1]. Standardization rescales the data so that it has zero mean and unit variance. This helps to stabilize and enhance the performance of deep learning models [16]. The formula for normalization is as follows:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{1}$$

and for standardization as follows:

$$x' = \frac{x - \mu}{\sigma} \tag{2}$$

where μ is the mean and σ is the standard deviation.

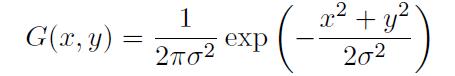

iii. Noise Reduction: Noise caused by environmental conditions is a common issue in foggy images. Gaussian and median filtering are used for noise reduction to smooth the image while keeping important details intact [17]. The formula for the Gaussian filter is:

$$G(x, y) = \frac{1}{2\pi\sigma^2} \exp\left(-\frac{x^2 + y^2}{2\sigma^2}\right) \tag{3}$$

where,

G(x, y) is the Gaussian function at position (x, y),

σ is the standard deviation of the Gaussian distribution,

x and y are the horizontal and vertical distances from the center of the kernel,

and for the median filter is:

$$I_{out}(x, y) = \operatorname{median}\{\, I(x+i, y+j) : -k \le i, j \le k \,\} \tag{4}$$

where,

I_out is the filtered output image.

I(x+i, y+j) is the value of the input image at position (x+i, y+j).

The median operation is applied to the set of pixel values within the window defined by the kernel size, 2k+1.

iv. Contrast and Brightness Adjustment: Contrast and brightness adjustment improve the visibility of the image under foggy conditions [21]. Contrast stretching maps pixel values from the input range to a wider output range, enhancing details in low-visibility areas.
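The traditional preprocessing steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not an implementation from any of the cited works: the function names, the edge-replication border handling, and the default output range of 0 to 255 are assumptions made for the example.

```python
import numpy as np

def min_max_normalize(img):
    """Map pixel intensities to [0, 1]: x' = (x - x_min) / (x_max - x_min)."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:                      # constant image: avoid division by zero
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)

def standardize(img):
    """Rescale to zero mean and unit variance: x' = (x - mu) / sigma."""
    img = img.astype(np.float64)
    sigma = img.std()
    if sigma == 0:
        return np.zeros_like(img)
    return (img - img.mean()) / sigma

def gaussian_kernel(k, sigma):
    """(2k+1) x (2k+1) Gaussian kernel, normalized so its weights sum to 1."""
    ax = np.arange(-k, k + 1)
    xx, yy = np.meshgrid(ax, ax)
    g = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()

def median_filter(img, k=1):
    """Median over a (2k+1) x (2k+1) window; borders use edge replication (assumed)."""
    padded = np.pad(img, k, mode="edge")
    h, w = img.shape
    windows = [padded[i:i + h, j:j + w]
               for i in range(2 * k + 1) for j in range(2 * k + 1)]
    return np.median(np.stack(windows, axis=0), axis=0)

def contrast_stretch(img, out_min=0.0, out_max=255.0):
    """Linearly map the input intensity range onto [out_min, out_max]."""
    return min_max_normalize(img) * (out_max - out_min) + out_min
```

In practice these steps would typically be delegated to an image processing library; the sketch only makes the arithmetic behind each step explicit.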

i. Deep Image Prior: Deep Image Prior (DIP) is a self-supervised approach that uses convolutional neural networks (CNNs) to restore images without external training data. Instead of using a pre-trained model, DIP optimizes a randomly initialized CNN to learn an image-specific prior and reconstruct the target image [19].

The optimization technique is as follows: (5)

where,

A(x) represents the degraded image,

y is the observed input,

R(x) is a regularization term that helps remove noise and artifacts.

DIP has been used effectively for image denoising, super-resolution, and fog removal, as it can learn the underlying structure of an image without requiring ground-truth data.

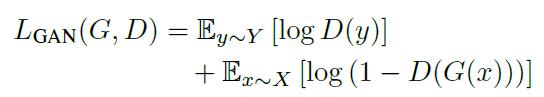

ii. GAN for image restoration: Generative Adversarial Networks (GANs) have proven effective in image restoration tasks such as fog removal, deblurring, and super-resolution [20]. A GAN comprises two networks:

Generator G: Takes the degraded image and generates a restored version.

Discriminator D: Evaluates whether an image is real (clear) or generated (restored).

The objective function for a GAN-based restoration model is formulated as:

$$\min_G \max_D \; \mathbb{E}_{y}\left[\log D(y)\right] + \mathbb{E}_{x}\left[\log\left(1 - D(G(x))\right)\right] \tag{6}$$

where G(x) represents the generated clear image from foggy input x, y denotes a real clear image, and D aims to distinguish real clear images from generated ones.

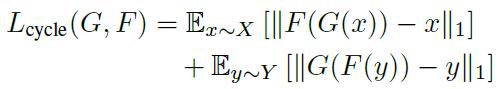

Furthermore, a cycle-consistency loss is frequently utilized to verify that a restored image mapped back to the hazy domain is consistent:

(7)

The preprocessing techniques ensure images are best prepared for the subsequent steps of the workflow. The choice of technique depends on the application’s requirements and the quality of the input data. It is sometimes possible to obtain better results by combining traditional and advanced techniques, especially in complex cases such as foggy conditions.

Image enhancement improves the clarity and quality of foggy images. These techniques try to recover the details obscured by fog and improve contrast and brightness for better object detection performance. This section covers different enhancement methods, including traditional approaches and advanced techniques that researchers have proposed for foggy image detection.

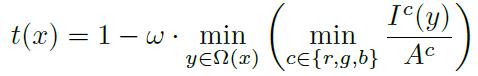

i. Dark Channel Prior: Dark Channel Prior (DCP) is a popular dehazing technique that exploits the fact that, in most natural images, pixels have low intensity in at least one colour channel [21].

The transmission map is estimated using:

$$t(x) = 1 - \omega \min_{y \in \Omega(x)} \left( \min_{c} \frac{I^{c}(y)}{A^{c}} \right) \tag{8}$$

where

t(x) represents the transmission map,

I^c(y) is the intensity of pixel y in channel c,

A^c is the global atmospheric light,

Ω(x) is a local patch centered at x,

ω is a parameter controlling the amount of haze removed.

DCP effectively reduces haze and improves image contrast, making it well suited to outdoor surveillance, autonomous driving, and object detection in harsh weather situations. However, it may introduce halo artifacts, necessitating additional post-processing for refinement.

ii. Retinex Based Method: Retinex-based methods enhance images using a model of the human visual system, separating the illumination falling on objects from their reflectance [22]. The Single-Scale Retinex (SSR) model can be expressed as follows:

$$R(x, y) = \log I(x, y) - \log\left[ G(x, y) * I(x, y) \right] \tag{9}$$

where,

R(x, y) is the reflectance,

I(x, y) is the intensity input,

G(x, y) is a Gaussian kernel,

∗ denotes convolution.

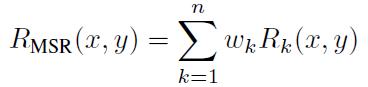

Multi-scale variants such as Multi-Scale Retinex (MSR) combine outputs from several scales for better results:

$$R_{MSR}(x, y) = \sum_{k} w_k \left\{ \log I(x, y) - \log\left[ G_k(x, y) * I(x, y) \right] \right\} \tag{10}$$

where w_k are the weights for different scales and G_k is the Gaussian kernel at scale k.

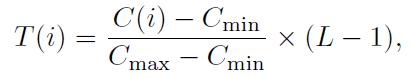

iii. CLAHE: Contrast Limited Adaptive Histogram Equalization (CLAHE) is an advanced version of histogram equalization designed to improve image contrast without over-amplifying noise. It works by applying histogram equalization to small image regions (tiles) while limiting contrast amplification using a clip limit [23]. The transformation function is given by:

$$T(i) = (L - 1)\, C(i) \tag{11}$$

where,

C(i) is the cumulative distribution function (CDF),

L represents the number of intensity levels.

CLAHE enhances local contrast, making it useful for medical imaging, satellite image processing, and foggy image enhancement. However, improper parameter tuning may lead to unnatural image appearances.
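As a rough illustration of two of the traditional methods in this subsection, the following NumPy sketch estimates a Dark Channel Prior transmission map as 1 minus ω times the patch-wise and channel-wise minimum of the image divided by the atmospheric light, and computes a Single-Scale Retinex output as the log of the input minus the log of its Gaussian-blurred version. The function names, the patch radius, the default ω = 0.95, the blur parameters, and the assumption that the atmospheric light A is supplied by the caller are illustrative choices and are not taken from [21] or [22].

```python
import numpy as np

def _windows(channel, radius):
    """Stack all shifted views of a 2-D array over a (2r+1) x (2r+1) window."""
    padded = np.pad(channel, radius, mode="edge")   # edge replication (assumed)
    h, w = channel.shape
    size = 2 * radius + 1
    return np.stack([padded[i:i + h, j:j + w]
                     for i in range(size) for j in range(size)], axis=0)

def dark_channel(img, radius=1):
    """Minimum over color channels, then minimum over a local patch."""
    return _windows(img.min(axis=2), radius).min(axis=0)

def estimate_transmission(img, A, omega=0.95, radius=1):
    """t(x) = 1 - omega * min over patch and channels of I^c(y) / A^c."""
    normalized = img / np.asarray(A, dtype=np.float64).reshape(1, 1, 3)
    return 1.0 - omega * dark_channel(normalized, radius)

def gaussian_blur(img, sigma=2.0, radius=4):
    """Blur a 2-D image with a normalized Gaussian kernel."""
    ax = np.arange(-radius, radius + 1)
    xx, yy = np.meshgrid(ax, ax)
    kernel = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    stacked = _windows(img.astype(np.float64), radius)
    # Weighted sum of the shifted views; the kernel is symmetric, so
    # correlation and convolution coincide here.
    return np.tensordot(kernel.ravel(), stacked, axes=(0, 0))

def single_scale_retinex(img, sigma=2.0, radius=4, eps=1e-6):
    """R = log(I) - log(G * I); eps avoids log(0) and is an assumption."""
    img = img.astype(np.float64)
    return np.log(img + eps) - np.log(gaussian_blur(img, sigma, radius) + eps)
```

A full DCP pipeline would also estimate A from the image and refine the transmission map to suppress halo artifacts; both steps are omitted here to keep the sketch short.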

5.2.2 Advanced Image Enhancement Techniques:

i. DehazeNet: DehazeNet is designed for single-image dehazing and uses a convolutional neural network to learn a mapping from hazy images to transmission maps. The network is trained end-to-end to estimate the transmission map, which is then used along with the atmospheric scattering model to recover a clear image [24].

The atmospheric scattering model is presented as follows:

$$I(x) = J(x)\,t(x) + A\,\left(1 - t(x)\right) \tag{12}$$

where,

I(x) is the observed hazy image,

J(x) is the scene radiance (clear image),

t(x) is the transmission map,

A is the global atmospheric light.

DehazeNet learns to estimate t(x) by optimizing the loss function: (13)

where

t_pred(x) is the predicted transmission map,

t_gt(x) is the ground truth.

ii. AOD-Net: The All-in-One Dehazing Network (AOD-Net) produces dehazed images without separately estimating transmission maps or atmospheric light. AOD-Net’s integration with the atmospheric scattering model simplifies dehazing and enables real-time performance [25].

The AOD-Net transmission model is shown below: (14)

where,

J(x) represents the dehazed image,

I(x) represents the observed hazy image,

A represents the global atmospheric light, and

β and γ are learnable parameters.

AOD-Net is trained with a reconstruction loss function that minimizes the difference between predicted and actual clear images,

where,

J_pred(x) is the predicted clear image,

J_gt(x) is the ground truth.

iii. CycleGAN for fog removal: For fog removal, Cycle-Consistent Generative Adversarial Networks (CycleGAN) are often used for unpaired image-to-image translation. CycleGAN utilizes unpaired datasets to enhance image clarity [26].

A cycle-consistency constraint ensures that a foggy image processed by the generator G and then mapped back by the inverse generator F remains unchanged, enforcing consistency between the two domains.
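The atmospheric scattering model that underlies the learning-based dehazing methods in this subsection, I = J·t + A·(1 − t), can be exercised directly. The sketch below synthesizes fog on a clear image and then inverts the model given the transmission map. The relation t = exp(−β·depth), the values of β and A, the lower bound t_min, and all function names are assumptions introduced for illustration; they are not taken from [24], [25], or [26].

```python
import numpy as np

def add_fog(clear, depth, beta=1.0, A=0.9):
    """Apply I = J * t + A * (1 - t), with t = exp(-beta * depth) (assumed)."""
    t = np.exp(-beta * depth)
    foggy = clear * t[..., None] + A * (1.0 - t[..., None])
    return foggy, t

def remove_fog(foggy, t, A=0.9, t_min=0.1):
    """Invert the model: J = (I - A) / max(t, t_min) + A."""
    t = np.maximum(t, t_min)[..., None]   # lower bound avoids amplifying noise
    return np.clip((foggy - A) / t + A, 0.0, 1.0)

# Hypothetical usage on random data standing in for an RGB image and a depth map
rng = np.random.default_rng(0)
clear = rng.random((6, 6, 3))
depth = rng.random((6, 6)) * 1.5
foggy, t = add_fog(clear, depth)
restored = remove_fog(foggy, t)
```

When the true transmission map is known, the inversion recovers the clear image almost exactly. The networks discussed above address the harder case in which t(x) and A must be estimated from the hazy image alone.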

Table -2 compares the image enhancement techniques discussed above.

Table -2: Comparison of Image Enhancement Techniques

| Technique | Advantages | Limitations | Application Area |
|---|---|---|---|
| Dark Channel Prior [21] | Effective haze removal, contrast enhancement | Prone to halo artifacts, requires refinement | Outdoor surveillance, autonomous driving |
| Retinex Based Method [22] | Improves visibility in low-light conditions | May introduce noise and unnatural colors | Image enhancement, medical imaging |
| CLAHE [23] | Enhances local contrast, prevents over-amplification | May cause unnatural appearance if not tuned properly | Medical imaging, satellite image processing |
| DehazeNet [24] | Data-driven, learns haze patterns for restoration | Requires large labeled datasets, high computation | Haze removal, real-time vision systems |
| AOD-Net [25] | End-to-end trainable, efficient for single-image dehazing | May struggle with extreme haze conditions | Object detection, autonomous driving |
| CycleGAN [26] | Unsupervised, learns mappings between foggy and clear images | Computationally expensive, large dataset required | Image-to-image translation, fog removal |

5.3 Feature Extraction

In object detection, feature extraction is critical: it consists of extracting relevant information from images for use in downstream tasks. For foggy images, robust feature extraction methods become essential in mitigating problems caused by reduced visibility and noise.

This section deals with different feature extraction techniques, from traditional handcrafted methods to deep learning-based ones.

5.3.1 Traditional Feature Extraction Techniques:

i. Histogram of Oriented Gradients (HOG): HOG extracts features by counting occurrences of gradient orientations in localized regions of an image [27].

It is particularly effective for detecting objects in varying lighting conditions,

where G represents the gradient in a local cell.

ii. Scale-Invariant Feature Transform (SIFT): SIFT identifies significant points in an image that remain constant across scale, rotation, and noise [28].

The procedure includes:

Scale-space representation by Gaussian blurring.

Extrema detection in the Difference of Gaussian (DoG) space.

Orientation assignment and computing descriptors for each keypoint.

iii. Speeded-Up Robust Features (SURF): SURF provides a faster alternative to SIFT by using integral images to speed up computation [29]. It approximates the Gaussian second-order derivatives using box filters, which makes the scale-invariant keypoints easy to detect (17)

where H(x, y) is the Hessian matrix.
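To make the handcrafted-feature idea concrete, the sketch below computes a HOG-style orientation histogram for a single cell: image gradients are taken in x and y, and each pixel votes for an unsigned orientation bin with a weight equal to its gradient magnitude. It is a simplified illustration, assuming 9 bins over 0 to 180 degrees and hard bin assignment; the bilinear vote interpolation and block normalization of a full HOG descriptor are omitted, and the function name is hypothetical.

```python
import numpy as np

def cell_orientation_histogram(cell, n_bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations in one cell."""
    cell = cell.astype(np.float64)
    gy, gx = np.gradient(cell)            # derivatives along rows (y) and columns (x)
    magnitude = np.hypot(gx, gy)
    # Unsigned orientation in [0, 180) degrees
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0
    bin_width = 180.0 / n_bins
    # Hard assignment to a bin; the minimum guards against rounding up to n_bins
    bins = np.minimum((angle / bin_width).astype(int), n_bins - 1)
    return np.bincount(bins.ravel(), weights=magnitude.ravel(), minlength=n_bins)

# Hypothetical usage: a horizontal intensity ramp has purely horizontal gradients,
# so all votes fall in the first bin; its transpose votes in the 90-degree bin.
ramp = np.tile(np.arange(8.0), (8, 1))
hist_horizontal = cell_orientation_histogram(ramp)
hist_vertical = cell_orientation_histogram(ramp.T)
```

Because fog lowers gradient magnitudes across the whole image, histograms of this kind are usually normalized over blocks of cells before being passed to a classifier.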

5.3.2 Advanced Feature Extraction Techniques:

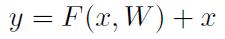

i. ResNet [Residual Network]: ResNet introduces residual learning to mitigate the vanishing gradient problem in deep architectures. The key component of ResNet is the residual block, defined as:

$$y = F(x, W) + x \tag{18}$$

where x is the input, F(x, W) represents a sequence of convolutional layers, and the identity connection x allows gradients to flow through the network [30].

ResNet is used in various depth configurations, namely ResNet-18, ResNet-50, and ResNet-101, which enhance feature extraction capabilities. Object detection models such as Faster R-CNN and YOLO rely on it as a backbone feature extractor.

ii. HRNet [High-Resolution Network]: HRNet maintains high-resolution representations across the network, which makes it suited for dense prediction tasks like object detection and segmentation [31]. HRNet keeps parallel high-resolution subnetworks and fuses multi-scale features. HRNet’s ability to retain spatial details makes it effective for object detection in foggy conditions, where subtle edge and texture information are critical.

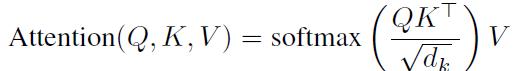

iii. Transformer Based: Transformer-based models, like Vision Transformer (ViT) and Swin Transformer, extract features using self-attention mechanisms instead of convolutional layers [32]. Thesemodelstreatimagesassequencesofpatches and compute global dependencies, improving featurerepresentation.Theprocedureofextracting featuresinvolves:

Patch Tokenization: TheinputimageI ∈ RH×W×Cisdividedintonon-overlapping patches, which are then flattened and linearlyprojectedintoafixed-dimensional featurespace.

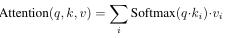

Self-Attention Mechanism: Multi-head selfattention (MSA) is used to compute relationshipsbetweenregions:


Hierarchical Feature Learning: Models like Swin Transformer introduce hierarchical structures and local window-based attention to capture multi-scale features efficiently.
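The first two steps above, patch tokenization and self-attention, can be sketched with NumPy. The example uses the standard scaled dot-product form softmax(QKᵀ/√d)·V with a single attention head; the patch size, embedding width, random projection matrices, and function names are assumptions chosen only to make the example runnable, and a real ViT or Swin model adds multiple heads, positional embeddings, and learned weights.

```python
import numpy as np

def patchify(img, p):
    """Split an H x W x C image into non-overlapping p x p patches, each flattened."""
    h, w, c = img.shape
    assert h % p == 0 and w % p == 0, "image size must be divisible by patch size"
    patches = img.reshape(h // p, p, w // p, p, c).transpose(0, 2, 1, 3, 4)
    return patches.reshape(-1, p * p * c)

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, Wq, Wk, Wv):
    """Single-head scaled dot-product attention over a sequence of tokens."""
    Q, K, V = tokens @ Wq, tokens @ Wk, tokens @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    weights = softmax(scores, axis=-1)    # each row sums to 1
    return weights @ V, weights

# Hypothetical usage: an 8 x 8 RGB image, 4 x 4 patches, 16-d embedding, 8-d head
rng = np.random.default_rng(0)
img = rng.random((8, 8, 3))
tokens = patchify(img, 4)                           # shape (4, 48)
embedded = tokens @ rng.standard_normal((48, 16))   # linear projection
out, attn = self_attention(embedded,
                           rng.standard_normal((16, 8)),
                           rng.standard_normal((16, 8)),
                           rng.standard_normal((16, 8)))
```

Every patch attends to every other patch, which is what lets these models relate a low-contrast object region to context elsewhere in the image. The cost grows quadratically with the number of patches, which motivates the local windows of hierarchical models.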

Table -3 summarizes the feature extraction techniques discussed above.

Table -3: Comparison of Feature Extraction Techniques

| Technique | Advantages | Limitations | Application Area |
|---|---|---|---|
| HOG [27] | Robust to lighting changes | Ineffective for cluttered backgrounds | Object detection |
| SIFT [28] | Scale and rotation invariant | Computationally expensive | Keypoint matching |
| SURF [29] | Faster than SIFT | Less robust to rotation | Object recognition |
| ResNet [30] | Efficient gradient flow, deep feature extraction | High computational cost | Object detection, image classification |
| HRNet [31] | Preserves high-resolution features, effective for dense tasks | Requires high memory | Semantic segmentation, object detection |
| Transformer Based [32] | Captures global dependencies, robust feature representation | Computationally expensive, large dataset requirement | Image recognition, object detection |

Detecting objects in foggy images relies on feature extraction algorithms. Traditional approaches, including HOG and SIFT, provide interpretable, computationally efficient features, while modern deep learning-based techniques capture richer and more complex patterns. The approach used depends on the dataset’s attributes and the available computational resources.

Object detection locates and identifies objects in images. Self-driving cars, surveillance, and environmental monitoring all rely on this fundamental computer vision task. Foggy images present unique challenges for object detection due to low contrast, occlusion, and noise.

This section covers a range of object detection models with a focus on methods appropriate for foggy images.

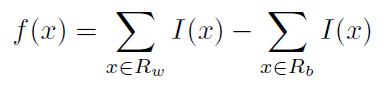

i. Viola-Jones Algorithm: The Viola-Jones approach is a standard object detection framework that employs Haar-like features and an AdaBoost classifier to detect faces and objects [33].

Mathematically, the Haar-like feature f(x) at position x is computed as: (20) where R_w is the white region, R_b is the black region, and I(x) is the pixel intensity.

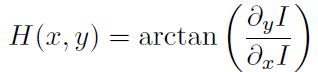

ii. HOG + SVM: Histogram of Oriented Gradients (HOG) combined with a Support Vector Machine (SVM) is a feature-based object detection technique commonly used for pedestrian detection [34]. The HOG descriptor for an image I(x, y) is computed as: (21) where the image gradients in the x and y directions are denoted as ∂xI and ∂yI, respectively.

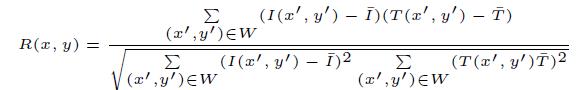

iii. Template Matching: Template Matching is a simple object detection technique that compares a predefined template with different regions of the image to find the best match [35]. A common similarity measure is the normalized cross-correlation (NCC), given by: (22) where I(x’, y’) is the image region, T(x’, y’) is the template, and Ī and T̄ are their mean values.
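Template matching with normalized cross-correlation can be sketched as follows. The example uses the zero-mean form of NCC, in which the mean of the image region and the mean of the template are subtracted before correlating and the result is divided by the product of their norms. This is one common variant and may differ in detail from the formulation in [35]. The function name and the exhaustive sliding-window loop are illustrative assumptions.

```python
import numpy as np

def ncc_map(image, template):
    """Zero-mean normalized cross-correlation score at every valid offset."""
    image = image.astype(np.float64)
    template = template.astype(np.float64)
    th, tw = template.shape
    t_zero = template - template.mean()
    t_norm = np.sqrt((t_zero ** 2).sum())
    out_h = image.shape[0] - th + 1
    out_w = image.shape[1] - tw + 1
    scores = np.zeros((out_h, out_w))
    for y in range(out_h):
        for x in range(out_w):
            region = image[y:y + th, x:x + tw]
            r_zero = region - region.mean()
            denom = np.sqrt((r_zero ** 2).sum()) * t_norm
            if denom > 0:                 # flat regions keep a score of 0
                scores[y, x] = (r_zero * t_zero).sum() / denom
    return scores

# Hypothetical usage: cut a template out of a random image, lower its contrast
# and shift its brightness, then locate it again.
rng = np.random.default_rng(0)
image = rng.random((12, 12))
template = 0.5 * image[3:7, 5:9] + 10.0
scores = ncc_map(image, template)
best = np.unravel_index(np.argmax(scores), scores.shape)
```

Because the score is invariant to a uniform gain and offset, a template can still be matched when contrast is reduced evenly. Real fog varies with depth, so this invariance holds only approximately in practice.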

5.4.2

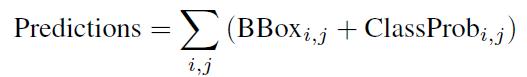

i. YOLO: You Only Look Once (YOLO) detects targets in images quickly by looking at each image only once. The method combines region proposal and detection into a single framework and treats object detection as a regression problem. YOLO algorithms can simultaneously classify and localize targets in images. (23)

YOLO is efficient and appropriate for real-time applications but can fail in scenes with small objects [36].


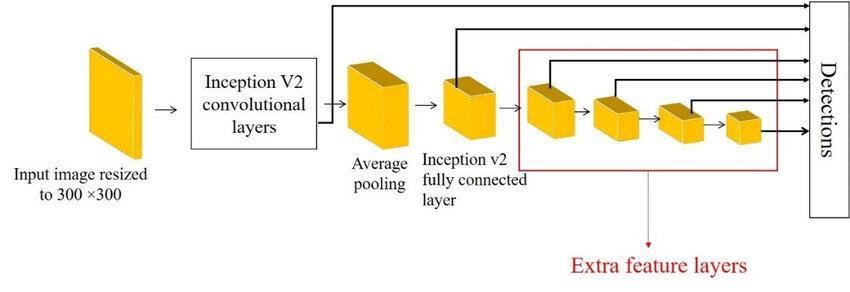

ii. SSD: The Single Shot Detector (SSD) creates several bounding boxes of different sizes and aspect ratios from feature maps. It uses default boxes for detection across scales [37]. Its use of multi-scale feature maps has influenced subsequent target detection techniques. The architecture is shown in Fig -5.

Fig -5: SSD architecture [62]

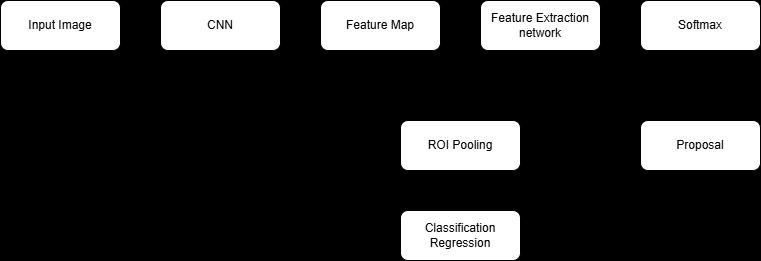

iii. Faster R-CNN: This approach uses a Region Proposal Network (RPN) instead of the traditional selective search technique, as shown in Fig -6. The RPN significantly reduces irrelevant region proposals, which accelerates detection [38]:

$$\text{Region Proposals} = \text{RPN}(\text{Feature Maps}) \tag{24}$$

where the RPN produces proposals directly from feature maps.

Fig -6: Faster R-CNN [54]

iv. Deformable DETR (Detection TRansformer): Deformable DETR extends the original DETR model with deformable attention, which enables it to focus on the relevant regions:

(25)

This approach is computationally efficient and better suited to handling occlusions and variability in foggy images [39].

Table -4 provides a comparative analysis of the discussed models.

Table -4: Comparison of Object Detection Models

| Model | Advantage | Limitations | Application Area |
|---|---|---|---|
| Viola-Jones Algorithm [32] | Fast, real-time face detection | High false positive rate, sensitive to lighting | Face detection, object tracking |
| HOG + SVM [33] | Robust to variations, good feature representation | Computationally expensive | Pedestrian detection, vehicle recognition |
| Template Matching [34] | Simple, effective for known patterns | Poor performance with scale/rotation changes | Industrial inspection, pattern recognition |
| YOLO [35] | Real-time processing | Struggles with small objects | Real-time detection |

Evaluating object detection models is essential to understand how well they perform, especially in challenging situations such as foggy images. Quantitative measures are used to assess the accuracy and reliability of these models.

The most popular measures are described here:

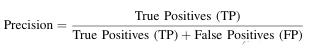

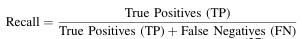

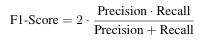

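1) Precision, Recall, and F1-Score:

Precision refers to the proportion of detected items that are correctly identified.

$$Precision = \frac{TP}{TP + FP} \tag{26}$$

Recall is the proportion of correctly recognized objects relative to all ground truth objects.

$$Recall = \frac{TP}{TP + FN} \tag{27}$$

F1-Score combines Precision and Recall using their harmonic mean, offering a balanced evaluation of a model’s performance.

$$F1 = \frac{2 \times Precision \times Recall}{Precision + Recall} \tag{28}$$

where TP, FP, and FN are the numbers of true positives, false positives, and false negatives, respectively.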

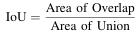

2) Intersection over Union (IoU): It measures the overlap between the predicted and the ground truth bounding boxes.

$$\text{IoU} = \frac{\text{Area of Overlap}}{\text{Area of Union}} \quad (29)$$

A greater IoU indicates more accurate object localization.

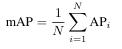

3) Mean Average Precision (mAP): This metric is calculated by averaging the precision over different IoU thresholds and classes.

$$\text{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_i \quad (30)$$

where N is the number of classes, and $AP_i$ is the average precision for class i.
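The metrics in this section can be illustrated with a small Python sketch. It is a minimal example under stated assumptions, not a reference evaluation protocol: boxes are assumed to be `(x_min, y_min, x_max, y_max)` tuples, predictions are assumed to be already sorted by descending confidence, matching is greedy at a single IoU threshold, and all function names are hypothetical. TP, FP, and FN denote true positives, false positives, and false negatives.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds: Sequence[Box], truths: Sequence[Box],
                  thr: float = 0.5) -> Tuple[int, int, int]:
    """Greedily match predictions to ground truth boxes; return (TP, FP, FN)."""
    unmatched = list(truths)
    tp = 0
    for p in preds:
        # Pick the remaining ground truth box with the highest overlap.
        best = max(unmatched, key=lambda t: iou(p, t), default=None)
        if best is not None and iou(p, best) >= thr:
            unmatched.remove(best)  # each ground truth box is matched once
            tp += 1
    return tp, len(preds) - tp, len(unmatched)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, Recall, and F1-Score from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """mAP as the mean of the per-class average precision values."""
    if not ap_per_class:
        raise ValueError("at least one class is required")
    return sum(ap_per_class) / len(ap_per_class)
```

For example, `count_matches(preds, truths)` followed by `precision_recall_f1(tp, fp, fn)` gives the three scores for one image at IoU threshold 0.5. The per-class AP values passed to `mean_average_precision` are assumed to be computed elsewhere.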

7. REAL-WORLD APPLICATION

Object detection has many effective real-world applications, particularly in autonomous driving, surveillance systems, and traffic management. In these domains, robust object detection models are critical to ensure safety and efficiency.

1) Autonomous Driving

Autonomous cars rely heavily on object detection systems for safe navigation and decision-making. Of all adverse weather conditions, fog is probably the most challenging for autonomous navigation, because it reduces visibility and hence causes missed detections [37], [13], [14]. Implementations usually entail:

Integration with Multi-Sensor Data: For better detection accuracy, autonomous vehicles combine camera data with LiDAR and radar inputs, which are less sensitive to fog. With this integration, the perception system stays reliable even when cameras and other optical sensors are degraded [7], [40].

Adaptive Algorithms for Fog Density: These systems include models that learn adaptive thresholds from in-situ fog density measurements, which improves performance even under changing visibility conditions [41], [42].

2) Surveillance Systems

Advanced models that include pre-processing techniques such as contrast enhancement and dehazing improve the reliability of surveillance systems in foggy conditions [5], [43]. Some of the implementations are:

Real-Time Processing: Fast object detection models such as YOLO are at the forefront, mainly because of their real-time capabilities, which are in high demand for surveillance applications and ensure rapid response times [44], [45].

Enhanced Image Clarity: Applying the Multi-scale Retinex algorithm and GAN-based dehazing in the pre-processing steps enhances the quality of the images fed to the detection model [6], [46].

3) Traffic Monitoring and Control

Accurate vehicle and pedestrian detection is very important for managing and optimizing traffic efficiently while improving safety. Conventional traffic cameras cannot reliably distinguish objects in fog, which can lead to accidents and congestion [47], [48]. Advanced object detection systems can help prevent this in several ways:

Fog-Resilient Models: As reported in [49], [50], CNN and hybrid CNN-Transformer-based implementations maintain better accuracy than traditional models in cases of poor visibility.

Deployment of Pre-Processing Pipelines: Robust pre-processing pipelines in traffic cameras improve the video feeds upstream, which raises the performance of the subsequent object detection algorithms, as reported in [4], [8].
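To make the idea of an upstream contrast-enhancement step concrete, the sketch below applies global histogram equalization to a low-contrast frame before it would be passed to a detector. This is a deliberately simpler stand-in for the CLAHE, Retinex, and dehazing methods discussed in this survey, not an implementation of them. The function name and the nested-list representation of an 8-bit grayscale frame are assumptions made for illustration, and the detector itself is omitted.

```python
from typing import List

Image = List[List[int]]  # assumed format: 8-bit grayscale, values 0..255


def equalize_histogram(img: Image) -> Image:
    """Spread the intensity values of a low-contrast frame over 0..255."""
    # Histogram of pixel intensities.
    hist = [0] * 256
    for row in img:
        for v in row:
            hist[v] += 1

    # Cumulative distribution of the histogram.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # Constant image: nothing to stretch, return a copy.
        return [row[:] for row in img]

    # Lookup table that maps each old intensity to its equalized value.
    lut = [round(max(c - cdf_min, 0) * 255 / (total - cdf_min)) for c in cdf]
    return [[lut[v] for v in row] for row in img]


if __name__ == "__main__":
    hazy = [[100, 110], [120, 130]]  # narrow intensity range, as in a foggy frame
    print(equalize_histogram(hazy))
```

A global transform like this can amplify noise in uniformly hazy regions, which is one reason local methods such as CLAHE are usually preferred in practice.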

Some of the promising future directions for enhancing detection performance in adverse weather conditions are:

1) Improved Data Augmentation: Advanced augmentation techniques, such as GAN-based synthetic fog generation, can be instrumental in generating diverse training datasets, which increases model robustness across varying levels of fog density [6], [45].

2) Lightweight Models: Developing lightweight models is essential for applications that require real-time computation. Techniques like model pruning, quantization, and knowledge distillation can reduce computing overhead [44].

3) Hybrid Approaches: Hybrid approaches combine the strengths of traditional methods like CLAHE with deep learning techniques to improve performance [13], [47].

4) Multimodal Fusion: Combining data from multiple modalities, e.g., thermal imaging and radar in addition to visual data, improves detection accuracy when driving through severe fog [48].

5) Explainable AI (XAI): Integrating explainable AI techniques improves trust in, and the interpretability of, the decisions made by these models when they are deployed in safety-critical applications such as autonomous driving systems [4].

6) Standardized Evaluation Frameworks: Developing standardized evaluation metrics and benchmarks tailored for foggy conditions can streamline performance assessment across different models [40].
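As a minimal illustration of synthetic fog generation for data augmentation, the sketch below uses the standard atmospheric scattering model, I = J·t + A·(1 − t) with transmission t = exp(−β·d), rather than a GAN. The function name, the nested-list image format, and the default parameter values are assumptions chosen for illustration; they are not taken from [6] or [45].

```python
import math
from typing import List

Image = List[List[float]]  # assumed format: grayscale, values in [0, 1]


def add_synthetic_fog(clear: Image, depth: Image,
                      beta: float = 1.0, airlight: float = 0.9) -> Image:
    """Render fog on a clear image using a per-pixel depth map.

    beta controls fog density; airlight is the brightness of the fog itself.
    """
    foggy = []
    for clear_row, depth_row in zip(clear, depth):
        row = []
        for j, d in zip(clear_row, depth_row):
            t = math.exp(-beta * d)  # transmission: fraction of scene light that survives
            row.append(j * t + airlight * (1.0 - t))
        foggy.append(row)
    return foggy


if __name__ == "__main__":
    clear = [[0.2, 0.8], [0.5, 0.1]]
    depth = [[0.5, 1.0], [2.0, 4.0]]  # hypothetical scene depth in arbitrary units
    # Several fog densities can be generated from the same clear image.
    for beta in (0.5, 1.0, 2.0):
        print(beta, add_synthetic_fog(clear, depth, beta=beta))
```

Varying `beta` over a range yields training images at different fog densities from one annotated clear image, so the original bounding boxes can be reused unchanged.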

Focusing on the future developments proposed here promises to contribute to more robust yet efficient systems operating under adverse weather.

Detecting objects in foggy conditions is an important research topic with numerous applications in driverless cars, surveillance, and environmental monitoring. The challenges introduced by fog, such as low visibility, low contrast, and image distortion, necessitate robust and adaptive solutions. This survey has given an overview of developments in pre-processing, image enhancement, feature extraction, and object detection models tailored for foggy environments. Building on prior studies and recent developments, we further highlighted state-of-the-art approaches, such as Vision Transformers, GAN-based methods for image dehazing, and hybrid models that combine pre-processing with detection pipelines [11], [13], [44]. The comparative study revealed trade-offs between accuracy, computational efficiency, and robustness, which indicates the need for context-specific solutions [45], [48].

Although considerable progress has been made, challenges remain, such as domain generalization, dataset diversity, and computational limitations. Future research should focus on constructing lightweight and explainable models, multi-modal data integration, and standardized evaluation frameworks [4], [40]. Adopting these methods will lead to more dependable and efficient object detection systems, improving safety and functionality during severe weather. By synthesizing insights from several studies and providing actionable recommendations, this survey intends to help researchers and practitioners advance the field of object detection in foggy conditions.

[1] E. Arkin, N. Yadikar, X. Xu, A. Aysa, and K. Ubul, “A survey: object detection methods from CNN to transformer,” Multimedia Tools and Applications, vol. 82, no. 14, pp. 21353–21383, 2023.

[2] D. Gudelj, A.-F. Stama, J. Petrović, and P. Pale, “Visual object detection an overview of algorithms and results,” in Proc. 44th Int. Conv. on Information, Communication and Electronic Technology (MIPRO), 2021, pp. 1727–1732.

[3] A. E. Abbasi, A. M. Mangini, and M. P. Fanti, “Object and pedestrian detection on road in foggy weather conditions by hyper parameterized YOLOv8 model,” Electronics, vol. 13, no. 18, p. 3661, 2024.

[4] M. Kondapally, K. K. Naveen, C. Vishnu, and C. K. Mohan, “Towards a transitional weather scene recognition approach for autonomous vehicles,” IEEE Trans. Intell. Transp. Syst., vol. 25, no. 6, pp. 5201–5210, 2023.

[5] G. Revathy, E. Gurumoorthi, A. Y. Felix, and S. D. P. Ragavendiran, “Precise object detection in challenging foggy driving conditions with deep learning,” 2023.

[6] N. D. Vo, P. Nguyen, T. Truong, H. C. Nguyen, and K. Nguyen, “Foggy-DOTA: An adverse weather dataset for object detection in aerial images,” in Proc. 9th NAFOSTED Conf. Inf. Comput. Sci. (NICS), 2022, pp. 269–274.

[7] W. Wu, H. Chang, Z. Chen, and Z. Li, “Plug-and-play robust aerial object detection under hazy conditions,” IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens., 2024.

[8] K. Hu, F. Wu, Z. Zhan, J. Luo, and H. Pu, “High-low level task combination for object detection in foggy weather conditions,” J. Vis. Commun. Image Represent., vol. 98, p. 104042, 2024.

[9] M. Hahner, D. Dai, C. Sakaridis, J.-N. Zaech, and L. Van Gool, “Semantic understanding of foggy scenes with purely synthetic data,” in Proc. IEEE Intell. Transp. Syst. Conf. (ITSC), 2019, pp. 3675–3681.

[10] T. Singh, “Foggy image enhancement and object identification by extended maxima algorithm,” in Proc. Int. Conf. Innovations in Control, Communication and Information Systems (ICICCI), 2017, pp. 1–5.

[11] S. Shit, D. K. Das, and D. N. Ray, “Real-time object detection in deep foggy conditions using transformers,” in Proc. 3rd Int. Conf. Artificial Intelligence and Signal Processing (AISP), 2023, pp. 1–5.

[12] B. Kumar, U. Garg, M. S. Prakashchandra, A. Mishra, S. Dey, A. Gupta, and O. P. Vyas, “Efficient real-time traffic management and control for autonomous vehicle in hazy environment using deep learning technique,” in Proc. IEEE 19th India Council Int. Conf. (INDICON), 2022, pp. 1–7.

[13] X. Liang, Z. Liang, L. Li, and J. Chen, “AODs-CLYOLO: An object detection method integrating fog removal and detection in haze environments,” Appl. Sci., vol. 14, no. 16, 2024.

[14] M. Mahaadev, D. Ghosh, M. Dogra, and G. Gupta, “A hybrid approach for detection in foggy environments for self driving cars: YOLO and MSRCR techniques,” in Proc. 4th IEEE Global Conf. Advancement in Technology (GCAT), 2023, pp. 1–6.

[15] Q. Wang and Y. Yuan, “Learning to resize image,” Neurocomputing, vol. 131, pp. 357–367, 2014.

[16] P. J. M. Ali and R. H. Faraj, “Data normalization and standardization: a technical report,” Mach. Learn. Tech. Rep., vol. 1, no. 1, pp. 1–6, 2014.

[17] B. Dhruv, N. Mittal, and M. Modi, “Analysis of different filters for noise reduction in images,” in Proc. Recent Dev. Control, Autom. & Power Eng. (RDCAPE), 2017, pp. 410–415.

[18] E. Lee, S. Kim, W. Kang, D. Seo, and J. Paik, “Contrast enhancement using dominant brightness level analysis and adaptive intensity transformation for remote sensing images,” IEEE Geosci. Remote Sens. Lett., vol. 10, no. 1, pp. 62–66, 2012.

[19] D. Ulyanov, A. Vedaldi, and V. Lempitsky, “Deep image prior,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2018, pp. 9446–9454.

[20] J. Qiu and K. Xie, “A GAN-based motion blurred image restoration algorithm,” in Proc. IEEE Int. Conf. Software Eng. and Service Science (ICSESS), 2019, pp. 211–215.

[21] S. Lee, S. Yun, J.-H. Nam, C. S. Won, and S.-W. Jung, “A review on dark channel prior based image dehazing algorithms,” EURASIP J. Image Video Process., vol. 2016, pp. 1–23, 2016.

[22] A. S. Parihar and K. Singh, “A study on Retinex based method for image enhancement,” in Proc. 2nd Int. Conf. Inventive Systems and Control (ICISC), 2018, pp. 619–624.

[23] A. W. Setiawan, T. R. Mengko, O. S. Santoso, and A. B. Suksmono, “Color retinal image enhancement using CLAHE,” in Proc. Int. Conf. ICT for Smart Society, 2013, pp. 1–3.

[24] B. Cai, X. Xu, K. Jia, C. Qing, and D. Tao, “Dehazenet: An end-to-end system for single image haze removal,” IEEE Trans. Image Process., vol. 25, no. 11, pp. 5187–5198, 2016.

[25] T. Zheng, T. Xu, X. Li, X. Zhao, F. Zhao, and Y. Zhang, “Improved AOD-Net dehazing algorithm for target image,” in Proc. 5th Int. Conf. Computer Eng. and Intelligent Control (ICCEIC), 2024, pp. 333–337.

[26] S. Liu, L. Dai, H. Dong, B. Zhang, and X. Wu, “A dehazing algorithm for endoscopic images based on CycleGAN,” in Proc. 7th IEEE Int. Conf. Electronic Information and Communication Technology (ICEICT), 2024, pp. 497–502.

[27] C. Tomasi, “Histograms of oriented gradients,” Computer Vision Sampler, vol. 1, pp. 1–6, 2012.

[28] T. Lindeberg, “Scale invariant feature transform,” 2012.

[29] H. Bay, T. Tuytelaars, and L. Van Gool, “Surf: Speeded up robust features,” in Computer Vision – ECCV 2006, Graz, Austria, May 7–13, 2006, pp. 404–417.

[30] D. Sarwinda, R. H. Paradisa, A. Bustamam, and P. Anggia, “Deep learning in image classification using residual network (ResNet) variants for detection of colorectal cancer,” Procedia Comput. Sci., vol. 179, pp. 423–431, 2021.

[31] H. Zhang et al., “HF-HRNet: A simple hardware friendly high resolution network,” IEEE Trans. Circuits Syst. Video Technol., 2024.

[32] J. Beal et al., “Toward transformer-based object detection,” arXiv preprint arXiv:2012.09958, 2020.

[33] Y.-Q. Wang, “An analysis of the Viola-Jones face detection algorithm,” Image Process. On Line, vol. 4, pp. 128–148, 2014.

[34] H. S. Dadi, G. K. M. Pillutla, et al., “Improved face recognition rate using HOG features and SVM classifier,” IOSR J. Electron. Commun. Eng., vol. 11, no. 04, pp. 34–44, 2016.

[35] N. S. Hashemi, R. B. Aghdam, A. S. B. Ghiasi, and P. Fatemi, “Template matching advances and applications in image analysis,” arXiv preprint arXiv:1610.07231, 2016.

[36] X. Shi and A. Song, “Defog YOLO for road object detection in foggy weather,” Comput. J., vol. 67, no. 11, pp. 3115–3127, 2024.

[37] A. Kumar, Z. J. Zhang, and H. Lyu, “Object detection in real time based on improved single shot multi-box detector algorithm,” EURASIP J. Wirel. Commun. Netw., vol. 2020, no. 1, p. 204, 2020.

[38] B. Liu, W. Zhao, and Q. Sun, “Study of object detection based on Faster R-CNN,” in Proc. Chinese Autom. Congr. (CAC), 2017, pp. 6233–6236.

[39] X. Zhu et al., “Deformable DETR: Deformable transformers for end-to-end object detection,” arXiv preprint arXiv:2010.04159, 2020.

[40] Q. Qin, K. Chang, M. Huang, and G. Li, “Denet: Detection driven enhancement network for object detection under adverse weather conditions,” in Proc. Asian Conf. Comput. Vis., 2022, pp. 2813–2829.

[41] Ö. U. Akgül, W. Mao, B. Cho, and Y. Xiao, “VFogSim: A data-driven platform for simulating vehicular fog computing environment,” IEEE Syst. J., vol. 17, no. 3, pp. 5002–5013, 2023.

[42] Z. Zhang, H. Zheng, J. Cao, X. Feng, and G. Xie, “FRS-Net: An efficient ship detection network for thin-cloud and fog-covered high-resolution optical satellite imagery,” IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens., vol. 15, pp. 2326–2340, 2022.

[43] M. Yang, “Visual transformer for object detection,” arXiv preprint arXiv:2206.06323, 2022.

[44] Y. Qiu, Y. Lu, Y. Wang, and H. Jiang, “IDOD-YOLOV7: Image dehazing YOLOV7 for object detection in low-light foggy traffic environments,” Sensors, vol. 23, no. 3, p. 1347, 2023.

[45] G. Li, Z. Ji, X. Qu, R. Zhou, and D. Cao, “Cross-domain object detection for autonomous driving: A stepwise domain adaptative YOLO approach,” IEEE Trans. Intell. Veh., vol. 7, no. 3, pp. 603–615, 2022.

[46] F. Ma, M. Z. Shou, L. Zhu, H. Fan, Y. Xu, Y. Yang, and Z. Yan, “Unified transformer tracker for object tracking,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), 2022, pp. 8781–8790.

[47] L. Xie, Z. Li, F. Zhu, G. Xiong, and B. Tian, “Foggy nonmotor vehicle detection algorithm based on image enhancement and full-dimensional dynamic convolution,” in Proc. IEEE Int. Conf. Digital Twins and Parallel Intelligence (DTPI), 2023, pp. 1–6.

[48] X. Meng, Y. Liu, L. Fan, and J. Fan, “YOLOv5s-Fog: An improved model based on YOLOv5s for object detection in foggy weather scenarios,” Sensors, vol. 23, no. 11, p. 5321, 2023.

[49] A. B. Amjoud and M. Amrouch, “Object detection using deep learning, CNNs and vision transformers: A review,” IEEE Access, vol. 11, pp. 35479–35516, 2023.

[50] M. A. Hossain, M. I. Hossain, M. D. Hossain, N. T. Thu, and E.-N. Huh, “Fast-D: When non-smoothing color feature meets moving object detection in real-time,” IEEE Access, vol. 8, pp. 186756–186772, 2020.

[51] Q. Zhang and X. Hu, “MSFFA-YOLO network: Multiclass object detection for traffic investigations in foggy weather,” IEEE Trans. Instrum. Meas., vol. 72, pp. 1–12, 2023.

[52] S. Kar and M. El-Sharkawy, “Object detection using vision transformed EfficientDet,” in Proc. NAECON 2023 – IEEE Nat. Aerosp. Electron. Conf., 2023, pp. 214–220.

[53] M. Krišto, M. Ivasic-Kos, and M. Pobar, “Thermal object detection in difficult weather conditions using YOLO,” IEEE Access, vol. 8, pp. 125459–125476, 2020.

[54] M. T. Hosain, A. Zaman, M. R. Abir, S. Akter, S. Mursalin, and S. S. Khan, “Synchronizing object detection: applications, advancements and existing challenges,” IEEE Access, 2024.

[55] N. Guptha M, Y. K. Guruprasad, Y. Teekaraman, R. Kuppusamy, and A. R. Thelkar, “[Retracted] Generative adversarial networks for unmanned aerial vehicle object detection with fusion technology,” J. Adv. Transp., vol. 2022, no. 1, p. 7111248, 2022.

[56] T. Nowak, M. R. Nowicki, K. Ćwian, and P. Skrzypczyński, “How to improve object detection in a driver assistance system applying explainable deep learning,” in Proc. IEEE Intell. Veh. Symp. (IV), 2019, pp. 226–231.

[57] https://www.kaggle.com/datasets/yessicatuteja/foggycityscapesimage-dataset

[58] https://www.kaggle.com/datasets/washingtongold/foggy-driving

[59] https://github.com/MartinHahner/FoggySynscapes

[60] https://github.com/sakaridis/fog-simulation-DBF

[61] https://deep-learning-study.tistory.com/m/825

[62] https://www.researchgate.net/figure/Single-ShotDetector-SSDarchitecture/fig4/342684792

Namrata Deshmukh, M.Tech Scholar, Computer Engineering Department, SGSITS, Indore, MP.

Dr. Anuradha Purohit, Head & Professor, Computer Engineering Department, SGSITS, Indore, MP.