International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN: 2395-0072


Shubham Bangar¹, Tushar Bansod², Samarth Badadhe³, Nikhil Bhot⁴, Anant Bagade⁵

¹Department of Information Technology, Pune Institute of Computer Technology, Pune, India

²Department of Information Technology, Pune Institute of Computer Technology, Pune, India

³Department of Information Technology, Pune Institute of Computer Technology, Pune, India

⁴Department of Information Technology, Pune Institute of Computer Technology, Pune, India

⁵Professor, Department of Information Technology, Pune Institute of Computer Technology, Pune, India

Abstract - With technology developing at breakneck speed, the need for advanced surveillance systems is becoming increasingly critical, especially for ensuring safety in high-risk environments. This work aims to develop Smart Surveillance and Crisis Detection tools for emergencies, identifying weapon use, physical altercations, theft, and distress sounds by evaluating real-time video and audio data using machine learning. The system analyzes the video and audio streams with artificial intelligence techniques, namely CNN, RNN, SVM, and YOLO (for theft detection), to identify significant events and notify the relevant authorities. The project combines several detection models: object detection models form the basis of theft detection, scream detection uses audio classification methods, and fight detection uses deep learning architectures. By training on several datasets, the system gains the capacity to consistently distinguish between regular activities and emergencies. The main goals of the system are to increase public safety and shorten response times in homes, stores, malls, and industrial sites. The proposed solution aims to provide a reliable, efficient, and automated monitoring and emergency management component, thus enhancing the security level in various environments.

Key Words: Smart Surveillance, Crisis Detection, Machine Learning, Video Analysis, Audio Analysis, Weapon Detection, Fight Detection, Scream Detection, Theft Detection, Deep Learning, CNN, RNN, SVM, YOLO, Public Safety, Emergency Response.

Safety and security concerns in high-risk environments such as malls, residential areas, businesses, and public spaces have driven rapid growth in the market for intelligent surveillance systems. Although traditional surveillance systems are effective at monitoring, they usually lack the ability to detect and respond to a potential crisis in real time. This realization has led to the development of AI-powered surveillance systems that can not only monitor but also promptly detect critical events such as weapon use, theft, physical altercations, fire, and even distress sounds.

AI and ML have evolved rapidly, making it possible to build more autonomous systems for crisis detection. Such systems detect an emergency and trigger an automatic alarm, improving safety measures and reducing response time. The project focuses on building an intelligent alerting surveillance system that analyzes live video and audio data in real time by employing advanced AI models such as CNN, RNN, and SVM. This system notifies the relevant authorities during emergencies, including physical altercations, weapon usage, thefts, and distress signals, enabling prompt intervention.

The core of this project is the fight detection module, which uses video inputs to identify violence between persons. This work combines CNNs and RNNs, among other deep learning approaches, with traditional machine learning approaches. Convolutional Neural Networks (CNNs) excel at extracting spatial features from video frames, while Recurrent Neural Networks (RNNs) handle sequential data, capturing temporal relationships in video sequences and the dynamics of physical altercations over time. Apart from video processing, the project also analyzes sound signals that may signify distress, such as human screams. For this purpose, the audio classification models classify the inputs after extracting Mel-Frequency Cepstral Coefficients (MFCCs) from the sound data. These features are well suited to capturing the spectral characteristics of sound and are conventionally used in speech and audio recognition applications. The classification process involves multiple machine learning algorithms, such as SVM and Logistic Regression, as well as several ensemble methods, to classify the audio correctly as either a distress signal (for instance, a scream) or a non-distress sound.

Second, the weapon detection module is intended to detect the presence of weapons, which is a fundamental component of crisis management. The system uses the You Only Look Once (YOLO) object detection algorithm to scan and locate weapons in video frames, thus enhancing the system's ability to detect potential threats in real time. YOLO is particularly efficient and accurate in object detection, which makes it appropriate for surveillance uses where speed of detection is essential.

In addition, the theft detection module is another integral component of this system, responsible for detecting suspicious behavior indicative of theft, such as unauthorized entry or the unauthorized removal of merchandise. The system uses YOLO for real-time theft detection because of its capacity to recognize particular objects or activities indicative of theft, such as bags or the removal of items without permission. In places like retail stores or other high-risk areas where theft is common, this module is vital. YOLO's effectiveness at spotting such circumstances makes it a valuable tool for surveillance systems that require quick and accurate identification.

The system is trained and tested on a diverse dataset comprising events of actual violence, fight detection videos, scream audio files, weapon detection videos, and theft detection events. The dataset exposes the system to the patterns of each distinct category of crisis scenario and enables effective generalization over many contexts and surroundings. By processing both video and audio data, the system offers a more complete method of crisis detection, improving accuracy and dependability in the identification of possible hazards.

This project's primary objectives are:

To build an integrated surveillance system using video and audio inputs that can identify a broad spectrum of emergency events.

To analyze images and videos using deep learning architectures, including CNNs, RNNs, and YOLO, while using SVM, logistic regression, and other classifiers for audio-based crisis detection.

To optimize the system for fast, low-latency processing to improve real-time detection efficacy.

To provide law enforcement departments, security guards, and other interested parties with an easy-to-use interface so that they can receive real-time alarms and act accordingly.

This project's long-term objective is to create an intelligent, AI-based surveillance system that enhances security and safety in sensitive surroundings. By enabling real-time identification of possible crises, this technology can help to lower reaction times, increase public safety, and offer more consistent monitoring in cases where more traditional systems are insufficient. By integrating modern machine learning and deep learning techniques, this project intends to contribute to the developing field of intelligent surveillance systems, providing a real-world solution to one of society's main concerns: safety in real time.

Study of the Literature

Recent advances in intelligent surveillance systems using machine learning and deep learning approaches have focused on real-time detection of theft, violence, and weapon presence. Many studies have presented models that maximize accuracy while remaining deployable on devices with limited resources, such as embedded systems.

Identifying Violence

A notable contribution to the field of violence detection is the work of Moreira et al., which presented an end-to-end Bag-of-Visual-Words (BoVW) framework for detecting violence in surveillance footage. For spatiotemporal interest point detection they used Temporal Robust Features (TRoF), and for classification, a Fisher Vector representation with Support Vector Machines (SVM). Prioritizing low memory consumption and real-time processing, especially for resource-limited hardware, this method showed better runtime and classification efficacy on the MediaEval Violent Scenes Detection (VSD) task dataset [1].

By adapting mobile Convolutional Neural Networks (CNNs) for embedded platforms such as the Raspberry Pi, Vieira et al. made significant progress in low-cost, real-time violence recognition. Using MobileNet, SqueezeNet, and NASNetMobile, they achieved an accuracy of 92.05% and a throughput of 4 frames per second, producing technologies fit for real-time use on resource-limited devices [2].

To investigate the identification of actual fights in CCTV data, Perez et al. combined Temporal Robust Features with two-stream CNNs and 3D CNNs. By means of temporal segmentation of vast surveillance data, their approach effectively identified violent episodes in real-world settings using datasets such as CCTV-Fights and Moments in Time [3].

Weapon Detection

Narendrajo et al. have attracted attention for real-time weapon detection in surveillance systems using the YOLOv3 object detection model trained on a customized weapons dataset. Their method combined socket programming with security infrastructure to enable real-time weapon detection using minimal computing resources [4].

To extend the YOLO framework, Siri et al. similarly employed YOLOv4 in concert with machine learning and deep learning techniques. Their hybrid model showed high accuracy even under changing lighting, improving detection accuracy via Non-Maximum Suppression (NMS) [5]. Suganya et al. improved weapon detection using YOLOv7, reducing false positives and increasing detection speed and accuracy, which demonstrated its usefulness in several surveillance environments [6].

Identification of Theft

Arora et al. built a motion-sensing system using machine learning classifiers to identify theft in surveillance footage. Their system delivered real-time alarms derived from camera inputs, demonstrating its suitability for automated surveillance [7].

To detect snatch theft in video feeds, Mandal and Choudhury compared deep learning models including AlexNet, GoogleNet, and ResNet variants. Their study clarified which models are most suitable for precise theft detection from security cameras [8]. Using YOLO and CNN, Yeshwanth Reddy et al. created an artificial intelligence-driven framework for theft and robbery detection. Using models pretrained on customized monitoring datasets, the architecture focused on identifying unusual activity and generating alarms. The strong performance of their system under several environmental conditions made it suitable for practical use [9].

Sound Detection

Audio-based techniques have been investigated as a complement to visual detection in order to enhance surveillance systems. Arslan proposed an impulsive sound identification technique based on Warped Linear Prediction (WLP) and Gaussian Mixture Models (GMM) for real-time surveillance applications; his method improved detection accuracy on the DCASE 2017 Task 2 data [10]. Using Mel spectrograms and the Short-Time Fourier Transform (STFT), Pratama et al. applied CNNs to classify crimes and accidents from audio recordings with an accuracy of 93.337% [11].

Ojha and Venkateswar achieved high accuracy for real-time scream detection in noisy environments by using Hidden Markov Models (HMM) in combination with Mel Frequency Cepstral Coefficients (MFCC) and Gaussian Mixture Models (GMM) [12]. Achieving over 90% accuracy in real time, Dr. P. K. Venkateswar Lal et al. classified screams and distributed location-based alarms using Support Vector Machines (SVM) and a Multilayer Perceptron (MLP) [13]. Gao et al. improved scream recognition by using a hybrid CNN-GRU model, increasing detection accuracy and speed in noisy environments [14].

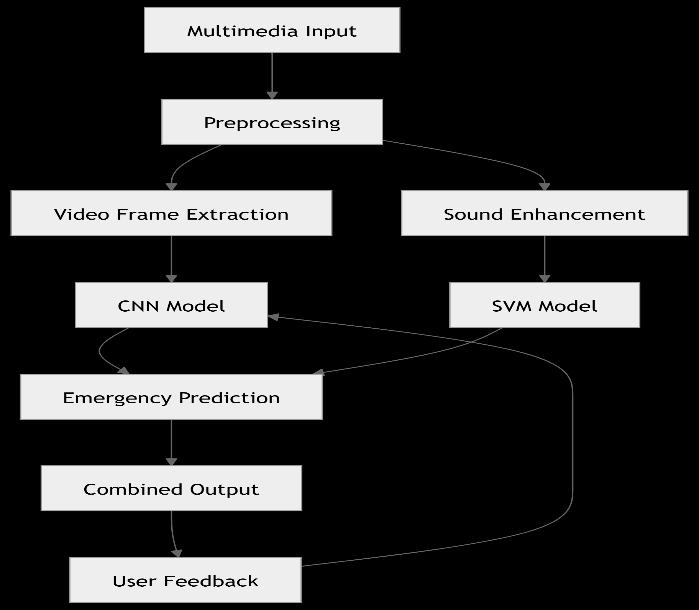

The approach involves the design of an effective, stable, and scalable emergency detection system for intelligent surveillance. The system uses recent models such as CNN, RNN, and YOLO to detect different emergencies, such as violence, use of weapons, theft, and distress calls, providing real-time detection with minimal computational expense. The step-by-step procedure for developing and deploying the system is presented in this section.

The research process begins with the collection of large, heterogeneous datasets obtained from trusted repositories and surveillance networks. The datasets include a broad spectrum of emergency events, such as recordings of physical altercations, weapon usage, robbery cases, and distress calls. To impart heterogeneity and robustness to the dataset, the data is curated to include samples from diverse settings, e.g., urban areas, shopping malls, residential areas, and industrial areas. Video frame interpolation, audio data conversion, and synthetic data generation are among the data augmentation methods used to increase the size and diversity of the data and reduce the risk of overfitting during training.

Data preprocessing cleans, normalizes, and prepares the raw data for model input; this phase comprises the following sub-steps:

Video Frame Extraction: Videos are broken up into frames to enable image-based processing, and unnecessary frames are eliminated.

MFCC Extraction: Mel-frequency cepstral coefficients (MFCCs) are extracted in order to concentrate on key sound patterns pertinent to distress signals and screams.

Frame Normalization: Video frame pixel values are standardized to ensure homogeneity across frames, thereby normalizing the input.

Sound Enhancement: Audio signals are subjected to noise reduction methods to improve the quality and clarity of screams or distress sounds.
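The frame extraction and normalization sub-steps above can be illustrated with a short Python sketch. It is not the authors' implementation: frames are assumed to be grayscale images stored as lists of pixel rows, the helper names (`mean_abs_diff`, `drop_static_frames`, `normalize_frame`) are hypothetical, and the 2.0 mean-absolute-difference threshold for discarding near-duplicate frames is an arbitrary illustrative value.

```python
from statistics import fmean, pstdev

# Hypothetical helpers: frames are grayscale images given as lists of pixel rows.

def mean_abs_diff(a, b):
    """Mean absolute pixel difference between two equally sized frames."""
    total = sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))
    return total / sum(len(row) for row in a)

def drop_static_frames(frames, threshold=2.0):
    """Keep a frame only if it differs enough from the last kept frame
    (the threshold is an illustrative assumption)."""
    kept = frames[:1]
    for frame in frames[1:]:
        if mean_abs_diff(kept[-1], frame) >= threshold:
            kept.append(frame)
    return kept

def normalize_frame(frame, eps=1e-8):
    """Standardize pixel values to zero mean and unit variance within one frame."""
    flat = [float(p) for row in frame for p in row]
    mean = fmean(flat)
    std = pstdev(flat, mean)
    # eps avoids division by zero for perfectly uniform frames
    return [[(float(p) - mean) / (std + eps) for p in row] for row in frame]
```

Per-frame standardization puts dark and brightly lit clips on a comparable scale; normalizing with dataset-wide statistics, as is common with pretrained CNN backbones, is an equally plausible choice.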

For video data, 2D Convolutional Neural Networks (CNNs) are used to extract the spatial features of every frame in the video. Temporal features are then obtained using Recurrent Neural Networks (RNNs) so that the sequence of events over time can be interpreted. For audio data, feature extraction captures changes in the frequency and amplitude of human cries and screams to make detection more accurate; the Short-Time Fourier Transform (STFT) and Mel-frequency cepstral coefficients (MFCCs) are used for this purpose.
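To make the STFT and MFCC steps concrete, the following dependency-free Python sketch computes MFCCs stage by stage: Hamming-windowed frames, a power spectrum (via a naive DFT rather than an FFT, for readability), a triangular mel filterbank using the common HTK-style mapping m = 2595 log10(1 + f/700), a logarithm, and a DCT-II. The frame length, hop, FFT size, 26 filters, and 13 coefficients are typical defaults assumed here, not parameters reported by the authors; a production system would more likely call a library routine such as `librosa.feature.mfcc`.

```python
import math

def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def power_spectrum(frame):
    """|DFT|^2 / N for bins 0..N/2, using a naive O(N^2) DFT for readability."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        spectrum.append((re * re + im * im) / n)
    return spectrum

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters spaced evenly on the mel scale between 0 Hz and Nyquist."""
    low, high = hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0)
    mels = [low + (high - low) * i / (n_filters + 1) for i in range(n_filters + 2)]
    bins = [int((n_fft + 1) * mel_to_hz(m) / sample_rate) for m in mels]
    bank = []
    for j in range(1, n_filters + 1):
        left, center, right = bins[j - 1], bins[j], bins[j + 1]
        row = [0.0] * (n_fft // 2 + 1)
        for k in range(left, center):       # rising edge of the triangle
            row[k] = (k - left) / (center - left)
        for k in range(center, right):      # falling edge of the triangle
            row[k] = (right - k) / (right - center)
        bank.append(row)
    return bank

def dct_ii(values, n_out):
    """Unnormalized DCT-II, keeping the first n_out coefficients."""
    n = len(values)
    return [sum(v * math.cos(math.pi * k * (2 * i + 1) / (2 * n)) for i, v in enumerate(values))
            for k in range(n_out)]

def mfcc(signal, sample_rate=16000, frame_len=400, hop=160, n_fft=512,
         n_filters=26, n_ceps=13):
    """MFCCs per frame: window, power spectrum (STFT), mel energies, log, DCT."""
    window = [0.54 - 0.46 * math.cos(2 * math.pi * i / (frame_len - 1)) for i in range(frame_len)]
    bank = mel_filterbank(n_filters, n_fft, sample_rate)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        frame += [0.0] * (n_fft - frame_len)  # zero-pad up to the FFT size
        spectrum = power_spectrum(frame)
        # small constant keeps log() defined for empty filter bands
        energies = [math.log(sum(h * p for h, p in zip(row, spectrum)) + 1e-10) for row in bank]
        frames.append(dct_ii(energies, n_ceps))
    return frames
```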

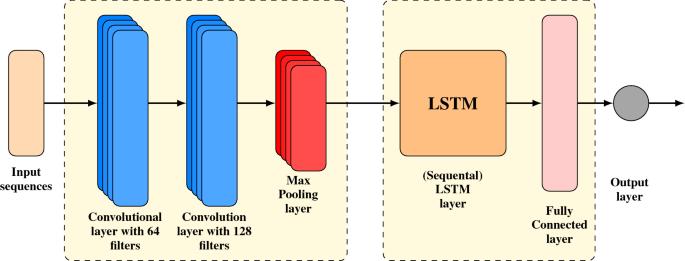

The proposed system uses a hybrid architecture that combines Convolutional Neural Networks (CNNs) with Long Short-Term Memory (LSTM) networks to achieve effective emergency detection. CNN elements extract spatial features from individual video frames, while LSTM elements analyze the temporal aspect of the video, allowing detection of dynamic events such as altercations or weapon use. For weapon detection, as well as instances of theft, the model uses YOLO (You Only Look Once). YOLO is a state-of-the-art object detection system that provides real-time detection ability, making it very effective at quickly detecting weapons and potential thefts in surveillance videos. The system sends security agents immediate alarms when it detects firearms, knives, and other potentially dangerous objects.
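The data flow of the CNN-LSTM hybrid can be sketched as follows, assuming each video frame has already been reduced to a feature vector by a CNN backbone (not shown). The Python code implements one standard LSTM cell (input, forget, candidate, and output gates) and runs it over a sequence of per-frame vectors; the weights are random and untrained, and `LSTMCell` and `fight_probability` are hypothetical names used only for illustration. A real system would typically use a framework layer such as PyTorch's `torch.nn.LSTM`.

```python
import math
import random

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def matvec(w, v):
    return [sum(a * b for a, b in zip(row, v)) for row in w]

class LSTMCell:
    """Single-layer LSTM with gates i (input), f (forget), g (candidate), o (output)."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        cols = input_size + hidden_size  # each gate sees [x_t ; h_{t-1}]
        self.hidden_size = hidden_size
        self.W = {gate: [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                         for _ in range(hidden_size)] for gate in "ifgo"}
        self.b = {gate: [0.0] * hidden_size for gate in "ifgo"}

    def step(self, x, h, c):
        z = list(x) + list(h)
        pre = {gate: [s + b for s, b in zip(matvec(self.W[gate], z), self.b[gate])]
               for gate in "ifgo"}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        g = [math.tanh(v) for v in pre["g"]]
        o = [sigmoid(v) for v in pre["o"]]
        c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]  # cell state
        h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]            # hidden state
        return h_new, c_new

    def run(self, sequence):
        """Consume per-frame feature vectors and return the final hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            h, c = self.step(x, h, c)
        return h

def fight_probability(cell, frame_features, w_out, b_out=0.0):
    """Hypothetical classification head: a logistic layer on the last hidden state."""
    h = cell.run(frame_features)
    return sigmoid(sum(w * v for w, v in zip(w_out, h)) + b_out)
```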


Important characteristics of the model are:

CNN-LSTM Hybrid: The CNN extracts features from individual frames while the LSTM captures temporal dependencies across frames, which is crucial for identifying events like physical altercations.

Fig. 1: CNN-LSTM Architecture

YOLO for Weapon and Theft Detection: YOLO models, more specifically YOLOv3 and YOLOv4, are tuned to identify weapons and possible theft situations. When weapons or suspicious objects (such as stolen goods) are found, this model enables fast identification and alerting.
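Object detectors in the YOLO family output many overlapping candidate boxes, which are normally pruned by non-maximum suppression (NMS), the post-processing step also credited in [5]. The sketch below shows greedy, per-class NMS in plain Python; boxes are assumed to be `(x1, y1, x2, y2)` tuples paired with a score and a class label, and the 0.5 IoU and 0.25 score thresholds are illustrative assumptions rather than values from this work. YOLO implementations, and libraries such as `torchvision.ops.nms`, perform this step internally.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(detections, iou_threshold=0.5, score_threshold=0.25):
    """Greedy per-class NMS over (box, score, label) tuples, highest score first."""
    candidates = sorted((d for d in detections if d[1] >= score_threshold),
                        key=lambda d: d[1], reverse=True)
    kept = []
    for det in candidates:
        # keep a box unless it heavily overlaps an already kept box of the same class
        if all(det[2] != k[2] or iou(det[0], k[0]) < iou_threshold for k in kept):
            kept.append(det)
    return kept
```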

3.5 Classification and Decision Making

After that, the CNN, LSTM, and YOLO model features are fed to a decision-making layer that assigns the event to one of the predefined classes: fight, weapon detection, theft, or distress sound. The decision-making layer fuses the outputs from the video and audio inputs using a multi-modal classification technique. A threshold-based decision plan is used to raise alarms based on the intensity of the detected event.

Fight Detection: The CNN-LSTM model classifies videos into fight or non-fight categories based on visual and temporal cues.

Weapon and Theft Detection: YOLO sends alerts when knives, firearms, or suspicious behavior are found.

Scream Detection: Models trained on MFCC features classify audio data to detect whether distress sounds such as screams are present.
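A minimal sketch of the threshold-based decision plan, assuming late fusion of per-class probabilities: when both modalities score the same class, their probabilities are averaged with a fixed weight; otherwise the single available score is used. The class thresholds, the 0.3 audio weight, the 0.9 cut-off for "high" severity, and the names `ModalityScores`, `fuse`, and `decide` are all hypothetical; the paper does not report its fusion rule or thresholds.

```python
from dataclasses import dataclass, field

# Hypothetical per-class alarm thresholds; the paper does not report its values.
THRESHOLDS = {"fight": 0.70, "weapon": 0.50, "theft": 0.60, "scream": 0.65}

@dataclass
class ModalityScores:
    video: dict = field(default_factory=dict)  # label -> probability from CNN-LSTM / YOLO
    audio: dict = field(default_factory=dict)  # label -> probability from the audio model

def fuse(scores, audio_weight=0.3):
    """Late fusion: weighted average when both modalities score a label,
    otherwise the single available score."""
    fused = {}
    for label in set(scores.video) | set(scores.audio):
        v, a = scores.video.get(label), scores.audio.get(label)
        if v is not None and a is not None:
            fused[label] = (1.0 - audio_weight) * v + audio_weight * a
        else:
            fused[label] = v if v is not None else a
    return fused

def decide(scores, thresholds=THRESHOLDS, high=0.9):
    """Return (label, probability, severity) alarms, strongest first."""
    alarms = []
    for label, p in fuse(scores).items():
        if p >= thresholds.get(label, 1.0):  # unknown labels never trigger
            alarms.append((label, p, "high" if p >= high else "elevated"))
    return sorted(alarms, key=lambda alarm: alarm[1], reverse=True)
```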

The robustness of the model across several data splits is validated using cross-validation techniques, including k-fold validation. Real-time testing is also conducted by running the system in a simulation environment where it receives live video and audio streams and processes them, in order to measure performance under real-world conditions.
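The k-fold procedure mentioned above can be sketched as an index generator: the samples are shuffled once, split into k nearly equal folds, and each fold serves as the validation set exactly once. `k_fold_indices` and `cross_validate` are illustrative helpers, not part of the described system; scikit-learn's `KFold` and `StratifiedKFold` provide the same behavior, and the stratified variant is usually preferable for imbalanced emergency data.

```python
import random

def k_fold_indices(n_samples, k=5, seed=42):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    # the first n_samples % k folds get one extra sample
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, val
        start += size

def cross_validate(train_and_score, n_samples, k=5):
    """Average a user-supplied train_and_score(train_idx, val_idx) over all folds."""
    scores = [train_and_score(tr, va) for tr, va in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores), scores
```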

One of the most important aspects of the proposed system is real-time adaptability. The system is capable of working with live surveillance feeds, processing the video and audio streams in real time. The model is periodically retrained with new data to adapt to changing threats and changing patterns of emergencies, so that the detection system stays current and effective in changing environments.

Since the system is to be deployed in various environments, noisy and multilingual data must be handled. Noise reduction algorithms are applied to both audio and video data to eliminate redundant information and concentrate on the prominent features that signal emergencies. Multiple languages in audio and video data are also processed using multilingual models so that the system can be deployed in a wide range of locations.

The approach lays the groundwork for improvements including:

Integration of External Data Sources: Incorporating public safety reports helps to improve detection accuracy and widen the range of emergency scenarios.

User Feedback for Continuous Improvement: Built-in mechanisms allow users to report false alarms or missed detections, enabling the system to improve through ongoing learning.

Multimodal Input Analysis: Extending the system to include more data types, such as sensor data or metadata from surveillance cameras, will help to improve overall detection accuracy.

Scalability: Ensuring the system's capacity to scale to large deployments across metropolitan areas by means of cloud-based infrastructure that effectively manages enormous data flows.

Combining CNN, LSTM, and YOLO for theft and weapon detection gives the proposed approach a complete, scalable, and effective solution for real-time emergency detection in smart surveillance systems.


4.1 User Input and Submission

The system starts with real-time video and audio feeds from the user. These could be live streams, audio, or video feeds from CCTV cameras. The input is flexible: video can be supplied in MP4 or MKV format and audio in WAV or MP3. Streaming data can also be fed in for real-time crisis detection. A secure input channel ensures data privacy, and background validation checks are conducted to confirm the authenticity and timeliness of the input data before processing.
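A hedged sketch of the input checks described above, limited to what can be shown without a real ingestion service: it accepts only the container formats named in the text (MP4/MKV video, WAV/MP3 audio) and rejects inputs older than a freshness limit. The 30-second limit and the `validate_input` name are assumptions, and an extension check alone does not establish authenticity; a real deployment would add measures such as signed streams, which are not shown.

```python
from datetime import datetime, timedelta, timezone
from pathlib import Path

# Formats named in the text; the freshness limit below is an illustrative assumption.
ALLOWED_SUFFIXES = {"video": {".mp4", ".mkv"}, "audio": {".wav", ".mp3"}}

def validate_input(path, captured_at, max_age=timedelta(seconds=30), now=None):
    """Return 'video' or 'audio' for an acceptable file, else raise ValueError."""
    suffix = Path(path).suffix.lower()
    kind = next((k for k, exts in ALLOWED_SUFFIXES.items() if suffix in exts), None)
    if kind is None:
        raise ValueError(f"unsupported format: {suffix or '(none)'}")
    now = now or datetime.now(timezone.utc)
    if now - captured_at > max_age:  # stale input is useless for real-time alerts
        raise ValueError("input is too old for real-time processing")
    return kind
```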

4.2 Preprocessing Stage

The system starts with live audio and video inputs from the users. The input may be video from CCTV cameras, audio, or a live stream. The input is adaptable: video may be supplied in various formats such as MP4 or MKV, and audio in WAV or MP3 format. Streaming data may also be supplied for real-time crisis detection. An encrypted input channel protects the confidentiality of the data, and background verification checks are conducted to confirm the authenticity and timeliness of the input data for processing.

4.3 Feature Extraction and Vectorization

After preprocessing, the system extracts useful features to prepare the data for classification. For video data, spatial features are extracted from individual frames through Convolutional Neural Networks (CNNs). The temporal relationships in the video sequence are then processed through Recurrent Neural Networks (RNNs), enabling the system to recognize events that unfold over time, such as physical confrontations. For audio data, MFCCs are computed to obtain spectral features, which are vectorized for use as input to a machine learning model. All features are in numerical form to enable efficient processing.
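Classical classifiers such as SVMs expect one fixed-length vector per clip, whereas MFCC extraction yields one coefficient vector per frame. A common way to bridge the two, assumed here rather than taken from the paper, is to concatenate the per-coefficient mean and standard deviation over all frames; `summarize_mfcc` is a hypothetical helper name.

```python
from statistics import fmean, pstdev

def summarize_mfcc(frames):
    """Collapse a variable-length sequence of MFCC frames into one fixed-length vector:
    per-coefficient means followed by per-coefficient standard deviations."""
    if not frames:
        raise ValueError("need at least one MFCC frame")
    columns = list(zip(*frames))  # one tuple per coefficient across all frames
    return [fmean(c) for c in columns] + [pstdev(c) for c in columns]
```

With 13 coefficients per frame, every clip becomes a 26-dimensional vector regardless of its duration.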

4.4 Classification with Deep Learning Models

After feature extraction, the features are passed through specialized classifiers. The fight detection module uses CNNs and RNNs to process the video data: the CNN performs spatial feature extraction, while the RNN processes the sequential data so that the system can identify fight dynamics in real time. The scream detection module uses audio classification models (SVM, Logistic Regression, etc.) to identify distress sounds such as screams. The weapon detection module uses the machine learning-based YOLO (You Only Look Once) object detector to identify weapons in video streams in real time.

Fight detection and weapon detection both use a hybrid CNN-RNN structure for video, while a variety of machine learning models, such as SVM, Random Forest, and XGBoost, are trained to reliably identify screams or other distress signals in audio.
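As an illustration of one of the audio classifiers named above, the following sketch trains a logistic regression scream detector with plain stochastic gradient descent on fixed-length feature vectors (for example, MFCC summaries). The learning rate, epoch count, and function names are assumptions for illustration; in practice a library implementation such as scikit-learn's `LogisticRegression` or `SVC` would be used.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic_regression(X, y, lr=0.1, epochs=300):
    """Stochastic gradient descent on the log-loss; y holds 0/1 labels (1 = scream)."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            # gradient of the log-loss w.r.t. the logit is (prediction - label)
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            w = [wj - lr * err * xj for wj, xj in zip(w, xi)]
            b -= lr * err
    return w, b

def scream_probability(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
```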

The retraining and feedback module is designed to improve the system progressively using real-world feedback. When security agents or users report misclassifications, the cases are added to the training dataset. The system uses the reported cases to retrain and update the models, improving their accuracy. In this way, the system can learn new patterns and remain effective at classifying new forms of crises, such as new threats or new ways of carrying weapons.
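One way the feedback loop could be organized is sketched below: corrected predictions reported by operators are buffered, and retraining is signaled once enough misclassifications have accumulated. The `FeedbackBuffer` class and its batch size of 100 are hypothetical; the retraining step itself, which would append the drained cases to the training set and refit the models, is not shown.

```python
from dataclasses import dataclass, field

@dataclass
class FeedbackBuffer:
    """Buffers operator-reported misclassifications until a retraining batch is ready."""
    retrain_after: int = 100  # hypothetical batch size
    pending: list = field(default_factory=list)

    def report(self, sample_id, predicted, corrected):
        """Record a correction; return True when enough cases have accumulated."""
        if predicted == corrected:
            return False  # the prediction was right, so there is nothing new to learn
        self.pending.append((sample_id, corrected))
        return len(self.pending) >= self.retrain_after

    def drain(self):
        """Hand the buffered (sample_id, corrected_label) pairs to the retraining job."""
        batch, self.pending = self.pending, []
        return batch
```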

After classification, the system sends real-time notifications to the responsible authorities. The notifications include the classification outputs (such as "Fight Detected," "Weapon Detected," and "Scream Detected") and related data, such as location, time of occurrence, and confidence levels. To promote openness and user trust, the system can provide a brief description for each classification, highlighting the characteristics or patterns in the video or audio that led to the classification output.

This feature allows security personnel to understand the cause behind the system's notification and prioritize their actions accordingly.
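The notification contents listed above can be serialized into a single message. The sketch below assumes JSON as the transport format; the field names and the `build_alert` helper are illustrative, not a schema defined by the system.

```python
import json
from datetime import datetime, timezone

def build_alert(label, confidence, location, explanation, when=None):
    """Serialize one detection as a JSON alert (illustrative field names)."""
    when = when or datetime.now(timezone.utc)
    payload = {
        "event": f"{label.title()} Detected",  # e.g. "Weapon Detected"
        "confidence": round(confidence, 3),
        "location": location,
        "timestamp": when.isoformat(),
        "explanation": explanation,  # short, human-readable evidence for the operator
    }
    return json.dumps(payload, sort_keys=True)
```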

To support real-time performance, the system takes advantage of multiple optimization techniques. It applies GPU- or TPU-based acceleration so that deep models can compute video frames and audio features in parallel. Batch processing is used to handle multiple streams or footage inputs, reducing per-frame overhead and increasing throughput. The system leverages cloud-based infrastructure to offload workloads, providing scalability and fault tolerance, especially in high-traffic applications. Caching technologies store preprocessed information, and models are optimized for low-latency processing, keeping delays in crisis detection minimal.
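Batching across streams can be sketched as a round-robin merge of camera feeds followed by fixed-size micro-batches that a GPU model could consume in one call. The helper names and the default batch size of 8 are assumptions. Batching mainly raises throughput; each frame may wait until its batch fills, so the batch size trades a little latency for efficiency.

```python
from itertools import islice

def interleave(streams):
    """Round-robin (stream_id, frame) pairs from several camera streams."""
    iterators = {sid: iter(frames) for sid, frames in streams.items()}
    while iterators:
        for sid in list(iterators):
            try:
                yield sid, next(iterators[sid])
            except StopIteration:
                del iterators[sid]  # this camera has no more frames

def micro_batches(items, batch_size=8):
    """Group an iterable into lists of at most batch_size items."""
    it = iter(items)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch
```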

4.8 The Proposed System's Benefits

The system provides a number of significant benefits:

Real-time Detection: CNN, RNN, YOLO, and other classifiers are used to detect emergencies such as fights, screams, and the use of weapons with high accuracy and speed.

Multi-modal Input: By combining audio and video analysis, the system offers a more thorough method of crisis detection.

Continuous Improvement: Based on actual usage, the feedback and retraining module allows the system to evolve and improve over time.

User Transparency: Giving security personnel confidence scores and alert explanations fosters trust and helps them make well-informed decisions.

Scalability and Performance: Cloud-based infrastructure and performance optimization strategies support the system's scalability and dependability in high-demand situations.

4.9 Difficulties and Remedial Action

Despite its advantages, some difficulties could arise:

Data Imbalance: Emergency and non-emergency events may be unevenly represented in the dataset. Synthetic data generation, undersampling, or oversampling can all help to address this.

Model Generalization: The system has to perform effectively across many crisis situations and surroundings. This risk can be reduced by using a varied training set that includes data from different situations.

Computational Load: Real-time processing of video and audio could tax resources because of its complexity. This can be mitigated by preprocessing on edge devices and running intensive computations on cloud-based infrastructure.

Environmental Variability: The system has to perform under different noise and lighting conditions. Training on many data sources, dataset augmentation, and ongoing feedback help to reduce this problem.
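Random oversampling, one of the remedies listed for data imbalance, can be sketched in a few lines: minority-class samples are duplicated at random until every class matches the majority count. The `random_oversample` helper is hypothetical; it should be applied only to the training portion of each split so that duplicated samples do not leak into validation data.

```python
import random
from collections import Counter

def random_oversample(samples, labels, seed=0):
    """Duplicate minority-class samples at random until all classes match the majority count."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    by_class = {}
    for s, y in zip(samples, labels):
        by_class.setdefault(y, []).append(s)
    out_x, out_y = list(samples), list(labels)  # originals first, duplicates appended
    for y, members in by_class.items():
        for _ in range(target - counts[y]):
            out_x.append(rng.choice(members))
            out_y.append(y)
    return out_x, out_y
```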

Expanding the datasets to improve model robustness is among the most crucial areas for further work on the system. Including more varied surroundings, e.g., urban, rural, or even geographies with diverse cultural behaviors, will make the system better at detecting real-world emergency conditions in diverse environments. Including data from diverse camera angles, lighting conditions, and resolutions will make the system more robust to real-world variations. Growing the audio datasets to include more diverse sounds, e.g., various distress calls, alarms, or ambient sounds, will make real-world detection more accurate.

5.2

The system can be enhanced to incorporate real-time crisis detection capability, where video and audio inputs are processed as they are being recorded. For example, if a weapon or a fight is detected in a public place, the system would be able to alert security guards and authorities immediately. With optimized low-latency streaming technology and machine learning algorithms, this feature could significantly reduce emergency response times, allowing authorities to act before the crisis spirals out of control. Integrating the system into current public safety infrastructure (e.g., police, fire brigades, emergency medical services) would make communication seamless and improve coordination during a crisis. The system would then become a proactive, real-time crisis management system.

To improve the accuracy of the detection models, ensemble learning techniques can be employed. By combining multiple models such as CNN, RNN, and SVM, ensemble techniques can improve detection by drawing on the strengths of each model. Techniques like bagging, boosting, and stacking can make the system more robust against many forms of data inconsistency and may also reduce its sensitivity to adversarial attacks. Ensembles may also improve performance when the system faces varying levels of violence or distress signals, yielding a system that better discriminates between emergencies and non-emergencies.
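The simplest way to combine several models is soft voting, sketched below: each model's class probabilities are averaged, optionally with weights, and the class with the highest fused probability wins. This is a simpler stand-in for illustration, not bagging, boosting, or stacking as named in the text, and `soft_vote` is a hypothetical helper.

```python
def soft_vote(prob_dicts, weights=None):
    """Weighted average of per-model class-probability dicts.
    Returns (winning_label, fused_probabilities)."""
    weights = weights or [1.0] * len(prob_dicts)
    total = sum(weights)
    labels = set().union(*prob_dicts)  # every class seen by any model
    fused = {lab: sum(w * p.get(lab, 0.0) for w, p in zip(weights, prob_dicts)) / total
             for lab in labels}
    return max(fused, key=fused.get), fused
```

Weights let a model that validates better on held-out data count for more; they would themselves be tuned by cross-validation.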

Multi-modal analysis can also improve the system's performance. Combining audio and visual data can improve the accuracy of detecting some crisis situations, for example by identifying the sound of breaking glass in addition to detecting movement or a weapon. Also, cross-validation using data from multiple sources (e.g., CCTV cameras, drones, body-worn cameras) may provide a better interpretation of the context, making the system better able to discriminate between false alarms and real emergencies.


Because the system makes high-stakes decisions, transparency and explainability are critical. Future versions of the system can incorporate visualization capabilities that show the reasoning behind the system's decision to trigger an alarm for a potential emergency. For instance, highlighting the movement pattern that led to the detection of a fight, or the exact audio frequencies of a scream, would allow security personnel to better understand and respond to the alarms. Incorporating SHAP (SHapley Additive exPlanations) values or LIME (Local Interpretable Model-Agnostic Explanations) could also reveal more about how the model arrived at its decisions, enhancing transparency and trust in the system.
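As a lightweight stand-in for SHAP or LIME, which are not reproduced here, occlusion analysis conveys the same idea: replace one segment of the input (for example, one frame's features) with a neutral baseline and measure how much the alarm score drops. The `occlusion_importance` helper and the zero baseline are assumptions; the segments with the largest drops are the ones an operator would be shown.

```python
def occlusion_importance(score_fn, frames, baseline=0.0):
    """Score drop when each frame's features are replaced by a constant baseline.
    score_fn maps a list of feature vectors to a single alarm score."""
    base = score_fn(frames)
    importances = []
    for i in range(len(frames)):
        occluded = [list(f) for f in frames]        # copy so the input is untouched
        occluded[i] = [baseline] * len(frames[i])   # blank out one frame
        importances.append(base - score_fn(occluded))
    return importances
```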

The system can be integrated into emergency response systems for direct communication with law enforcement, medical services, or even public safety apps. Integration with social media platforms, like Twitter or Facebook, would allow the system to scan user-generated content and detect potential emergencies posted online. For instance, if a user posts a live video of a crisis, the system could detect distress signals and alert the concerned authorities in real time. Such integration would not only expand the scope of the system but also provide a better view of the situation, including social media sentiment.

5.7

To ensure global scalability, lightweight versions of the system can be developed to run on lower-resource devices, such as smartphones or embedded systems. This would make the system accessible in regions with limited infrastructure, allowing it to be deployed in various parts of the world without the need for expensive hardware. A scalable system will be essential for widespread adoption in smart cities, industrial complexes, and even residential areas, where surveillance infrastructure may vary.


AI-based behavior and sentiment analysis can add further capabilities to the detection of emergency conditions. Pattern analysis of individual and crowd behavior (e.g., sudden movement, noise, sudden congregation) can help the system detect impending crises even before they come into full effect. Sentiment analysis of real-time audio and video streams can further enable the detection of signs of distress or aggression, allowing conflicts or threats to be detected at an early stage.

5.10 Ethical and Privacy Considerations

Finally, because surveillance systems raise privacy concerns, the system must be ethically sound and compliant with local privacy laws. Future releases of the system must include privacy-preserving technologies, e.g., data anonymization or federated learning, to ensure that individual rights are protected while crises can still be identified and responded to. An ethical framework for data usage will make the system reliable and compliant with societal expectations.

With the deployment of these innovations, the Smart Surveillance and Crisis Detection project has the potential to become an end-to-end, scalable, and effective tool for security enhancement and crisis management globally. These innovations would not only enhance the technical capabilities of the system but also provide a safer and more transparent environment, with the ability to transform crisis detection and management in the digital age.


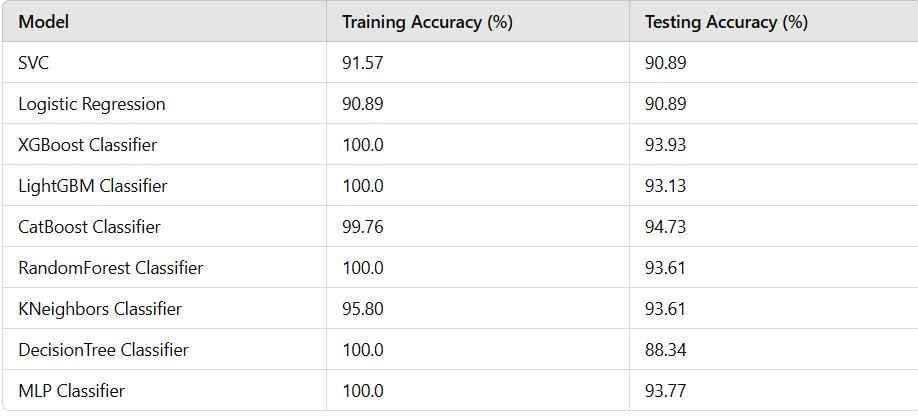

6.1 Scream Detection:

Table 1: Scream Detection Results


7 COMPARISON TABLE

Table 2: Comparison with Previous Work

| Improvements | Existing Work |
|---|---|
| Weapon detection done with YOLO with real-time processing. | Weapon detection was mainly focused on R-CNN-based approaches. |
| Combined audio and video for integrated decision-making. | Most previous works focused on either video or audio. |
| Deployed the models in real time. | Many prior studies were research-based. |

This project demonstrates the capability of machine learning algorithms, namely Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), and Support Vector Machines (SVM), in developing an end-to-end Smart Surveillance System and Crisis Detection tool. Using both video and audio inputs processed by advanced deep learning models, the system can efficiently detect crises such as fights, weapon usage, and distress calls, offering a proactive solution to improve safety in dangerous environments. The use of multiple models supports high accuracy and robustness across different types of crisis situations, and the real-time monitoring and alerting features add to the system's usefulness in responding to critical situations as they happen.

The flexibility of the system allows it to be used in a wide range of applications, from urban surveillance to industrial and residential security, where timely detection and intervention can significantly reduce risks. Moreover, the system's ability to scale up for global deployment, including environments with different technological infrastructure, supports its widespread adoption. With the addition of real-time data streaming, ensemble learning techniques, and behavior analysis, the system could not only detect crises but also predict them and prevent them from escalating.
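Real-time streaming means alert decisions are made frame by frame, and a single noisy frame should not raise an alarm on its own. One common way to handle this, offered here as an assumption rather than as this project's method, is a k-of-n persistence rule: alert only when at least k of the last n frame scores exceed a threshold. The `KOfNTrigger` class, the `stream_alerts` helper and the defaults k=3, n=5, threshold=0.5 are hypothetical.

```python
from collections import deque
from collections.abc import Iterable, Iterator


class KOfNTrigger:
    """Fire only when at least k of the last n frame scores exceed a threshold.

    k, n and threshold are illustrative parameters, not values from this work.
    """

    def __init__(self, k: int = 3, n: int = 5, threshold: float = 0.5) -> None:
        if not 1 <= k <= n:
            raise ValueError("require 1 <= k <= n")
        self.k = k
        self.threshold = threshold
        # Fixed-length window: appending beyond n drops the oldest frame.
        self.window: deque[bool] = deque(maxlen=n)

    def update(self, score: float) -> bool:
        """Push one frame's score and report whether the k-of-n rule holds."""
        self.window.append(score >= self.threshold)
        return sum(self.window) >= self.k


def stream_alerts(scores: Iterable[float], trigger: KOfNTrigger) -> Iterator[int]:
    """Yield the indices of frames at which the trigger fires."""
    for i, score in enumerate(scores):
        if trigger.update(score):
            yield i


if __name__ == "__main__":
    frame_scores = [0.9, 0.1, 0.8, 0.7, 0.2, 0.1, 0.1, 0.1]
    print(list(stream_alerts(frame_scores, KOfNTrigger())))
```

For the sample scores, the trigger fires at frames 3 and 4, when three suspicious frames fall inside the five-frame window, and stays quiet once they age out. The trigger is stateful, so each camera or audio stream would need its own instance.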

The project also addresses key challenges in privacy and data ethics, adopting privacy-preserving approaches such as anonymization and compliance with local laws. The transparency and explainability of the system, including SHAP values that explain model decisions, increase user confidence and understanding, which is crucial for mass adoption in real-world applications.
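SHAP attributions are based on Shapley values from cooperative game theory. The `shap` library uses approximations such as Kernel SHAP and Tree SHAP, but for a small decision model over a few detector scores the values can be computed exactly by enumerating every coalition of inputs. The sketch below does that. The `shapley_values` function and its single-baseline value function (inputs outside a coalition are replaced by a fixed baseline) are assumptions for illustration, not this project's explainability code, and the cost grows as 2^n in the number of inputs.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> list[float]:
    """Exact Shapley value of each input of `model` at point `x`.

    Value function (an assumption): inputs in a coalition keep their value
    from `x`; all other inputs are replaced by `baseline`.
    """
    n = len(x)

    def value(coalition: frozenset) -> float:
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other inputs
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of input i when added to coalition S
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


if __name__ == "__main__":
    # Hypothetical fused score: 0.6 * video confidence + 0.4 * audio confidence
    fused = lambda z: 0.6 * z[0] + 0.4 * z[1]
    print(shapley_values(fused, x=[0.4, 0.9], baseline=[0.0, 0.0]))
```

For a linear model, each value reduces to w_i (x_i - b_i), and in general the values sum to model(x) - model(baseline), so the attribution accounts exactly for how far a given alert score sits above the baseline.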

In summary, the project aims to provide a robust, scalable, and ethical solution to crisis identification in the physical and virtual worlds. It is a valuable tool for enhancing public safety through AI technology, with continuing potential for improvement through the integration of more sophisticated algorithms and additional data sources. As the system matures, its impact could extend beyond emergency management to social welfare, urban planning, and public health, making societies safer and better informed worldwide.

[1]. D. Moreira, S. Avila, M. Perez, V. Testoni, E. Valle, S. Goldenstein, and A. Rocha, "Temporal Robust Features for Violence Detection," Proc. MediaEval Violent Scenes Detection (VSD) Task Dataset, 2019.

[2]. J. C. Vieira, A. Sartori, S. F. Stefenon, F. L. Perez, G. S. de Jesus, and V. R. Quietinho Leithardt, "Low-cost CNN for automatic violence recognition on embedded systems," Proc. International Conference on Real-Time Embedded Systems, 2021.

[3]. M. Perez, A. C. Kot, and A. Rocha, "Detection of real-world fights in surveillance videos," Journal of Advanced Machine Learning, vol. 32, no. 4, pp. 123-134, 2020.

[4]. S. Narejo, B. Pandey, D. Esenarro, C. Rodriguez, and M. R. Anjum, "Weapon detection using YOLOv3 for smart surveillance systems," IEEE Transactions on Security Systems, vol. 12, no. 1, pp. 88-99, 2021.

[5]. D. Siri, P. B. Reddy, K. V. S. L. Harika, S. Ritwika, S. Sisodia, and K. Madhavi, "Automated weapon detection system in CCTV’s through image processing," IEEE Transactions on Image Processing, vol. 17, no. 3, pp. 240-252, 2022.

[6]. S. K. Suganya, P. A. Ranjani, S. A. Saktheswari, and R. Seethai, "Weapon detection using machine learning algorithms," IEEE Transactions on Surveillance Technologies, vol. 23, no. 6, pp. 98-110, 2022.

[7]. J. Arora, A. Bangroo, and S. Garg, "Theft detection and monitoring system using machine learning," International Journal of Video Surveillance Systems, vol. 18, no. 3, pp. 112-124, 2021.

[8]. R. Mandal and N. Choudhury, "Snatch theft detection using deep learning models," Journal of Video Surveillance and Security, vol. 15, no. 4, pp. 134-145, 2021.

[9]. Y. Reddy, S. Reddy, and S. R. Reddy, "AI-based automatic robbery/theft detection using smart surveillance," International Journal of AI in Security Systems, vol. 20, no. 5, pp. 45-59, 2022.

[10]. Y. Arslan, "A new approach to real-time impulsive sound detection for surveillance," Proc. DCASE 2017 Task-2, 2017.

[11]. A. A. Pratama, S. S. Sukaridhoto, et al., "Design of audio-based accident and crime detection," IEEE Transactions on Audio and Speech Processing, vol. 22, no. 6, pp. 210-223, 2021.

[12]. A. Ojha and P. K. Venkateswar, "Human scream detection for controlling crime rate using GMM and HMM," International Journal of Speech Processing, vol. 13, no. 4, pp. 56-70, 2021.

[13]. P. K. Venkateswar Lal and C. S. Sowmya, "Real-time human scream detection and analysis for controlling crime rate," Journal of Real-Time Signal Processing, vol. 19, no. 7, pp. 121-130, 2022.

[14]. J. Gao, Z. Liu, and S. Chen, "Automatic scream detection and classification using deep learning," IEEE Transactions on Audio and Speech Processing, vol. 27, no. 8, pp. 244-256, 2023.