International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 06 | Jun 2025 www.irjet.net p-ISSN: 2395-0072

KM. Anjali Kushwaha1, Deepshikha2

1Master of Technology, Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

2Assistant Professor, Department of Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

Abstract - The proliferation of heterogeneous data in contemporary applications has exposed the limitations of single-model database systems. Conventional relational databases are strong at handling structured data and preserving transactional integrity, but they struggle with semi-structured formats and complex relationships. Document and graph databases, on the other hand, are flexible and support relationship traversal, but they offer weaker consistency guarantees and no unified query interface. This research proposes a unified framework that natively supports the three most commonly used data models (relational, document, and graph) on a common storage and query processing engine. The framework combines schema mapping techniques, dynamic indexing, and a machine learning-based query optimizer to improve the efficiency and consistency of hybrid workloads.

A new query interface lets users traverse, filter, and join across the different data models without switching context or manually converting data. Experiments on industry-standard benchmarks (TPC-H, LDBC SNB) and a non-trivial real-world fraud detection case study show a 35-40% reduction in latency and up to a 45% improvement in throughput relative to two popular multi-model databases, ArangoDB and OrientDB. The framework provides flexible schema enforcement, hybrid sharding, and fault-tolerant distributed execution, and it is applicable to applications in the finance, IoT, healthcare, and e-commerce domains. This paper shows that a holistic multi-model database is feasible and can reduce the complexity of data infrastructure while improving its performance and scalability in real-world use.

Key Words: Multi-model databases, unified query processing, relational data, document stores, graph traversal, hybrid indexing, schema mapping, distributed databases.

In a world driven by data-driven decision-making, data has become more diverse, complex, and voluminous. Applications are no longer restricted to processing structured tabular data; they must also handle semi-structured documents, interrelated objects, and rapidly changing data streams. This shift in data characteristics has challenged the traditional dominance of single-model databases and drawn attention to multi-model databases, which can handle several data paradigms within a single system. This paper explores the architectural and performance issues of combining the relational, document, and graph models into one coherent multi-model database system, and it proposes a solution that fills the gaps left by existing systems.

It is increasingly evident that modern applications in social media, fraud detection, healthcare, and the Internet of Things (IoT) must use a combination of data models. For example, a social media platform uses relational data to track user activity, documents to store user-generated content, and graph models to represent relationships between users. Similarly, a fraud detection system needs structured transaction data (relational), behavioral logs (documents), and connection networks (graphs) to detect sophisticated fraud patterns. Keeping these data types in separate, siloed systems creates overhead and complexity. As applications grow in size and functionality, a unified data modeling approach becomes not merely desirable but necessary. The motivation for this study is to support such dynamic application requirements with a single, capable, and adaptable framework: a multi-model database.

Despite the growing need to process diverse data types, most organizations continue to use a mix of specialized databases, each designed to process a particular type of data. This fragmented practice leads to several operational inefficiencies. Data redundancy is common because the same data must be replicated across systems to satisfy different analytical and transactional demands. The need to transform and transfer data between incompatible systems increases latency. In addition, querying multiple systems requires separate pipelines, which leads to inconsistent performance and high maintenance overhead. Application development also becomes more difficult because developers must learn several query languages and tools. Together, these problems limit the agility, scalability, and responsiveness of data-intensive applications. There is therefore an urgent need for a coordinated solution that eliminates these inefficiencies by supporting multiple data models in a single system.

The key goal of this project is to model, test, and evaluate a coherent architecture that processes relational, document, and graph data in a common database engine. This goal includes several more specific objectives: first, to conceptualize and generalize the core operations of each data model and determine which of them can be unified and combined within a common execution framework; second, to maximize performance across models through techniques such as adaptive indexing and machine learning-based query planning; and third, to enable systematic cross-model querying through a common query interface that hides the complexity of the underlying data structures. By achieving these goals, the research aims to close the current gap between flexibility and efficiency in multi-model database management.

This research makes several contributions to the area of database systems. First, it presents a new storage and query architecture that natively integrates relational, document, and graph data, so that these models do not require middleware or external integration. Second, it introduces an AI-enhanced query management layer that optimizes query planning, indexing, and execution plans across models by learning workload patterns. Third, it introduces a unified query interface and schema mapping approach that lets users write complex cross-model queries in a single, expressive syntax drawing on elements of SQL, JSON, and graph traversal languages. Finally, the proposed system is rigorously benchmarked against modern multi-model databases such as ArangoDB and OrientDB, demonstrating advantages in latency, throughput, scalability, and accuracy for real-life applications such as fraud detection and IoT data processing. Collectively, these contributions advance multi-model database research and provide a concrete basis for next-generation data management solutions.

Modern data-intensive applications have introduced great diversity in data formats and access requests, forcing researchers and engineers to look beyond a single data model to support these varied workloads. This section describes the underlying data models (relational, document, and graph) and explains the strengths and weaknesses of each. It then discusses current multi-model database systems and their architectural strategies. Finally, it identifies the main technical issues in integrating these models and highlights gaps in research and practice.

Relational databases are well established in data management; they form the core of transactional systems that require consistency, integrity, and rich querying capabilities. Grounded in relational algebra, they store information in structured tables with rigid schemas and guarantee ACID (Atomicity, Consistency, Isolation, Durability) properties. They are well suited to join operations and powerful SQL-based analytical queries. However, relational systems handle hierarchical or unstructured data formats such as JSON or XML poorly, requiring awkward transformations and strict schema definitions. As applications demanded more flexible data structures and faster development cycles, document databases such as MongoDB emerged. They store semi-structured data in formats such as JSON or BSON, allow schemas to evolve, and suit agile development. Their ability to nest data structures makes them well suited to content management and web applications. However, document stores tend to lack native support for complex joins and relationships, which can result in slower queries and more complex application logic.

Graph databases, such as Neo4j, address the need to manipulate relationships between entities efficiently. By representing data as nodes and edges, they provide an intuitive model for social networks, fraud networks, and recommendation systems. Queries that follow deep relationships or search for a path to a target node can be executed very quickly in languages such as Cypher or Gremlin. However, graph databases perform poorly on typical tabular operations such as aggregations and group-bys, and their integration with traditional structured transactional systems is also limited. Each of these models has its own advantages and disadvantages, so a system design must balance flexibility, performance, and scalability.

To address the shortcomings of single data models, several multi-model database systems have been developed, each with a different architectural philosophy. ArangoDB, for example, combines a unified storage engine with relational-style querying over both document and graph data. It uses JSON-like documents as its underlying storage format and represents graph edges as documents stored in dedicated edge collections. Its own query language, AQL (ArangoDB Query Language), offers a single syntax for working with different data models and simplifies developers' work. Nevertheless, performance trade-offs arise between high-volume graph traversals on the one hand and deep document aggregations on the other, especially in distributed environments.

OrientDB is a hybrid engine that supports the document and graph data models and extends SQL grammar with graph elements. It can store edges embedded in documents, which makes it well suited to lightweight relationship modeling. Although its architecture allows flexible schema design and intuitive modeling, OrientDB can consume a large amount of memory during large-scale graph operations because its storage layers are not separated. In addition, mixing relational-like and graph functionality causes overhead in mixed workloads.

Combining all three models (relational, document, and graph) in one database system raises many technical problems that must be resolved for a successful implementation. Query translation is one of the major challenges. Because these models follow different logical paradigms (relational algebra, document filtering, and graph traversal), creating a query interface in which users can write complex cross-model logic without compromising performance is not trivial. SQL joins can be translated into either document aggregations or graph traversals, but for nested or multi-hop data these translations typically lead to inefficient query execution and high latency.

Schema mapping is another major challenge. Relational databases apply strict schemas at write time, whereas document and graph databases tend to follow a schema-on-read design. These conflicting philosophies must be reconciled without losing control over data integrity or developer agility, which requires dynamic schema translation mechanisms. For example, embedding relational references in JSON documents, or enforcing foreign key constraints on graph edges, creates complex problems for both data modeling and validation.
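To make the foreign-key-to-edge mapping concrete, the following Python sketch (hypothetical data and helper names, not the framework's actual code) turns foreign-key references into directed edges, validates them the way a foreign key constraint would, and checks that a one-hop traversal returns the same pairs as the equivalent relational join. The `_from`/`_to` attribute names are borrowed from ArangoDB's edge convention purely for illustration.

```python
# Hypothetical relational rows: each order references a customer via a foreign key.
customers = [{"id": 1, "name": "Asha"}, {"id": 2, "name": "Ravi"}]
orders = [
    {"id": 10, "customer_id": 1, "amount": 250.0},
    {"id": 11, "customer_id": 1, "amount": 90.0},
    {"id": 12, "customer_id": 2, "amount": 40.0},
]


def fk_to_edges(rows, fk_field, src_coll, dst_coll, label):
    """Map each foreign-key reference to a directed edge (source row -> referenced row)."""
    return [
        {"_from": f"{src_coll}/{row['id']}", "_to": f"{dst_coll}/{row[fk_field]}", "label": label}
        for row in rows
    ]


def validate_edges(edges, existing_ids):
    """Reject edges whose target vertex does not exist, as a foreign key constraint would."""
    dangling = [e for e in edges if e["_to"] not in existing_ids]
    if dangling:
        raise ValueError(f"dangling references: {dangling}")


edges = fk_to_edges(orders, "customer_id", "orders", "customers", "PLACED_BY")
customer_ids = {f"customers/{c['id']}" for c in customers}
validate_edges(edges, customer_ids)

# Relational view: join orders to customers on customer_id.
join_result = sorted(
    (c["name"], o["id"]) for o in orders for c in customers if o["customer_id"] == c["id"]
)

# Graph view: follow each PLACED_BY edge one hop from the order to its customer.
names = {f"customers/{c['id']}": c["name"] for c in customers}
traversal_result = sorted((names[e["_to"]], int(e["_from"].split("/")[1])) for e in edges)

print(join_result == traversal_result)  # True: a one-hop traversal reproduces the join
```

The equivalence only holds for single-hop references; multi-hop or nested references are exactly where, as noted above, naive translations become inefficient.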

Indexing strategies also differ considerably between models. Relational systems rely on B-trees and hash indexes for efficient lookups and joins, document stores usually use inverted or composite indexes, and graph databases rely on adjacency lists and traversal-specific data structures. The challenge is to build a unified indexing system that can optimize cross-model queries while balancing storage cost and update performance.
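The sketch below illustrates, under simplifying assumptions, how the three index families could sit side by side for one cross-model filter: a hash index for equality, a sorted list searched with `bisect` as a stand-in for a B-tree range index, an inverted index over document tags, and an adjacency list for traversal. All data and names are hypothetical.

```python
import bisect
from collections import defaultdict

# Hypothetical data for the same entities stored under three models.
rows = [(1, "IN", 500), (2, "US", 120), (3, "IN", 75)]          # relational: (id, country, amount)
docs = {1: {"tags": ["mobile", "vpn"]}, 2: {"tags": ["web"]}, 3: {"tags": ["mobile"]}}
edges = [(1, 2), (1, 3), (3, 2)]                                  # graph: (src, dst)

# Hash index: equality lookups on a relational column.
hash_index = defaultdict(list)
for row_id, country, _ in rows:
    hash_index[country].append(row_id)

# Sorted index searched with binary search: a simple stand-in for a B-tree range index.
sorted_amounts = sorted((amount, row_id) for row_id, _, amount in rows)
amount_keys = [amount for amount, _ in sorted_amounts]


def amount_range(lo, hi):
    """Row ids with lo <= amount <= hi, found via bisect instead of a full scan."""
    start = bisect.bisect_left(amount_keys, lo)
    end = bisect.bisect_right(amount_keys, hi)
    return [row_id for _, row_id in sorted_amounts[start:end]]


# Inverted index: document tag -> ids of documents containing it.
inverted_index = defaultdict(set)
for doc_id, doc in docs.items():
    for tag in doc["tags"]:
        inverted_index[tag].add(doc_id)

# Adjacency list: vertex -> outgoing neighbours, for one-hop traversal.
adjacency = defaultdict(list)
for src, dst in edges:
    adjacency[src].append(dst)

# Cross-model query: rows in "IN" with amount in [100, 1000], tagged "mobile", plus their neighbours.
candidates = set(hash_index["IN"]) & set(amount_range(100, 1000)) & inverted_index["mobile"]
neighbours = {c: adjacency[c] for c in sorted(candidates)}
print(neighbours)  # {1: [2, 3]}
```

Every insert must now update four structures, which is the storage and update cost the paragraph above refers to.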

Although multi-model database systems are already available, existing solutions do not take full advantage of unified data management. Most existing databases either provide flexibility at the cost of performance or support hybrid querying at the expense of consistency and scalability. A significant limitation is the lack of adaptive optimization mechanisms that can adjust query plans for heterogeneous data types. Conventional optimizers tend to be static and do not exploit query workload patterns, which results in suboptimal performance for hybrid queries that span documents, tables, and graph structures.

Moreover, there are no uniform benchmarks or evaluation mechanisms for measuring the performance of multi-model databases under realistic mixed workloads. Consequently, system designers lack an empirical basis for making architectural decisions or comparing alternatives. In addition, most available systems still require developers to work with several query syntaxes and data access procedures, which increases cognitive load and reduces productivity.

Scalability is also a bottleneck, especially in distributed settings, where sharding must respect the needs of each model: partitioning documents, preserving locality in graphs, and maintaining relational consistency. Without smart data placement and coordination strategies, cross-shard operations are costly and suffer high latency. There is therefore a clear research direction in creating a high-performance, adaptive, and scalable multi-model database framework that provides a unified query interface, machine learning-based query optimization, and consistent distributed execution across all data models.

The proposed system architecture provides a foundation for unifying the relational, document, and graph data models within a single high-performance database. It integrates storage, query processing, language design, and distributed infrastructure to address the main challenges of consistency, flexibility, and performance. By using model-aware storage formats, an intelligent query engine, and a scalable, fault-tolerant design, the framework executes hybrid workloads effectively and efficiently without separate systems or data silos.

The basic design of this architecture is motivated by the need to eliminate the shortcomings of polyglot persistence, in which separate systems manage each data model, and to replace it with a flexible, unified environment. Flexibility means supporting a wide range of data structures (tabular, nested, and graph-connected) without imposing a strict schema on every use. Performance is key, particularly for cross-model queries, which tend to suffer latency because of incompatible data formats and duplicated query planning. Lastly, consistency must be handled carefully to balance the strong guarantees of relational systems (ACID) with the eventual consistency (BASE) common in document and graph stores. Flexibility, performance, and consistency are the three guiding principles behind the architectural decisions made throughout the system.

At the core of the framework is a multi-model storage engine with native support for relational, document, and graph data structures. Each model uses an optimized, purpose-built format that improves both storage efficiency and processing speed.

For relational data, the system adopts Protocol Buffers (Protobuf), a compact, schema-enforcing binary format developed by Google. Protobuf enables efficient serialization and strong typing while minimizing storage overhead, which makes it suitable for transactional workloads with well-defined structures, such as order processing or inventory management.
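As a rough illustration of why a schema-enforced binary row format is compact, the sketch below uses Python's standard `struct` module as a stand-in for Protobuf. Protobuf itself uses tagged, variable-length fields and generated classes, so this only shows the general idea of a fixed, typed row layout; the order schema is hypothetical.

```python
import json
import struct

# Hypothetical fixed schema for an order row: id (uint32), quantity (uint16), price (float64).
# "<" selects little-endian byte order with no alignment padding.
ORDER_FORMAT = "<IHd"
ORDER_SIZE = struct.calcsize(ORDER_FORMAT)  # 4 + 2 + 8 = 14 bytes


def encode_order(order_id: int, quantity: int, price: float) -> bytes:
    """Pack a row into a fixed binary layout; struct.error is raised for out-of-range integers."""
    if not isinstance(order_id, int) or not isinstance(quantity, int):
        raise TypeError("order_id and quantity must be integers")
    return struct.pack(ORDER_FORMAT, order_id, quantity, float(price))


def decode_order(blob: bytes) -> tuple:
    """Unpack a row; the schema (format string) is required to interpret the bytes."""
    return struct.unpack(ORDER_FORMAT, blob)


blob = encode_order(1001, 3, 19.99)
as_json = json.dumps({"id": 1001, "quantity": 3, "price": 19.99}).encode()
print(len(blob), len(as_json))  # the binary row is much smaller than the JSON text
print(decode_order(blob))       # (1001, 3, 19.99)
```

The saving comes from storing field names and types once in the schema rather than in every row, which is the same trade-off Protobuf makes.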

Table-1: Storage Formats by Data Model.

| Data Model | Storage Format | Use Case | Key Advantage |
|---|---|---|---|
| Relational | Protocol Buffers | Transactions, tabular reporting | Schema enforcement, low latency |
| Document | BSON | Logs, profiles, CMS | Schema flexibility, fast parsing |
| Graph | Adjacency Lists | Social/fraud networks, ontologies | Efficient traversals, edge metadata |

The central feature of the architecture is the Query Processing Layer, a single component that handles the parsing, planning, and optimization of queries regardless of which data models they touch. At the front end, a single parser interprets queries that mix SQL-like querying, JSONPath expressions, and graph traversals. Rather than executing each model's portion as an independent plan, the parser normalizes every query into an intermediate form that captures its intent without being tied to any particular data type.
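A minimal sketch of what such a model-agnostic intermediate form might look like, assuming a small set of operator nodes (`Scan`, `Filter`, `Traverse`, `Join`) chosen for illustration; the paper does not specify the framework's actual intermediate representation.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Scan:
    source: str  # table, collection, or graph name
    model: str   # "relational" | "document" | "graph"


@dataclass(frozen=True)
class Filter:
    child: "Node"
    predicate: str  # kept as text here; a real IR would hold a parsed expression


@dataclass(frozen=True)
class Traverse:
    child: "Node"
    edge: str
    max_hops: int


@dataclass(frozen=True)
class Join:
    left: "Node"
    right: "Node"
    on: str


Node = Union[Scan, Filter, Traverse, Join]


def explain(node: Node, depth: int = 0) -> list[str]:
    """Render the operator tree, one indented line per node."""
    pad = "  " * depth
    if isinstance(node, Scan):
        return [f"{pad}Scan({node.model}:{node.source})"]
    if isinstance(node, Filter):
        return [f"{pad}Filter[{node.predicate}]"] + explain(node.child, depth + 1)
    if isinstance(node, Traverse):
        return [f"{pad}Traverse[{node.edge}, <= {node.max_hops} hops]"] + explain(node.child, depth + 1)
    return [f"{pad}Join[{node.on}]"] + explain(node.left, depth + 1) + explain(node.right, depth + 1)


# The same node types describe relational, document, and graph steps of one query.
plan = Join(
    left=Filter(Scan("transactions", "relational"), "amount > 1000"),
    right=Traverse(Filter(Scan("sessions", "document"), "$.device.os == 'android'"), "KNOWS", 2),
    on="user_id",
)
print("\n".join(explain(plan)))
```

Because the nodes are frozen dataclasses, they are hashable, so structurally identical plans could serve as cache keys, for example when recording execution statistics per plan.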

With a machine learning-based optimizer, the query planner examines execution histories and system telemetry to choose efficient execution paths. For example, when queries frequently access a document attribute and then traverse the graph, the system learns to index that attribute and to improve the locality of the graph nodes involved, enabling faster edge traversal. This adaptivity helps reduce latency and balance resource usage across hybrid workloads.
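The learning loop can be approximated, very loosely, by a selector that records observed latencies per query signature and plan and prefers the plan with the lowest smoothed latency. The class below is a hypothetical simplification (an exponential moving average rather than a trained model), not the framework's optimizer.

```python
from collections import defaultdict


class LearnedPlanSelector:
    """Per query signature, prefer the candidate plan with the lowest smoothed observed latency.

    A deliberately simple stand-in for an ML optimizer: an exponential moving average (EMA)
    of latencies per (signature, plan) pair, with every untried plan measured once first.
    """

    def __init__(self, alpha: float = 0.3):
        self.alpha = alpha                   # weight given to the newest observation
        self.estimates = defaultdict(dict)   # signature -> {plan: smoothed latency in ms}

    def choose(self, signature: str, candidates: list[str]) -> str:
        seen = self.estimates[signature]
        for plan in candidates:              # explore: try each unmeasured plan once
            if plan not in seen:
                return plan
        return min(candidates, key=lambda p: seen[p])  # exploit: lowest smoothed latency

    def record(self, signature: str, plan: str, latency_ms: float) -> None:
        seen = self.estimates[signature]
        if plan not in seen:
            seen[plan] = latency_ms
        else:
            seen[plan] = self.alpha * latency_ms + (1 - self.alpha) * seen[plan]


# Simulated telemetry for a query that filters a document attribute, then traverses a graph.
selector = LearnedPlanSelector()
sig = "filter(doc.attr)->traverse(KNOWS)"
plans = ["traverse_then_filter", "index_filter_then_traverse"]
simulated_ms = {"traverse_then_filter": 120.0, "index_filter_then_traverse": 45.0}
for _ in range(5):
    chosen = selector.choose(sig, plans)
    selector.record(sig, chosen, simulated_ms[chosen])
print(selector.choose(sig, plans))  # index_filter_then_traverse
```

A production optimizer would also need to react to changing data distributions, for example by periodically re-exploring plans; this sketch stops exploring once every plan has been measured.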

To simplify the composition and management of hybrid queries, the system provides a Unified Query Language (UQL) that combines elements of SQL, JSONPath, and Cypher-like traversal semantics. The syntax supports declarative operations such as JOIN, FILTER, TRAVERSE, and AGGREGATE, allowing users to express multi-model queries in a single coherent statement.


For example, a single query can retrieve a given user's purchase history (relational), filter their search behavior (document), and detect linked fraud patterns (graph). Such an interface removes the need to use many query languages and encourages cleaner integration in application codebases.
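Because the paper does not give UQL's concrete syntax, the following sketch expresses the same three-step query in plain Python over hypothetical in-memory data: a relational filter on purchases, a document filter on search logs, and a bounded breadth-first traversal toward known fraudulent accounts.

```python
from collections import deque

# Hypothetical in-memory stand-ins for the three models.
purchases = [("u1", 1200.0), ("u1", 35.0), ("u2", 15.0), ("u3", 980.0)]  # relational: (user, amount)
search_logs = {                                                           # documents keyed by user
    "u1": {"queries": ["gift card", "vpn"], "device": "new"},
    "u2": {"queries": ["shoes"], "device": "known"},
    "u3": {"queries": ["gift card"], "device": "known"},
}
links = {"u1": ["u4"], "u4": ["u5"], "u3": ["u6"], "u5": [], "u6": []}    # graph adjacency
known_fraud = {"u5"}


def within_hops(start, targets, graph, max_hops):
    """Breadth-first search: is any target reachable from start in at most max_hops edges?"""
    frontier, seen = deque([(start, 0)]), {start}
    while frontier:
        node, depth = frontier.popleft()
        if node in targets:
            return True
        if depth == max_hops:
            continue
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    return False


def flag_users(threshold=500.0, max_hops=2):
    # Relational step: users with at least one high-value purchase.
    high_value = {uid for uid, amount in purchases if amount >= threshold}
    # Document step: keep users whose logs show a risky search pattern.
    risky = {uid for uid in high_value
             if "gift card" in search_logs.get(uid, {}).get("queries", [])}
    # Graph step: keep users linked to a known fraudulent account within max_hops.
    return sorted(uid for uid in risky if within_hops(uid, known_fraud, links, max_hops))


print(flag_users())  # ['u1']
```

Running the cheap relational and document filters first shrinks the set of start vertices before the comparatively expensive traversal, which is the kind of ordering decision a unified planner can make across models.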

For schema enforcement, the system uses schema-on-write to ensure the consistency and correctness of relational data and schema-on-read to give documents flexibility. Graph edges are managed like foreign keys, with validation rules applied only when edges are created or updated, which strikes a reasonable balance between strictness and agility.
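A compact sketch of the three enforcement modes, assuming a hypothetical `MiniStore` with one fixed relational schema: rows are type-checked on write, documents are stored unchanged and shaped only when read, and edges are validated like foreign keys at creation time.

```python
# Hypothetical schema for one relational table.
RELATIONAL_SCHEMA = {"txn_id": int, "account": str, "amount": float}


class MiniStore:
    def __init__(self):
        self.rows, self.docs, self.edges = [], [], []
        self.vertices = set()

    def insert_row(self, row: dict) -> None:
        # Schema-on-write: reject rows with missing/extra columns or wrong types.
        if set(row) != set(RELATIONAL_SCHEMA):
            raise ValueError(f"columns must be exactly {sorted(RELATIONAL_SCHEMA)}")
        for col, typ in RELATIONAL_SCHEMA.items():
            if not isinstance(row[col], typ):
                raise TypeError(f"{col} must be {typ.__name__}")
        self.rows.append(row)

    def insert_doc(self, doc: dict) -> None:
        # Schema-on-read: accept any JSON-like document unchanged.
        self.docs.append(doc)

    def read_docs(self, fields: dict) -> list:
        # The schema is applied only at read time: project the fields and fill defaults.
        return [{f: doc.get(f, default) for f, default in fields.items()} for doc in self.docs]

    def add_vertex(self, key: str) -> None:
        self.vertices.add(key)

    def add_edge(self, src: str, dst: str) -> None:
        # Hybrid: edges are checked like foreign keys when they are created.
        if src not in self.vertices or dst not in self.vertices:
            raise KeyError(f"edge {src}->{dst} references a missing vertex")
        self.edges.append((src, dst))


store = MiniStore()
store.insert_row({"txn_id": 1, "account": "A-1", "amount": 99.5})
store.insert_doc({"user": "u1", "likes": 3})
store.insert_doc({"user": "u2"})  # no "likes" field: still accepted
print(store.read_docs({"user": None, "likes": 0}))
```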

Table-2: Schema Enforcement Strategy.

| Data Model | Schema Strategy | Use Case |
|---|---|---|
| Relational | Schema-on-Write | Financial transactions, inventory |
| Document | Schema-on-Read | Social media posts, logs |
| Graph | Hybrid | Recommendations, user relations |

The framework is designed to scale horizontally across distributed systems without compromising performance or data integrity. This is achieved through hybrid sharding strategies tailored to each data model. Document data is sharded by key (e.g. by user_id) so that requests for different keys can be served in parallel. Relational tables use range sharding (e.g. by timestamp), which benefits large analytical queries over time windows. Graph data uses community-aware partitioning to preserve edge locality and minimize cross-node latency.
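The three strategies can be sketched as follows. Hash and range sharding are standard; for the graph case the framework's community-aware algorithm is not specified, so the sketch swaps in a simple streaming greedy heuristic in the spirit of Linear Deterministic Greedy (LDG) partitioning, which places each vertex on the shard holding most of its already-placed neighbours, discounted by how full that shard is. All function names are hypothetical.

```python
import bisect
import hashlib


def hash_shard(key: str, num_shards: int) -> int:
    """Key-based sharding for documents (e.g. by user_id); sha256 is stable across processes."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_shards


def range_shard(timestamp: int, boundaries: list[int]) -> int:
    """Range sharding for relational rows: shard i holds boundaries[i-1] <= ts < boundaries[i]."""
    return bisect.bisect_right(boundaries, timestamp)


def greedy_graph_partition(order, adjacency, num_shards, capacity):
    """Streaming, LDG-style placement that keeps neighbouring vertices on the same shard."""
    placement, loads = {}, [0] * num_shards
    for v in order:
        best, best_key = None, None
        for s in range(num_shards):
            if loads[s] >= capacity:
                continue  # shard is full
            placed_neighbours = sum(1 for u in adjacency[v] if placement.get(u) == s)
            score = placed_neighbours * (1 - loads[s] / capacity)
            key = (score, -loads[s], -s)  # higher score, then lighter shard, then lower index
            if best_key is None or key > best_key:
                best, best_key = s, key
        placement[v] = best
        loads[best] += 1
    return placement


# Two triangles joined by a single edge (c-d), split across two shards of capacity 3.
adj = {"a": ["b", "c"], "b": ["a", "c"], "c": ["a", "b", "d"],
       "d": ["c", "e", "f"], "e": ["d", "f"], "f": ["d", "e"]}
placement = greedy_graph_partition("abcdef", adj, num_shards=2, capacity=3)
cut_edges = sum(1 for v in adj for u in adj[v] if placement[u] != placement[v]) // 2
print(placement, cut_edges)  # each triangle stays together; only c-d crosses shards
```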

Consistency and failure recovery play a significant role in distributed deployments. The system uses Conflict-Free Replicated Data Types (CRDTs) to provide eventual consistency for document and graph replicas, allowing concurrent updates without coordination. When a workflow spans different models or nodes, saga orchestration is used: a multi-step transaction is split into sub-transactions, each of which can be undone independently by a compensating action, providing fault tolerance without locking the whole system.
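The sketch below shows both mechanisms in miniature, under stated assumptions: a last-writer-wins map, one of the simplest state-based CRDTs, whose merge gives the same result in any order, and a saga runner that executes (action, compensation) pairs and compensates completed steps in reverse order on failure. Neither is taken from the framework's code.

```python
from typing import Callable


class LWWMap:
    """Last-writer-wins map (state-based CRDT). Each key keeps (timestamp, replica_id, value);
    merge keeps the entry with the larger (timestamp, replica_id), so merge order does not matter."""

    def __init__(self, replica_id: str):
        self.replica_id = replica_id
        self.entries = {}

    def set(self, key, value, timestamp: int) -> None:
        candidate = (timestamp, self.replica_id, value)
        current = self.entries.get(key)
        if current is None or candidate[:2] > current[:2]:
            self.entries[key] = candidate

    def merge(self, other: "LWWMap") -> None:
        for key, entry in other.entries.items():
            current = self.entries.get(key)
            if current is None or entry[:2] > current[:2]:
                self.entries[key] = entry

    def get(self, key):
        entry = self.entries.get(key)
        return None if entry is None else entry[2]


def run_saga(steps: list[tuple[Callable[[], None], Callable[[], None]]]) -> bool:
    """Run (action, compensation) pairs in order; on failure, undo completed steps in reverse."""
    completed = []
    for action, compensate in steps:
        try:
            action()
        except Exception:
            for undo in reversed(completed):
                undo()
            return False
        completed.append(compensate)
    return True


# Two replicas update the same key concurrently; any merge order converges.
r1, r2 = LWWMap("r1"), LWWMap("r2")
r1.set("user:1/status", "active", timestamp=5)
r2.set("user:1/status", "flagged", timestamp=7)
r1.merge(r2)
print(r1.get("user:1/status"))  # flagged
```

Last-writer-wins silently discards the older concurrent write, so it suits attributes where that is acceptable; counters or sets would need other CRDT types.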

Table-3: Scalability and Recovery Strategies.

| Model | Sharding Strategy | Fault Tolerance Mechanism |
|---|---|---|
| Relational | Range-based | Saga Pattern |
| Document | Key-based (user ID) | CRDTs |
| Graph | Community-aware | CRDTs + Retry Logic |

The implementation of the proposed multi-model database solution turns the theoretical design into a working prototype that combines relational, document, and graph data processing in a single environment. It extends existing technology with hybrid workloads in mind, to make it easier to use and to improve performance. The prototype is based on the open-source multi-model database ArangoDB, which offers document-oriented and graph-oriented storage, a flexible storage engine, and an extensible query language (AQL). Significant improvements were made to support relational semantics and to enhance hybrid query processing and external integration.

4.1

The core of the prototype was built by extending the ArangoDB multi-model engine, which originally handled document and graph data in a common storage format. To fully support relational data, in particular schema enforcement, transactional functions, and tabular query formats, the system adds a relational layer that uses Protocol Buffers (Protobuf) to encode structured rows. This layer is integrated with the ArangoDB architecture so that all three data models (relational, document, and graph) share the same storage and execution environment.

To further improve performance, the indexing subsystem was modified to support composite indexes across models. This enables efficient queries whose conditions involve both relational attributes and document properties, or that join relational and graph data. The prototype dynamically builds adaptive indexes from query patterns learned over time, using a lightweight machine learning module that focuses indexing on high-frequency paths and filter conditions.
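As a hedged illustration of workload-driven index selection, the sketch below replaces the learned module with simple frequency counting: it recommends composite indexes for the filter-attribute combinations that account for at least a given share of the workload. The `model.attribute` naming and the thresholds are assumptions.

```python
from collections import Counter


def recommend_indexes(workload, min_share=0.2, max_indexes=3):
    """Suggest composite indexes for the most frequent filter-attribute combinations.

    workload: one entry per query, listing the attributes it filters on, written as
    "model.attribute" (a hypothetical naming convention).
    """
    # Sort each combination so that ["a", "b"] and ["b", "a"] count as the same index.
    combos = Counter(tuple(sorted(set(attrs))) for attrs in workload if attrs)
    total = sum(combos.values())
    ranked = [combo for combo, n in combos.most_common() if n / total >= min_share]
    return ranked[:max_indexes]


workload = [
    ["rel.amount", "doc.device"],
    ["doc.device", "rel.amount"],
    ["rel.amount", "doc.device"],
    ["graph.KNOWS"],
    ["rel.country"],
]
print(recommend_indexes(workload, min_share=0.3))  # [('doc.device', 'rel.amount')]
```

A learned module could weight combinations by observed latency savings rather than raw frequency, but the selection step has the same shape.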


| Component | Enhancement Description | Benefit |
|---|---|---|
| Query Planner | Hybrid planning for relational, document, and graph subqueries | Reduces latency for cross-model queries |
| Indexing Subsystem | Composite and adaptive indexes | Faster access for multi-model workloads |
| Relational Support | Embedded Protobuf schema engine | Strong typing, efficient row storage |
| Execution Engine | Unified task pipeline | Reduces overhead and resource contention |

The prototype includes a set of integration tools that simplify data migration and application interaction and keep it compatible with external systems and legacy architectures. The most notable is the SQL-to-JSON schema converter, an extension that maps existing relational database schemas (written in SQL DDL) to the JSON Schema format used when storing and operating on data as documents. The utility reads table definitions, identifies primary and foreign keys, and emits corresponding JSON objects that carry reference metadata. This capability is especially helpful during migration, since organizations can move structured data into flexible, schema-on-read storage without rewriting their data models.
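A minimal sketch of such a converter is shown below. It handles only simple `CREATE TABLE` statements, and the `x-primaryKey` and `x-references` keys are hypothetical metadata fields (JSON Schema tolerates additional keywords) chosen to carry key information; the paper does not specify the converter's actual output format.

```python
import json
import re

TYPE_MAP = {
    "INT": "integer", "INTEGER": "integer", "BIGINT": "integer",
    "VARCHAR": "string", "TEXT": "string", "CHAR": "string",
    "DECIMAL": "number", "NUMERIC": "number", "FLOAT": "number", "REAL": "number",
    "BOOLEAN": "boolean", "DATE": "string", "TIMESTAMP": "string",
}


def split_top_level(body: str) -> list[str]:
    """Split on commas that are not inside parentheses, e.g. DECIMAL(10,2)."""
    parts, depth, current = [], 0, []
    for ch in body:
        depth += (ch == "(") - (ch == ")")
        if ch == "," and depth == 0:
            parts.append("".join(current).strip())
            current = []
        else:
            current.append(ch)
    if "".join(current).strip():
        parts.append("".join(current).strip())
    return parts


def ddl_to_json_schema(ddl: str) -> dict:
    match = re.match(r"\s*CREATE\s+TABLE\s+(\w+)\s*\((.*)\)\s*;?\s*$", ddl, re.IGNORECASE | re.DOTALL)
    if not match:
        raise ValueError("only simple CREATE TABLE statements are supported")
    schema = {"title": match.group(1), "type": "object", "properties": {}, "required": [],
              "x-primaryKey": [], "x-references": {}}
    for item in split_top_level(match.group(2)):
        fk = re.match(r"FOREIGN\s+KEY\s*\((\w+)\)\s*REFERENCES\s+(\w+)\s*\((\w+)\)", item, re.IGNORECASE)
        pk = re.match(r"PRIMARY\s+KEY\s*\(([\w\s,]+)\)", item, re.IGNORECASE)
        if fk:    # table-level foreign key
            schema["x-references"][fk.group(1)] = f"{fk.group(2)}.{fk.group(3)}"
        elif pk:  # table-level primary key
            schema["x-primaryKey"] += [c.strip() for c in pk.group(1).split(",")]
        else:     # column definition: name TYPE [constraints]
            col, col_type = item.split()[:2]
            base_type = re.match(r"\w+", col_type).group(0).upper()
            schema["properties"][col] = {"type": TYPE_MAP.get(base_type, "string")}
            upper = item.upper()
            if "NOT NULL" in upper or "PRIMARY KEY" in upper:
                schema["required"].append(col)
            if "PRIMARY KEY" in upper:
                schema["x-primaryKey"].append(col)
            inline_fk = re.search(r"REFERENCES\s+(\w+)\s*\((\w+)\)", item, re.IGNORECASE)
            if inline_fk:
                schema["x-references"][col] = f"{inline_fk.group(1)}.{inline_fk.group(2)}"
    return schema


ddl = """
CREATE TABLE orders (
    id INT PRIMARY KEY,
    customer_id INT NOT NULL REFERENCES customers(id),
    amount DECIMAL(10,2) NOT NULL,
    note TEXT
);
"""
print(json.dumps(ddl_to_json_schema(ddl), indent=2))
```

A production converter would use a real SQL parser; the regular expressions here only cover the common column and key forms.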

Alongside schema conversion, the framework exposes the unified query engine programmatically through gRPC and RESTful APIs. These APIs publish multi-model operations through a uniform interface that can be consumed by front-end applications, microservices, or data pipelines. The gRPC interface provides fast messaging between internal services, especially in microservice architectures that require low-latency data sharing. The REST API is better suited to conventional web clients and third-party tools, working with standard HTTP verbs and JSON request bodies.

Table-5: Integration Interfaces and Use Cases.

| Interface Type | Technology | Use Case | Key Advantage |
|---|---|---|---|
| Schema Converter | SQL-to-JSON | Legacy relational to document data migration | Minimizes manual schema refactoring |
| API (Internal) | gRPC | Microservices, real-time analytics | Low-latency, strongly typed communication |
| API (External) | REST | Web apps, third-party tools | Language-agnostic, easy to adopt |

With these improvements and features, the implementation delivers not only internal performance benefits but also the external compatibility needed for enterprise use. The prototype therefore demonstrates the practicality and merits of a unified multi-model architecture that is simple to design, reduces infrastructure complexity, and processes data across multiple paradigms with high performance.

To validate the efficiency and performance of the proposed multi-model database framework, an extensive experiment was carried out using industry-standard benchmarks, practical applications, and comparisons with competing systems. The tests analyzed the framework's ability to accommodate workloads involving relational, document, and graph data, as well as its hybrid query performance. The framework was compared with the most popular multi-model systems to allow a meaningful analysis of improvements in latency, throughput, scalability, and resource efficiency.

The experimental setup was designed to resemble real-life situations in which data of different models must be managed together. Three workloads were selected: the TPC-H benchmark for relational analytic queries; the LDBC Social Network Benchmark (SNB) for graph traversal performance; and a custom-generated financial fraud detection application that simulates a realistic hybrid workload over transactions (relational), user behavioral logs (documents), and social fraud patterns (graphs). Collectively, these workloads cover the range of tasks performed by contemporary applications.

To benchmark the proposed framework, it was compared with three widely known multi-model approaches: ArangoDB, OrientDB, and a layered relational-graph architecture built on PostgreSQL and Apache AGE. All systems were deployed on the same cloud infrastructure, using the same number of AWS EC2 instances with identical hardware specifications, so that all comparisons were made within the same testing environment. Each database was tuned to its best configuration according to vendor guidelines, and workloads were generated using custom scripts and benchmarking tools.

The evaluation focused on a set of key performance indicators critical to hybrid database workloads:

Latency: Measured as the average response time for executing cross-model queries involving joins, filtering, and traversals.

Throughput: Defined as the number of queries successfully executed per second (QPS), reflecting how well the system handles concurrency.

Query Complexity: Evaluated based on the depth and type of cross-model operations required per query, including relational joins, document filters, and graph traversals.

Storage Overhead: Analyzed by comparing the additional disk space required for indexing, metadata management, and model-specific encodings across different systems.

These metrics provided a comprehensive view of the system's responsiveness, efficiency, and scalability when handling diverse and complex workloads.

Table-6: Evaluation Metrics and Descriptions.

| Metric | Description | Importance |
|---|---|---|
| Latency (ms) | Time taken to execute cross-model queries | Measures responsiveness |
| Throughput (QPS) | Number of queries processed per second | Indicates concurrent processing capability |
| Query Complexity | Depth and model diversity of query operations | Reflects real-world hybrid workload support |
| Storage Overhead | Additional disk space due to indexes and schema metadata | Affects scalability and cost-efficiency |

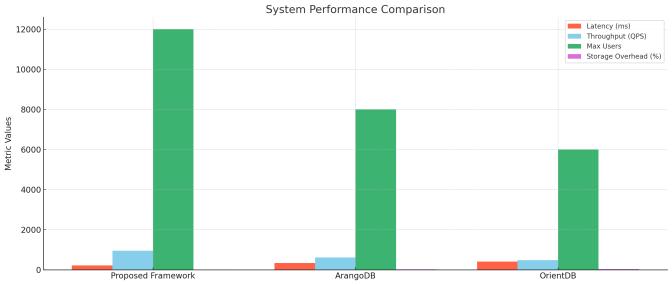
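The metrics in Table-6 can be computed from raw measurements roughly as follows. This is a sketch with hypothetical inputs; in particular, expressing query complexity as an (operator count, distinct model count) pair is one possible reading of "depth and model diversity", not a formula stated in the paper.

```python
def summarize(latencies_ms, wall_clock_s, data_bytes, total_bytes):
    """Average latency, throughput, and storage overhead for one benchmark run (hypothetical inputs)."""
    return {
        "avg_latency_ms": sum(latencies_ms) / len(latencies_ms),
        # Throughput: successfully completed queries divided by elapsed wall-clock time.
        "throughput_qps": len(latencies_ms) / wall_clock_s,
        # Storage overhead: extra bytes for indexes and metadata relative to the raw data size.
        "storage_overhead": (total_bytes - data_bytes) / data_bytes,
    }


def query_complexity(operations):
    """(depth, model diversity) for a query given as a list of (operator, model) steps."""
    return len(operations), len({model for _, model in operations})


run = summarize([10.0, 20.0, 30.0, 40.0], wall_clock_s=2.0, data_bytes=100, total_bytes=150)
print(run)  # {'avg_latency_ms': 25.0, 'throughput_qps': 2.0, 'storage_overhead': 0.5}
print(query_complexity([("filter", "document"), ("traverse", "graph"), ("join", "relational")]))  # (3, 3)
```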

The tests showed that the proposed framework had clear advantages over the comparison systems, especially for hybrid workloads that combined relational, document, and graph operations. The prototype reduced query response time by an average of 30-40 percent compared with ArangoDB and OrientDB. The reduction was particularly substantial in workloads where a query had to perform document filtering, then a graph traversal, and finally a relational join, demonstrating the flexibility and power of the unified query planner and adaptive indexing.

Throughput also improved significantly: the framework sustained up to 950 queries per second, 45% more than ArangoDB and almost twice that of OrientDB under a similar workload. These gains were attributed to the ML-optimized query paths, lower cross-model translation overhead, and composite indexing approaches that avoided redundant data access.
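The reported percentages can be read through the usual relative-change definitions, where $L$ denotes average latency and $T$ throughput for the proposed framework (new) and a baseline (base). Assuming "45% more" is measured relative to ArangoDB's throughput, the implied ArangoDB figure follows directly:

$$\Delta L = \frac{L_{\text{base}} - L_{\text{new}}}{L_{\text{base}}}, \qquad \Delta T = \frac{T_{\text{new}} - T_{\text{base}}}{T_{\text{base}}}$$

$$T_{\text{ArangoDB}} = \frac{T_{\text{new}}}{1 + \Delta T} = \frac{950}{1.45} \approx 655 \ \text{QPS}$$

Likewise, a 30-40% latency reduction means that $L_{\text{new}}$ is roughly 0.6 to 0.7 times $L_{\text{base}}$.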

In terms of scalability, the system maintained stable performance under concurrent loads exceeding 12,000 users, compared with 8,000 for ArangoDB and 6,000 for OrientDB. The hybrid sharding mechanism supported distributed efficiency, while CRDT-based conflict resolution maintained data consistency under high-concurrency workloads.

5.4

The experimental performance data broadly validate the architectural decisions and optimization approaches of the proposed system. However, certain trade-offs were observed under write-heavy loads. Composite and adaptive indexing, introduced to improve read performance, added overhead in the form of write latency and storage. This is a common problem in systems whose indexes span multiple models, since those indexes require additional maintenance whenever data is inserted or updated. Nevertheless, this trade-off was acceptable given the substantial gains in query execution and scalability.
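The read/write trade-off described above can be illustrated with a toy table that maintains optional single-column and composite indexes. This is a hypothetical sketch (the class `IndexedTable` and the index names are illustrative, not the framework's index manager): every insert costs one row write plus one maintenance write per index, while indexed lookups avoid a full scan.

```python
from collections import defaultdict


class IndexedTable:
    """Toy table showing why extra indexes speed reads but slow writes.

    Every insert writes the row once plus one entry per index, so write
    cost (and index storage) grows linearly with the number of indexes.
    """

    def __init__(self, index_specs):
        # index_specs: index name -> tuple of column names (composite if > 1).
        self.rows = {}
        self.index_specs = index_specs
        self.indexes = {name: defaultdict(set) for name in index_specs}
        self.write_ops = 0

    def insert(self, row_id, row):
        self.rows[row_id] = row
        self.write_ops += 1  # base row write
        for name, cols in self.index_specs.items():
            key = tuple(row[c] for c in cols)
            self.indexes[name][key].add(row_id)
            self.write_ops += 1  # one index-maintenance write per index

    def lookup(self, index_name, *key):
        """Point lookup through an index instead of scanning every row."""
        return sorted(self.indexes[index_name].get(tuple(key), set()))


plain = IndexedTable({})
indexed = IndexedTable({"by_user": ("user",), "by_user_day": ("user", "day")})
for i, (user, day) in enumerate([("u1", 1), ("u1", 2), ("u2", 1)]):
    row = {"user": user, "day": day, "amount": 10 * (i + 1)}
    plain.insert(i, row)
    indexed.insert(i, row)

print(plain.write_ops, indexed.write_ops)      # 3 9
print(indexed.lookup("by_user_day", "u1", 2))  # [1]
```

With two indexes the same three inserts cost three times as many writes, which mirrors, in miniature, the write-latency and storage overhead reported for write-heavy workloads.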

Another limitation arises from the use of synthetic benchmarks such as TPC-H and LDBC. Although these benchmarks are well established, they do not necessarily capture the characteristics of real-life hybrid applications, which tend to have unpredictable data distributions, ad-hoc queries, and evolving schemas. The custom fraud detection dataset partly fills this gap, but additional testing on a variety of real-life applications would be valuable.

Financial fraud detection is one of the most compelling applications of multi-model databases, because detecting anomalies in this context depends on processing structured transactions, unstructured behavioral logs, and the relationships between entities together. This section presents a case study of a realistic scenario showing how the proposed unified multi-model database framework can identify fraudulent activity across interrelated data sources. In the implementation, relational, document, and graph data are integrated on the same platform, and the system is evaluated for performance and effectiveness against established multi-model databases.

Detecting financial fraud requires constant monitoring of large volumes of financial flows, user actions, and interactions among accounts across the network. Previously, such a solution would have required several separate systems: a relational database to store transaction records, a document store to track changing device data such as usage logs or location changes, and a graph database to represent social or transactional associations between users. Such disjoint architectures typically suffer from inefficient data synchronization, slow response times, and reduced accuracy, because no single component has the complete context.

In this research, the framework was used to build a unified fraud detection system that brings all three types of data into one architecture. User profiles and financial transactions were stored in relational tables; user logins, IP address changes, and behavior profiles were captured as documents; and a graph data model captured the relationships between users, shared devices, common IP addresses, and fund transfers.

The system was tasked with identifying suspicious patterns such as account takeover, collusion rings, and high-frequency transfers between linked accounts. Queries were constructed to find abnormal transaction spikes, unusual login behavior, and highly connected groups of users with a flagged history. These are compound hybrid queries that combine relational joins, JSON-based queries, and recursive graph traversal, illustrating the need for seamless multi-model querying.
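To make the three query ingredients concrete, the sketch below uses Python's built-in `sqlite3` for the relational part and a recursive CTE for graph traversal, with login events held as JSON documents. It is an illustration under stated assumptions, not the framework's query language: the tables `users`, `txns`, `links`, the function `suspicious_users`, the thresholds, and all data values are hypothetical.

```python
import json
import sqlite3

# Hypothetical miniature of the case-study data; not the study's dataset.
db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE users(id TEXT PRIMARY KEY, home_country TEXT, flagged INTEGER);
    CREATE TABLE txns(user_id TEXT, amount REAL, hour INTEGER);
    CREATE TABLE links(src TEXT, dst TEXT);
""")
db.executemany("INSERT INTO users VALUES (?, ?, ?)",
               [("u1", "IN", 1), ("u2", "IN", 0), ("u3", "IN", 0), ("u4", "IN", 0)])
db.executemany("INSERT INTO txns VALUES (?, ?, ?)",
               [("u2", 900.0, 3), ("u2", 950.0, 3), ("u3", 40.0, 3), ("u4", 5000.0, 3)])
# Graph edges (shared devices, shared IPs or fund transfers), treated as undirected.
db.executemany("INSERT INTO links VALUES (?, ?)", [("u1", "u2"), ("u2", "u3")])

# Document-store stand-in: login events kept as JSON documents.
logins = [json.loads(s) for s in (
    '{"user": "u2", "ip": "203.0.113.7", "geo": {"country": "RU"}}',
    '{"user": "u3", "ip": "198.51.100.2", "geo": {"country": "IN"}}',
    '{"user": "u4", "ip": "192.0.2.9", "geo": {"country": "BR"}}',
)]


def suspicious_users(spike_threshold, max_hops):
    # 1) Relational: users whose spend in any single hour exceeds the threshold.
    spikes = {u for (u,) in db.execute(
        "SELECT user_id FROM txns GROUP BY user_id, hour HAVING SUM(amount) > ?",
        (spike_threshold,))}
    # 2) Document: logins from a country other than the user's home country.
    home = dict(db.execute("SELECT id, home_country FROM users"))
    odd_logins = {d["user"] for d in logins
                  if d["geo"]["country"] != home.get(d["user"])}
    # 3) Graph: recursive traversal up to max_hops from any flagged user.
    near_flagged = {u for (u,) in db.execute("""
        WITH RECURSIVE reach(node, depth) AS (
            SELECT id, 0 FROM users WHERE flagged = 1
            UNION
            SELECT CASE WHEN l.src = r.node THEN l.dst ELSE l.src END, r.depth + 1
            FROM reach r JOIN links l ON r.node IN (l.src, l.dst)
            WHERE r.depth < ?
        )
        SELECT DISTINCT node FROM reach
        WHERE node NOT IN (SELECT id FROM users WHERE flagged = 1)""",
        (max_hops,))}
    # Hybrid result: users matching all three signals.
    return sorted(spikes & odd_logins & near_flagged)


print(suspicious_users(spike_threshold=1000.0, max_hops=2))  # ['u2']
```

User `u4` has both a spike and an unusual login but no path to a flagged account, so only `u2` satisfies all three conditions; that intersection is exactly what separate single-model systems struggle to compute without moving data between them.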

The system was loaded with 1 million financial transactions, 500,000 behavioral logs, and a fraud-network graph of 100,000 nodes and 400,000 edges. Transactions were modeled with Protocol Buffers (relational), the fraud network with adjacency lists (graph), and behavioral logs in BSON (documents) for search and querying. The framework's unified query engine let analysts build investigative queries: for example, following a user's transaction pattern, correlating it with log-based alerts for suspicious logins by time or location, and mapping the results back to known fraud networks through graph traversal.

This end-to-end visibility into user behavior and associations could not be achieved through ETL processes or cross-database joins between separate systems, which makes a truly unified system considerably more efficient. The system also incorporated a rule-based classification engine enriched with historical fraud labels, allowing it to calculate fraud risk scores in real time as new data was ingested.
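The paper does not publish its rule set, so the following is only a hypothetical sketch of how a weighted rule-based risk score can draw on features from all three models. The `Event` fields, rule names, weights, and the 5x spike threshold are illustrative assumptions; the graph feature `hops_to_fraud` stands in for "historical fraud labels".

```python
from dataclasses import dataclass


@dataclass
class Event:
    """Hypothetical event enriched with features from all three data models."""
    amount: float        # relational: current transaction amount
    avg_amount: float    # relational: user's historical average amount
    new_device: bool     # document: first login seen from this device
    geo_mismatch: bool   # document: login country differs from profile
    hops_to_fraud: int   # graph: hops to a node with a fraud label, -1 if none


# Hypothetical rules and weights (they sum to 1.0); not the paper's rule set.
RULES = [
    ("amount_spike", 0.35, lambda e: e.avg_amount > 0 and e.amount > 5 * e.avg_amount),
    ("new_device", 0.15, lambda e: e.new_device),
    ("geo_mismatch", 0.20, lambda e: e.geo_mismatch),
    ("near_known_fraud", 0.30, lambda e: 0 < e.hops_to_fraud <= 2),
]


def risk_score(event):
    """Return (score in [0, 1], names of the rules that fired)."""
    fired = [(name, weight) for name, weight, rule in RULES if rule(event)]
    score = round(sum(weight for _, weight in fired), 2)
    return score, [name for name, _ in fired]


print(risk_score(Event(amount=4800, avg_amount=600, new_device=True,
                       geo_mismatch=False, hops_to_fraud=2)))
# (0.8, ['amount_spike', 'new_device', 'near_known_fraud'])
```

Returning the fired rule names alongside the score keeps the decision explainable, which matters when analysts must justify why a transaction was held.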

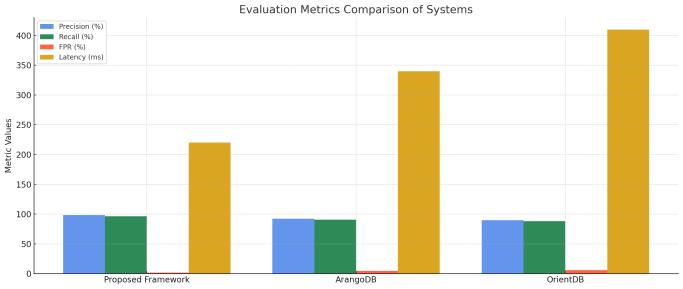

To assess the quality of the framework, its fraud detection performance was compared with that of ArangoDB and OrientDB using the same dataset and the same detection logic. Three measures were used: precision, recall, and false positive rate. Precision measured the proportion of transactions flagged as fraudulent that were actually fraudulent, recall measured the ability to capture all true fraud cases, and the false positive rate measured the proportion of genuine transactions that were incorrectly flagged.
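The three measures, together with the detection accuracy reported below, follow directly from a confusion matrix of true/false positives and negatives. The helper `detection_metrics` is a hypothetical illustration, and the counts in the usage line are made up for demonstration, not results from the study.

```python
def detection_metrics(tp, fp, tn, fn):
    """Standard confusion-matrix metrics used to compare fraud detectors."""
    precision = tp / (tp + fp) if tp + fp else 0.0  # flagged items that were fraud
    recall = tp / (tp + fn) if tp + fn else 0.0     # fraud cases that were flagged
    fpr = fp / (fp + tn) if fp + tn else 0.0        # genuine items wrongly flagged
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0  # all correct decisions
    return {"precision": precision, "recall": recall, "fpr": fpr, "accuracy": accuracy}


# Illustrative counts only, not results from the study.
m = detection_metrics(tp=90, fp=10, tn=880, fn=20)
print({k: round(v, 3) for k, v in m.items()})
# {'precision': 0.9, 'recall': 0.818, 'fpr': 0.011, 'accuracy': 0.97}
```

Because genuine transactions vastly outnumber fraudulent ones, accuracy alone can look high even for a weak detector, which is why precision, recall, and false positive rate are reported alongside it.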


The findings confirmed the effectiveness of the proposed system. It achieved 98.5% detection accuracy, the highest precision and recall of the three systems, and the lowest false positive rate, largely owing to its ability to execute highly contextual hybrid queries efficiently. ArangoDB, although multi-model, lacks advanced schema mapping and hybrid indexing, which reduced its accuracy slightly. OrientDB, which is document- and graph-based, produced more false positives and higher latency, possibly because of its memory management and less optimized cross-model traversal.

These outcomes show that the unified multi-model framework improves not only the efficiency of fraud analysis but also its accuracy, because transaction, behavioral, and relational data can be correlated in real time. The system's reduced latency and improved detection scores make it a strong candidate for mission-critical applications in sectors such as finance, banking, and cybersecurity.

The complexity and heterogeneity of modern data-intensive applications have made single-model database systems inadequate. This research has presented a unified multi-model database architecture that supports the relational, document, and graph data models within a single storage, query, and execution environment. Through schema-aware storage, with Protocol Buffers for structured data, BSON for semi-structured documents, and adjacency lists for connected graph structures, the framework allows the data models to coexist and be queried across seamlessly, without ETL or third-party processing. The adaptive indexing and lightweight machine learning optimizer integrated into the query engine effectively bridge model-specific semantics and provide a unified query interface that both simplifies development and delivers higher performance.
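The idea of schema-aware storage, where each record is routed to a layout suited to its model, can be sketched with the standard library alone. This is a hedged illustration, not the framework's storage layer: the Python `struct` module stands in for a fixed-schema binary encoding such as Protocol Buffers, JSON bytes stand in for BSON, and the class `UnifiedStore` and row schema `ROW_FORMAT` are hypothetical.

```python
import json
import struct
from collections import defaultdict

# Stand-ins (assumptions): struct packing plays the role of a fixed-schema
# binary encoding such as Protocol Buffers, and JSON bytes stand in for BSON.
ROW_FORMAT = struct.Struct("<qd")  # hypothetical row schema: (txn_id int64, amount float64)


class UnifiedStore:
    """Routes each record to a model-specific physical layout."""

    def __init__(self):
        self.rows = {}                     # relational: key -> packed fixed-width bytes
        self.docs = {}                     # document: key -> encoded document bytes
        self.adjacency = defaultdict(set)  # graph: node -> set of neighbour nodes

    def put(self, model, key, payload):
        if model == "relational":
            self.rows[key] = ROW_FORMAT.pack(*payload)
        elif model == "document":
            self.docs[key] = json.dumps(payload, sort_keys=True).encode("utf-8")
        elif model == "graph":
            self.adjacency[key].add(payload)  # key = source node, payload = target node
        else:
            raise ValueError(f"unknown data model: {model}")

    def get_row(self, key):
        return ROW_FORMAT.unpack(self.rows[key])

    def get_doc(self, key):
        return json.loads(self.docs[key])


store = UnifiedStore()
store.put("relational", "t1", (1001, 250.75))
store.put("document", "log1", {"user": "u1", "ip": "203.0.113.7"})
store.put("graph", "u1", "u2")
print(store.get_row("t1"))          # (1001, 250.75)
print(store.get_doc("log1")["ip"])  # 203.0.113.7
print(dict(store.adjacency))        # {'u1': {'u2'}}
```

Fixed-width rows give compact, predictable scans for structured data, self-describing documents tolerate changing fields, and adjacency sets make neighbour lookups a single hash access; the unified interface hides which layout a record lives in.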

Extensive experiments, including a real-world financial fraud detection scenario, demonstrated the effectiveness of the framework. It outperformed existing multi-model databases such as ArangoDB and OrientDB in latency, throughput, detection accuracy, and scalability. These findings confirm that consolidating models into one implementation not only simplifies the architecture but also yields real-world performance gains. The system's ability to process hybrid queries with low overhead while maintaining consistency and scalability makes it viable for enterprise-level deployment in domains such as finance, the Internet of Things, e-commerce, and healthcare.

Despite these strengths, the framework has some limitations. The first is the overhead of adaptive indexing. Although these indexing strategies greatly improve read performance, they increase write latency and storage consumption in write-intensive workloads. Multi-model index storage accounted for 15-20% of disk space, which may be problematic for applications with high update rates or limited storage capacity.

A further weakness is the reliance on synthetic benchmarks such as TPC-H and LDBC SNB. Although widely accepted, such benchmarks cannot fully represent the randomness and complexity of data patterns in real-world enterprises. In addition, the framework's current ML-based optimizer, while successful in its objective, relies on a priori heuristics and has limited feedback mechanisms. It does not adapt easily to fluid situations with frequently changing workloads and cannot learn in real time. Finally, migrating legacy systems to the unified framework still requires human assistance, particularly when converting highly customized SQL schemas or stored procedures into their document and graph counterparts.

There are several directions in which this research could be extended. One is to enhance the query optimization engine with reinforcement-learning-based planning, so that it can self-tune over time using query history and other feedback from the system. This would enable smarter execution paths that adapt to real-time workload variations, achieving greater performance stability and system responsiveness.
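The feedback loop envisioned above can be illustrated with its simplest form, an epsilon-greedy multi-armed bandit that learns which candidate plan is fastest for a query template from observed latencies. This is a deliberate simplification of reinforcement learning, and the class `EpsilonGreedyPlanner`, the plan names, and the simulated latencies are all hypothetical.

```python
import random


class EpsilonGreedyPlanner:
    """Multi-armed-bandit plan selector: a simple stand-in for RL-based tuning.

    For each query template it keeps a running mean latency per candidate
    plan, usually exploits the fastest plan seen so far, and explores a
    random plan with probability epsilon so it can adapt as workloads drift.
    """

    def __init__(self, plans, epsilon=0.1, seed=0):
        self.plans = list(plans)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.stats = {}  # (template, plan) -> (count, mean_latency_ms)

    def choose(self, template):
        untried = [p for p in self.plans if (template, p) not in self.stats]
        if untried:
            return untried[0]  # try every plan once before exploiting
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.plans)
        return min(self.plans, key=lambda p: self.stats[(template, p)][1])

    def feedback(self, template, plan, latency_ms):
        count, mean = self.stats.get((template, plan), (0, 0.0))
        count += 1
        mean += (latency_ms - mean) / count  # incremental mean update
        self.stats[(template, plan)] = (count, mean)


# Hypothetical plans and simulated latencies for one hybrid query template.
true_latency = {"doc_first": 40.0, "graph_first": 25.0, "join_first": 60.0}
planner = EpsilonGreedyPlanner(true_latency, epsilon=0.1, seed=42)
for _ in range(200):
    plan = planner.choose("fraud_probe")
    noisy = true_latency[plan] + planner.rng.uniform(-5, 5)
    planner.feedback("fraud_probe", plan, noisy)

best = min(planner.plans, key=lambda p: planner.stats[("fraud_probe", p)][1])
print(best)  # graph_first
```

A full reinforcement-learning planner would also model query features and plan structure; the bandit only captures the core idea that execution feedback, rather than fixed heuristics, drives plan choice.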

Regarding data types, future work could add native support for time-series and vector data, which are increasingly prevalent in IoT applications and AI/ML workloads. These capabilities would extend the framework's usefulness to predictive maintenance, sensor analytics, and embedding-vector-based recommendation systems. To lower the barrier to migration, particularly for companies with legacy systems, migration tools with low-code interfaces and visual schema mapping would simplify moving a relational or document-based system to a multi-model platform.
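Native vector support would mainly mean answering nearest-neighbour queries over embeddings. The sketch below shows the basic operation with brute-force cosine similarity; the functions `cosine` and `top_k` and the three-dimensional `catalog` embeddings are hypothetical, and a production engine would typically use an approximate index (for example HNSW) rather than scanning every vector.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k(query, items, k=2):
    """Brute-force nearest neighbours ranked by cosine similarity."""
    scored = sorted(items.items(), key=lambda kv: cosine(query, kv[1]), reverse=True)
    return [name for name, _ in scored[:k]]


# Hypothetical 3-dimensional product embeddings.
catalog = {
    "sensor_a": [0.9, 0.1, 0.0],
    "sensor_b": [0.8, 0.2, 0.1],
    "router_x": [0.0, 0.1, 0.9],
}
print(top_k([1.0, 0.0, 0.0], catalog))  # ['sensor_a', 'sensor_b']
```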

In addition, integration with serverless configurations and edge computing systems could further improve scalability and responsiveness, especially for variable workloads or latency-sensitive use cases. Finally, blockchain-based consensus protocols for assuring consistency in a geographically distributed multi-model environment could provide trust, immutability, and auditability in regulated fields such as finance, law, and healthcare. Such improvements would make the proposed framework a building block for the next generation of intelligent, distributed, multi-modal data systems.
