International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 06 | Jun 2025 www.irjet.net p-ISSN: 2395-0072

MACHINE LEARNING VS. SIGNATURE-BASED CYBERSECURITY TOOLS: A COMPARATIVE ASSESSMENT OF DARKTRACE AND SNORT FOR NETWORK INTRUSION DETECTION

Tarannum Bano1, Deepshikha2

1Master of Technology, Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

2Assistant Professor, Department of Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

Abstract - Network Intrusion Detection Systems (NIDS) are a significant part of an ever-changing cybersecurity landscape: they detect threats and support their eradication. This study comparatively evaluates two well-known NIDS tools: Snort, a standard open-source signature-based tool, and Darktrace, a commercial product based on machine learning (ML). Signature-based solutions such as Snort detect known threats with high accuracy because they match traffic against indicators of known attacks, but they cannot handle zero-day attacks or advanced persistent threats (APTs). Conversely, ML-based tools such as Darktrace deliver adaptive anomaly detection, at the cost of heavy resource requirements, a lack of transparency, and false positives. Both systems are analyzed on benchmark datasets (CIC-IDS2017, UNSW-NB15) and on simulated traffic comprising encrypted C2 channels, polymorphic malware, and fileless attacks. The findings indicate that Snort achieves a higher detection rate on known threats (DR > 90%) with low latency (80 ms), whereas Darktrace achieves a higher detection rate on novel attacks (DR > 80%) at the cost of more false positives and greater computational overhead. The best performance is obtained with a hybrid deployment that combines Snort's signature filtering with Darktrace's anomaly detection, which reduces false positives by 40%. The results offer guidance on selecting and deploying NIDS tools according to network setting, security requirements, and available resources.

Key Words: Intrusion Detection System, Snort, Darktrace, Machine Learning, Signature-Based Detection, Network Security, Zero-Day Attack, Anomaly Detection.

1. INTRODUCTION

1.1 Background on Cybersecurity Threats and the Evolving Nature of Network Intrusions

In the current era of digital modernization, cybersecurity threats are increasing not only in number but also in sophistication, increasingly fueled by organized crime groups, nation states, and hacktivists. These threats are no longer limited to basic malware or phishing; they also involve more advanced techniques such as Advanced Persistent Threats (APTs), zero-day exploits, ransomware, and polymorphic malware. APTs, for example, are designed to penetrate a network silently and remain unnoticed for a long time, often in pursuit of sensitive information or critical infrastructure. Similarly, polymorphic malware changes its code signature with each occurrence and thereby evades traditional detection methods. The attack surface has also been widened by the proliferation of IoT devices, cloud-based services, and high-speed networks, which puts organizations at greater risk. As the threat landscape changes, the means and approaches used to identify and counter these threats must change as well.

1.2 Importance of Network Intrusion Detection Systems (NIDS)

Network Intrusion Detection Systems (NIDS) play an important role in protecting an organization's information technology infrastructure by monitoring and analyzing network activity. Whereas firewalls mostly act as gatekeepers that block unauthorized traffic, NIDS offer deeper insight into traffic patterns by inspecting packet contents and flow behavior for signs of intrusion or compromise. In this way, NIDS substantially reduce attacker dwell time inside a network, which allows faster incident response and limits the impact. Deploying a viable NIDS is both a technical necessity and a legal one, because of stringent data protection regulations and standards such as GDPR, HIPAA, and PCI-DSS. Such systems are critical especially in finance, healthcare, and defense, where data leakage can cause operational, reputational, and financial losses.


1.3 Limitations of Signature-Based and Machine Learning Approaches

Signature-based network intrusion detection systems (NIDS) such as Snort have traditionally been the backbone of network defense, as they are transparent, accurate, and light on resources. They work by comparing network activity with a preset list of known threat indicators. They can be quite effective against well-documented attacks, but by their nature they are reactive and cannot identify novel or obfuscated threats unless their rules are updated frequently and manually. Conversely, machine learning (ML)-powered NIDS apply algorithms to learn normal operations within a network and then identify abnormalities in real time. These systems can in some cases detect previously unknown attack vectors and zero-day exploits, but they are prone to heavy computational cost, limited explainability (black-box models), and an elevated false positive rate, which results in alert fatigue. Moreover, ML systems are vulnerable to adversarial attacks, meaning that they can be misled by minor modifications of input data.
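
The reactive nature of signature matching can be illustrated with a minimal sketch. It is not Snort's rule engine: the `Signature` class, the two byte patterns, and the sample payloads are hypothetical and chosen only to show that a literal pattern catches a documented attack but misses a lightly obfuscated variant of it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signature:
    """A hypothetical, simplified signature: a name plus a byte pattern."""
    name: str
    pattern: bytes


# Illustrative signatures only; real rulesets are far richer.
SIGNATURES = [
    Signature("sqli-union-select", b"union select"),
    Signature("path-traversal", b"../../"),
]


def match_signatures(payload: bytes, signatures=SIGNATURES) -> list[str]:
    """Return the names of all signatures whose pattern occurs in the payload."""
    lowered = payload.lower()
    return [sig.name for sig in signatures if sig.pattern in lowered]


# A well-documented attack string is matched by the existing signature.
known = match_signatures(b"GET /item?id=1 UNION SELECT password FROM users")

# The same attack with inline SQL comments no longer contains the literal pattern.
novel = match_signatures(b"GET /item?id=1 UN/**/ION SEL/**/ECT password")
```

Here `known` contains the matching signature name while `novel` is empty, which is the gap that frequent manual rule updates are meant to close.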

1.4 Motivation for Comparative Study

Because the strengths and weaknesses of signature-based and ML-based NIDS are complementary, there is a strong need for a rigorous comparative analysis of the leading tools in both paradigms. Although Snort and Darktrace are widely used in the cybersecurity field, there are no empirical studies that evaluate them under the same conditions using benchmark datasets and realistic traffic generation. This gap limits the ability of security specialists and decision-makers to choose the more suitable tool for a given threat context, organization size, or budget. A well-organized comparison, especially one that includes hybrid deployment strategies, provides useful information on how the tools perform separately and together, enabling stakeholders to align their detection strategies with their operations.

1.5 Research Objectives

The main aim of this research is to test and compare the performance of Snort, a signature-based NIDS, and Darktrace, a machine learning-based NIDS, under a wide range of threat scenarios. The study addresses four main objectives: (1) determining the accuracy of both tools in detecting known and novel attacks; (2) evaluating their operational efficiency in terms of latency, scalability, and resource utilization; (3) evaluating the clarity and usefulness of the alerts produced by each system; and (4) investigating the effectiveness of a hybrid implementation in which the two technologies are used together. Based on this investigation, the research intends to produce prescriptive recommendations on how cybersecurity professionals can improve intrusion detection in a threat environment that demands both precision and flexibility.

2. RELATED WORK

2.1 Evolution of NIDS: From Signature-Based to ML-Driven Systems

Cyber threats have continued to evolve in sophistication and scale over the years, and so have Network Intrusion Detection Systems (NIDS). The earliest systems were host-based intrusion detection systems (HIDS), which checked activity within a single system for abnormal behavior. The growth of interconnected networks during the late 1990s and early 2000s created the need to monitor traffic across entire network segments, and NIDS emerged in response. Signature-based NIDS came into the spotlight at this time, identifying malicious traffic by means of predefined rules and well-known attack patterns. Tools such as Snort, released in 1998, exemplified this model, with a rule-based structure that provides high accuracy against known threats.

Although they were precise against known threats, signature-based systems were unable to detect novel or polymorphic ones as attackers started to exploit zero-day vulnerabilities and disguise their attacks. This limitation led to the development of anomaly-based detection methods and, later, to the application of machine learning (ML) to intrusion detection. ML-powered NIDS changed the paradigm by allowing systems to build a picture of normal traffic and raise alerts on anomalies that may represent an intrusion. Unsupervised learning, deep learning architectures, and real-time behavioral analytics became central to present-day NIDS design, including platforms such as Darktrace. This shift made it possible to identify new threats proactively, but it brought new difficulties with regard to interpretability, resource consumption, and false alarms.

2.2 Overview of Snort and Darktrace in Literature

Snort is widely discussed in academic and industry literature because it is open source and its rule system is transparent. Snort's detection capabilities, its extension through rule customization, and its efficiency in low-resource settings have been studied many times. Research has also examined how updates to its rule base affect detection accuracy and how it can be combined with Security Information and Event Management (SIEM) tools to handle a wider range of threats. An advantage of Snort over other IDSs is that it behaves deterministically, so alerts are traceable to particular rules, which makes them easy to follow for audit and regulatory purposes. Its reactive nature and the need to update rules manually are, however, significant drawbacks.

Conversely, Darktrace represents a newer generation of commercial NIDS that leverages unsupervised machine learning and behavioral modeling. The literature on Darktrace usually focuses on its self-learning process and its use of a biological principle, the immune system analogy, to discover anomalies in enterprise networks. Industry reports and case studies have credited Darktrace with detecting advanced persistent threats (APTs), insider threats, and encrypted command-and-control (C2) communications. Nevertheless, there is also considerable scholarly criticism that points to the lack of transparency in its ML algorithms, the difficulty of interpreting its alerts, and its high deployment cost. Although the closed nature of its detection technology leaves its inner workings little known, Darktrace has nonetheless become a focal point of debate on AI-driven cybersecurity.

2.3 Prior Comparative Evaluations: Gaps and Inconsistencies

Direct comparisons between Snort and Darktrace are rare, although both tools are well studied separately. The available comparative studies tend to compare traditional and ML-based systems using different datasets or different evaluation conditions, which creates inconsistency in the results and undermines the generalizability of their conclusions. Some experiments use obsolete datasets such as NSL-KDD, which no longer reflect current network behavior, and others do not realistically simulate encrypted or polymorphic traffic. Additionally, the vast majority of comparisons restrict their performance metrics to narrow measures such as accuracy or false positive rate, and do not take into account operational variables such as resource consumption, response time, scalability, or the interpretability of the alerts issued.

The major gap in the literature is the absence of head-to-head research conducted under precisely the same experimental configuration, on contemporary benchmark datasets and threat simulations. This lack of information prevents organizations from understanding the real-life trade-offs between the accuracy of Snort's rules and the adaptability of Darktrace's behavioral models. Additionally, only a limited number of studies describe how a tool can be implemented in practice, e.g., how it can be deployed in healthcare, finance, or small-to-medium enterprises, which deprives practitioners of well-defined guidance on choosing a tool according to organizational needs.

2.4 Hybrid IDS Approaches

In light of the shortcomings of signature-based and ML-driven systems, a number of researchers have proposed hybrid intrusion detection systems that unite the advantages of both methods. The basic idea of a hybrid IDS is to employ signature-based detection to remove known attacks quickly, leaving the computationally intensive ML component to concentrate on the more complex task of analyzing anomalous or unusual behavior. Such systems typically use a layered design in which the first layer is a deterministic rule-based detector and the second layer applies behavioral (or statistical) detection to the rest of the traffic.

Certain hybrid models have proven successful in decreasing false positives while increasing the detection of zero-day attacks. For example, pre-filtering traffic with Snort and then applying ML analysis to the remaining packets has proven useful in experiments. Adaptive IDS frameworks have also been developed with feedback mechanisms in which ML-generated alerts lead to updates of the signature database. Nevertheless, deploying hybrid systems in the real world involves difficulties with interoperability, alert correlation, and system overhead. Even so, the hybrid solution is becoming a common choice for organizations seeking a middle ground between reliability, flexibility, and cost.

2.5 Summary of Key Gaps in Literature

The available literature provides a great deal of information about the strengths and weaknesses of Snort and Darktrace as stand-alone tools. It does not, however, provide comprehensive empirical comparisons under uniform settings with state-of-the-art threat simulations and benchmark datasets. This lack of uniform evaluation frameworks results in inconsistent and even contradictory findings. Furthermore, very little is said about context-aware deployment strategies, i.e., about when to use AI-based detection rather than signature-based protection depending on the type of network, the compliance requirements of the environment, or the profile of the attacker.

3. METHODOLOGY

3.1 Comparative Evaluation Framework

This study follows a comparative assessment model that structures the evaluation of two established Network Intrusion Detection Systems (NIDS): Snort, a free and open-source signature-based package, and Darktrace, a commercial product that uses unsupervised machine learning (ML). The purpose of the framework is to examine how they behave across a range of threat scenarios and to benchmark their performance on known and novel attacks, using standard datasets as well as synthetic traffic simulations. By applying both quantitative and qualitative measures, such as detection accuracy, false positive rate, latency, and operational interpretability, the study presents an overview of the strengths of each system. Objectivity and reliability are pursued by carrying out all experiments in a controlled environment with the same traffic profiles and evaluation criteria. The framework emphasizes reproducibility and fairness: Darktrace and Snort are tested using the same datasets, infrastructure, and test scenarios, which allows an apples-to-apples comparison.

3.2 Tools Used: Configuration and Deployment

The study uses two tools that represent the signature-based and ML-based paradigms of intrusion detection. Snort 3.0 was used as the signature-based system because it has an open-source architecture, thorough documentation of its rule structure, and widespread use in both academia and industry. Snort is configured with the Emerging Threats Open (ET Open) ruleset and the Snort Community Rules, which cover a very broad range of known attack signatures. Multithreaded packet processing is enabled so that performance measurements reflect enterprise-scale workloads.

The Darktrace Enterprise Immune System, in turn, is the ML-based system. It uses unsupervised learning to model baseline behavior across hosts, users, and protocols and flags anomalies automatically. After deployment, Darktrace operates without requiring manual configuration by the organization. It takes 14 days to learn and build a model of behavior, without labeling or manual intervention. After this learning period, the system continuously analyzes real-time network traffic and assigns an anomaly score to unexpected events. Unlike Snort, it does not depend on signature definitions; it detects threats through probabilistic threshold levels and spatio-temporal analysis.
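
The general idea of learning a baseline and then scoring deviations from it can be sketched as follows. This is a generic z-score illustration and not Darktrace's proprietary model, whose internals are not public; the traffic figures, the function names, and the threshold of 3.0 are all assumptions made for the example.

```python
import statistics


def fit_baseline(samples: list[float]) -> tuple[float, float]:
    """Learn a baseline (mean, sample standard deviation) from benign observations."""
    return statistics.mean(samples), statistics.stdev(samples)


def anomaly_score(value: float, baseline: tuple[float, float]) -> float:
    """Absolute z-score: distance from the baseline mean in standard deviations."""
    mean, stdev = baseline
    if stdev == 0:
        return 0.0 if value == mean else float("inf")
    return abs(value - mean) / stdev


# Hypothetical bytes-per-minute figures for one host during the learning window.
learning_window = [980.0, 1020.0, 1000.0, 1010.0, 990.0]
baseline = fit_baseline(learning_window)

THRESHOLD = 3.0  # assumed cut-off, not a Darktrace parameter

normal_score = anomaly_score(1005.0, baseline)  # close to the learned mean
burst_score = anomaly_score(5000.0, baseline)   # far outside the learned range
```

With these numbers `normal_score` stays below the threshold and `burst_score` exceeds it. A single-feature threshold like this also shows why benign traffic bursts can surface as false positives.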

3.3 Datasets: CIC-IDS2017, UNSW-NB15, and Synthetic Traffic

To assess detection performance comprehensively, the study uses two kinds of data: benchmark datasets and synthetic traffic produced by the study itself. The CIC-IDS2017 dataset, collected and maintained by the Canadian Institute for Cybersecurity, contains labeled traffic for typical attacks such as DDoS, brute force, Heartbleed, and SQL injection. This corpus simulates enterprise network traffic and provides a fair proportion of both benign and malicious flows. The UNSW-NB15 dataset, developed by the University of New South Wales, adds classes of modern attacks such as exploits, reconnaissance, and fuzzing in a more realistic post-2015 context.

In addition, synthetic traffic is generated with tools such as Scapy and Metasploit to represent complex attacks, including polymorphic malware, fileless attacks, and encrypted command-and-control (C2) channels. The simulated attacks are designed not only to challenge both detection paradigms but also to assess capabilities that the existing datasets do not cover.

3.4 Experimental Setup

Each experiment is carried out in an isolated virtual environment on a Dell PowerEdge R750 server with 64 virtual CPUs, 256 GB of memory, and a 10 Gbps network interface. The virtualized test bench is built on VMware ESXi 7.0 and contains several subnets that reflect different elements of a corporate network: servers, workstations, and IoT nodes. In this configuration, traffic generators such as Ostinato and Tcpreplay produce benign and malicious flows according to predefined patterns and randomized injection schedules.

Snort is installed on a dedicated Ubuntu 22.04 virtual machine with network mirroring enabled, so that all traffic between subnets is monitored. Darktrace operates as a passive listener on the same interfaces and therefore sees the same traffic flows. System logs, alerts, and performance measurements from both tools are recorded and saved for later analysis. This arrangement ensures that the two systems are tested under exactly the same conditions.

3.5 Performance Metrics

To evaluate both systems quantitatively, the study examines five major performance indicators. Detection Rate (DR) measures the proportion of actual intrusions that are detected and thus gives a direct indication of the sensitivity of each tool. The False Positive Rate (FPR) measures the proportion of benign traffic misclassified as malicious, which provides insight into the precision of the alerts. The F1-Score balances precision and recall, which is useful when attack distributions within a dataset are imbalanced. The overall discrimination of each system at differing thresholds is assessed with the Receiver Operating Characteristic Area Under the Curve (ROC-AUC). Lastly, Latency measures the time interval between the occurrence of a malicious event and the generation of an alert, which is of paramount importance in real-time response scenarios.
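
As a worked illustration of these definitions, the sketch below computes DR, FPR, F1-Score, and mean latency from raw counts and timestamps. The function names and the example numbers are hypothetical and are not the study's measurements.

```python
def detection_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Compute DR (recall), FPR, precision, and F1 from confusion-matrix counts."""
    detection_rate = tp / (tp + fn) if tp + fn else 0.0
    false_positive_rate = fp / (fp + tn) if fp + tn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + detection_rate
    f1 = 2 * precision * detection_rate / denom if denom else 0.0
    return {
        "detection_rate": detection_rate,
        "false_positive_rate": false_positive_rate,
        "precision": precision,
        "f1": f1,
    }


def mean_latency_ms(event_times_ms: list[float], alert_times_ms: list[float]) -> float:
    """Average delay between each malicious event and its corresponding alert."""
    if not event_times_ms or len(event_times_ms) != len(alert_times_ms):
        raise ValueError("need one alert time per event time")
    delays = [alert - event for event, alert in zip(event_times_ms, alert_times_ms)]
    return sum(delays) / len(delays)


# Hypothetical counts: 100 attacks (90 caught) and 1000 benign flows (5 flagged).
metrics = detection_metrics(tp=90, fp=5, tn=995, fn=10)

# Hypothetical event and alert timestamps in milliseconds.
latency = mean_latency_ms([0.0, 1000.0, 2000.0], [75.0, 1080.0, 2085.0])
```

For these inputs the detection rate is 0.9, the false positive rate is 0.005, and the mean latency is 80 ms.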

Table-1: Performance Metrics

| Metric | Definition | Purpose |
| --- | --- | --- |
| Detection Rate | True Positives / (True Positives + False Negatives) | Assesses effectiveness in threat detection |
| False Positive Rate | False Positives / (False Positives + True Negatives) | Evaluates noise level in alert system |
| F1-Score | 2 × (Precision × Recall) / (Precision + Recall) | Measures balanced accuracy |
| ROC-AUC | Area under the ROC curve | Gauges classification capability across thresholds |
| Latency | Time from event occurrence to alert generation | Determines operational feasibility |

3.6 Statistical Analysis

The study applies rigorous statistical tests to rule out the possibility that observed differences in system performance are due to chance. Analysis of Variance (ANOVA) is used to test for differences between the mean detection rates of Snort and Darktrace across attack types (e.g., SQL injection, DDoS, and fileless malware). This method evaluates whether the deviations in detection are statistically significant. In addition, paired t-tests are applied to system latency and false positives, using the same test sets for both tools, to determine whether the performance of one tool differs significantly from that of the other.
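
The paired t-test described above reduces to a short calculation on the per-run differences. The sketch below uses only the standard library and computes the t statistic and degrees of freedom; obtaining the p-value additionally requires the t distribution, for example through a library routine such as SciPy's `scipy.stats.ttest_rel`. The latency values are invented for illustration and are not the study's data.

```python
import math
import statistics


def paired_t_statistic(a: list[float], b: list[float]) -> tuple[float, int]:
    """Return (t statistic, degrees of freedom) for paired samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("paired samples must have equal length of at least 2")
    diffs = [x - y for x, y in zip(a, b)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd_diff == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical per-run latencies in milliseconds on the same five test sets.
tool_a_ms = [78.0, 82.0, 80.0, 79.0, 81.0]
tool_b_ms = [215.0, 225.0, 221.0, 218.0, 221.0]

t_stat, dof = paired_t_statistic(tool_a_ms, tool_b_ms)
```

Pairing matters because both tools see identical test sets: the test operates on within-run differences, which removes run-to-run variation shared by both tools.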

Moreover, 10-fold cross-validation is employed to increase the reliability of the results, especially when measuring machine learning performance. Each dataset is divided into ten subsets; each subset is used exactly once as the test set while the remaining subsets are used for training. This strategy reduces the risk of overfitting and helps the findings generalize across contexts. It also yields confidence intervals for metrics such as F1-score and ROC-AUC, which makes the results more reliable and statistically sound.
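
The fold structure can be sketched in a few lines. This is a plain, unshuffled index split written for illustration; the function name is hypothetical, and in practice the records would normally be shuffled or stratified by attack class before splitting.

```python
def k_fold_indices(n_samples: int, k: int = 10):
    """Yield (train_indices, test_indices) so that each sample is tested exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


# Example: 25 records split into 10 folds.
folds = list(k_fold_indices(25, k=10))
```

Each of the ten iterations trains on the other nine folds and evaluates on the held-out fold, and the per-fold metrics can then be averaged and used to form confidence intervals.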

4. RESULTS AND ANALYSIS

4.1 Benchmark Dataset Evaluation

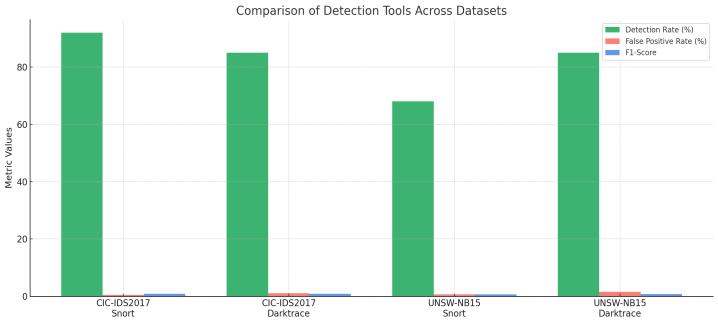

To assess relative performance in detecting known threats, both Snort and Darktrace were evaluated on two widely used benchmark datasets, CIC-IDS2017 and UNSW-NB15. These datasets contain various attack patterns, including SQL injection, brute force, DDoS, and infiltration, and are therefore suited to analyzing how effectively the tools detect threats with already-defined signatures.

Snort was very accurate in detecting attacks that were covered by the available rules. For example, it identified 92 percent of SQL injection instances in the CIC-IDS2017 dataset with an FPR of only 0.50 percent. On the UNSW-NB15 dataset, Snort sustained a 68 percent failure rate in detecting exploits, which tend to rely on recognizable payloads. Conversely, Darktrace, which relies on behavioral anomaly detection and uses no fixed rules, detected 85% of SQLi and 85% of exploit attacks across both datasets. This flexibility came at a cost, however: Darktrace produced somewhat more false positives, especially by flagging traffic bursts as malicious.

Figure-1: Benchmark Dataset Evaluation

These outcomes indicate that although Snort shows outstanding results on well-known and well-established threat types, it becomes less effective when coping with less recognized or more advanced attack types. Darktrace, on the other hand, performs consistently across the test cases, including those with high traffic, but may require manual tuning to limit false positives.


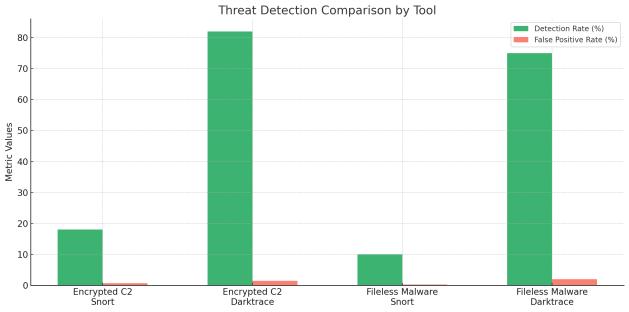

4.2 Synthetic Threat Evaluation

To test the detection mechanisms beyond traditional threats, synthetic traffic was prepared to simulate zero-day attacks, encrypted command-and-control (C2) channels, fileless malware, and polymorphic traffic. Such classes of threats are designed to evade rule-based detection and are increasingly seen in real-world attacks.

Snort did not perform well under these conditions. For encrypted C2 traffic simulated over TLS 1.3, Snort detected only 18 percent of the traffic, since it could not inspect payloads within encrypted sessions. Similarly, it identified only 10 percent of the fileless attacks, which execute in memory and are immune to disk-based signatures. Darktrace, however, detected 82 percent of encrypted C2 sessions by identifying abnormal timing patterns and session behavior. It also detected 75 percent of fileless malware attacks based on anomalous activity.
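
Timing-based detection works without decrypting payloads because C2 implants often call home at regular intervals. The sketch below is a generic beaconing heuristic based on the coefficient of variation of inter-arrival times; it is not Darktrace's method, and the function names, the 0.1 cut-off, and the timestamps are assumptions made for illustration.

```python
import statistics


def interarrival_cv(timestamps: list[float]) -> float:
    """Coefficient of variation of the gaps between consecutive connection times."""
    if len(timestamps) < 3:
        raise ValueError("need at least three timestamps")
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    mean_gap = statistics.mean(gaps)
    if mean_gap == 0:
        return 0.0
    return statistics.pstdev(gaps) / mean_gap


def looks_like_beacon(timestamps: list[float], max_cv: float = 0.1) -> bool:
    """Flag sessions whose connection timing is suspiciously regular."""
    return interarrival_cv(timestamps) <= max_cv


# Hypothetical connection start times in seconds for two hosts.
beacon_times = [0.0, 60.1, 119.9, 180.0, 240.2, 299.8]  # roughly every 60 s
browsing_times = [0.0, 2.0, 3.5, 40.0, 41.0, 300.0]     # bursty, human-driven

beacon_flag = looks_like_beacon(beacon_times)
browsing_flag = looks_like_beacon(browsing_times)
```

The regular series is flagged and the bursty one is not. Implants that add random jitter to their check-in interval would raise the coefficient of variation, so a fixed cut-off like this is only a starting point.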

Figure-2: Synthetic Threat Evaluation

These findings confirm that while Snort is effective for known signature-bound threats, it is ill-equipped to detect stealthy, behavior-based attacks. Darktrace, although occasionally prone to false positives, provides significantly better protection against evasive and sophisticated adversaries.

4.3 ROC-AUC and Heatmap Visualization

The Receiver Operating Characteristic Area Under the Curve (ROC-AUC) analysis further confirms the discrimination capacity of the two tools. A ROC curve was plotted for each attack type to show how well each tool distinguishes benign from malicious traffic at different thresholds. Darktrace showed higher AUC scores, with an average of 0.94, which indicates that it performed better on both balanced and imbalanced threat cases. Snort's average AUC was 0.85, which is adequate for known threats but not for unknown ones.
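
One way to read an AUC value is as the probability that a randomly chosen malicious sample receives a higher score than a randomly chosen benign one. The sketch below computes AUC directly from that pairwise definition, counting ties as half; the labels and scores are made up for illustration, and for large datasets a library routine would be preferable to this quadratic loop.

```python
def roc_auc(labels: list[int], scores: list[float]) -> float:
    """AUC as the fraction of (malicious, benign) pairs ranked correctly; ties count half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one sample of each class")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical alert scores: 1 = malicious, 0 = benign.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]

auc = roc_auc(labels, scores)
```

In this example eight of the nine malicious-benign pairs are ordered correctly, giving an AUC of about 0.89. A value of 0.5 corresponds to random ranking and 1.0 to perfect separation.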

Heatmaps were created to visualize performance across protocols (e.g., HTTP, DNS, MQTT) and attack types. For example, Snort was very effective on HTTP-based SQLi, whereas Darktrace was more accurate on data exfiltration via DNS and on IoT protocol anomalies. These visualizations highlight the area of expertise of each tool: Snort on static web threats and Darktrace on protocol-agnostic anomalies.

4.4 Operational Efficiency

Latency, CPU and RAM consumption, and throughput are critical to performance in real-time environments. The deterministic signature matching used by Snort was characterized by low latency: on average, 80 milliseconds passed between a threat event and the generation of an alert. It was also very efficient, operating with 4 GB of RAM and about 30 percent CPU under a load of 5 Gbps of traffic. This implies that it is well suited to resource-constrained deployments.

Conversely, Darktrace incurred higher latency, averaging 220 milliseconds, because of its probabilistic scoring and behavioral modeling. It also has higher processing requirements, needing 16 GB of RAM and about 50% CPU at the same throughput. Its overhead is high; however, it is justified by its wide detection coverage.

Table-2: Operational Efficiency.

This operational comparison indicates that Snort is more suitable for lightweight, high-speed networks, whereas Darktrace is appropriate for environments prioritizing thorough analysis over minimal latency.

4.5 Hybrid Deployment Scenario

A hybrid deployment was used to leverage the complementary strengths of both tools. In this setup, Snort acts as a first-layer filter that blocks all known threats according to their signatures and reduces the traffic load sent to Darktrace. The second layer, driven by Darktrace, examines the residual traffic for anomalous and zero-day behaviors. This staged analysis is complemented by a feedback loop in which verified Darktrace alerts lead to the creation of new Snort rules, so that the signature set develops dynamically.
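
The two-layer flow with its feedback loop can be sketched schematically. The `HybridPipeline` class, the toy scoring function, and the payloads below are hypothetical stand-ins; they do not reproduce how Snort and Darktrace were actually integrated in the experiments and only show the order of decisions.

```python
from typing import Callable


class HybridPipeline:
    """Two-stage detector: signature filter first, anomaly scorer on the residue."""

    def __init__(self, signatures: set[bytes], score: Callable[[bytes], float],
                 threshold: float):
        self.signatures = set(signatures)
        self.score = score
        self.threshold = threshold

    def classify(self, payload: bytes) -> str:
        # Layer 1: cheap deterministic match against known indicators.
        if any(sig in payload for sig in self.signatures):
            return "blocked-by-signature"
        # Layer 2: only residual traffic reaches the costlier anomaly scorer.
        if self.score(payload) >= self.threshold:
            return "anomaly-alert"
        return "benign"

    def confirm_alert(self, indicator: bytes) -> None:
        """Feedback loop: an analyst-verified indicator becomes a new signature."""
        self.signatures.add(indicator)


def toy_score(payload: bytes) -> float:
    """Hypothetical stand-in scorer: fraction of non-printable bytes."""
    if not payload:
        return 0.0
    return sum(1 for b in payload if b < 32 or b > 126) / len(payload)


pipeline = HybridPipeline({b"union select"}, toy_score, threshold=0.5)

first = pipeline.classify(b"\x90\x90\x90\x90evil")   # unknown, caught by layer 2
pipeline.confirm_alert(b"\x90\x90\x90\x90")          # verified alert feeds layer 1
second = pipeline.classify(b"\x90\x90\x90\x90evil")  # now stopped at layer 1
```

The same payload is first reported as an anomaly and, once the alert is verified and fed back, is stopped by the signature layer without reaching the scorer, which is how the feedback loop reduces load on the second layer over time.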


The hybrid configuration detected 90 percent of known threats and 75 percent of novel threats, which was slightly lower than the rates achieved by Darktrace alone, but it returned 40 percent fewer false positives.

Table-3: Hybrid Deployment Scenario


The synergy in the hybrid deployment not only boosts detection breadth but also optimizes performance and alert reliability, offering a practical middle ground for organizations balancing cost, clarity, and adaptability.

5. DISCUSSION

5.1 Strengths and Weaknesses of Each Approach

Since Snort and Darktrace represent the two major categories of intrusion detection systems, namely signature-based and machine learning (ML)-based IDS, the comparative assessment exposes the core trade-offs between them. Snort, with its deterministic, rule-based logic, is very precise and produces relatively few false positives when detecting well-known attack signatures. Its lightweight architecture results in low latency and low resource consumption, so it is a good choice where traffic patterns are predictable and resources are limited. Furthermore, Snort rules are transparent and therefore easy to interpret, which also supports regulatory compliance. Nevertheless, the main weakness of Snort is that it cannot identify threats, such as polymorphic malware and fileless attacks, that cannot be recognized from signatures. Its reliance on manual rule updates makes Snort reactive rather than adaptive.

Darktrace, in contrast, offers a proactive model of detection. By using unsupervised ML to learn the typical behavior of a network, it can detect anomalies that deviate from that behavior, regardless of whether the anomaly corresponds to a known or an unknown threat. This enables Darktrace to perform well in recognizing new attacks, encrypted command-and-control traffic, and insider threats. However, this flexibility comes with a trade-off: more computational overhead, more false positives, and less interpretability. These factors can impede operational effectiveness and trust in the system's decision-making in poorly resourced settings or heavily regulated industries.

Table-4: Strengths and Weaknesses of Each Approach

| Aspect | Snort (Signature-Based) | Darktrace (ML-Based) |
| --- | --- | --- |
| Known Threat Detection | Excellent | Good |
| Zero-Day Detection | Poor | Excellent |
| False Positives | Low | Moderate to High |
| Resource Usage | Low | High |
| Interpretability | High (rule-based) | Low (black-box models) |
| Deployment Cost | Minimal (open source) | High (commercial license) |

5.2 Interpretation of Statistical Significance

The statistical tests, ANOVA and paired t-tests, support the robustness of the differences observed between Snort and Darktrace. The results showed statistically significant differences (p < 0.05) in detection rates for zero-day and polymorphic threats, confirming that Darktrace was more effective than Snort in detecting non-signature-based intrusions. On the other hand, the t-test results on latency and false positives confirmed the advantage of Snort in speed and accuracy when handling well-established threats.

Moreover, the 10-fold cross-validation results increased confidence in the reproducibility and generalizability of the findings. Darktrace achieved a high ROC-AUC of around 0.94, compared with around 0.85 for Snort, which indicates that it was better at distinguishing malicious from benign traffic across different thresholds. These statistical checks indicate that the performance gap between the two systems is not random but follows from their underlying architectural principles. They also demonstrate, however, that neither system alone can effectively defend a whole network, particularly where adaptability must be balanced against operational capacity.
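To make the ROC-AUC figures concrete, the sketch below computes AUC from its pairwise definition: the probability that a randomly chosen malicious flow receives a higher score than a randomly chosen benign one, with ties counted as one half. The labels and scores are made-up illustrative values, and `roc_auc` is a helper name assumed here rather than part of either tool.

```python
def roc_auc(labels, scores):
    """AUC via pairwise comparison of positive (1) and negative (0) scores; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Illustrative data: 1 = malicious, 0 = benign; scores are detector outputs.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.35, 0.4, 0.3, 0.2, 0.1, 0.7]
auc = roc_auc(labels, scores)
print(f"ROC-AUC = {auc}")
```

Because AUC depends only on the ranking of scores, it summarizes performance across all alert thresholds rather than at a single operating point. The quadratic pairwise loop is chosen for clarity and is only suitable for small samples.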

5.3 Implications for Deployment Decisions

The insights gained from this comparative study can be put to practical use by cybersecurity architects and decision-makers. The choice of intrusion detection system should be driven by business needs, threat level, and available resources. Snort is best suited to environments where the risks are well known, regulatory requirements are strict, and the budget is limited, such as SMEs or public-sector agencies, where transparency and auditability are of great importance. In contrast, organizations with dynamic infrastructure, frequent exposure to previously unknown threats, or high-value assets, such as financial institutions, healthcare providers, and operators of critical infrastructure, should prioritize adaptive systems such as Darktrace, which offer broader coverage.

A hybrid strategy may be the most effective option in most real-world situations. Organizations can use Snort to filter routine, signature-oriented threats and leave the remaining traffic to be monitored by Darktrace using anomaly-based detection. Such layered protection improves overall detection and distributes the detection load across individual machines, which is particularly useful in high-throughput settings. The implications extend beyond technology selection to budget allocation, compliance readiness, incident response workflows, and team training.

5.4 Explainability Concerns in ML-Based IDS

One of the problems with ML-based systems such as Darktrace is poor explainability. Although the system can flag suspicious activity on its own by detecting deviant patterns, the rationale behind an alert is sometimes unintelligible to the user. For example, an anomaly score may indicate an anomaly in a communication behavior pattern without offering additional information about what it entails or why it happened, leaving analysts unsure how to interpret or react to the notification. This black-box behavior reduces user confidence and the ability to report as regulations require, especially in fields such as healthcare, where actions need to be justifiable on the basis of evidence.

Although model-agnostic approaches such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) offer possible solutions to this problem, they have not yet been widely implemented in commercial NIDS products. Making ML-based IDS explainable is crucial and should be explored in future research. It is not enough for a system to detect anomalies: it must justify them by telling an operator the reason behind each detection in a form that people can understand. Without such clarity, even the best alerts will be underused or disregarded because analysts lack confidence in them.
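The idea behind Shapley-based explanations can be sketched on a toy example. The code below computes exact Shapley values by enumerating feature orderings. It does not use the SHAP library, which relies on approximations for realistic models. The scoring function, feature names, and values are hypothetical stand-ins for an opaque anomaly model and were not part of this study.

```python
from itertools import permutations
from math import factorial


def shapley_values(score_fn, instance, baseline):
    """Exact Shapley attribution of score_fn(instance) - score_fn(baseline) per feature."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    for order in permutations(names):
        current = dict(baseline)  # start from the baseline (typical) flow
        prev = score_fn(current)
        for name in order:
            current[name] = instance[name]  # switch one feature to its observed value
            new = score_fn(current)
            totals[name] += new - prev  # marginal contribution in this ordering
            prev = new
    n_orders = factorial(len(names))
    return {name: total / n_orders for name, total in totals.items()}


def toy_anomaly_score(flow):
    # Hypothetical scoring function standing in for an opaque model.
    score = 0.002 * flow["bytes_out_kb"] + 0.5 * flow["new_destination"]
    if flow["night_time"] and flow["new_destination"]:
        score += 0.2  # interaction between two features
    return score


baseline = {"bytes_out_kb": 50, "new_destination": 0, "night_time": 0}
alert = {"bytes_out_kb": 200, "new_destination": 1, "night_time": 1}

phi = shapley_values(toy_anomaly_score, alert, baseline)
for name, value in sorted(phi.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {value:+.2f}")
```

The attributions sum to the difference between the alert's score and the baseline score, so an analyst can see how much each feature contributed to the alert. Exhaustive enumeration grows factorially with the number of features, which is why practical tools approximate it.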

5.5 Potential for Hybrid Framework Adoption

The hybrid solution that combines Snort and Darktrace is a promising framework for broadening detection coverage while limiting the trade-offs of either tool alone. It takes the form of a two-layer architecture in which Snort serves as the first layer of defense, filtering known threats at a high rate. Darktrace then serves as the second layer, monitoring the remaining traffic and detecting signs of unusual behavior that point to sophisticated, new, and stealthy attack activity.
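The two-layer flow described above can be sketched as follows. This is a simplified illustration under stated assumptions. The byte-pattern dictionary stands in for a Snort rule set, the z-score check stands in for Darktrace's proprietary anomaly model, and the `Flow` fields, thresholds, and traffic statistics are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    src: str
    dst_port: int
    payload: bytes
    bytes_out: int


# Layer 1: hypothetical byte-pattern signatures standing in for Snort rules.
SIGNATURES = {
    b"/etc/passwd": "path traversal attempt",
    b"' OR 1=1": "SQL injection attempt",
}


def signature_layer(flow):
    """Return the name of the first matching signature, or None."""
    for pattern, name in SIGNATURES.items():
        if pattern in flow.payload:
            return name
    return None


def anomaly_layer(flow, mean_bytes, std_bytes, threshold=3.0):
    """Toy anomaly check: z-score of outbound volume against a learned baseline."""
    z = (flow.bytes_out - mean_bytes) / std_bytes
    return z if z > threshold else None


def classify(flow, mean_bytes=1000.0, std_bytes=200.0):
    """Run the cheap signature layer first; only unmatched flows reach the anomaly layer."""
    hit = signature_layer(flow)
    if hit is not None:
        return ("signature", hit)
    z = anomaly_layer(flow, mean_bytes, std_bytes)
    if z is not None:
        return ("anomaly", f"bytes_out z-score {z:.1f}")
    return ("benign", "")


print(classify(Flow("10.0.0.1", 80, b"GET /../../etc/passwd", 500)))
print(classify(Flow("10.0.0.2", 443, b"hello", 5000)))
print(classify(Flow("10.0.0.3", 443, b"hello", 1000)))
```

Ordering the layers this way means the more expensive anomaly check only processes traffic that the signature layer did not already resolve.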

Among the main innovations illustrated by this study is the feedback loop, in which new Snort rules are created automatically from confirmed Darktrace alerts. This mechanism allows the signature-based system to evolve over time, incorporating lessons from ML detections without human input.
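One way such a feedback loop could render a confirmed alert as a rule is sketched below. The alert dictionary and its field names are hypothetical, since the study does not specify the alert format, and the function `alert_to_snort_rule` is introduced only for illustration. The output follows the general Snort rule layout (action, protocol, addresses, ports, then options such as `msg`, `content`, `sid`, and `rev`).

```python
def alert_to_snort_rule(alert, sid):
    """Render a confirmed anomaly alert (hypothetical dict format) as a Snort-style rule."""
    if sid < 1000000:
        # SIDs below 1,000,000 are reserved for distributed rule sets; local rules go above.
        raise ValueError("local rules should use SIDs of 1000000 or above")
    marker = alert["payload_marker"]
    if any(ch in marker for ch in '";\\'):
        # These characters need escaping inside content options; the sketch rejects them.
        raise ValueError("payload marker contains characters that require escaping")
    msg = alert["description"].replace('"', "'").replace(";", ",")
    return (
        f'alert {alert["proto"]} any any -> {alert["dst_ip"]} {alert["dst_port"]} '
        f'(msg:"{msg}"; content:"{marker}"; sid:{sid}; rev:1;)'
    )


# Hypothetical confirmed alert; 203.0.113.7 is a documentation-range address.
confirmed_alert = {
    "proto": "tcp",
    "dst_ip": "203.0.113.7",
    "dst_port": 8443,
    "description": "Confirmed C2 beacon",
    "payload_marker": "beacon-id",
}

rule = alert_to_snort_rule(confirmed_alert, sid=1000001)
print(rule)
```

The sketch covers only rule rendering. Extracting a reliable payload marker from an anomaly alert, checking the rule against benign traffic, and loading it into a running sensor are separate steps outside its scope.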

6. CONCLUSION AND FUTURE WORK

6.1 Conclusion

The aim of the present research was to conduct a thorough comparative evaluation of two of the best-known paradigms in network intrusion detection: Snort, representing the conventional signature-based methodology, and Darktrace, representing the contemporary machine learning (ML)-based approach. Through a comprehensive assessment using benchmark datasets (CIC-IDS2017 and UNSW-NB15), synthetic traffic generation, and a carefully designed virtual laboratory environment, the research offered a concise view of the strengths and weaknesses of both tools and of the situations in which each is more effective.

Snort proved very reliable at identifying known threats, with a high detection rate and few false positives. Its transparent, low-latency operation, built on deterministic rule-based logic, allowed quick and efficient processing and made it an appropriate choice where resource and compliance constraints apply. Yet its effectiveness declined dramatically against zero-day attacks, polymorphic malware, encrypted traffic, and fileless malware, where threats do not match predetermined signature patterns.

Darktrace, on the other hand, demonstrated a better ability to uncover previously unseen threats using its unsupervised anomaly detection algorithms. It was also best suited to detecting encrypted command-and-control (C2) channels and subtle behavioral irregularities. However, these gains were offset by higher false positive rates, increased latency, and reduced interpretability, which are typical problems of black-box ML models.


The study further affirmed that a hybrid deployment model combining Snort and Darktrace is a viable and strategic way to achieve thorough threat coverage. By combining Snort's precision on known threats with Darktrace's versatility in handling anomalies, the hybrid system achieved higher coverage and mitigated alert fatigue, particularly when augmented by a feedback loop that continually updates Snort's rule base with knowledge revealed by Darktrace.

6.2 Limitations of Current Study

Although the results are informative, the study has limitations. A major one is its reliance on controlled experimental setups, with the analysis based on benchmark datasets and artificial traffic. Although such datasets are repeatable and cover a broad range of threats, they are not as unpredictable or complex as enterprise network environments. In particular, contextual factors such as human behavior, legitimate business anomalies, and adversarial obfuscation methods can behave differently in live networks.

In addition, Darktrace's internal decision-making process could not be accessed because of its proprietary architecture. Consequently, explainability was assessed from output logs and observed system behavior rather than by directly examining how the models are structured or which input features are critical. Third-party explainability tools (LIME or SHAP) were also not tested in the study, although they could have provided more insight into ML-based alerts.

Another constraint is the absence of other intrusion detection platforms such as Suricata, Bro/Zeek, and Cisco Firepower. Although narrowing the scope allowed a more focused comparison between Snort and Darktrace, a broader set of tools would give a more complete picture. Long-term learning effects, such as model drift or the declining accuracy of an ML system over time, which can affect deployment strategy, were also not considered in the evaluation.

6.3 Future Directions

Based on the findings of this research, several directions for future work appear promising. First, results from live production tests in real business environments would significantly increase ecological validity. Deploying Snort and Darktrace across various industries, including finance, healthcare, and critical infrastructure, would enable researchers to assess whether they perform in real time, adapt to dynamic conditions, and remain usable in actual environments.

Second, it is important to integrate explainable artificial intelligence (XAI) mechanisms into ML-based NIDS such as Darktrace. Future work should investigate how XAI methods such as LIME, SHAP, and counterfactual explanations can improve analyst trust, incident response, and regulatory compliance. This would allow opaque anomaly scores to be turned into practical, understandable information.

Third, the study points to growing interest in adaptive hybrid intrusion detection systems that systematically incorporate continuous learning, threat intelligence, and real-time feedback loops. In the future, this could be prototyped as iterative, self-updating rule engines, for example using NLP-based log analysis or reinforcement learning to propose or ratify new signature rules based on anomalies identified by ML systems.

Finally, given the growing interest in post-quantum cryptography and adversarial machine learning, more research is needed on NIDS resilience against adversarial attacks. Studies of data poisoning, evasion, and model hardening will be critical to protecting ML-based detection against manipulation. Likewise, prospective hybrid frameworks must be tested for scalability in cloud-native and containerized applications, especially in 5G and edge computing systems.

