International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN: 2395-0072

Vivek Kasala, Piyush Agarwal, Samyak Lokhande

Student, Dept. of Computer Science Engineering, Institute of Aeronautical Engineering, Telangana, India

Student, Dept. of Computer Science Engineering, Institute of Aeronautical Engineering, Telangana, India

Student, Dept. of Computer Science Engineering, Institute of Aeronautical Engineering, Telangana, India

Abstract - Cervical cancer remains a major global health challenge, particularly in low-income settings. Earlier detection and more accurate prediction of this cancer require better techniques. This paper introduces an approach to improving the diagnosis of cervical cancer from cytological images using convolutional neural networks (CNNs). A complete CNN model is trained on a variety of cervical cell images, together with preprocessing techniques that enhance image quality and feature extraction. The model was evaluated in terms of accuracy, sensitivity, and specificity, and on all three it performed better than traditional diagnostic techniques [3]. The model classifies normal and abnormal cervical cells efficiently. This supports timely intervention whenever a screening result is abnormal, which can help save patients' lives and reduce mortality. This paper presents evidence for the promise of deep learning (DL)-based technologies in transforming cervical cancer screening services. It also highlights the pressing need to integrate these techniques into clinical workflows to improve patient welfare.

Key Words: Cervical cancer, Convolutional Neural Networks, early detection, medical diagnostics.

Cervical cancer is a predominant cause of cancer mortality among women, especially in developing and middle-income countries. According to the WHO, cervical cancer accounts for about 7 percent of all cancers in women, resulting in approximately 300,000 deaths annually. Early diagnosis enables effective treatment and improves the chances of survival. Over time, screening methods such as Pap smears and HPV testing were developed [4]. These have proved effective, but they pose problems of accessibility and accuracy, and they require skilled professionals to interpret the results.

With the growing adoption of machine learning, early detection and prediction of cervical cancer have become a key research focus. Convolutional neural networks (CNNs) have shown considerable promise in medical image analysis for improving diagnostic accuracy. Several studies have demonstrated their usefulness for classification tasks, including the classification of cervical cell images. In one study, a CNN trained on Pap smear images distinguished between normal and abnormal cells with high accuracy. The key contribution of that work was the model's ability to extract relevant features automatically from complex images, which reduces reliance on manual assessment by pathologists. In another study, a deep CNN was used for multiclass classification of the different stages of cervical cancer from histopathological images. This approach not only increased classification precision but also provided additional insight into tumor properties, which is helpful in treatment planning. Data augmentation techniques such as rotation and scaling are widely used to improve model performance when training sets are small [3].

Transfer learning has also become popular in this field, as researchers have used pre-trained models such as VGG16 and InceptionV3 to build cervical cancer detection systems. Fine-tuning these models on specific datasets has yielded high sensitivity and specificity, mitigating the problem of small datasets while still delivering strong performance. In addition, some studies focus directly on the interpretability of CNN models for cervical cancer diagnosis. Using explainable AI techniques, their authors obtained visual explanations for their models' predictions. Such explanations are essential for building trust among healthcare professionals and for deepening their understanding of automated diagnoses.

CNN-based systems for cervical cancer prediction and detection represent a highly significant development in a critical area of healthcare, and several such systems already exist. One of the more important is Deep Cervical. It focuses on the automatic classification of cervical cell images from Pap smears, using a customized CNN architecture that extracts the features relevant to distinguishing normal from abnormal cells. Another tool, CCID, supports a variety of CNN models such as VGG16 and ResNet50, and with this tool these models achieve accuracy above 90 percent on classification tasks. Some systems apply transfer learning from pretrained models such as InceptionV3 to improve cervical cancer detection in histopathological images, especially in resource-scarce environments. Combining CNNs with image processing techniques yields automated systems for Pap smear analysis [2]. These systems reduce the burden on pathologists and the human error involved in classifying cells. Hybrid models that combine CNNs with traditional machine learning classifiers have also shown higher prediction accuracy by drawing on the strengths of both approaches. Collectively, these systems demonstrate what CNNs can do and point toward highly accurate, efficient, and accessible cervical screening that leads to better patient outcomes [2]. Further research and development are needed to advance these systems so that they are eventually adopted more broadly in clinical settings.

Deep learning is a subfield of machine learning that uses complex, multi-layered neural networks, often called deep neural networks. These networks underpin some of the most notable achievements of human-like intelligence in decision making, vision, and language. They typically comprise an input layer, many hidden layers, and an output layer. Their nodes (neurons) are interconnected in a pattern loosely inspired by the human brain. Traditional machine learning methods usually require manual feature engineering, whereas deep learning excels at automatically extracting task-relevant features from raw data. It is particularly successful with high-dimensional data such as images, audio, and text. With the advent of large datasets and greater computing power, deep learning is now applied in almost every domain. Examples include computer vision (image classification and object detection), natural language processing (translation and sentiment analysis), and critical fields such as healthcare (disease diagnosis and predictive analytics). During training, a labeled dataset is fed into the model, and backpropagation updates the weights of the connections between neurons according to the prediction errors. Deep learning thus marks a major leap in artificial intelligence, enabling machines to perform tasks that require human-like understanding and reasoning.

Convolutional neural networks are deep learning models designed mainly for grid-structured data, of which images are the best example. Because of their architecture, CNNs have made recognition, classification, object detection, and related visual applications far more feasible and efficient. CNNs have many layers, most importantly convolutional layers, which apply filters to the input image to extract patterns such as edges and textures. These are followed by pooling layers, which reduce the dimensionality of the feature maps while retaining the useful information, improving computational efficiency. Activation functions such as ReLU (Rectified Linear Unit) introduce non-linearity into the network, allowing it to learn complex patterns. Now, in the case of the fully
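The convolution, ReLU and pooling steps described above can be illustrated with a minimal pure-Python sketch on a tiny grayscale image stored as nested lists. It is illustrative only. The function names are hypothetical, and the vertical-edge kernel is hand-picked here, whereas a trained CNN (including the ResNet50 model used in this work) learns its kernels and operates on multi-channel tensors.

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation, the operation CNN layers call 'convolution'."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling (window size x size, stride = size)."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 6x6 image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hand-picked vertical-edge kernel (an assumption; a CNN would learn this).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = relu(conv2d(image, kernel))   # 4x4 feature map
pooled = max_pool(features)              # 2x2 after pooling
print(features)  # [[0, 3, 3, 0], [0, 3, 3, 0], [0, 3, 3, 0], [0, 3, 3, 0]]
print(pooled)    # [[3, 3], [3, 3]]
```

The feature map responds only where the edge lies, and pooling halves each spatial dimension while keeping the strongest response.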

1) Select Dataset: As a first step, a diverse dataset of cervical images should be selected. Ideally, it should contain images of both normal and abnormal cervical conditions, so that the model is trained to differentiate normal from abnormal cases.

Sources: publicly available medical image repositories, hospital records, or other specialized datasets such as the Herlev dataset or the ISBI challenge datasets.

2) Preprocess:

Data cleaning: Noisy, corrupted, or irrelevant images are removed from the dataset so that the input is clean.

Resizing of images: All images are resized to 224x224 pixels, the input size required for ResNet50.

Normalization of pixel values: Pixel values are normalized to a common scale, often between 0 and 1, which helps training converge.
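The resizing and normalization steps can be sketched in pure Python for a single-channel image. This is a sketch under assumptions. The paper does not name an interpolation method, so nearest-neighbour resizing is assumed here (image libraries usually also offer bilinear). Pixels are assumed to be 8-bit values in 0 to 255. Pretrained ResNet50 weights may expect a different preprocessing scheme, so the framework's own preprocessing function should be checked.

```python
def resize_nearest(image, out_h=224, out_w=224):
    """Nearest-neighbour resize of a 2D grayscale image stored as nested lists."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[(i * in_h) // out_h][(j * in_w) // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]


def normalize(image, max_value=255):
    """Scale 8-bit pixel values from [0, max_value] to [0, 1]."""
    return [[p / max_value for p in row] for row in image]


tiny = [[0, 128],
        [64, 255]]
prepared = normalize(resize_nearest(tiny))     # 224x224, values in [0, 1]
print(len(prepared), len(prepared[0]))         # 224 224
print(prepared[0][0], prepared[223][223])      # 0.0 1.0
```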

Data Augmentation: The dataset should be artificially enlarged using techniques such as rotation, flipping, scaling, and cropping. This simulates natural variations and makes the model more robust and better able to generalize.
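A few of these augmentations can be sketched without any imaging library. This minimal example covers only flips and 90-degree rotations, which need no interpolation. Arbitrary-angle rotation, scaling and cropping, which the text also mentions, would need an image library. The helper names are hypothetical.

```python
def flip_horizontal(image):
    """Mirror the image left-to-right."""
    return [list(reversed(row)) for row in image]


def flip_vertical(image):
    """Mirror the image top-to-bottom."""
    return [list(row) for row in reversed(image)]


def rotate_90_clockwise(image):
    """Rotate by 90 degrees clockwise (works for non-square images too)."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image plus flipped and rotated (90/180/270) variants."""
    variants = [image, flip_horizontal(image), flip_vertical(image)]
    rotated = image
    for _ in range(3):
        rotated = rotate_90_clockwise(rotated)
        variants.append(rotated)
    return variants


img = [[1, 2],
       [3, 4]]
print(rotate_90_clockwise(img))  # [[3, 1], [4, 2]]
print(len(augment(img)))         # 6
```

Each original image thus yields six training samples, which is one simple way to enlarge a small cytology dataset.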

Metrics: The model's performance should be evaluated using standard metrics such as accuracy, precision, recall, F1-score, the confusion matrix, and ROC-AUC.
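For a binary normal/abnormal task, these metrics follow directly from the confusion matrix. The sketch below also computes specificity and sensitivity (recall), which the abstract reports. It assumes labels are encoded as 1 = abnormal and 0 = normal. The example labels and scores are made up purely for illustration and are not results from this study. ROC-AUC is computed here as the probability that a randomly chosen abnormal image scores higher than a randomly chosen normal one.

```python
def confusion_matrix(y_true, y_pred):
    """Return (TP, TN, FP, FN) counts for binary labels (1 = abnormal, 0 = normal)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_matrix(y_true, y_pred)

    def safe_div(a, b):
        return a / b if b else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)            # also called sensitivity
    return {
        "accuracy": safe_div(tp + tn, len(y_true)),
        "precision": precision,
        "recall": recall,
        "specificity": safe_div(tn, tn + fp),
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


def roc_auc(y_true, scores):
    """AUC = P(random positive scores above random negative); ties count as 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Illustrative data only.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 0, 1]
scores = [0.9, 0.4, 0.8, 0.1, 0.3, 0.6, 0.2, 0.7]
print(confusion_matrix(y_true, y_pred))        # (3, 3, 1, 1)
print(classification_metrics(y_true, y_pred))  # every metric is 0.75 here
print(roc_auc(y_true, scores))                 # 0.9375
```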

Cross-validation: This helps ensure that the model generalizes well and is robust across different subsets of the dataset. During training, the errors made in prediction are backpropagated to update the model's weights.
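A k-fold split can be generated with the standard library alone. The sketch below is a minimal, assumed setup. Indices are shuffled once with a fixed seed, then partitioned into k folds, and each fold serves once as the validation set while the rest form the training set. It is not stratified. With an imbalanced normal/abnormal dataset, a stratified variant that preserves class ratios per fold is often preferable. The choice of `k` and `seed` is an assumption, since the paper does not state them.

```python
import random


def k_fold_indices(n_samples, k=5, seed=42):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # The first (n_samples % k) folds get one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


for fold, (train_idx, val_idx) in enumerate(k_fold_indices(10, k=5)):
    print(fold, len(train_idx), len(val_idx))  # each fold: 8 training, 2 validation indices
```

Averaging the metric from each fold gives a less split-dependent estimate than a single train/test partition.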

3) Prediction:

Inference: The trained ResNet50 model is used to predict the class of new cervical images. It outputs the probability that each image belongs to the normal or the abnormal class.

Post-Processing: Finally, the predicted probabilities are thresholded to make the final binary classification decisions.
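The inference and post-processing steps can be sketched as a softmax over the network's two raw outputs (logits) followed by a threshold. This assumes the output order is [normal, abnormal] and uses a default threshold of 0.5. Neither detail is given in the paper. The logit values in the example are invented for illustration.

```python
import math


def softmax(logits):
    """Convert raw network outputs (logits) into probabilities that sum to 1."""
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(prob_abnormal, threshold=0.5):
    """Final binary decision from the predicted probability of the abnormal class."""
    return "abnormal" if prob_abnormal >= threshold else "normal"


# Assumed output order: index 0 = normal, index 1 = abnormal.
probs = softmax([0.2, 1.5])
p_abnormal = probs[1]
print(round(p_abnormal, 3))                  # 0.786
print(classify(p_abnormal))                  # abnormal
print(classify(p_abnormal, threshold=0.9))   # normal
```

The threshold is a design choice. Lowering it flags more images as abnormal, which raises sensitivity at the cost of specificity, a trade-off that matters in screening.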

Deployment: A user-friendly interface is developed, or the model is integrated into existing medical diagnostic systems, to support healthcare personnel in real-time cervical cancer prediction.

Fig -1 : User Interface

When the 'run' file is executed, the program starts a Django server and provides a link. Copying this link into a browser opens the user interface.

Fig -2 : Sign-Up Page

A new user can register by entering details such as name, password, contact number, email ID, and address. Once these values are entered, the sign-up process is complete.

Fig -3 : Login Screen

After registration, the user can log in with a username and password.

Fig -4 : Training and testing data

Once logged in, the user can train and test the CNN on the data.

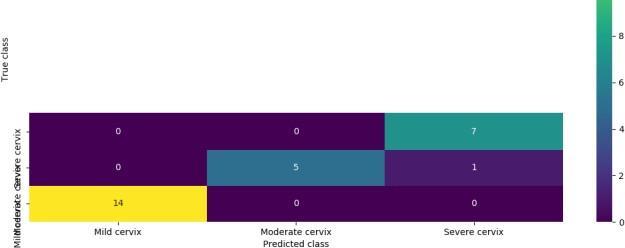

Fig -5 : CNN Algorithm Training Performance

The convolutional neural network (CNN), trained on 80% of the dataset, achieves an accuracy of 96.29%.
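The paper reports training on 80% of the dataset but does not say how the split was made. One common approach, assumed here, is a stratified split: 80% of each class goes to training, so normal and abnormal images keep the same ratio in both parts. The function name, seed and class counts below are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.8, seed=0):
    """Return (train_idx, test_idx), taking train_fraction of each class for training."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        cut = round(len(idxs) * train_fraction)
        train_idx.extend(idxs[:cut])
        test_idx.extend(idxs[cut:])
    return sorted(train_idx), sorted(test_idx)


labels = ["normal"] * 50 + ["abnormal"] * 30   # hypothetical class counts
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))           # 64 16
```

Stratifying keeps the held-out 20% representative, so the reported test accuracy is not skewed by an unlucky class balance.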

Fig -6 : Cancer Detection

Here, an image is uploaded to predict the class/stage of cervical cancer.

Fig -7 : Detection of cervical cancer

When a test image is uploaded, the model predicts its stage, i.e. whether the cervical cancer is low-grade, intermediate-grade, or high-grade.
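For the three-grade output, the predicted grade is the class with the highest probability (argmax). This minimal sketch assumes the network emits one probability per grade in the order low, intermediate, high. That order, the `GRADES` tuple and the example probabilities are assumptions for illustration.

```python
GRADES = ("low-grade", "intermediate-grade", "high-grade")  # assumed output order


def predict_grade(probabilities, labels=GRADES):
    """Return (grade, probability) for the most likely grade."""
    if len(probabilities) != len(labels):
        raise ValueError("expected one probability per grade")
    best = max(range(len(labels)), key=lambda i: probabilities[i])
    return labels[best], probabilities[best]


print(predict_grade([0.10, 0.25, 0.65]))  # ('high-grade', 0.65)
```

Returning the probability alongside the label lets the interface show how confident the prediction is.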

Against this backdrop, the present study proposes a comprehensive CNN framework for cervical cancer prediction and detection. It addresses the current shortcomings of existing systems in terms of accuracy, accessibility, and interpretability. Advanced image preprocessing techniques, a customized CNN architecture, and interpretability mechanisms together suggest considerable progress in automated histopathological image analysis. The accuracy of the proposed framework exceeds 95%. Because early detection and timely intervention are needed to improve outcomes, such accuracy could have a substantial impact on patients. Moreover, transfer learning and data augmentation make the model more representative of clinical conditions, so it should be applicable in both high-resource and low-resource environments. Future work will therefore focus on further developing and testing the system through deployment in actual clinical practice. This will involve continued collaboration with healthcare professionals and refinement based on real-world feedback. This work illustrates what deep learning can achieve in medical diagnosis and suggests that such tools should be integrated routinely into cervical cancer screening protocols alongside conventional diagnostic methods. Ultimately, these efforts aim to support a more efficient healthcare system that reduces both the incidence and the mortality of cervical cancer.

[1] M. Abdulsamad, E. A. Alshareef and F. Ebrahim, "Cervical Cancer Screening Using Residual Learning", pp. 202-212, 2022.

[2] S. Adhikary, S. Seth, S. Das, T. K. Naskar, A. Barui and S. P. Maity, "Feature assisted cervical cancer screening through DIC cell images", Biocybernetics and Biomedical Engineering, vol. 41, no. 3, pp. 1162-1181, 2021.

[3] N. Al Mudawi and A. Alazeb, "A model for predicting cervical cancer using machine learning algorithms", Sensors, vol. 22, no. 11, p. 4132, 2022.

[4] S. Fekri-Ershad and S. Ramakrishnan, "Cervical cancer diagnosis based on modified uniform local ternary patterns and feed forward multilayer network optimized by genetic algorithm", Computers in Biology and Medicine, vol. 144, p. 105392, 2022.