International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 06 | Jun 2025 www.irjet.net p-ISSN: 2395-0072

Pranav Vinod Chaudhari1, Devyani Prakash Badgujar2, Mandar Ravindra Visave3, Akanksha Bharat Chaudhari4, Manisha Shantaram Patil5

1Student, Dept. of AIML, R. C. Patel Institute of Technology, Shirpur, Maharashtra, India

2Student, Dept. of AIML, R. C. Patel Institute of Technology, Shirpur, Maharashtra, India

3Student, Dept. of AIML, R. C. Patel Institute of Technology, Shirpur, Maharashtra, India

4Student, Dept. of AIML, R. C. Patel Institute of Technology, Shirpur, Maharashtra, India

5Assistant Professor, Dept. of AIML, R. C. Patel Institute of Technology, Shirpur, Maharashtra, India

Abstract - Diabetic Retinopathy (DR) is a critical complication of diabetes that leads to progressive vision impairment and, if left untreated, permanent blindness. With the increasing prevalence of diabetes worldwide, early and accurate detection of DR has become essential for timely intervention. Traditional manual screening methods are time-consuming, prone to human error and require skilled ophthalmologists, which limits their scalability. Recent advances in deep learning have enabled the development of automated systems that can detect and classify DR from retinal fundus images with high accuracy. However, most of these models function as “black boxes” and lack transparency, making their predictions difficult to interpret in clinical settings. This paper presents a comprehensive and explainable DR detection pipeline using Convolutional Neural Network (CNN) architectures such as VGG16, Xception, ResNet50 and Sequential CNN. A publicly available real-world dataset of labeled fundus images is used for training and evaluation. Through comparative analysis, the performance of each model is assessed based on key metrics including accuracy, precision, recall and F1-score. The ultimate goal is to aid ophthalmologists with a reliable, accurate and interpretable diagnostic tool, while also laying the groundwork for the future incorporation of explainable AI techniques such as Grad-CAM and synthetic image generation using GANs.

Key Words - Diabetic Retinopathy, Deep Learning, CNN, Medical Imaging, Transfer Learning

1. INTRODUCTION

By 2040, approximately 600 million people are predicted to have diabetes and one third are expected to have diabetic retinopathy [1]. Diabetes reduces life expectancy by five to 10 years [2]. Diabetic retinopathy is the most common microvascular complication in diabetes [3]. It affects people with diabetes and is the result of prolonged high blood glucose levels that damage retinal blood vessels. Early detection can prevent vision loss, but access to ophthalmologists is often limited. Retinal imaging is the most widely used method due to its high sensitivity in detecting retinopathy [3].

As the number of patients with diabetes increases, the number of retinal images produced by screening programs will also increase, placing a large burden on medical experts and healthcare systems. This could be alleviated with an automated system. Studies show that automated systems based on deep learning neural networks can achieve high sensitivity and specificity in detecting referable diabetic retinopathy. Other referable eye complications such as diabetic macular edema and glaucoma have also been explored [3].

Artificial Intelligence (AI), especially deep learning, has become a powerful ally in healthcare. Convolutional Neural Networks (CNNs) can automatically classify retinal images and detect DR stages. Despite their potential, these models often lack transparency in their predictions, which can be a barrier to clinical trust. This research proposes a deep learning pipeline for DR detection using various CNN architectures. We emphasize accuracy, robustness and the foundation for future interpretability enhancements.

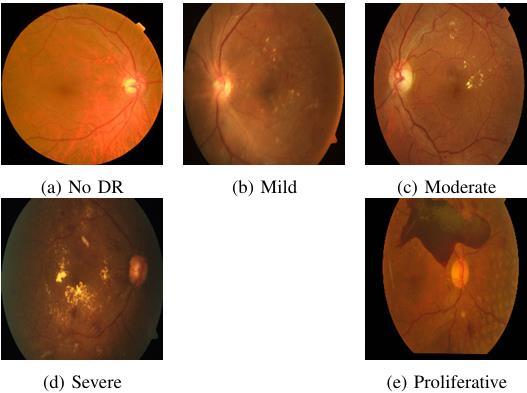

We used a fundus image dataset containing 3630 labeled images across five DR classes: No DR, Mild, Moderate, Severe and Proliferative DR.

2.1 Preprocessing

• All images were resized to 224×224 pixels.

• Pixel intensities were normalized to [0, 1].

• Data augmentation (rotation, flip, brightness) was used to balance classes and reduce overfitting.

• One-hot encoding was used for the labels.
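The normalization and label-encoding steps listed above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' code: the function names `normalize_pixels` and `one_hot` are hypothetical, input pixels are assumed to be 8-bit values (0 to 255), and resizing is assumed to be done beforehand by an image library.

```python
NUM_CLASSES = 5  # No DR, Mild, Moderate, Severe, Proliferative DR


def normalize_pixels(image):
    """Scale 8-bit pixel intensities (0-255) to floats in [0, 1].

    `image` is a nested list of rows, each row a list of pixel values.
    """
    return [[value / 255.0 for value in row] for row in image]


def one_hot(label, num_classes=NUM_CLASSES):
    """Return a one-hot vector for an integer DR grade in [0, num_classes)."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} outside 0..{num_classes - 1}")
    return [1.0 if index == label else 0.0 for index in range(num_classes)]
```

For example, `one_hot(3)` encodes the Severe grade as a vector with a single 1.0 in the fourth position, which is the target format expected by a categorical cross-entropy loss.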

2.2 Dataset Description

We used the publicly available APTOS 2019 Blindness Detection dataset collected by Aravind Eye Hospital, India [4]. It originally had 3630 images, but after dataset balancing it now contains 4530 labeled images rated for DR severity on a scale of 0 to 4. Distribution: 1016 (No DR), 840 (Mild), 999 (Moderate), 790 (Severe), 885 (Proliferative DR). We split the dataset into training (80%), validation (10%) and testing (10%) subsets.
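One way to realize the 80/10/10 split is sketched below. The text does not say whether the split was stratified by class, so the per-class (stratified) splitting, the function name `stratified_split`, the fixed seed and the placeholder image IDs are all assumptions made for illustration; only the class counts come from the paper.

```python
import random

# Class distribution reported in the text (sums to 4530 images).
CLASS_COUNTS = {
    "No DR": 1016,
    "Mild": 840,
    "Moderate": 999,
    "Severe": 790,
    "Proliferative DR": 885,
}


def stratified_split(items_by_class, train_pct=80, val_pct=10, seed=0):
    """Split each class separately so all subsets keep the class balance."""
    rng = random.Random(seed)
    splits = {"train": [], "val": [], "test": []}
    for label, items in items_by_class.items():
        shuffled = list(items)
        rng.shuffle(shuffled)
        # Integer arithmetic avoids floating-point rounding surprises.
        n_train = len(shuffled) * train_pct // 100
        n_val = len(shuffled) * val_pct // 100
        splits["train"] += [(x, label) for x in shuffled[:n_train]]
        splits["val"] += [(x, label) for x in shuffled[n_train:n_train + n_val]]
        # Whatever remains (about 10%) becomes the test subset.
        splits["test"] += [(x, label) for x in shuffled[n_train + n_val:]]
    return splits


# Placeholder IDs stand in for real image file names.
items = {label: [f"{label}_{i}" for i in range(n)]
         for label, n in CLASS_COUNTS.items()}
splits = stratified_split(items)
```

With the reported counts, this sketch yields 3623 training, 451 validation and 456 test images; a different rounding rule would shift a handful of images between subsets.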

• Normal Retina: Clear optic disc, distinct vessels, no hemorrhages or exudates.

• Mild DR: Microaneurysms visible as small red dots.

• Moderate DR: Scattered exudates and hemorrhages.

• Severe DR: Widespread hemorrhages and exudates.

• Proliferative DR: Signs of neovascularization.

To build a robust diabetic retinopathy (DR) detection system, four pre-trained convolutional neural network (CNN) models were adapted and evaluated: Xception, ResNet50, VGG16 and InceptionV3. Each of these architectures offers distinct advantages in terms of depth, computational efficiency and feature representation capabilities, which are critical for the complex task of retinal image analysis [5], [6].

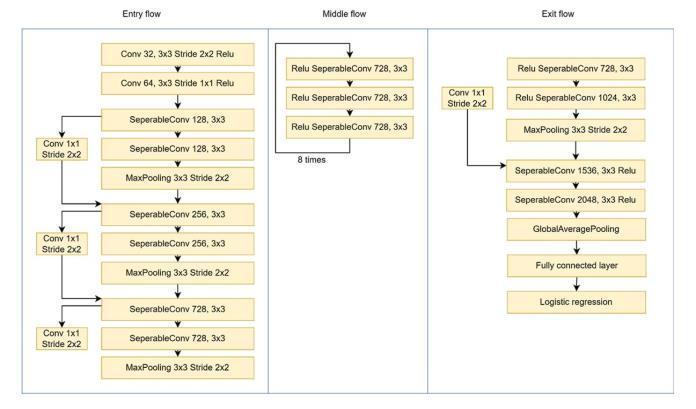

The Xception architecture replaces standard convolutional layers with depthwise separable convolutions, enabling independent spatial and channel-wise filtering. This design significantly reduces model complexity without compromising performance [7]. Structurally, Xception is divided into three primary components: the entry flow, middle flow and exit flow, which progressively learn increasingly abstract representations of input images. Its architecture is well-suited for identifying minute retinal abnormalities such as microaneurysms and hemorrhages. In this project, the Xception model consistently achieved the highest classification accuracy among the compared networks, indicating its effectiveness in fine-grained medical image classification tasks [7].
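To make the complexity reduction concrete, the following sketch compares the weight count of a standard convolution with that of a depthwise separable one. The layer shape used (3×3 kernel, 128 input channels, 256 output channels) is an arbitrary illustrative choice and is not taken from the paper; biases are omitted.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard kernel x kernel convolution (bias omitted)."""
    return kernel * kernel * c_in * c_out


def separable_conv_params(kernel, c_in, c_out):
    """Weights in a depthwise separable convolution (bias omitted).

    Depthwise step: one kernel x kernel spatial filter per input channel.
    Pointwise step: a 1 x 1 convolution that mixes channels.
    """
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


standard = standard_conv_params(3, 128, 256)    # 294912 weights
separable = separable_conv_params(3, 128, 256)  # 1152 + 32768 = 33920 weights
```

For this hypothetical layer the separable form needs roughly 8.7 times fewer weights, because the spatial filtering and the channel mixing are learned separately instead of jointly.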

ResNet50 is a 50-layer deep CNN that incorporates residual connections, or skip connections, to enable stable training of deep models. These connections allow gradients to propagate more efficiently during backpropagation, thereby addressing the vanishing gradient problem common in deep architectures [6]. Though ResNet50 showed moderate training accuracy in this study, its validation performance was promising, indicating potential for further optimization. Its depth and design make it suitable for learning hierarchical and complex visual patterns in retinal fundus images.
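The idea of a residual connection can be illustrated with a toy example on plain vectors. This is a conceptual sketch only: real ResNet50 blocks operate on feature maps with convolutions and batch normalization, and the names `relu` and `residual_block` are hypothetical.

```python
def relu(vector):
    """Element-wise rectified linear unit."""
    return [max(0.0, value) for value in vector]


def residual_block(x, transform):
    """Compute relu(F(x) + x), where F is the learned transformation.

    The "+ x" term is the skip connection: even if F contributes little,
    the input (and, during backpropagation, its gradient) still passes through.
    """
    fx = transform(x)
    if len(fx) != len(x):
        raise ValueError("F(x) must have the same shape as x to be added")
    return relu([a + b for a, b in zip(fx, x)])
```

If `transform` returns all zeros, the block reduces to `relu(x)`, which shows why stacking such blocks does not by itself degrade the signal.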

VGG16 is a well-known CNN model characterized by its uniform use of small (3×3) convolutional kernels throughout the network. Comprising 13 convolutional layers and 3 fully connected layers, the architecture is both deep and straightforward. Despite its relatively large parameter count, VGG16 has proven to be highly effective in transfer learning scenarios [5]. In this research, the model exhibited a strong balance between training and validation accuracy, demonstrating excellent generalization capability for DR classification. Its predictable structure and stability make it an ideal choice for medical imaging problems.
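The "relatively large parameter count" can be reproduced from the layer layout alone. The sketch below assumes the standard ImageNet configuration of VGG16 (224×224×3 input, five 2×2 max-pooling steps, fully connected layers of 4096, 4096 and 1000 units); in the transfer learning setup of this paper the top layers are replaced, so the fine-tuned model has a different total.

```python
# Output channels of the 13 convolutional layers; "M" marks 2x2 max pooling.
VGG16_LAYOUT = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_param_count(input_size=224, in_channels=3, num_classes=1000):
    """Return (convolutional parameters, total parameters) for VGG16."""
    params, channels, size = 0, in_channels, input_size
    for item in VGG16_LAYOUT:
        if item == "M":
            size //= 2  # pooling halves the spatial resolution
        else:
            params += 3 * 3 * channels * item + item  # 3x3 weights + biases
            channels = item
    conv_params = params
    flat = channels * size * size  # 512 * 7 * 7 = 25088 for a 224x224 input
    for units in (4096, 4096, num_classes):
        params += flat * units + units  # dense weights + biases
        flat = units
    return conv_params, params


conv_params, total_params = vgg16_param_count()
```

Most of the roughly 138 million parameters sit in the three fully connected layers, while the 13 convolutional layers account for about 14.7 million.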

The InceptionV3 model employs a sophisticated design that utilizes inception modules, which apply multiple filter sizes in parallel to capture information at different scales. Additionally, it incorporates techniques such as factorized convolutions and dimensionality reduction to enhance computational efficiency while preserving learning capacity. This multi-path approach enables the model to extract a rich set of features from high resolution retinal images. In our experimentation, InceptionV3 demonstrated consistent performance, particularly in distinguishing between intermediate DR stages, due to its ability to detect both coarse and fine patterns [6].

The transfer learning strategy included the following steps to fine-tune the models for DR classification:

• Applied ImageNet pre-trained models: Xception, ResNet50, VGG16 and InceptionV3 [6], [8].

• Removed original top layers using include_top=False.

• Input dimensions: Xception at 299×299, others at 224×224.

• Froze base layers to preserve pre-trained weights.

• Added a custom classification block:
  – Global average pooling
  – Dropout layer (rate = 0.5)
  – Dense layer with softmax activation for 5 DR classes

• Loss function: categorical cross-entropy

• Optimizer: Adam (learning rate = 0.0001)

• Training setup: 25–30 epochs, batch size of 32

• Training callbacks: EarlyStopping, learning rate scheduler

• Augmentation: random flip, rotation, zoom and brightness shift
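The custom classification block and loss listed above can be traced step by step with a small forward-pass sketch in plain Python. It is a didactic stand-in rather than the authors' training code: the function names are hypothetical, the feature map is a nested list instead of a framework tensor, and the frozen backbone that would produce the feature map is not modeled.

```python
import math
import random


def global_average_pool(feature_map):
    """Average an H x W x C feature map (nested lists) over H and W."""
    height, width = len(feature_map), len(feature_map[0])
    sums = [0.0] * len(feature_map[0][0])
    for row in feature_map:
        for pixel in row:
            for channel, value in enumerate(pixel):
                sums[channel] += value
    return [total / (height * width) for total in sums]


def dropout(vector, rate=0.5, training=False, rng=random):
    """Inverted dropout: zero units with probability `rate` while training."""
    if not training:
        return list(vector)  # dropout is a no-op at inference time
    keep = 1.0 - rate
    return [value / keep if rng.random() < keep else 0.0 for value in vector]


def dense_softmax(vector, weights, biases):
    """Dense layer followed by a numerically stable softmax."""
    logits = [sum(w * v for w, v in zip(row, vector)) + b
              for row, b in zip(weights, biases)]
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot_label, probabilities):
    """Loss for one sample: negative log-probability of the true class."""
    return -sum(t * math.log(p)
                for t, p in zip(one_hot_label, probabilities) if t > 0)
```

In a Keras implementation these steps would typically correspond to the `GlobalAveragePooling2D`, `Dropout` and `Dense` layers placed on top of a base model created with `include_top=False`; with untrained (all-zero) weights the head outputs a uniform 0.2 probability for each of the 5 classes.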

| Model | Params | Input Size | Key Features |
|---|---|---|---|
| Xception | ∼22M | 299×299 | Separable convolutions |
| ResNet50 | 25M | 224×224 | Residual connections |
| VGG16 | ∼138M | 224×224 | Uniform 3×3 kernels |
| InceptionV3 | ∼23M | 224×224 | Multi-scale modules |

Table -1: Comparison of CNN Models

Fig-2: Xception Architecture

4. TRAINING AND EVALUATION

4.1 Training Strategy

The training pipeline involved fine-tuning several deep convolutional neural networks to classify retinal fundus images into five diabetic retinopathy (DR) stages: No DR, Mild, Moderate, Severe and Proliferative DR. The dataset used for this task comprised 4,530 annotated fundus images.

To enhance model performance and reduce overfitting, the following preprocessing strategies were employed:

• Image Resizing: All images were resized to 224 × 224 pixels to conform to the input requirements of architectures such as MobileNetV2 and Xception.

• Normalization: Pixel values were scaled to the range [0, 1] to improve optimization consistency and convergence speed [5].

• Data Augmentation: Techniques such as random rotation, flipping, zooming and brightness adjustments were applied to improve generalization and prevent overfitting [6].
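Two of the augmentation operations named above, flipping and brightness adjustment, can be sketched on a normalized grayscale image as follows. The function names, the 50% flip probability and the brightness range of ±0.2 are illustrative assumptions, since the paper does not report its augmentation parameters; rotation and zoom are omitted because they need interpolation from an image library.

```python
import random


def horizontal_flip(image):
    """Mirror each row of an image given as a nested list of pixel values."""
    return [list(reversed(row)) for row in image]


def shift_brightness(image, delta):
    """Add `delta` to every pixel and clip the result back into [0, 1]."""
    return [[min(1.0, max(0.0, value + delta)) for value in row]
            for row in image]


def augment(image, rng, max_delta=0.2):
    """Randomly flip, then apply a random brightness shift.

    `rng` is a random.Random instance so that results are reproducible.
    """
    if rng.random() < 0.5:
        flipped = horizontal_flip(image)
    else:
        flipped = [list(row) for row in image]
    return shift_brightness(flipped, rng.uniform(-max_delta, max_delta))
```

Calling `augment(image, random.Random(0))` repeatedly with different seeds produces varied copies of the same fundus image, which is the mechanism by which augmentation can enlarge under-represented classes.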

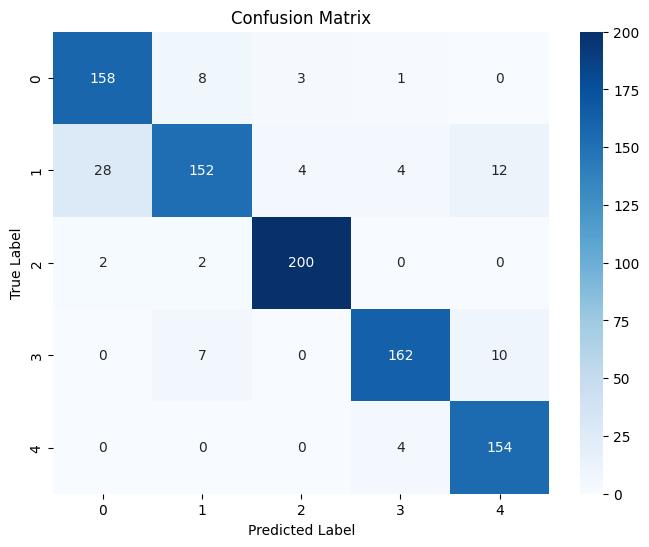

The models were evaluated using standard classification metrics:

• Accuracy: Measures the overall correctness of predictions across all classes.

• Precision: Indicates the ratio of correctly predicted positive observations to total predicted positives, helping minimize false positives.

• Recall (Sensitivity): Represents the proportion of correctly predicted positives among all actual positives, which is vital in medical diagnostics.

• F1-Score: Harmonic mean of precision and recall, particularly effective when dealing with class imbalance [7].

• AUC-ROC: Evaluates the model’s capability to distinguish between classes and is considered robust across imbalanced datasets.
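For a multi-class problem such as five-stage DR grading, accuracy, precision, recall and F1-score can all be derived from the confusion matrix, as in the sketch below. The function names are hypothetical and per-class (one-vs-rest) metrics are shown; the paper does not state which averaging scheme it used, and AUC-ROC is omitted because it requires predicted probabilities rather than hard labels.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """matrix[t][p] counts samples of true class t predicted as class p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for true_label, predicted_label in zip(y_true, y_pred):
        matrix[true_label][predicted_label] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[k][k] for k in range(len(matrix))) / total


def per_class_metrics(matrix):
    """Precision, recall and F1 for each class, treated one-vs-rest."""
    size = len(matrix)
    results = []
    for k in range(size):
        tp = matrix[k][k]
        fp = sum(matrix[row][k] for row in range(size)) - tp
        fn = sum(matrix[k]) - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append({"precision": precision, "recall": recall, "f1": f1})
    return results
```

As a usage example, `per_class_metrics(confusion_matrix(y_true, y_pred, 5))` returns one dictionary per DR grade, which makes it easy to see whether errors concentrate in the clinically critical Severe and Proliferative classes.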

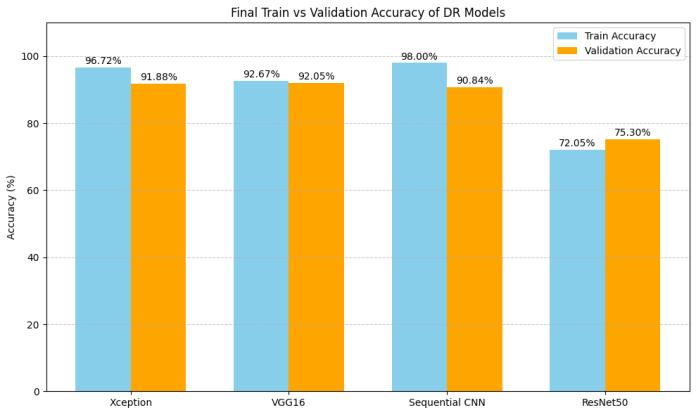

The performance of the CNN models is summarized in Table 2. Notably, the Xception model achieved the best balance between accuracy and generalization.

Table - 2: Model Performance on DR Classification

These findings align with prior research, such as Sahlsten et al., who demonstrated the effectiveness of Inception-based and ensemble models for DR grading [5], and other studies advocating preprocessing and augmentation as critical steps in training robust models [5].

5. RESULTS AND ANALYSIS

Xception outperformed other models in overall accuracy. VGG16 showed stability and good generalization. ResNet50 underperformed, possibly due to shallow fine-tuning or insufficient feature extraction.

6. CONCLUSION AND FUTURE SCOPE

Deep learning, particularly CNN architectures like Xception and VGG16, shows great promise for DR detection. Preprocessing and data augmentation play a critical role in performance.

6.1 Future Scope

• Explainability: Incorporate Grad-CAM and LIME.

• GANs: Use Patho-GAN for synthetic image generation.

• Web Integration: Deploy model in clinical workflows.

We thank Dr. Manisha S. Patil for her constant guidance and support throughout this work.

[1] D. S. W. Ting, C. Y.-L. Cheung, G. Lim, G. S. W. Tan, N. D. Quang, A. Gan, H. Hamzah, R. Garcia-Franco, I. Y. S. Yeo, S. Y. Lee et al., “Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes,” JAMA, vol. 318, no. 22, pp. 2211–2223, 2017. [Online]. Available: https://doi.org/10.1001/jama.2017.18152

[2] S. M. Marshall and A. Flyvbjerg, “Prevention and early detection of vascular complications of diabetes,” BMJ, 2006. [Online]. Available: https://www.bmj.com/content/333/7566/475/rapidresponses

[3] J. Sahlsten, J. Jaskari, J. Kivinen, L. Turunen, E. Jaanio, K. Hietala, and K. Kaski, “Deep learning fundus image analysis for diabetic retinopathy and macular edema grading,” Scientific Reports, 2019. [Online]. Available: https://doi.org/10.1038/s41598-019-47181-w

[4] M. Herrero-Tudela, R. Romero-Oraá, R. Hornero, G. C. Gutiérrez Tobal, M. I. López, and M. García, “An explainable deep-learning model reveals clinical clues in diabetic retinopathy through shap,” Biomedical Signal Processing and Control, Elsevier, 2024. [Online]. Available: https://doi.org/10.1016/j.bspc.2024.107328

[5] J. Sahlsten, J. Jaskari, J. Kivinen, L. Turunen, E. Jaanio, K. Hietala, and K. Kaski, “Deep learning fundus image analysis for diabetic retinopathy and macular edema grading,” Scientific Reports, 2019. [Online]. Available: https://doi.org/10.1038/s41598-019-47181-w

[6] M. Herrero-Tudela, R. Romero-Oraá, R. Hornero, G. C. Gutiérrez Tobal, M. I. López, and M. García, “An explainable deep-learning model reveals clinical clues in diabetic retinopathy through shap,” Biomedical Signal Processing and Control, vol. 102, p. 107328, 2025. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S1746809424013867

[7] H. S. Alghamdi, “Towards explainable deep neural networks for the automatic detection of diabetic retinopathy,” Applied Sciences, vol. 12, no. 19, 2022. [Online]. Available: https://www.mdpi.com/2076-3417/12/19/9435

[8] Y. Niu, L. Gu, Y. Zhao, and F. Lu, “Explainable diabetic retinopathy detection and retinal image generation,” IEEE Journal of Biomedical and Health Informatics, vol. 26, no. 1, pp. 44–55, 2022.

© 2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal | Page444