International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN:2395-0072


Punithavathi Arikrishnan1 and Dr. Padmapriya Arumugam2

1 Research Scholar, Department of Computer Science, Alagappa University, Karaikudi, Sivaganga, Tamil Nadu, 630003, India.

2 Professor & Head, Department of Computer Science, Alagappa University, Karaikudi, Sivaganga, Tamil Nadu, 630003, India.

ABSTRACT- The ability to accurately predict healthcare outcomes is vital for successful disease management. The purpose of this work is to undertake a complete evaluation of several supervised machine learning algorithms for predicting health outcomes using four distinct datasets: Polycystic Ovary Syndrome (PCOS), Breast Cancer, Heart Disease, and Lung Cancer. The research includes preprocessing the data, selecting the most significant features, and training seven prominent machine learning models: Random Forest, K-Nearest Neighbors (KNN), Gaussian Naive Bayes, Logistic Regression, CatBoost, XGBoost, and LightGBM. The models were tested using important performance metrics such as accuracy, precision, recall, and F1-score. Additionally, the training and prediction times of the models were considered to assess their practical applicability. The results showed that Logistic Regression consistently outperformed the other models across the datasets, achieving the highest average accuracy, precision, recall, and F1-score. The model also exhibited relatively low training and prediction times, making it a promising choice for real-world healthcare applications. Other top-performing models included Random Forest and XGBoost, which also exhibited strong predictive capabilities. The findings of this study provide valuable insights into the comparative strengths and weaknesses of various ML algorithms for predicting health outcomes. The results can inform the selection of appropriate models for specific healthcare tasks, ultimately contributing to the development of more accurate and efficient decision support systems in the healthcare domain.

Keywords: Machine Learning, PCOS, Heart disease, Breast cancer, Lung cancer, Logistic Regression

Machine learning, a branch of artificial intelligence, trains algorithms on patterns in historical data so that they can make predictions and decisions without being explicitly programmed. Its application in healthcare has transformed the approaches that researchers and practitioners take to disease prediction, notably through predictive tools that help automate diagnostic procedures. The use of machine learning in disease prediction therefore has the potential to ease the burden on healthcare practitioners through more accurate diagnoses, reduced costs, and timely interventions. Precise prediction of the course of diseases has now become one of the most important tasks. The potential of insights from data within the healthcare sector opens new avenues for innovation; hence, there is increasing interest in utilizing machine learning for various purposes. This work focuses on machine learning algorithms used for predicting the outcome of four major diseases: Polycystic Ovary Syndrome (PCOS), breast cancer, heart disease, and lung cancer. These four were selected because they represent a wide spectrum of disorders that differ in their complexity, prevalence, and importance to public health. For instance, PCOS is one of the most common endocrine disorders among women of reproductive age, but its complex pathophysiology and numerous symptoms have often caused its treatment to be neglected. Meanwhile, breast cancer remains a common cancer in all parts of the world, with the survival rate largely affected by the time of detection. Heart disease and lung cancer have historically been in the foreground, both being leading causes of morbidity and mortality.

Since machine learning algorithms can handle large and complex data of different types, such as numerical, categorical, and unstructured data, they are useful in disease prediction. Datasets commonly used in healthcare include electronic health records (EHR), clinical measures, imaging data, genetic data, and patient demographics. Ensuring that the patterns derived from such data are meaningful enough to predict the right state or stage of a disease can be quite difficult.
Machine learning algorithms generally learn a relation between input features and predefined outcomes by training on labeled data. Data preprocessing and feature selection are the two major processes that need to be carried out before machine learning models are applied. Feature selection, one of the significant tasks in machine learning, is the process of choosing the features in a dataset that are most relevant and useful for making correct predictions. It is, therefore, very


important to find a subset of the most informative features to establish reliable prediction models. After preprocessing and feature selection, the datasets were used to train machine learning models. During training, the models learn the clinical characteristics in the dataset that govern the disease outcome. Each algorithm has a different mode of learning. In recent years, many machine learning algorithms have been proposed and enhanced. This paper presents results for seven models: Logistic Regression, CatBoost, XGBoostRF, Random Forest, K-Nearest Neighbors, Gaussian Naive Bayes, and LightGBM. Accuracy, precision, recall, and F1-score are the metrics used to compare how effectively these models, trained on four different disease datasets, predict disease outcomes. Performance was evaluated on test datasets that were not exposed to any part of the training process. In addition, training and prediction times are considered in order to appraise applicability in clinical environments where timely decisions are critical. The results of this study provide valuable insights into the relative advantages and disadvantages of various machine learning models with respect to disease prediction.

Asif et al. [5] noted that prior cardiovascular disease prediction research has explored a variety of machine learning techniques to enhance diagnostic accuracy. Previous research used algorithms such as J48, K-Nearest Neighbor, and Random Forest, yielding accuracies ranging from 56.76% to 87%. Ensemble methods, such as hard voting classifiers, have shown great promise, reaching up to 90% accuracy in some circumstances. Their study contributed by testing twelve machine learning algorithms and reached 92% accuracy using ensemble voting classifiers, highlighting machine learning’s potential to improve predictive accuracy in cardiovascular disease. Ali et al. [4] discussed cardiac disease prediction using a number of supervised machine learning techniques, assessing their performance on a Kaggle dataset. The study found that the RF algorithm outperformed the others, scoring highest on the performance metrics, which makes it a viable tool for early-stage heart disease prediction. The work underlines the ability of machine learning to improve clinical decision making and reduce misdiagnosis rates. Sawhney et al. [16] conducted a comparison of various artificial intelligence models for the early detection of chronic kidney disease (CKD). The study compared random forests, support vector machines, and deep neural networks (DNN), concentrating on accuracy, specificity, and sensitivity. The DNN model outperformed all of the other models, with the highest accuracy. This study highlights the ability of AI to improve early detection of CKD. Khanam and Foo [12] compared various machine learning methods for diabetes prediction. Using

the PIMA Indian Diabetes dataset, this research investigated techniques such as support vector machine (SVM), logistic regression (LR), k-nearest neighbors (KNN), decision tree (DT), and random forests (RF). The study found that the RF algorithm surpassed the others in performance metrics. Alam Suha [3] investigated the prediction of Polycystic Ovary Syndrome (PCOS) using machine learning approaches based on patient symptom data and ovarian ultrasound images. This work assessed various algorithms for predicting PCOS, including support vector machines (SVM) and convolutional neural networks (CNN). The study emphasized the CNN model’s superior performance in reliably detecting PCOS from ultrasound images, and it combined machine learning with medical imaging to improve diagnostic accuracy and patient outcomes. Suha and Islam [19] carried out a comprehensive study of computer-aided strategies for detecting PCOS. The study compared various machine learning algorithms, such as support vector machines (SVM), random forests (RF), and convolutional neural networks (CNN), concentrating on accuracy and efficiency. The analysis highlighted CNNs’ potential for identifying PCOS from ultrasound images, as well as the importance of high-quality data and effective feature selection algorithms. Iftikhar et al. [11] analyzed the performance of various machine learning models for predicting chronic renal disease. The study utilized a dataset from the Buner district in Khyber Pakhtunkhwa, Pakistan. The SVM with the Laplace kernel function outperformed the other models on the performance metrics. Akkaya et al. [2] conducted a study on heart disease prediction using various machine learning models. The research evaluated algorithms such as logistic regression, support vector machine, k-nearest neighbors, decision tree, and random forests.
The study found that the RF algorithm achieved the highest accuracy among the models. Hassan et al. [9] conducted a study on predicting chronic kidney disease (CKD) using machine learning techniques on patients’ clinical data. The study evaluated various algorithms, including neural networks (NN) and bagging tree models (BTM), and identified the exemplary performance of RF, with high accuracy. Ahmad et al. [1] presented an analysis of the optimum medical diagnosis of heart disease using machine learning techniques, with and without sequential feature selection. The research evaluated algorithms such as linear discriminant analysis (LDA), RF, GBC, DT, SVM, and KNN. The analysis found that RF and DT with sequential feature selection achieved the highest accuracy, demonstrating the importance of feature selection in enhancing model performance. Chotrani [6] conducted an in-depth study of various machine learning models for predicting disease. The study evaluated ML algorithms across multiple datasets and highlighted the superior performance of ensemble methods, particularly random forests (RF). Gupta and Gupta [8] conducted a comparison of deep learning methods for predicting


breast cancer survivability. The study evaluated models such as artificial neural networks (ANN), Restricted Boltzmann Machines (RBM), Deep Autoencoders, and Convolutional Neural Networks (CNN). The RBM model achieved the highest accuracy, followed by Deep Autoencoders and CNN. Uddin et al. [20] carried out a comparative performance analysis of various K-nearest neighbour (KNN) algorithm variants for disease prediction. The study evaluated different KNN variants, including adaptive KNN, locally adaptive KNN, classic KNN, k-means clustering KNN, mutual KNN, fuzzy KNN, Hassanat KNN, and generalized mean distance KNN. The research found that the Hassanat KNN variant achieved the highest average accuracy (83.62%), followed by the ensemble KNN (82.34%). Sharma and Mishra [17] conducted a performance analysis of machine learning based optimized feature selection approaches for breast cancer diagnosis. The research utilized three feature selection methods: correlation-based selection, information gain-based selection, and sequential feature selection. The ensemble-based Max Voting Classifier, combining the top three performing models, achieved an accuracy of 99.41%. Debal and Sitote [7] discussed the prediction of chronic kidney disease (CKD) using machine learning techniques. The study evaluated models such as random forests (RF), support vector machines (SVM), and decision trees (DT), focusing on both binary and multiclass classification for CKD stages. The research highlights the superior performance of the RF model, particularly when combined with recursive feature elimination and cross-validation, which achieved the best results among the tested models in terms of accuracy, precision, F1-score, recall, specificity, and sensitivity. Ramesh et al. [14] conducted a study on predictive analysis of heart diseases using various machine learning approaches.
The research evaluated algorithms such as Naive Bayes, support vector machine (SVM), logistic regression (LR), decision tree (DT), random forests (RF), and k-nearest neighbors (KNN). The study found that KNN with eight neighbors demonstrated superior performance in terms of precision, accuracy, effectiveness, and sensitivity. Srikanth [18] explored chronic kidney disease (CKD) prediction using various machine learning algorithms. The research highlighted the superior performance of the RF algorithm in terms of accuracy, F1-score, precision, recall, Jaccard score, and log loss. Ibrahim and Abdulazeez [10] reviewed seven machine learning algorithms for diagnosis from medical databases, including Decision Tree, Logistic Regression, K-nearest neighbor, K-means clustering, Naive Bayes, and Random Forest. The review found that many algorithms, such as Decision Tree, KNN, Random Forest, and SVM, showed good predictive accuracy. Rawal [15] explored breast cancer prediction using four machine learning algorithms: logistic regression (LR), SVM, KNN, and RF. This research work involves seven phases: data preprocessing,

data preparation, feature selection, feature projection, feature scaling, model selection, and prediction. The research highlighted that the SVM model had the best performance in terms of efficiency and effectiveness, based on recall and accuracy. Li et al. [13] discussed different machine learning classifiers, including Support Vector Machine (SVM), Naive Bayes (NB), Logistic Regression (LR), K-Nearest Neighbor (KNN), Decision Tree (DT), and Artificial Neural Network (ANN), for heart disease prediction. The researchers utilized feature selection algorithms such as Minimal Redundancy Maximal Relevance (MRMR), Relief, Local Learning Based Feature Selection (LLBFS), and Least Absolute Shrinkage Selection Operator (LASSO). Additionally, they proposed a novel feature selection algorithm, Fast Conditional Mutual Information (FCMIM). The key contributions of this research are addressing feature selection challenges using both standard and novel algorithms; demonstrating that the proposed FCMIM-SVM combination outperformed the other models, achieving a classification accuracy of 92.37%; and highlighting the importance of selecting relevant features for increasing model accuracy and computational efficiency. The work concluded that the FCMIM-SVM model is effective for heart disease diagnosis.

This section outlines the research steps, as illustrated in Figure 1. It covers the steps taken for data collection, preprocessing, feature selection, data modeling, model evaluation, and data visualization. Each step is designed to ensure the accuracy and reliability of this research. The following subsections provide a detailed description of each step.

Figure 1: Block Diagram Illustrating the Evaluation of Machine Learning Models for Disease Prediction


Four datasets used for disease prediction were gathered from the Kaggle repository. The four datasets, each covering a specific medical condition, are:

1. PCOS with and without infertility Dataset: It contains 541 rows of patient data, including clinical measurements and PCOS status (yes or no). There are two different datasets relevant to PCOS:

o The PCOS with Infertility dataset contains data on patients with PCOS and their associated clinical measurements.

o The PCOS without Infertility dataset includes similar clinical measurements, but for patients without infertility.

Finally, the two datasets are merged on the ‘Patient File No.’ field, and irrelevant columns are dropped to form a comprehensive dataset.

2. Breast Cancer Dataset: It has 4024 rows and 16 columns of clinical measurements related to breast cancer (BC) diagnosis.

3. Heart Disease Dataset: This dataset consists of 303 rows and 14 columns of patient data, including age, sex, cholesterol levels, and various clinical characteristics linked to heart health.

4. Lung Cancer Dataset: This dataset provides an overview of potential contributors to cancer risk, comprising 1000 rows and 26 columns.
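The merge of the two PCOS files on ‘Patient File No.’ might be sketched with pandas as follows. This is only an illustration under stated assumptions: the tiny frames, every column name other than ‘Patient File No.’, the inner join, and the choice of which column counts as irrelevant are all hypothetical, since the text does not specify them.

```python
import pandas as pd

# Hypothetical miniature stand-ins for the two PCOS files. Only the key
# column 'Patient File No.' is named in the text; the rest is invented.
with_infertility = pd.DataFrame({
    "Patient File No.": [1, 2, 3],
    "PCOS (Y/N)": [0, 1, 0],
    "Marker A": [1.9, 2.4, 3.1],
    "Unnamed: 3": [None, None, None],  # stand-in for an irrelevant column
})
without_infertility = pd.DataFrame({
    "Patient File No.": [1, 2, 3],
    "Age (yrs)": [28, 36, 33],
    "BMI": [19.3, 24.9, 25.3],
})

# Join the two files on the shared patient identifier (inner join assumed).
merged = pd.merge(
    with_infertility, without_infertility, on="Patient File No.", how="inner"
)

# Drop columns judged irrelevant to the prediction task.
merged = merged.drop(columns=["Unnamed: 3"])
print(merged.shape)
```

An inner join keeps only patients present in both files; a different join type may be more appropriate if the two files do not cover the same patients.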

3.2 Data Preprocessing

The initial step of data preprocessing involves thorough data cleaning to prepare the datasets for analysis. It includes:

Handling Missing Values: Missing values were handled using median imputation, a method that is robust against outliers.

Column Renaming: Column names were stripped of leading and trailing spaces to ensure consistency.

Dropping Features: Irrelevant features were removed, and the datasets were checked to ensure that no duplicate records remained.
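The preprocessing steps in this subsection (column-name stripping, dropping irrelevant features, duplicate removal, median imputation, and one-hot encoding of categorical features) can be sketched with pandas. The helper name `preprocess`, the toy data, and the order in which the steps are applied are assumptions made for illustration; the paper does not give its exact code.

```python
import numpy as np
import pandas as pd

def preprocess(df: pd.DataFrame, drop_cols=()) -> pd.DataFrame:
    """Hypothetical helper mirroring the described cleaning steps."""
    df = df.copy()
    # Strip leading and trailing spaces from column names.
    df.columns = df.columns.str.strip()
    # Drop irrelevant features, then remove duplicate rows.
    df = df.drop(columns=list(drop_cols), errors="ignore")
    df = df.drop_duplicates()
    # Median imputation for numeric columns.
    num_cols = df.select_dtypes(include="number").columns
    df[num_cols] = df[num_cols].fillna(df[num_cols].median())
    # One-hot encode the remaining (non-numeric) columns into 0/1 variables.
    cat_cols = df.select_dtypes(exclude="number").columns
    return pd.get_dummies(df, columns=list(cat_cols), dtype=int)

# Toy data (invented): a padded column name, a missing value, a duplicate row.
raw = pd.DataFrame({
    " Age ": [25.0, np.nan, 40.0, 40.0],
    "Sex": ["F", "M", "F", "F"],
    "Notes": ["a", "b", "c", "c"],
})
clean = preprocess(raw, drop_cols=["Notes"])
print(clean)
```

The median is computed after duplicates are removed, so repeated records do not skew the imputed value.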

Data Transformation: Categorical features were transformed using one-hot encoding, converting them into binary (0 and 1) variables to facilitate machine learning.

3.3 Feature Selection

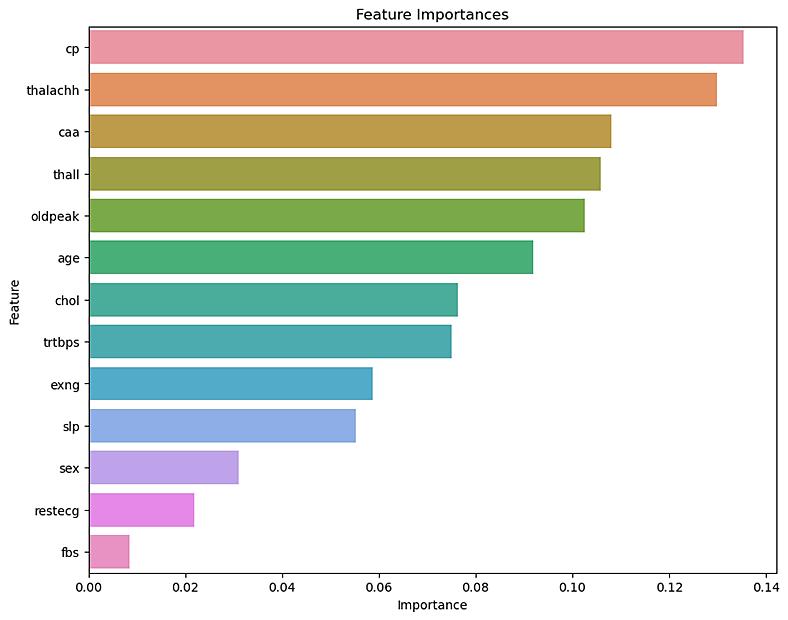

This section covers the feature importance analysis used to select the top-ranking features, as visualized in the figures below. A Random Forest (RF) classifier is used to evaluate the importance of the various features in each dataset (PCOS, BC, HD, LC). Feature importance scores are computed, allowing the selection of the top-performing features that contribute most to predictive accuracy. This selection aims to reduce dimensionality and enhance computational efficiency. Finally, a bar plot is generated to visualize the importance of the top features (see Figures 2, 3, 4, and 5).
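A minimal sketch of Random Forest based feature ranking with scikit-learn is shown below. It assumes synthetic data from `make_classification` in place of the medical datasets, and the helper name `top_features`, the number of trees, and the cut-off `k=5` are illustrative choices that the paper does not state.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for a medical dataset: 10 features, 3 of them informative.
X_arr, y = make_classification(
    n_samples=300, n_features=10, n_informative=3, n_redundant=0, random_state=0
)
X = pd.DataFrame(X_arr, columns=[f"feature_{i}" for i in range(10)])

def top_features(X: pd.DataFrame, y, k: int = 5, seed: int = 0) -> pd.Series:
    """Fit a Random Forest and return the k highest importance scores."""
    rf = RandomForestClassifier(n_estimators=200, random_state=seed)
    rf.fit(X, y)
    importances = pd.Series(rf.feature_importances_, index=X.columns)
    return importances.sort_values(ascending=False).head(k)

top = top_features(X, y, k=5)
X_selected = X[top.index]  # reduced feature matrix used for modeling
print(top)
```

The resulting `top` series can be passed directly to a bar plot to obtain a figure of the kind described above.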


Figure 4: Feature importance analysis for Heart Disease Dataset

Figure 5: Feature importance analysis for Lung Cancer Dataset

In the data modeling stage, multiple machine learning models are trained with labeled data under supervised learning. The models considered are Logistic Regression, Gaussian Naive Bayes, Random Forest, K-Nearest Neighbor, CatBoost, XGBoostRF, Support Vector Machine, and LightGBM. A more detailed explanation of each machine learning model follows:

1. Random Forest: In order to improve accuracy and manage overfitting, this ensemble learning model constructs several decision trees during the training phase and combines their output. A random subset of the data is used to train each tree, and a majority vote is used to determine the final classification prediction. This technique contributes to the model’s increased robustness.

2. Logistic Regression: It is a statistical technique for binary classification that calculates the likelihood that an input belongs to a particular class. It squeezes a linear equation’s output between 0 and 1 using the logistic function. Despite its name, it is a common choice for many classification problems since it is a linear model that is easy to implement and interpret.

3. Gaussian Naive Bayes: This probabilistic classifier applies Bayes’ theorem under the assumption that the features are conditionally independent of one another given the class label. It further assumes that each feature’s continuous values follow a Gaussian distribution. This model is renowned for its simplicity and speed, and it works especially well with high-dimensional datasets.

4. K-Nearest Neighbor (KNN): A non-parametric, instance-based learning method for regression and classification. KNN classifies a data point according to the majority class of its K nearest neighbors in the feature space.

5. Support Vector Machine (SVM): A supervised learning model that works well for both regression and classification applications. By determining which hyperplane in the feature space best divides the classes, it maximizes the margin between the nearest points of the classes. With a range of kernel functions, SVM can handle nonlinear data and performs well in high-dimensional domains.

6. CatBoost: A gradient boosting approach that handles categorical information without requiring a lot of preprocessing. Ordered boosting is used to improve accuracy and decrease overfitting.

7. XGBoostRF: A gradient-boosted decision tree solution intended for efficiency and speed. XGBoostRF combines the advantages of XGBoost and Random Forest. Because of its efficacy and scalability, it is frequently utilized in real-world applications and machine learning contests.

8. LightGBM: A gradient boosting framework that uses tree-based learning methods. LightGBM can handle massive datasets with less memory utilization because of its highly efficient and scalable design. It is renowned for its speed and effectiveness in tasks involving both regression and classification.
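As one concrete illustration of the models listed above, the majority-vote rule of K-Nearest Neighbor can be sketched in plain Python. This is a teaching sketch rather than the implementation used in the study: the function name `knn_predict`, the Euclidean distance, `k=3`, and the toy points are all assumptions.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    nearest_labels = [label for _, label in distances[:k]]
    # The most frequent label among the k neighbors is the prediction.
    return Counter(nearest_labels).most_common(1)[0][0]

# Two well-separated toy clusters (invented data).
train_X = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
train_y = [0, 0, 0, 1, 1, 1]

print(knn_predict(train_X, train_y, (1.1, 1.0)))
print(knn_predict(train_X, train_y, (5.1, 5.0)))
```

Because KNN stores the training data and defers all computation to prediction time, its training cost is negligible while its prediction cost grows with the size of the training set.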


Prior to the training phase, each dataset was divided by a train-test split into two parts: training data (70 percent) and testing data (30 percent). Every machine learning model was trained using the training data.
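The 70/30 split and the training step might look as follows in scikit-learn. The sketch uses synthetic data and Logistic Regression as a single representative model; the `random_state`, the `max_iter` value, and the use of `time.perf_counter` to record training and prediction times are assumptions, not details reported in the paper.

```python
import time
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for one of the disease datasets.
X, y = make_classification(n_samples=500, n_features=8, random_state=42)

# 70 percent for training, 30 percent held out for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, random_state=42
)

model = LogisticRegression(max_iter=1000)

# Time the training phase.
start = time.perf_counter()
model.fit(X_train, y_train)
train_time = time.perf_counter() - start

# Time the prediction phase on the unseen test split.
start = time.perf_counter()
y_pred = model.predict(X_test)
predict_time = time.perf_counter() - start

print(len(X_train), len(X_test), train_time, predict_time)
```

The same loop can be repeated for each model so that accuracy and timing are measured on an identical split.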

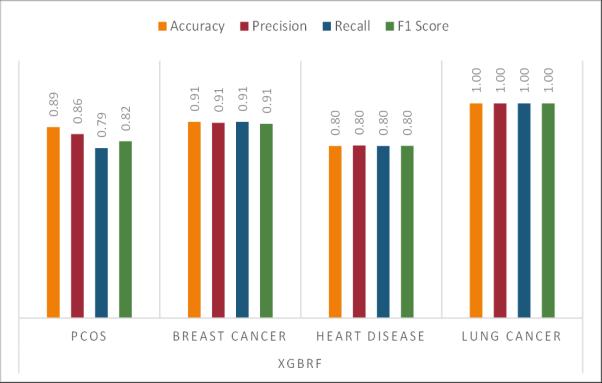

The performance metrics (accuracy, precision, F1-score, and recall) were evaluated on the test datasets, which were not exposed to any part of the training phase (see Figures 6, 7, 8, and 9). Additionally, the training time and prediction time of each model were recorded.

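Accuracy: The proportion of correctly predicted cases among all instances. Here TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Precision: The ratio of true positive predictions to all predicted positives, which indicates the quality of the positive class predictions.

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

Recall: The ratio of true positive predictions to all actual positives, highlighting the model’s capacity to identify every relevant case.

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

F1-score: The harmonic mean of precision and recall, a balanced metric that takes both false positives and false negatives into consideration.

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$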
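The four metrics defined above can be computed directly from the confusion-matrix counts. The following plain-Python sketch covers the binary case only; the function name `classification_metrics`, the zero-division fallback to 0.0, and the toy labels are assumptions, and the averaging scheme used in the paper for multi-class data is not stated.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1-score for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    tn = sum(t != positive and p != positive for t, p in pairs)  # true negatives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives

    accuracy = (tp + tn) / len(pairs)
    # Fall back to 0.0 when a denominator is zero (an assumed convention).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Toy example: 3 TP, 3 TN, 1 FP, 1 FN.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

With three true positives, three true negatives, one false positive, and one false negative, all four metrics evaluate to 0.75 in this example.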


3.6 Data Visualization

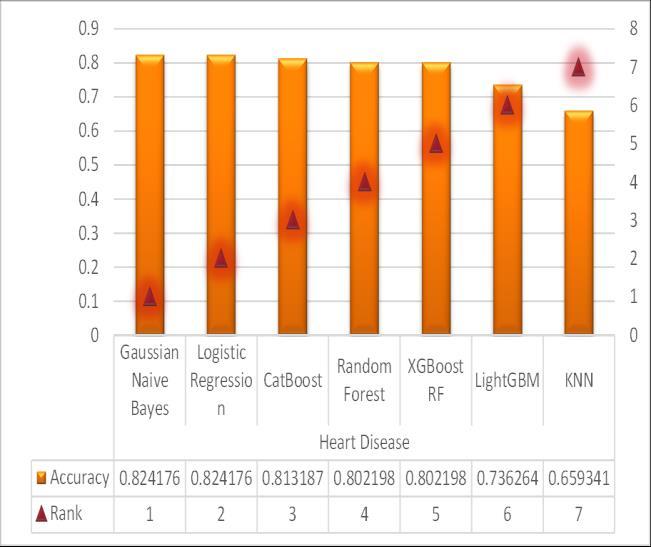

Using libraries such as Seaborn and Matplotlib, bar plots were generated to depict the model comparison (MC) in terms of the performance metrics (accuracy, precision, F1-score, and recall) (see Figures 10, 11, 12, 13, 14, 15, 16, 17, and 18).


Figure 11: Performance Analysis of RF for 4 datasets

Figure 13: Performance Analysis of GNB for 4 datasets

Figure 14: Performance Analysis of CB for 4 datasets

Figure 15: Performance Analysis of LR for 4 datasets


In Figure 18, the primary axis is used to plot the training time and the secondary axis the prediction time, for better visualization. As shown in Figures 19, 20, 21, and 22, another bar plot was generated to visualize the rank of each model, where lower ranks indicate better performance.
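A plot with training time on the primary axis and prediction time on a secondary axis can be sketched with Matplotlib's `twinx`. The timing values below are invented placeholders, not results from the study, and the choice of seconds as the unit, the colors, and the bar layout are likewise assumptions.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so no display is needed
import matplotlib.pyplot as plt
import numpy as np

models = ["LR", "GNB", "RF"]
train_s = [0.02, 0.01, 0.35]       # hypothetical training times (seconds)
predict_s = [0.001, 0.001, 0.020]  # hypothetical prediction times (seconds)

x = np.arange(len(models))
width = 0.4

fig, ax_train = plt.subplots()
ax_pred = ax_train.twinx()  # secondary y-axis sharing the same x-axis

bars_train = ax_train.bar(x - width / 2, train_s, width,
                          color="tab:blue", label="Training time")
bars_pred = ax_pred.bar(x + width / 2, predict_s, width,
                        color="tab:orange", label="Prediction time")

ax_train.set_xticks(x)
ax_train.set_xticklabels(models)
ax_train.set_ylabel("Training time (s)")
ax_pred.set_ylabel("Prediction time (s)")
ax_train.legend(handles=[bars_train, bars_pred], loc="upper left")
fig.tight_layout()
# fig.savefig("timing_comparison.png") would write the figure to disk.
```

Separate axes keep the much smaller prediction times readable next to the training times, which is the motivation given for the secondary axis.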



Figures 19, 20, 21, and 22 use a secondary axis to plot the rank of the ML models for clearer visualization.
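One way to derive such ranks, where rank 1 is best, is to rank the models by a metric in descending order. The sketch below uses pandas and only the accuracy values quoted in the results discussion; ranking on accuracy alone and resolving ties with `method="min"` are assumptions, since the paper does not describe how its ranks were computed.

```python
import pandas as pd

# Breast Cancer accuracies (%) as quoted in the results discussion.
bc_accuracy = pd.Series({"RF": 91.56, "CatBoost": 90.89, "LR": 89.90})
# Higher accuracy receives the lower (better) rank.
bc_rank = bc_accuracy.rank(ascending=False, method="min").astype(int)

# Heart Disease accuracies (%) for the two tied best models.
hd_accuracy = pd.Series({"GNB": 82.42, "LR": 82.42})
# With method="min", tied models share the best rank among them.
hd_rank = hd_accuracy.rank(ascending=False, method="min").astype(int)

print(bc_rank.to_dict())
print(hd_rank.to_dict())
```

A combined rank across several metrics could be obtained by averaging the per-metric ranks, although that is also only one possible convention.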

This research presents the performance of seven machine learning models on four different medical datasets: PCOS, Breast Cancer (BC), Heart Disease (HD), and Lung Cancer (LC). Each model was analyzed based on its performance metrics. On the PCOS dataset, Logistic Regression reached an accuracy of 90.80% and the highest F1-score, 85.15%, reflecting accurate prediction and a balance between false negatives and false positives. Random Forest achieved 89.57% accuracy but exhibited slightly lower recall and F1 scores, indicating reduced effectiveness in identifying positive cases. The other models (KNN, Gaussian Naive Bayes, CatBoost, XGBoost, and LightGBM) performed reasonably well, with accuracies between 79.14% and 88.96%.

RF performed best on the Breast Cancer dataset, with an accuracy of 91.56% and very good precision and recall values. Next came CatBoost, with 90.89%, but its very long training time made it less practical than RF. Logistic Regression also showed good results (89.90%), lagging slightly behind these models. On the Heart Disease dataset, Gaussian Naive Bayes and Logistic Regression performed best, each achieving an accuracy of 82.42% with well-balanced metrics. Though competitive, Random Forest, CatBoost, and XGBoost did not outperform the simpler models on this dataset, which achieved satisfactory results with less training time. The Lung Cancer dataset is an exceptional case in which all the metrics were perfect at 100% for Random Forest, KNN, CatBoost, and XGBoost. This suggests that it may be one of the easiest datasets to classify. Gaussian Naive Bayes, while not perfect, performed well with 91.67% accuracy, making it an effective alternative model. Overall, as shown in Figure 23, Logistic Regression was found to be quite consistent across all datasets, and it performed best on the PCOS dataset in particular (see Figure 19). Random Forest performed remarkably well in the classification tasks for both Breast Cancer and Lung Cancer, while Gaussian Naive Bayes turned out to be the best model for heart disease prediction. Although CatBoost and XGBoost gave promising results, they were repeatedly outperformed by simpler models in both accuracy and computational efficiency once training and prediction times are taken into account. This reflects the fact that, although complex models can achieve high accuracy, simpler models like Logistic Regression and Random Forest yield better efficiency with good performance.

This study highlighted various benefits and drawbacks of different machine learning models applied to various medical datasets. Logistic Regression and Random Forest were consistently versatile, with Logistic Regression performing better on the PCOS dataset, whereas Random Forest performed very well on the Breast Cancer and Lung Cancer datasets. Gaussian Naive Bayes offered a good balance between performance and computational efficiency on the Heart Disease dataset. Simple models such as Logistic Regression and Gaussian Naive Bayes may be used where speed and efficiency are required. Complex models like Random Forest and CatBoost are suitable for scenarios requiring high accuracy, provided there is sufficient time for training. In summary, selecting the best machine learning model for medical prediction depends on balancing accuracy, computational efficiency, and dataset characteristics.


CRediT Author Statement

Conceptualization: Padmapriya Arumugam; Methodology: Punithavathi Arikrishnan; Formal Analysis: Punithavathi Arikrishnan; Validation: Padmapriya Arumugam; Writing - original draft preparation: Padmapriya Arumugam and Punithavathi Arikrishnan; Writing - review and editing: Padmapriya Arumugam and Punithavathi Arikrishnan.

ACKNOWLEDGEMENT

This work was supported by the Alagappa University Research Fund (AURF) - Research Fellowship [vide Letter No. Rc.R2/Ph.D./R20223225/AURF Fellowship/2024, Alagappa University, Karaikudi, India, Date 28 November 2024], and I would like to thank my Supervisor Dr. Padmapriya A for her assistance throughout the research.

Conflicts of Interest

The authors declare no conflict of interest.

Ethics Approval and Consent to Participate

This study did not involve human participants; therefore, ethical approval and consent to participate are not applicable.

Consent for Publication

Consent to publish has been granted by each author.

Availability of Data

PCOS with and without infertility Dataset, Breast Cancer Dataset, Heart Disease Dataset, Lung Cancer Dataset (Accessed on 8th August 2024).

Abbreviations

HD: Heart Disease

PCOS: Polycystic Ovary Syndrome

LC: Lung Cancer

BC: Breast Cancer

RF: Random Forest

LR: Logistic Regression

GNB: Gaussian Naive Bayes

XGBRF: eXtreme Gradient Boosting Random Forest

KNN: K-Nearest Neighbors

LightGBM: Light Gradient Boosting Machine

[1] Ahmad, G.N., et al., 2022. Comparative study of optimum medical diagnosis of human heart disease using machine learning technique with and without sequential feature selection. IEEE Access 10, 23808–23828.

[2] Akkaya, B., Sener, E., Gursu, C., 2022. A comparative study of heart disease prediction using machine learning techniques, in: 2022 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), IEEE.

[3] ALAM SUHA, S.A.Y.M.A., 2022. Predicting polycystic ovary syndrome through machine learning technique using patients’ symptom data and ovary ultrasound images. Diss. Department of Computer Science and Engineering, MIST.

[4] Ali, M.M., et al., 2021. Heart disease prediction using supervised machine learning algorithms: Performance analysis and comparison. Computers in Biology and Medicine 136, 104672.

[5] Asif, M.A., et al., 2021. Performance evaluation and comparative analysis of different machine learning algorithms in predicting cardiovascular disease. Engineering Letters 29.

[6] Chhotrani, A., 2022. Comparative analysis of machine learning models for disease prediction. Journal of Science and Technology 3, 10–20.

[7] Debal, D.A., Sitote, T.M., 2022. Chronic kidney disease prediction using machine learning techniques. Journal of Big Data 9, 109.

[8] Gupta, S., Gupta, M.K., 2022. A comparative analysis of deep learning approaches for predicting breast cancer survivability. Archives of Computational Methods in Engineering 29, 2959–2975.

[9] Hassan, M.M., et al., 2023. A comparative study, prediction and development of chronic kidney disease using machine learning on patients’ clinical records. Human-Centric Intelligent Systems 3, 92–104.

[10] Ibrahim, I., Abdulazeez, A., 2021. The role of machine learning algorithms for diagnosing diseases. Journal of Applied Science and Technology Trends 2, 10–19.

[11] Iftikhar, H., et al., 2023. A comparative analysis of machine learning models: a case study in predicting chronic kidney disease. Sustainability 15, 2754.


[12] Khanam, J.J., Foo, S.Y., 2021. A comparison of machine learning algorithms for diabetes prediction. ICT Express 7, 432–439.

[13] Li, J.P., et al., 2020. Heart disease identification method using machine learning classification in e-healthcare. IEEE Access 8, 107562–107582.

[14] Ramesh, T.R., et al., 2022. Predictive analysis of heart diseases with machine learning approaches. Malaysian Journal of Computer Science, 132–148.

[15] Rawal, R., 2020. Breast cancer prediction using machine learning. Journal of Emerging Technologies and Innovative Research (JETIR) 13, 7.

[16] Sawhney, R., et al., 2023. A comparative assessment of artificial intelligence models used for early prediction and evaluation of chronic kidney disease. Decision Analytics Journal 6, 100169.

[17] Sharma, A., Mishra, P.K., 2022. Performance analysis of machine learning based optimized feature selection approaches for breast cancer diagnosis. International Journal of Information Technology 14, 1949–1960.

[18] Srikanth, V., 2023. Chronic kidney disease prediction using machine learning algorithms, 106–109.

[19] Suha, S.A., Islam, M.N., 2023. A systematic review and future research agenda on detection of polycystic ovary syndrome (PCOS) with computer-aided techniques. Heliyon.

[20] Uddin, S., et al., 2022. Comparative performance analysis of k-nearest neighbour (KNN) algorithm and its different variants for disease prediction. Scientific Reports 12, 6

© 2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal