International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN:2395-0072

SHANMUGA PRIYA R¹, JEEVA M²

¹M.Tech Student, Department of Computer Science and Engineering, PRIST University, Thanjavur, Tamil Nadu, India.

²M.E., Assistant Professor, Department of Computer Science and Engineering, PRIST University, Thanjavur, Tamil Nadu, India.

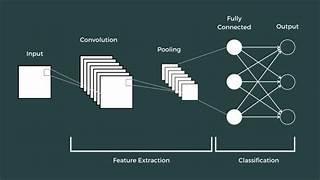

ABSTRACT- Retinal diseases (e.g., diabetic retinopathy, age-related macular degeneration, glaucoma, and retinal detachment) are among the foremost causes of vision loss and blindness worldwide. Early and accurate diagnosis of retinal disease is paramount to effective treatment and, hence, to patient outcomes. This research proposes a deep learning approach that uses convolutional neural networks (CNNs) to identify and classify different eye disorders. A large dataset of retinal images labelled with disease categories is used to train the classifier. To ensure consistent input for improved feature extraction, pre-processing is applied to the retinal images. Data augmentation methods are also implemented to increase dataset diversity and prevent overfitting. The CNN architecture comprises convolutional layers for feature extraction, pooling layers for image downsampling, and fully connected layers for classification. The labelled dataset is used to train the model through supervised learning techniques. Validation loss is closely monitored during training in order to avoid overfitting. Model evaluation is performed on a separate test dataset, and results are reported as accuracy, precision, recall, F1-score, and area under the receiver operating characteristic curve (AUC-ROC). In addition, post-processing methods are used to filter low-confidence predictions to improve the reliability of the system for field performance in clinical scenarios.

Key Words: AUC-ROC, Convolutional Neural Network, Data Augmentation, Image Preprocessing, Retinal Diseases, Supervised Learning, Vision Loss

The human retina is a fragile and complex tissue responsible for transducing incoming light into neural signals, which is how we perceive the visual world. Unfortunately, many diseases of the retina (e.g., diabetic retinopathy, age-related macular degeneration, glaucoma, and retinal detachment) can impair retinal function and, if not diagnosed and treated, can lead to irreversible visual impairment or complete vision loss. Timely diagnosis across multiple ocular diseases is therefore critical to effective treatment and, ultimately, better patient outcomes.

Recent developments in artificial intelligence, especially in deep learning, have produced new tools for medical diagnostics. In particular, convolutional neural networks (CNNs) have advanced the state of the art in image-based analysis. There is substantial evidence of their effectiveness across a variety of medical domains, including ophthalmology, and they have quickly been adopted by the community for the detection and classification of retinal disorders. This research tackles the challenge of building an automated diagnostic system that applies CNNs to retinal images in order to predict retinal diseases. This work proposes a methodology that uses well-annotated, diverse retinal image datasets to train a CNN model to detect the presence, and categorize the severity, of a variety of retinal diseases. CNNs provide several benefits in this setting: they can learn complex features and patterns that are not readily apparent to expert human raters, and they allow for faster diagnoses, which supports timely medical treatment. In addition, automated systems such as this could help address shortages of trained ophthalmologists, notably in underserved areas.

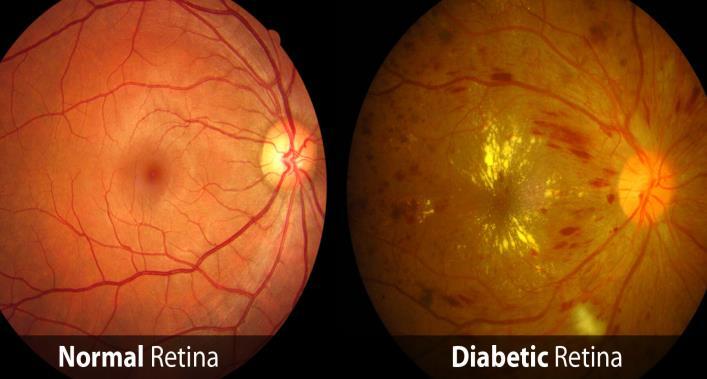

Figure 1 shows a retinal image with the common characteristic areas of the various retinal diseases marked clearly.

Hasan, Md Kamrul, et al. [1] developed an ensemble method that combines different machine learning classifiers to predict diabetes more accurately. The ensemble approach demonstrated increased robustness against the weaknesses of individual classifiers. Combining classifiers, including decision trees, SVM, and logistic regression, helped to improve overall performance. The authors experimented with predefined diabetes datasets and produced results with higher precision and recall than single classifiers. They also emphasized the importance of data preprocessing and feature selection for improving performance, discussed the challenges of data imbalance, and offered viable solutions to this problem. The findings showed that ensemble learning offers reliable and valid early detection of diabetes and would be beneficial if incorporated into the healthcare system.

Tigga, Neha Prerna, and Shruti Garg [2] reviewed classification methods, including logistic regression, KNN, SVM, and random forests, to predict type 2 diabetes. The authors tuned the models and used rigorous data preprocessing to address missing values and normalize inputs across the dataset. They reported that random forests and SVM had the best performance in terms of accuracy and sensitivity. They highlighted the need for interpretable models and noted that glucose level and BMI were the most predictive features. The authors also considered real-world performance issues and stated that further research into machine learning and wearable technologies that support the monitoring and management of diabetes would be beneficial.

Ramesh, Jayroop, Raafat Aburukba, and Assim Sagahyroon [3] developed a remote healthcare monitoring system that uses machine learning to predict diabetes from data collected by wearable devices. The framework provided real-time data processing and timely alerts to patients and doctors. Using classifiers such as decision trees or neural networks, the machine learning approach classified diabetes risk from physiological and lifestyle parameters. The authors also discussed considerations such as sensor accuracy and data privacy. They found that IoT and artificial intelligence could be combined to meet the monitoring needs of their patient group and decrease hospital visits, in turn improving patients' lives through better health.

Gupta, Himanshu, et al. [4] focused on quantum machine learning models and compared them with classical deep learning models that offer similar predictive capabilities for diabetes modelling. The authors demonstrated that quantum classifiers could offer similar or better predictive capability than classical models, especially for high-dimensional datasets with very large feature spaces. The paper detailed the theoretical benefits of quantum computing and the current limitations of available quantum hardware, further showing that hybrid approaches combining quantum and classical components are the current best solution. The authors concluded with an optimistic view of the future of quantum machine learning in the analysis of medical data and recommended interdisciplinary collaboration to develop this area of research and improve predictive analytics in healthcare.

Ahmad, Hafiz Farooq, et al. [5] aimed to identify key health features affecting diabetes prediction using machine learning. They used feature selection and classification methods such as random forests and gradient boosting, and assessed clinical and lifestyle factors. Their results showed that it is critical to consider variables like blood glucose, insulin resistance, and physical activity. Ahmad et al. argued that a feature engineering process, as well as model interpretability, is essential in clinical settings. Their work supports the application of data-driven insight in personalized medicine and routine diabetes screening, which is a critical component of early diagnosis and prevention.

Gayathri, S., et al. [6] created an automated model for binary and multiclass classification of diabetic retinopathy (DR) using Haralick texture features and multiresolution image analysis. They extracted discriminative features from retinal images in order to classify DR stages. The model used machine learning classifiers and showed highly accurate results for both early and advanced DR. They argued that texture-based features could detect subtle changes to the retina. The article noted that feature fusion and multiscale analysis were critical to overall DR classification performance.

Al-Antary, Mohammad T., and Yasmine Arafa [7] proposed a multi-scale attention network to improve diabetic retinopathy classification by focusing attention on important areas of a retinal image at multiple scales. Their network used attention to assign high weights to relevant retinal features before carrying out the classification task, which resulted in effective localization of lesions and improved classification performance. The multi-scale attention network was shown to surpass traditional CNNs owing to its successful capture of both the global context and finer details in fundus images. Additionally, the authors highlighted the network's robustness to variations in image quality and lighting, suggesting that it could be used for clinical screening.

Zhou, Yi, et al. [8] created DR-GAN, a conditional generative adversarial network (GAN) that synthesizes fine-grained lesions seen in diabetic retinopathy images for augmenting a training dataset. Through their GAN, Zhou et al. produced realistic new lesion variations to increase variation in their training samples and mitigate the effects of the small datasets that are characteristic of many medical imaging tasks. The GAN's ability to generate diverse pathological features improved the performance of diabetic retinopathy classification models trained with the augmented datasets. Zhou et al. showed that synthetic lesion generation can be a valuable resource for creating broader and better curated diagnostic systems.

Abdelmaksoud, Eman, et al. [9] described an automatic grading system for diabetic retinopathy that detects multiple retinal lesions, including microaneurysms, hemorrhages, and exudates. In addition to lesion detection, they devised a way to incorporate severity grading for a complete DR assessment. Employing a combination of advanced image processing and deep learning, they achieved accurate lesion localization and classification. Their approach also made DR grading models easier to interpret by connecting the detected lesions directly with clinical severity levels. The system produced very promising results and was proposed as an aid to ophthalmologists in screening and diagnosis.

Araújo, Teresa, et al. [10] described the use of data augmentation on eye fundus images to improve proliferative diabetic retinopathy (PDR) detection. They applied several transformations, including rotation, flipping, and color changes, to increase variability in the datasets. This gave the model a more expansive dataset, which decreased overfitting and improved generalization. The augmented datasets improved detection sensitivity and specificity in PDR classification tasks. The authors note the value of the augmentation step within their deep learning workflow, particularly for small and imbalanced medical image datasets. Their conclusions underscore the positive effect augmentation can have on diagnostic accuracy in medical practice.

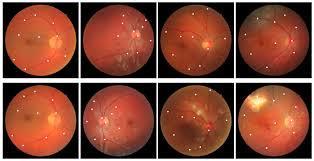

Current paradigms for predicting diabetic retinopathy from retinal images usually use a multi-step process that blends image processing and machine learning techniques with medical knowledge of diabetic retinopathy and eye anatomy. The process usually begins with retinal scans acquired using state-of-the-art imaging technology (e.g., a fundus camera or an Optical Coherence Tomography (OCT) scanner). Retinal images may include fundus photographs, fluorescein angiograms, or OCT scans, which provide the visible information needed to support the diagnosis. Once images are obtained, they undergo preprocessing to remove undesired artifacts and standardize the images. Preprocessing can include noise reduction, contrast enhancement, and image normalization. Further preprocessing may employ spatial registration to align images and remove the undesired variations between images that prevent them from being comparable. Feature extraction is a crucial stage of the diagnostic pipeline. It detects clinical visual features related to diabetic retinopathy, such as microaneurysms, haemorrhages, exudates, vessel tortuosity, and macular thickness, which suggest the presence or severity of disease. Image analysis methods extract these clinical markers, which are then used to train machine learning models to predict the disease. There has also been some recent exploration of Graph Neural Network (GNN) methods, which are novel to retinal image analysis and would replace the feature extraction and image analysis steps with GNN-based models. Retinal image analysis via GNN is particularly relevant because diabetic retinopathy is an important complication for diabetic patients, and early detection and treatment are paramount to preventing irreversible vision loss. GNN models interpret retinal images as graphs, in which each node represents a pixel or region and the edges encode the spatial structure. GNN-based representations of retinal images can improve predictive performance by capturing contextual and complex spatial relationships. GNN datasets typically include retinal images from diabetic patients alongside images from healthy or non-diabetic patients, with preprocessing used to extract image features and construct the graphs.
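The graph view of a retinal image described above can be illustrated with a small sketch. It assumes a grayscale image stored as a nested list, square regions of a fixed size, mean intensity as the only node feature, and 4-neighbour connectivity; the function name `region_graph` and all of these choices are hypothetical and are not taken from any of the cited GNN systems.

```python
def region_graph(image, patch):
    """Split a grayscale image into patch x patch regions and link neighbours.

    Returns (features, edges): features maps each region's (row, col) index
    to its mean intensity, and edges lists pairs of adjacent regions.
    """
    rows = len(image) // patch
    cols = len(image[0]) // patch
    features, edges = {}, []
    for r in range(rows):
        for c in range(cols):
            # Collect every pixel that falls inside region (r, c).
            block = [image[r * patch + i][c * patch + j]
                     for i in range(patch) for j in range(patch)]
            features[(r, c)] = sum(block) / len(block)
            # Connect to the right-hand and lower neighbours, so each
            # undirected edge is recorded exactly once.
            if c + 1 < cols:
                edges.append(((r, c), (r, c + 1)))
            if r + 1 < rows:
                edges.append(((r, c), (r + 1, c)))
    return features, edges
```

A GNN would then propagate information along `edges`, starting from the node values in `features`.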

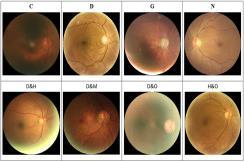

To reduce these complications, we propose a strategy that uses a Convolutional Neural Network (CNN) to improve the accuracy and effectiveness of diagnosing several retinal diseases. The proposed system makes use of a full and varied dataset containing retinal images for multiple eye conditions, including diabetic retinopathy, glaucoma, retinal detachment, and age-related macular degeneration. After assembling a dataset of manageable size for effective model training, we apply preprocessing techniques to the retinal images. Preprocessing involves adjusting image size, pixel brightness, and contrast as required for the best outcome in eye disease classification. We also employ data augmentation, including rotating and flipping the retinal images, in order to artificially extend the dataset and add variability. Together, the preprocessing and augmentation methods ensure consistency across all input data offered to the CNN model, while providing a high-quality training dataset with ample information for learning and, most importantly, classifying different eye diseases.

Workflow diagram labels: Data source → Image preprocessing → Data augmentation → Augmented data to CNN → CNN feature extraction

A dataset containing retinal images will be collected across multiple disease categories. The images will undergo preprocessing to improve clarity and standardization. The preprocessing techniques will involve resizing the images to the same dimensions, enhancing image contrast, and applying noise-reduction filters to reduce or eliminate unwanted artifacts.
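The three preprocessing steps named above (resizing, contrast enhancement, and noise filtering) can be sketched in plain Python on a grayscale image stored as a nested list. The specific methods chosen here, nearest-neighbour resizing, linear contrast stretching, and a 3x3 median filter, are illustrative assumptions; the paper does not state which algorithms were used, and the function names are hypothetical.

```python
from statistics import median


def resize_nearest(image, new_h, new_w):
    """Resize to new_h x new_w by nearest-neighbour sampling."""
    h, w = len(image), len(image[0])
    return [[image[r * h // new_h][c * w // new_w] for c in range(new_w)]
            for r in range(new_h)]


def contrast_stretch(image):
    """Linearly rescale pixel intensities to the range [0.0, 1.0]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    return [[(p - lo) / (hi - lo) for p in row] for row in image]


def median_filter3(image):
    """3x3 median filter; border pixels use only the neighbours that exist."""
    h, w = len(image), len(image[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [image[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            row.append(median(window))
        out.append(row)
    return out
```

In practice these operations would normally come from an image-processing library; the pure-Python versions only make the arithmetic of each step explicit.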

To increase the diversity of the model's training dataset and limit potential overfitting during training, several augmentation techniques are also applied. These include rotations, horizontal and vertical flipping, and variations in zoom or brightness. Augmentation techniques mimic the spatial variations that retinal images may exhibit in real-world settings.
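A minimal sketch of these augmentations, assuming a grayscale image held as a nested list with intensities in [0.0, 1.0]. The function names, the fixed 90-degree rotation, and the brightness factors are hypothetical choices for illustration; zoom is omitted for brevity, and the paper does not give its actual augmentation parameters.

```python
def hflip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def vflip(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def adjust_brightness(image, factor):
    """Scale intensities by factor, clipping the result to [0.0, 1.0]."""
    return [[min(1.0, max(0.0, p * factor)) for p in row] for row in image]


def augment(image):
    """Return the original image plus a few transformed variants."""
    return [
        image,
        hflip(image),
        vflip(image),
        rotate90(image),
        adjust_brightness(image, 0.8),  # slightly darker (assumed factor)
        adjust_brightness(image, 1.2),  # slightly brighter (assumed factor)
    ]
```

Each variant keeps the label of the source image, so one labelled image yields several training samples.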

Feature Extraction via Convolutional Neural Network (CNN)

A Convolutional Neural Network (CNN) is created and trained to automatically learn and extract discriminative features from example retinal images. The CNN architecture is made up of multiple convolutional layers, each followed by an activation function and a pooling layer, which help the network learn spatial hierarchies of features while reducing dimensionality.
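The convolution, activation, and pooling sequence described above can be illustrated with a single-channel sketch in plain Python. It assumes "valid" convolution without padding (computed as cross-correlation, as deep learning frameworks do), ReLU as the activation, and 2x2 max pooling; the kernel is a hand-picked illustrative value, whereas a real CNN learns its kernels during training.

```python
def conv2d(image, kernel):
    """Valid 2D convolution (cross-correlation) of one channel with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool2x2(feature_map):
    """Keep the maximum of each non-overlapping 2x2 block (extra rows/cols dropped)."""
    h, w = len(feature_map), len(feature_map[0])
    return [[max(feature_map[r][c], feature_map[r][c + 1],
                 feature_map[r + 1][c], feature_map[r + 1][c + 1])
             for c in range(0, w - 1, 2)]
            for r in range(0, h - 1, 2)]


# Illustrative 4x4 input and a hand-picked 2x2 diagonal-difference kernel.
image = [[1, 2, 0, 1],
         [3, 1, 1, 0],
         [0, 2, 4, 1],
         [1, 0, 2, 3]]
kernel = [[1, 0],
          [0, -1]]
pooled = max_pool2x2(relu(conv2d(image, kernel)))
```

Stacking several such stages, each with many learned kernels, gives the hierarchy of features the paragraph refers to.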

Model training and validation

Disease prediction

Classification

The features that the CNN extracted are passed through fully connected layers to produce the classification. An appropriate activation function (for example, softmax) is used in the output layer to categorize the image into specific retinal disease classes, for instance, diabetic retinopathy and additional classes. Figure 4 shows the CNN layers.
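As a sketch of the output stage, the snippet below converts raw output-layer scores (logits) into class probabilities with softmax and selects the most probable class. The class list and the optional `threshold` argument are assumptions for illustration: the threshold shows one possible way to withhold low-confidence predictions, as mentioned in the abstract, and is not the authors' stated post-processing rule.

```python
import math

# Hypothetical class order, based on the conditions named in the paper.
CLASS_NAMES = [
    "diabetic retinopathy",
    "glaucoma",
    "retinal detachment",
    "age-related macular degeneration",
    "healthy",
]


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, class_names=CLASS_NAMES, threshold=0.0):
    """Return (label, confidence); label is None when confidence < threshold."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None, probs[best]
    return class_names[best], probs[best]
```

For example, `classify([2.0, 0.1, 0.1, 0.1, 0.3], threshold=0.5)` returns the first class with its probability only if that probability reaches 0.5; otherwise the label is `None` and the image could be referred for manual review.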

The CNN model is trained using supervised learning, with labelled images guiding the learning process. An appropriate loss function (for example, categorical cross-entropy) is minimized using an optimization algorithm such as Adam or SGD. Regularization methods (for example, dropout and early stopping) can be used to improve generalization.
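Two of the ingredients named above can be made concrete with a short sketch: the categorical cross-entropy loss for one sample, and an early-stopping rule driven by validation loss. The `patience` value and the function names are assumptions for illustration; the paper does not report its training hyperparameters.

```python
import math


def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Loss for one sample: y_true is one-hot, y_pred holds class probabilities."""
    # Clamp probabilities away from zero so log() is always defined.
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch index at which training would stop.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, waited = loss, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return len(val_losses) - 1  # never triggered: ran all epochs
```

With a one-hot label, the loss reduces to the negative log of the probability assigned to the true class, so confident correct predictions give a loss near zero.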

The performance of the system is evaluated on a separate test dataset using metrics such as accuracy, precision, recall, and F1-score. Cross-validation techniques can be used to assess the robustness and reliability of the model.
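These metrics can all be derived from a confusion matrix. The sketch below assumes integer class labels starting at 0 and computes per-class precision, recall, and F1-score in a one-vs-rest fashion; the function names are hypothetical, and no values from the paper's experiments are reproduced here.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """m[t][p] counts samples whose true class is t and predicted class is p."""
    m = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        m[t][p] += 1
    return m


def accuracy(m):
    """Fraction of all samples on the diagonal of the confusion matrix."""
    correct = sum(m[i][i] for i in range(len(m)))
    total = sum(sum(row) for row in m)
    return correct / total


def precision_recall_f1(m, k):
    """One-vs-rest precision, recall, and F1-score for class k."""
    tp = m[k][k]
    fp = sum(m[i][k] for i in range(len(m))) - tp  # predicted k, actually other
    fn = sum(m[k]) - tp                            # actually k, predicted other
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1
```

Averaging the per-class values (macro averaging) gives a single summary figure that is less dominated by the largest class than plain accuracy.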

Transfer learning techniques can also be used to fine-tune the deep learning model and improve its performance. Once the deep learning model has been trained, it can be evaluated on a separate test dataset to assess its accuracy and generalizability. The model can also be optimized by adjusting its hyperparameters or by using techniques such as data augmentation. In this module, the system classifies whether or not an image shows diabetic retinopathy and identifies its severity level. It also predicts glaucoma, with precaution details, at an improved accuracy rate.

The proposed system was thoroughly evaluated in the lab to determine its detection and classification accuracy for retinal diseases using fundus images. The procedure consisted of training the CNN on a labelled dataset and validating its performance on an unseen test dataset.

The training performance showed that the model made consistent progress, with accuracy increasing and loss decreasing, and therefore learned features of the retinal images. The validation data also showed that the model generalized well and did not overfit, which we attribute to the use of data augmentation and regularization during training.

The overall accuracy of the model on the test dataset was high. The model successfully distinguished healthy from diseased retinal images. Classification accuracy was also strong for retinal conditions such as diabetic retinopathy, which indicates that the model was able to discriminate between classes and identify subtle signs of disease in the images.

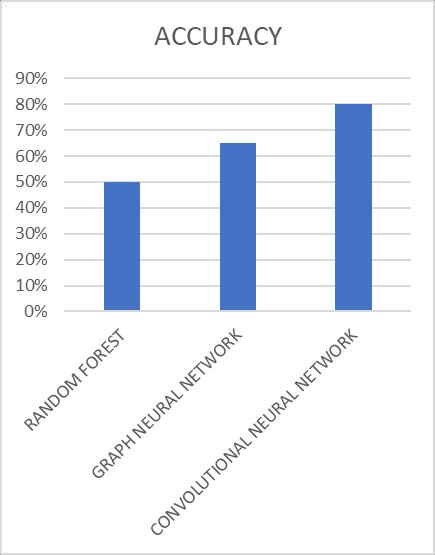

Figure 3: Accuracy chart

When evaluating the confusion matrix, it was confirmed that the model correctly classified the majority of samples in all disease categories, with only occasional misclassifications. Misclassifications were few and generally occurred between adjacent stages of disease, which are already closely related subcategories. These findings indicate potential areas for improvement.

Key performance metrics such as precision, recall, and F1-score provide a balanced perspective on model quality. High precision indicates few false positives, while high recall indicates that most cases of disease were correctly identified. The F1-score, which combines the two, suggests good overall classification quality.

The developed system performed better than both baseline models and previous methods, in terms of both accuracy and computational efficiency. This improvement was likely due to the carefully constructed CNN architecture and the extensive preprocessing steps.

In summary, the results of the experiments give strong evidence that the system is a clinically valid and helpful tool for the automated diagnosis of retinopathy, allowing for timely and effective decision-making by clinical staff.

The project successfully developed and executed an automated retinal disease detection system that utilizes deep learning methodology, specifically convolutional neural networks (CNNs). The system analyzes retinal fundus images to detect more than one retinal condition with an acceptable degree of accuracy, demonstrating that it can be used as a supporting tool to aid in the early diagnosis of retinal disease. Using advanced image preprocessing and feature extraction techniques, the model was able to learn the important patterns and efficiently distinguish healthy from diseased retinal images. The experimental results demonstrate that the proposed approach provides strong performance in terms of accuracy, precision, recall, and F1-score, indicating the strength and utility of the system in real-world use. Thus, the automated detection system is a useful tool that could allow ophthalmologists to reduce their workloads, decrease human misdiagnosis, and provide quicker diagnostic results, leading to improved patient outcomes. Ultimately, this can facilitate a non-invasive, low-cost, accessible, AI-based solution for retinal disease screening from fundus images. Future work could improve the current system by further expanding the training dataset so that the model is trained on larger and more diverse distributions of images in order to generalize better across various populations. The system's diagnostic performance may also be improved by incorporating different imaging modalities or clinical data into the diagnostic process. Regardless, this work provides a strong foundation for developing intelligent tools to support the early detection and management of other retinal diseases.

[1] Hasan, Md Kamrul, et al. "Diabetes prediction using ensembling of different machine learning classifiers." IEEE Access 8 (2020): 76516-76531.

[2] Tigga, Neha Prerna, and Shruti Garg. "Prediction of type 2 diabetes using machine learning classification methods." Procedia Computer Science 167 (2020): 706-716.

[3] Ramesh, Jayroop, Raafat Aburukba, and Assim Sagahyroon. "A remote healthcare monitoring framework for diabetes prediction using machine learning." Healthcare Technology Letters 8.3 (2021): 45-57.

[4] Gupta, Himanshu, et al. "Comparative performance analysis of quantum machine learning with deep learning for diabetes prediction." Complex & Intelligent Systems 8.4 (2022): 3073-3087.

[5] Ahmad, Hafiz Farooq, et al. "Investigating health-related features and their impact on the prediction of diabetes using machine learning." Applied Sciences 11.3 (2021): 1173.

[6] Gayathri, S., et al. "Automated binary and multiclass classification of diabetic retinopathy using haralick and multiresolution features." IEEE Access 8 (2020): 57497-57504.

[7] Al-Antary, Mohammad T., and Yasmine Arafa. "Multiscale attention network for diabetic retinopathy classification." IEEE Access 9 (2021): 54190-54200.

[8] Zhou, Yi, et al. "DR-GAN: conditional generative adversarial network for fine-grained lesion synthesis on diabetic retinopathy images." IEEE Journal of Biomedical and Health Informatics (2020).

[9] Abdelmaksoud, Eman, et al. "Automatic diabetic retinopathy grading system based on detecting multiple retinal lesions." IEEE Access 9 (2021): 15939-15960.

[10] Araújo, Teresa, et al. "Data augmentation for improving proliferative diabetic retinopathy detection in eye fundus images." IEEE Access 8 (2020): 182462-182474.

[11] F. Jia-Wei, Z. Ru-Ru, L. Meng, H. Jia-Wen, K. Xiao-Yang, and C. Wen-Jun, "Applications of deep learning techniques for diabetic retinal diagnosis," J. Automatica Sinica, vol. 47, no. 5, pp. 985–1004, 2021.

[12] N. Gharaibeh, O. M. Al-Hazaimeh, A. Abu-Ein, and K. M. O. Nahar, "A hybrid SVM Naïve–Bayes classifier for bright lesions recognition in eye fundus images," Int. J. Electr. Eng. Informat., vol. 13, no. 3, pp. 530–545, Sep. 2021.

[13] O. M. Al-Hazaimeh, A. Abu-Ein, N. Tahat, M. Al-Smadi, and M. Al-Nawashi, "Combining artificial intelligence and image processing for diagnosing diabetic retinopathy in retinal fundus images," Int. J. Online Biomed. Eng., vol. 18, no. 13, pp. 131–151, Oct. 2022.

[14] W. Ren, A. H. Bashkandi, J. A. Jahanshahi, A. AlHamad, D. Javaheri, and M. Mohammadi, "Brain tumor diagnosis using a step-by-step methodology based on courtship learning-based water strider algorithm," Biomed. Signal Process. Control, vol. 83, May 2023, Art. no. 104614, doi: 10.1016/j.bspc.2023.104614.

[15] D. Chen, W. Yang, L. Wang, S. Tan, J. Lin, and W. Bu, "PCAT-UNet: UNet-like network fused convolution and transformer for retinal vessel segmentation," PLoS ONE, vol. 17, no. 1, Jan. 2022, Art. no. e0262689, doi: 10.1371/journal.pone.0262689.

[16] J. Wei and Z. Fan, "Genetic U-Net: Automatically designed deep networks for retinal vessel segmentation using a genetic algorithm," 2020, arXiv:2010.15560.

[17] A. Karaali, R. Dahyot, and D. J. Sexton, "DR-VNet: Retinal vessel segmentation via dense residual UNet," 2021, arXiv:2111.04739.

[18] K. Aurangzeb, S. Aslam, M. Alhussein, R. A. Naqvi, M. Arsalan, and S. I. Haider, "Contrast enhancement of fundus images by employing modified PSO for improving the performance of deep learning models," IEEE Access, vol. 9, pp. 47930–47945, 2021.

[19] M. Alhussein, K. Aurangzeb, and S. I. Haider, "An unsupervised retinal vessel segmentation using Hessian and intensity-based approach," IEEE Access, vol. 8, pp. 165056–165070, 2020.

[20] T. M. Khan, M. Alhussein, K. Aurangzeb, M. Arsalan, S. S. Naqvi, and S. J. Nawaz, "Residual connection-based encoder decoder network (RCED-Net) for retinal vessel segmentation," IEEE Access, vol. 8, pp. 131257–131272, 2020.

[21] K. Aurangzeb, R. S. Alharthi, S. I. Haider, and M. Alhussein, "An efficient and light weight deep learning model for accurate retinal vessels segmentation," IEEE Access, vol. 11, pp. 23107–23118, 2023, doi: 10.1109/ACCESS.2022.3217782.

[22] D. Jha, S. Ali, N. K. Tomar, H. D. Johansen, D. Johansen, J. Rittscher, M. A. Riegler, and P. Halvorsen, "Real-time polyp detection, localization and segmentation in colonoscopy using deep learning," IEEE Access, vol. 9, pp. 40496–40510, 2021, doi: 10.1109/ACCESS.2021.3063716.

[23] J. Gao, Y. Jiang, H. Zhang, and F. Wang, "Joint disc and cup segmentation based on recurrent fully convolutional network," PLoS ONE, vol. 15, no. 9, pp. 1–23, 2020.

[24] M. Tabassum, T. M. Khan, M. Arsalan, S. S. Naqvi, M. Ahmed, H. A. Madni, and J. Mirza, "CDED-Net: Joint segmentation of optic disc and optic cup for glaucoma screening," IEEE Access, vol. 8, pp. 102733–102747, 2020.

[25] B. Liu, D. Pan, and H. Song, "Joint optic disc and cup segmentation based on densely connected depth wise separable convolution deep network," BMC Med. Imag., vol. 21, no. 1, Dec. 2021, Art. no. 14, doi: 10.1186/s12880-020-00528-6.

[26] M. Bansal, M. Kumar, and M. Kumar, "2D object recognition: A comparative analysis of SIFT, SURF and ORB feature descriptors," Multimedia Tools Appl., vol. 80, no. 12, pp. 18839–18857, Feb. 2021, doi: 10.1007/s11042-021-10646-0.

[27] L. Alzubaidi, J. Zhang, A. J. Humaidi, A. Al-Dujaili, Y. Duan, O. Al-Shamma, J. Santamaría, M. A. Fadhel, M. Al-Amidie, and L. Farhan, "Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions," J. Big Data, vol. 8, no. 1, pp. 1–74, Mar. 2021.

[28] A. Ding, Q. Chen, Y. Cao, and B. Liu, "Retinopathy of prematurity stage diagnosis using object segmentation and convolutional neural networks," in Proc. Int. Joint Conf. Neural Netw. (IJCNN), Jul. 2020, pp. 1–6.

[29] A. Z. H. Ooi, Z. Embong, A. I. A. Hamid, R. Zainon, S. L. Wang, T. F. Ng, R. A. Hamzah, S. S. Teoh, and H. Ibrahim, "Interactive blood vessel segmentation from retinal fundus image based on Canny edge detector," Sensors, vol. 21, no. 19, p. 6380, Sep. 2021.

[30] Y. Liu, J. Tian, R. Hu, B. Yang, S. Liu, L. Yin, and W. Zheng, "Improved feature point pair purification algorithm based on SIFT during endoscope image stitching," Frontiers Neurorobotics, vol. 16, Feb. 2022, Art. no. 840594.

Mrs. Shanmuga Priya R is presently pursuing an M.Tech. in Computer Science and Engineering at PRIST University, Thanjavur, Tamil Nadu, India. With 18 years of substantial teaching experience in computer science and engineering, she is currently a lecturer in the Department of Computer Engineering at Women's Polytechnic College in Karaikal, Puducherry, India.

Mrs. M. Jeeva has been working as an Assistant Professor in Computer Science and Engineering at PRIST University, Thanjavur, Tamil Nadu, India, where she teaches courses on the Design and Analysis of Algorithms as well as the Fundamentals of Data Science and Analytics.