International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

Dr. Archana Ratnaparkhi, Anjanay Gangrade, Anay Gangshettiwar, Atharv Deshpande

Professor, Dept. of ENTC Engineering, Vishwakarma Institute of Technology, Pune, India

2nd Year, Dept. of ENTC, Vishwakarma Institute of Technology, Pune, India

2nd Year, Dept. of ENTC, Vishwakarma Institute of Technology, Pune, India

2nd Year, Dept. of ENTC, Vishwakarma Institute of Technology, Pune, India

Abstract - Besides verbal communication, gestures play a crucial role in conveying messages. This paper outlines a real-time system implemented in Python that detects hand gestures and translates them into the corresponding letters of the alphabet. OpenCV handles video capture, while hand tracking is performed using MediaPipe. A CNN is employed to analyse the captured hand movement data. The system prioritizes speed and efficiency so that it is a viable solution for interpreting and translating sign language. This technology facilitates self-expression, thereby enhancing everyday conversations and improving communication for its users.

Key Words: Gesture recognition, sign language translation, assistive technology, hand tracking, computer vision, OpenCV, MediaPipe, convolutional neural network (CNN), machine learning, accessibility

Communication is pivotal for people everywhere; however, those affected by hearing and speech disabilities face significant challenges and barriers when they try to communicate. In an effort to promote inclusivity, this paper outlines the development of a real-time gesture recognition system. Developed in Python, which is user-friendly, flexible, and rich in libraries, the system makes use of MediaPipe's capabilities for real-time hand tracking and hand gesture movement analysis. OpenCV supports user interactivity and handles varied image processing tasks, including video recording. Furthermore, CNNs trained for image recognition provide precise gesture classification. By using these methods, the system provides a quicker and more reliable means of communication, and it can be improved upon through daily use. In the modern world, where personal and professional relationships are of utmost importance, the system can be leveraged to promote inclusivity for those with hearing or speech impairments.

There have been new developments that enhance the accuracy of translating sign language, especially the conversion of sign language gestures into text. One such example uses convolutional neural networks (CNNs) to translate sign language gestures into text, illustrating how automated translation of gestures can be achieved through image preprocessing, feature extraction, and machine learning. By distinguishing spatial features, CNNs perform well in identifying number and letter signs and in some cases do better than a fully manual process. However, some important issues remain with dynamic gestures and continuous signing. This explains why adding RNNs or LSTMs for temporal modeling in hybrid models can enhance system functionality significantly.

Our objective is to translate sign language into text in real time using webcams. In this regard, we combine OpenCV with MediaPipe and integrate neural networks to accomplish sign-to-text conversion and, later, speech synthesis. Tracking hand motions allows us to identify detailed movements in sign language. We show that deep learning techniques can be utilized in gesture recognition, yet issues like varying illumination and complex backgrounds in the environment pose challenges.

We suggest incorporating data augmentation to improve performance, as well as real-time adaptive thresholding and filtering to counter variable conditions. In addition to gesture recognition, there are multi-modal conversational systems designed for inclusive communication with the deaf and non-signers. A considerable amount of integration

3.

3.1 System requirements

For this task to be executed effectively, certain software and hardware components will be needed. The setup comprises a computer with a GPU, to accelerate training, and a webcam for live video capture. On the software side, Python 3.x will be used along with the required libraries: MediaPipe for hand tracking, OpenCV for video processing, and TensorFlow/Keras for implementing the deep learning model. Visualization can be done with Matplotlib and Seaborn, and libraries such as NumPy and Pandas will be used for data processing.

3.2

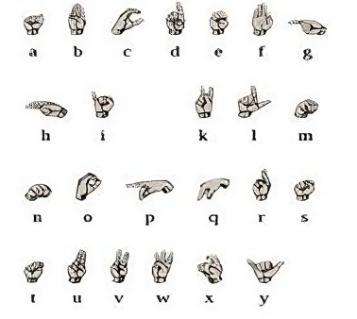

To begin training the model, the first step is to collect a dataset of hand gestures corresponding to the alphabet, words, or specific commands. This can be done using MediaPipe Hands, which locates the hand and reduces it to a set of keypoints. To enhance robustness, multiple images of each gesture will be captured under different lighting and positioning. Data will be saved as images (for training the CNN) or as numerical keypoints (for other processing).
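As an illustration of the numerical keypoint format mentioned above, the following minimal sketch turns one hand's landmarks into a flat feature vector. MediaPipe Hands reports 21 landmarks per hand, with the wrist at index 0. The function name `landmarks_to_features` and the wrist-relative normalization are assumptions made for this example and are not taken from the described system.

```python
import math

NUM_LANDMARKS = 21  # MediaPipe Hands reports 21 landmarks per detected hand


def landmarks_to_features(landmarks):
    """Flatten 21 (x, y, z) landmarks into a 63-value feature vector.

    Assumption (not from the paper): coordinates are shifted so that the
    wrist (landmark 0) is the origin and scaled by the largest
    wrist-to-landmark distance, so the vector does not depend on where or
    how large the hand appears in the frame.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")

    wrist_x, wrist_y, wrist_z = landmarks[0]
    shifted = [(x - wrist_x, y - wrist_y, z - wrist_z) for x, y, z in landmarks]

    scale = max(math.sqrt(x * x + y * y + z * z) for x, y, z in shifted)
    if scale == 0.0:
        scale = 1.0  # degenerate case: all points coincide

    # One row of 63 numbers, ready to be written to a CSV file
    return [coord / scale for point in shifted for coord in point]
```

Storing one such row per captured frame, together with its gesture label, would give a compact alternative to saving full images.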

Prior to training, the captured gesture images will be pre-processed to improve accuracy. Each image may be resized to a fixed size, converted to grayscale or retained in RGB, and normalized for consistency. Data augmentation strategies such as flipping, rotation, and the addition of noise can be used to increase the diversity of the dataset. To make the performance assessment robust, the dataset will be divided into three subsets: Training (80 percent), Validation (10 percent), and Testing (10 percent).
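The 80/10/10 division described above can be sketched in a few lines. The function name `split_dataset`, the fixed random seed, and the plain (non-stratified) shuffle are assumptions for illustration; the paper does not state how the split was performed.

```python
import random


def split_dataset(samples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle samples and split them into train/validation/test lists.

    Whatever is left after the train and validation portions becomes the
    test set, so with the default fractions the split is 80/10/10.
    """
    items = list(samples)
    random.Random(seed).shuffle(items)  # seeded so the split is reproducible

    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)

    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test
```

With 100 samples this yields 80 training, 10 validation, and 10 test items. For a gesture dataset, splitting each class separately would keep the class balance the same across the three subsets.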

3.4

A convolutional neural network (CNN) will be used to provide predictions on hand movements. The architecture will include convolutional layers to extract meaningful spatial features, pooling layers for downsampling, and fully connected layers for prediction. The model will be trained with a categorical cross-entropy loss, with the aim of improving accuracy while limiting overfitting.
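To make the cross-entropy loss concrete, the sketch below computes it for a single sample from the raw scores of the final layer. It uses only the standard library and is meant as an illustration of the formula, not as the training code of the described system, where a deep learning framework would compute the same quantity over batches. The function names are chosen for this example.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(probs, true_index, eps=1e-12):
    """Loss for one sample: negative log of the probability of the true class."""
    return -math.log(max(probs[true_index], eps))


# Illustrative scores for three gesture classes (made-up numbers)
probs = softmax([2.0, 0.5, 0.1])
loss_if_class_0 = categorical_cross_entropy(probs, 0)  # true class has the top score
loss_if_class_2 = categorical_cross_entropy(probs, 2)  # true class has the lowest score
```

The loss is small when the network assigns a high probability to the correct gesture and grows as that probability falls, which is what drives the weights toward correct classifications during training.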

3.5

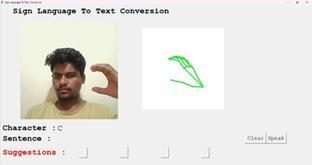

OpenCV will be used to capture video frames in real time. MediaPipe will track the hand in each frame, and the tracked hand data will be passed to the CNN model for prediction. The recognized gestures will be visualized on the display alongside synchronized text and speech in real time.
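The per-frame flow described above (capture, hand tracking, classification, output) can be sketched as a small generator. The capture, tracking, and classification stages are passed in as plain callables so that the structure can be followed without a camera or a trained model; in the described system these roles would be filled by OpenCV, MediaPipe, and the CNN respectively. All names in this sketch are hypothetical.

```python
def recognize_stream(frames, detect_hand, classify, labels):
    """Yield one recognized label per frame in which a hand is found.

    frames:      iterable of frames (for example, read from a webcam)
    detect_hand: callable returning hand data for a frame, or None
    classify:    callable returning a class index for the hand data
    labels:      sequence mapping class indices to letters
    """
    for frame in frames:
        hand = detect_hand(frame)
        if hand is None:
            continue  # no hand in this frame, so there is nothing to classify
        yield labels[classify(hand)]


# Stand-in stages for demonstration only: each "frame" is already a class
# index, and None represents a frame without a visible hand.
demo_frames = [0, 1, None, 2]
recognized = list(recognize_stream(demo_frames, lambda f: f, lambda h: h, "ABC"))
```

Because frames without a detected hand are skipped before classification, the classifier only runs when there is something to recognize.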

3.6

Once training and validation are done, the system will undergo rigorous real-world testing to assess its reliability. Various factors such as lighting, background noise, and position will be varied to evaluate precision. Before the final model is launched as a desktop app or web tool for ease of access, it will be optimized for speed and efficiency.

In future versions, the system could offer complete translation of sign language, adding more advanced gestures. Future revisions might include enhancements with Augmented Reality (AR) or Virtual Reality (VR) for even more engagement. With the development of a mobile application, the system can broaden its reach and accessibility even more.

To assess the performance of the gesture-to-text/speech conversion system, a dataset composed of hand gestures recorded via OpenCV and processed by MediaPipe was utilized. The hand gesture recognition CNN model trained on these pictures demonstrated strong performance at recognizing the specified gestures. Standard evaluation metrics, including accuracy, precision, and F1 score, were used. On the test dataset, the CNN model achieved an overall accuracy of 95.2 percent. The confusion matrix revealed that most of the gestures were accurately classified and that a few were misclassified between gestures with similar hand shapes, for example those involving the “Peace” gesture. The accuracy and loss curves demonstrated that the learning process was quite successful, as the model converged within 30 epochs and did so without significant overfitting.
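For reference, the metrics named above can be computed from true and predicted labels as in the following sketch. Recall is included because the F1 score is the harmonic mean of precision and recall. The labels in the example are made-up toy data chosen to show the calculation and are not results from the paper.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def per_class_metrics(y_true, y_pred, label):
    """Precision, recall and F1 for one class, treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Toy labels for illustration only: one "B" sample is mistaken for "V"
y_true = ["A", "A", "B", "B", "V", "V"]
y_pred = ["A", "A", "B", "V", "V", "V"]

overall = accuracy(y_true, y_pred)                 # 5 of 6 correct
v_scores = per_class_metrics(y_true, y_pred, "V")  # precision 2/3, recall 1, F1 0.8
```

In this toy case the single confusion lowers the precision of "V" and the recall of "B", which is the same pattern a confusion matrix exposes for gestures with similar hand shapes.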

1) Real-Time Testing: In our effectiveness test, the system accurately tracked gestures with a response/inference time of 20 ms per frame, which ensured low-latency performance. Recognition accuracy remained consistent under most lighting conditions, but a slight decline was observed in low light or against cluttered backgrounds, primarily due to variations in hand detection.

Comparison with Existing Methods: Our approach using convolutional neural networks demonstrated a 10 percent increase in accuracy and better generalization compared to older models deploying traditional feature-based recognition. Also, the use of MediaPipe landmarks greatly reduced the need for meticulous image pre-processing, which in turn enhanced the system's efficiency.

2) Challenges and Limitations: Despite the system's strong overall performance under standard conditions, there are nevertheless challenges in handling occluded fingers, interference from dynamic backgrounds, and variations specific to individual users. Future upgrades could involve adaptive thresholding for different lighting conditions, expanding datasets for greater robustness, and incorporating depth-sensing cameras for advanced spatial recognition.

Gesture-to-speech conversion is an effective assistive technology that appreciably improves communication for people with speech or hearing impairments. This project efficiently implements a real-time system, using OpenCV, MediaPipe, and a Convolutional Neural Network (CNN) to recognize and translate hand gestures into text with high accuracy and efficiency. By making use of computer vision and deep learning, the system offers a fast, simple, and user-friendly solution for sign language translation and accessibility. The flexibility of this approach allows it to adapt to diverse signing styles and regional differences, making it a critical tool for inclusive communication. Future developments, such as integrating facial expressions and voice synthesis, should enhance the system's effectiveness, permitting a more natural and comprehensive interaction. By constantly evolving, gesture-to-text technology has the ability to remove communication barriers and promote greater inclusivity in society.
