International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 11 | Nov 2025 www.irjet.net p-ISSN: 2395-0072

Prof. S. B. Bele1, Neha G. Parshette2, Riyasha G. Sonawane3, Sakshi R. Jadhav4, Jesika P. Chourpagar5

1Prof. S. B. Bele (MCA Department, Vidya Bharati Mahavidyalaya, Amravati)

2Neha G. Parshette, 3Riyasha G. Sonawane, 4Sakshi R. Jadhav (Students, MCA Department, Vidya Bharati Mahavidyalaya, Amravati)

5Jesika P. Chourpagar (Student, Department of Computer Science, Vidya Bharati Mahavidyalaya, Amravati)

Abstract: Deep learning has transformed healthcare by moving beyond conventional computer-aided diagnosis (CAD) schemes. Rather than depending on handcrafted features, deep models learn directly from raw data, driving innovation in areas such as oncology, neurology, and pathology. These models are employed for key tasks including image classification, segmentation, detection, and multimodal analysis. Although powerful, the technology faces several challenges, including data availability, interpretability, and ethics. Future directions include Explainable AI (XAI) to build trust, federated learning to preserve patient privacy, multimodal fusion for integrated patient models, and digital twins for simulating treatment virtually. Successful integration of AI in medicine ultimately hinges on collaboration, rigorous regulation, and trust between clinicians and AI systems.

Keywords: AI in healthcare, CAD, medical imaging, oncology, neurology, pathology, segmentation, XAI, federated learning, digital twins.

1. Introduction:

Artificial intelligence (AI) is transforming healthcare at an unprecedented pace. The surge in medical data, ranging from MRI and CT scans to electronic health records and genomic data, presents both an opportunity and a challenge. Traditional CAD systems, introduced in the late 20th century, relied on handcrafted features and rule-based logic. Although revolutionary at the time, these systems were not robust, adaptable, or scalable. The turning point came in 2012, when AlexNet's win in the ImageNet competition showed that CNNs could learn subtle features directly from raw data. This innovation soon spread to healthcare. Deep learning algorithms now detect diabetic retinopathy with performance comparable to ophthalmologists, identify lung nodules in CT scans, and segment tumours in brain MRIs with high accuracy. This article summarizes the roots of deep learning in medicine, discusses clinical applications, highlights significant challenges, and describes promising areas of future development. It aims to offer a technical and practical overview of how deep learning is shaping the future of healthcare.

2. Background:

2.1 Convolutional Neural Networks (CNNs):

CNNs are the bedrock of clinical imaging. Their capacity to capture spatial hierarchies makes them well suited to disease classification and localization. ResNet, DenseNet, and EfficientNet architectures are particularly popular. For instance, CNN-based COVID-19 models reported accuracy above 95% on chest X-rays, illustrating their potential for high-speed triage.
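The building block behind these architectures can be illustrated with a minimal NumPy sketch of one convolutional stage: a 2D convolution (implemented, as in most deep learning libraries, as cross-correlation), a ReLU, and 2×2 max-pooling. The hand-set edge kernel and the toy image are illustrative assumptions; in ResNet, DenseNet, or EfficientNet the kernels are learned and many such stages are stacked, so later layers respond to increasingly complex patterns.

```python
import numpy as np

def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Valid (no padding) 2D cross-correlation, as computed by a CNN layer."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum over the local receptive field under the kernel.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

def max_pool2x2(x: np.ndarray) -> np.ndarray:
    """Non-overlapping 2x2 max-pooling; an odd trailing row/column is dropped."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Toy 6x6 "scan" containing a vertical edge (dark left half, bright right half).
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# Hand-set vertical edge detector; a trained CNN would learn such weights.
edge_kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

feature_map = max_pool2x2(relu(conv2d(image, edge_kernel)))
# -> 2x2 map in which every entry is 3.0: the detector fires along the edge
```

Pooling halves the spatial resolution, which is what lets deeper layers see progressively larger regions of the image.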

2.2 Recurrent Neural Networks (RNNs) and LSTMs:

Sequential clinical data such as ECG, EEG, and patient monitoring signals must be modelled over time. LSTMs and gated recurrent units (GRUs) can retain long-term dependencies, enabling early detection of arrhythmias or seizures. Some ICU systems use RNNs to predict sepsis six hours ahead of time.
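How an LSTM retains long-term information can be seen in a single time step. The NumPy sketch below assumes the stacked gate order input, forget, output, candidate (an arbitrary convention here; libraries such as PyTorch order the gates differently), random untrained weights, and a synthetic sine wave standing in for an ECG trace.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x_t: input at time t, shape (input_dim,)
    h_prev, c_prev: previous hidden and cell state, shape (hidden,)
    W: (4*hidden, input_dim), U: (4*hidden, hidden), b: (4*hidden,)
    """
    hidden = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:hidden])               # input gate: how much new information to write
    f = sigmoid(z[hidden:2 * hidden])      # forget gate: how much old memory to keep
    o = sigmoid(z[2 * hidden:3 * hidden])  # output gate: how much memory to expose
    g = np.tanh(z[3 * hidden:])            # candidate memory content
    c_t = f * c_prev + i * g               # additive update lets information persist over long spans
    h_t = o * np.tanh(c_t)
    return h_t, c_t

rng = np.random.default_rng(0)
input_dim, hidden = 1, 8
W = rng.normal(scale=0.1, size=(4 * hidden, input_dim))
U = rng.normal(scale=0.1, size=(4 * hidden, hidden))
b = np.zeros(4 * hidden)

# Synthetic single-lead signal: 200 samples of a sine wave (placeholder for a real ECG).
signal = np.sin(np.linspace(0, 8 * np.pi, 200))
h, c = np.zeros(hidden), np.zeros(hidden)
for sample in signal:
    h, c = lstm_step(np.array([sample]), h, c, W, U, b)
# h now summarizes the whole sequence; a classifier head would map it to a rhythm label.
```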

2.3 Autoencoders and VAEs:

Autoencoders compress data, filter out noise, and reveal underlying patterns. Variational autoencoders (VAEs) extend these capabilities with generative modeling, creating synthetic scans or pathology images for rare diseases.
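What makes a VAE generative is its probabilistic latent space. The sketch below shows the two pieces usually involved: the reparameterization trick for sampling a latent code, and a training loss combining reconstruction error with a KL penalty toward a standard normal prior. The squared-error reconstruction term is an assumption (a common Gaussian-decoder simplification), and the encoder and decoder networks are omitted.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    # z = mu + sigma * eps with eps ~ N(0, I); the randomness sits in eps,
    # so gradients can flow through mu and log_var during training.
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    # Closed-form KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions.
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var))

def vae_loss(x, x_recon, mu, log_var):
    # Squared-error reconstruction (Gaussian-decoder simplification) plus the KL regularizer.
    reconstruction = np.sum((x - x_recon) ** 2)
    return reconstruction + kl_to_standard_normal(mu, log_var)

rng = np.random.default_rng(0)
mu, log_var = np.array([0.3, -0.2]), np.array([-1.0, -0.5])  # would come from the encoder
z = reparameterize(mu, log_var, rng)                         # latent code passed to the decoder
```

After training, sampling z from N(0, I) and decoding it is what produces new synthetic images.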

2.4 Generative Adversarial Networks (GANs):

GANs generate realistic medical images, perform modality translation (e.g., CT-to-MRI), and augment datasets. They are especially useful for balancing datasets in which rare conditions are underrepresented, helping models generalize better.
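The adversarial setup behind GANs comes down to two competing losses. The NumPy sketch below assumes the standard binary cross-entropy formulation with the non-saturating generator objective; `d_real` and `d_fake` are hypothetical discriminator probabilities for real and generated scans, and the generator and discriminator networks themselves are omitted.

```python
import numpy as np

def bce(pred, target, eps=1e-7):
    """Binary cross-entropy averaged over a batch of probabilities."""
    pred = np.clip(pred, eps, 1.0 - eps)
    return -np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred))

def discriminator_loss(d_real, d_fake):
    # The discriminator should output 1 for real scans and 0 for generated ones.
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    # Non-saturating objective: the generator is rewarded when its images are labelled real.
    return bce(d_fake, np.ones_like(d_fake))

d_real = np.array([0.9, 0.8])   # discriminator confident that real scans are real
d_fake = np.array([0.2, 0.1])   # and that generated scans are fake
print(discriminator_loss(d_real, d_fake), generator_loss(d_fake))
```

Training alternates gradient steps on the two losses until generated images become hard to tell apart from real ones.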

2.5 Transformers:

Transformers use self-attention to learn global context across an entire image or sequence. Vision Transformers and Swin Transformers have achieved state-of-the-art results in pathology slide analysis and organ segmentation.
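Self-attention is what gives transformers their global view. The sketch below implements single-head scaled dot-product self-attention in NumPy over a set of patch embeddings; the sizes and random projection matrices are illustrative assumptions, and multi-head attention, positional encodings, and the window partitioning used by Swin Transformers are left out.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X: (n_tokens, d_model), e.g. embeddings of image patches in a Vision Transformer.
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # every patch is compared with every other patch
    weights = softmax(scores, axis=-1)  # each row is a probability distribution
    return weights @ V, weights

rng = np.random.default_rng(0)
n_patches, d_model, d_k = 16, 32, 8    # e.g. a 4x4 grid of patches from a slide tile
X = rng.normal(size=(n_patches, d_model))
W_q, W_k, W_v = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_k)) for _ in range(3))
out, weights = self_attention(X, W_q, W_k, W_v)
```

Because the score matrix is n_patches × n_patches, each output mixes information from the whole tile, unlike a convolution's local receptive field.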

2.6 Hybrid Architectures:

Recent studies combine CNNs with transformers or RNNs. Hybrid CNN-RNN models, for instance, analyse echocardiography videos by integrating spatial and temporal information to improve heart disease detection.
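The hybrid pattern can be sketched as "encode each frame spatially, then aggregate over time." In the NumPy sketch below, a fixed random projection with a ReLU stands in for a trained CNN frame encoder (an assumption made to keep the example short), a simple Elman recurrent layer plays the role of the RNN, and the video is synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)
T, H, W_px, feat_dim, hidden = 10, 16, 16, 12, 8   # frames, frame size, feature and state sizes

# Stand-in for a CNN frame encoder: a fixed random projection of each flattened frame.
# A real system would use a trained CNN backbone here.
W_enc = rng.normal(scale=0.05, size=(feat_dim, H * W_px))

def encode_frame(frame):
    return np.maximum(W_enc @ frame.ravel(), 0.0)   # ReLU spatial features for one frame

# Simple (Elman) recurrent layer that aggregates per-frame features over time.
W_x = rng.normal(scale=0.1, size=(hidden, feat_dim))
W_h = rng.normal(scale=0.1, size=(hidden, hidden))

def rnn_over_frames(frames):
    h = np.zeros(hidden)
    for frame in frames:
        h = np.tanh(W_x @ encode_frame(frame) + W_h @ h)
    return h   # clip-level summary combining spatial and temporal information

video = rng.random((T, H, W_px))   # synthetic stand-in for an echocardiography clip
clip_embedding = rnn_over_frames(video)
```

A classifier on `clip_embedding` would then output the disease prediction; in practice an LSTM or GRU usually replaces the plain recurrent layer.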

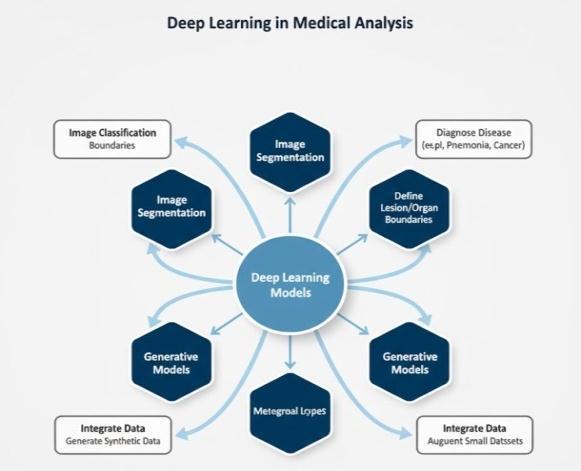

3. Deep Learning Methodologies in Medical Analysis:

3.1 Image Classification:

Classification assigns each image a label such as "benign" or "malignant." CNN-based systems identify pneumonia, tuberculosis, and breast cancer. Transfer learning from large datasets such as ImageNet allows models to be adapted to small medical datasets, making AI feasible in low-resource settings.
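A common form of transfer learning keeps the pretrained backbone frozen and trains only a small classification head on the medical dataset. The sketch below illustrates that pattern under loud assumptions: `W_backbone` is a hypothetical fixed random projection standing in for an ImageNet-pretrained CNN, the dataset is synthetic, and the head is a logistic-regression layer trained with plain gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical frozen backbone: stands in for a pretrained CNN whose weights are not updated.
W_backbone = rng.normal(size=(64, 256))

def frozen_features(images):
    return np.maximum(images @ W_backbone.T, 0.0)   # (n, 64) ReLU features

def train_head(feats, labels, lr=0.1, epochs=300):
    """Logistic-regression head trained by batch gradient descent; only w and b are learned."""
    mean, std = feats.mean(axis=0), feats.std(axis=0) + 1e-8
    X = (feats - mean) / std
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        grad = p - labels                     # derivative of BCE with respect to the logit
        w -= lr * X.T @ grad / len(labels)
        b -= lr * grad.mean()
    return w, b, mean, std

# Small synthetic dataset: 100 flattened 16x16 "images", two classes.
n = 100
labels = rng.integers(0, 2, size=n).astype(float)
images = rng.normal(size=(n, 256)) + labels[:, None] * 0.5   # class 1 is slightly brighter

w, b, mean, std = train_head(frozen_features(images), labels)
logits = ((frozen_features(images) - mean) / std) @ w + b
train_acc = np.mean((logits > 0) == labels)   # training accuracy on synthetic data only
```

Training only the head needs far fewer labelled examples and far less compute than training the whole network, which is why the approach suits small medical datasets.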

3.2 Image Segmentation:

Segmentation delineates organ or lesion boundaries. U-Net remains the de facto standard, with variants such as Attention U-Net and TransUNet often performing better. In radiotherapy planning, segmentation helps define tumour targets.
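Segmentation quality is commonly measured with the Dice coefficient, which compares predicted and reference masks pixel by pixel; a "soft" version is often used as a training loss for U-Net-style models. The NumPy sketch below uses toy 8×8 masks as an illustrative assumption.

```python
import numpy as np

def dice_coefficient(pred_mask, true_mask, eps=1e-7):
    """Dice = 2 * |A & B| / (|A| + |B|) for binary masks; 1.0 means perfect overlap."""
    pred = pred_mask.astype(bool)
    true = true_mask.astype(bool)
    intersection = np.logical_and(pred, true).sum()
    return (2.0 * intersection + eps) / (pred.sum() + true.sum() + eps)

def soft_dice_loss(probs, true_mask, eps=1e-7):
    """Differentiable variant on predicted probabilities: 1 - soft Dice."""
    true = true_mask.astype(float)
    intersection = np.sum(probs * true)
    return 1.0 - (2.0 * intersection + eps) / (probs.sum() + true.sum() + eps)

truth = np.zeros((8, 8), dtype=bool)
truth[2:6, 2:6] = True   # 16-pixel reference "lesion"
pred = np.zeros((8, 8), dtype=bool)
pred[3:7, 2:6] = True    # prediction shifted down by one row
print(dice_coefficient(pred, truth))   # approximately 0.75, i.e. 2 * 12 / (16 + 16)
```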

3.3 Detection and Localization:

Detection models such as Faster R-CNN and YOLO are used for polyp detection in colonoscopy and lung nodule detection in CT scans. Clinical research indicates that AI-assisted colonoscopy reduces missed polyp rates by about 30%.
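Detectors of this kind typically output many overlapping candidate boxes, which are reduced with intersection-over-union (IoU) and greedy non-maximum suppression (NMS), a post-processing step used by Faster R-CNN and many YOLO versions. The NumPy sketch below assumes boxes in `[x1, y1, x2, y2]` pixel coordinates and an IoU threshold of 0.5; the boxes and scores are made up.

```python
import numpy as np

def iou(box, boxes):
    """IoU between one box and an array of boxes, all as [x1, y1, x2, y2]."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat."""
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        order = rest[iou(boxes[best], boxes[rest]) < iou_threshold]
    return keep

# Three candidate "nodule" detections: two near-duplicates and one separate finding.
boxes = np.array([[10, 10, 50, 50], [12, 12, 52, 52], [100, 100, 130, 130]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(non_max_suppression(boxes, scores))   # [0, 2]
```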

3.4 Multimodal and Multi-Task Learning:

Integrating imaging, genomics, clinical notes, and lab tests offers a richer understanding of the patient. Multimodal learning improves predictions, e.g., of survival in glioblastoma patients.
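One simple way to integrate modalities is feature-level fusion: each modality is turned into a vector, rescaled so none dominates by magnitude, and concatenated before a downstream predictor (not shown). The sketch below assumes hypothetical inputs: a random vector standing in for a CNN imaging embedding, a few binary genomic markers, and two lab values z-scored against made-up reference statistics.

```python
import numpy as np

def zscore(x, mean, std):
    return (x - mean) / (std + 1e-8)

def fuse_features(modalities):
    """Feature-level fusion: L2-normalize each modality's vector, then concatenate."""
    parts = []
    for vec in modalities.values():
        v = np.asarray(vec, dtype=float)
        # Each modality block gets unit norm, so no single modality dominates by scale.
        parts.append(v / (np.linalg.norm(v) + 1e-8))
    return np.concatenate(parts)

patient = {
    "imaging": np.random.default_rng(0).normal(size=128),   # stand-in for a CNN embedding
    "genomics": np.array([1.0, 0.0, 1.0]),                  # e.g. presence/absence of selected mutations
    "labs": zscore(np.array([5.2, 140.0]), np.array([5.0, 138.0]), np.array([0.5, 3.0])),
}
fused = fuse_features(patient)   # length 128 + 3 + 2 = 133
```

More elaborate designs learn the fusion (for example with attention across modalities), but the principle of mapping heterogeneous data into a shared representation is the same.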

3.5 Generative Models and Data Augmentation:

GANs and diffusion models enlarge small datasets, generate rare pathology slides, and simulate disease progression. These capabilities are important for building robust models where labelled data is limited.
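GAN- and diffusion-based augmentation requires training a separate generative model, so the sketch below swaps in the simpler classical baseline it is usually compared against: random geometric and intensity transforms applied to a labelled slice. The transform choices and ranges are illustrative assumptions; whether a transform preserves the label is a clinical judgment (a left-right flip, for example, changes anatomical laterality).

```python
import numpy as np

def augment(image, rng):
    """Random flip, 90-degree rotation, and intensity jitter for a 2D slice with values in [0, 1]."""
    out = image
    if rng.random() < 0.5:
        out = np.fliplr(out)
    out = np.rot90(out, k=int(rng.integers(0, 4)))
    gain = rng.uniform(0.9, 1.1)                      # mild contrast/brightness scaling
    noise = rng.normal(scale=0.02, size=out.shape)    # mild acquisition-like noise
    return np.clip(out * gain + noise, 0.0, 1.0)

rng = np.random.default_rng(42)
scan = rng.random((64, 64))                                 # synthetic stand-in for a slice
batch = np.stack([augment(scan, rng) for _ in range(8)])    # 8 variants sharing one label
```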

4. Clinical Applications:

4.1 Oncology:

AI is transforming oncology by enabling early cancer detection, tumour grading, and treatment outcome prediction. CNNs detect breast cancer in mammograms, prostate cancer in biopsy slides, and lung cancer in CT scans. Predictive models estimate therapy response and survival, supporting personalized medicine.

4.2 Cardiology:

Deep learning enables arrhythmia detection, heart failure prediction, and real-time diagnosis of coronary artery disease. Models applied to echocardiograms detect structural disorders, while RNN-powered wearable ECG devices detect abnormal rhythms and notify clinicians remotely.

4.3 Neurology:

AI helps diagnose Alzheimer's disease, Parkinson's disease, epilepsy, and multiple sclerosis. MRI-based models can identify neurodegenerative changes years before clinical symptoms appear. Segmentation networks help neurosurgeons locate tumours. AI also enables rapid stroke detection, allowing earlier intervention.

4.4 Ophthalmology:

Ophthalmology is among the fastest adopters of AI. Mobile fundus cameras equipped with CNNs enable diabetic retinopathy screening in rural areas. AI tools also detect glaucoma and macular degeneration. Regulatory approvals in this field highlight AI's maturity.

4.5 Pulmonology:

During the COVID-19 pandemic, deep learning was used to read chest CT scans and X-rays to identify and track infection. Beyond the pandemic, AI aids COPD diagnosis and lung cancer screening. Research indicates that AI reduces inter-observer variation among radiologists.

4.6 Pathology:

AI supports digital pathology by analysing gigapixel whole-slide images. CNNs grade tumours, recognize genetic mutations, and make prognostic predictions. Pilot hospital initiatives show how AI reduces workload and improves consistency.

5. Challenges:

5.1 Data Scarcity and Annotation

Large annotated medical datasets are scarce. Annotation costs and privacy regulations slow progress. Synthetic data and federated learning provide only partial relief and cannot fully substitute for heterogeneous real-world clinical data.

5.2 Generalization and Domain Shift

Models tend to fail when deployed at new hospitals because of differences in scanners and patient populations. Domain adaptation is an active research area.
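A small part of scanner-related shift is a difference in intensity calibration, which a simple preprocessing step can remove. The sketch below shows per-scan z-score intensity standardization, optionally restricted to a foreground mask. It is a basic harmonization baseline rather than domain adaptation in the learning sense, and the two "scanners" are simulated as linear rescalings of the same synthetic anatomy (an assumption; real shifts also involve resolution, noise, and contrast differences).

```python
import numpy as np

def standardize_scan(volume, mask=None):
    """Z-score intensities, optionally within a boolean foreground mask, so scans share a scale."""
    region = volume[mask] if mask is not None else volume
    return (volume - region.mean()) / (region.std() + 1e-8)

rng = np.random.default_rng(0)
anatomy = rng.random((32, 32))
scanner_a = 100.0 + 40.0 * anatomy    # same anatomy, different intensity calibration
scanner_b = 300.0 + 90.0 * anatomy
a, b = standardize_scan(scanner_a), standardize_scan(scanner_b)
# After standardization the two renderings agree up to floating-point error.
```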

5.3 Interpretability

Clinicians are reluctant to rely on black-box systems. Saliency maps, attention heatmaps, and other explainable AI methods are being developed to increase trust.
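One of the simplest model-agnostic ways to produce such a heatmap is occlusion sensitivity: mask one patch at a time and record how much the model's score drops. In the sketch below, `toy_score` is a hypothetical stand-in for a trained classifier that, by construction, only looks at the top-left quadrant, so the resulting map should highlight exactly that region.

```python
import numpy as np

def occlusion_map(image, score_fn, patch=4, fill=0.0):
    """Occlusion sensitivity: score drop when each patch is replaced by `fill`."""
    base = score_fn(image)
    h, w = image.shape
    heat = np.zeros_like(image, dtype=float)
    for i in range(0, h, patch):
        for j in range(0, w, patch):
            occluded = image.copy()
            occluded[i:i + patch, j:j + patch] = fill
            heat[i:i + patch, j:j + patch] = base - score_fn(occluded)
    return heat

def toy_score(img):
    # Hypothetical "model": its score depends only on the top-left 8x8 quadrant.
    return float(img[:8, :8].mean())

image = np.ones((16, 16))
heat = occlusion_map(image, toy_score)
# heat is 0.25 in the top-left quadrant and 0 elsewhere: the region the model relies on
```

Gradient-based saliency maps are cheaper for large images, but occlusion needs nothing beyond the ability to query the model.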

5.4 Computational Costs

Training large models requires intensive GPU or TPU computation and is expensive. Cloud solutions help but raise privacy concerns.

5.5 Ethical and Legal Concerns

Dataset bias may reinforce inequality. Liability for AI-based misdiagnosis remains an open question. Compliance with GDPR and HIPAA further complicates the issue.

5.6 Data Security and Acceptance

Cybersecurity threats and distrust impede adoption. Social acceptance, particularly among clinicians, is as crucial as technical advances.

6. Future Directions:

6.1 Explainable AI

Future systems will need interpretability designed into their architecture. Transparent models will enhance clinician trust and adoption.

6.2 Federated Learning

Federated learning allows hospitals to train shared models without centralizing data, preserving privacy while improving generalization.
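The core of the widely used Federated Averaging (FedAvg) scheme is an aggregation step: each hospital trains locally and sends only model parameters, which a coordinator averages, weighted by local sample counts. The sketch below shows that step alone; the three hospitals, their tiny two-array models, and the sample counts are hypothetical, and local training, communication, and extra protections such as secure aggregation are omitted.

```python
import numpy as np

def federated_average(client_weights, client_sizes):
    """FedAvg aggregation: per-parameter average weighted by each client's sample count."""
    total = float(sum(client_sizes))
    n_params = len(client_weights[0])
    return [
        sum(w[k] * (n / total) for w, n in zip(client_weights, client_sizes))
        for k in range(n_params)
    ]

# Hypothetical locally trained copies of a tiny model (one weight matrix, one bias vector).
# Only these arrays leave each hospital; patient records stay on site.
hospital_a = [np.full((2, 2), 1.0), np.array([0.0])]
hospital_b = [np.full((2, 2), 2.0), np.array([1.0])]
hospital_c = [np.full((2, 2), 4.0), np.array([2.0])]
global_model = federated_average([hospital_a, hospital_b, hospital_c], client_sizes=[100, 100, 200])
# Weights 0.25, 0.25, 0.5 give a matrix of 2.75s and a bias of 1.25.
```

The averaged model is sent back to every site for the next round of local training.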

6.3 Self-Supervised Learning

Self-supervised methods use unlabelled medical data to reduce dependence on costly annotation. This approach is expected to transform training pipelines.
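A common self-supervised objective is contrastive learning: two augmented views of the same unlabelled image should have similar embeddings, while views of different images should not. The sketch below implements a one-directional InfoNCE loss, similar in spirit to SimCLR's NT-Xent; the embeddings are random stand-ins for encoder outputs, and the temperature of 0.1 is an assumed value.

```python
import numpy as np

def info_nce_loss(z1, z2, temperature=0.1):
    """One-directional InfoNCE loss for two views of the same batch.

    z1[i] and z2[i] embed two views of the same unlabelled image (a positive pair);
    every other row of z2 acts as a negative for z1[i].
    """
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature               # scaled cosine similarities, shape (n, n)
    logits -= logits.max(axis=1, keepdims=True)    # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))            # positives lie on the diagonal

rng = np.random.default_rng(0)
views = rng.normal(size=(16, 32))
aligned = info_nce_loss(views, views + 0.05 * rng.normal(size=views.shape))
unrelated = info_nce_loss(views, rng.normal(size=views.shape))
# aligned is much smaller than unrelated: the loss rewards matching views of the same image
```

No labels appear anywhere in the loss, which is why large unlabelled archives can be used for pretraining before fine-tuning on a small annotated set.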

6.4 Multimodal Fusion

Combining imaging, genomics, lab tests, and clinical notes yields holistic models aligned with precision medicine.

6.5 Digital Twins and IoMT

Digital twins, virtual patient models combined with Internet of Medical Things (IoMT) devices, could simulate treatment outcomes and monitor patients continuously.

Beyond diagnostics, deep learning accelerates drug discovery by forecasting molecular interactions and simulating clinical trials.

7. Conclusion:

Deep learning has fundamentally changed medical analysis. It outperforms conventional CAD systems in classification, segmentation, and detection, and its adoption across oncology, cardiology, and neurology demonstrates its versatility. Despite these advances, challenges remain, including limited data, poor interpretability, and ethical concerns. Explainable AI, federated learning, and digital twins offer promising ways to address them. AI is not meant to replace clinicians but to work alongside them, making healthcare safer, fairer, and more innovative.
