International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 06 | Jun 2025 www.irjet.net p-ISSN: 2395-0072


Mohammad Shahbaz1, Deepshikha2

1Master of Technology, Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India 2Assistant Professor, Department of Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

Abstract - The rapid growth of cloud computing has made the cloud an essential service in the digital space. Nonetheless, cost-effective and efficient control over resources remains an ongoing problem because cloud workloads are dynamic and unpredictable. Traditional resource allocation methods, such as static provisioning and rule-based systems, usually result in over-provisioning, under-utilization, and high operating costs. To overcome these shortcomings, this study proposes an Artificial Intelligence (AI) powered forecasting model for proactive and adaptive cloud resource management.

The study investigates machine learning (ML), deep learning (DL), and statistical algorithms (Random Forest, Gradient Boosting, ARIMA, and Long Short-Term Memory (LSTM) networks) that can be used to forecast future resource needs from historical workload data. These forecasts are combined in an adaptive resource management framework that makes allocation decisions in real time, balancing performance and cost.

The framework is evaluated in simulated and real cloud environments using publicly available datasets, including the Google Cluster Trace and the Azure Public Dataset. The main performance indicators are forecasting accuracy, resource utilization, cost savings, and system performance under different workload levels. The experimental findings indicate that the proposed AI-based strategy outperforms traditional methods in terms of resource consumption and operating expenses.

Key Words: Cloud Computing, Resource Management, AI-Based Forecasting, Cost Optimization, Machine Learning, Deep Learning, Workload Prediction, Dynamic Resource Allocation.

Cloud computing has transformed how computing services are delivered, offering scalable, on-demand resources such as storage, networking, and processing capabilities. It allows businesses and individuals to build flexible and cost-effective IT infrastructures without major upfront investment in hardware. Despite these advantages, resource management remains one of the core challenges in cloud environments, especially because workloads are dynamic and unpredictable. This study addresses the issue by examining how artificial intelligence (AI), particularly AI-based forecasting models, can improve cloud resource management.

Cloud computing provides on-demand access over the internet to shared pools of configurable computing resources and supports a broad range of applications and services. Its adoption in healthcare, finance, education, and web-based businesses is driven by the scalability, flexibility, and cost savings of cloud technology. The Infrastructure-as-a-Service (IaaS) model in particular provides virtualized resources that users can expand or reduce according to their needs, which makes it well suited to dynamic loads. Nevertheless, the growing complexity of cloud environments has made efficient resource management difficult, particularly under constantly changing demand.

Figure-1: Cloud Computing.


Resource management in cloud computing plays a significant role in delivering high performance, service availability, and cost-effective services. Resources such as CPU, memory, storage, and bandwidth are shared between users and applications, which requires smart allocation approaches so that over-provisioning and under-utilization can be avoided. Effective resource management lets the cloud provider run leaner operations and improve profits, while users do not pay for resources they are not using. Poor allocation, by contrast, can reduce performance and energy efficiency and cause service level agreement (SLA) breaches, thus affecting user satisfaction and provider credibility.

Managing cloud resources cost-efficiently is a complicated undertaking because cloud workloads are dynamic and unpredictable. Traditional allocation schemes, such as static provisioning and rule-based systems that rely on thresholds, are usually not adequate for highly dynamic workloads. These approaches either over-allocate resources, leading to waste, or under-allocate them, causing performance bottlenecks. Scalability, adaptability, and real-time response to changing user requirements add further difficulty. As cloud services advance, there is a need for more intelligent, data-driven approaches that can anticipate resource requirements and assign resources accordingly.

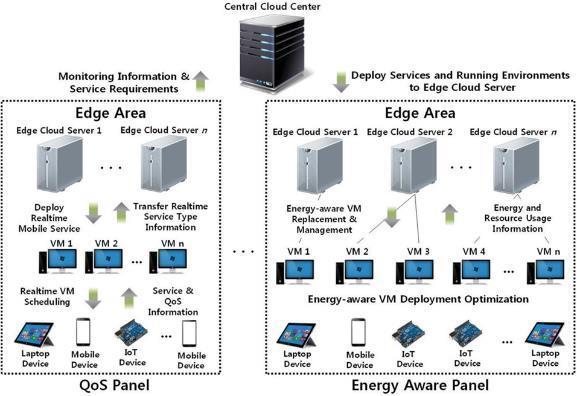

Figure-2: The Fog computing architecture with virtualization enabled.

Artificial Intelligence (AI), and in particular machine learning (ML) and deep learning (DL), offers a promising way to address resource management issues in cloud computing. AI-based forecasting models can analyze past workload data, find patterns in it, and predict future resource needs with high precision. When such predictions are incorporated into cloud management systems, resource allocation can be automated proactively and adaptively. For example, AI algorithms can foresee a rise in demand and provision additional resources in advance, which prevents service interruptions and reduces unnecessary spending. This move from reactive to predictive resource management not only increases operational efficiency but also supports the sustainability of cloud operations.

Although AI has potential applications in cloud resource management, current practical solutions are mostly based on traditional approaches, which fall short of what is required in fluctuating cloud environments. Intelligent resource allocators are needed because neither rule-based nor static allocation systems generalize to real-world workloads, which results in poor resource utilization and increased costs. Although some studies in this field describe AI applications, there is still a large gap in the development and practical testing of integrated AI-based forecasting frameworks designed specifically for cloud infrastructure. This paper aims to fill this gap by examining how such a framework can be implemented and how much it improves cost-efficiency and performance.

The primary objective of this research is to design and evaluate an AI-based forecasting model for cost-effective resource management in cloud computing environments. The study aims to:

Develop predictive models using ML and DL algorithms to accurately forecast future resource demands.

Integrate these models into a dynamic resource management framework capable of automated and real-time resource allocation.


Test the framework in both simulated and real-world cloud environments to assess improvements in cost savings, resource utilization, and system performance.

Provide a comparative analysis against traditional resource management approaches to demonstrate the advantages of AI-driven solutions.

The literature on cloud resource management shows a gradual shift toward intelligent, predictive solutions that rely on artificial intelligence (AI). This review discusses the drawbacks of conventional approaches and explains how ML and DL can change forecasting and cost optimization in the cloud. It also highlights current research gaps and thus lays the foundation for the AI-based forecasting framework proposed in this study.

Early cloud resource management strategies were deterministic: resource distribution was determined by a fixed set of rules, by historical averages, or by worst-case estimates. These techniques were simple to adopt but could not accommodate the dynamic and heterogeneous workloads that characterize modern cloud computing.

2.1.1.

Static allocation techniques assign a fixed amount of resources based on past patterns or pessimistic demand estimates. These are back-of-the-envelope techniques and are suitable only when the workload is predictable. In dynamic conditions they are inherently inefficient, since they tend either to over-provision, leaving resources idle, or to under-provision, causing performance deterioration and SLA breaches. Static solutions have no way to handle variations in demand in real time, which makes the approach obsolete in today's elastic cloud infrastructure.

Rule-based systems aim to provide a degree of flexibility by means of conditional logic (e.g., when CPU usage > 80%, add another VM). Although they are more flexible than static approaches, they remain rigid and lack contextual knowledge. Such systems are limited in scope and generalize poorly to other applications and usage patterns. As workloads become increasingly unpredictable, rule-based approaches struggle to deliver the best performance because their rules must be manually adjusted all the time.
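The threshold rule quoted above can be written down in a few lines, which also makes its rigidity visible. The following is a minimal sketch only: the 80% upper threshold comes from the example in the text, while the function name `rule_based_scale`, the 20% lower threshold, and the one-VM step size are illustrative assumptions.

```python
def rule_based_scale(cpu_percent, vm_count, upper=80.0, lower=20.0, min_vms=1):
    """Return the new VM count under a fixed threshold rule.

    upper=80.0 mirrors the example in the text; lower=20.0 and the
    step of one VM are assumptions made for illustration.
    """
    if cpu_percent > upper:
        # Overloaded: add exactly one VM, regardless of how large the spike is.
        return vm_count + 1
    if cpu_percent < lower and vm_count > min_vms:
        # Underused: remove one VM, but never go below the minimum.
        return vm_count - 1
    # Inside the band: do nothing.
    return vm_count
```

The rule reacts only to the current reading and always moves by one VM, so every threshold and step size has to be retuned by hand whenever the workload profile changes.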

To address these limitations, heuristic and metaheuristic algorithms have been introduced, including Genetic Algorithms (GAs), Particle Swarm Optimization (PSO), and Ant Colony Optimization (ACO). The purpose of these algorithms is to find near-optimal solutions to the complex allocation problem through iterative search. They provide better flexibility, but they are usually computationally expensive and difficult to tune. Their performance depends strongly on initial parameters and workload-specific features, which limits their scalability and their ability to work in real time in high-demand contexts.

AI, particularly ML and DL, has emerged as a highly disruptive technology for cloud resource forecasting. AI models can learn patterns from historical workload data, which allows resources to be allocated proactively while mitigating cost and performance risk. The primary AI-based methods are considered here.

Machine learning models such as Random Forest, Decision Trees, Gradient Boosting, and Support Vector Regression (SVR) have been widely used in workload prediction. These models can discover non-linear patterns and feature interactions in historical data. Random Forest, for example, can handle a large number of input variables and is relatively robust to overfitting, which makes it applicable to high-dimensional workload data. Gradient Boosting improves prediction accuracy by sequentially correcting the errors made by earlier models. These ML methods are computationally practical and offer interpretable outcomes, so they can be used in most cloud resource forecasting tasks.

Deep learning models such as Long Short-Term Memory (LSTM) networks and Convolutional Neural Networks (CNNs) have been shown to perform well on time-series forecasting tasks. LSTMs are useful where the workload has temporal dependencies, since they can capture long-term patterns even when the environment is highly dynamic. Although CNNs are customarily applied in the image processing domain, they can be adapted to extract spatial as well as sequential features from workload data. These models are often more accurate than traditional ML methods but need more processing power and training data, which is sometimes a limitation.

Hybrid models were created to take advantage of the strengths of both ML and DL. For example, a Random Forest model can be used for feature selection while an LSTM model performs the sequential prediction. Alternatively, neural networks can be combined with ARIMA models to capture both linear trends and non-linear variability in workload patterns. These hybrid systems enhance robustness and prediction accuracy, particularly in sophisticated and dynamic cloud environments. They are, however, complex to design and to integrate into an existing architecture.

With the increase in cloud adoption, keeping costs down while maintaining high-quality services has become imperative. AI is becoming an essential part of cost optimization because it enables timely, data-driven decision-making that would be hard to achieve otherwise.

Auto-scaling, also known as dynamic scaling, enables cloud systems to adjust resources to the level the workload requires. Reactive scaling responds to current performance data, whereas proactive scaling uses a forecast model to predict demand. The latter copes better with workload increases because it mobilizes resources before demand soars. AI-enabled automated scaling reduces cost and latency by avoiding both last-minute provisioning and idle capacity.
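The difference between the two scaling modes can be illustrated with a toy comparison. The sketch below is an assumption-laden simplification, not the mechanism evaluated in this study: capacity is counted in whole VMs, the reactive plan sizes each step from the previous step's observed demand, and the proactive plan is given a forecast for the same step. All function names and the `vm_capacity` parameter are hypothetical.

```python
import math


def vms_needed(demand, vm_capacity):
    """Smallest whole number of VMs (at least one) that covers the demand."""
    return max(1, math.ceil(demand / vm_capacity))


def reactive_plan(demand, vm_capacity):
    """Size capacity at step t from the demand observed at step t-1."""
    plan = [vms_needed(demand[0], vm_capacity)]
    for t in range(1, len(demand)):
        plan.append(vms_needed(demand[t - 1], vm_capacity))
    return plan


def proactive_plan(forecast, vm_capacity):
    """Size capacity at step t from the forecast for step t."""
    return [vms_needed(f, vm_capacity) for f in forecast]


def shortfall_steps(demand, plan, vm_capacity):
    """Count the steps in which demand exceeds the provisioned capacity."""
    return sum(1 for d, n in zip(demand, plan) if d > n * vm_capacity)
```

With `demand = [100, 100, 400, 400, 100]` and `vm_capacity = 100`, the reactive plan lags the spike by one step and is short once, while a proactive plan fed a perfect forecast is never short. Real forecasts are imperfect, so the practical gap depends on forecast accuracy.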

Cloud data centers consume enormous quantities of power, which raises operating costs and poses environmental concerns. Techniques that optimize workload placement to minimize energy consumption include consolidation of virtual machines (VMs) and dynamic voltage and frequency scaling (DVFS). Moreover, scheduling algorithms may prioritize tasks according to energy efficiency, so predictive models can shift less urgent tasks to low-demand hours or to periods when green energy is being produced, which improves sustainability.

Machine learning and reinforcement learning models can determine optimal schedules for the available cloud resources. These models learn policies that minimize cost while still meeting performance requirements. They can also adapt to pricing models such as spot, reserved, and on-demand instances by predicting the price and availability of resources. This flexibility helps users obtain efficient performance at low cost, optimizing resource use and reducing waste.

Although AI-based methods for forecasting and resource management are promising, a number of gaps exist. Most available solutions are either not empirically demonstrated or purely theoretical. Little research has addressed the incorporation of AI forecasting models into an end-to-end cloud resource management framework, especially one operating in real time on live data. In addition, most studies lack a clear investigation of cost-efficiency metrics or a comparison between AI-driven techniques and conventional systems across varied workload characteristics. How, and to what extent, AI-based solutions can be scaled and generalized to different cloud service models (IaaS, PaaS, SaaS) and providers has also not been adequately investigated. This study addresses these gaps by designing and testing a usable AI-based model for cost-effective resource management under real and simulated conditions.

This section describes the methodology used to design, develop, and evaluate the AI-based predictive framework for cost-effective resource management in cloud computing. The methodology includes six core elements: research design, dataset description and preprocessing, AI model selection, model training and evaluation, framework architecture, and the tools and technologies used.

The study follows a quantitative, experimental design to evaluate how AI-based models can improve forecasting quality and optimize cloud resource utilization. The approach involves four major steps, each of which contributes to developing the proposed framework.

3.1.1.


The first step was a thorough review of published work on cloud resource management and on AI-driven forecasting techniques for it. This review identified the shortcomings of conventional resource allocation methods and the advantages of AI in solving these issues. It also helped to formulate the problem statement and the research objectives.

3.1.2.

The second phase aimed at designing AI models to predict workloads. Several machine learning and deep learning models were tested for estimating future workload requirements from past workload statistics. The models that were trained and validated included Random Forest, ARIMA, and Long Short-Term Memory (LSTM).

3.1.3.

During this step, the most effective forecasting model was incorporated into the dynamic resource management framework. The framework was designed so that cloud resources are assigned automatically based on the model's predictions, thus saving costs and improving resource utilization.

3.1.4.

The last step was the validation of the framework in a simulated environment (CloudSim) and in real ones (AWS, Azure). Framework performance was evaluated using indicators such as Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), R² score, cost savings, and system performance under different workload conditions.

This study uses publicly available cloud workload data, namely the Google Cluster Trace Dataset and the Azure Public Dataset. These datasets comprise resource usage logs with detailed records of CPU load, memory usage, disk input and output, and network usage.

Preprocessing Steps:

Data Cleaning: Removal of null values and inconsistencies.

Normalization: Scaling of CPU and memory usage values between 0 and 1.

Feature Selection: Time of day, task duration, CPU load history, etc., were selected as key features.

Data Splitting: The dataset was divided into training (70%), validation (15%), and testing (15%) sets to ensure reliable model performance evaluation.
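The cleaning, normalization, and splitting steps listed above can be sketched with plain Python. This is an illustrative outline rather than the pipeline used in the study: the record layout (a list of dictionaries), the function names, and the choice of a chronological split without shuffling are all assumptions, the last one made because shuffling time-ordered workload data can leak future values into training.

```python
def clean(rows):
    """Drop records that contain any missing (None) value."""
    return [r for r in rows if all(v is not None for v in r.values())]


def min_max_normalize(values):
    """Scale a list of numbers into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant series carries no scale information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def chronological_split(rows, train_pct=70, val_pct=15):
    """Split time-ordered rows into train/validation/test without shuffling.

    Integer percentages avoid floating-point rounding at the boundaries;
    the remainder (15% by default) becomes the test set.
    """
    n = len(rows)
    i = n * train_pct // 100
    j = n * (train_pct + val_pct) // 100
    return rows[:i], rows[i:j], rows[j:]
```

For a trace of 20 records, `chronological_split` yields 14 training, 3 validation, and 3 test records, matching the 70/15/15 proportions.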

3.3.

Several AI models were considered for workload forecasting:

Table-1: Several AI Models

| Model Type | Algorithm | Justification |
|---|---|---|
| Machine Learning | Random Forest | Handles non-linear data well and is robust to overfitting. |
| Machine Learning | Gradient Boosting | Effective for complex workloads with feature interactions. |
| Deep Learning | LSTM | Captures time-series dependencies and long-term trends. |
| Statistical | ARIMA | Useful for linear time-series forecasting as a baseline model. |

The final selection criteria were the balance between accuracy, computational efficiency, and suitability for time-series forecasting. LSTM and Random Forest proved to be the most suitable to form part of the framework.

The models were trained on the preprocessed datasets, and the hyperparameters were tuned using grid search together with cross-validation.

3.4.1.

The following metrics were used to evaluate model performance:

Mean Absolute Error (MAE): Measures average absolute differences between predicted and actual values.

Root Mean Squared Error (RMSE): Penalizes large errors more than MAE.

R² Score: Represents the proportion of variance explained by the model.
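For reference, the three metrics above follow their standard definitions and can be computed directly. The sketch below uses only the Python standard library; the function names are illustrative, and `r2_score` assumes that the actual values are not all identical, since the total sum of squares would otherwise be zero.

```python
import math


def mae(actual, predicted):
    """Mean Absolute Error: average of |actual - predicted|."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root Mean Squared Error: square root of the mean squared difference."""
    mse = sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
    return math.sqrt(mse)


def r2_score(actual, predicted):
    """R^2 = 1 - SS_res / SS_tot (undefined when actual is constant)."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot
```

For actual values `[1, 2, 3, 4]` and predictions `[1, 2, 3, 6]`, a single error of 2 gives an MAE of 0.5 but an RMSE of 1.0, which shows how RMSE weights large errors more heavily.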


Table-2: Model Performance Evaluation.

3.6.1.

AWS (Amazon Web Services): Used for real-world deployment of forecasting and allocation models.

Microsoft Azure: Integrated for additional testing using Azure datasets and APIs.

Google Cloud Platform (GCP): Offered dataset sources and compute instances for experimentation.

As seen in the table, the LSTM model provided the best forecasting accuracy and was therefore selected for integration into the dynamic resource management framework.

The proposed framework includes four core modules: data collection, workload forecasting, dynamic resource allocation, and performance monitoring.

3.5.1. Data Collection

This module gathers real-time and historical metrics from cloud infrastructure, such as CPU and memory usage, application logs, and network traffic. These inputs are continuously fed into the forecasting module.

3.5.2. Workload Forecasting

The selected AI model (e.g., LSTM) predicts future resource demand based on historical patterns. Forecasts are generated in real-time and used as input for the resource allocation module.
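One common way to frame "predicting future demand from historical patterns" for a sequence model such as an LSTM is to slice the metric history into fixed-length input windows, each paired with the value to be predicted. The sketch below illustrates that framing only; the function name `make_windows` and the `lookback` and `horizon` parameters are illustrative assumptions, not settings reported in this study.

```python
def make_windows(series, lookback, horizon=1):
    """Turn a 1-D series into (input window, target) pairs.

    Each input holds `lookback` consecutive observations; the target is
    the value `horizon` steps after the end of that window.
    """
    inputs, targets = [], []
    for start in range(len(series) - lookback - horizon + 1):
        inputs.append(series[start:start + lookback])
        targets.append(series[start + lookback + horizon - 1])
    return inputs, targets
```

For the series `[1, 2, 3, 4, 5]` with `lookback=3`, this yields the inputs `[1, 2, 3]` and `[2, 3, 4]` with targets `4` and `5`. A longer lookback gives the model more context at the cost of fewer training samples.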

3.5.3. Dynamic Resource Allocation

Based on the forecasts, the framework dynamically provisions or deallocates resources. For example, if an upcoming spike is predicted, the framework automatically scales up virtual machines or containers to handle the load.
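A forecast-driven allocation decision of this kind might look like the following sketch. It is a hypothetical illustration, not the allocation logic of the framework: the function name `plan_allocation`, the VM size of 4 cores, the 25% safety headroom, and the VM count limits are all assumed values.

```python
import math


def plan_allocation(forecast_cores, current_vms, cores_per_vm=4,
                    headroom=0.25, min_vms=1, max_vms=100):
    """Map a forecast of CPU demand (in cores) to a scaling decision.

    The target VM count covers the forecast plus a safety headroom and is
    clamped to [min_vms, max_vms]. Returns (action, target_vm_count).
    """
    target = math.ceil(forecast_cores * (1 + headroom) / cores_per_vm)
    target = max(min_vms, min(max_vms, target))
    if target > current_vms:
        action = "scale_up"
    elif target < current_vms:
        action = "scale_down"
    else:
        action = "hold"
    return action, target
```

With five VMs running, a forecast of 40 cores leads to `("scale_up", 13)`, while a forecast of 8 cores leads to `("scale_down", 3)`. The headroom trades some idle capacity for protection against forecast error.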

3.5.4. Performance Monitoring

This module evaluates the efficiency and performance of the system in real-time using SLA compliance, response time, throughput, and cost metrics. Feedback from this module is used to update forecasting models and allocation strategies.

3.6. Tools and Technologies Used

To build and validate the framework, various platforms and tools were utilized.

3.6.2.

TensorFlow and Keras: Used for building and training LSTM and CNN models.

Scikit-learn: Employed for implementing Random Forest and Gradient Boosting.

Statsmodels: Used for statistical models like ARIMA.

This section explains the experimental setup used to evaluate the proposed AI-based resource forecasting framework for cost-effective resource management in cloud computing. The assessment was carried out in both simulated and real-life settings to test the framework's robustness, scalability, and practicality. Performance was evaluated with a number of measures, including cost efficiency, resource utilization, forecasting accuracy, and overall system performance.

Real-world cloud scenarios were reproduced in a controlled environment using the CloudSim simulator. As an extensively used simulation toolkit, CloudSim can simulate cloud infrastructures including virtual machines (VMs), data centers, network topologies, and user workloads. This made it possible to test the framework under many conditions without incurring financial expense or being tied to physical infrastructure.

A data center with 100 physical hosts was set up in the simulated environment. Each host carried several virtual machines with different CPU, memory, and bandwidth configurations. Workloads were defined to represent realistic scenarios with steady, bursty, and periodic demand patterns. The LSTM-based forecasting model predicted future workloads, and the resource allocation module reallocated resources accordingly. A number of test cases were executed, including unexpected traffic surges, idle periods, and long-running jobs, to investigate the model's flexibility and cost-effectiveness.
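The three demand patterns named above can be approximated with simple synthetic generators, which is useful for quick checks before running a full simulation. The sketch below is independent of CloudSim and is not the workload definition used in the experiments; the function names, load levels, period, and burst probability are illustrative assumptions.

```python
import math
import random


def steady(n, level=50.0):
    """Constant load at a fixed level."""
    return [level] * n


def periodic(n, base=50.0, amplitude=30.0, period=24):
    """Sinusoidal load, e.g. a daily cycle sampled hourly."""
    return [base + amplitude * math.sin(2 * math.pi * t / period)
            for t in range(n)]


def bursty(n, base=20.0, burst=90.0, burst_prob=0.1, seed=0):
    """Low baseline load with occasional random spikes.

    A seeded generator keeps runs reproducible.
    """
    rng = random.Random(seed)
    return [burst if rng.random() < burst_prob else base for _ in range(n)]
```

Each generator returns a list of load values that can be fed to a forecasting model or an allocation policy in place of a real trace.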


To validate the framework's feasibility in real-world cloud environments, the model was deployed on public cloud platforms, namely Amazon Web Services (AWS) and Microsoft Azure. Compute resources were provisioned using services such as EC2 (AWS) and Virtual Machines (Azure), and performance monitoring services (e.g., AWS CloudWatch and Azure Monitor) were also used.

In this case, real workloads were generated from the Google Cluster Trace Dataset and the Azure Public Dataset, which contain CPU usage patterns, memory consumption, and network traffic data. The datasets provide a rich collection of historical trends and variability, which makes them well suited for training and testing the forecasting models. The practical testing focused on how well the framework reacts to changes in workload, arranges resources effectively, and reduces expenses without affecting quality of service.

The main test case was the deployment of a web application with varying resource requirements. The AI system predicted future resource demand, and the system scaled up or down accordingly. The outcomes of these experiments were compared with those of traditional rule-based and static allocation methods in order to measure the improvement in performance.

A number of quantitative indicators were adopted to assess the success of the proposed framework. These are cost-effectiveness, resource utilization, forecasting accuracy, and system performance measured in terms of latency, throughput, and SLA compliance.

Cost efficiency is calculated as the overall reduction in operating cost obtained by applying intelligent resource allocation. The metric considers the cost of compute instances, storage, and bandwidth consumed while executing the application workload. The framework was found to reduce costs substantially by avoiding over-provisioning and under-utilization of resources.

Table-3: Cost Efficiency.

This metric shows how effectively the allocated resources are used. It is defined as the ratio of used to allocated resources (CPU, memory, and network bandwidth). Higher utilization means less waste and better use of resources.
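As a small illustration of this ratio, the sketch below computes utilization per resource type. The function name `utilization` and the dictionary layout are assumptions made for the example, and the input numbers are illustrative rather than measured values.

```python
def utilization(used, allocated):
    """Return the used/allocated ratio for each resource type.

    `used` and `allocated` map a resource name to an amount in the same
    unit (e.g. cores, GiB, Mbit/s). Allocations must be positive.
    """
    ratios = {}
    for name, alloc in allocated.items():
        if alloc <= 0:
            raise ValueError(f"allocated amount for {name!r} must be positive")
        ratios[name] = used.get(name, 0.0) / alloc
    return ratios
```

For instance, using 6 of 8 allocated cores and 12 of 16 GiB of memory gives a utilization of 0.75 for both resources.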

Table-4: Resource Utilization.

4.3.3.

The accuracy of the forecasting model is critical for ensuring timely and appropriate resource provisioning. Three metrics were used: Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), and R² score.

Table-5: Forecasting Accuracy.

The LSTM model showed the best performance in capturing workload trends, leading to more precise and timely resource allocation.

System performance was measured in terms of latency, throughput, and SLA compliance. These indicators reflect the framework's ability to support the end-user experience under different workload conditions.


Latency: The time taken to respond to incoming user requests. Lower latency indicates better performance.

Throughput: The number of tasks completed per unit of time. Higher throughput is desirable.

SLA Compliance: Percentage of requests served within SLA-defined limits (e.g., 95% within 1 s).
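The three indicators above can be computed from a list of per-request latencies and a task count. The following sketch is illustrative: the function names are hypothetical, the 1-second limit mirrors the example in the text, and the sample latencies in the usage note are made up for demonstration.

```python
def mean_latency(latencies_s):
    """Average response time in seconds."""
    return sum(latencies_s) / len(latencies_s)


def throughput(completed_tasks, elapsed_s):
    """Tasks completed per second over the measurement interval."""
    return completed_tasks / elapsed_s


def sla_compliance(latencies_s, limit_s=1.0):
    """Percentage of requests answered within the SLA latency limit."""
    within = sum(1 for x in latencies_s if x <= limit_s)
    return 100.0 * within / len(latencies_s)
```

For latencies of 0.2, 0.4, 0.9, and 1.5 seconds, three of four requests meet the 1-second limit, so compliance is 75%, which would fall short of a 95% target.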

This section reports and discusses the findings obtained by applying the AI forecasting framework to cost-effective resource management in cloud computing. The assessment covers the comparison of the forecasting models, their influence on resource consumption, cost, and system-level performance, and a comparison against conventional resource management strategies. It also highlights the main findings and the limitations of the proposed approach.

To identify the most accurate forecasting method for workload prediction, several machine learning and deep learning models were applied, including ARIMA, Random Forest, and Long Short-Term Memory (LSTM) networks. Each model was evaluated based on three performance metrics: Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), and R² score.

Table-7: Comparison of Forecasting Models.

LSTM performed better than the other models, providing the lowest error levels and the highest R² score, which indicates a strong ability to model temporal structure in workload data. As a result, LSTM was incorporated into the resource management framework.

AI-based forecasting considerably improved the utilization of CPU, memory, and network bandwidth. Because the system forecast future workload requirements accurately, it reduced both under-utilization and over-provisioning of resources, which improved cost performance and environmental sustainability.

Apart from capacity and utilization, the AI-based framework was also evaluated on its ability to keep system performance consistent under dynamic workloads. The major performance indicators were latency, throughput, and SLA compliance rate.

Table-8: System Performance Analysis.

The system's responsiveness and task processing capacity improved. SLA compliance also increased, meaning that service quality commitments were met more often, which is crucial in commercial and enterprise cloud usage.

To emphasize the value of AI-based forecasting, the proposed framework's performance was compared with that of static allocation and rule-based allocation. Although the traditional methods were simple to adopt, they could not respond to changes in workload patterns, leading either to over-provisioning of resources or to degraded performance.


Table-9: Benchmarking Against Traditional Methods.

The AI-based approach consistently outperformed others across all benchmarks, emphasizing its adaptability, efficiency,andreliabilityindynamicenvironments.

Theexperimentalresultsprovideseveralkeyinsights:

AI Integration Enhances Efficiency: Incorporating LSTM-based forecasting into resource management significantly increases utilization and reduces operational costs,demonstratingthevalueofpredictiveintelligencein cloudinfrastructure.

Dynamic Resource Allocation is Crucial: Staticandrulebased systems fail to address unpredictable workload spikes. AI-based methods enable proactive scaling, ensuring continuous service performance and resource economy.

Generalization Potential: The framework, while tested primarilywithCPUandmemorymetrics,canbeextended to other dimensions such as storage, bandwidth, and multi-tenantperformanceforecasting.

However,thestudyalsohascertainlimitations:

Computational Overhead: Deep learning models like LSTM require substantial training time and resources, whichmaynotbeidealforsmall-scalecloudplatforms.

Data Dependency: Modelaccuracyreliesheavilyonhighqualityhistoricalworkloaddata.Incaseswheresuchdata isscarceornoisy,modelperformancemaydegrade.

Real-Time Adaptability: Although the system adapts dynamically, the speed at which new data is incorporated into the model (i.e., online learning) could be improved for real-time responsiveness.
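To make the online-learning limitation concrete, the following is a minimal sketch of incremental model updating, in which each new observation adjusts the forecaster immediately instead of waiting for a full retraining cycle. It is an illustration under stated assumptions, not the paper's method: the class `OnlineARForecaster`, its parameters, and the choice of a linear autoregressive model updated by normalized least mean squares (NLMS) are hypothetical and serve as a lightweight stand-in for an LSTM.

```python
class OnlineARForecaster:
    """Hypothetical linear autoregressive forecaster updated one sample at
    a time with normalized LMS (not the LSTM used in the study)."""

    def __init__(self, lags=4, learning_rate=0.5):
        self.lags = lags
        self.learning_rate = learning_rate  # NLMS is stable for 0 < rate < 2
        self.weights = [0.0] * lags
        self.weights[-1] = 1.0  # start as "repeat the last observation"
        self.history = []

    def predict(self):
        """One-step-ahead forecast from the most recent observations."""
        if len(self.history) < self.lags:
            return self.history[-1] if self.history else 0.0
        return sum(w * x for w, x in zip(self.weights, self.history))

    def update(self, observed):
        """Incorporate a new observation: correct the weights by the
        normalized prediction error, then slide the window."""
        if len(self.history) >= self.lags:
            error = observed - self.predict()
            norm = sum(x * x for x in self.history) + 1e-9
            self.weights = [
                w + self.learning_rate * error * x / norm
                for w, x in zip(self.weights, self.history)
            ]
        self.history.append(observed)
        del self.history[:-self.lags]  # keep only the last `lags` values


# Usage: predict first, then update with the value actually observed.
model = OnlineARForecaster(lags=1)
workload = [100 * 1.05 ** t for t in range(30)]  # hypothetical growing load
for value in workload:
    forecast = model.predict()
    model.update(value)
print(f"learned growth factor: {model.weights[0]:.4f}")
```

Each update costs a handful of arithmetic operations, so the model can follow a drifting workload between full retraining runs. Whether a comparable incremental scheme is practical for the LSTM-based forecaster is the open question this limitation refers to.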

This paper examined AI-based forecasting for cost-efficient resource management in cloud computing. By integrating machine learning and deep learning models, especially LSTM, into a dynamic resource allocation system, the study showed that predictive analytics can improve both performance and cost-effectiveness in cloud systems. The proposed model predicts resource requirements from past workload behavior so that resources can be provisioned at the right time and at an appropriate level. Evaluation in both simulated and real cloud environments showed notable improvements in key performance indicators such as cost optimization, resource utilization, system throughput, and SLA compliance. Compared with traditional static and rule-based systems, the AI-powered framework offered a more intelligent, scalable, and adaptive approach to resource management, and is therefore better suited to today's dynamic and unpredictable workload environments.

Although the study shows positive results, it has its own limitations. The substantial computational power and training time required by deep learning models such as LSTM are a significant limitation, because such models may not suit real-time applications in smaller cloud infrastructures with limited resources. The performance of the framework also depends critically on historical workload data. When the available data are sparse or inconsistent, the accuracy of the predictions, and consequently the effectiveness of resource use, may be at risk. Another drawback is that the scope is limited to IaaS models and does not cover PaaS or SaaS environments, where resource dynamics and management challenges differ. Moreover, the existing system operates in near real time, but a full implementation of online learning remains a challenge to be explored further. Overcoming these limitations could lead to stronger, more generalized, production-ready methods for optimizing cloud resources using AI.
