Automation Agent For Task Completion

Surabhi M¹, Mehraj Fathima Ansari², Umme Kulsum Ansari³, Muskan Sahani⁴, Ms. Ambili K⁵

¹⁻⁴ Students, Department of CSE-AIML, AMC Engineering College, Bengaluru, KARNATAKA, INDIA

⁵ Assistant Professor, Department of CSE-AIML, AMC Engineering College, Bengaluru, KARNATAKA, INDIA

Abstract - With the increasing complexity of applications and repetitive tasks like grocery ordering, food ordering, cab booking, and social media interactions, there is a growing demand for intelligent automation solutions. This paper presents the design and development of an Automation Agent that enables users to perform multi-step tasks across web applications using text or voice. The system leverages Gemini for interpreting user intent and generating dynamic task flows, while UiPath is used to automate interactions within apps.

The architecture is modular, comprising four key components: a native web interface for capturing input and initiating actions, a cloud-based AI interpreter for understanding tasks, a backend service for maintaining user memory and preferences, and an automation engine that converts planned steps into real-time UI actions. By integrating technologies such as MongoDB and Google’s Gemini model, the assistant adapts its responses to the user and automates workflows dynamically.

Key Words: Automation Agent, Gemini, UiPath, Task Automation, Voice Commands, Natural Language Processing

1. INTRODUCTION

In today’s mobile-centric world, users increasingly depend on applications for daily needs such as booking rides on Ola/Uber, ordering food, shopping for groceries, and messaging or calling on WhatsApp. Despite advances in voice assistants, these routine tasks often require repetitive navigation across app interfaces, filling out forms, and confirming actions manually. This not only consumes time but also presents usability challenges for users with limited digital literacy or accessibility needs. The demand for a more intelligent and user-friendly solution is growing rapidly.

1.1 Problem Statement

Traditional virtual assistants like Google Assistant and Siri are limited in their ability to perform complex tasks inside third-party applications. These systems are primarily built around predefined voice shortcuts or app-level integrations and often cannot adapt to changing user interfaces, routine-based tasks, or complex workflows. They also lack robust interaction capabilities and cannot autonomously execute a sequence of steps across different apps. Existing assistants cannot perform deep, personalized, cross-application automation such as booking cabs, ordering food, or sending messages. There is a clear need for a unified AI-driven system that understands natural language, works across different platforms, and automates repetitive tasks efficiently.

Objectives

Develop a voice/text-based AI assistant using Gemini.

Automate app workflows without using external APIs.

Learn user preferences to personalize responses.

1.2 Significance

The system simplifies application interactions, especially for users with limited digital skills or accessibility needs. It enhances productivity by enabling hands-free control and personalized automation of everyday tasks.

1.3 Scope

The automation agent is designed for web apps and interacts with services such as Ola/Uber, Blinkit, and WhatsApp. It navigates app interfaces in real time using screen content, without relying on external APIs. A backend stores task history and user preferences for personalized automation.
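Navigating “using screen content” means the agent has to locate a control by what it reads on screen rather than through an app API. A minimal sketch of one way to do that, assuming a hypothetical `ScreenElement` record (label plus coordinates) produced by whatever screen-reading step is in use. The fuzzy label match uses Python’s standard `difflib.SequenceMatcher`, and the 0.6 threshold is an arbitrary illustrative value, not the system’s setting.

```python
from __future__ import annotations

from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class ScreenElement:
    """One control read from the current screen (hypothetical shape)."""
    label: str
    x: int
    y: int


def similarity(a: str, b: str) -> float:
    # Case-insensitive character-level similarity in [0, 1].
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_element(elements: list[ScreenElement], target: str,
                 threshold: float = 0.6) -> ScreenElement | None:
    """Return the element whose label best matches `target`.

    Returns None when no label is similar enough (threshold is illustrative),
    which is the cue to re-read the screen or ask the user instead of
    clicking blindly, e.g. after a UI layout change.
    """
    best = max(elements, key=lambda e: similarity(e.label, target), default=None)
    if best is None or similarity(best.label, target) < threshold:
        return None
    return best


# Usage: labels as they might be read from a ride-booking page.
screen = [ScreenElement("Search", 40, 80),
          ScreenElement("Book Ride", 180, 620),
          ScreenElement("Cancel", 300, 620)]
match = find_element(screen, "book ride")
```

Matching on visible labels rather than fixed coordinates is what lets the same step survive small layout shifts; a label that disappears entirely still has to be handled by the caller.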

2. LITERATURE REVIEW

The paper [1] presents a novel approach that combines vision-based UI understanding with large language model planning by translating screenshots of mobile app interfaces into natural language descriptions. This enables task automation without requiring access to the underlying app view hierarchies, making it suitable for more restricted or closed environments. Despite its innovation, the system faces challenges with animated or frequently changing user interfaces, which may lead to decreased accuracy, and it does not currently support personalized user workflows.

The paper [2] reveals that AI techniques, especially pre-trained deep-learning models, are widely used in areas such as personalization and media processing. However, it highlights significant gaps, including the lack of real-time adaptive learning and agent-driven task execution within mobile environments. Furthermore, the study points out the absence of integration with large language models for natural language command interpretation, emphasizing a need for intelligent systems that can interact more fluidly and autonomously on users’ behalf.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

The paper [3] integrates large language models with mobile UI automation to enable the execution of complex in-app tasks such as ticket bookings and payments. It proposes a comprehensive system that parses instructions, decomposes tasks into actionable steps, detects UI elements, and predicts appropriate user actions. The approach effectively combines natural language understanding with UI manipulation to automate multi-step workflows within specific applications. However, the system’s applicability is limited to those apps, with minimal generalization across different platforms. Furthermore, it lacks mechanisms for retaining long-term user preferences or adapting dynamically to changes in app interfaces, which affects robustness and personalization.

The paper [4] surveys recent advances in chatbot technology, tracing development from early rule-based systems to modern transformer-based models. It discusses challenges such as maintaining context in multi-turn conversations, enhancing user engagement, and improving intent recognition. While the review covers various chatbot architectures and capabilities, it identifies a critical limitation: the current focus is predominantly on text-based dialogue systems, without addressing the execution of real-world tasks or device-level automation. There is a lack of exploration into chatbots functioning as proactive personal assistants capable of controlling multiple applications and performing complex tasks across platforms.

3. PROPOSED SYSTEM

The proposed system is an AI-powered automation agent that understands English commands to perform tasks such as WhatsApp messaging and calls, ordering groceries or products, playing music, setting alarms, and ordering food. It uses a large language model to interpret voice or text input and leverages UiPath RPA to navigate app interfaces, detect UI elements, and perform user-like actions such as taps and scrolls. MongoDB is integrated to store user preferences and interaction history, allowing for basic personalization and continuity across sessions. The system also provides real-time voice feedback. Unlike conventional assistants limited to specific apps or commands, it offers app-independent automation by dynamically analyzing and interacting with different app layouts. It aims to deliver a real-time, flexible, and user-focused solution for mobile task automation.
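Interpreting voice or text input with an LLM usually means asking the model for a structured answer the automation layer can act on. The sketch below builds such a prompt; the JSON keys (`app`, `action`, `params`) and the `SUPPORTED_ACTIONS` whitelist are assumptions for illustration, since the paper does not publish its prompt. The resulting string would then be sent to Gemini; the Gemini API can also be asked to return JSON directly by setting the response MIME type to `application/json` in the generation config.

```python
import json

# Hypothetical action whitelist derived from the tasks listed in this paper;
# the system's real prompt and schema are not published.
SUPPORTED_ACTIONS = {
    "whatsapp": ["send_message", "voice_call", "video_call"],
    "ola": ["book_ride"],
    "blinkit": ["order_product"],
    "eatsure": ["order_food"],
    "youtube_music": ["play_song"],
    "alarm": ["set_alarm"],
}


def build_intent_prompt(command: str, preferences: dict) -> str:
    """Ask the LLM for a single JSON object describing the task."""
    return "\n".join([
        'Convert the user command into one JSON object with keys '
        '"app", "action" and "params".',
        "Allowed apps and actions: " + json.dumps(SUPPORTED_ACTIONS),
        "Known user preferences: " + json.dumps(preferences),
        "If a required detail is missing, use the matching preference.",
        "Reply with JSON only.",
        "Command: " + command,
    ])


prompt = build_intent_prompt("Book cab from Jayanagar to Electronic City",
                             {"ride_type": "auto"})
```

Listing the allowed apps and actions narrows the model’s output to tasks that actually have an automation workflow, and passing stored preferences lets it fill details (such as a preferred ride type) that the user did not say.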

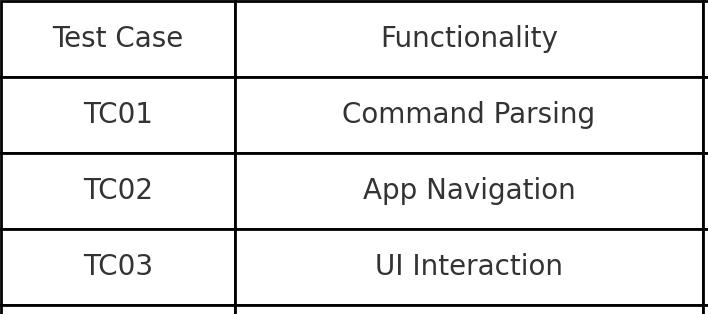

Table 1: Functional Test Cases – Input Commands and Expected Outcomes

| Input Example | Expected Outcome |
|---|---|
| “Book cab from Jayanagar to Electronic City” | Ola/Uber opens, pickup and drop set, ride options shown |
| “Play Pal Pal song on YouTube Music” | YouTube Music opens, “Pal Pal” searched, song plays |
| “Send ‘hi’ message to Reena” | WhatsApp opens, Reena’s chat selected, “hi” sent |

4. SYSTEM ARCHITECTURE

The system consists of four main components: the application interface, the language processing module, the task planner, and the automation engine. User input is captured via voice or text in the application. The input is processed to identify intent, which the task planner converts into step-by-step actions. These actions are executed using UiPath RPA to navigate and control other apps. A memory module stores user preferences for personalized responses.
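The flow between these components can be pictured as a small pipeline in which each module sits behind an interface, so that Gemini, the planner, UiPath and MongoDB can each be replaced by a stand-in during testing. This is a minimal sketch under that assumption; the class and method names (`interpret`, `plan`, `execute`, `log`) are hypothetical and do not come from the project’s code.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Intent:
    app: str
    action: str
    params: dict = field(default_factory=dict)


class LanguageModule(Protocol):
    def interpret(self, text: str) -> Intent: ...


class TaskPlanner(Protocol):
    def plan(self, intent: Intent) -> list[str]: ...


class AutomationEngine(Protocol):
    def execute(self, step: str) -> bool: ...


class MemoryModule(Protocol):
    def log(self, text: str, intent: Intent, ok: bool) -> None: ...


class Agent:
    """Passes one command through the modules in order."""

    def __init__(self, language: LanguageModule, planner: TaskPlanner,
                 engine: AutomationEngine, memory: MemoryModule) -> None:
        self.language = language
        self.planner = planner
        self.engine = engine
        self.memory = memory

    def handle(self, text: str) -> bool:
        intent = self.language.interpret(text)   # identify intent
        steps = self.planner.plan(intent)        # step-by-step actions
        # all() stops at the first step that reports failure.
        ok = all(self.engine.execute(step) for step in steps)
        self.memory.log(text, intent, ok)        # keep history for personalization
        return ok


# Usage with hypothetical stand-ins for Gemini, the planner, UiPath and MongoDB.
class FixedLanguage:
    def interpret(self, text: str) -> Intent:
        return Intent("whatsapp", "send_message", {"contact": "Riya", "message": "hi"})


class WhatsAppPlanner:
    def plan(self, intent: Intent) -> list[str]:
        p = intent.params
        return ["open WhatsApp", f"open chat {p['contact']}",
                f"type {p['message']}", "tap send"]


class RecordingEngine:
    def __init__(self) -> None:
        self.done: list[str] = []

    def execute(self, step: str) -> bool:
        self.done.append(step)
        return True


class ListMemory:
    def __init__(self) -> None:
        self.entries: list[tuple[str, str, bool]] = []

    def log(self, text: str, intent: Intent, ok: bool) -> None:
        self.entries.append((text, intent.action, ok))


engine, memory = RecordingEngine(), ListMemory()
agent = Agent(FixedLanguage(), WhatsAppPlanner(), engine, memory)
ok = agent.handle("Send a message to Riya on WhatsApp")
```

Keeping the modules behind narrow interfaces is what allows the per-module testing described in the methodology: each stand-in can be swapped for the real component without touching `Agent`.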

5. RESEARCH METHODOLOGY

Research methodology refers to the structured process and techniques adopted to design, implement, and evaluate the proposed on-device Automated Agent. It ensures that each phase, from problem identification to system testing, is carried out systematically to achieve accurate, reliable, and replicable results.

5.1 Methodology Framework

The proposed on-device Automated Agent is developed using a structured methodology that combines usercentered design with agile development principles. The process begins with a detailed analysis of existing limitationsincurrentvirtualassistantsystems,particularly their inability to handle multi-app automation. Based on these observations, system requirements are defined, focusing on real-time command processing, app-agnostic execution, and user personalization. Key technologies are selectedaccordingly largelanguage

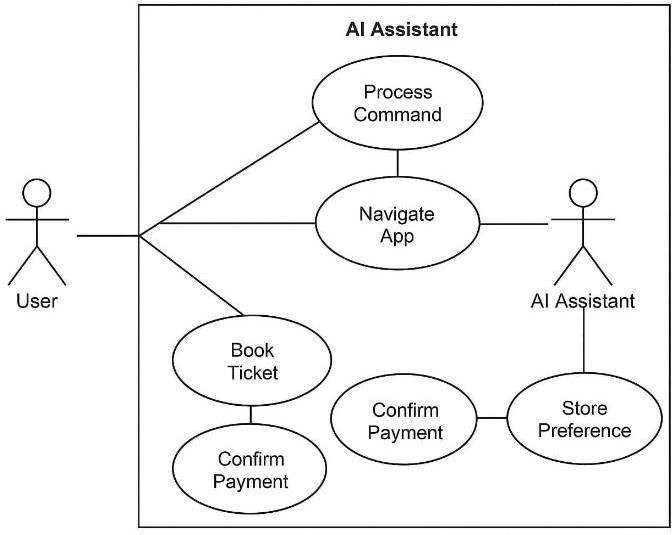

Fig 1: UserUseCaseDiagram

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net

models (LLMs) are used for natural language understanding.

Service enables UI-level control, and MongoDB provides cloud-basedstorageforuserpreferences.

Each core function of the agent is implemented as a modular component: one handles input interpretation, another manages interaction with web app UIs, and a backend module stores and recalls user data. This modular approach allows each unit to be developed and tested independently, ensuring flexibility and ease of debugging. Once the modules are validated individually, they are integrated and evaluated through scenario-based simulations such as booking a cab or ordering food. This methodology ensures that the final system is dynamic, responsive, and capable of real-world mobile automation across diverse applications.
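The backend module that stores and recalls user data can be developed and tested on its own against a simple in-memory stand-in before being pointed at MongoDB. The sketch below is such a stand-in; the class and method names are hypothetical. In a real MongoDB backend the two kinds of write would correspond roughly to an upsert, for example pymongo’s `update_one(filter, {"$set": {...}}, upsert=True)` or the Node.js driver’s `updateOne(filter, update, { upsert: true })`, and to an `insert_one`/`insertOne` into a history collection.

```python
from __future__ import annotations

import time
from collections import defaultdict


class InMemoryUserStore:
    """Dictionary stand-in (hypothetical) for the backend's MongoDB collections.

    set_preference mirrors an upsert: the first write creates the user's
    record, later writes overwrite single keys. log_task mirrors an insert
    into a history collection.
    """

    def __init__(self) -> None:
        self._prefs: defaultdict[str, dict] = defaultdict(dict)
        self._history: defaultdict[str, list[dict]] = defaultdict(list)

    def set_preference(self, user_id: str, key: str, value: object) -> None:
        self._prefs[user_id][key] = value

    def get_preferences(self, user_id: str) -> dict:
        # .get() avoids creating an empty record for unknown users.
        return dict(self._prefs.get(user_id, {}))

    def log_task(self, user_id: str, command: str, status: str) -> None:
        self._history[user_id].append(
            {"command": command, "status": status, "ts": time.time()})

    def recent_commands(self, user_id: str, n: int = 5) -> list[str]:
        return [entry["command"] for entry in self._history.get(user_id, [])[-n:]]


store = InMemoryUserStore()
store.set_preference("user-1", "ride_type", "auto")
store.set_preference("user-1", "ride_type", "mini")   # overwrites the earlier value
store.log_task("user-1", "Book cab from Jayanagar to Electronic City", "success")
store.log_task("user-1", "Order a Pen from Blinkit", "success")
```

Because the rest of the agent only calls these four methods, the same scenario tests can later run against the MongoDB-backed version unchanged.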

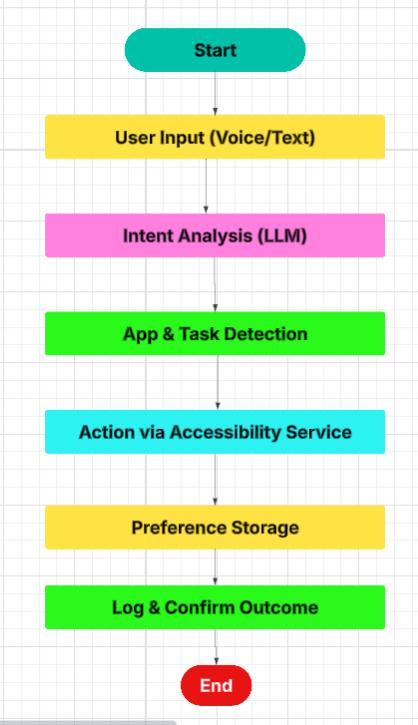

5.2 Methodology Flowchart

The overall methodology flow can be described in the following stages:

Step 1: User provides a command via voice or text.

Step 2: The LLM processes the command to understand intent.

Step 3: System identifies the target app and specific task.

Step 4: UiPath RPA executes actions on the app UI.

Step 5: MongoDB stores and retrieves user preferences.

Step 6: Task outcome is confirmed and logged for future reference.
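Steps 3 and 4 need a mapping from the recognized intent to a concrete UiPath workflow and its input arguments. A minimal sketch, assuming a lookup table keyed by `(app, action)`; the workflow file names and argument names are hypothetical, and how the chosen workflow is actually launched (for example through UiPath Orchestrator or a local robot) is left out.

```python
from __future__ import annotations

# Hypothetical routing table: (app, action) -> (UiPath workflow file,
# arguments it needs). The file names are illustrative, not the project's.
WORKFLOWS: dict[tuple[str, str], tuple[str, list[str]]] = {
    ("whatsapp", "send_message"): ("WhatsApp_SendMessage.xaml", ["contact", "message"]),
    ("whatsapp", "voice_call"): ("WhatsApp_VoiceCall.xaml", ["contact"]),
    ("ola", "book_ride"): ("Ola_BookRide.xaml", ["pickup", "drop"]),
    ("blinkit", "order_product"): ("Blinkit_OrderProduct.xaml", ["product"]),
    ("youtube_music", "play_song"): ("YouTubeMusic_Play.xaml", ["song"]),
}


class UnsupportedTask(Exception):
    """No workflow exists for the requested app/action pair."""


def select_workflow(app: str, action: str, params: dict) -> tuple[str, dict]:
    """Step 3: pick the workflow and the input arguments for Step 4."""
    key = (app.lower(), action.lower())
    if key not in WORKFLOWS:
        raise UnsupportedTask(f"no workflow for {app}/{action}")
    workflow, required = WORKFLOWS[key]
    missing = [name for name in required if not params.get(name)]
    if missing:
        # These could be filled from stored preferences (Step 5) or by asking the user.
        raise ValueError(f"missing parameters: {missing}")
    # Pass only the arguments the workflow declares.
    return workflow, {name: params[name] for name in required}


workflow, args = select_workflow("WhatsApp", "send_message",
                                 {"contact": "Riya", "message": "hi", "tone": "casual"})
```

Failing early on a missing argument keeps the robot from opening an app and stalling halfway through a form; the missing values are exactly what stored preferences, or a follow-up question, would supply.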

6. IMPLEMENTATION

The automation agent was developed using:

Frontend: React Native (Expo CLI)

Backend: Node.js

AI Model: Gemini (Google AI Studio)

Automation: UiPath Studio (Community Edition)

Database: MongoDB

Tools: VS Code

Workflow

User → Voice/Text → Gemini → Intent Extraction → UiPath Workflow → MongoDB Logging → Response to User

Example

Command “Send a message to Riya on WhatsApp” → Gemini extracts intent → UiPath executes WhatsApp workflow → Confirmation logged in MongoDB.
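The “Gemini extracts intent” step has to turn free-form model output into something the workflow layer can trust. A minimal sketch of that parsing and validation, assuming the model was asked to reply with a JSON object containing `app`, `action` and `params` (an assumed schema; the paper does not specify one). The reply string in the usage line is invented for illustration.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field


@dataclass
class Intent:
    app: str
    action: str
    params: dict = field(default_factory=dict)


def parse_intent(reply: str) -> Intent:
    """Turn an LLM reply that should contain one JSON object into an Intent.

    Replies sometimes wrap the JSON in prose, so only the text between the
    first "{" and the last "}" is parsed.
    """
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object in model reply")
    data = json.loads(reply[start:end + 1])   # json.JSONDecodeError is a ValueError
    app, action = data.get("app"), data.get("action")
    if not isinstance(app, str) or not isinstance(action, str):
        raise ValueError("reply lacks string 'app' and 'action' fields")
    params = data.get("params") or {}
    if not isinstance(params, dict):
        raise ValueError("'params' must be a JSON object")
    return Intent(app.strip().lower(), action.strip().lower(), params)


# Usage with a made-up reply for the example command above.
reply = ('Here is the task: {"app": "WhatsApp", "action": "send_message", '
         '"params": {"contact": "Riya", "message": "Hi"}}')
intent = parse_intent(reply)
```

Rejecting malformed replies here, rather than inside the UiPath workflow, means a bad model answer produces a clear error message instead of a half-executed UI sequence.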

7. RESULTS AND DISCUSSIONS

The agent was tested across multiple real-world applications such as EatSure, Blinkit, and WhatsApp (messaging and calling).

Command Expected Outcome Actual Result

Olaworkflow reference.

“BookCabfrom Ola”

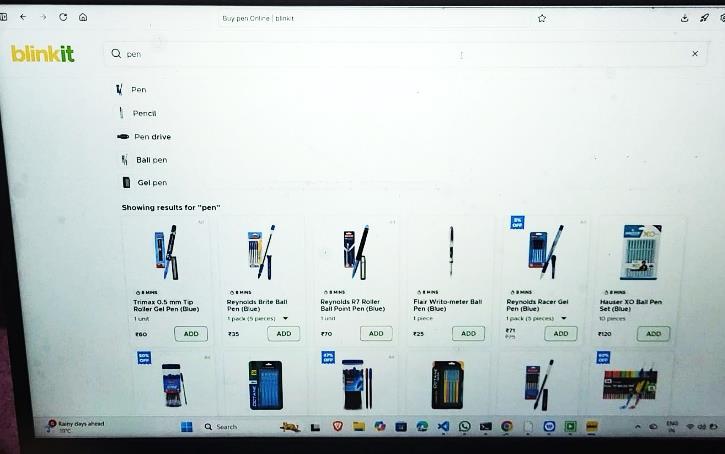

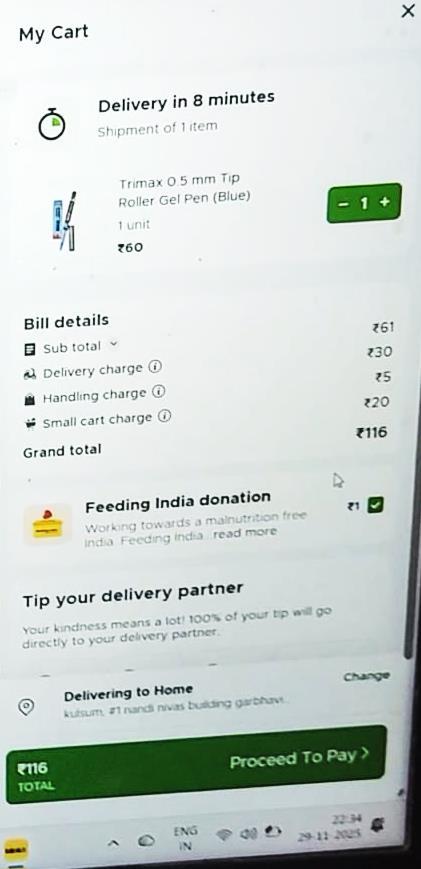

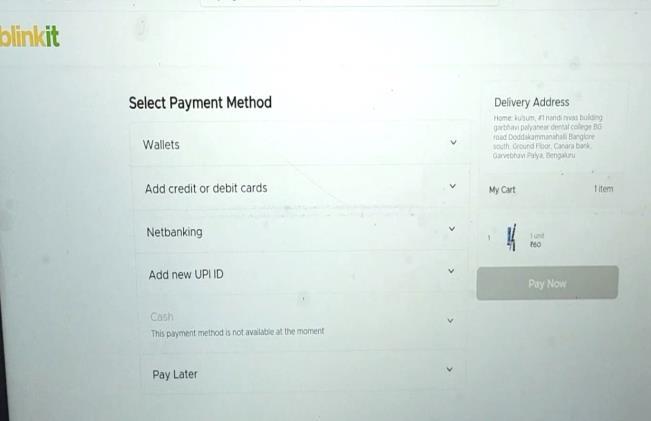

“OrderaPen fromBlinkit”

“Orderfoodfrom mycart” “Sendmessageto

Triggered,Cab booked Itemfetchedand addedtobasket

EatSureworkflow executed

Messagesent

Success

Success

Partial(delay incheckout) Riyaon WhatsApp” successfully

Success “CallRahulon WhatsApp” WhatsAppvoicecall initiated

7.1 Performance Summary

Task Success Rate: 93%

Success

Average Response Time: 4.1seconds

Error Rate: 7%

User Satisfaction: 9/10

Voice Accuracy: 92%
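Summary figures like these can be derived from a per-trial log. A minimal sketch, assuming a hypothetical `Trial` record per test run; counting a “Partial” outcome as a failure is an assumption, since the paper does not say how partial runs were scored. Error rate is taken as the complement of success rate, which matches the 93% / 7% pair reported above.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    app: str
    succeeded: bool      # assumption: a "Partial" run is recorded as False
    response_s: float    # seconds from command to confirmation


def summarize(trials: list[Trial]) -> dict[str, float]:
    """Overall success rate, error rate and mean response time."""
    if not trials:
        raise ValueError("no trials recorded")
    success = sum(t.succeeded for t in trials) / len(trials)
    return {
        "success_rate": success,
        "error_rate": 1 - success,   # every trial is either a success or an error
        "avg_response_s": mean(t.response_s for t in trials),
    }


def per_app_success(trials: list[Trial]) -> dict[str, float]:
    """Success rate per app, as in an app-wise breakdown."""
    outcomes: defaultdict[str, list[bool]] = defaultdict(list)
    for t in trials:
        outcomes[t.app].append(t.succeeded)
    return {app: sum(v) / len(v) for app, v in outcomes.items()}
```

Recording one `Trial` per run also makes it possible to report the number of trials behind each percentage, which is what a reader needs to judge how stable the figures are.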

Observation

Gemini improved intent recognition accuracy and handled user inputs effectively. The system achieved greater automation flexibility than traditional assistants like Google Assistant or Siri.

Fig 2: Flowchart illustrating task automation via LLM and RPA


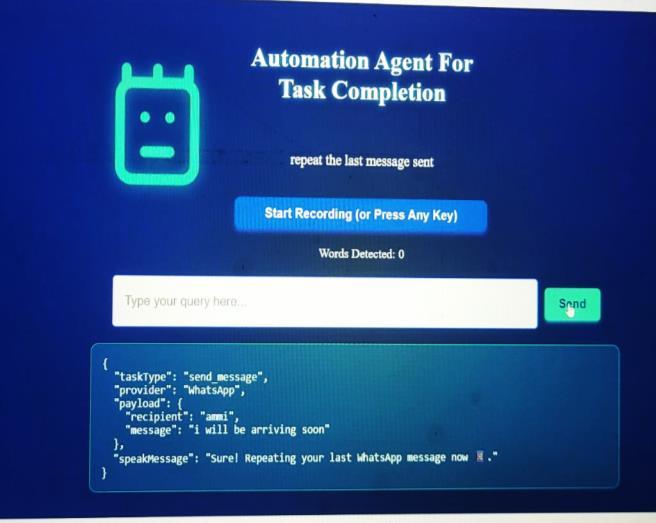

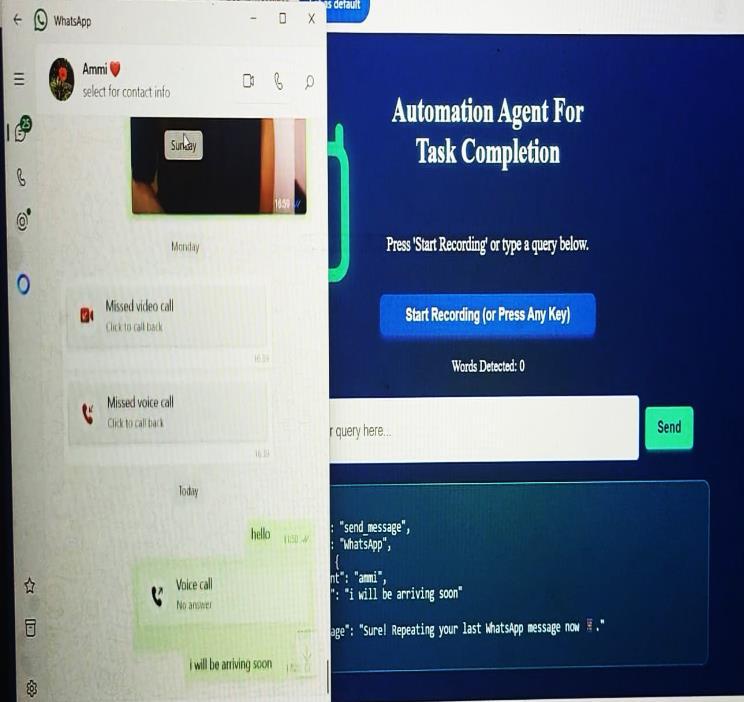

Fig 7 3 Automated workflow sending a WhatsApp message to recipient Ammi

Fig 7 4 Intent-driven WhatsApp message sent stating I’ll be arriving soon

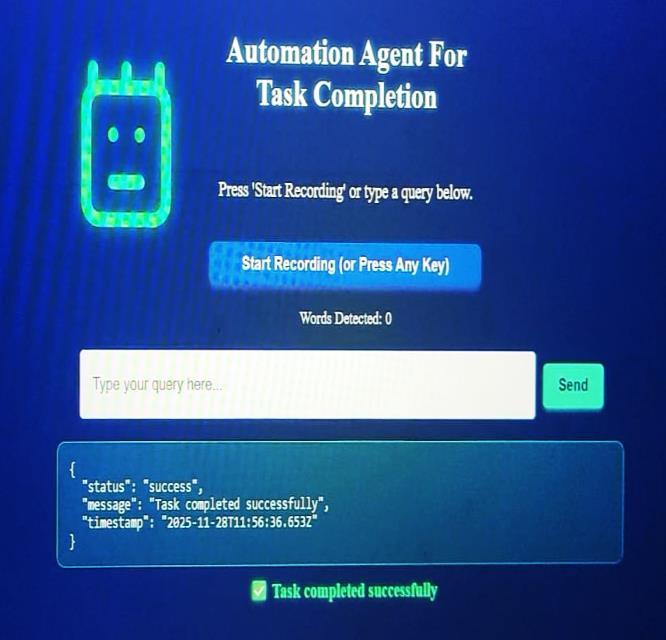

Fig 7 5 Automation Agent executed the task successfully

Fig 7 1 Welcome Page

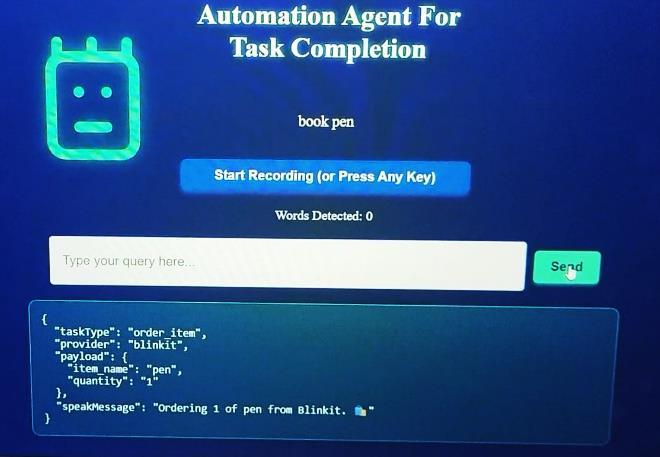

Fig 7.2 Automated Agent Interface

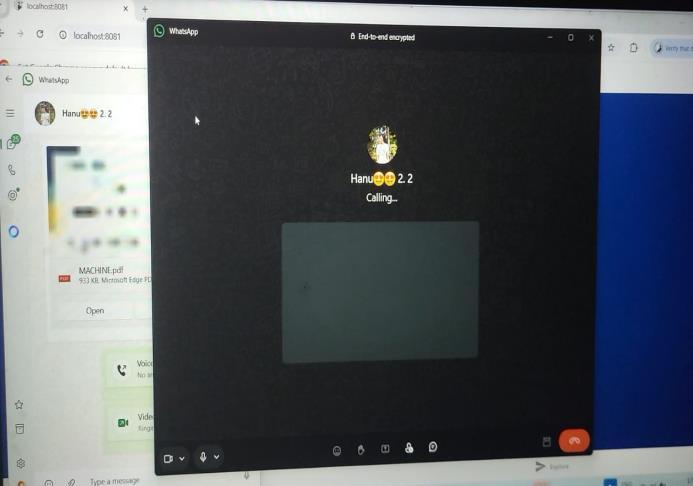

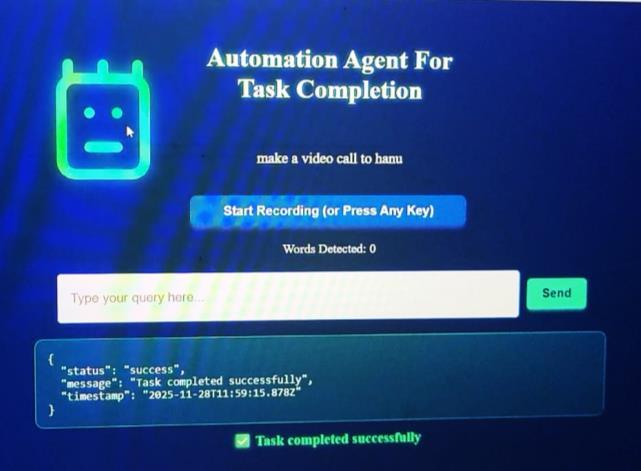

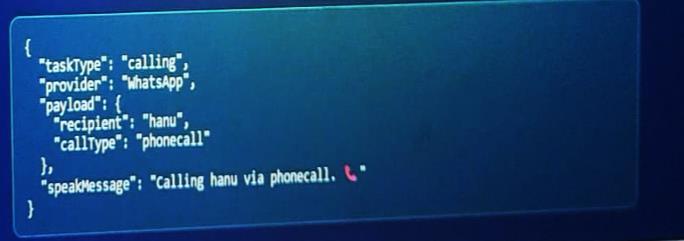

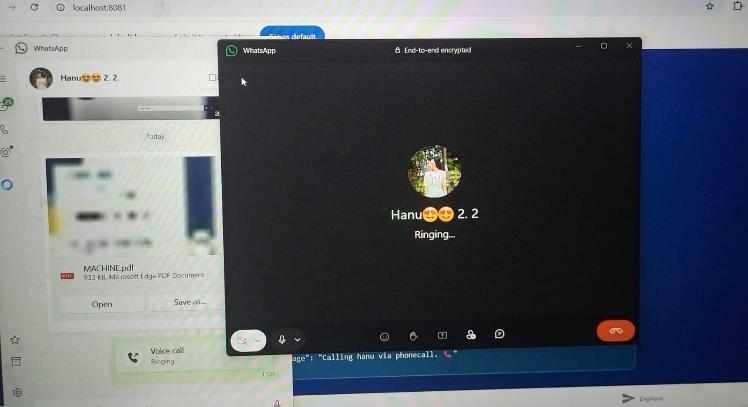

Fig 7 8 Automated WhatsApp workflow placing a Phone call to recipient Hanu

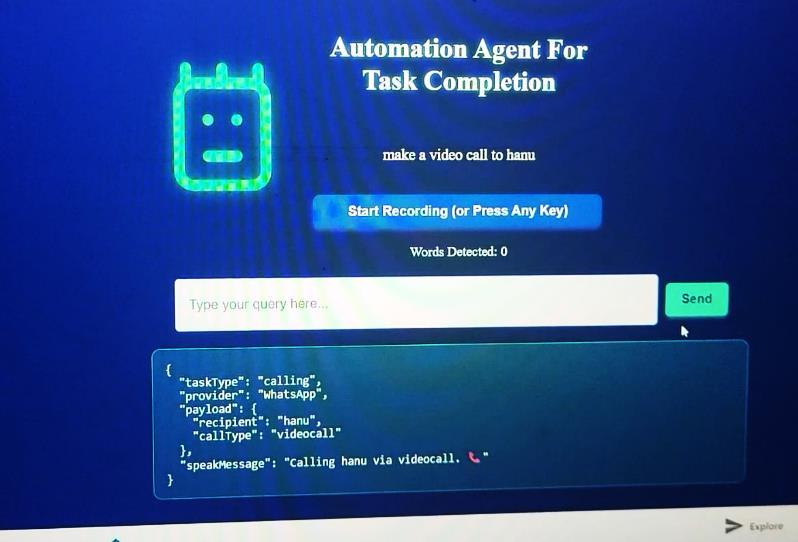

Fig 7 6 Automated WhatsApp workflow placing Vedio call to recipient Hanu

Fig 7 7 Automation Agent executed the task successfully

Fig 7.9 Automation Agent executed the task successfully

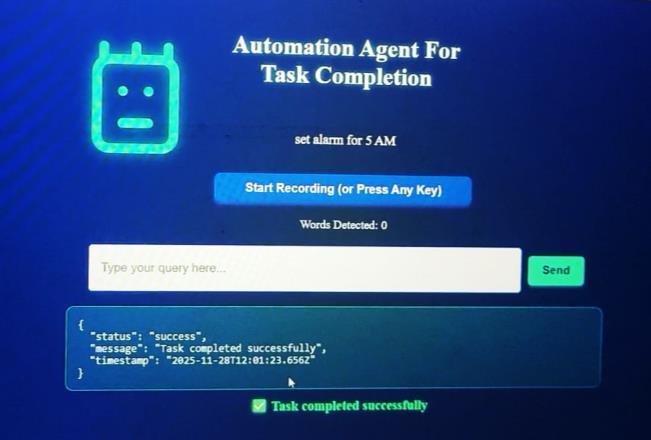

Fig 7 11 Automation Agent executed the task successfully

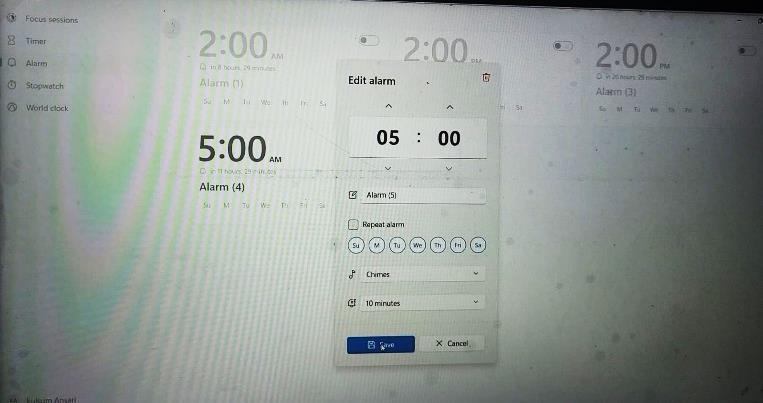

Fig 7 10 Automated Alarm setup workflow for 5:00 am

International Research Journal of Engineering and Technology (IRJET) e-ISSN:

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net

And

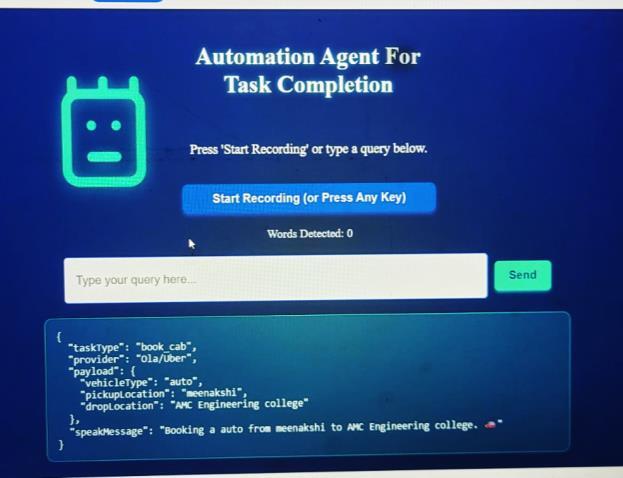

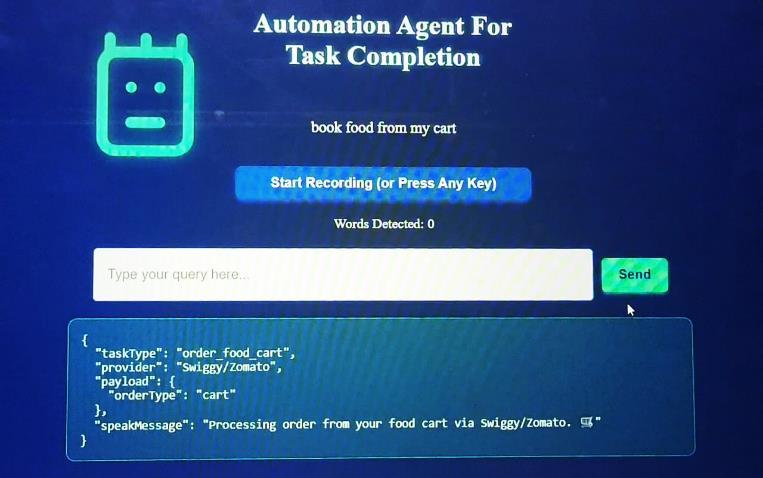

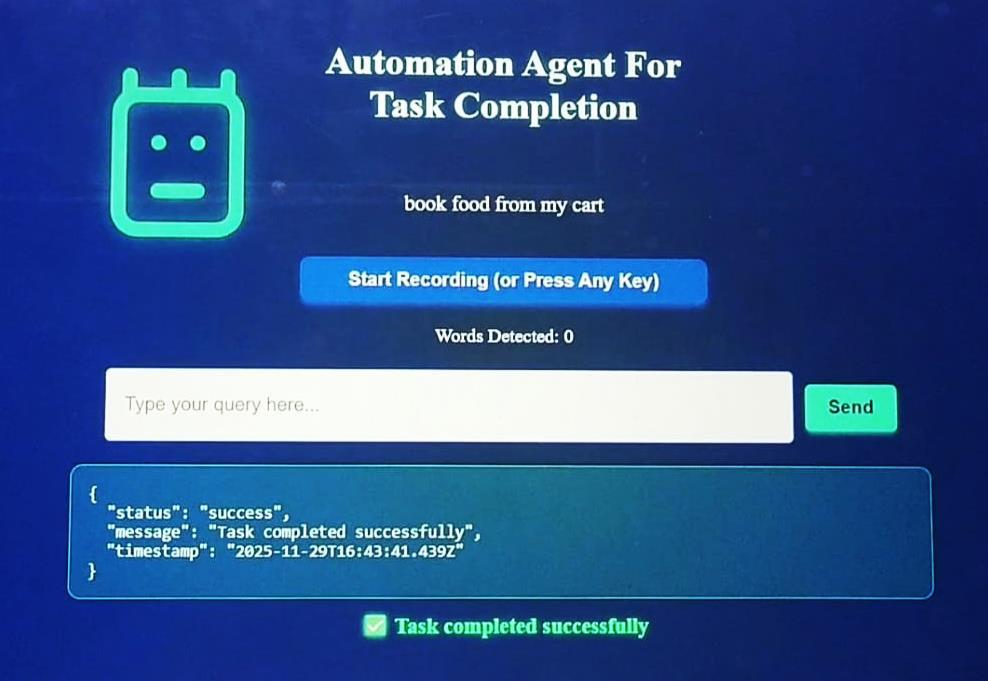

Fig 7.14 Capturing User Intent Through The Task Agent Interface

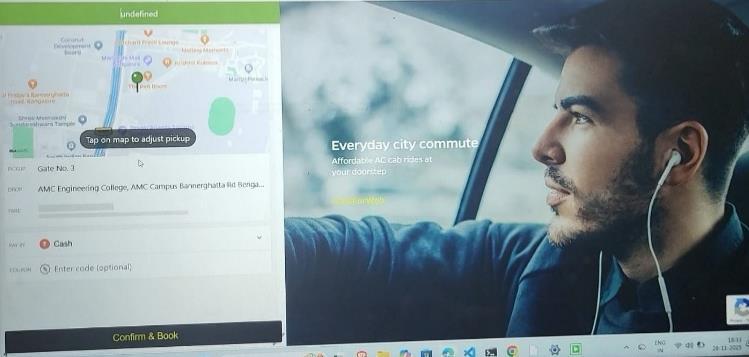

Performing Cab/Auto Booking On The Ola Web

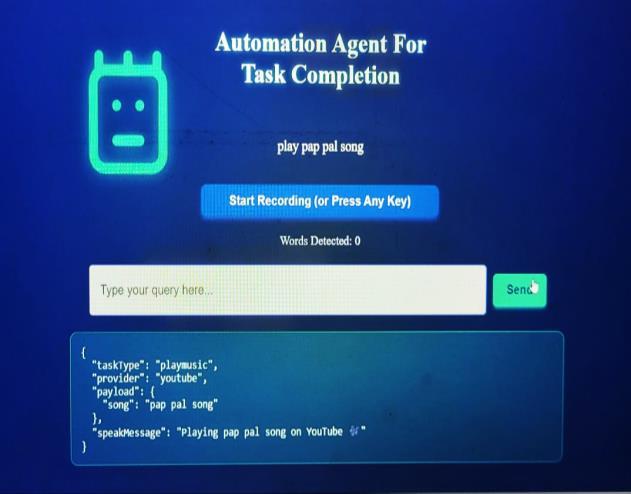

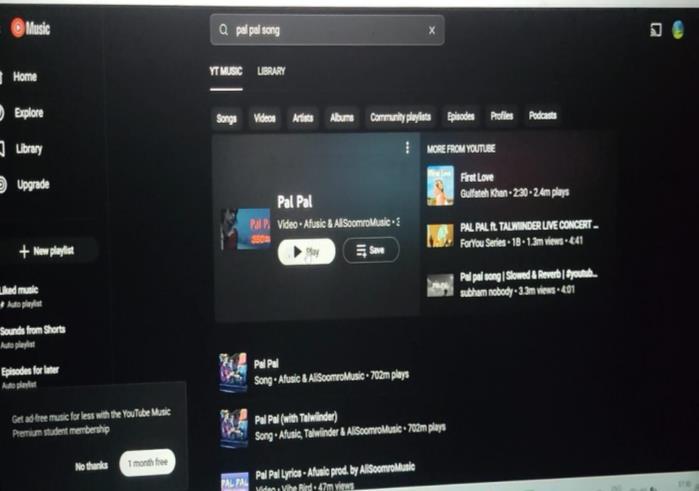

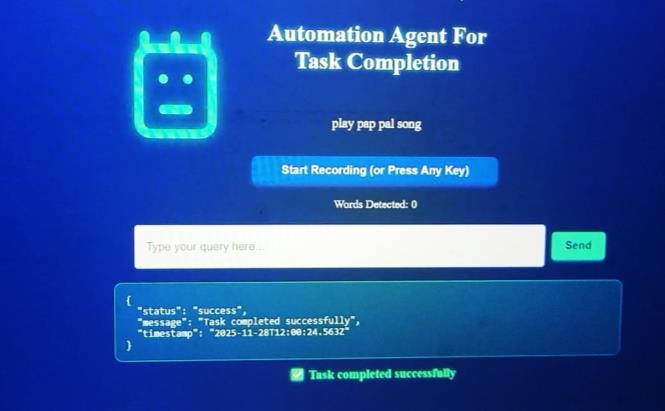

Fig 7.12 Automated Music Playback Via YouTube Music- Playing Pal Pal Song

Fig 7.13 Automation Agent executed the task successfully

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

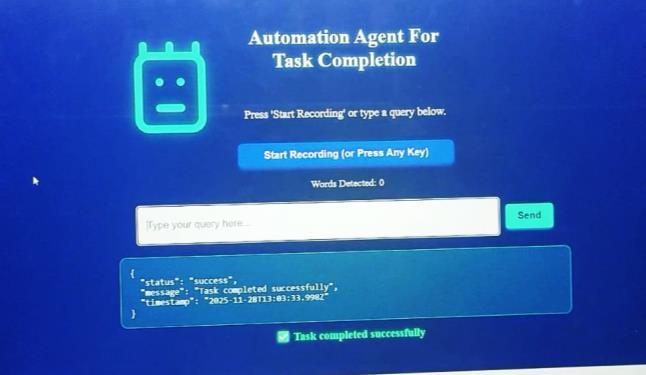

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net

Fig 7 16 Automated Blinkit Workflow For Ordering A Product

Fig 7 18 Selecting Payment Method

Fig 7 15 Automation Agent executed the task successfully

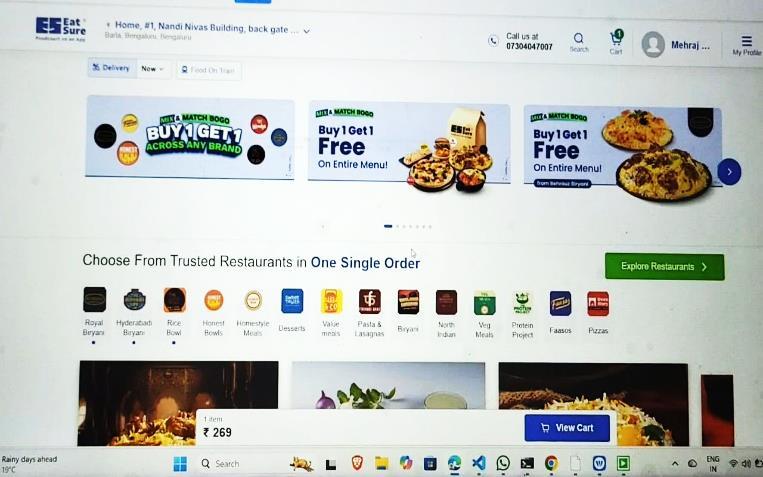

Fig 7.17 Product Added to My Cart and proceed to Pay

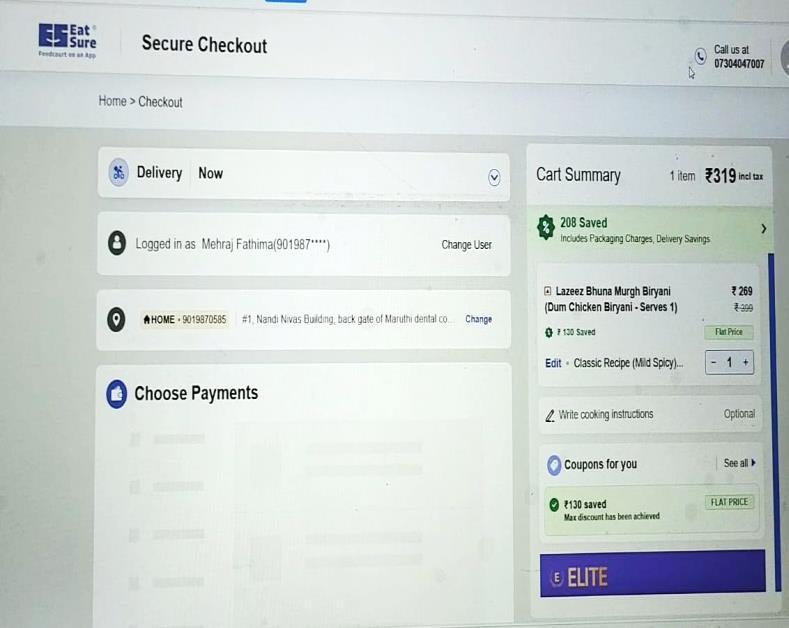

Fig 7.19 Automated EatSure Workflow For Ordering Food

Fig 7.20 EatSure Food Checkout with Payment Options

Fig 7.21 Automation Agent executed the task successfully

8. UNIQUENESS AND DISTINCTIVE FEATURES

This system combines natural-language understanding with RPA to perform end-to-end task automation. Unlike standard assistants, it executes actions directly within apps through UiPath workflows instead of offering suggestions. It features accessibility-focused voice guidance, dynamic intent extraction, fast workflow execution, and support for multiple service providers. Its modular, extensible design enables personalized automation and works across apps without APIs. This hybrid AI-RPA approach offers greater flexibility, depth, and reliability than conventional assistants.

9. SYSTEM EVALUATION/TESTING

Testing focused on functional correctness, usability, and performance reliability across different task categories.

| Test ID | Scenario | Result |
|---|---|---|
| TC01 | | |
| TC02 | Intent detection via Gemini | 92% |
| TC03 | Workflow execution via UiPath | 90% |
| TC04 | API communication with MongoDB | Successful |
| TC05 | Command processing | Successful |

Testing Outcomes

The system consistently executed automation tasks for ordering, messaging, and calling. MongoDB ensured stable storage and retrieval of task data. Minor issues occurred when app UI layouts changed and when speech input was given in noisy environments.

10. SYSTEM PERFORMANCE

App-wise Success Rate

EatSure: 90%, Alarm: 85%, YouTube Music: 89%, Blinkit: 91%, WhatsApp Message: 96%, WhatsApp Call: 93%.
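As a simple arithmetic check on the figures above, the equal-weight mean of the six app-wise success rates is:

$$
\bar{s} = \frac{90 + 85 + 89 + 91 + 96 + 93}{6} = \frac{544}{6} \approx 90.7\%
$$

Since the number of trials per app is not reported, this unweighted mean need not equal the overall 93% task success rate in Section 7.1, which would weight each app by how often it was tested.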

Observation

Gemini’s language understanding improved accuracy by ~14% compared with rule-based NLP. UiPath workflows ensured consistent execution.
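The comparison above is against rule-based NLP, whose exact form is not described. For illustration, a hypothetical baseline of the usual kind is sketched below: one regular expression per command shape, filling slots from named groups. It handles the exact phrasings it was written for, while a paraphrase falls through, which is the kind of gap an LLM-based interpreter is meant to close.

```python
from __future__ import annotations

import re

# Hypothetical hand-written rules, one regular expression per command shape.
RULES = [
    (re.compile(r"book (?:a )?cab from (?P<pickup>.+) to (?P<drop>.+)", re.IGNORECASE),
     ("ola", "book_ride")),
    (re.compile(r"play (?P<song>.+?) song on youtube music", re.IGNORECASE),
     ("youtube_music", "play_song")),
    (re.compile(r"call (?P<contact>\w+) on whatsapp", re.IGNORECASE),
     ("whatsapp", "voice_call")),
]


def rule_based_intent(command: str) -> tuple[str, str, dict] | None:
    """Return (app, action, slots) for the first rule matching the whole command."""
    text = command.strip().rstrip(".?!")
    for pattern, (app, action) in RULES:
        match = pattern.fullmatch(text)
        if match:
            return app, action, match.groupdict()
    return None   # any phrasing without a rule falls through
```

Every new app or phrasing needs another hand-written pattern here, whereas the LLM path only needs the app added to its list of supported actions.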

11. SCALABILITY & MARKET POTENTIAL

The system has strong market potential across the consumer, enterprise, and accessibility sectors. It can be adopted by students, professionals, visually impaired individuals, and organizations seeking automation. Its architecture supports adding more workflows, apps, and automation modules, making it scalable for education, healthcare, customer service, and corporate operations. With the growing demand for AI-powered productivity tools, the solution can expand into mobile automation, enterprise SaaS, and smart-assistant markets serving millions of digital users.

12. ECONOMIC SUSTAINABILITY

The solution can be commercialized as a subscription-based assistant, an enterprise automation tool, or a customizable automation platform. It reduces manual effort, saving time and operational costs for businesses. As new workflows are added, revenue can grow through enterprise licensing, workflow bundles, and integrations. Its low-cost AI processing and scalable architecture support long-term economic sustainability, driven by rising demand for digital automation across industries.

13. ENVIRONMENTAL SUSTAINABILITY

The system promotes digital efficiency by reducing repetitive device interactions, lowering screen time, and minimizing manual operations. Automated workflows help organizations cut down on paperwork, energy use, and unnecessary human effort. By enabling remote task execution and digital transactions, it indirectly reduces travel, printing, and resource waste. Its accessible, digital-first design aligns with sustainable technology practices and supports eco-friendly, low-resource computing.

14. CONCLUSION

The project showcases the development of an automated agent capable of understanding user commands and automating tasks across various apps using voice or text input. By combining natural language processing, task planning, and automation, the system offers a more personalized and action-oriented experience than conventional assistants. It provides a strong foundation for future enhancements in intelligent application interaction.

15. FUTURE SCOPE

The proposed automated agent holds significant potential for enhancing human-device interaction through seamless voice- and text-based automation. As applications continue to expand in functionality and complexity, integrating the agent with a broader range of apps, such as ticket booking and shopping, can lead to a fully autonomous digital agent capable of handling personal scheduling, healthcare reminders, financial tasks, and even educational assistance. By incorporating advances in on-device machine learning, the assistant can evolve to offer improved personalization, privacy, and responsiveness.

Future integration with multimodal interfaces such as gesture recognition, eye tracking, and emotional context can also make the assistant more intuitive and adaptive. Moreover, by adding offline functionality and further accessibility features, the system can serve a wider demographic, including elderly and differently abled users, thereby making digital ecosystems more inclusive and intelligent.

REFERENCES

[1] Yunpeng Song, Rui Liu, Yu Liu, Yujun Shen, Jingren Zhou, Yizhou Sun, “VisionTasker: Mobile Task Automation via Vision-Based UI and LLM Planning,” arXiv preprint arXiv:2312.11190, 2023.

[2] Yinghua Li, Bin Liu, Yulei Sui, Wenjie Zhang, “An Empirical Study of AI Techniques in Mobile Applications,” arXiv preprint arXiv:2212.01635, 2022.

[3] Yanchu Guan, Xiaonan Li, Mingyu Li, Bowen Liu, Zeyu Xu, Xinyu Zhang, Yuan Zhang, “Intelligent Virtual Assistants with LLM-Based Process Automation,” arXiv preprint arXiv:2312.06677, 2023.

[4] Guendalina Caldarini, Michele J. McGonagle, Fabio Gobbo, “A Literature Survey of Recent Advances in Chatbots,” arXiv preprint arXiv:2201.06657, 2022.