International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072


Dr. K M Mahesh Kumar¹, Rakshitha M N², Rahul G R³, Santhosha K S⁴, Shivaprasad M⁵

¹Associate Professor, Dept. of Electrical and Electronics Engineering, PES College of Engineering, Mandya, Karnataka, India

²,³,⁴,⁵ U.G. Students, Dept. of Electrical and Electronics Engineering, PES College of Engineering, Mandya, Karnataka, India

Abstract - Designing assistive mobility devices for patients with severe locomotor disorders like motor neuron disease or post-polio paralysis demands intelligent systems that minimize or eliminate limb use. While EEG-based systems have been explored, they rely heavily on interpreting noisy brain signals, making real-time control difficult and prone to false positives.

In this project, we propose an EMG-controlled smart wheelchair, which leverages electromyography (EMG) signals generated by voluntary muscle contractions to control motion. This approach enables individuals with partial motor function (e.g., ability to flex a specific muscle) to navigate without complex cognitive interfaces.

By placing EMG sensors on active muscle zones (such as the biceps or forearm), the system captures muscle contractions, filters and amplifies them, and translates them into directional commands using an Arduino microcontroller. The processed signals then drive the wheelchair's motors, allowing for intuitive and responsive control. Compared to EEG systems, EMG-based control offers a more stable, user-friendly, and cost-effective alternative.

Key Words: Electromyography, Arduino, HC-50, EEG, BCI

Mobility is a fundamental aspect of human independence. However, individuals with severe motor disabilities, such as those with muscular dystrophy, post-polio paralysis, or partial spinal cord injuries, often face significant challenges in operating traditional electric wheelchairs that require hand-operated joysticks or touch interfaces [1]. While several alternative input methods, including EEG-based control, head tracking, speech recognition, and eye-gaze tracking, have emerged, each has inherent limitations, especially for users with a high degree of paralysis or where signal accuracy is inconsistent [2].

Recent innovations in assistive technology aim to help individuals with limited motor control regain independence without relying on third-party assistance. Brain-Computer Interface (BCI) systems using EEG (electroencephalography) have shown promise in translating brainwave patterns into wheelchair commands. However, such systems are often expensive, highly sensitive to noise, require extensive training, and demand continuous concentration, making them impractical for widespread real-life application [3].

In contrast, electromyography (EMG) offers a more intuitive and reliable method for mobility control. EMG captures the electrical activity generated by muscle contractions. This technology is especially suitable for individuals who retain partial muscle control, for example in the forearms, biceps, or facial muscles, even if they cannot perform gross motor tasks. By detecting these muscle signals and processing them with a microcontroller (such as an Arduino), a smart wheelchair can be controlled through natural, low-effort movements.

This project proposes a low-cost, EMG-controlled smart wheelchair system that interprets muscle activity to control movement. The primary objective is to design a safe, portable, and user-friendly wheelchair interface that does not depend on complex brainwave readings or speech input but instead uses readily accessible EMG signals. The system includes EMG sensors to capture muscle signals, amplifiers to condition them, and an Arduino-based controller to translate them into directional commands for the wheelchair's motors.

Our approach specifically targets users who retain some upper-limb muscle activity but lack full limb mobility, offering a balance between affordability, reliability, and ease of use. The system also integrates safety mechanisms to prevent unintended movement and aims to significantly reduce the physical and cognitive burden on users.

Mobility remains a major challenge for individuals with physical impairments, both in hospitals and at home. Despite having full cognitive abilities, many disabled individuals struggle with conventional wheelchair controls. Current mobility solutions fail to consider the user's mental capacity as a control source. This project addresses the need for a smarter solution by enabling wheelchair control using brain or muscle signals, allowing users to move independently through thought-driven commands.


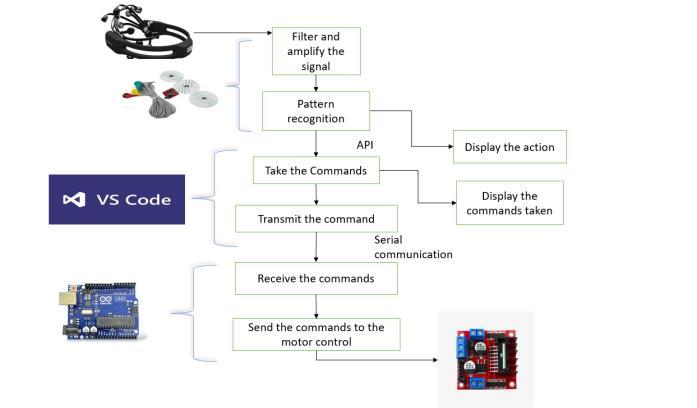

The proposed system uses electromyography (EMG) signals to let users control a wheelchair through muscle activity. EMG electrodes placed on the skin detect the electrical signals generated by muscle contractions. These signals are processed to remove noise, amplify the data, and extract features relevant to movement. The processed signals are transmitted wirelessly via Bluetooth to an Arduino microcontroller, which interprets the data to control the wheelchair's movement. The wheelchair, equipped with four brushless DC motors, responds to commands such as moving forward, moving backward, or turning, based on the user's muscle activity. This non-invasive control mechanism provides an intuitive and efficient solution for individuals with limited mobility, enabling hands-free operation of the wheelchair.
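The text does not specify how noise is removed or which features are extracted. A common approach for surface EMG, assumed here, is to subtract the DC offset, full-wave rectify, and smooth with a moving average to obtain an envelope, then compare that envelope against on/off thresholds with hysteresis so that a single noisy sample cannot toggle a command. The function names `emg_envelope` and `detect_activation`, the window length, the baseline tracking rate `alpha`, and the thresholds are all hypothetical; this is a sketch, not the authors' processing chain.

```python
from collections import deque
from typing import Iterable, List


def emg_envelope(samples: Iterable[float], window: int = 50, alpha: float = 0.01) -> List[float]:
    """Moving-average envelope of a mean-removed, full-wave rectified EMG signal.

    `window` (in samples) and `alpha` (baseline tracking rate) are illustrative values.
    """
    baseline = None              # slowly tracked DC offset (e.g. ADC mid-scale)
    buf = deque(maxlen=window)   # last `window` rectified samples
    running_sum = 0.0
    envelope = []
    for x in samples:
        baseline = x if baseline is None else baseline + alpha * (x - baseline)
        rectified = abs(x - baseline)       # remove offset, then full-wave rectify
        if len(buf) == window:
            running_sum -= buf[0]           # drop the sample the deque is about to evict
        buf.append(rectified)
        running_sum += rectified
        envelope.append(running_sum / len(buf))
    return envelope


def detect_activation(envelope: Iterable[float], on: float, off: float) -> List[bool]:
    """Hysteresis threshold: switch on at or above `on`, switch off only below `off` (off < on)."""
    active = False
    states = []
    for e in envelope:
        if not active and e >= on:
            active = True
        elif active and e < off:
            active = False
        states.append(active)
    return states
```

For example, a resting signal at ADC mid-scale (512) followed by a burst of alternating 612/412 samples yields an envelope near zero at rest and near 100 during the burst, so `detect_activation(env, on=50.0, off=25.0)` reports the contraction only while the burst lasts. The gap between `on` and `off` is what prevents chattering near the threshold.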

1.2.1

1.2.2 KEY COMPONENTS

i. EMG sensors

ii. Heart rate sensor (MAX30100)

iii. SpO2 sensor

iv. Temperature sensor (LM35)

v. Arduino Uno

vi. LCD display

vii. DC motors & battery

viii. Bluetooth module
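Alongside the EMG channel, the component list includes heart rate, SpO2, and LM35 temperature sensors, and the text describes alerts when physiological thresholds are exceeded (e.g., "Low SpO2"). A minimal sketch of that check follows. The LM35 conversion uses the sensor's documented 10 mV/°C scale and assumes a 10-bit `analogRead()` value with a 5 V reference; the alert limits and the names `lm35_celsius` and `vital_alerts` are hypothetical placeholders chosen for illustration, not clinical limits and not values from the paper.

```python
from typing import List

# Hypothetical alert limits, chosen only for illustration (not clinical guidance).
SPO2_MIN_PCT = 92.0
HEART_RATE_MAX_BPM = 120.0
TEMP_MAX_C = 38.0


def lm35_celsius(adc: int, vref: float = 5.0, adc_max: int = 1023) -> float:
    """Convert a 10-bit ADC reading from an LM35 (10 mV per degree C) to degrees C."""
    volts = adc * vref / adc_max
    return volts * 100.0  # 10 mV/degC means 100 degC per volt


def vital_alerts(spo2_pct: float, heart_rate_bpm: float, temp_c: float) -> List[str]:
    """Return the warning strings an LCD could show for out-of-range readings."""
    alerts = []
    if spo2_pct < SPO2_MIN_PCT:
        alerts.append("Low SpO2")
    if heart_rate_bpm > HEART_RATE_MAX_BPM:
        alerts.append("High heart rate")
    if temp_c > TEMP_MAX_C:
        alerts.append("High temperature")
    return alerts
```

Keeping the limits as named constants makes them easy to tune per user, which matters because normal ranges vary between patients.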

1. Signal Acquisition: EMG sensors detect electrical activity from the eyebrow muscles, while physiological sensors collect health data.

2. Data Processing: The Arduino processes the EMG signals using custom algorithms to classify movements (e.g., double eyebrow raise = "stop"). Physiological data trigger alerts if thresholds are exceeded (e.g., a high heart rate).

3. Control Execution: Processed commands drive the wheelchair motors via the L298 driver. Obstacle detection is handled by IR sensors for collision avoidance.

4. User Feedback: The LCD displays navigation commands, sensor readings, and warnings (e.g., "Low SpO2").

The following were carried out to achieve the result:

An eyebrow raise was successfully used to switch modes (e.g., forward, left, right, stop).

After a mode was selected, a muscle contraction (such as a jaw clench) triggered the movement.
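The control scheme described above (an eyebrow raise selects a mode, a jaw clench triggers movement, a double eyebrow raise means "stop", and IR obstacle detection overrides motion through the L298 driver) can be sketched as a small state machine. This is a hedged illustration, not the authors' firmware: the mode cycling order, the 0.6 s double-raise window, the rule that the chair drives only while the clench is held, and the IN1 to IN4 wiring are all assumptions, and `ModeController` and `L298_LEVELS` are hypothetical names. It is written in Python for readability; on an Arduino the same logic would run inside `loop()`.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Mode order follows the example in the text; the cycling order itself is an assumption.
MODES = ("FORWARD", "LEFT", "RIGHT", "STOP")

# Hypothetical wiring: (IN1, IN2) drive the left motor(s), (IN3, IN4) the right motor(s)
# through an L298 dual H-bridge. 1 = HIGH, 0 = LOW. Equal levels on a pair stop that
# motor (with the enable pin HIGH).
L298_LEVELS = {
    "FORWARD": (1, 0, 1, 0),
    "LEFT": (0, 1, 1, 0),    # pivot left: left side reverses, right side forward
    "RIGHT": (1, 0, 0, 1),   # pivot right: left side forward, right side reverses
    "STOP": (0, 0, 0, 0),
}

DOUBLE_RAISE_WINDOW_S = 0.6  # hypothetical: two raises closer than this mean "stop"


@dataclass
class ModeController:
    mode: str = "STOP"
    last_raise_t: Optional[float] = None

    def on_eyebrow_raise(self, t: float) -> str:
        """Handle one eyebrow-raise onset at time t (seconds); return the selected mode."""
        if self.last_raise_t is not None and t - self.last_raise_t <= DOUBLE_RAISE_WINDOW_S:
            self.mode = "STOP"          # double raise: stop immediately
            self.last_raise_t = None    # reset so a third raise starts a new pair
        else:
            idx = MODES.index(self.mode)
            self.mode = MODES[(idx + 1) % len(MODES)]  # single raise: next mode
            self.last_raise_t = t
        return self.mode

    def motor_levels(self, jaw_clenched: bool, obstacle: bool) -> Tuple[int, int, int, int]:
        """Drive only while the jaw is clenched and the IR sensor reports no obstacle."""
        if not jaw_clenched or obstacle:
            return L298_LEVELS["STOP"]
        return L298_LEVELS[self.mode]
```

Usage: starting from `ModeController()`, a raise at t = 0.0 s selects FORWARD, and `motor_levels(True, False)` then returns `(1, 0, 1, 0)`; a later raise selects LEFT, and a second raise within 0.6 s forces STOP. Making the obstacle flag and a released clench both map to STOP means any loss of input fails safe.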


The system responded in real time with good accuracy.

Users could control the wheelchair without using their hands, relying only on EMG signals.

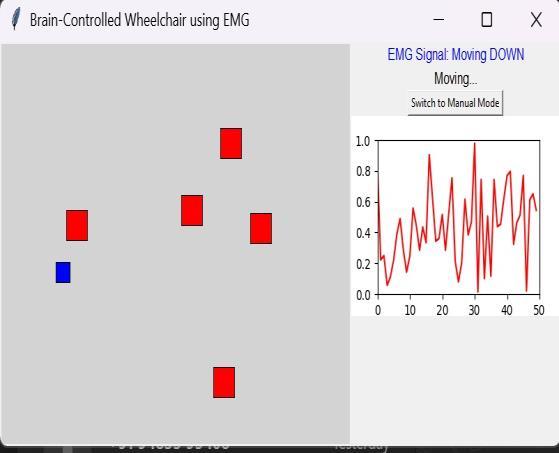

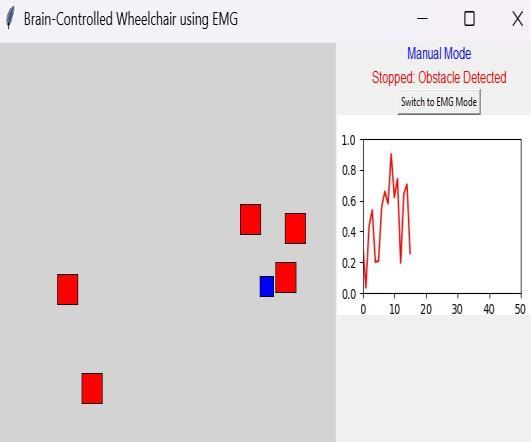

1.3.1 SIMULATION RESULTS

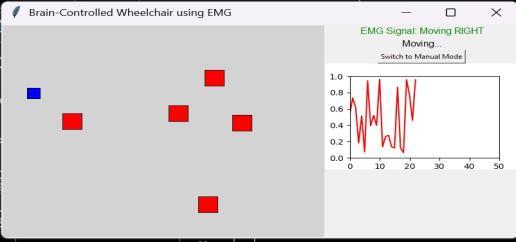

Fig -5: Wheelchair moving right

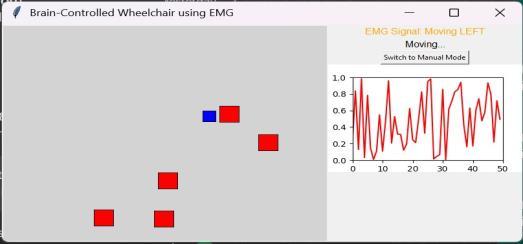

Fig -6: Wheelchair moving left

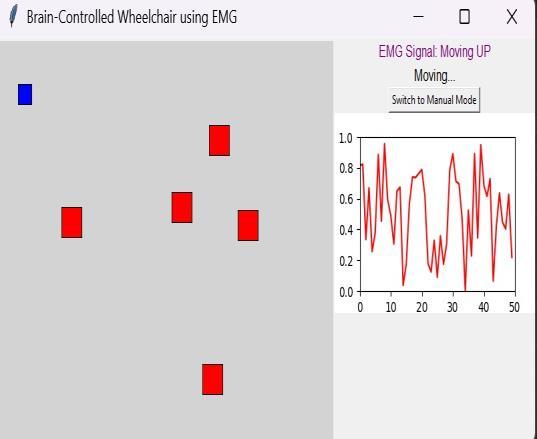

Fig -7: Wheelchair moving up

Fig -8: Wheelchair moving down

Fig -9: Wheelchair stopped due to obstacle detection

EMG Signal Processing & Movement Control: The simulation generates EMG signals as random values between 0 and 1, updates the graph dynamically to show the live signal, and uses threshold-based movement:

Value > 0.7 → Move Right

0.5 to 0.7 → Move Down

0.3 to 0.5 → Move Left

Value < 0.3 → Move Up

Manual Control (Arrow-Key Movement): In Manual Mode, the user can control movement with the arrow keys.
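The simulation's threshold rule and manual mode can be sketched in a few lines. Assumptions: the text does not say which band an exact boundary value (0.7, 0.5, 0.3) belongs to, so here 0.7 and 0.5 map to Down and 0.3 maps to Left; the wheelchair is modelled as a point on a grid with "Up" as +y; obstacles are grid cells that block a step (mirroring the obstacle stop shown in Fig -9); and the arrow-key names in `ARROW_KEYS` are hypothetical. The live graph is omitted. All function names are illustrative.

```python
import random
from typing import Optional, Set, Tuple

Pos = Tuple[int, int]

# Grid steps per direction; "UP" is +y here (an assumption about the plot's axes).
STEPS = {"RIGHT": (1, 0), "LEFT": (-1, 0), "UP": (0, 1), "DOWN": (0, -1)}

# Hypothetical arrow-key names as a GUI toolkit might report them.
ARROW_KEYS = {"Right": "RIGHT", "Left": "LEFT", "Up": "UP", "Down": "DOWN"}


def classify(value: float) -> str:
    """Map a simulated EMG value in [0, 1) to a direction using the thresholds in the text."""
    if value > 0.7:
        return "RIGHT"
    if value >= 0.5:
        return "DOWN"
    if value >= 0.3:
        return "LEFT"
    return "UP"


def next_move(mode: str, rng: random.Random, key: Optional[str] = None) -> Optional[str]:
    """Auto mode draws a random EMG value; manual mode maps an arrow key (None if no key)."""
    if mode == "manual":
        return ARROW_KEYS.get(key)
    return classify(rng.random())


def step(pos: Pos, move: Optional[str], obstacles: Set[Pos]) -> Pos:
    """Advance one cell, staying put when there is no move or the next cell is an obstacle."""
    if move is None:
        return pos
    dx, dy = STEPS[move]
    nxt = (pos[0] + dx, pos[1] + dy)
    return pos if nxt in obstacles else nxt
```

A simulation loop would call `pos = step(pos, next_move("auto", rng), obstacles)` once per frame and redraw; seeding `random.Random` makes a run reproducible, which helps when comparing threshold settings.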

The development of an advanced neuroprosthetic wheelchair system presents a powerful solution for enhancing mobility and independence for individuals with severe motor impairments. By integrating an Arduino, EMG sensors, and physiological sensors, the system offers intuitive, hands-free control with real-time feedback.

This project follows a structured approach (design, integration, testing, and refinement) while focusing on safety, usability, and accessibility. It empowers users to navigate their surroundings with greater confidence and autonomy.

Future work will focus on improving system robustness and user experience, ensuring adaptability to evolving technologies and user needs. This innovation holds strong potential to improve quality of life and promote inclusion for people with disabilities.

[1] Luzheng Bi, Xin-An Fan, and Yili Liu, "EEG-Based Brain-Controlled Mobile Robots: A Survey," IEEE Transactions on Human-Machine Systems, vol. 43, no. 2, March 2013.

© 2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal | Page 1426


[2] Jonathan R. Wolpaw, Niels Birbaumer, Dennis J. McFarland, Gert Pfurtscheller, and Theresa M. Vaughan, "Brain-computer interfaces for communication and control," Clinical Neurophysiology, vol. 113, no. 6, pp. 767-791, June 2002.

[3] M. Eidel and A. Kübler, "Wheelchair Control in a Virtual Environment by Healthy Participants Using a P300-BCI Based on Tactile Stimulation: Training Effects and Usability," Frontiers in Human Neuroscience, 2020.

[4] Yash Tulaskar, "Neurocontrolled Wheelchair Using Arduino UNO," Department of Mechatronics, Mumbai University, Thane, Maharashtra, April 2021.

[5] https://en.wikipedia.org/wiki/Mindcontrolled_wheelchair

[6] "A novel brain-controlled wheelchair combined with computer vision and augmented reality," BioMedical Engineering OnLine (Japan), 26 July 2022.