International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072


S.P. Kullarkar 1, Anshu Gudhewar 2, Sidhesh Dhande 3, Ravi Shripad 4, Kautuk Butle 5

1Assistant Professor, Dept. of AI&DS, KDK. College Of Engineering, Maharashtra, India

2Student, Dept. of AI&DS, KDK. College Of Engineering, Maharashtra, India

3Student, Dept. of AI&DS, KDK. College Of Engineering, Maharashtra, India

4Student, Dept. of AI&DS, KDK. College Of Engineering, Maharashtra, India

5Student, Dept. of AI&DS, KDK. College Of Engineering, Maharashtra, India

Abstract - Communication is key to human life, but for people who are deaf or hard of hearing, communicating with others can be difficult because not everyone knows sign language. This creates a communication barrier that restricts their ability to interact socially, attend school, or work in public. To address this, our project proposes an AI-Based Sign Language Interpreter Glove that converts sign language hand gestures into speech or text.

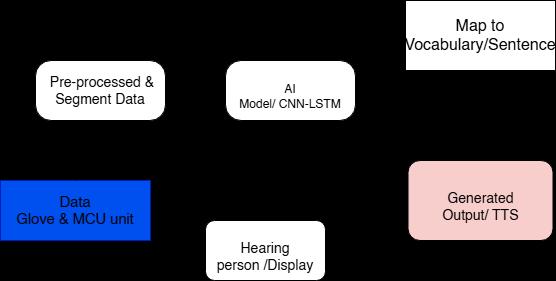

The glove includes flex sensors and motion sensors that measure the bending and movement of the fingers and hand. This information goes to a microcontroller, which processes the data and sends it to a deep learning model trained to interpret various sign language gestures. Once a sign is detected, it is translated into text shown on a monitor or on a display attached to the glove, and then converted into voice through a Text-to-Speech (TTS) system.

The presented solution is low-cost, portable, and easy to use, which makes it suitable for daily use. It can help individuals with disabilities to express themselves, participate in society, and reach their potential by allowing them to communicate freely and effectively with people who do not know sign language.

The most important advantage of this project is its practicality. Compared to vision-based systems that depend on cameras and controlled environments, a glove-based solution is lightweight, portable, and does not rely on lighting or background conditions. It is also affordable, making it an appropriate device for daily aid. The design is scalable as well, so more gestures and more than one sign language can be included in the future.

Key Words: Sign Language, Interpreter Glove, Artificial Intelligence, Deep Learning, Flex Sensors, Motion Sensor, Communication, Text-to-Speech, microcontroller, Embedded Systems

Communication is the foundation of human development, yet individuals with speech and hearing impairments face significant barriers in expressing themselves and in being understood in real time. Sign language is widely used among deaf and mute people but is not widely understood by the majority of the population, which creates a communication gap between them. This gap must be bridged by a new type of solution that reads sign language, interprets it, and translates it into a universal format such as text or speech.

This work aims to explore an AI-powered smart wearable glove for near real-time sign language detection and translation. Multiple sensors (e.g., flex sensors, accelerometers, and gyroscopes) will be mounted on the glove to measure precise finger bending motions, hand posture, and gestures. These sensor signals will be analyzed with advanced deep learning techniques to reliably capture sign language patterns. Upon detection of the signs, the system will convert them into text or speech output so that sign language users and non-signers can communicate smoothly.

The inclusion of Internet of Things (IoT) data connectivity also makes the system versatile: via wireless or wired means, the identified output can be viewed on smartphones, computers, and other terminals. This not only makes the technology portable and accessible but also ensures that it can be used in diverse real-world scenarios such as education, healthcare, workplace communication, and public services.

A. In 2024, Zhang developed a wearable gesture recognition glove that recognizes gestures in real time with 95% accuracy. The overall system combines EMG sensors and computer vision, demonstrating the feasibility and portability of gesture recognition.

B. In 2024, Filipowska and team built a device that uses a neural network to recognize gestures. The design focuses on correcting sensor drift and differences between finger movements. It combines on-device and cloud processing to make real-time processing reliable.


C. In 2023, some organizations worked on full translation of gestures, where a glove captures gestures and converts them directly into text and speech in real time. This work highlights the importance of a fast, energy-efficient system and of balancing on-glove processing with phone or cloud support.

D. In 2023, researchers such as Achenbach developed the “Give me a sign” dataset, which includes 64 American Sign Language handshapes recorded with a glove. Their project showed that hand gestures can be recognized with reliable accuracy and also provided a valuable dataset for future development.

E. In 2022, Saeed and team published a review of glove-based techniques. They highlighted emerging trends such as combining flex sensors and motion sensors, using neural networks for better accuracy, and connecting the glove with mobile and web applications. Their review gives a clear direction for designing and implementing more practical systems.

F. In 2021, Deshmukh et al. applied supervised machine learning algorithms to improve gesture classification using large datasets. While accuracy improved, the high computational requirements restricted deployment on low-power microcontrollers.

G. In 2020, Saggio and team compared traditional recognition methods with deep learning models and found that CNNs work best when enough data is available, while simpler models such as k-NN remain effective when resources are limited.

H. In 2019, Gupta and Mehta enhanced these techniques by combining a flex sensor with an accelerometer to detect both finger bending and hand orientation. Their model translated gestures into recorded voice messages, but accuracy declined as hand position varied, revealing the need for better sensors.

I. In 2018, Sharma presented a low-cost hand gesture recognition system that integrates flex sensors with an Arduino UNO; the prototype translates specific gestures into text. It qualifies as assistive technology but lacks advanced motion tracking and speech output features.

This methodology is adopted for the design, development, and implementation of a sensor-based sign language recognition system using a smart glove capable of translating gestures into audio or text. The system integrates sensor-based techniques with a hybrid deep learning model based on a bidirectional LSTM.

The entire workflow is divided into several phases: 1) System Design and Hardware, 2) Data Acquisition, 3) Data Preprocessing, 4) Model Development, 5) Model Deployment, and 6) Real-Time Testing and Validation.

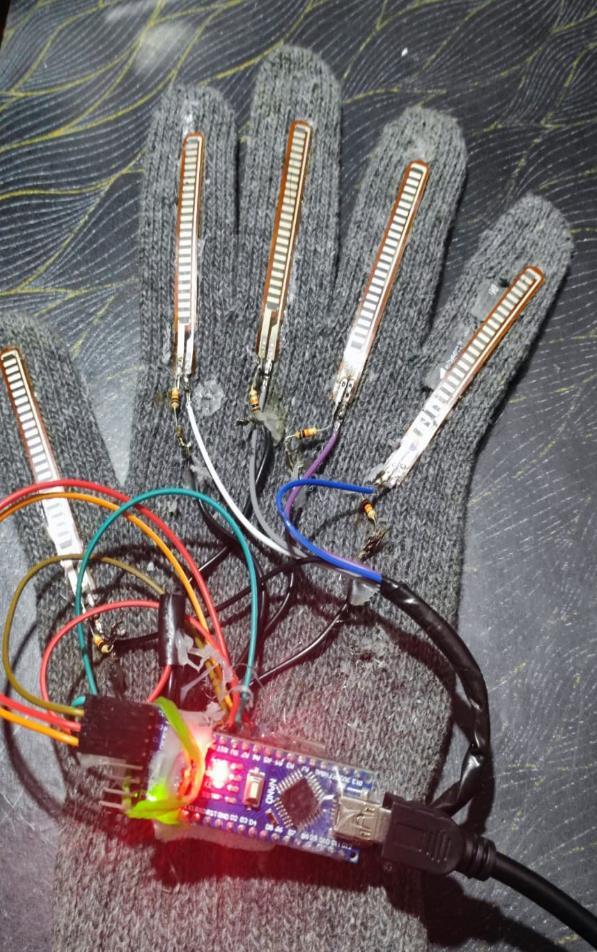

The system architecture is designed around a glove and consists of flex sensors, an Arduino Nano, an MPU6050 (accelerometer and gyroscope module), jumper wires, a speaker, and the glove itself. A flex sensor is mounted on each of the five fingers, and together with the MPU6050 and the Arduino Nano they record finger bending, finger movement, and hand orientation. The resistance of each flex sensor changes as its finger bends, and the Arduino Nano reads this change as a varying analog voltage level. The MPU6050 provides real-time hand and finger movement data, adding an additional layer of orientation tracking.

The Arduino Nano is the central processing unit for data acquisition. It interacts with the sensors, records their values through its analog and I2C pins, and transmits the readings to the computer for further processing. The entire setup is powered by USB or a battery pack, ensuring portability and ease of use.
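The conversion from a raw analog reading back to a flex-sensor resistance can be sketched on the host side as follows. This is a minimal illustration, not the authors' code: it assumes a 10-bit ADC, a 5 V supply, and a hypothetical 10 kΩ fixed resistor forming a voltage divider with the sensor, none of which are stated in the paper.

```python
ADC_MAX = 1023        # assumption: 10-bit ADC on the Arduino Nano
V_SUPPLY = 5.0        # assumption: 5 V supply
R_FIXED = 10_000.0    # hypothetical fixed divider resistor, in ohms


def adc_to_voltage(adc_value: int) -> float:
    """Convert a raw ADC count to the voltage seen at the analog pin."""
    return adc_value * V_SUPPLY / ADC_MAX


def flex_resistance(adc_value: int) -> float:
    """Estimate the flex-sensor resistance from one ADC reading.

    Assumed wiring: supply -> flex sensor -> analog pin -> R_FIXED -> ground,
    so V_out = V_SUPPLY * R_FIXED / (R_flex + R_FIXED).
    """
    v_out = adc_to_voltage(adc_value)
    if v_out <= 0:
        raise ValueError("a reading of 0 implies an open circuit")
    return R_FIXED * (V_SUPPLY - v_out) / v_out
```

Under these assumptions a larger bend raises the sensor resistance and lowers the reading, so the per-finger resistance (or the raw count itself) can serve as the bending feature.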

The entire system is assembled on the palm of the glove. The Arduino Nano is connected to the MPU6050, which in turn is connected to the five flex sensors mounted on the fingers to record movement readings.

In 1998, LeCun et al. designed the first classical CNN architecture, which achieved impressive performance on the handwritten digit recognition task. Unlike traditional neural network structures, CNNs use local connectivity and weight sharing, which makes learning more efficient and reduces the number of parameters and the risk of overfitting.

A CNN has three main types of layers: convolution, pooling, and fully connected. The convolution layer takes small regions of the input data and processes them one at a time using filters (known as kernels). These filters detect patterns or features such as edges and colors. A ReLU function then sets all negative values to zero and retains only the positive values, which makes the result cleaner and easier to work with. The pooling layer reduces the dimensionality of the feature map through max or average pooling operations, simplifying computation and providing spatial invariance. The fully connected part usually consists of a few hidden layers and a softmax function; it combines the extracted features and outputs the probability distribution over the classes.
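The convolution, ReLU, and max-pooling steps described above can be illustrated on a one-dimensional signal. This is a minimal pure-Python sketch; the input values and the two-tap kernel are made up for illustration and are not taken from the paper.

```python
def conv1d(signal, kernel):
    """'Valid' 1-D convolution as used in CNNs (no kernel flipping)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def relu(values):
    """Replace negative values with zero, keep positive values."""
    return [max(0.0, v) for v in values]


def max_pool(values, size=2):
    """Keep the largest value in each non-overlapping block."""
    return [max(values[i:i + size])
            for i in range(0, len(values) - size + 1, size)]


signal = [0.0, 1.0, 3.0, 2.0, 0.0, -1.0, 1.0]   # illustrative input
edge_kernel = [-1.0, 1.0]                        # responds to rising edges
features = max_pool(relu(conv1d(signal, edge_kernel)))
print(features)  # [2.0, 0.0, 2.0]
```

The kernel produces a positive response wherever the signal rises, ReLU discards the falling segments, and pooling halves the length of the feature map.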

Recurrent Neural Networks (RNNs) are employed for sequential data processing tasks because they possess short-term memory and can handle temporal dependencies. However, classical RNNs have difficulty capturing long-term dependencies, as noted by Greff et al. in 2015. The LSTM model addresses this by maintaining long-term dependencies better than standard RNNs, using memory cells with gates.

An LSTM contains three gates: a forget gate, an input gate, and an output gate. The forget gate decides which information to discard from the previous cell state, the input gate decides which information is added to the current cell state, and the output gate decides which information is passed to the output. These gates use nonlinear activation functions (sigmoid and tanh), which let the LSTM network regulate information flow dynamically and thereby capture long-range dependencies in sequential data.

However, a standard LSTM processes information in one direction only: it stores what it has seen up to the current time step and cannot use future context. To solve this problem, Graves et al. introduced the Bidirectional LSTM (BiLSTM) in 2013. It consists of two LSTM layers running in opposite directions: one reads the data forward (past to future) and the other reads it backward (future to past). It combines the results from both directions to model dependencies on both past and future context.

Because a BiLSTM looks at data from both directions, it can understand the full context of the information more effectively, which helps it perform better in gesture prediction, emotion prediction, and language modelling. BiLSTM is therefore an enhanced version of LSTM that captures more complex temporal features from input sequences and provides better prediction accuracy.
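The gate mechanism and the bidirectional reading can be sketched with a scalar LSTM cell (hidden size 1). This is an illustrative toy, not the trained model from this work: the weights in `W` are hypothetical values chosen only so the code runs.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, w):
    """One time step of a scalar LSTM cell.

    w maps each gate name to (input weight, recurrent weight, bias).
    """
    def pre(name):
        w_x, w_h, b = w[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # what to keep from the old cell state
    i = sigmoid(pre("input"))         # how much new information to add
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # candidate values for the cell state
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c


def run_lstm(sequence, w):
    """Run the cell over a sequence and return the hidden state per step."""
    h, c = 0.0, 0.0
    outputs = []
    for x in sequence:
        h, c = lstm_step(x, h, c, w)
        outputs.append(h)
    return outputs


def run_bilstm(sequence, w_fwd, w_bwd):
    """Pair each step's forward state with its backward state."""
    forward = run_lstm(sequence, w_fwd)
    backward = run_lstm(sequence[::-1], w_bwd)[::-1]
    return list(zip(forward, backward))


# Hypothetical weights for illustration only (not trained values).
W = {"forget": (0.5, 0.1, 0.0), "input": (0.6, 0.2, 0.0),
     "output": (0.4, 0.3, 0.0), "candidate": (0.9, 0.5, 0.0)}
```

In `run_bilstm`, the forward value at the first step depends only on the first input, while the backward value at the same step has already seen the whole sequence, which is the extra context the bidirectional layer provides.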

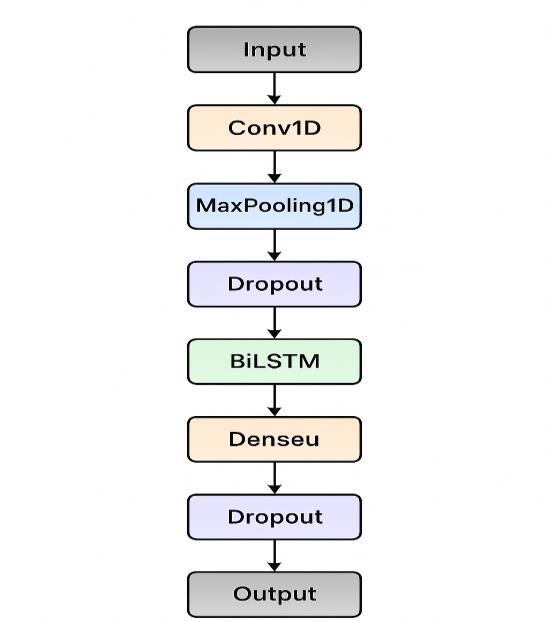

The architecture of the CNN + BiLSTM model combines the feature extraction ability of CNNs with the sequential learning of BiLSTM networks. The CNN layer serves as the primary feature extractor. Local spatial patterns are captured from input images or time series data through convolution and pooling operations, which reduce noise and dimensionality while keeping the relevant features.

The resulting feature maps created by the CNN are flattened and fed into the BiLSTM layer, which is capable of capturing the temporal positioning within the sequence and the contextual relationships between features. The forward and backward layers learn how features change over time and how they relate to each other. This ability to process dependencies in both directions improves the model's contextual understanding as well as its prediction performance.

The fully connected dense layer collects all the features and supplies them to a softmax output layer for classification. This combination of spatial and time-series learning is very useful for solving challenging pattern recognition problems, including defect detection, emotion analysis, and gesture prediction. The CNN + BiLSTM model can thus be seen as a hybrid deep learning architecture that links features extracted from static images with those from dynamic sequences, enabling robust and efficient prediction.
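To make the data flow through the hybrid model concrete, the sketch below traces tensor shapes from an input window to the class probabilities. All hyperparameters (64 filters, kernel size 3, pool size 2, 64 LSTM units) are hypothetical, as is the 200 x 11 input window; only the 50 output classes come from this work. The sketch also assumes the pooled feature map keeps its time axis when passed to the BiLSTM.

```python
def conv1d_shape(shape, filters, kernel_size):
    """Output shape of a 'valid' 1-D convolution over (time_steps, channels)."""
    steps, _channels = shape
    return (steps - kernel_size + 1, filters)


def max_pool1d_shape(shape, pool_size):
    """Non-overlapping pooling shortens the time axis only."""
    steps, channels = shape
    return (steps // pool_size, channels)


def bilstm_shape(_shape, units):
    """Final forward and backward states are concatenated."""
    return (2 * units,)


def dense_shape(_shape, units):
    return (units,)


def trace_shapes(window=(200, 11), filters=64, kernel_size=3,
                 pool_size=2, lstm_units=64, classes=50):
    """Return (layer name, output shape) pairs for the assumed stack."""
    shapes = [("input", window)]
    s = conv1d_shape(window, filters, kernel_size)
    shapes.append(("conv1d", s))
    s = max_pool1d_shape(s, pool_size)
    shapes.append(("max_pool", s))
    s = bilstm_shape(s, lstm_units)
    shapes.append(("bilstm", s))
    s = dense_shape(s, classes)
    shapes.append(("softmax", s))
    return shapes


for name, shape in trace_shapes():
    print(f"{name:>8}: {shape}")
```

With these assumed values, a 200-step window shrinks to 99 feature steps before the BiLSTM summarizes it into a 128-value vector that the softmax layer maps to 50 gesture classes.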

In this research, we developed a gesture dataset using sensor-based data acquisition to recognize and classify 50 different hand gestures. The data were gathered through a glove prototype based on an Arduino Nano integrated with five flex sensors and an MPU6050 sensor (3-axis accelerometer and 3-axis gyroscope). A custom dataset was created to capture the unique movements of the hand.

The data were collected from five participants, each of whom performed all 50 gestures five times, giving a total of 1,250 samples. For every gesture, 5 seconds of data were collected at a sampling rate of 100 Hz, yielding both angular velocity and acceleration data for the x, y, and z axes. This configuration ensures that each gesture includes unique spatio-temporal information, which supports high recognition accuracy.
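The dataset dimensions follow directly from the figures above and can be checked with a few lines of arithmetic. The channel count is an assumption: it supposes that each time step stores one value per flex sensor plus the six MPU6050 axes.

```python
PARTICIPANTS = 5
GESTURES = 50
REPETITIONS = 5
DURATION_S = 5
SAMPLING_RATE_HZ = 100
FLEX_CHANNELS = 5   # one flex sensor per finger
IMU_CHANNELS = 6    # 3-axis accelerometer + 3-axis gyroscope

recordings = PARTICIPANTS * GESTURES * REPETITIONS
time_steps_per_recording = DURATION_S * SAMPLING_RATE_HZ
channels = FLEX_CHANNELS + IMU_CHANNELS   # assumption: all channels logged per step

print(recordings)                 # 1250
print(time_steps_per_recording)   # 500
print(channels)                   # 11
```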

To maintain data integrity, we applied noise reduction using a Butterworth low-pass filter (cutoff frequency: 20 Hz) and normalized the signals via Z-score standardization. The cleaned data were segmented into 2 s windows with 50% overlap, with each window labelled with its corresponding gesture. Finally, we split the dataset in a 70:15:15 ratio: 70% for training, 15% for validation, and 15% for testing. This 50-gesture dataset is well suited for the deep learning model (CNN + BiLSTM) to predict gestures efficiently and in real time from sensor data.
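The preprocessing chain can be sketched for a single sensor channel as follows. The cutoff, sampling rate, window length, overlap, and split ratio come from the text; the second-order filter design and the integer rounding of the split are assumptions, since the paper does not state them.

```python
import math
import statistics

SAMPLING_RATE_HZ = 100   # from the text
CUTOFF_HZ = 20           # from the text
WINDOW_S = 2             # from the text
OVERLAP = 0.5            # from the text


def butterworth_lowpass(signal, cutoff_hz=CUTOFF_HZ, fs=SAMPLING_RATE_HZ):
    """Second-order Butterworth low-pass (bilinear-transform biquad).

    The filter order is an assumption; the text gives only the cutoff.
    """
    w0 = 2.0 * math.pi * cutoff_hz / fs
    alpha = math.sin(w0) / math.sqrt(2.0)   # Q = 1/sqrt(2) gives Butterworth
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    b0 = (1.0 - cos_w0) / 2.0 / a0
    b1 = (1.0 - cos_w0) / a0
    b2 = b0
    a1 = -2.0 * cos_w0 / a0
    a2 = (1.0 - alpha) / a0
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in signal:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


def zscore(signal):
    """Standardize to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(signal)
    std = statistics.pstdev(signal)
    if std == 0:
        return [0.0 for _ in signal]
    return [(v - mean) / std for v in signal]


def sliding_windows(signal, fs=SAMPLING_RATE_HZ, window_s=WINDOW_S, overlap=OVERLAP):
    """Cut the signal into fixed-length windows with the given overlap."""
    size = int(window_s * fs)
    step = int(size * (1.0 - overlap))
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


def split_counts(n, train_pct=70, val_pct=15):
    """Sizes of the train/validation/test parts; the remainder goes to test."""
    train = n * train_pct // 100
    val = n * val_pct // 100
    return train, val, n - train - val
```

Under these settings a 5 s recording (500 time steps) yields four windows of 200 steps each, and `split_counts(1250)` gives 875, 187, and 188 items for training, validation, and testing.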

4.1 System initialization

When the wearable glove is powered on, the system first checks all hardware components, such as the flex sensors and motion sensors, to ensure they are working correctly. At the same time, the pre-trained CNN + BiLSTM model is loaded into memory.

4.2 Sensor Data Acquisition

Fig. 4.1 Flow of System

In this stage, while the glove is in use, it continuously collects real-time sensor data on finger and hand movements from the embedded sensors. The spatial orientation is tracked by the accelerometer and gyroscope. These readings are recorded in real time so that the user's gestures can be captured, which in turn helps the model recognize the gestures. This data stream is then forwarded to the next stage for processing.
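On the computer side, each reading received from the glove has to be turned into a structured sample before further processing. The sketch below assumes a hypothetical wire format, one comma-separated line of 11 numbers per time step (five flex values, then three accelerometer and three gyroscope axes); the paper does not specify the actual format.

```python
from typing import NamedTuple, Optional, Tuple


class Sample(NamedTuple):
    flex: Tuple[float, ...]    # five flex-sensor readings
    accel: Tuple[float, ...]   # accelerometer x, y, z
    gyro: Tuple[float, ...]    # gyroscope x, y, z


def parse_line(line: str) -> Optional[Sample]:
    """Parse one line of the assumed format; return None if malformed."""
    parts = line.strip().split(",")
    if len(parts) != 11:
        return None
    try:
        values = [float(p) for p in parts]
    except ValueError:
        return None
    return Sample(tuple(values[:5]), tuple(values[5:8]), tuple(values[8:11]))
```

Returning `None` for malformed lines lets the acquisition loop skip partial or corrupted readings instead of stopping the stream.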

4.3 Data Preprocessing

In this stage, the raw data is refined and structured for processing. Noise is eliminated, and the signals are smoothed and normalized. The resulting data is divided into small time windows, each representing a single gesture. From these windows, the relevant features are extracted, such as finger bending, hand movements, and orientation changes. This ensures that the input given to the recognition model is well organized and contains the essential signals needed for accurate gesture prediction.

In this stage, the pre-processed features are input to a hybrid deep learning model with CNN and BiLSTM layers. The convolution layer captures the spatial dependencies and local patterns in the input sequences, whereas the BiLSTM layers learn the temporal relationships in both the forward and backward directions. This combination improves the accuracy and robustness of gesture recognition.

Once the gesture is recognized, it is translated into text, converting the hand movement into words that can be easily understood. For example, when the glove identifies a gesture, it immediately maps the output to the corresponding text and passes it smoothly on to speech synthesis.

The system then uses a text-to-speech (TTS) engine to read the text aloud. This process enables the system to “speak” the gesture on behalf of those who have difficulty communicating with people who do not understand sign language.

4.7

The resulting speech is then played through a speaker or other audio device. The corresponding text can also be shown on a screen or in a mobile or web application for convenient interaction and accessibility.
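The step from model output to text and speech can be sketched as below. The label list and the confidence threshold are hypothetical additions for illustration, and the `speak` callback stands in for whichever TTS engine is used; it defaults to `print` so the sketch runs without audio hardware.

```python
import math

# Hypothetical gesture vocabulary; the real system covers 50 gestures.
GESTURE_LABELS = ["hello", "thank you", "yes", "no"]


def softmax(logits):
    """Turn raw model scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def gesture_to_text(logits, labels=GESTURE_LABELS, min_confidence=0.6):
    """Return the most likely label, or None if the model is unsure.

    min_confidence is an assumed safeguard, not part of the described system.
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return None
    return labels[best]


def announce(text, speak=print):
    """Send recognized text to the output device; skip empty results."""
    if text is not None:
        speak(text)


announce(gesture_to_text([0.1, 5.0, 0.2, 0.0]))   # prints: thank you
```

Passing the TTS engine in as a callback keeps the mapping logic independent of the output device, so the same text can also be routed to a display or a mobile or web application.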

5. Limitation

Actions and interactions are, in themselves, more certain and precise than context. However, the dataset that was created is biased, meaning that the results cannot be applied to all sign languages. Language does not follow a fixed path or linear structure, yet researchers force it into one, which does not match how language actually works. Since culture and ethics are deeply involved in sign language, it is important that deaf people are actively involved in creating and developing such systems.

The gesture prediction system is effective, but some issues can affect its performance and scalability. The variability of the data requires additional research. Differences in the size of the user's hand, the speed of hand gestures, and the tightness of the glove lead to instability in sensor readings, which affects the reliability and predictability of the model. The dataset is limited in size (50 gestures and 1,250 samples), which does not allow the proposed model to generalize and make reasonable predictions for gestures not seen in the dataset. Additionally, the absence of a GUI should be noted; no web-based user control tools can be used because the device is unable to handle such a dataset.

There is considerable noise in the sensor data due to factors such as sensor movement across different positions, the room setup, and signal drift. This noise could not be fully removed for an ideal analysis, and designing more advanced data preprocessing methods is left to future work. Although gestures can be recognized in real time, delays are introduced by continuous data retrieval and computation, and by the time needed to move the sensor to a different label after the model has already been trained.

REFERENCES

[1] B. Sarada, Ch. Sri Divya, S. Begum, P. Kousalya, and E. Simhadri, “Smart Glove for Gesture Recognition using IoT,” International Journal of Scientific Research in Engineering and Management (IJSREM), Apr. 2025.

[2] F. Berjin Singh et al., “Gesture Interpretation Glove for Assistive Communication,” Asian Journal of Applied Science and Technology (AJAST), Apr.–Jun. 2025.

[3] R. Damdoo et al., “An Integrative Survey on Indian Sign Language Recognition and Translation (ISLRT),” Springer Open AI Applications Journal, 2025.

[4] Sign Speak Research, “Comparing Sign Language AI and Human Interpreters: Evaluating Efficiency for Greater Social Sustainability,” Sustainability in Technology Journal, 2025.

[5] B. Batte et al., “AI-Powered Sign Language Translation System: A Deep Learning Approach to Enhancing Inclusive Communication and Accessibility in Low-Resource Contexts,” IEEE Access, 2025.

[6] M. Kumar and N. Gupta, “Real-Time Hand Gesture Recognition using Deep Learning,” International Journal of Computer Science Trends and Technology (IJCST), vol. 13, no. 1, 2024.

[7] Chakraborty et al., “Deep Neural Network-based Indian Sign Language Recognition,” IEEE Access, vol. 12, pp. 11834–11845, 2024.

[8] P. R. Bhatia et al., “Hybrid CNN-BiLSTM Approach for Gesture Recognition,” IJITEE, vol. 13, no. 6, 2024.

[9] Y. Zhang et al., “Artificial Intelligence in Sign Language Recognition,” MDPI Sensors, vol. 24, 2024.

[10] TechScience, “Recent Advances on Deep Learning for Sign Language Recognition,” TechScience Press Journal, vol. 12, no. 8, 2024.

[11] N. Jain and R. Sharma, “Sensor-based Sign Recognition for Indian Languages,” IJRTE, vol. 12, no. 2, 2023.

[12] J. Wu, P. Ren, B. Song, R. Zhang, C. Zhao, and X. Zhang, “Data glove-based gesture recognition using CNN-BiLSTM model with attention mechanism,” 2023.

[13] P. K. Rao et al., “Low-Cost Smart Glove for Sign Language Interpretation,” Procedia Computer Science, vol. 218, 2023.

[14] Patel et al., “Wearable Smart Glove for Communication Assistance,” Journal of Intelligent Systems and Computing, vol. 35, pp. 255–268, 2023.

[15] H. Alzarooni et al., “AI-Based Sign Language Interpreter (GestPret),” International Journal of Artificial Intelligence and Applications, 2023.

[16] R. Banerjee and A. Ghosh, “Sensor-Fusion-based Indian Sign Language Detection using Machine Learning,” JETIR, vol. 10, 2022.

[17] Thakur and D. Sharma, “A CNN-LSTM Hybrid Model for Gesture Recognition,” IEEE International Conference on IoT and AI Systems, pp. 213–220, 2022.


[18] M. Joshi et al., “Hand Motion Recognition using Accelerometer and Gyroscope Sensors,” International Conference on Computing and Communication Systems (ICCCS), 2021.

[19] T. G. Kumar and S. K. Pillai, “Real-time Gesture Recognition using Smart Glove and ML Models,” IJRTE, vol. 9, no. 5, 2021.

[20] R. Rastgoo et al., “Sign Language Recognition: A Deep Survey,” Expert Systems with Applications, 2021.

[21] P. Agarwal and S. Kumar, “Smart Wearable for Indian Sign Language Detection using IoT and AI,” International Conference on Smart Computing and Communication, 2020.

[22] N. K. Bansal et al., “Feature Extraction and Classification of Sign Gestures using CNN,” IEEE INDICON Conference, 2020.

[23] Baumgärtner et al., “Automated Sign Language Translation: The Role of Artificial Intelligence in Sign Language Translation,” Frontiers in Artificial Intelligence, 2020.

[24] S. Chatterjee, “Motion Sensor-based Hand Gesture Recognition,” IJERT, vol. 8, no. 7, 2019.

[25] Raj and R. K. Singh, “Wearable Glove for Sign-to-Speech Conversion,” IJCSIT, 2019.

[26] R. S. Patil and P. Deshmukh, “Accelerometer-based Indian Sign Language Recognition,” IRJET, 2019.

[27] R. S. Patil and P. Deshmukh, “Accelerometer-based Indian Sign Language Recognition,” IRJET, 2019.

[28] Sharma and N. Saini, “Arduino-based Smart Glove for Speech-Impaired Communication,” IJIRSET, 2018.

[29] P. Gupta et al., “Sign Language Recognition using Flex Sensors and Microcontrollers,” ICCES Conference Proceedings, 2018.

[30] S. N. Verma, “A Low-Cost Prototype for Sign Gesture Identification,” IJCSMC, 2018.