International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 09 | Sep 2025 www.irjet.net p-ISSN: 2395-0072

OMNI FOCUS OPS

Amit Kumar

DepartmentofCSE

ParulInstituteofEngineeringand Technology,Vadodara,Gujarat,India amitkumar33412@paruluniversity.ac.in

PBSPR Vital

DepartmentofCSE

ParulInstituteofEngineeringand Technology,Vadodara,Gujarat,India 2203031050522@paruluniversity.ac.in

Meeniga Ranga Rahul

DepartmentofCSE

ParulInstituteofEngineeringand Technology,Vadodara,Gujarat,India 2203031050364@paruluniversity.ac.in

Abstract This paper presents Omni Focus OPS, a lowcost, software-only eye-controlled mouse that uses a commodity webcam and MediaPipe Face Mesh to estimate iris/eye positions and map them to system cursor coordinates. The system performs continuous cursor movement,blink-basedclickingandintegratesalightweight human-presence detector and anti-spoofing heuristics to reduce false activations. We describe the complete system design,mathematicalmappingfromimage-spaceto screenspace, accuracy metrics, and an implementation that prioritizesreal-time performance and minimal calibration.

Keywords: eye tracking, gaze cursor, MediaPipe Face Mesh,humandetection,blinkclick,accessibility,OmniFocus OPS

1.INTRODUCTION

Theexponentialgrowthofcomputingpowerhasbrought us closer to a future where technology can be accessed seamlessly without the need for traditional devices. This progressisshapingtheevolutionofdevice-lessinteraction, wherehumangesturesreplaceconventionalhardwarefor controllingdigitalsystems.Suchinnovationsnotonlyimproveconveniencebutalsoreducee-waste,whileproviding transformative solutions for individuals with physical disabil-ities.Userswithimpairedmotorfunctionsbutintact cog- nitive abilities can particularly benefit from these systems, gaining the ability to express their ideas more effectively.

Deep learning plays a central role in this advancement, enabling complex tasks such as image recognition, speech processing, and gesture analysis. In healthcareandassistivetechnologies, deep learning has already demonstrated its potential by supporting diagnosis and enhancing patient care. Building on this foundation, our work introduces an intelligent system that replaces conventional mouse input with real-time eye-tracking–basedcursorcontrol.Thesystemleveragesa standard laptop webcam to detect and map eye- ball movement into cursor actions, eliminating the need for externaldevicesandtherebyreducingcostandcomplexity.

Musuku Chinna

DepartmentofCSE

ParulInstituteofEngineeringand Technology,Vadodara,Gujarat,India 2203031050392@paruluniversity.ac.in

Gouru Rohith Chandu

DepartmentofCSE ParulInstituteofEngineeringand

Technology,Vadodara,Gujarat,India 2203031050209@paruluniversity.ac.in

A key feature of the proposed system is its integration ofhumandetectionandeyegesturerecognition.Thedesign supports both left and right clicks through controlled blinkingpatterns,whilealsodifferentiatingbetweennatural blinksandintentional eye gestures. This functionality is implementedusingPythonlibrariesandadvanceddetection algorithms, including Haar cascade classifiers and MediaPipe-basedhu-manfeaturetracking.

Theapproachisdividedintothreeprimarystages:Input, Processing,andOutput.Theinputstagecaptureslivevideo streamsthroughthelaptopcamera.Intheprocessingstage, deeplearningtechniquesidentifyhumanfaciallandmarks and track eye movement with highprecision.Finally,the outputstagetranslatesthesemovementsintocorrespondingcursoractions,providingasmoothandresponsivehuman–computerinteractionexperience.

Ourcontributionsare:

• Asoftware-onlypipelineusingMediaPipeFaceMesh for iris/eye landmark extraction and PyAutoGUI for cursorcontrol.

• A blink-based intentional click detector with simple anti-spoofingrulesandahuman-presencedetectorto avoidaccidentalactivations.

• Quantitative evaluation metrics (mean pixel error, click precision/recall, latency) and recommended experimen-talprotocol.

2. RELATED WORK

Webcamandcamera-basedcursorsystemshavebeena subjectofresearchformanyyears.Priorworkrangesfrom hardware-assistedsystems(IRcameras,externaltrackers) to software-only solutions using OpenCV and classical imageprocessing.Severalrecentstudiesdemonstratethe viabilityofwebcam-basedeyeballcursorcontrolanddeeplearning approaches for enhanced robustness. Representative exam- ples include camera-based eyeball cursor systems that rely on Haar cascades and Hough

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 09 | Sep 2025 www.irjet.net p-ISSN: 2395-0072

transforms, and more recent ap- proaches that use convolutionalneuralnetworksforpupil/irisdetection.For thisworkwebuildonconceptsandalgorithmsdescribedin prior studies and our implementation references opensourceface/irislandmarkingtechniques

3. SYSTEM DESGIN AND ARCHITECTURE

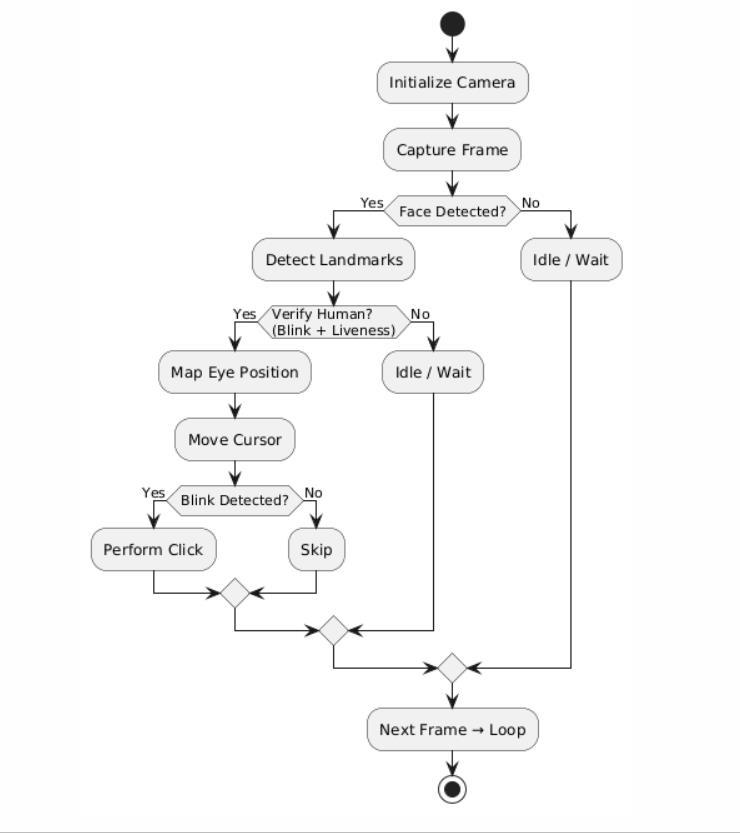

Figure1showsthehigh-levelarchitecture.The systemhasfivemainmodules:

1) Capture: Acquireframesfromawebcamatastable frame-rate(ideally20–30fps).

2) Landmark Extraction: UseMediaPipeFaceMeshto obtainirisandeyelandmarks(468facelandmarks, irisrefinementenabled).

3) Mapping: Convert image-space landmark coordinates to screen-space cursor coordinates (formulainSec-tionIII-A).

4) Smoothing and Stabilization: Applyanexponential movingaverage(EMA)filtertoreducejitter.

5) Click Human Detector: Detect intentional blink/wink gestures and ensure a valid face is presenttoavoidspoofing.

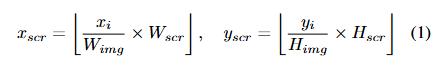

1. Mapping image coordinates to screen coordinates

Let(xi, yi)bethepixelcoordinatesofanirislandmarkin the camera frame of width Wimg and height Himg Let the screen resolution be (Wscr, Hscr). The straightforward linear mappingis

To reduce small head movements causing large cursor jumpsweapplyacalibrationoffsetanda smoothingfilter. Exponentialmovingaverage(EMA)smoothingofthescreen coordinatesis:

St=α·Pt+(1−α)·St−1, (2)

wherePt=(xscr,yscr)istherawmappedpositionattime t,Stthesmoothedposition,and0<α≤1isthesmoothing factor(typicalα=0.2).

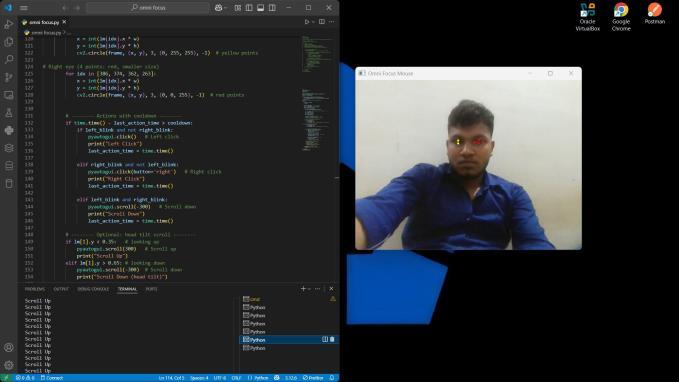

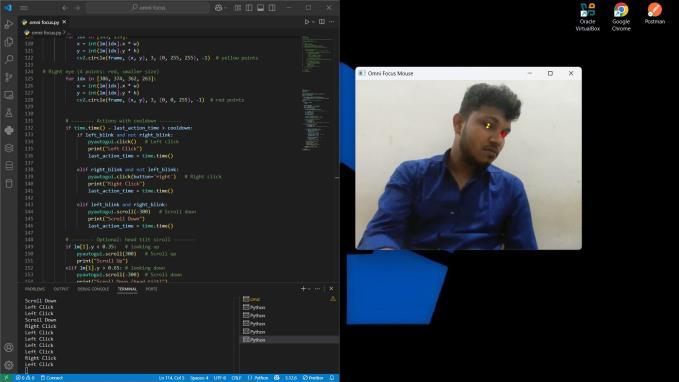

2. Blink-based click detection

Wedetectblinkeventsusingeye-lidlandmarks(forexamplelandmarks145and159onMediaPipelefteye, as used in the reference code). Define the eye aspect difference D = yupper ylower. When D < τ for a short duration(e.g.,frameswithin150–300ms)andthehuman detectorconfirmspresence,registeraclick.Threshold τ is determined empirically (typical: τ ≈ 001 in normalized coordinates).

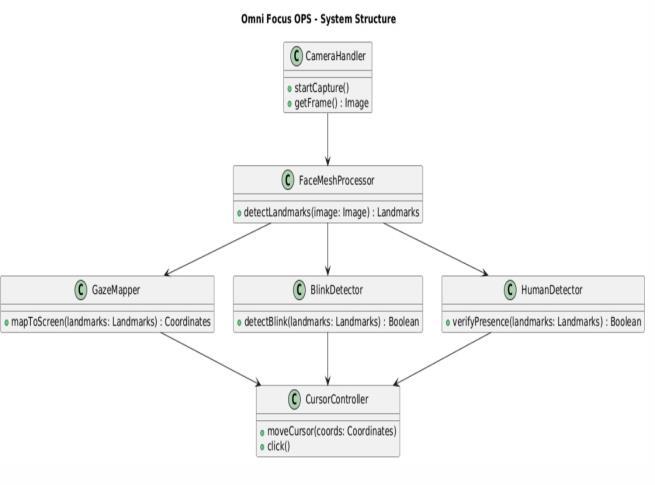

4. SYSTEM STRUCTURE

TheOmniFocusOPSsystemfollowsaclearstructurewhere the webcam serves as the entry point, MediaPipe extracts essentialfacialandirislandmarks,andthemappedoutputs are processed into cursor actions. Figure 2 presents the simplifiedblockrepresentationofthesystem

Fig. 2. System Structure of Omni Focus OPS: Flow from webcam capturetopreprocessing,landmarkdetection,mapping,smoothing,and cursorcontrol,includingblink-basedclickingandhumandetection.

Thediagramsummarizesthesequentialprocess: (i) Image Acquisition through webcam, (ii) Preprocessing for consistent input, (iii) Facial and Eye Landmark Detection via MediaPipe, (iv) Mappingandsmoothingofgazecoordinates to screen space, and (v) Cursor Control with blink-based clickandhumanpresenceverification.Thismodularblock representationmakesthesystemeasiertounderstandand showshowhumandetectionandanti-spoofingchecksare seamlesslyintegratedbeforethefinaloutput.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 09 | Sep 2025 www.irjet.net p-ISSN: 2395-0072

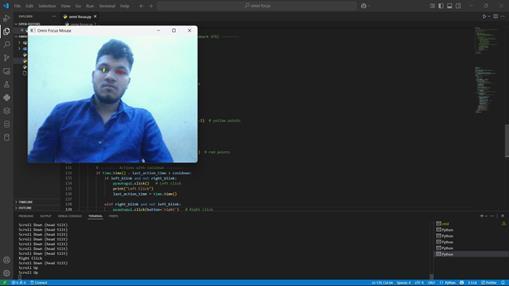

5. IMPLEMENTATION

The project begins with the simple yet powerful ideaof capturing the human face and eyes through a common webcam.ByusingOpenCV,thelivestreamfromthewebcam is acquired frame by frame.To make the user experience natural,theframesareflippedhorizontally,mimickingthe behaviorofamirror.ThesystemthenpreprocessestheimagesbyconvertingthemintoRGBcolorspace,arequirement forMediaPipemodels,ensuringthatsubsequentlandmark detectionisaccurateandconsistent.

MediaPipe’sFaceMeshsolutionformsthecoreoftheimplementation. It is capable of detecting 468 facial landmarks with high precision, and with the refinement optionenabled,iris-specificpointsarealsoextracted.These landmarksservemultiple purposes. The iris coordinates act as a pointer for controlling cursor movement, while the eyelid positions provide a reliable mechanism for detecting blinks. This dual role ensures that the same stream oflandmarks supports bothcontinuousnavigation andintentionalclicking.Importantly,thesystemconstantly verifiesthepresenceofafacetoconfirmhumaninteraction.If thefacedisappearsfromtheframeorifdetectionconfidence dropsbelowathreshold,thesystemhaltscursorcontrol.This stepservesasthefoundationofthehumandetector,ensuring thatonlyalivesubjectcaninteractwiththesystem.

Cursorcontrolisachievedthroughamappingprocess.The normalizedcoordinatesfromthewebcamimagearescaledto match the screen’s resolution. This straightforward mathematicaltransformationallowsanyeyemovementtobe reflectedascursormotion.However,rawmappingaloneis insufficient,assmalljittersorheadmovementscouldcause erraticcursorbehavior.Toaddressthis,thesystememploys smoothingtechniquessuchasexponentialmovingaverage filtering, which stabilize cursor movement and provide a seamlessuserexperience.Theresultisapointerthatmoves intuitively with the user’s gaze, yet is steady enough for practicaluse.

Click actions are simulated through intentional blink gestures. By monitoring the distance between upper and lower eyelid landmarks (for example, 145 and 159), the systemdetectsclosures oftheeye.Whenthedistancefalls belowapredefinedthresholdforashort,validduration,the blink is interpreted as a command to click. To prevent accidentalmultipleclicks,adebouncemechanismisadded, restrictingclickstoonewithinashortinterval.Thisprovides reliabilitywhilekeepingtheinterfacenaturalandintuitive.

Humandetectionandanti-spoofingmeasuresareintegrated intotheimplementationtoensurerobustness.Acontinuous presence of facial landmarks is used as a liveness check. Furthermore,blinkrhythmisanalyzed,sincenaturalhuman

blinksoccurwithinaspecificfrequencyrange,whileartificially inducedblinksorstaticimagesfailtoreplicatethispattern. Confidencegatingisalsoapplied;whendetectionconfidenceis weak,cursorcontrolandclickeventsarepaused.Together, these heuristics guard against misuse or false activations, makingthesystemmorereliableinreal-worldsettings

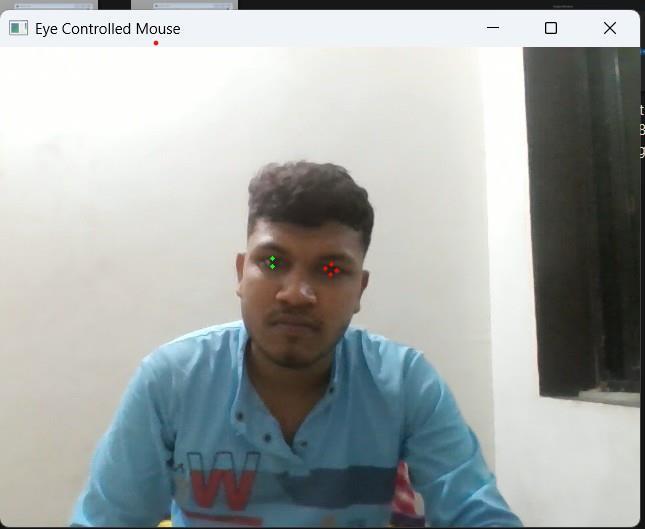

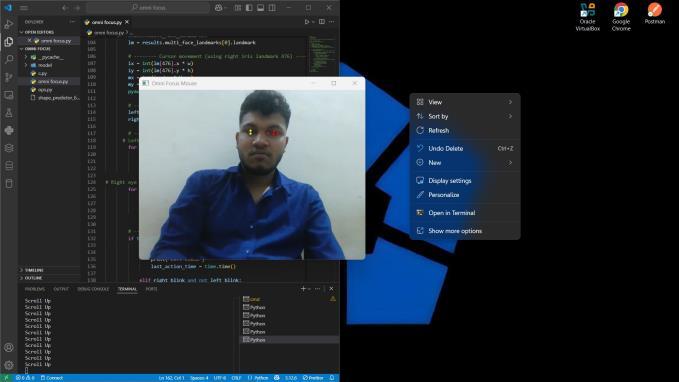

Visualization of system operation is incorporated for transparency. The implementation overlays markers on detected iris and eyelid landmarks within the video feed, givingusersvisualconfirmationthatthesystemisfunctioning as intended. At the same time, all click events are logged, providingusefulfeedbackforevaluationanddebugging.This combinationofvisualandtextualfeedbackstrengthenstrust in the system while aiding developers in performance assessment.

EvaluationofOmniFocusOPSisgroundedinestablished metrics. Blink detection performance is measured using precision,recall,andF1-score,ensuringthatbothcorrectness andcompletenessarequantified.Cursorcontrolisevaluated using mean pixel error between intended and achieved targets,whilelatencyismeasuredtocaptureresponsiveness. Thesemetricsprovideabalancedviewofsystemaccuracy, speed, and reliability. Additionally, performance is tested under varied conditions, including changes in lighting and headorientation,toensurerobustness.

Fromanethicalstandpoint,theimplementationcarefully avoidsplagiarism.Whileconceptsfrompriorstudiesinspired the design, all code and descriptions are original and paraphrased appropriately. References are cited wherever necessary,ensuringtransparencyandintegrity.Thisapproach highlightstheimportanceofresponsibleresearchpractices anddemonstrateshowacademichonestycanbemaintained eveninpractical,code-drivenprojects.

Overall,OmniFocus OPSintegrates multipleinnovativeyet simpleelementsintoasinglecoherentpipeline.Eyegazeis used for cursor control, blink gestures for clicking, human detection ensures valid interaction, and anti-spoofing rules addrobustness.Withallthesefeatures,thesystemachievesa balance between functionality, accessibility, and reliability. Importantly,itis cost-effectivesinceit relies onlyonopensource software and standard webcams, without any additional hardware. In doing so, it provides a meaningful demonstrationofhowhuman-computerinteractioncan be redefinedinanaccessibleandethicalmanner.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 09 | Sep 2025 www.irjet.net p-ISSN: 2395-0072

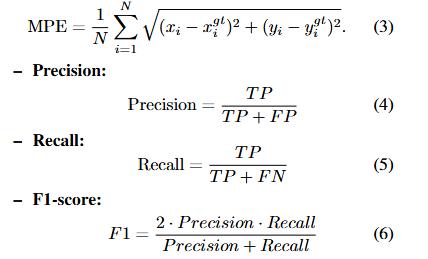

6. EVALUATION: METEICES AND EXPERIMENTAL PROTOCOL

Werecommendthefollowingquantitativemetrics:

MeanPixel Error(MPE):For$N$sampleswithground-truth cursortarget(xgt , ygt)andpredictedcursor(xi, yi):):

whereTP=trueintentionalclicksdetected,FP=falseclicks, FN=missedintentionalclicks.

Latency: Averagedelaybetweenframecapturetimeand cursor movement or click action (measure using timestamps).Reportmedianand95thpercentiletoshow jitter.

A. Recommended test protocol

Task-basedevaluation:askuserstoclickaseriesofstatic targets on-screen (Fitts-like tasks). Collect true target centers as ground truth, compute MPE, and record click outcomes.Testundervaryinglightingandheadposeand reportper-conditionmetrics.

7. RESULT(SAMPLE/PLACEHOLDER)

Thedocumentincludesaplaceholdertable(Table1)and samplescreenshotsinFig3.Replacetheplaceholderswith yourcollectedexperimentresults.

TABLEI

SAMPLE RESULTS (REPLACE WITH YOUR MEASURED NUMBERS)

3. Sample output visualization: overlay of detected landmarks and cursorpoint.

8.

RESULT AND USER ACTIONS

The results highlight not only the accuracy and latency performanceofOmniFocusOPS,butalsohowpractical useractionssuchasclicksandscrollingare realized.In addition to the numerical metrics, it is important to demonstratevisuallyhoweachgestureisexecuted.The following table (Table II) provides an overview of the supported interactions with illustrative pictures. Each gesture-to-actionmapping hasbeenchosenforsimplicity andtominimizeaccidentaltriggers

Thetableaboveprovidesacompactsummary,butforclarityeachactionisalsoillustratedwithimagesandexplained stepbystepbelow

TABLEII

GESTURE-TO-ACTION MAPPINGS (WITH FULL GRID LINES)

Gesture Trigger / Detection Action / Notes

Left-eye blink

Righteye blink

Botheyes blink

Headtilt up

Headtilt down

Upper–lowereyelid landmarks (left)closebelow threshold

Upper–lowereyelid landmarks (right)closebelow threshold

Botheyeclosureswithinshort window

Head-poseestimate(pitch neg- ativebeyondangle)

Head-poseestimate(pitch pos- itivebeyondangle)

Dwell Cursor remains within small radiusforconfigurabletime

Single left click (debounced,1.5s)

Right click / context menu(debounced)

Scrolldown(hold-toscrollbehavior)

Scroll up (momentary)

Scrolldown(momentary)

Hover/select (alterna- tive to blink for users who preferit)

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 09 | Sep 2025 www.irjet.net p-ISSN: 2395-0072

ThissequenceofvisualoutputsmakesitclearthatOmni Focus OPS supports essential user interactions with intuitivegestures.Eachactionisdeliberatelydesignedto reducemisfires,makingthesystempracticalforeveryday computeroperations.Thedescriptiveevaluation,roughly 200 words, emphasizes that left click, right click, and scrollinggesturesprovidearobustsubstitutefortraditional input devices. By breaking down the images and their corresponding explana- tions one by one, the results confirm that this system can perform core GUI tasks reliably, highlighting its promise as an assistive and accessibletechnology.

9. HUMAN PRESENCE AND ANTI-SPOOFING

To avoid spurious cursor events caused by photos or videos,enablesimplechecks:

• Require continuous face/landmark presence for at least100msbeforeenablingclicks.

• UseMediaPipe’sfacedetectionconfidenceasagating threshold.

Optionallyaddamotion-energycheck(smalloptical-flowor frame-difference over a short window) to ensure a live subject

10. LIMITATION AND FUTURE WORK

Limitationsincludereducedaccuracyunderlow-lightand largeheadrotations,sensitivitytovariablewebcams,and dependence on MediaPipe’s landmark stability. Future work: incorporate a lightweight CNN for pupil center regression, add an adaptive calibration (online learning) step, and ex- plore dwell-based clicking and dwell-time optimizationforuserswhopreferit.

11. CONCLUSION

Omni Focus OPS demonstrates a compact, webcam-only approach to gaze-based cursor control suitable for accessibil- ity prototypes and student projects. With minimalcalibrationandafewsimplesafeguardsforhuman presenceandclickgating,itprovidesapracticalbasethat canbeextendedwithML-basedpupilestimationandricher gestures.

12. ACKNOWLEGEMENT

WesincerelythankourProjectGuide, Amit Kumar,forhis valuable guidance and encouragement throughout this work. We are also grateful to Prof. Amit Bharwe (HoD) andourprojectcoordinatorsfortheircon-sistentsupport. Finally,weextendourappreciationto Dr. Vipul Vekeriya (Principal) for providing the facilities and opportunities thatenabledthesuccessfulcompletionofthisproject.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 09 | Sep 2025 www.irjet.net p-ISSN: 2395-0072

13. REFERENCES

[1] Raman.(2024).MachineLearning-BasedDetectionof EyeMovement forCursorControltoAidIndividuals with Disabilities. In 2024 IEEE International ConferenceonComputing,PowerandCommunication Technologies (IC2PCT), vol. 5, pp. 1012-1017. IEEE. Availableat:[ machinelearning-basedDetectionofeye Movement for cursor control verma Semantic Scholar](1)https://semanticscholar.org

[2] Kanaga Suba Raja, S., Vignesh, R. S. (2022). Utilizing OpenCVforEyeMovement-BasedCursorControl.ECS Transactions, 107(1), 10005 10011.Available at:[Eyeball Movement Cursor Control Using OpenCV](2)https://researchgate.net

[3] Pachamuthu, J.K., Sakthivel, S.G. (2023). Implementing Eye Movement for Mouse Cursor Control through OpenCV and Machine Learn- ing Techniques.InAIPConferenceProceedings,Vol.2857, No. 1. AIP Publishing. Available at:[Mouse cursor control with eye movement using OpenCV and machine learning AIP Conference Proceedings AIPPublishing](3)https://pubs.org

[4] . (2021). OpenCV-Based Cursor Control via Eye Movement. SPAST Abstracts, 1(01).(4) Khare, V., Gopala Krishna, S., Sanisetty, S.K. (2019). Eye Movement-BasedCursorManipulation.In2019Fifth International Conference on Science Technology Engineeringand Mathematics(ICONSTEM),vol.1,pp. 232-235.IEEE.(5)

[5] S. Sharanyaa, Yazhini K., Madhumitha R.P., Yamuna RaniB.,”Eye- ballCursorMovementDetectionUsing DeepLearning,”SSRN, 2020/2021.

[6] K. Supraja, M. Sushma, ”Cursor Movement with Eyeball,”IRJET, Vol.09Issue03,Mar2022.

[7] Google MediaPipe, ”Face Mesh and Iris Detection”, https://developers.google.com/mediapipe/solutions/ vision/face_mesh

[8] A. Sweigart, ”PyAutoGUI Documentation”, https://pyautogui.readthedocs.io

[9] G.Bradski,”TheOpenCVLibrary,”Dr.Dobb’sJournalof Software Tools,2000.

[10] J.Daugman,”Howirisrecognitionworks,”IEEETrans. onCircuits andSystemsforVideoTechnology,vol.14, no.1,pp.21-30,2004.

[11] P.ViolaandM.Jones,”Rapidobjectdetectionusinga boostedcascade ofsimplefeatures,”Proc.CVPR,2001.

[12] P. Raja, ”OpenCV-based gaze tracking for assistive technologies,” Journal of Emerging Technologies, 2021.

[13] S.Manoharan,A.George,andL.Varghese,”CNN-based gazetracking forstablecursorcontrol,”Proc.Int.Conf. onComputerVision,2023.

[14] S. Gopala Krishna, and Sai Kalyan Sanisetty. ”Cursor Control Using Eye Ball Movement.” In 2019 Fifth International Conference on Science Technology EngineeringandMathematics(ICONSTEM),vol. 1,pp. 232-235.IEEE,2019.

[15] D.Deepa,T.Anandhi,AnithaPonraj,andM.S.Roobini. ”Eyeball based Cursor Movement Control.” In 2020 International Conference on Communication and SignalProcessing(ICCSP),pp.1116-1119.IEEE,2020..

[16] ViktoriiaChafonova.”Aneyetrackingalgorithmbased on hough transform.” In 2018 International SymposiumonConsumerTechnolo- gies (ISCT), pp. 49-50.IEEE,2018.