International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 11 | Nov 2025 www.irjet.net p-ISSN: 2395-0072

Sangayan Uke, Atharv Timane, Utkarsh Pawar, Urvendrasingh Sisodia, Nikhil Thorat, Suraj Ugale

1 Department of Information Technology, Vishwakarma Institute of Technology (VIT), 666 Upper Indiranagar, Bibwewadi, Pune, Maharashtra, India – 411037

2 Department of Information Technology, Vishwakarma Institute of Technology (VIT), 666 Upper Indiranagar, Bibwewadi, Pune, Maharashtra, India – 411037

3 Department of Information Technology, Vishwakarma Institute of Technology (VIT), 666 Upper Indiranagar, Bibwewadi, Pune, Maharashtra, India – 411037

4 Department of Information Technology, Vishwakarma Institute of Technology (VIT), 666 Upper Indiranagar, Bibwewadi, Pune, Maharashtra, India – 411037

5 Department of Information Technology, Vishwakarma Institute of Technology (VIT), 666 Upper Indiranagar, Bibwewadi, Pune, Maharashtra, India – 411037

6 Department of Information Technology, Vishwakarma Institute of Technology (VIT), 666 Upper Indiranagar, Bibwewadi, Pune, Maharashtra, India – 411037

Abstract - In a world where we're constantly plugged into devices and notifications, it's easy to lose sight of our inner selves. While we stay connected to the outside world 24/7, many of us silently struggle with emotional disconnection, often feeling drained, isolated, or unheard. That is why Moody was created: an AI-powered website built to help you pause, check in with yourself, and feel a little lighter. Moody bridges the gap between technology and emotional well-being. It combines real-time heart rate monitoring, Natural Language Processing (NLP), and Python libraries to sense how you're feeling. Whether you're battling stress, feeling low, or just need a mental boost, Moody offers supportive prompts, soothing messages, and motivational nudges tailored to you. Moody is also built around respect for your privacy. All of your data, emotional and physiological, is encrypted. Nothing is sent to external servers, so you can speak your mind freely, knowing your personal space is protected. Moody is more than just a chatbot. It's a comforting presence, a listening ear, and a quiet reminder that your feelings matter. With Moody, emotional care meets thoughtful technology right when you need it most.

Key Words: AI (Artificial Intelligence), NLP (Natural Language Processing), Arduino, TTS (Text-to-Speech), CNN (Convolutional Neural Networks)

With growing awareness about mental health and emotional well-being, many people are now looking for ways to feel supported, especially when professional help isn’t always available. In this project, we introduce “Moody” – a smart virtual assistant designed to understand how you’re feeling, talk to you like a friend, and help you cope when you’re stressed or feeling low.

Moody uses a mix of technologies like speech recognition, natural language processing (NLP), and machine learning to detect emotions from your voice and words. It's built using an emotion detection model based on the DistilRoBERTa transformer, and it also talks back using text-to-speech, making the interaction feel natural. All conversations are saved securely in an encrypted local database, so it respects your privacy. One of the most useful features of Moody is the heart rate sensor. By checking your pulse in real time, Moody can tell if you're feeling anxious or overwhelmed even if you're not saying it out loud. If it notices a high heart rate and negative emotions like sadness or anger, it gently suggests calming activities like breathing exercises or offers comforting responses. These small actions can make a big difference when someone is having a tough moment. The system also includes an Arduino setup that responds physically using things like LEDs, buzzers, and vibration motors. For example, a red LED might light up when you're angry, or a soft vibration might guide you through a breathing exercise. This kind of feedback makes Moody more interactive and helpful, especially for users who prefer visual or physical cues. Overall, our goal with Moody is to show how combining AI and simple hardware can create a low-cost, accessible emotional support tool. As first-year students, this project helped us learn how to apply what we've studied in a real-world way that could actually help people. In this paper, we'll explain how we built it, how it works, and why it could be useful for anyone needing a little extra support in their day.

Emotion-sensing technologies have come under the spotlight in recent years, especially for improving human-computer interaction using natural language processing. Researchers have investigated a wide range of methods and data types (modalities) to detect emotions and produce appropriate, empathetic replies. This section summarizes recent research that has advanced emotion recognition systems as well as emotionally intelligent chatbots. Hu et al. (2023) created a voice-controlled conversational agent that can detect emotions based on speech features like pitch, loudness, and Mel-frequency cepstral coefficients (MFCCs) [6]. Their system illustrated that processing these vocal signals can make chatbots much more emotionally intelligent and responsive. The study highlights the promise of combining diverse data types, termed multimodal emotion recognition, towards more humanlike interaction. Along the same lines, the EMMA bot was developed to enhance user well-being by identifying emotional states and reacting supportively [4]. EMMA employs self-reported affect and passive data collection to adapt conversation and provide suitable interventions. This research demonstrates the utility of not just sensing emotions but also reacting in ways that encourage users' mental and emotional well-being. Another significant contribution is a music recommendation system that tracks physiological signals via wearable devices to make inferences about emotions [5]. While not a chatbot, this work implies that real-time physical measures can enable systems to adapt their responses to users' emotional states, paving the way for future emotion-aware chatbots that take verbal and physiological inputs into account.

In content personalization, a study used deep learning to create a movie recommendation system that infers users' emotions from written feedback [3]. Methods such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) were applied to examine emotional content within text, demonstrating how deep learning can capture emotional context from language. Furthermore, affect-aware recommendation systems have been explored for text-based personalization [2]. By analyzing user-authored text with emotional dictionaries and sentiment analysis, these systems enhance the relevance of content. These approaches can be extended to chatbots so that they can interpret and respond to user sentiment in dialogues. Lastly, a chatbot for emotion recognition and helpful response recommendation was proposed based on natural language processing and rule-based reasoning [1]. It infers the user's emotional state from text and provides advice or solutions suited to that mood, showing a real-world solution for emotion-sensitive conversation. Together, these research efforts provide significant guidance for developing an emotion-detecting chatbot. They investigate both single-mode (speech or text) and multi-mode (e.g., text and physiological data) emotion detection and demonstrate how such systems can respond to the user differently depending on their emotional state. With the ongoing interest in empathetic AI, these advancements bring into focus the need for systems that can actually understand and assist users, not simply process what they utter.

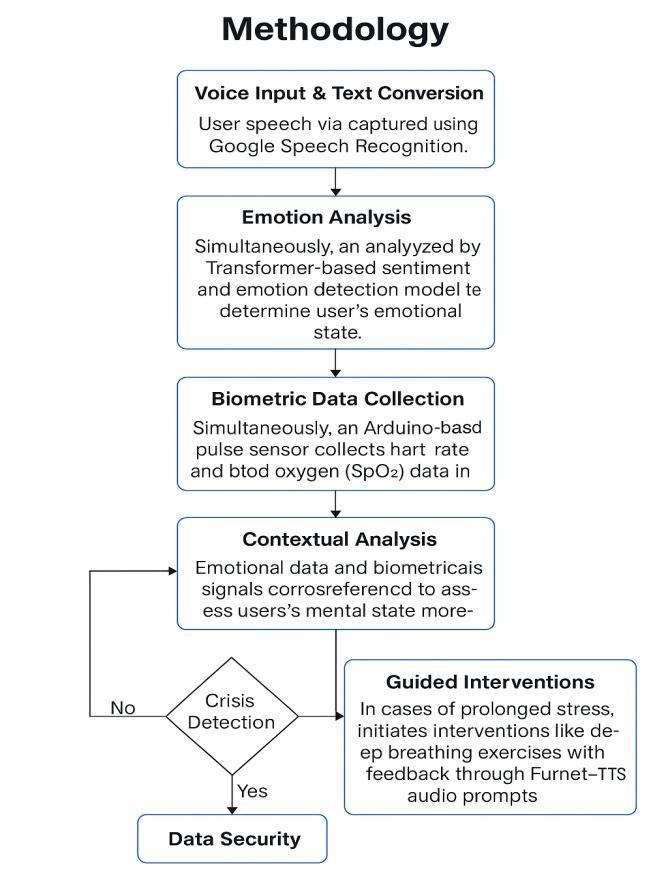

The proposed system, Moody, is a multimodal AI-based emotional companion that integrates Natural Language Processing (NLP) and biometric sensing to identify and respond to a user's emotional state in real time. The overall architecture comprises three major modules: input acquisition, emotion analysis, and feedback generation through Arduino-based components.

The process begins with the user's voice input, which is captured via a microphone and converted into text using the Google Speech Recognition API. This transcribed text is then analysed using a Transformer-based sentiment analysis model (DistilRoBERTa) to classify the user's emotional tone, such as sadness, anger, happiness, or neutrality. In parallel, a pulse sensor connected to an Arduino board continuously records the user's heart rate and oxygen saturation (SpO₂) levels. These physiological parameters are processed in real time, and any significant deviation, such as a heart rate exceeding 100 bpm, may indicate anxiety, stress, or emotional arousal.
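The heart-rate check described above can be sketched as follows. This is a minimal illustration, not the authors' code: the serial line format `BPM:<int>,SPO2:<int>` and the helper names `parse_reading` and `is_elevated` are assumptions made for the example; only the 100 bpm threshold comes from the text. In a live setup the lines would presumably be read from the Arduino's serial port (for instance with pySerial's `readline()`).

```python
from typing import Optional, Tuple

HIGH_BPM_THRESHOLD = 100  # threshold quoted in the text


def parse_reading(line: str) -> Optional[Tuple[int, int]]:
    """Parse one line of the ASSUMED form 'BPM:<int>,SPO2:<int>'.

    Returns (bpm, spo2), or None when the line is malformed or partial.
    """
    try:
        fields = dict(part.split(":", 1) for part in line.strip().split(","))
        return int(fields["BPM"]), int(fields["SPO2"])
    except (KeyError, ValueError):
        return None  # skip lines that do not match the assumed format


def is_elevated(bpm: int, threshold: int = HIGH_BPM_THRESHOLD) -> bool:
    """True when the heart rate exceeds the configured threshold."""
    return bpm > threshold


if __name__ == "__main__":
    for raw in ["BPM:72,SPO2:98\n", "BPM:104,SPO2:97\n", "noise"]:
        reading = parse_reading(raw)
        if reading is not None:
            bpm, spo2 = reading
            print(bpm, spo2, "elevated" if is_elevated(bpm) else "normal")
```

Returning `None` for malformed lines keeps the monitoring loop running when the serial stream delivers a partial line, which is common right after the port is opened.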

Both textual and physiological data streams are fused together to achieve a more accurate emotional assessment. This multimodal fusion enhances reliability compared to traditional text-only emotion detection systems. Once the emotion is identified, Moody generates an appropriate, empathetic textual response. The system also uses Text-to-Speech (TTS) technology to speak the response aloud, ensuring a natural and engaging conversation experience.
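One simple way to fuse the two streams is a rule-based late fusion, sketched below. The paper does not specify the fusion rule, so the rule here (flag the user for support when a negative text emotion coincides with an elevated heart rate), the `Assessment` type, the `fuse` function, and the `min_score` cut-off are all assumptions for illustration.

```python
from dataclasses import dataclass

# Emotions treated as negative in this sketch (an assumption).
NEGATIVE_EMOTIONS = {"sadness", "anger", "fear"}


@dataclass
class Assessment:
    emotion: str         # label from the text classifier
    confidence: float    # classifier score for that label
    aroused: bool        # heart rate above threshold
    needs_support: bool  # negative emotion AND physiological arousal


def fuse(text_emotion: str, text_score: float, bpm: int,
         bpm_threshold: int = 100, min_score: float = 0.5) -> Assessment:
    """Combine a text emotion prediction with a heart-rate reading."""
    aroused = bpm > bpm_threshold
    negative = text_emotion in NEGATIVE_EMOTIONS and text_score >= min_score
    return Assessment(text_emotion, text_score, aroused, negative and aroused)


if __name__ == "__main__":
    print(fuse("anger", 0.91, bpm=112))
    print(fuse("anger", 0.91, bpm=78))
```

Keeping the classifier label and the arousal flag as separate fields lets the response generator distinguish, for example, calm sadness from agitated sadness.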

Arduino components play an essential role in providing physical and visual feedback to the user. The system employs LEDs, buzzers, and vibration motors to reflect or regulate emotional states. For instance, a red LED may light up when anger is detected, a blue LED may glow during sadness, or a soft vibration may guide the user through breathing exercises during stress. This physical feedback creates a more immersive and interactive user experience.
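The mapping from detected state to physical feedback could be expressed on the host side as a small lookup table. The single-byte command codes below are a hypothetical protocol invented for this sketch; the Arduino firmware would need a matching handler, and the bytes would be written to the serial port (for example with pySerial's `Serial.write`).

```python
# Hypothetical one-byte commands understood by the Arduino sketch.
FEEDBACK_COMMANDS = {
    "anger": b"R",    # red LED, as in the example above
    "sadness": b"B",  # blue LED
    "stress": b"V",   # vibration motor pacing a breathing exercise
}

ALL_OFF = b"0"  # assumed command that switches every output off


def feedback_command(state: str) -> bytes:
    """Return the command byte for a detected state (ALL_OFF if unmapped)."""
    return FEEDBACK_COMMANDS.get(state, ALL_OFF)


if __name__ == "__main__":
    for state in ("anger", "sadness", "stress", "happiness"):
        print(state, feedback_command(state))
```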

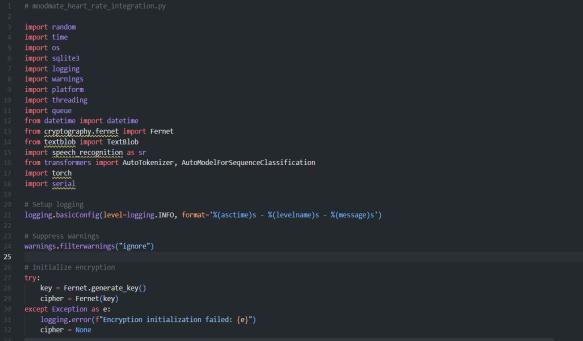

To maintain data privacy, all user interactions, including transcribed text, detected emotions, and biometric data, are encrypted using Fernet symmetric encryption before being stored locally in an SQLite database. No information is transmitted to external servers, thereby ensuring data confidentiality and user trust.
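The encrypt-then-store step can be sketched with the `cryptography` package's Fernet class and Python's built-in `sqlite3` module. The table name `interactions`, the JSON record layout, and the helper names are assumptions for this example; key storage is out of scope here, and a real deployment would need to keep the key somewhere safer than next to the database.

```python
import json
import sqlite3

from cryptography.fernet import Fernet


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the local log; ':memory:' is used for the demo."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS interactions ("
        "id INTEGER PRIMARY KEY, payload BLOB NOT NULL)"
    )
    return conn


def log_interaction(conn: sqlite3.Connection, fernet: Fernet,
                    text: str, emotion: str, bpm: int) -> int:
    """Encrypt one interaction record and store only the ciphertext."""
    record = json.dumps({"text": text, "emotion": emotion, "bpm": bpm})
    token = fernet.encrypt(record.encode("utf-8"))
    cur = conn.execute("INSERT INTO interactions (payload) VALUES (?)", (token,))
    conn.commit()
    return cur.lastrowid


def read_interaction(conn: sqlite3.Connection, fernet: Fernet, row_id: int) -> dict:
    """Fetch and decrypt a stored record."""
    (token,) = conn.execute(
        "SELECT payload FROM interactions WHERE id = ?", (row_id,)
    ).fetchone()
    return json.loads(fernet.decrypt(token))


if __name__ == "__main__":
    fernet = Fernet(Fernet.generate_key())
    conn = open_log()
    row_id = log_interaction(conn, fernet, "I feel low today", "sadness", 96)
    print(read_interaction(conn, fernet, row_id))
```

Encrypting the whole record as one blob means the database file reveals neither the transcript nor the detected emotion, at the cost of not being able to query by emotion without decrypting.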

Additionally, the system incorporates a crisis detection mechanism that continuously monitors for distressing or self-harm-related language patterns such as “I want to end it all” or “I don’t want to live.” When such phrases appear in combination with elevated heart rate readings, the system automatically triggers a safety protocol. It provides calming voice responses, motivational messages, and emergency helpline information to support the user during critical moments.
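A minimal sketch of this trigger is given below. The two phrases are taken from the text; the regular expressions, function names, and the 100 bpm default are assumptions, and a production phrase list would need to be far broader and clinically reviewed.

```python
import re

# Phrase patterns based on the two examples in the text (illustrative only).
CRISIS_PATTERNS = [
    re.compile(pattern, re.IGNORECASE)
    for pattern in (r"\bend it all\b", r"\bdon['’]?t want to live\b")
]


def contains_crisis_language(text: str) -> bool:
    """True when any crisis phrase occurs in the transcribed text."""
    return any(p.search(text) for p in CRISIS_PATTERNS)


def should_trigger_safety_protocol(text: str, bpm: int,
                                   bpm_threshold: int = 100) -> bool:
    """Trigger when crisis language coincides with an elevated heart rate."""
    return contains_crisis_language(text) and bpm > bpm_threshold


if __name__ == "__main__":
    print(should_trigger_safety_protocol("I want to end it all", bpm=112))
    print(should_trigger_safety_protocol("I want to end it all", bpm=80))
```

The sketch follows the combined condition stated above. Exposing `contains_crisis_language` separately leaves room for a variant that responds to crisis language alone, since a resting heart rate does not rule out distress.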

When prolonged negative emotional patterns or stress markers are detected, Moody activates guided interventions such as deep breathing instructions, mindfulness exercises, and ambient light adjustments. These interventions aim to provide immediate emotional support and help the user regain calmness. While not a substitute for professional therapy, this feature demonstrates how AI-driven empathetic interaction can assist users in moments of distress.
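"Prolonged" is not quantified in the text, so the sketch below assumes one plausible reading: an intervention starts when at least `min_negative` of the last `window` detected emotions are negative. The class name, the window size, and the set of negative labels are all hypothetical.

```python
from collections import deque

NEGATIVE_EMOTIONS = {"sadness", "anger", "fear"}  # assumed label set


class InterventionMonitor:
    """Sliding-window check for sustained negative emotion."""

    def __init__(self, window: int = 5, min_negative: int = 4) -> None:
        self.recent = deque(maxlen=window)  # oldest entries drop off automatically
        self.min_negative = min_negative

    def update(self, emotion: str) -> bool:
        """Record the latest emotion; return True if an intervention is due."""
        self.recent.append(emotion in NEGATIVE_EMOTIONS)
        return sum(self.recent) >= self.min_negative


if __name__ == "__main__":
    monitor = InterventionMonitor()
    for label in ["sadness", "anger", "neutral", "sadness", "fear"]:
        print(label, monitor.update(label))
```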

Real-time heart rate data is collected through a pulse sensor connected to an Arduino. The raw data undergoes temporal analysis within the system to locate spikes. Thresholds (e.g., >100 bpm) can also be set for different grades of physical stress. This data is then synchronized with the emotion analysis to create a more comprehensive user profile [3].

C) Crisis detection - The system detects the use of crisis language ("end it all", "don’t want to live") and increased heart rate, prompting immediate support responses. This safety mechanism draws inspiration from emotion-aware conversational agents with crisis-response capability [1].

D) Guided Intervention - Adaptation is managed entirely by Moody. If it detects prolonged negative sentiment or stress markers in the biometric data, it initiates guided interventions. These include breathing exercises and audiovisual aids, with TTS used to lead the intervention. This is consistent with practices used in emotional support bots like EMMA [4].

E) Encryption and Secure Logging - All user interaction data, including voice transcripts and detected emotions, is encrypted with Fernet (symmetric encryption) before being stored in the local SQLite database. This protects user data and privacy, a must for any application that handles personal or social information. This approach follows the security protocols recommended in affect-sensitive system design [2].

Figure 3. Flowchart of the proposed methodology

4.Results and discussions

The performance of Moody was evaluated through controlled experiments designed to measure emotion-recognition accuracy and system responsiveness. Two primary components were analysed: (1) the Natural Language Processing (NLP) model for text- and voice-based emotion detection, and (2) the Arduino-based biometric module for real-time physiological monitoring.

A. Experimental Setup

Ten volunteer participants interacted with Moody over multiple sessions. Each subject expressed short utterances representing the five primary emotional states (happiness, sadness, anger, fear, and neutrality) both verbally and textually. Simultaneously, heart-rate data were captured via an Arduino pulse sensor, while the DistilRoBERTa transformer classified the corresponding textual emotions. Ground-truth labels were manually assigned according to the participants' intended expressions.
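Given ground-truth labels assigned in this way, per-emotion precision and recall can be computed by comparing them with the classifier's predictions. The sketch below shows the calculation only; the function name is hypothetical, and the label lists in the demo are toy values made up to exercise the code, not data from the study.

```python
from collections import Counter
from typing import Dict, List, Tuple


def per_class_precision_recall(
    y_true: List[str], y_pred: List[str]
) -> Dict[str, Tuple[float, float]]:
    """Return {label: (precision, recall)} for every label seen."""
    true_pos, pred_count, true_count = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        true_count[truth] += 1
        pred_count[pred] += 1
        if truth == pred:
            true_pos[truth] += 1

    scores = {}
    for label in sorted(set(true_count) | set(pred_count)):
        # Guard against division by zero for labels never predicted or never true.
        precision = true_pos[label] / pred_count[label] if pred_count[label] else 0.0
        recall = true_pos[label] / true_count[label] if true_count[label] else 0.0
        scores[label] = (precision, recall)
    return scores


if __name__ == "__main__":
    # Toy labels for illustration only (NOT results from the experiments).
    truth = ["happiness", "fear", "fear", "anger"]
    preds = ["happiness", "fear", "sadness", "anger"]
    for label, (p, r) in per_class_precision_recall(truth, preds).items():
        print(f"{label}: precision={p:.2f} recall={r:.2f}")
```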

B. Emotion-Recognition Performance

The transformer-based model demonstrated satisfactory recognition accuracy across all categories. Quantitative metrics are summarized in the following table.

Table -1: Performance Metrics for Emotion Detection

The model achieved the highest accuracy for distinct emotional expressions such as happiness and neutrality, whereas emotions with subtler linguistic markers, notably fear, exhibited comparatively lower precision. These outcomes align with prior research on transformer-based affective modelling.

C. Biometric Integration and System Latency

The Arduino pulse sensor yielded an average deviation of ±3 bpm when benchmarked against a commercial fitness tracker, indicating acceptable precision for physiological correlation. End-to-end latency from audio input to spoken feedback averaged approximately 1.8 s, ensuring near-real-time interaction suitable for conversational applications.

D. User Evaluation

A post-session survey revealed that 90 % of participants perceived Moody’s responses as empathetic and contextually appropriate. Users identified the dynamic LED lighting and voice modulation as the most effective feedback features. Minor performance degradation was observed under high ambient-noise conditions, occasionally affecting speech recognition accuracy.

Discussion

The obtained results indicate that Moody can identify emotional states and deliver responsive, multimodal feedback using lightweight hardware and open-source NLP frameworks. The integration of textual sentiment with biometric indicators improved robustness, particularly in ambiguous cases. Future work will include large-scale data collection, fine-tuning of transformer parameters, and incorporation of additional biosignals to further enhance emotional inference and personalization.

5.FUTURE SCOPE

The emotional support chatbot developed in this project has significant potential for enhancement and broader application. The following future scope outlines areas for technical growth, social impact, and research contribution:

1. Multimodal Emotion Detection:

While the current implementation relies on textual and speech-based emotion recognition, future versions can integrate multimodal analysis using facial expression detection via a camera and physiological signals (e.g., heart rate variability, galvanic skin response) for more accurate mood detection.

2. Language Expansion and NLP Improvements:

In the future, the chatbot can support languages such as Hindi, Marathi, Spanish, French, and German. Future iterations can include real-time language detection, regional dialect support, and improved contextual translation accuracy using transformer-based multilingual models like M2M100 or NLLB.

3. Personalized Emotional Intelligence:

By integrating machine learning models that learn from past conversations, the chatbot could develop user-specific emotional profiles, enabling personalized mental health support, adaptive responses, and more natural dialogue.

4. Integration with Health Devices:

Integration with wearable health devices like smartwatches and fitness bands can help track real-time biometrics (e.g., heart rate, sleep patterns, oxygen levels), allowing the chatbot to provide proactive emotional support based on physiological indicators.

5. Mental Health Support Features:

The chatbot can evolve into a mental health companion by incorporating CBT (Cognitive Behavioral Therapy) techniques, mood journaling, meditation guides, and crisis intervention resources, while ensuring ethical AI use and data privacy.

Packaging the chatbot into cross-platform applications (Android, iOS, Web) with a user-friendly interface and accessibility options can significantly broaden its reach and usability, especially in remote or underserved regions.

With user consent and anonymization, the conversation logs and emotional trends can be analyzed to generate population-level mental health insights. These findings could assist psychologists, researchers, and policymakers in understanding emotional behavior patterns across different demographics.

By incorporating lightweight NLP models and offline translation/recognition capabilities, the chatbot can support low-bandwidth or no-internet environments, increasing its utility in rural or disaster-struck areas.

6.Conclusion

To sum up, Moody is more than just a piece of technology. It is a thoughtful blend of artificial intelligence, IoT, and human-centred design aimed at supporting emotional well-being. By combining speech recognition, emotion detection through natural language processing, and real-time responses using biometric feedback, Moody offers a sensitive and accurate way to understand how a person is feeling.

What makes Moody stand out is its ability to not only react to emotions but to truly support users in moments of distress. With features like crisis detection, personalized voice feedback, and suggestions such as breathing exercises, it becomes a comforting presence, not just a device. Its ability to adapt to individual users and even respond to external factors like the weather makes the interaction feel more natural and empathetic.

As it continues to evolve, Moody has the potential to make mental health support more accessible and meaningful. With deeper emotional insights, richer input methods, and future clinical validation, Moody could become a trusted emotional companion for anyone navigating the ups and downs of daily life.

References

[1] A. B. M. Moniruzzaman and M. A. Rahman, "An emotion-aware chatbot using NLP and rule-based reasoning," in *2022 International Conference on Artificial Intelligence and Computer Science (ICAICS)*, Dhaka, Bangladesh, pp. 120–125, 2022.

[2] C. Wang and Y. Lin, "Affective-aware recommendation systems based on text sentiment analysis," *IEEE Transactions on Affective Computing*, vol. 12, no. 4, pp. 733–741, Oct.–Dec. 2021.

[3] D. Kumar and P. Mehta, "Emotion-based movie recommendation using CNN and RNN models," in *2021 IEEE Conference on Big Data and Smart Computing (BigComp)*, Jeju, Korea, pp. 345–350, 2021.

[4] M. K. Shih and T. Huang, "EMMA: An emotionally intelligent conversational agent for mental wellness," in *Proceedings of the 18th International Conference on Intelligent Virtual Agents (IVA)*, Sydney, Australia, pp. 103–110, 2022.

[5] R. Zhang, T. Liu, and S. Patel, "Music recommendation based on physiological signal monitoring using wearable devices," in *2023 IEEE International Conference on Healthcare Informatics (ICHI)*, Houston, TX, USA, pp. 221–226, 2023.

[6] J. Hu, K. Tang, and X. Zhao, "Speech emotion recognition in voice-based virtual assistants using MFCC and CNN-LSTM architecture," *ACM Transactions on Interactive Intelligent Systems*, vol. 13, no. 2, pp. 1–22, 2023.