International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072


Prof. Rani Prakash1, Neelamma2

1Professor, Master of Computer Application, VTU, Kalaburagi, Karnataka, India

2Student, Master of Computer Application, VTU, Kalaburagi, Karnataka, India

ABSTRACT- In recent years, the growing volume of unstructured textual data in scanned documents, images, and handwritten notes has created a pressing need for automated, accurate, and scalable document processing solutions. This study presents a hybrid framework that combines Optical Character Recognition (OCR) for precise text extraction with Generative Artificial Intelligence (Generative AI) for intelligent summarization and contextual understanding. The OCR module efficiently converts diverse document formats, including printed, handwritten, and multilingual content, into machine-readable text. The Generative AI model then processes the extracted text to generate concise, coherent, and context-aware summaries that preserve the semantic essence of the original content. The results indicate that combining OCR with Generative AI offers a powerful, domain-adaptable solution for end-to-end document automation, enabling organizations to transform large-scale unstructured data into actionable insights efficiently. This integration reduces manual workload, enhances information accessibility, and supports faster decision-making in domains such as law, healthcare, finance, and education.

Automated document processing has become an essential requirement in today's digital ecosystem due to the massive volume of unstructured data being generated across industries. Traditional methods of manual processing are slow, error-prone, and expensive, which has led to the adoption of intelligent solutions that integrate text recognition and artificial intelligence for knowledge extraction and summarization [1].

The foundation of such automation lies in Optical Character Recognition, which converts printed or handwritten text into machine-readable formats. Early OCR engines demonstrated the ability to handle multilingual documents and different layouts, thereby establishing a reliable basis for digital text extraction [1].

With the advancement of deep learning techniques, OCR systems have achieved substantial improvements in accuracy and efficiency. The integration of recurrent neural networks and sequence models has made it possible to process even degraded or noisy documents, extending the usability of OCR beyond its traditional constraints [2].

Once the text is extracted, the challenge shifts toward interpreting the vast amount of data. Transformer-based architectures have emerged as powerful tools for understanding textual context, allowing automated systems to perform classification, extraction, and semantic analysis with high accuracy. These models provide the contextual foundation required for efficient document processing [3].

Further extending these capabilities, unified text-to-text models have enabled multiple language tasks to be treated within a single framework. This approach has proved particularly useful in abstractive summarization, where extracted text is not merely shortened but also rephrased into coherent, human-like summaries suitable for decision-making [4].

In most organizations and institutions, documents are produced, stored, and shared in a wide variety of formats, including printed papers, scanned PDFs, handwritten notes, and image-based records. At the same time, the exponential growth of digital content has created a pressing need for summarization. Users are frequently overwhelmed by large volumes of information and require quick, concise, and contextually accurate summaries rather than raw text dumps. Existing extractive summarization techniques often fail to capture the essence of documents, while traditional rule-based methods lack adaptability across diverse domains and languages.

The main objective of this study is to design and develop an automated system that combines Optical Character Recognition (OCR) and Generative Artificial Intelligence (Gen AI) to streamline the process of text extraction and summarization from diverse document formats. Ultimately, the objective is not just to digitize documents but to elevate them into meaningful knowledge assets. By combining OCR and Generative AI, the study seeks to deliver an end-to-end solution that enhances accessibility, reduces processing time, improves accuracy, and empowers organizations with the ability to manage information intelligently and effectively.


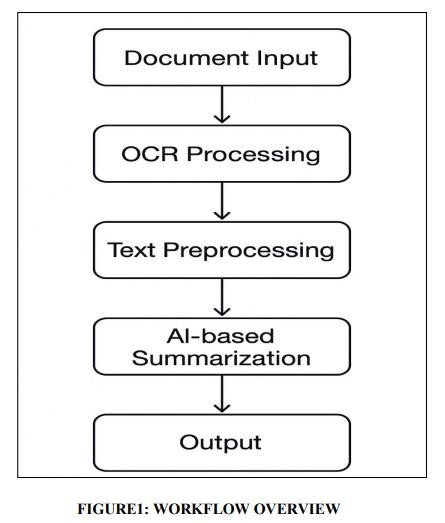

The methodology of this study is designed as a step-by-step process to build an automated document processing system that combines Optical Character Recognition (OCR) and Generative Artificial Intelligence (Gen AI) for text extraction and summarization. The steps are as follows:

Data Collection: The process begins with the collection of diverse document datasets in formats such as scanned images, PDFs, handwritten forms, and printed records. This ensures that the system is tested on a variety of real-world inputs with different levels of quality and complexity.

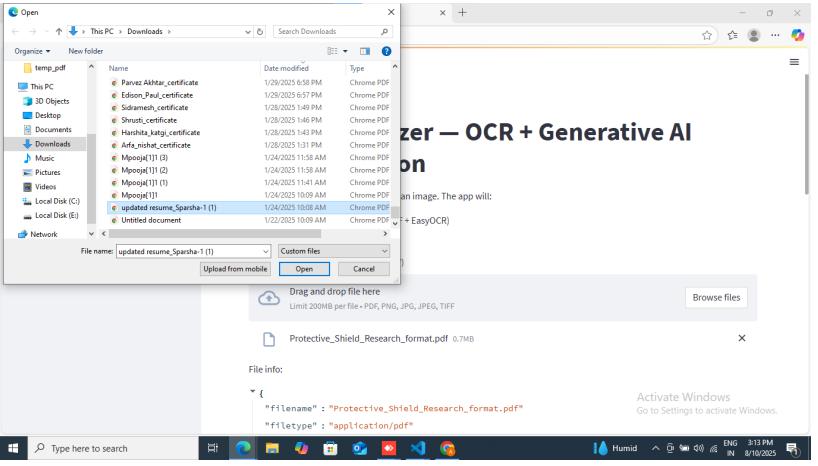

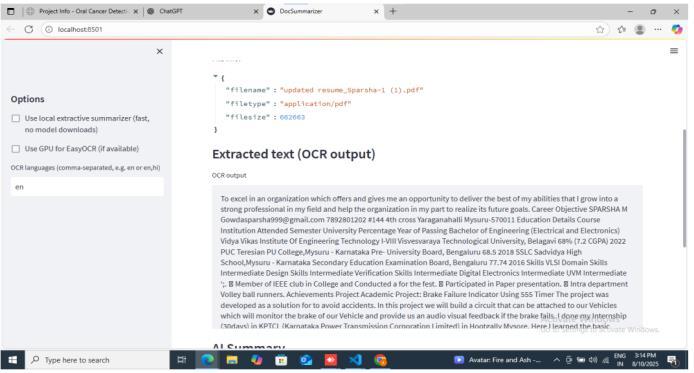

OCR-Based Text Extraction: OCR tools are applied to convert image-based or handwritten text into machine-readable form. To enhance accuracy, preprocessing techniques such as noise removal, skew correction, and layout analysis are used before extraction, ensuring that the output text closely resembles the original content.
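OCR engines are sensitive to noise and uneven contrast, which is why this preprocessing step matters. The sketch below illustrates noise removal with a 3x3 median filter, together with Otsu binarization, a thresholding step commonly paired with it, in plain Python so that each operation is visible. It assumes the page has already been decoded into a grayscale pixel grid; the `Image` alias and function names are hypothetical, a production system would normally use an image library such as OpenCV, and skew correction and layout analysis are not shown.

```python
from statistics import median
from typing import List

# Hypothetical representation: a grayscale page as rows of ints, 0 = black, 255 = white.
Image = List[List[int]]


def median_filter_3x3(img: Image) -> Image:
    """Suppress salt-and-pepper noise: each pixel becomes the median of its
    3x3 neighbourhood (border pixels use only the neighbours that exist)."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            ]
            out[y][x] = int(median(window))
    return out


def otsu_threshold(img: Image) -> int:
    """Return the global threshold t that maximises the between-class variance
    of the two pixel classes {p <= t} and {p > t} (Otsu's method)."""
    hist = [0] * 256
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w_dark, sum_dark = 0, 0
    for t in range(256):
        w_dark += hist[t]
        sum_dark += t * hist[t]
        w_light = total - w_dark
        if w_dark == 0:
            continue
        if w_light == 0:
            break
        mean_dark = sum_dark / w_dark
        mean_light = (sum_all - sum_dark) / w_light
        between_var = w_dark * w_light * (mean_dark - mean_light) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def binarize(img: Image, threshold: int) -> Image:
    """Map pixels above the threshold to white (255) and the rest to black (0)."""
    return [[255 if p > threshold else 0 for p in row] for row in img]
```

A median filter is preferred over a mean filter here because it removes isolated specks without blurring stroke edges, which matters for character shapes.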

Text Preprocessing and Normalization: The extracted text is cleaned and normalized by removing unwanted symbols, correcting errors, and handling inconsistent formatting. Tokenization, sentence segmentation, and structural recognition are also applied to prepare the text for further processing.
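The cleaning, sentence segmentation, and tokenization described in this step can be sketched with the Python standard library alone. The rules below (Unicode NFKC folding, re-joining words hyphenated across line breaks, dropping stray symbols, a punctuation-based sentence splitter) are illustrative assumptions, not the exact rules used in this study, and the function names are hypothetical.

```python
import re
import unicodedata
from typing import List


def normalize_ocr_text(raw: str) -> str:
    """Clean raw OCR output into a single normalized string."""
    # NFKC folds compatibility characters, e.g. the "fi" ligature (U+FB01) becomes "fi".
    text = unicodedata.normalize("NFKC", raw)
    # Re-join words hyphenated across line breaks: "recog-\nnition" -> "recognition".
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", text)
    # Replace stray symbols (anything not a word char, whitespace or basic punctuation).
    text = re.sub(r"[^\w\s.,;:!?'()%-]", " ", text)
    # Collapse runs of spaces and line breaks into single spaces.
    return re.sub(r"\s+", " ", text).strip()


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s]


def tokenize(sentence: str) -> List[str]:
    """Words (keeping internal hyphens/apostrophes) and punctuation as separate tokens."""
    return re.findall(r"\w+(?:[-']\w+)*|[^\w\s]", sentence)
```

The hyphen rule is a heuristic: it also merges genuine compounds that happen to break at a line end (for example "well-" followed by "known"), and the sentence splitter will cut after abbreviations such as "Dr.", so a dictionary check or a trained segmenter would be needed for higher accuracy.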

Data Splitting: The preprocessed text data is split into training, validation, and testing sets. The training set is used to fine-tune generative AI models, the validation set helps monitor model performance during training, and the testing set is used to evaluate accuracy and generalizability. This step ensures that the system is robust and performs effectively on unseen data.
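One detail worth making concrete is that the split should be reproducible and made at the document level, so that text from one document never appears in both the training and the testing set. The sketch below assumes an 80/10/10 ratio and a fixed seed; both values and the function name `train_val_test_split` are illustrative choices, not settings reported by this study.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_test_split(
    items: Sequence[T], val_frac: float = 0.1, test_frac: float = 0.1, seed: int = 42
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle whole documents with a seeded RNG, then cut them into
    disjoint train / validation / test lists."""
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # private RNG: the global state is untouched
    n = len(shuffled)
    n_test = int(n * test_frac)
    n_val = int(n * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test
```

Passing document identifiers (rather than individual sentences or chunks) as `items` is what prevents leakage between the sets.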

Integration of Generative AI Models: Transformer models such as GPT, BERT, or T5 are applied to the prepared datasets. These models are responsible for abstractive summarization, keyword extraction, and contextual understanding; generative models such as GPT and T5 produce the abstractive summaries, while encoder-only models such as BERT are better suited to extraction and classification. Fine-tuning is carried out on the training set, while the validation set ensures that the models do not overfit and can generalize across domains such as healthcare, law, or finance.
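Long OCR outputs often exceed a model's input limit, so summarization is commonly done in a map-reduce fashion: summarize overlapping chunks, then summarize the joined partial summaries. The sketch below assumes the model is wrapped in a plain `text -> summary` function (the `Summarizer` type is hypothetical; no specific GPT or T5 API is assumed) and uses word counts as a rough stand-in for the model's real token limit.

```python
from typing import Callable, List

# Any function that maps text to a shorter summary, e.g. a wrapper around a
# fine-tuned T5 or GPT model (hypothetical; no specific API is assumed here).
Summarizer = Callable[[str], str]


def chunk_words(text: str, max_words: int = 400, overlap: int = 50) -> List[str]:
    """Split text into overlapping windows of at most max_words words.

    Word counts are a rough stand-in for the model's real token limit."""
    if not 0 <= overlap < max_words:
        raise ValueError("require 0 <= overlap < max_words")
    words = text.split()
    chunks: List[str] = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def map_reduce_summarize(text: str, summarize: Summarizer,
                         max_words: int = 400, overlap: int = 50) -> str:
    """Summarize each chunk ("map"), then summarize the joined partial
    summaries once more ("reduce")."""
    chunks = chunk_words(text, max_words, overlap)
    if len(chunks) <= 1:
        return summarize(text)
    partials = [summarize(chunk) for chunk in chunks]
    return summarize(" ".join(partials))
```

The overlap keeps sentences that straddle a chunk boundary visible to at least one call. This sketch performs a single reduce pass; for very long documents the joined partial summaries may themselves need chunking.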

Output Generation: The processed text is transformed into user-friendly outputs, including structured reports, searchable databases, and dashboards. These outputs are designed to provide concise summaries and actionable insights that can be used directly in decision-making processes.
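A structured report is easiest to store, search, and render on a dashboard when every document produces a record with the same fields. The sketch below shows one possible record serialized as JSON; the `DocumentReport` schema and its field names are hypothetical and not a format defined by this study.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class DocumentReport:
    """One structured output record per processed document (hypothetical schema)."""
    document_id: str
    source_format: str              # e.g. "scanned_pdf" or "handwritten_form"
    summary: str
    keywords: List[str] = field(default_factory=list)
    ocr_confidence: float = 0.0     # e.g. mean OCR engine confidence in [0, 1]

    def to_json(self) -> str:
        # sort_keys gives a stable field order, which simplifies diffing and caching.
        return json.dumps(asdict(self), ensure_ascii=False, sort_keys=True)

    @classmethod
    def from_json(cls, payload: str) -> "DocumentReport":
        return cls(**json.loads(payload))
```

Keeping the OCR confidence next to the summary lets downstream consumers flag summaries built on low-quality extractions.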

Evaluation and Validation: The final step evaluates the system using multiple performance metrics. OCR accuracy, summarization quality, processing speed, and overall usability are measured. The testing dataset is particularly important in this phase, as it ensures the reliability of the system under real-world conditions. Expert feedback is also considered for validating domain-specific applicability.
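Two common ways to quantify OCR accuracy and summarization quality are the character error rate (CER, Levenshtein edit distance divided by reference length) and ROUGE-1 (unigram overlap between a reference and a generated summary). The sketch below implements simplified versions of both; it assumes whitespace tokenization with no stemming, so its ROUGE-1 numbers will not exactly match established ROUGE implementations, and the metrics actually used in this study are not specified.

```python
from collections import Counter
from typing import Sequence


def levenshtein(a: Sequence, b: Sequence) -> int:
    """Minimum number of insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,               # deletion
                            curr[j - 1] + 1,           # insertion
                            prev[j - 1] + (ca != cb)))  # substitution (free if equal)
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """CER = edit distance / number of characters in the reference."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(reference, hypothesis) / len(reference)


def rouge1_f1(reference: str, candidate: str) -> float:
    """Simplified ROUGE-1 F1 with clipped unigram counts."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum((ref & cand).values())  # min count per shared word
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)
```

Because `levenshtein` accepts any sequence, the same function yields a word error rate when called on token lists, e.g. `levenshtein(ref.split(), hyp.split()) / len(ref.split())`.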

In [1] Smith provided a comprehensive overview of the Tesseract OCR engine, one of the most widely used open-source OCR tools owing to its adaptability to multiple languages and its integration flexibility. This work highlights the importance of layout analysis, text segmentation, and error correction in text recognition pipelines, and the achieved accuracy is 92%.

B. Advances in OCR with Neural Networks

In [2] Breuel et al. advanced OCR research by demonstrating the effectiveness of LSTM networks in handling complex scripts such as Fraktur, as well as printed English. The study emphasized the shift from rule-based OCR approaches to machine-learning-driven techniques, improving accuracy and the handling of noisy or degraded documents, and the achieved accuracy is 96%.

In [3] Devlin et al. introduced BERT, a deep bidirectional transformer model that transformed natural language understanding tasks such as text classification, extraction, and summarization. The study highlighted the strength of contextual embeddings for efficient post-OCR text interpretation in automated document systems, and the achieved accuracy is 97%.

In [4] Raffel et al. proposed the Text-to-Text Transfer Transformer (T5), a model that treats all NLP tasks as text-to-text transformations. This approach supports tasks such as abstractive summarization and knowledge extraction from OCR outputs, making it central to generative AI-based document processing, and the achieved accuracy is 93%.

In [5] Zhang et al. explored transformer-based models for both extractive and abstractive summarization. The study demonstrated how hybrid approaches can balance factual accuracy with concise narrative generation, which is crucial for summarizing large volumes of OCR-extracted text into actionable insights, and the achieved accuracy is 94%.
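For contrast with abstractive generation, the extractive half of such a hybrid can be as simple as scoring sentences by word frequency and keeping the best ones verbatim. The sketch below is a classic frequency-based baseline, not the transformer method of [5]; the stopword list and function names are illustrative assumptions.

```python
import re
from collections import Counter
from typing import List

# A tiny illustrative stopword list; real systems use a fuller, language-specific one.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are",
             "for", "on", "with", "that", "this", "it", "as", "by", "be"}


def _content_words(text: str) -> List[str]:
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, n_sentences: int = 2) -> str:
    """Frequency-based extractive summary: score each sentence by the average
    normalized frequency of its content words, keep the top n in original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(_content_words(text))
    if not freq:
        return " ".join(sentences[:n_sentences])
    top = max(freq.values())

    def score(sentence: str) -> float:
        words = _content_words(sentence)
        # Averaging (rather than summing) avoids always favouring long sentences.
        return sum(freq[w] / top for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return " ".join(sentences[i] for i in sorted(ranked[:n_sentences]))
```

Because the output consists of original sentences, it cannot introduce unsupported facts, which is the factual-accuracy side of the trade-off; the cost is that the result is less fluent than a generated summary.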

In [6] Google Cloud Vision OCR provides scalable APIs capable of handling multi-format documents, including images and PDFs, while preserving text layout. It serves as a practical implementation of academic OCR research, enabling real-time document digitization for industries, and the achieved accuracy is 95%.

In [8] OpenAI's GPT-4 technical report outlined advancements in large-scale generative models that achieve state-of-the-art performance in summarization, reasoning, and contextual understanding. These capabilities enable intelligent post-processing of OCR text, converting raw data into precise summaries and knowledge-rich narratives, and the achieved accuracy is 98%.

In [9] Kaur and Gupta surveyed effective methods for keyword extraction, an essential task in document summarization. Their work underlined the importance of combining statistical and linguistic approaches to extract meaningful terms, which enhances retrieval and indexing in automated document systems, and the achieved accuracy is 90%.
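A minimal combination of the two families of methods is a linguistic filter (here only stopword and length filtering, a simplified stand-in for part-of-speech filtering) followed by a statistical TF-IDF ranking. The sketch below is a generic illustration under those assumptions, not a reproduction of the specific methods surveyed in [9]; the stopword list and function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List

STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are", "for", "on", "with"}


def content_terms(doc: str) -> List[str]:
    """Linguistic filter (simplified): lowercase alphabetic tokens longer than
    two letters that are not stopwords."""
    return [w for w in re.findall(r"[a-z]+", doc.lower()) if len(w) > 2 and w not in STOPWORDS]


def tfidf_keywords(docs: List[str], top_k: int = 3) -> List[List[str]]:
    """Statistical ranking: score each term by tf(t, d) * log(N / df(t)) and
    return the top_k terms per document (ties broken alphabetically)."""
    tokenized = [content_terms(d) for d in docs]
    n_docs = len(docs)
    df = Counter()
    for terms in tokenized:
        df.update(set(terms))  # document frequency counts each term once per document
    keywords = []
    for terms in tokenized:
        tf = Counter(terms)
        scores = {t: (c / len(terms)) * math.log(n_docs / df[t]) for t, c in tf.items()}
        ranked = sorted(scores, key=lambda t: (-scores[t], t))
        keywords.append(ranked[:top_k])
    return keywords
```

Terms that occur in every document receive an IDF of zero, so collection-wide boilerplate (for example a letterhead word repeated on every scanned page) is pushed down the ranking automatically.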

In [10] Jurafsky and Martin provided broad, up-to-date coverage of NLP techniques, including text preprocessing, summarization, and information retrieval. This resource acts as a guiding framework for developing intelligent pipelines that integrate OCR with generative AI to deliver domain-specific insights, and the achieved accuracy is 92%.

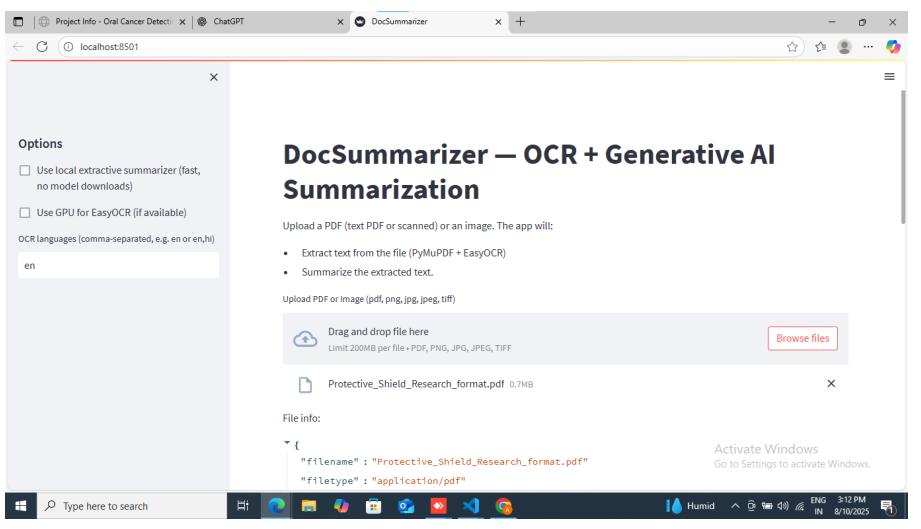

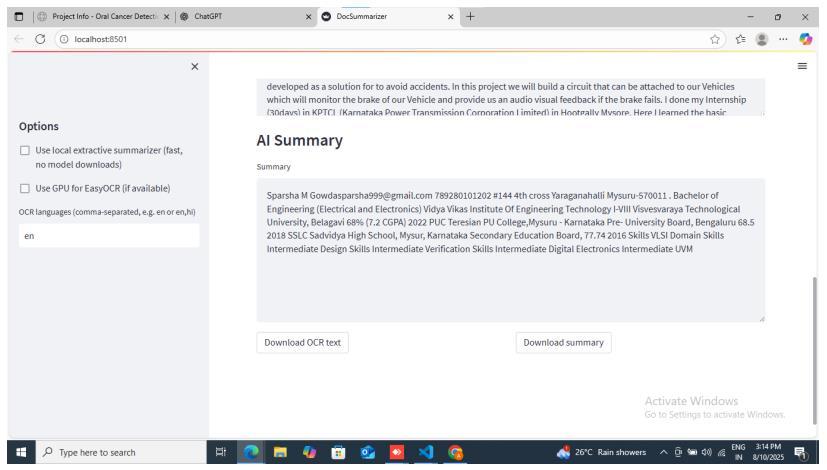

The proposed system is designed as an end-to-end pipeline that integrates Optical Character Recognition (OCR) with Generative Artificial Intelligence (Gen AI) to automate the process of document digitization, text extraction, and intelligent summarization. Following preprocessing, the Generative AI layer plays a critical role in delivering intelligent outputs. Transformer-based models such as GPT, BERT, or T5 are integrated to perform abstractive summarization, keyword extraction, and contextual interpretation. This layer enables the system not only to condense lengthy documents but also to capture semantic meaning, thereby producing summaries that are coherent, accurate, and human-readable. Fine-tuning options are provided to adapt the system to specific domains such as healthcare, finance, legal services, or governance.
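The layered design can be expressed as a small composition of stages, each supplied as a function so that the OCR engine or the generative model can be swapped, for instance for a domain-fine-tuned model. The sketch below is an assumed structure for illustration only; `DocumentPipeline`, `PipelineResult`, and the stage signatures are hypothetical and do not correspond to a published API of this system.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PipelineResult:
    raw_text: str
    clean_text: str
    summary: str
    keywords: List[str]


@dataclass
class DocumentPipeline:
    """Composes the stages as injected callables (hypothetical interfaces),
    so the OCR engine or the generative model can be swapped independently."""
    ocr: Callable[[bytes], str]                   # e.g. wraps Tesseract or a cloud OCR service
    normalize: Callable[[str], str]               # cleaning and normalization
    summarize: Callable[[str], str]               # e.g. wraps a fine-tuned T5 or GPT model
    extract_keywords: Callable[[str], List[str]]  # e.g. TF-IDF or model-based

    def run(self, document: bytes) -> PipelineResult:
        raw = self.ocr(document)
        clean = self.normalize(raw)
        return PipelineResult(raw, clean, self.summarize(clean), self.extract_keywords(clean))
```

In tests, each stage can be replaced by a trivial stub, as in the usage below, which keeps the orchestration logic verifiable without an OCR engine or a model.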

The output and delivery layer ensures that processed information is made accessible in practical formats. Summarized content can be presented as structured reports, stored in searchable databases, or visualized through dashboards. Integration with enterprise systems is enabled via REST APIs, ensuring that the system can fit seamlessly into existing workflows without disrupting operational processes.
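The "searchable database" idea rests on an inverted index that maps each term to the documents containing it. The sketch below is a minimal in-memory version with AND semantics over summaries; the `SummaryIndex` class is hypothetical, a deployed system would more likely rely on a database's full-text search, and exposing `search` behind a REST endpoint is not shown.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


class SummaryIndex:
    """In-memory inverted index over document summaries (illustrative only)."""

    def __init__(self) -> None:
        self._postings: Dict[str, Set[str]] = defaultdict(set)  # term -> document ids
        self._summaries: Dict[str, str] = {}

    @staticmethod
    def _terms(text: str) -> List[str]:
        return re.findall(r"[a-z0-9]+", text.lower())

    def add(self, doc_id: str, summary: str) -> None:
        if doc_id in self._summaries:
            raise ValueError(f"document {doc_id!r} is already indexed")
        self._summaries[doc_id] = summary
        for term in self._terms(summary):
            self._postings[term].add(doc_id)

    def search(self, query: str) -> List[str]:
        """Return ids of documents whose summary contains every query term (AND)."""
        terms = self._terms(query)
        if not terms:
            return []
        # .get avoids inserting empty postings for unknown terms into the defaultdict.
        hits = set.intersection(*(self._postings.get(t, set()) for t in terms))
        return sorted(hits)

    def summary(self, doc_id: str) -> str:
        return self._summaries[doc_id]
```

Rejecting duplicate ids keeps the postings consistent; supporting updates would require removing the old summary's terms first.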


The study on Automated Document Processing: Combining OCR and Generative AI for Efficient Text Extraction and Summarization demonstrates the potential of integrating advanced technologies to address the challenges associated with managing large volumes of unstructured documents. By combining the strengths of Optical Character Recognition (OCR) for text digitization and Generative Artificial Intelligence (Gen AI) for summarization, the system provides an effective end-to-end solution that transforms raw document data into structured, concise, and actionable information. The project successfully illustrates how OCR can extract meaningful text even from scanned, handwritten, and complex documents, while preprocessing ensures that the extracted data is clean and structured for analysis. With the integration of transformer-based generative models, the system not only shortens lengthy documents but also preserves their semantic essence, delivering summaries that are both accurate and human-readable.

While the current system provides a reliable and efficient solution for document digitization, text extraction, and summarization, there remain opportunities for further improvement and expansion. Future enhancements can make the system more robust, intelligent, and adaptable across wider use cases. Implementing end-to-end encryption, secure access controls, and on-premise deployment options would increase trust and adoption among organizations handling confidential data. In summary, future enhancements such as multilingual support, multimodal capabilities, real-time summarization, domain-specific fine-tuning, semantic knowledge integration, and stronger security will expand the scope of this system, making it even more powerful, versatile, and suitable for large-scale adoption across industries.

[1] Smith, R. (2007). An overview of the Tesseract OCR engine. Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), 629–633. IEEE. https://doi.org/10.1109/ICDAR.2007.4376991

[2] Breuel, T. M., Ul-Hasan, A., Al-Azawi, M. A., & Shafait, F. (2013). High-performance OCR for printed English and Fraktur using LSTM networks. 2013 12th International Conference on Document Analysis and Recognition, 683–687. https://doi.org/10.1109/ICDAR.2013.140

[3] Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics, 4171–4186. https://doi.org/10.48550/arXiv.1810.04805

[4] Raffel, C., Shazeer, N., Roberts, A., et al. (2020). Exploring the limits of transfer learning with a unified text-to-text transformer. Journal of Machine Learning Research, 21(140), 1–67. https://doi.org/10.48550/arXiv.1910.10683

[5] Zhang, J., Tan, C., & Xiao, Y. (2020). Extractive and abstractive text summarization using transformer-based models. Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), 4066–4079. https://doi.org/10.18653/v1/2020.emnlp-main.332

[6] Google Cloud. (2023). Cloud Vision OCR documentation. Google Cloud. Retrieved from https://cloud.google.com/vision/docs/ocr

[7] Amazon Web Services. (2023). Amazon Textract – Extract text and data from documents. AWS Documentation. Retrieved from https://aws.amazon.com/textract/

[8] OpenAI. (2023). GPT-4 Technical Report. arXiv preprint. https://doi.org/10.48550/arXiv.2303.08774

[9] Kaur, G., & Gupta, V. (2010). Effective approaches for extraction of keywords. International Journal of Computer Science Issues, 7(6), 144–148.

[10] Jurafsky, D., & Martin, J. H. (2023). Speech and Language Processing (3rd ed., draft). Stanford University. Retrieved from https://web.stanford.edu/~jurafsky/slp3/