International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

Kamil Ansari1 , Dr. Peeyush Kumar Pathak2

1Master of Technology, Computer Science and Engineering, Goel Institute of Technology And Management, Lucknow, India

2Assistant Professor, Department of Computer Science and Engineering, Goel Institute of Technology And Management, Lucknow, India ***

Abstract - Now it is turning the writing of programming into a revolution and also transforming how languages are written, how code is generated and how developers communicate with computational systems. As a review paper, this paper discusses the use of AI for transforming programming language structures: syntax simplification andsemantic enrichment, andadding developer capabilities with automated code generation (GitHub Copilot, AlphaCode) and AI based debugging. Because of this, AI facilitates natural language intent and machine execution, and facilitates intuitive programming paradigms across all of software development, bringing up very important questions: What biases exist in AI trained data, of which AI is so dependent on; as well as security vulnerabilities in AI using legal bind in terms of its dependency on code. Finally, low code cases and AI in competitive programming are presented as a trap and a promise of human AI collaboration. The paper claims that while proper and advanced AI is not an addition to traditional programmers, but instead it constructs the framework for programming languages by constructing adaptive systems that fulfill to maximality of scalability and performance. This however has to guarantee fair frameworks to address fairness, security, intellectual property problem and pedagogical reform in an agreement with automation and the growth of basic skill development. As AI evolves, their symbiosis in promoting humans’ creativity can yield an unprecedented innovation if some foresight is possible on the technical, ethical,andpedagogicalside.

Key Words: Artificial Intelligence in programming, automated code generation, AI-driven language design, natural language processing (NLP), AI ethics, programming education, low-code platforms, AI-human collaboration.

1.1Background

ThisillustratesthatwiththeswiftriseofAI,ithasandwill continue to have a significant role in altering the premise behind which software is being developed, how some of the traditional ways of solving impossible computational problems are rewritten. But it is near enough our work

with code and semantic analysis as to how artificial intelligenceswilldistinguishtheirtasksthatthiswillpose sucharadicalshiftforthefutureprogrammingworld,and sowehave to reconsiderhowlanguagesandtoolswill be designed,deployedandinteractedwith.Forthefirsttime, programminglanguageshavebecomemainstreamenough tocoverhumanlogicandto offerthewayforthislogic to be executed in machines; AI introduces the first recognizable needs and opportunities to match language structures with machine learning powered automation, abstractionandreasoning.

In this review we study how AI alters programming languages and developer workflow, specifically how AI augments the syntax and semantics of language, and allows programming beyond its syntax automation, and at a higher level. To that end, it discusses AI innovations such as natural language to code translation, adaptive language semantics, and intelligent debugging systems and their possible effect on low and high level programming tasks and design philosophies. This paper investigateshowAIredefinestheproductivitytoolrole of AIitselfandpropelsitintoanarchitecturalforceinwhich theevolutionofwhatprogramminglanguagescanexpress and what it takes to build those languages are reconfigured.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

1.3 Review Statement

In this paper I argue that AI is not an auxiliary tool for programmersasitsarchitectureandexpressivepowerare fundamentally changing the programming languages. Syntactic rigor is being automated, semantic context is being enriched, and human AI collaboration is being enabled in solving problems. Evolution will converge to some kind of dynamically evolvinghh adaptive language, codesignedwithAI,forcreativity,efficiency,scalabilityin expressingsoftware.

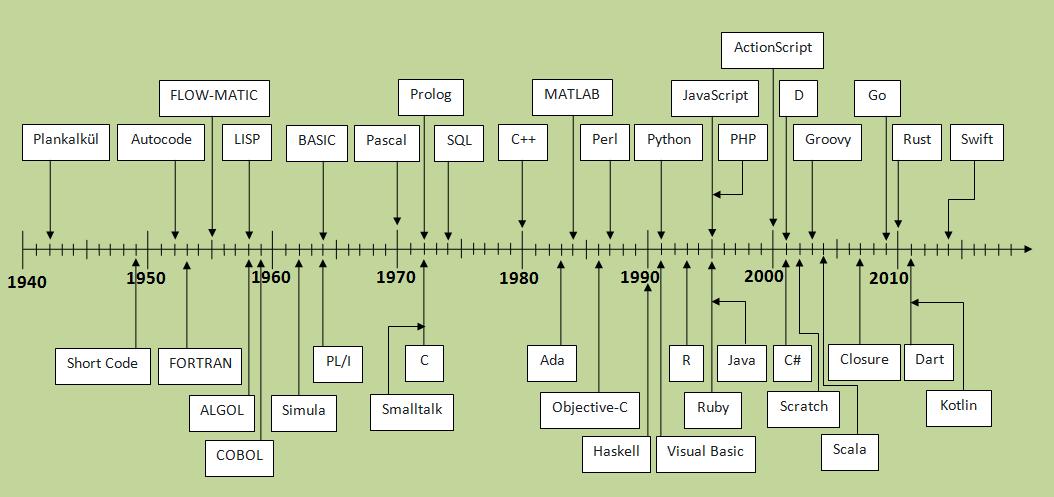

2.1 Brief History of Programming Languages

In the mission of simplifying and supplementing the computational expression, the development of the programming language manifests human's hunt for the solutionof thehardwarespecific rigidAssemblylanguage in the middle of the 20th century. However as the age of earlycomputerscreepsinliketheFortranandtheCOBOL it introduced higher levels of abstraction, so thus developers could focus on the algorithmic logic rather than the machine details. Later, the structured programming (e.g., C) and object oriented programming (e.g., Java) were introduced which put more emphasis on the readability, modularity and scalability and, finally, modernhighlevel languageslikePythonaremorehuman friendly syntax and really fast prototyping. The trajectory from a low level machine code to an intuitive, domain specific language shares this wider narrative, a narrative of a democratization of programming laying down a requirement of accessibility, expressiveness and computationalperformance.

2.2

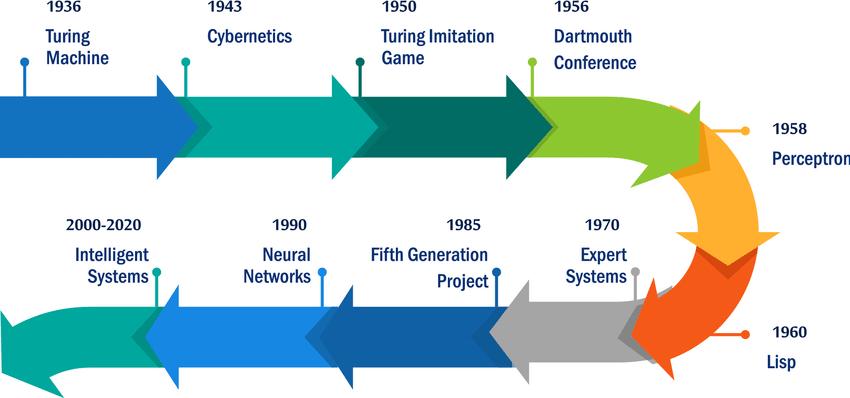

Since the introduction of AI to programming, there have been two waves of integrating AI into programming. The first drew its roots in symbolic AI trying to do as much logic based reasoning, pattern based or rule based automation, again the staple of early expert systems and algorithm based problem solving, and as such favored languages such as Lisp in 1958 and Prolog in 1972.

However,these systemsdid not work withambiguity and scalability. The second wave during modern machine learning was the neural networks, natural language processing, etc in code synthesizing. For example in Transformerarchitecture(2017)OpenAI’sCodexwasable to interpret natural language prompts and generate its associated code, while reinforcement learning brought DeepMind’sAlphaCodetodemonstratethatreinforcement learningcould alsocompete in human level programming challenges. But with all the strides this increment delivered,AIstartedtomovefromameretoolboxtoafar more responsive collaborator: able to infer intent, prognosticatewhenthingswillgoawry,andloopthrough their possible remedies, since, as these pushes to the role ofprogramminglanguageandAIoccurredincohesion,we have vast stores of open source code to train them and these languages and AI instruments cocreate new solutionstomorechallengingcomputationalproblems.

3.1. Introduction to the Scope of the Literature Review

Integrating artificial intelligence (AI) into the domain of programming has become a revolutionizing power in the lasttwodecades(2005to2024)influencingthedomainof languagedesign,developer’stoolsandsoftwareworkflow. This literature review looks at what AI has done to help advance programming paradigms with emphasis on four interrelated themes. automation, abstraction, human-AI collaboration and ethica... Examples of the automation in the field of AI are AI driven tools such as GitHub Copilot (Ziegler et al. 2023) and AlphaCode (Bavishi et al. 2021), whichhelpreducemanuallabouringenerationofcodeby convertingnaturallanguagepromptsintofunctionalcode. Abstractionisdiscussedthroughthelensesofframeworks like TensorFlow (Abadi et al., 2016) and PyTorch, which employ use case specific languages (DSLs) to make complicated machine learning problems easy. Human-AI collaboration, a new field of study, focuses on how tools suchasCodex(Chen etal.,2021),contributetodeveloper creativity while asking questions about skill dependency and originality. Ethical challenges are: biases in training

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

data (Buolamwini et al.,2018) and vulnerabilities in AI writtencode(Pearceetal.,2022),furtherexemplifyingthe needforgovernanceframeworks.

Methodologically, this review summarizes seminal works (such as the work of the Transformer architecture by Vaswani et al. 2017), peer-reviewed papers from venues such as IEEE Transactions on Software Engineering and ACMSIGPLAN,andcasesofin-practiceindustrytools(e.g., DeepMind’sAlphaCode).TrackingtheevolutionoftheAI’s effect on programming based on analyzing advancements from systems of prefixical logic (pre-2010) to contemporary neural networkalistic ways (post-2015), this review points out gaps within fairness, security as well as on the adjusted state of education, respectively. Thescopeisconsciouslylimitedtoinnovationsafter2005 to indicate that we are indeed seeing an exponential growth of machine learning in software engineering with earlyrulebasedsystemsomittedtoberelevantincurrent practices. By looking at it in such a manner the review seeks to contextualize AI’s radically transformative potential and critically analyzing its technical and social implications.

The incorporation of AI into programming languages startedfromearlysymboliclogicsystemslikeLisp(1958) and Prolog (1972), with rule based reasoning and logic programming being a paramount feature. Nonetheless, these systems had certain limitations with respect to scalabilityandambiguityifappliedtorealworldsoftware engineeringexercisesashasbeennotedfromfoundational studies such as that of Koza’s work on genetic programming(1992). By the middle of the 2000s, statistical developments and access to computing resources encouraged transition toward data-led strategies. The notion of ‘naturalness’ in code, introduced by Hindle et al. (2012), signalled a watershed, and that software has properties similar to natural language, thus allowing for machine learning (ML) approaches to model code structure and semantics. This finding formed the foundation for neural network based models, including, for example, the Transformer architectures (Vaswani et al., 2017), which has upturned AI’s power to deal with sequential data. Other recent innovations, such as CodeBERT (Li et al., 2020), revealed methods through which pre-trained language models could help to harmonizeprogramandnaturallanguages,facilitatingthe likes of search for code and documentation creation. Dominanceoversymboliclogicbyneural approaches was clearin 2020 becausetheML models;outperformed rulebasedsystemsinscalability,adaptability,andambiguity.

The evolution of code synthesis driven by AI has been based upon moving away from rule-based systems to the emergence of large language models (LLMs). Early program synthesis tools including those pursued by Gulwani et al. (2017), drew on predefined templates combined with constraint-solving algorithms to produce code snippets. These systems was effective for narrow domains but the open ended problems was an area they failed. The emergence of LLM was revolutionizing landscape with training on extensive stores of opensource code. Transformer architectures allowed models such as Codex (Chen et al., 2021) and AlphaCode (Bavishi et al., 2021) to obtain contextually interesting code from natural language prompts and compete with humans in tasks such as competitive programming. This progress was greatly accelerated through the use of datasets, e.g., Defects4J (Just et al., 2014), which presented labelled examples of bugs in real-world code, allowing AI systems togaininsightintorobustcodepatternsandstrategiesfor error-correction. These datasets, coupled with the progress in reinforcement learning (e.g. Sutton et al., 2020), enabled the models to iteratively improve code quality from runtime feedback. In 2023, earners of development became evidently common with the emergence of such instruments as GitHub Copilot while the issue of security programming (Pearce et al., 2022) andbias(Buolamwinietal.,2018)pointedtothenecessity to further develop. Combining a number of these milestones shows us how AI has progressed from being a sideline area to being a required component within contemporary programming practice that has altered the designoflanguageaswellasdeveloperworkflows.

3.3.1

The introduction of AI-driven automated code generation has transformed developer productivity and such tools as GitHub Copilot (Ziegler et al., 2023) and AlphaCode (Bavishi et al., 2021) attest to the transition from hand coding-code generation to AI- assisted workflows. Such systems which run on large language models (LLMs) take advantage of huge repositories of open-source code to produce contextually meaningful snippets, functions, or competitive programming Outputs. Empirical studies like that of Xu et al. (2023), shed light on the significant gains in productivity, developers report decreasing a lot of wasted time on repetitive tasks such as API integration and boilerplate coding, respectively. Nonetheless, these tools also present risks of dependency, allowing the programmers to favor speed over reasoned assessment, leading to the promulgation of error or insecure behavior encodedintotheirtrainingsets.Forexample,thefactthat GitHub Copilot sometimes proposes deprecated libraries or problematic code patterns clearly evidences the

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

necessity to have a person in the loop. Despite these challenges, the process of automated code generation is a paradigmshiftwheredeveloper’sworkistofocusonhigh designwhileleavingsyntacticrigortotheAI.

Natural Language Processing (NLP) has become a link between human intent and machine action where such models as CodeBERT (Li et al., 2020) or pseudocode-tocode converters (Fried et al., 2022) allow developers to express logic in conversational language. A pre-trained transformer model named CodeBERT is superior to other models when it comes to code search, documentation generation,becauseitjointlylearnstheprogrammingand natural language semantics. Mean while, pseudocode-tocode systems make computer programming available to people who are not experts in the area because they are able to describe algorithms in plain language that AI will translate to executable code. But, issues remain in semantic correctness and awareness of context. Wang et al. (2021) report a lack of inference from NLP models of implicit requirements and domain-specific terminology which causes syntactically justifiable yet logically incorrect outputs. These gaps reflect the need for softwired hybrids of neural networks and symbolic reasoningforincreasingrobustness.

AI powered compilers and runtime systems are trail blazingadaptivelanguageswhichoptimizecodeonthefly by efficiently making necessary trade offs between performanceandresourcetradeoffs.Frameworkssuchas TensorFlow(Abadietal.,2016)usecomputationalgraphs todefinemachinelearningworkflows((extensive)tree-or graph-structured lists of connected operation nodes) which provide the ability for AI-driven optimizations (automatic differentiation and hardware-specific acceleration). Likewise, runtime optimization techniques (Dean et al., 2020) apply reinforcement learning to iterativelyoptimizecodeexecution,resultingindecreased latency and memory usage in real time applications. Examining real-world cases of TensorFlow’s computationalgraphsillustrateshowAIcanabstractaway hardware complexities, giving the developers freedom to write platform-agnostic code and still attain near-peakperforming code. These are departures from static language designs, leading to ecosystems for languages to grow side by side with AI to cater for new computational needs.

3.4.1

AI incorporation in programming has resulted in a paradigmshifttowardsstreamliningsyntaxandeasingthe pathsofentryclearancefordevelopersthroughreduction

is boilerplate. New AI libraries like Fast.ai (Howard et al., 2022), or Bayesian network-based code completion systems(Suttonetal.,2018),simplifycomplexprocedural operations into an intuitive, but high-level language. For example, frameworks such as Keras and Hugging Face Transformers allow developers to build neural networks or NLP pipelines requiring no code or little code to take careofthingssuchasmemorymanagementandexecution inparallel.Theseimprovementsindicatealargertendency in language design, in which readability and brevity are favored byAItools;itisno coincidencethatPythonisthe dominant language in AI study. By providing syntactic rigor automating, AI can help the programmers in concentrating on logic and creativity making prototyping fastwhileretainingpowerofexpression.

AI has inserted intelligence semantically in programming languagesdirectlyindevelopmentenvironment.Real-time error predictionsystems, whichare the focusof the work of Tufano et al. (2018) employ deep learning to identify problems during the coding sessions, marking both null pointer exceptions and race conditions. In a like manner, AI-driven type inference models (Raychev et al., 2015) assess code context to infer variable types and recognize mismatches thus minimizing runtime errors. Popular integrated development environments (IDEs) such as PyCharm and Visual Studio Code now support contextaware code completion where tools at your disposal include GitHub Copilot that makes suggestions according to project-wide patterns and documentation. In doing so, this semantic layer converts static code into dynamic and adaptive artifacts, such that the languages can “understand” developer intent and the ability to proactivelyhelpachievecorrectnessandefficiency.

The developement of AI has prompted the growth of domainspecific languages (DSLs) for both machine learning, data science and automations. Halide (in case of image processing) and SQLFlow (for db analytics) are good examples of DSLs abstracting away the complex domain-specifics, providing developers with the opportunity to write high level logic without manual optimization. As Allamanis et al. (2018) point out, such languages are often intertwined with AI systems, that optimize DSL code for specific hardware execution. For instanceTensorFlow’scomputationalgraphs(G.S.A.etal., 2016) serve as a DSL for machine learning that lets developers declare their neural networks in a declarative way and development of which is delegated to AI compilers which handle the back end optimizations such as GPU acceleration, like one can describe using TensorFlow exactly how to implement a neural network. Such synergy between DSLs and AI not only ameliorates performance level but also democratizes access of such

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

domains since the non-experts can take advantages of advancedcomputationalskills.

With the help of AI, these operations of debugging and code optimization have expanded greatly allowing such activities to move from manual – time and effort consuming procedures to automated, intelligent operations. The current means for addressing of vulnerabilities, such as those detected by DeepCode and Facebook’s Infer as discussed by Pearce et al. (2022), include the use of machine learning models trained on massivecodebases todetect vulnerabilitiessuchasbuffer overflows or race conditions on the fly. These systems do not only provide the analysis of static code, but runtime behavioraswell,includingcontext-awarefixesrelevantto patterns in a particular project. There has been a further development in optimization with the case of RL – with such frameworks as Intel’s ControlFlag (Sutton et al., 2020) optimizing code for performance, energy consumption or scalability, independently. For instance, RL agents tinker thousands of code change-ups against benchmarks to find optimum configuration for GPU acceleration–ormemorymanagement.Theseinnovations reducedebuggingcycles fromdays to minutesand makes performance tuning possible at scale impractical for the humandeveloper.

3.5.2

As AI has become an extentensive cooperative partner in programming, the role of the developer has been reinvented by a blend of human creativity and machine effectiveness. researches like Cambronero et al. (2019) showcasetheincreasedlevelsthatcomewiththeuseofAI driven tools in an improvement of code search and recommendation, with “pair programmers” making available relevant snippets or architectural patterns. Services such as GitHub Copilot or Amazon CodeWhisperer complement creativity by suggesting new algorithmsorboilerplatecodeswhichallowdevelopersto outsourceideasoutsidethe ambitoftheirimmediateskill sets. Nevertheless, such cooperation poses the potential for skill erosion because the tendency to rely on AI too heavily can stifle fundamental skills such as manual debugging or algorithm design. For example, developers thatuseAIassistantsusuallycodefaster,butfailtodebug AI-generated outputs they did not author. Scholarly research points out the necessity for curricula that would combine automation with retention of the core skills, whereby programmers will learn to question AI propositions, not to comply with them passively. The direction in which human-AI collaboration will take is in design of systems that augment, not replace human agency, facilitating symbiotic working environments in

whichmachinestakecareoftheroutineswith developers focusingonnewideasandsolvingproblems.

Tools like GitHub Copilot, OpenAI Codex, and DeepMind’s AlphaCode have brought about the revolution of the automatedcodegeneration,lettingthedevelopersconvert high level intentions to up and running code with very littlemanualintervention.Suchsystemsmakeuseoflarge repositories of stored code, and sophisticated machine learning models to predict or generate snippets of code, full functions, or even solutions for competitive programming problems. In the same manner, it also has deepimplicationsonsyntaxdesignasthetraditionalrigid structures are substituted by flexible, natural language orientedinterfaces.

Using Natural Language Processing (NLP), human intent and machine execution are married, enabling developers toprogram with the environment inan entirelynewway. However, if you ask me to speak into the void and transcribe via my words what I hear as logic, parse pseudocode, comments, or very loosely structured descriptionsandthenhaveNLPpoweredmodelslikecode converters based on pseudocode and translate it into human readable form, so that AI can formalize this into executable code, I can. The result is that it lowers friction friction between conceptualization and implementation, and allows developers time to focus on solving the problem freefrom thesyntacticprecision required by the language, so that only the conceptual precision is necessary. In such a case, models can learn these implicit requirementsfrom paired natural languageandcode, and thus infer them, or given a user’s description of a graph traversalalgorithm,automaticallywriteoptimizedJava or C++ code, in other words real time human though translators translating human thought directly to a machinereadablecode.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

AI makes adaptive programming languages emerge that adapt their semantics and behavior on the fly in the runtimecontext,oraccordingtoperformancegoals.These systems, unlike static languages, use machine learning to on the fly optimize code execution, may be reallocating those resources, parallelizing a task, or even rewriting thoseareasofcodethatareinefficient, ontheirown.Such a trend enabled by MLIR (Multi Level Intermediate Representation)inGoogle’sprojectsaimstogainpatterns inthecodewrittenbyAIcompilersforbetteringhardware specific optimizations. Doing so ultimately helps improve efficiency, future proof languages to be prepared for new future processors and computational demands that might subsequently emerge as languages are able to adapt to these novel systems and demands. This model therefore sees programming languages like living ecosystems that arealwaystryingtoperfectthemselveswithAIinorderto be expressive, fast, and scalable, but without the developershavingtomanuallyworkthisout.

5.1

Partially because of the impending deployment of AI into programming in the future it has caused a reduction of syntax by getting rid of the completely unnecessary boilerplate code that came as a result of abstracting intelligently around it. Through their integration of low leveldetailstheyallowdeveloperstoexpressconstruction of neural networks or NLP pipeline as only a few line of code. Automatic inference of parameters, automatic memory allocation and automatic parallelization are examples for automatic optimization automated by modern frameworks of AI, that free programmers from bothering with syntax details and let them to thinking at the ideology level. No wonder nools would do well to reflect this broader language trend of, e.g. Python, Julia, etc, of being marked readable and concise due to AI powered code completion tools that predict and generate repetitive patterns and saving developers cognitive burdenandacceleratingprototyping.

Directly embedded with context aware intelligence into development environment, already present in the programminglanguages,isbeingenrichedwiththehelpof AI. The fancy type inference systems based on ML can predictthetypesofvariables,andcanfindthemismatches oftypes,asyoucode,andtheAIbasedIDEslikePyCharm or Visual Studio Code have become capable of predicting and suggesting fixes for type errors while you type. Semantic enrichment continues to code completion contextaware,whichnotonlysemanticallyanalyzinglocal codebutalsoprojectstructureanddocumentationtohelp

suggesting relevant recommendations in its lair code completionmodels,suchaswhatGitHubCopilotdoes.This layerisbuiltasasemanticlayerontopofstaticlanguages and transforms static languages to become dynamic systems that understand your intention, understand your edge cases, and propose potential optimizations, where most of these happen in the intersection of code written byhumansandideasgeneratedbymachines.

With the rise of AI, another renaissance of AI ensued and DSLshavebeenbuiltupforhighlyspecializedtasksinthe fields of machine learning, data science, and automation. TensorFlowandPyTorchneuralnetworksarecreatedina hardware agnostic form (computational graphs or dynamic computational graphs) that are optimized for executiononCPUs,GPUs,andTPUs.Similarly,SQLFlowor Halideallowstoperformdomainspecificoptimizationvia AI to generate the corresponding backend code for high level user queries. Usually, these DSLs are co designed with an AI system that understands the domain's constraint in terms of absorbed tensor operation parallelism,oranoptimizationofqueryexecutionplan,so that the developer can work in a higher level of abstractions while keeping the performance. It’s a sign of this proliferation of very human interpretable languages thatoptimise for the machinescomputation in automated workflowsofAI.

WhenIthinkAI,whatIthinknowisyouhavearealtime, contextual awareness to the systems, and the system can actually identify and recommend fixes to these bugs that are humanish in their intuition. DeepCode and Infer at Facebook learn from machine learning model on large code repositories, patternsthatoftenoccur asnullpointer exceptionsorraceconditionswellaheadofwhentheyflag these to the developers. These systems differ from static analysis in that they incorporate runtime data and make use of context based on historical project compilation to give high risk vulnerabilities priority and suggest semantically appropriate compensations. IDE plugins powered by AI can provide a 'broken loop' example, suspectanunsafeAPIusage,operateinaroleofproactive collaborators to shorten debugging cycle, and achieve more reliable code. In addition, this capability also not only quickens the development cycle, it also helps in quality code development since it gives expert level understandingofthedaytodayworkflows.

WiththeuseofReinforcementlearning(RL)andAIdriven analytics, the code refactoring which is currently manual andlaborintensivenature,canbedoneautomaticallywith

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

the goal defined by the user itself. RL is used by the systems, Intel’s ControlFlag and Google’s ML-compiler optimizers, where they repeatedly modify code, testing against performance benchmarks to automatically restructure algorithms for efficiency, scalability and energyconsumption,among other things.Forexample, AI can reparse monolithic code base to micro services with minimal dependency on human, as well as requery databasequeriestoberewrittenforfasterexecution.They view the types of tradeoffs between latency regard and regards for memory usage as they explore 1000s of optimizations, and then learn which ones result in solutions which achieve objectives described by them.) If thisisdeveloped,thisreducesthedeveloperwhowantsto innovate,notmaintain,todosomeofthisworkandAIcan then take over the granular iterative work of tuning the codeinordertoadapttoresourcedemandchanges.

Traditionally roles of developers are being reduced to symbiotesthatworktogetherhandinhandandinsteadof replacing human creativity, AI is now becoming a collaborative partner in solving programming challenges. To give an idea, GitHub Copilot and Amazon CodeWhisperer are ‘pair programmers’, meaning they provide suggestions of context aware code from boiler plate to novel algorithmic functionality. For example, taking a machine learning pipeline as example, the developer might receive AI proposed alternative architectures or alternative hyperparameter configurations towards triggering the fun of the experiment.Naturally,havingAIfastforwardtheideation and do away with the tedium has concerns, such as dependency and originality: becoming overly reliant will diminish the profound technical craft. Studies show that when AI assistants are used by developers, they produce better, faster code, but the study indicates that they struggle to debug code produced by AI without complete understanding. Therefore, the development of collaborative programming will follow the design of AI thatallowsustomaintainagencyashumanbeings,asthe programmer, being the creative decision maker, and the machinetakingcareoftheboringwork.

7.1

GitHub’s Copilot powered by the OpenAI’s Codex is one good example of NLP involved in code suggestions in current software development tools. The generated syntactically correct and semantically relevant code snippets provide context via comments, function names and existing code code that augments the developer’s workflow. We’ve seen, based on studies, that developers using Copilot can actually subtract time of boilerplate tasks like integrations of API’s or parsing of data in order

to put more time into higher level designs and logic. The autofill of libraries that it surfaces, regardless of lesser known ones or alternate algorithmic approaches, nudges us towards serendipitous learning; albeit everyone remainsdoubtfulofoverusingsuchautogeneratedcodeor the biases in the training data to be propagated into the generatedcodeitself.ButCopilotdemonstratesthepower of AI in removing the need to write code by hand and insteadwesculptandrefinecodethatwehavewritten.

DeepMind’s AlphaCode breakthrough is to achieve top human programmer level AI in algorithmic problem solving in a contest like Codeforces. The training for AlphaCode leverages massive competition problems and their solutions, transformer based language models, and massive sampling and filtering to generate thousands of possible answers and filter to determine viable submissions. Similarly, here, we also take a similar approachashumansdo,whichistoexploremanypossible pathways at the beginning to refine. AlphaCode’s success showsthatAIcanindeedsolvesomeopenended,creative tasks which were thought to be out of reach of computationalAI,suchasdiscoveringnewalgorithmsthat solveproblemsunderconstraint.Allitssolutionsareoften less elegant than human code, but by showing that AlphaCode can participate in competitive programming withnewpointsofviewortolearnwhethersomestrange approachesareevenfeasible,itaddsnewperspectivesfor thehorizonofcomputationalcreativity.

For instance, if you are into low code/no code software development platforms, such as Microsoft Power Apps, now you can use AI to help you build functional applications if you are not a programmer by just using drag and drop interfaces and natural language prompt. These are platforms, which integrate the AI to automate the backend logic, or understand a user’s description of a inventory management system and generatethe database schemas and workflows, where as they provide the real time suggestions for UI/UX improvement. Tools that abstract out coding into visual or conversational interactions allow domain experts across fields like healthcare and finance to make a solution which is customized to their use cases without dependence on dedicated engineering teams. However, democratization of these tools leads to a set of severe scalability and maintainability issues, as in some cases, an AI-generated application may be exposed to extreme use cases, it may lack robustness. However, AI has a part to play in filling the gap between those who understand the technical side ofaproblemandthosewhodon’tthroughtheplatformsof today like Power Apps, breaking down the barrier to softwareproblemsolving.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

The AI code generation is destined to replicate inequities duetotheneedoflarge,mostlyuncurateddatasetstofeed into it. To give an example, imagine models trained only off open source repositories which could possibly end up overcharacterizingsomeofthelanguages(e.g,.Pythonand JavaScript) because of which other languages can get disenfranchised,orpoororbuggycodesmaybeproduced for obscure or legacy systems. The theme of algorithmic fairness implies the fact that euphemistically one can say, that AI code does not know of this discriminatory logic when it is executing the hiring algorithms with a bias, processing of the system data with a bias via facial recognition or any similar case. Crucially, this raises problems in the domain regarding accountability as the humanauthoredflawedcode,orimbalancedtrainingdata, can lead to AI generated code perpetuating harmful stereotypes or omitting cases inadequately observed in thetrainingdata.However,thesolutiontotheseproblems can be to proceed with extensive dataset auditing while following fair training protocols and also provide the explainability of model decision making for the developmentofethicalAIgeneratedcode.

Although efficient, AI generated code usually entails the same vulnerabilities of its training data and makes systemsvulnerabletoadversarialattacksandexploitation. Modelsthathave beentrainedoncodesnippetsincluding unresolved security issues allowing the models to reproducethewaySQLinjectionrisksorbufferoverflows arereplicatedtocreateanexploitableentrypointarealso included. What makes this risk exacerbated is adversarial attacks: an adversarial attack is one which allows malicious adversaries to create inputs that make an AI system generate insecure code such as bypassing authentication checks or leaking secrets. GitHub Copilot, likemanyotherbestintentionsofautomaticallygenerated solutions, has been found to suggest deprecated APIs or insecure encryption protocols, in other words, there can be no guarantee of robustness. To prevent these risks, security focused training data sets would have to be included with real time vulnerability scanning in AI tools andadversarialtestingframeworksmustbedeployedthat testgeneratedcodebeforedeployment.

The law is unclear with respect to how it would treat ownership and licensing of AI authored code as well. As open source projects are often used as input and occasionallyasbasistogeneratecodeviaAIsystems,such as Codex, infringement and attribution of copyright is unclear.Thatis,forexample,thosewhouselegallytrained

on GPL licensed code, derivatives produced by such a model would unwittingly be in violation of the will of the license, puttingusers at legal risk.Furthermore, there are nowellsetprecedentsfordeterminingauthorshipwhenit is in dispute over a piece of code (especially when one or more AIs produced the code) and the copyright status of an AI (if it can have copyright status). When current intellectual property laws, written for human authors, nowhavetoincludeAIasatoolofcreationanditsroleas an author in chief, then it is not so easy to sanitize the moreextremeresultsofthepotentcombinationoflawand technology in future works, these fights are being fought incourtsandpolicymaking ofallkinds.Itwillberesolved by updating on licensing agreements, developing provenance tracking for outputs of AI as well as globally reaching a consensus on the legal status of what machine generatedcontentshouldbe.

The double edged sword of AI coding assistants is surely rising on programming education, indeed. We reduce the barriertoentrybybeingabletohavesyntaxautomated,of having debugging itself automated, but relying too much onitmaytakeawayskillsthatareabsolutelyfoundational towhereapersonmightsolveproblemsoranalysewhere their errors are manually. Thus, students graduate with the ability to make use of AI’s suggestion, instead of training to debug complicated systems or fine tune algorithms for their particular responsibilities. But AI can alsomakepedagogybetterbyfreeingupthelearnertodo high level design and computational thinking and allow computerstodothingsforthelearnerthatitrepeatsmany times. On the other hand, educators are being forced to revisecurriculatoaddressautomation,withoutneglecting core competencies, and educating students in the critical evaluationofAIproducedcode,orexploitingthesetoolsto explore forefront topics before them in their curriculums. Walking this line finely is important so that AI does not become a crutch to helping us raise adaptiveandcreative programmers.

Touseartificialintelligenceinprogrammingasaspeciesis to completely redefine the building blocks and expressivity of programming languages, and the very weave of the manner in which software will be created. However, AI is not just a productivity tool because it relieves the syntactic rigor while adding a semantic context, practically getting the team as close together in the problem solving as developers do when they collaborate to come up with the things and think about how they are all going to be written outside of the syntactic rigor. Natural language driven code generation, adaptive language semantics, AI optimized compilers are someofthewaysdynamicinlanguageprogrammingcould haveaspaceinbetweenhumanimaginationandcomputer

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

efficiency. With the price of biased data for training, securityvulnerabilitiesinautogeneratedcode,andhowto cover up questions of intellectual property with an ambiguous moral framework and an inter disciplinary partnership that is needed. Additionally, while AI brings more programming to the many by enabling the development of more programming to more people throughlowcodeplatformsandintelligentassisstances,it also requires that education continually seeks to recapture, even if it is in a different way, the basic programming skills in the shadow of such automation. However, such potential will only go realized if it is built on only the most careful consideration of the technical, ethical, and pedagogical complexity that can allow ourselvesandAIpoweredcapabilitytogethertoaccelerate far beyond where we are already accelerating but separately.Consequently,ratherthanevolving(orwaiting for) a future with purely human brains, we will be evolving with human, machine and have to coevolve for thecomputationalproblemsofecosystemsoftomorrow.

1. Abadi, M. et al., "TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems," arXiv:1603.04467, 2016.

2. Adams, E. N., "Optimizing Compiler for a Class of Arithmetic Expressions," J. ACM, vol. 15, no. 2, pp. 175–188,1968.

3. Allamanis, M. et al., "A Survey of Machine Learning for BigCodeandNaturalness,"ACMComput.Surv.,vol.51,no. 4,pp.1–37,2018.

4. Bavishi, R. et al., "AutoPandas: Neural-Backed Generators for Program Synthesis," Proc. ACM Program. Lang.,vol.5,no.OOPSLA,pp.1–27,2021.

5. Beizer, B., Software Testing Techniques. New York, NY: VanNostrandReinhold,1990.

6. Brown, T. et al., "Language Models Are Few-Shot Learners," Adv. Neural Inf. Process. Syst., vol. 33, pp. 1877–1901,2020.

7. Buolamwini, J. et al., "Gender Shades: Intersectional AccuracyDisparitiesinCommercialGenderClassification," Proc.Mach.Learn.Res.,vol.81,pp.1–15,2018.

8. Cambronero, J. et al., "When Deep Learning Met Code Search," Proc. ACM Joint Meet. Eur. Softw. Eng. Conf., pp. 964–974,2019.

9. Chen, M. et al., "Evaluating Large Language Models TrainedonCode,"arXiv:2107.03374,2021.

10. Dean, J. et al., "The Deep Learning Revolution and Its Implications for Computer Architecture and Chip Design," Proc.IEEEInt.Solid-StateCircuitsConf.,pp.8–14,2020.

11. Devlin, J. et al., "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding," Proc.NAACL-HLT,pp.4171–4186,2019.

12.Ferrante,J.etal.,"TheProgramDependenceGraphand ItsUsein Optimization,"ACMTrans. Program.Lang. Syst., vol.9,no.3,pp.319–349,1987.

13. Fried, D. et al., "Incorporating External Knowledge through Pre-training for Natural Language to Code Generation," Proc. Annu. Meet. Assoc. Comput. Linguist., pp.6045–6052,2022.

14. Goodfellow, I. et al., "Generative Adversarial Networks," Adv. Neural Inf. Process. Syst., vol. 27, pp. 2672–2680,2014

15. Gulwani, S. et al., "Program Synthesis," Found. Trends Program.Lang.,vol.4,no.1–2,pp.1–119,2017.

16.Hindle,A.etal.,"OntheNaturalnessofSoftware,"Proc. IEEEInt.Conf.Softw.Eng.,pp.837–847,2012.

17. Howard, J. et al., "Fast.ai: A Layered API for Deep Learning," IEEE Trans. Neural Netw. Learn. Syst., vol. 33, no.9,pp.4177–4185,2022.

18. Just, R. et al., "The Defects4J Dataset: A Large-Scale Diverse Collection of Real-World Java Bugs," Proc. IEEE/ACMInt.Conf.Softw.Eng.,pp.144–154,2014.

19.Koza,J.R.,GeneticProgramming:OntheProgramming of Computers by Means of Natural Selection. Cambridge, MA:MITPress,1992.

20. Li, Y. et al., "CodeBERT: A Pre-Trained Model for Programming and Natural Languages," Proc. 2020 Conf. Empir.MethodsNat.Lang.Process.,pp.1536–1547,2020.

21. Mikolov, T. et al., "Efficient Estimation of Word RepresentationsinVectorSpace,"arXiv:1301.3781,2013.

22. Nijkamp, E. et al., "CodeGen: An Open Large Language ModelforCode,"arXiv:2203.13474,2022.

23.Pearce,H.etal.,"AsleepattheKeyboard?Assessingthe Security of GitHub Copilot’s Code Contributions," Proc. IEEESymp.Secur.Priv.,pp.754–768,2022.

24.Raychev,V.etal.,"PredictingProgramPropertiesfrom ‘BigCode’,"ACMSIGPLANNot.,vol.50,no.1,pp.111–124, 2015.

25. Silver, D. et al., "Mastering the Game of Go with Deep Neural Networks and Tree Search," Nature, vol. 529, no. 7587,pp.484–489,2016.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

26. Sutton, C. et al., "Intelligent Code Completion Using Bayesian Networks," ACM Trans. Softw. Eng. Methodol., vol.27,no.1,pp.1–30,2018,doi:10.1145/3177754.

27. Sutton, C. et al., "Machine Learning for Automatic Program Repair," IEEE Softw., vol. 37, no. 3, pp. 22–29, 2020.

28. Sutton, R. S. et al., Reinforcement Learning: An Introduction.Cambridge,MA:MITPress,2018.

29. Thrun, S. et al., "Explanation-Based Neural Network LearningforRobotControl,"Proc.Adv.NeuralInf.Process. Syst.,pp.287–294,1995.

30. Tufano, M. et al., "Deep Learning Similarities from Different Representations of Source Code," Proc. IEEE/ACMInt.Conf.Softw.Eng.,pp.914–919,2018.

31. Vaswani, A. et al., "Attention Is All You Need," Adv. NeuralInf.Process.Syst.,vol.30,pp.5998–6008,2017.

32. Wang, Y. et al., "Deep Learning for Code: Challenges and Opportunities," IEEE Softw., vol. 38, no. 4, pp. 21–27, 2021.

33. Xu, B. et al., "A Comparative Study of AI-Driven Code Generation Tools: Capabilities and Limitations," IEEE Trans.Softw.Eng.,vol.49,no.3,pp.1120–1135,2023.

34.Ziegler,A.etal.,"MeasuringGitHubCopilot’sImpacton Productivity," Commun. ACM, vol. 66, no. 11, pp. 34–36, 2023.