General-purpose robots require a “data flywheel” to collect data from teleoperation and simulation, train policies, and evaluate whether they’ll work, notes Boston Dynamics.

Editor’s Note: Where can robotics and AI take us?

Why humanoid robots aren’t advancing as fast as AI chatbots

Scraping the web to train generative AI is one thing, but training robots for real-world tasks is an order of magnitude more challenging, according to UC Berkeley Prof. Ken Goldberg.

MIT roboticists debate the future of robotics, data, and computing

Despite the growing interest in AI and robotics, there isn’t a single approach to applying machine learning to the physical world. Experts from MIT, EPFL, and Georgia Tech discuss possibilities.

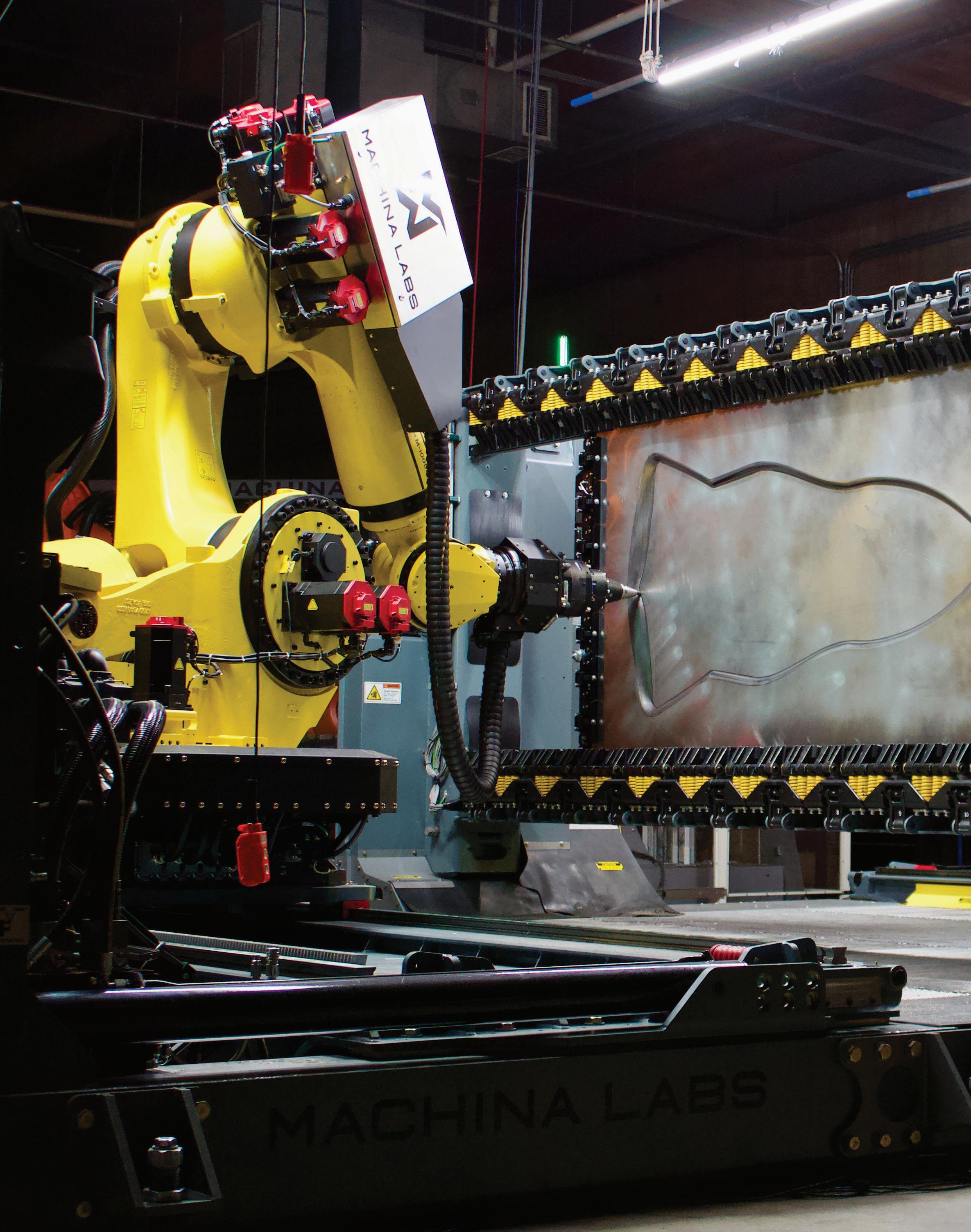

Machina Labs uses robotics and AI to customize automotive body manufacturing

The shaping of sheet-metal car parts with dies and molds requires a lot of space and money. RoboCraftsman could not only streamline manufacturing, but it could also allow for new materials.

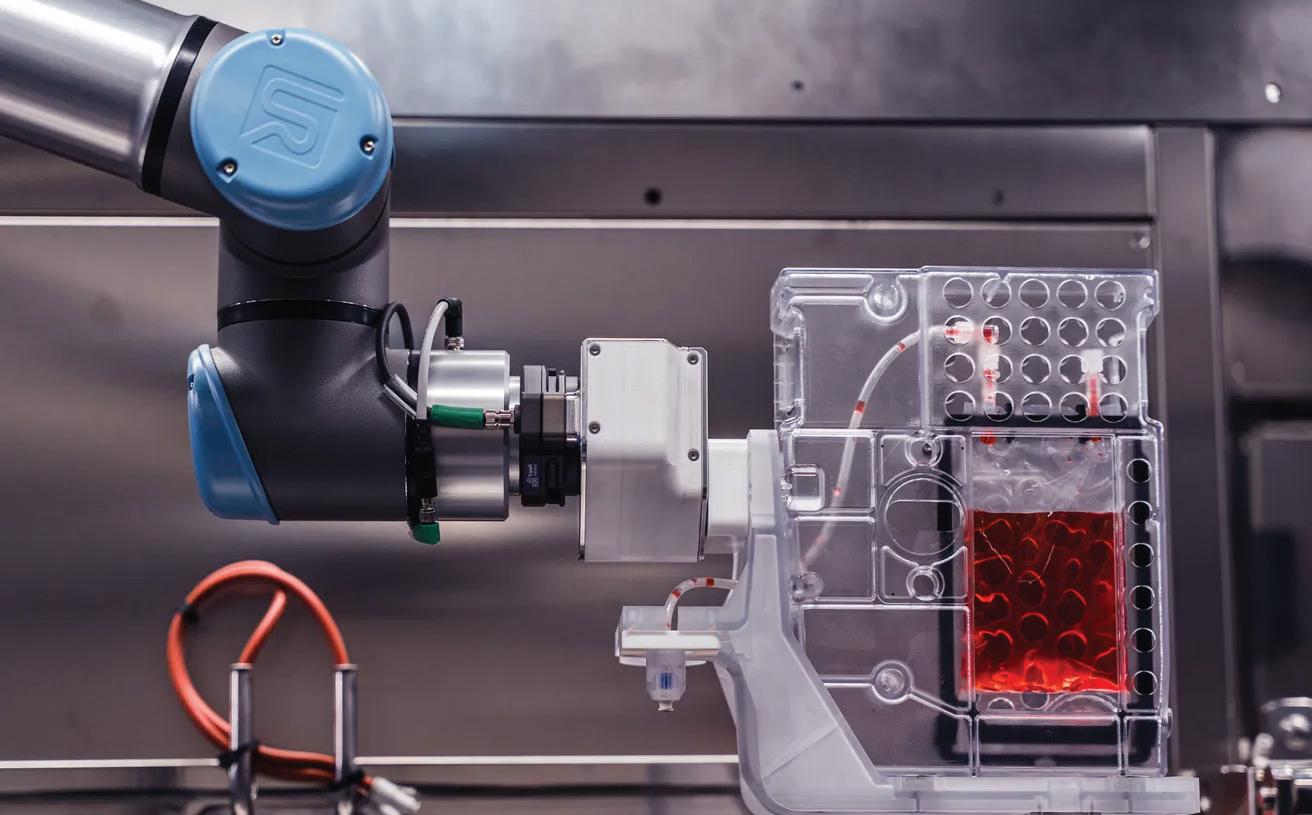

Multiply Labs reduces biomanufacturing costs by 74% with UR cobots

Multiply Labs, which develops and produces individualized drugs, chose force- and power-limited arms to automate small batch processing.

Updated ANSI/A3 standards address industrial robot safety

The biggest A3 standards update in a decade covers industrial robots, as well as applications and workcells. It covers functional safety requirements, end effectors, robot classifications, cybersecurity, and more.

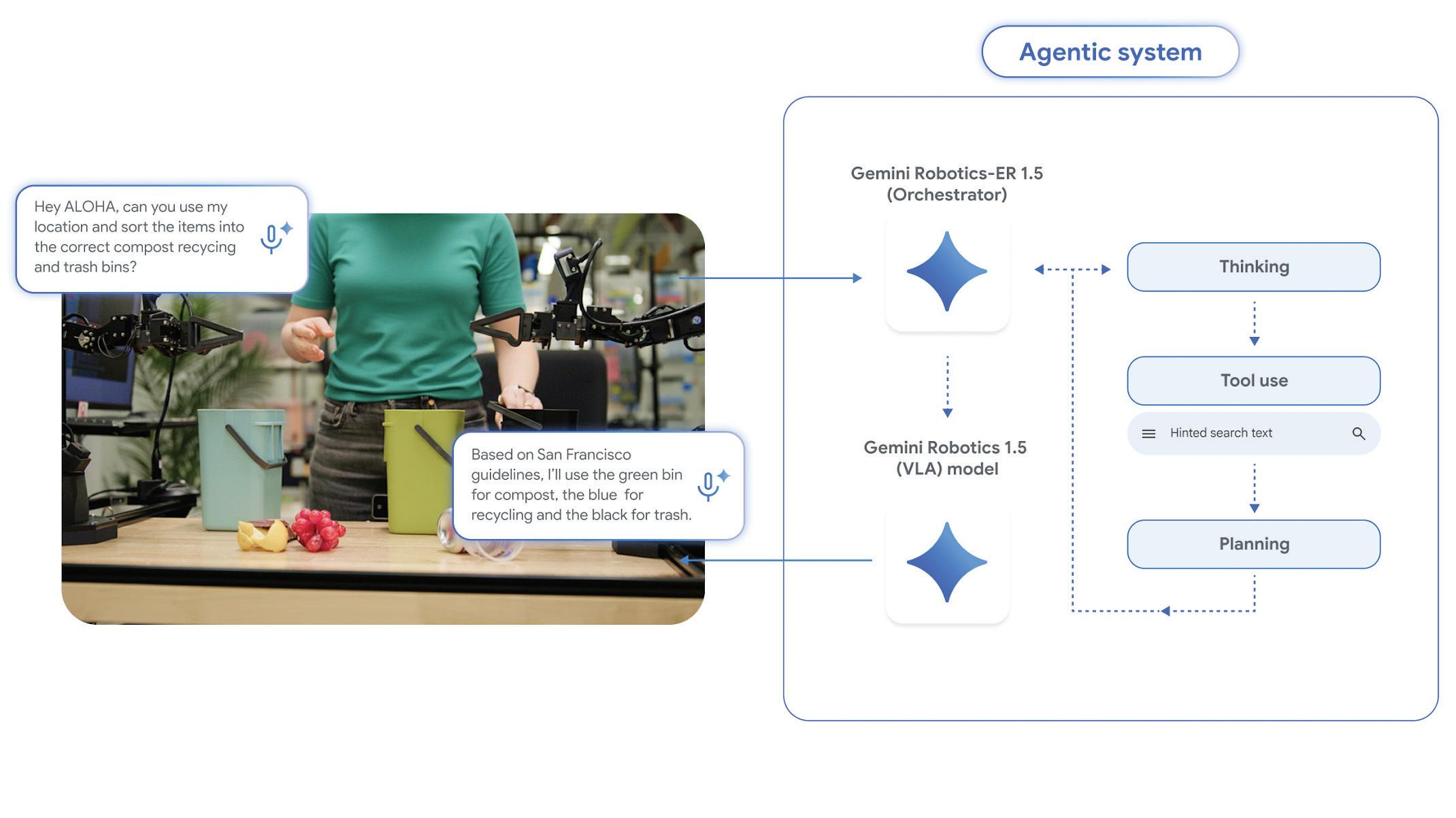

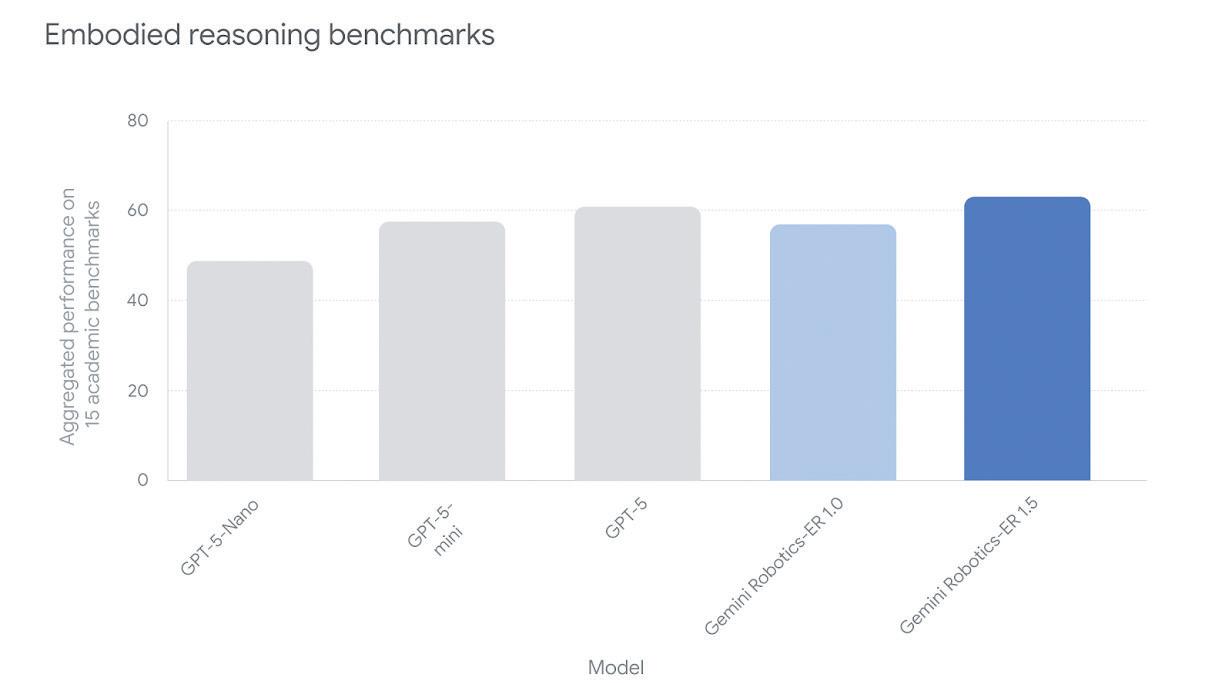

Gemini Robotics 1.5 enables agentic experiences, explains Google DeepMind

Google says its new vision-language-action model is its most capable yet for turning images into robot commands.

It’s time to recreate China’s robotics strategy in the U.S.

While China and the U.S. are major economic rivals, each is also a major market for automation. What can we learn from China’s approach to technology?

The pace of robotics development has picked up. However, since it’s a multidisciplinary field – involving electrical, software, and mechatronics engineers, to name just a few – it may be difficult to see the forest for the trees.

In this year’s Robotics Handbook, The Robot Report looks at promising innovations in hardware and software as humanoid robots and artificial intelligence bask in investor and public attention.

In this issue, you can learn about technology advances, market challenges, and the regulatory and global trade environment. It’s a helpful snapshot of an evolving ecosystem.

We also get perspectives from top minds in robotics and AI. But don’t expect an easy consensus, as these experts draw from varied experiences in research, development, and commercialization.

While many companies are pursuing general-purpose automation, few have been able to demonstrate progress. Boston Dynamics and Toyota Research Institute share their work on using large behavior models to train the Atlas humanoid.

Also in this issue, Ken Goldberg, a noted roboticist at UC Berkeley, explains why humanoids aren’t progressing as fast as AI chatbots. He leads a debate among experts from MIT CSAIL, EPFL, and Georgia Tech about the future of data, robotics, and computing. Progress isn’t inevitable, but it is the product of decisions being made and the work being done today.

In fact, some forms of AI and industrial robots are already helping companies, as we show in a case study with Machina Labs and Toyota.

At the same time, form factors such as force- and power-limited robot arms, commonly referred to as cobots, are finding their place in more production settings. Multiply Labs used systems from Universal Robots in a “biomanufacturing cluster” it said could cut the cost of cell therapies by 74%.

Standards are key enablers of progress in robot design and deployment. Look at how ANSI/A3 R15.06-2025 applies to safety for industrial robots. Companies that consider standards early in their development and integration processes will have an easier time scaling.

Back on the AI side, Google DeepMind recently released Gemini Robotics 1.5, which it said can “unlock agentic experiences” across robot embodiments. Understanding one’s environment is a prerequisite to more complex behaviors.

Finally, Katie Clasen at Prelude Ventures talks about how the U.S. can learn from China’s robotics strategy. While they have different strengths, both countries want to take the lead in emerging technologies. Can we identify the right applications and investments? RR

EUGENE DEMAITRE

Editorial Director

The Robot Report

EDITORIAL

VP, Editorial Director

Paul J. Heney pheney@wtwhmedia.com

Editor-in-Chief Rachael Pasini rpasini@wtwhmedia.com

Managing Editor Mike Santora msantora@wtwhmedia.com

Executive Editor Lisa Eitel leitel@wtwhmedia.com

Senior Editor Miles Budimir mbudimir@wtwhmedia.com

Senior Editor Mary Gannon mgannon@wtwhmedia.com

PRINT CREATIVE SERVICES

VP, Creative Director Matthew Claney mclaney@wtwhmedia.com

Art Director Allison Washko awashko@wtwhmedia.com

AUDIENCE DEVELOPMENT

Director, Audience Growth Rick Ellis rellis@wtwhmedia.com

Audience Growth Manager Angel Tanner atanner@wtwhmedia.com

DIGITAL MARKETING

VP, Operations Virginia Goulding vgoulding@wtwhmedia.com

Digital Marketing Manager Taylor Meade tmeade@wtwhmedia.com

Customer Service Manager Stephanie Hulett shulett@wtwhmedia.com

Customer Service Rep Tracy Powers tpowers@wtwhmedia.com

Customer Service Rep JoAnn Martin jmartin@wtwhmedia.com

Customer Service Rep Renee Massey-Linston renee@wtwhmedia.com

Customer Service Rep Trinidy Longgood tlonggood@wtwhmedia.com

DIGITAL PRODUCTION

Digital Production Manager Reggie Hall rhall@wtwhmedia.com

Digital Production Specialist Nicole Johnson njohnson@wtwhmedia.com

Digital Design Manager Samantha King sking@wtwhmedia.com

Marketing Graphic Designer Hannah Bragg hbragg@wtwhmedia.com

Digital Production Specialist Elise Ondak eondak@wtwhmedia.com

WEB DEVELOPMENT

Web Development Manager B. David Miyares dmiyares@wtwhmedia.com

Front End Developer Melissa Annand mannand@wtwhmedia.com

Software Engineering Manager David Bozentka dbozentka@wtwhmedia.com

EDITORIAL

Executive Editor Steve Crowe scrowe@wtwhmedia.com @SteveCrowe

Editorial Director Eugene Demaitre edemaitre@wtwhmedia.com @GeneD5

Senior Editor Mike Oitzman moitzman@wtwhmedia.com @MikeOitzman

Associate Editor Brianna Wessling bwessling@wtwhmedia.com

Chatbots have rapidly advanced in recent years, and so have the large language models, or LLMs, powering them. LLMs use machine learning algorithms trained on vast amounts of text data.

Many technology leaders, including Tesla CEO Elon Musk and NVIDIA CEO Jensen Huang, believe a similar approach will make humanoid robots capable of performing surgery, replacing factory workers, or serving as in-home butlers within a few short years. Other robotics experts disagree, according to UC Berkeley roboticist Ken Goldberg.

In two new papers published online in the journal Science Robotics, Goldberg described how the “100,000-year data gap” will prevent robots from gaining real-world skills as quickly as artificial intelligence chatbots have gained language fluency.

In the second article, leading roboticists from MIT, Georgia Tech, and EPFL summarized the heated debate among roboticists over whether the future of the field lies in collecting more data to train humanoid robots or relying on “good old-fashioned engineering” to program robots to complete real-world tasks.

UC Berkeley News recently spoke with Goldberg about the “humanoid hype,” the emerging paradigm shift in the robotics field, and whether AI really is on the cusp of taking everyone’s jobs.

Goldberg spoke about training robots for the real world at RoboBusiness 2025. He explored how advances in physical AI that combine simulation, reinforcement learning, and real-world data are accelerating deployment and boosting reliability in applications like e-commerce and logistics.

Will humanoid robots outshine humans?

Recently, tech leaders like Elon Musk have made claims about the future of humanoid robots, such as that robots will outshine human surgeons within the next five years. Do you agree with these claims?

Goldberg: No; I agree that robots are advancing quickly, but not that quickly. I think of it as hype because it’s so far ahead of the robotic capabilities that researchers in the field are familiar with.

We’re all very familiar with ChatGPT and all the amazing things it’s doing for vision and language, but most researchers are very nervous about the analogy that most people have, which is that now that we’ve solved all these problems, we’re ready to solve [humanoid robots], and it’s going to happen next year.

I’m not saying it’s not going to happen, but I’m saying it’s not going to happen in the next two years, or five years or even 10 years. We’re just trying to reset expectations so that it doesn’t create a bubble that could lead to a big backlash.

What are the limitations that will prevent us from having humanoid robots performing surgery or serving as personal butlers in the near future? What do they still really struggle with?

Goldberg: The big one is dexterity, the ability to manipulate objects. Things like being able to pick up a wine glass or change a light bulb. No robot can do that.

It’s a paradox — we call it Moravec’s paradox — because humans do this effortlessly, and so we think that robots should be able to do it, too. AI systems can play complex games like chess and Go better than humans, so it’s understandable that people think, “Well, why can’t they just pick up a glass?” It seems much easier than playing Go.

But the fact is that picking up a glass requires that you have a very good perception of where the glass is in space, move your fingertips to that exact location, and close your fingertips appropriately around the object. It turns out that’s still extremely difficult.

In your new paper, you discuss what you call the 100,000-year “data gap.” What is the data gap, and how does it contribute to this disparity between the language abilities of AI chatbots and the real-world dexterity of humanoids?

Goldberg: To calculate this data gap, I looked at how much text data exists on the internet and calculated how long it would take a human to sit down and read it all. I found it would take about 100,000 years. That’s the amount of text used to train LLMs.

We don’t have anywhere near that amount of data to train robots, and 100,000 years is just the amount of text that we have to train language models. We believe that training robots is much more complex, so we’ll need much more data.

Some people think we can get the data from videos of humans — for instance, from YouTube — but looking at pictures of humans doing things doesn’t tell you the actual detailed motions that the humans are performing, and going from 2D to 3D is generally very hard. So that doesn’t solve it.

Another approach is to create data by running simulations of robot motions, and that actually does work pretty well for robots running and performing acrobatics. You can generate lots of data by having robots in simulation do backflips, and in some cases, that transfers into real robots.

But for dexterity — where the robot is actually doing something useful, like the tasks of a construction worker, plumber, electrician, kitchen worker or someone in a factory doing things with their hands — that has been very elusive, and simulation doesn’t seem to work.

Currently people have been doing this thing called teleoperation, where humans operate a robot like a puppet so it can perform tasks. There are warehouses in China and the U.S. where humans are being paid to do this, but it’s very tedious.

And every eight hours of work gives you just eight more hours of data. It’s going to take a long time to get to 100,000 years.
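The arithmetic behind that remark can be made concrete with a rough sketch. It assumes, purely for illustration, that the 100,000-year figure counts continuous hours, that each teleoperator logs one eight-hour shift per working day, and that there are 250 working days per year. None of these parameters come from the interview, and the function name `calendar_years_needed` is hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: the data gap is measured in continuous hours


def calendar_years_needed(target_data_years: float,
                          num_operators: int,
                          shift_hours_per_day: float = 8.0,
                          work_days_per_year: int = 250) -> float:
    """Calendar years for a teleoperation workforce to log the target amount of data.

    Each hour of teleoperation is assumed to yield exactly one hour of robot data.
    """
    if num_operators <= 0:
        raise ValueError("num_operators must be positive")
    target_hours = target_data_years * HOURS_PER_YEAR
    hours_logged_per_year = num_operators * shift_hours_per_day * work_days_per_year
    return target_hours / hours_logged_per_year


if __name__ == "__main__":
    for operators in (1, 1_000, 100_000):
        years = calendar_years_needed(100_000, operators)
        print(f"{operators:>7,} operators: {years:,.2f} calendar years")
```

Under these assumptions, a single operator would need about 438,000 calendar years to log 100,000 years of data, and even 100,000 operators working in parallel would need more than four years.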

Do roboticists believe it is possible to advance the field without first creating all this data?

Goldberg: I believe that robotics is undergoing a paradigm shift, which is when science makes a big change — like going from physics to quantum physics — and the change is so massive that the field gets broken into two camps, and they battle it out for years. And we’re in the midst of that kind of debate in robotics.

Most roboticists still believe in what I call good old-fashioned engineering, which is pretty much everything that we teach in engineering school: physics, math, and models of the environment.

But there is a new dogma that claims that robots don’t need any of those old tools and methods. They say that data is all we need to get us to fully functional humanoid robots.

This new wave is very inspiring. There is a lot of money behind it and a lot of younger-generation students and faculty members are in this new camp.

Most newspapers, Elon Musk, Jensen Huang, and many investors are completely sold on the new wave, but in the research field, there’s a raging debate between the old and new approaches to building robots.

What do you see as the way forward?

Goldberg: I’ve been advocating that engineering, math, and science are still important because they allow us to get these robots functional so that they can collect the data that we need.

This is a way to bootstrap the data collection process. For example, you could get a robot to perform a task well enough that people will buy it, and then collect data as it works.

Waymo, Google’s self-driving car company, is doing that. It’s collecting data every day from real robotaxis, and their cars are getting better and better over time.

That’s also the story behind Ambi Robotics, which makes robots that sort packages. As they work in real warehouses, they collect data and improve over time.

What jobs will be affected by AI and robotics?

In the past, there was a lot of fear that robotic automation would steal blue-collar factory jobs, and we’ve seen that happen to some extent. But with the rise of chatbots, now the discussion has shifted to the possibility of LLMs taking over white-collar jobs and creative professions. How do you think AI and robots will impact what jobs are available in the future?

Goldberg: To my mind as a roboticist, the blue-collar jobs, the trades, are very safe. I don’t think we’re going to see robots doing those jobs for a long time.

But there are certain jobs — those that involve routinely filling out forms, such as intake at a hospital — that will be more automated.

One example that’s very subtle is customer service. When you have a problem, like your flight got canceled, and you call the airline and a robot answers, you just get more frustrated. Many companies want to replace customer service jobs with robots, but the one thing a computer can’t say to you is, “I know how you feel.”

Another example is radiologists. Some claim that AI can read X-rays better than human doctors. But do you want a robot to inform you that you have cancer?

The fear that robots will run amok and steal our jobs has been around for centuries, but I’m confident that humans have many good years ahead — and most researchers agree. RR

Editor’s note: This interview has been edited for length and clarity.

As data scales across tasks, robots could learn common sense.

Adobe Stock

Since its inception, the robotics industry has worked towards creating machines that could handle complex tasks by combining mathematical models with advanced computation. Now, the community finds itself divided on how to best reach that goal.

A group of roboticists from around the world investigated this divide at the IEEE International Conference on Robotics and Automation (ICRA) earlier this year. The show closed with a debate between six leading roboticists:

• Daniela Rus is the director of the Computer Science & Artificial Intelligence Laboratory (CSAIL) and the Andrew (1956) and Erna Viterbi Professor of Electrical Engineering and Computer Science at MIT. Rus also keynoted the Robotics Summit & Expo earlier this year.

• Russ Tedrake is the Toyota Professor at CSAIL, EECS, and MIT’s Department of Aeronautics and Astronautics.

• Leslie Kaelbling is the Panasonic Professor of Computer Science and Engineering at MIT.

• Aude Billard is a professor at the School of Engineering at the Swiss Federal Institute of Technology in Lausanne (EPFL).

• Frank Park is a professor of mechanical engineering at Seoul National University.

• Animesh Garg is the Stephen Fleming Early Career Assistant Professor at the School of Interactive Computing at Georgia Tech.

UC Berkeley’s Ken Goldberg moderated the debate, framing the discussion with the question: “Will the future of robotics be written in code or in data?”

Rus and Tedrake argued that data-driven approaches, particularly those powered by large-scale machine learning, are critical to unlocking robots’ ability to function reliably in the real world.

“Physics gives us clean models for controlled environments, but the moment we step outside, those assumptions collapse,” Rus said. “Real-world tasks are unpredictable and human-centered. Robots need experience to adapt, and that comes from data.”

At CSAIL, Rus’s Distributed Robotics Lab has embraced this thinking. The team is building multimodal datasets of humans performing everyday tasks, from cooking and pouring to handing off objects. Rus said these recordings capture the subtleties of human action, from hand trajectories and joint torques to gaze and force interactions, providing a rich source of data for training AI systems.

The goal is not just to have robots replicate actions, but to enable them to generalize across tasks and adapt when conditions change.

In the kitchen testbed at CSAIL, for example, Rus’s team equips volunteers with sensors while they chop vegetables, pour liquids, and assemble meals. The sensors record not only joint and muscle movements but also subtle cues such as eye gaze, fingertip pressure, and object interactions.

AI models trained on this data can then perform the same tasks on robots with precision and robustness, learning how to recover when ingredients slip or tools misalign. These real-world datasets let researchers capture “long-tail” scenarios – rare but critical occurrences that model-based programming alone would miss.

Tedrake discussed how scaling data transforms robot manipulation. His team has trained robots to perform dexterous tasks, such as slicing apples, observing diverse outcomes, and recovering from errors.

“Robots are now developing what looks like common sense for dexterous tasks,” he said. “It’s the same effect we’ve seen in language and vision: once you scale the data, surprising robustness emerges.”

In one example, he showed a bimanual robot equipped with simple grippers that learned to core and slice apples. Each apple differed slightly in size, firmness, or shape, yet the robot adapted automatically, adjusting grip and slicing motions based on prior experience.

Tedrake explained that, as the demonstration dataset expanded across multiple tasks, recovery behaviors—once manually programmed—began to emerge naturally, a sign that data can encode subtle, high-level common-sense knowledge about physical interactions.

Kaelbling, who also spoke at the event, argued along with Billard and Park for the continuing importance of mathematical models, first principles, and theoretical understanding.

“Data can show us patterns, but models give us understanding,” Kaelbling said. “Without models, we risk systems that work, until they suddenly don’t. Safety-critical applications demand something deeper than trial-and-error learning.”

Billard said robotics differs fundamentally from vision or language: real-world data is scarce, simulations remain limited, and tasks involve infinite variability. While large datasets have propelled progress in perception and natural language understanding, she cautioned that blindly scaling data without an underlying structure risks creating brittle systems.

Park emphasized the richness of inductive biases from physics and biology (principles of motion, force, compliance, and hierarchical control) that data-driven methods alone cannot fully capture. He noted that carefully designed models can guide data collection and interpretation, helping ensure safety, efficiency, and robustness in complex tasks.

Garg, meanwhile, articulated the benefits of combining data-driven learning with structured models. He emphasized that while large datasets can reveal patterns and behaviors, models are necessary to generalize those insights and make them actionable.

“The best path forward may be a hybrid approach,” he said, “where we harness the scale of data while respecting the constraints and insights that models provide.”

Garg illustrated this with examples from collaborative manipulation tasks, where robots trained purely on raw data struggled with edge cases that a physics-informed model could anticipate.

The debate also drew historical parallels. Humanity has often acquired “know-how” before “know-why.” From sailing ships and internal combustion engines to airplanes and early computers, engineers relied on empirical observation long before fully understanding the underlying scientific principles.

Rus and Tedrake argued that modern robotics is following a similar trajectory: data allows robots to acquire practical experience in messy, unpredictable environments, while models provide the structure necessary to interpret and generalize that experience. This combination is essential, they said, to move from lab-bound experiments to robots capable of operating in homes, hospitals, and other real-world settings.

Throughout the debate, panelists emphasized the diversity of the robotics field itself. While deep learning has transformed perception and language tasks, robotics involves many challenges. These include high-dimensional control, variable human environments, interaction with deformable objects, and safety-critical constraints.

Tedrake noted that applying large pre-trained models from language directly to robots is insufficient; success requires multimodal learning and the integration of sensors that capture forces, motion, and tactile feedback.

Rus added that building large datasets across multiple robot platforms is crucial for generalization. “If we want robots to function across different homes, hospitals, or factories, we must capture the variety and unpredictability of the real world,” she said.

“Solving robotics is a long-term agenda,” Tedrake reflected. “It may take decades. But the debate itself is healthy. It means we’re testing our assumptions and sharpening our tools. The truth is, we’ll probably need both data and models – but which takes the lead, and when, remains unsettled.” RR

Automotive body manufacturing typically involves rigid mass-production lines, with dies and presses forming sheet metal into panels and accessories. Machina Labs recently announced a robotic approach that it said will enable vehicle makers to bring customized vehicles to market at mass-production prices.

“Traditional production tools are often massive, comparable in size to a small car and weighing over 20 tons,” stated Ed Mehr, co-founder and CEO of Machina Labs. “With our solution, the need for dedicated tooling per model variation is eliminated. That means lower project capital, less storage both in-plant and for past models, which today can last up to 15 years, and faster production changeovers.”

“The dies or molds to stamp out sheet-metal parts are very expensive and bulky,” he told The Robot Report. “They have to move around rails and cranes, and two-thirds of facilities are filled with these dies.”

Founded in 2019, Machina Labs said it is “building the next generation of intelligent, adaptive, and software-driven factories.”

The Los Angeles-based company’s RoboCraftsman platform integrates advanced robotics and AI-driven process controls to rapidly manufacture complex metal structures for aerospace, defense, and automotive applications.

“We started Machina Labs as a new paradigm of manufacturing, with cell-based matrix production,” Mehr said. “Our program can do different things (metal forming, trimming, and quality control), but forming is our core. With 200 to 300 of our systems, you have the equivalent of a data center for manufacturing using UGVs [uncrewed ground vehicles].”

Machina Labs modernizes sheet forming

The automotive customization and accessories industry was valued at $2.4 billion in 2024 for trucks alone, noted Machina Labs. However, conventional high-volume manufacturing limits the amount of variation in vehicles and the timeframe in which they can be changed.

The company said its RoboCraftsman platform and RoboForming technology enable a proprietary method of incremental sheet forming proven to deliver customized panels from sheet metal for ground and aerospace vehicles at high volume, high quality, and short lead times.

“Our process gets rid of the die,” Mehr explained. “Robots incrementally form the sheet into a shape. You can get parts significantly faster. The fastest you can change die making is like three months; with some complicated ones, it’s up to a year before you can get your first part.”

“With us, you can get your first part in hours after the design is done,” he added. “There are also certain metals that were not able to be formed at room temperature using traditional technologies, so we open up to new alloys like titanium or nickel alloys.”

RoboCraftsman uses robotics to shape metal faster than traditional die-based methods. Machina Labs

In addition, the company claimed that its systems can streamline process flow within the factory. Current manufacturing models require separate storage, repackaging, and dedicated assembly lanes for custom parts.

Machina said its systems provide on-demand part production in low volumes from cells near the assembly line, allowing for dynamic batching or broadcast-driven manufacturing – all without disrupting existing flows.

“The automotive market formed models around these time limits: they had to produce the same car over and over again to amortize the cost of dies and molds and keep the dies and inventory for 15 years to support them,” said Mehr. “We’re looking at a completely new market with customization. We did a topographic map of LA on the hood of one car.”

Toyota provides investment, pilot

“Our first market was defense, with high-mix, low-volume production of UAVs, aircraft, and missiles,” said Mehr. “But I’m a car enthusiast, and we were lucky to get connected with Toyota.”

“We’re very good at making bespoke products, but there’s a lot to learn about standard operating procedures,” he said. “We’ve spent a few years on this collaboration to understand upstream and downstream automotive processes.”

Machina Labs made its announcement at UP.Summit alongside a pilot of the technology with Toyota Motor North America and a strategic investment from Woven Capital, Toyota’s growth-stage venture investment arm.

The pilot project will apply Machina’s RoboForming technology to customize body panels, with the goal of bringing automotive-grade quality and throughput to low-volume manufacturing.

“We envision a future where customization is available for every Toyota driver,” said Zach Choate, general manager of production engineering and core engineering manufacturing at Toyota Motor North America. “The ability to deliver a bespoke product into the hands of our customers is the type of innovation we are excited about.”

“AI-powered manufacturing is transforming how products are designed and produced at scale,” added George Kellerman, founding managing director at Woven Capital. “Customers increasingly demand more personalized products, while engineers need faster, more cost-effective paths from concept to production without the constraints of traditional supply chains.”

Machina plans to spend the next year learning how to integrate its systems into automotive production.

“We’re figuring out how to do a few thousand vehicles or even more,” Mehr said. “We’ve had electric cars and autonomous cars, and I think we’re now in the early stages of personalization. It’s a new category. People really want to express themselves, which is what car making was before Ford introduced mass production. It was only available to the rich, but now we can bring it back for everybody.” RR

To be useful, humanoid robots will need to be competent at many tasks, according to Boston Dynamics. They must be able to manipulate a diverse range of objects, from small, delicate objects to large, heavy ones. At the same time, they will need to coordinate their entire bodies to reconfigure themselves and their environments, avoid obstacles, and maintain balance while responding to surprises.

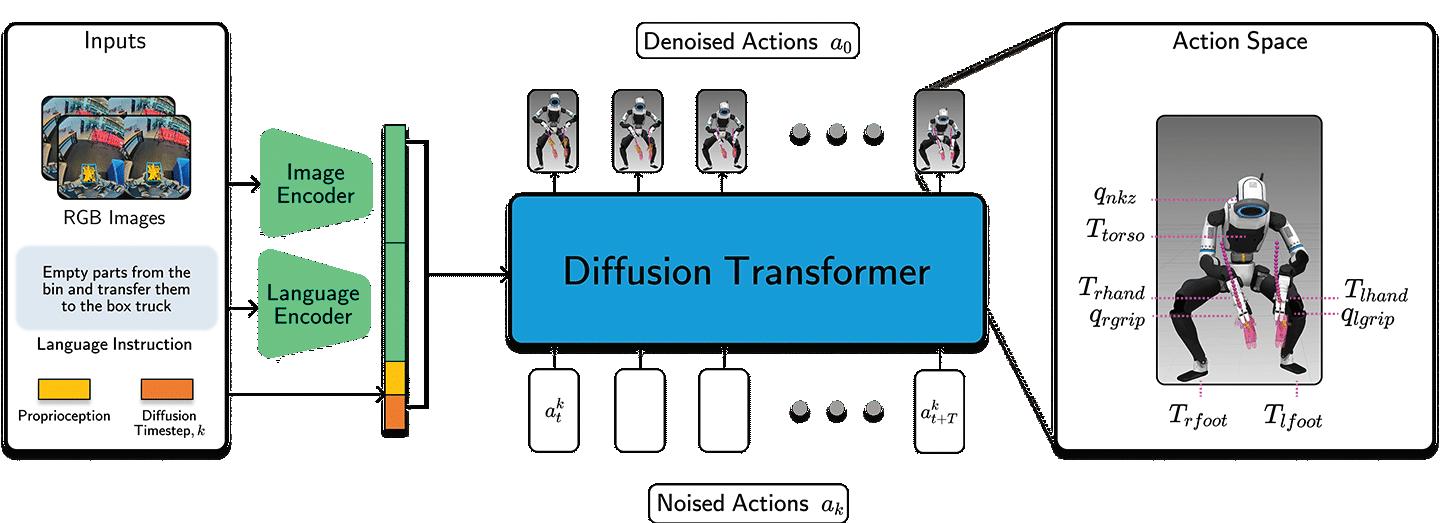

Boston Dynamics said it believes that building AI generalist robots is the most viable path to creating these competencies and achieving automation at scale with humanoids. The company recently shared some of its progress on developing large behavior models (LBMs) for its Atlas humanoid.

This work is part of a collaboration between the AI research teams at Toyota Research Institute (TRI) and Boston Dynamics. The companies said they have been building “end-to-end language-conditioned policies that enable Atlas to accomplish long-horizon manipulation tasks.”

These policies take full advantage of the capabilities of the humanoid form factor, claimed Boston Dynamics. This includes taking steps, precisely positioning its feet, crouching, shifting its center of mass, and avoiding self-collisions, all of which it said are critical to solving realistic mobile manipulation tasks.

“This work provides a glimpse into how we’re thinking about building general-purpose robots that will transform how we live and work,” said Scott Kuindersma, vice president of robotics research at Boston Dynamics.

“Training a single neural network to perform many long-horizon manipulation tasks will lead to better generalization, and highly capable robots like Atlas present the fewest barriers to data collection for tasks requiring whole-body precision, dexterity, and strength,” he added.

Boston Dynamics lays building blocks for creating policies

Boston Dynamics said its process for building policies includes four basic steps:

1. Collect embodied behavior data using teleoperation on both the real robot hardware and in simulation.

2. Process, annotate, and curate data to incorporate into a machine learning (ML) pipeline.

3. Train a neural network policy using all of the data across all tasks.

4. Evaluate the policy using a test suite of tasks.

The company said the results of Step 4 guide its decision-making about what additional data to collect and what network architecture or inference strategies could lead to improved performance.
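The four steps and the feedback from Step 4 can be sketched as a small loop. This is a toy illustration under stated assumptions, not Boston Dynamics’ pipeline: every name here (`Episode`, `Policy`, `collect`, `curate`, `train`, `evaluate`, `flywheel`) is hypothetical, the “policy” is a per-task counter standing in for a neural network, and a task is assumed to pass evaluation once enough curated demonstrations exist for it.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Episode:
    task: str
    source: str    # "real" or "sim" (hypothetical labels)
    success: bool  # whether the teleoperated demonstration completed the task


@dataclass
class Policy:
    # Toy stand-in for a neural network: curated demo count per task.
    demos_per_task: dict = field(default_factory=dict)


def collect(tasks, episodes_per_task, rng):
    """Step 1: gather teleoperated episodes from hardware and simulation."""
    return [Episode(t, rng.choice(["real", "sim"]), rng.random() > 0.2)
            for t in tasks for _ in range(episodes_per_task)]


def curate(episodes):
    """Step 2: keep only successful demonstrations (a stand-in for annotation)."""
    return [e for e in episodes if e.success]


def train(dataset):
    """Step 3: fit a single policy on all data across all tasks."""
    policy = Policy()
    for e in dataset:
        policy.demos_per_task[e.task] = policy.demos_per_task.get(e.task, 0) + 1
    return policy


def evaluate(policy, tasks, threshold):
    """Step 4: run the test suite; here a task passes with enough curated demos."""
    return {t: policy.demos_per_task.get(t, 0) >= threshold for t in tasks}


def flywheel(tasks, rounds=5, episodes_per_task=10, threshold=20, seed=0):
    """Repeat Steps 1-4, steering new collection toward tasks that still fail."""
    rng = random.Random(seed)
    dataset, report = [], {}
    for _ in range(rounds):
        targets = [t for t in tasks if not report.get(t, False)]
        if not targets:
            break  # every task in the suite passes; nothing more to collect
        dataset += curate(collect(targets, episodes_per_task, rng))
        report = evaluate(train(dataset), tasks, threshold)
    return report, len(dataset)
```

The design point the sketch tries to capture is that evaluation output is an input to the next round of collection, so effort concentrates on the tasks where the policy is weakest.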

In implementing this process, Boston Dynamics said it followed three core principles:

Maximizing task coverage

Humanoid robots could tackle a tremendous breadth of manipulation tasks, predicted Boston Dynamics. However, collecting data beyond stationary manipulation tasks while preserving high-quality, responsive motion is challenging.

The company built a teleoperation system that combines Atlas’ model predictive controller (MPC) with a custom virtual reality (VR) interface to cover tasks ranging from finger-level dexterity to whole-body reaching and locomotion.

Boston Dynamics’ process for building humanoid behavior policies. Boston Dynamics

Training generalist policies

“The field is steadily accumulating evidence that policies trained on a large corpus of diverse task data can generalize and recover better than specialist policies that are trained to solve one or a small number of tasks,” said Boston Dynamics.

The Waltham, Mass.-based company uses multi-task, language-conditioned policies to accomplish diverse tasks on multiple embodiments. These policies incorporate pretraining data from Atlas, the upper body-only Atlas Manipulation Test Stand (MTS), and TRI Ramen data.

Boston Dynamics added that building general policies enables it to simplify deployment, share policy improvements across tasks and embodiments, and move closer to unlocking emergent behaviors.

Building infrastructure to support fast iteration and rigorous science

“Being able to quickly iterate on design choices is critical, but actually measuring with confidence when one policy is better or worse than another is the key ingredient to making steady progress,” Boston Dynamics asserted.

With the combination of simulation, hardware tests, and ML infrastructure built for production scale, the company said it has efficiently explored the data and policy design space while continuously improving on-robot performance.

Boston Dynamics’ policy maps inputs consisting of images, proprioception, and language prompts to actions that control the full Atlas robot at 30 Hz. It uses a diffusion transformer together with a flow matching loss to train its model. Boston Dynamics

“One of the main value propositions of humanoids is that they can achieve a huge variety of tasks directly in existing environments, but the previous approaches to programming these tasks simply could not scale to meet this challenge,” said Russ Tedrake, senior vice president of LBMs at TRI.

“Large behavior models address this opportunity in a fundamentally new way – skills are added quickly via demonstrations from humans, and as the LBMs get stronger, they require less and less demonstrations to achieve more and more robust behaviors,” he explained.

The “Spot Workshop” task demonstrated coordinated locomotion—stepping, setting a wide stance, and squatting, said Boston Dynamics. It also showed dexterous manipulation, including part picking, regrasping, articulating, placing, and sliding. The demo consisted of three subtasks:

1. Grasping quadruped Spot legs from the cart, folding them, and placing them on a shelf.

2. Grasping face plates from the cart, then pulling out a bin on the bottom shelf, and putting the face plates in the bin.

3. Once the cart is fully cleared, turning to the blue bin behind and clearing it of all other Spot parts, placing handfuls of them in the blue tilt truck.

Boston Dynamics said a key feature was for its policies to react intelligently when things went wrong, such as a part falling on the ground or the bin lid closing. The initial versions of its policies didn’t have these capabilities.

By showing examples of the robot recovering from such disturbances and retraining its network, the company said it can quickly deploy new reactive policies with no algorithmic or engineering changes needed. This is because the policies can effectively estimate the state of the world from the robot’s sensors and react accordingly purely through the experiences observed in training.

“As a result, programming new manipulation behaviors no longer requires an advanced degree and years of experience, which creates a compelling opportunity to scale up behavior development for Atlas,” said Boston Dynamics.

Boston Dynamics adds manipulation capabilities

Boston Dynamics said it has studied dozens of tasks for both benchmarking and pushing the boundaries of manipulation. With a single language-conditioned policy on Atlas MTS, the company said Atlas can perform simple pick-and-place tasks as well as more complex ones such as tying a rope, flipping a barstool, unfurling and spreading a tablecloth, and manipulating a 22 lb. (9.9 kg) car tire.

These tasks would be extremely difficult to perform with traditional robot programming techniques due to their deformable geometry and the complex manipulation sequences, Boston Dynamics said. But with LBMs, the training process is the same whether Atlas is stacking rigid blocks or folding a T-shirt. “If you can demonstrate it, the robot can learn it,” it said.

Boston Dynamics noted that its policies could speed up the execution at inference time without requiring any training time changes. Since the policies predict a trajectory of future actions along with the time at which those actions should be taken, it can adjust this timing to control execution speed.

Generally, the company said it can speed up policies by 1.5x to 2x without significantly affecting policy performance on both the MTS and full Atlas platforms. While the task dynamics can sometimes preclude this kind of inference-time speedup, Boston Dynamics said this suggests that, in some cases, the robot can exceed the speed limits of human teleoperation.
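
The inference-time speedup described above amounts to compressing the timestamps attached to predicted actions. The following is a minimal sketch under assumed data structures: the `(time offset, target)` tuple representation and the function name are hypothetical and are not taken from Boston Dynamics' software.

```python
from typing import List, Tuple

# One predicted action: (time offset in seconds from now, target value).
# This representation is an assumption made for illustration.
TimedAction = Tuple[float, float]


def rescale_timing(chunk: List[TimedAction], speed: float) -> List[TimedAction]:
    """Compress the time offsets of a predicted trajectory by `speed`.

    The targets are untouched; only the times at which they should be
    reached change, so no retraining is involved.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    return [(t / speed, target) for t, target in chunk]


# Hypothetical three-step trajectory sampled at 30 Hz.
chunk = [(i / 30.0, 0.1 * i) for i in range(1, 4)]
fast = rescale_timing(chunk, speed=2.0)  # same targets, reached in half the time
```

Whether a given task tolerates the faster schedule depends on its dynamics, which is why the reported range is 1.5x to 2x rather than arbitrary.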

Teleoperation enables high-quality data collection

Atlas contains 78 degrees of freedom (DoF) that provide a wide range of motion and a high degree of dexterity. The Atlas MTS has 29 DoF to explore pure manipulation tasks. The grippers each have 7 DoF that enable the robot to use a wide range of grasping strategies, such as power grasps or pinch grasps.

Boston Dynamics relies on a pair of HDR stereo cameras mounted in the head to provide both situational awareness for teleoperation and visual input for its policies.

Controlling the robot in a fluid, dynamic, and dexterous manner is crucial, said the company, which has invested heavily in its teleoperation system to address these needs. It is built on Boston Dynamics’ MPC system, which it previously used to demonstrate Atlas conducting parkour, dance, and both practical and impractical manipulation.

This control system allows the company to perform precise manipulation while maintaining balance and avoiding self-collisions, enabling it to push the boundaries of what it can do with the Atlas hardware.

The remote operator wears a VR headset to be fully immersed in the robot’s workspace and have access to the same information as the policy. Spatial awareness is bolstered by a stereoscopic view rendered using Atlas’ head-mounted cameras reprojected to the user’s viewpoint, said Boston Dynamics.

Custom VR software provides teleoperators with a rich interface to command the robot, giving them real-time feeds of the robot’s state, control targets, sensor readings, tactile feedback, and system state via augmented reality, controller haptics, and heads-up display elements.

Boston Dynamics said this enables teleoperators to make full use of the robot hardware, synchronizing their body and senses with the robot.

The initial version of the VR teleoperation application used the headset, base stations, controllers, and one tracker for the chest to control Atlas while standing still. This system employed a one-to-one mapping between the user and the robot (i.e., moving your hand 1 cm would cause the robot to also move by 1 cm), which yields an intuitive control experience, especially for bi-manual tasks.
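
A one-to-one mapping of this kind can be illustrated with a few lines of Python. This sketch covers hand position only and ignores orientation, balance, and collision handling; the function name, the `scale` parameter, and the coordinate values are assumptions for the example.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def hand_target(robot_start: Vec3, operator_start: Vec3, operator_now: Vec3,
                scale: float = 1.0) -> Vec3:
    """Map the operator's hand displacement to a robot hand position target.

    With scale=1.0, moving the hand 1 cm moves the robot target 1 cm.
    """
    return tuple(
        r + scale * (now - start)
        for r, start, now in zip(robot_start, operator_start, operator_now)
    )


# Operator moves a hand 1 cm along x (positions in meters, hypothetical values).
target = hand_target(
    robot_start=(0.50, 0.20, 1.00),
    operator_start=(0.00, 0.00, 0.00),
    operator_now=(0.01, 0.00, 0.00),
)
```

Keeping the scale at 1.0 is what makes the mapping intuitive for bi-manual work: the distance between the operator's hands matches the distance between the robot's hands.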

With this version, the operator was already able to perform a wide range of tasks, such as crouching down low to reach an object on the ground and also standing tall to reach a high shelf. However, one limitation of this system was that it didn’t allow the operator to dynamically reposition the feet and take steps, which significantly limited the tasks it could perform.

To support mobile manipulation, Boston Dynamics incorporated two additional trackers for one-to-one tracking on the feet and extended the teleoperation control such that Atlas’ stance mode, support polygon, and stepping intent matched those of the operator. In addition to supporting locomotion, the company said this setup allowed it to take full advantage of Atlas’ workspace.

For instance, when opening a blue tote on the ground and picking items from inside, the human must be able to configure the robot with a wide stance and bent knees to reach the objects in the bin without colliding with the bin.

Boston Dynamics’ neural network policies use the same control interface to the robot as the teleoperation system, which made it easy to reuse model architectures it had developed for policies that didn’t involve locomotion. Now, it can simply augment the action representation.

TRI’s LBMs received a 2024 RBR50 Robotics Innovation Award. Boston Dynamics said it builds on them to scale diffusion policy-like architectures, using a 450 million-parameter diffusion transformer architecture with a flow-matching objective.

The policy is conditioned on proprioception and images, and it also accepts a language prompt that specifies the objective to the robot. Image data comes in at 30 Hz, and its network uses a history of observations to predict an action chunk of length 48 (corresponding to 1.6 seconds), where generally 24 actions (0.8 seconds when running at 1x speed) are executed each time policy inference is run.
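
The chunk arithmetic above (48 predicted actions, 24 executed, 30 Hz) corresponds to a receding-horizon execution loop. The sketch below uses a stand-in policy whose "actions" are just time-step indices; the function names are hypothetical, and a real system would send each action to the robot controller rather than append it to a list.

```python
from typing import Callable, List

CONTROL_HZ = 30    # rate stated in the article
CHUNK_LEN = 48     # actions predicted per inference (1.6 s)
EXECUTE_LEN = 24   # actions executed before re-planning (0.8 s at 1x speed)


def run_receding_horizon(policy: Callable[[int], List[int]], total_steps: int) -> List[int]:
    """Execute the first EXECUTE_LEN actions of each chunk, then re-infer."""
    executed: List[int] = []
    step = 0
    while step < total_steps:
        chunk = policy(step)  # predict CHUNK_LEN future actions
        assert len(chunk) == CHUNK_LEN
        for action in chunk[:EXECUTE_LEN]:
            if step >= total_steps:
                break
            executed.append(action)  # stand-in for commanding the robot
            step += 1
    return executed


def dummy_policy(start_step: int) -> List[int]:
    """Stand-in policy: each 'action' is the index of its time step."""
    return list(range(start_step, start_step + CHUNK_LEN))


actions = run_receding_horizon(dummy_policy, total_steps=60)
chunk_seconds = CHUNK_LEN / CONTROL_HZ
execute_seconds = EXECUTE_LEN / CONTROL_HZ
```

Executing only half of each chunk means the second half is discarded and re-predicted from fresher observations, which is one common reason for this kind of overlap.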

The policy’s observation space for Atlas consists of the images from the robot’s head-mounted cameras along with proprioception. The action space includes the joint positions for the left and right grippers, neck yaw, torso pose, left and right hand pose, and the left and right foot poses.

Atlas MTS is identical to the upper body on Atlas, both from a mechanical and a software perspective. The observation and action spaces are the same as for Atlas, simply with the torso and lower body components omitted. This shared hardware and software across Atlas and Atlas MTS allows Boston Dynamics to pool data from both embodiments for training.

These policies were trained on data that the team continuously collected and iterated upon, where high-quality demonstrations were a critical part of getting successful policies. Boston Dynamics heavily relied upon its quality assurance tooling, which allowed it to review, filter, and provide feedback on the data collected.

Boston Dynamics quickly iterates with simulation

Boston Dynamics said simulation is a critical tool that allows it to quickly iterate on the teleoperation system and to write unit and integration tests so that it can move forward without breakages. It also enables the company to perform informative training and evaluations that would otherwise be slower, more expensive, and difficult to perform repeatably on hardware.

Because Boston Dynamics’ simulation stack is a faithful representation of the hardware and on-robot software stack, the company is able to share its data pipeline, visualization tools, training code, VR software, and interfaces across both simulation and hardware platforms.

In addition to using simulation to benchmark its policy and architecture choices, Boston Dynamics also uses it as a significant co-training data source for its multi-task and multi-embodiment policies that it deploys on the hardware.

So far, Boston Dynamics has shown that it can train multi-task language-conditioned policies that can control Atlas to accomplish long-horizon tasks that involve both locomotion and dexterous whole-body manipulation. The company said its data-driven approach is general and can be used for practically any downstream task that can be demonstrated via teleoperation.

While Boston Dynamics said it is encouraged by the results so far, it acknowledged that there is still much work to be done. With its established baseline of tasks and performance, the company said it plans to focus on scaling its “data flywheel” to increase throughput, quality, task diversity, and difficulty while also exploring new algorithmic ideas.

The company wrote in a blog post that it is continuing research in several directions, including performance-related robotics topics such as gripper force control with tactile feedback and fast dynamic manipulation. It is also looking at incorporating diverse data sources, including cross-embodiment and egocentric human data.

Finally, Boston Dynamics said it is interested in reinforcement learning (RL) improvement of vision-language-action models (VLAs), as well as in deploying vision-language model (VLM) and VLA architectures to enable more complex long-horizon tasks and open-ended reasoning.

Precision medicine, in which complex treatments for cancer and other conditions are personalized, is typically costly, limiting patient access to care. Multiply Labs Inc. has developed a “robotic biomanufacturing cluster” that it said can reduce the cost of life-saving cell therapies by 74%.

“Historically, cell and gene therapy manufacturing has been manual, almost artisanal,” said Fred Parietti, CEO of Multiply Labs. “Expert scientists perform hundreds of tasks by hand, from pipetting to shaking cells.”

San Francisco-based Multiply Labs has developed cloud-controlled automated systems for the production of individualized drugs at scale. Its team includes mechanical engineers, electrical engineers, computer scientists, software engineers, and pharmaceutical scientists.

The company said its customers include some of the largest global organizations in the advanced pharmaceutical manufacturing space.

Personalized cell therapies are often used to treat blood cancers such as lymphoma and leukemia. Unlike mass-produced drugs, they require a customized dose from each patient’s own cells, making large-batch production impossible. Such therapies are currently priced between $300,000 and $2 million per dose.

In addition, a single microbial contamination can render the entire product unusable, leading to costly manufacturing failures.

Multiply Labs chose Universal Robots A/S (UR) for its modular biomanufacturing cluster after closely evaluating several force- and power-limited or collaborative robot arms.

“UR was chosen due to their crucial six-axis capabilities, unrivaled force mode for delicate handling, seamless software integration, robust community support, and cleanroom compatibility,” said Nadia Kreciglowa, head of robotics at Multiply Labs.

UR cobots can replicate intricate processes

As published in peer-reviewed studies with the University of California, San Francisco (UCSF), Multiply Labs used multiple UR cobots working in parallel, stacked floor to ceiling with collision avoidance, to handle the entire cell therapy manufacturing process.

The new partnership, Universal Robots’ first in the cell and gene therapy sector, illustrates the great potential of collaborative robots in healthcare, according to Jean-Pierre Hathout, president of Odense, Denmark-based UR, a Teradyne company.

“By empowering Multiply Labs to replicate intricate manual processes with high precision and scale, our cobots are redefining efficiency in pharmaceutical manufacturing,” he stated. “More importantly, it’s driving significant cost reductions while broadening access to life-saving treatments. At its core, this partnership is a testament to how robotics can elevate human capability and serve critical needs in medicine.”

Multiply Labs uses imitation learning for process fidelity

Multiply Labs cited its imitation learning technology as enabling robots to learn from expert human demonstrations rather than dictating new processes.

“We ask the pharmaceutical companies that we work with to videotape their scientists performing the tasks,” explained Parietti. “We then feed this data to the robots, and the robots learn to effectively replicate what scientists were doing in the lab, just more efficiently, more repeatably, 24/7, and in parallel.”

He added that this approach allows the UR cobots to “self-learn hundreds of new tasks” with exponentially lower engineering costs.

Multiply Labs’ system faithfully replicates manual processes with enhanced efficiency, repeatability, and sanitary conditions, according to the partners. This process fidelity is also crucial for regulatory compliance, potentially saving decades and billions of dollars in re-approval costs by replicating existing, already approved processes, said Dr. Jonathan Esensten, director of the Advanced Biotherapy Center at Sheba Medical Center.

“Instead of starting from square zero in terms of drug approval, companies can now document that this is the exact same manufacturing process. It just happens to be done by a robot,” he noted. Dr. Esensten, a former member of the UCSF faculty, worked with Multiply Labs to develop the system.

Multiply Labs reduces costs, space utilization, contamination

“When we compared a traditional manual manufacturing process for these cell therapies to a robotic process doing the exact same process, we found a cost reduction of approximately 74%,” said Esensten. “Growing T cells is something that we have been doing for a long time, but to have the robotic system do it without any human hands touching the cells throughout the process, that is a quantum leap in terms of being able to manufacture these medicines at a lower cost and in a smaller space.”

In addition to cost savings, the robotic system can drastically improve space utilization, Parietti said. It can free up enough space to store up to 100x more patient doses per square foot of cleanroom compared with a typical manual process. Quality and sterility are also significantly enhanced, he said.

“Robots don’t breathe, and they don’t touch stuff they’re not supposed to touch,” observed the Multiply Labs CEO.

“While human handling led to contamination in one case, we did not see any contamination in the robotic process,” said Dr. Esensten in reference to the UCSF study.

The Multiply Labs robotic cluster is already deployed at global pharmaceutical companies with results now also documented in collaboration with scientists at Stanford University.

“This will really change the way we think about the manufacturing of these bespoke, custom cell and gene therapies for patients,” said Parietti. “We will ultimately improve patient access globally by lowering manufacturing costs, enabling distributed production worldwide of these life-saving therapies.”

The Association for Advancing Automation, or A3, has published the revised ANSI/A3 R15.06-2025 American National Standard for Industrial Robots and Robot Systems – Safety Requirements. The updated standard is the most significant advance in industrial robot safety requirements in more than a decade, according to A3.

“This is more than a milestone in safety standardization. It’s a call to action,” stated Jeff Burnstein, president of A3. “Publishing this safety standard is perhaps the most important thing A3 can do, as it directly impacts the safety of millions of people working in industrial environments around the world.”

ANSI/A3 R15.06-2025 covers applications, robot cells

The revised standard is now available for purchase in protected PDF format, and it includes:

• Part 1: Safety requirements for industrial robots

• Part 2: Safety requirements for industrial robot applications and robot cells

A3 has revised the national standard for industrial robot safety based on global standards. charnsitr | Adobe Stock

A3 said it expects to publish Part 3, which will address safety requirements for users of industrial robot cells, later this year. Once available, Part 3 will be retroactively provided at no additional cost to anyone who purchases the full standard now.

R15.06 is the U.S. national adoption of ISO 10218 Parts 1 and 2 and is a revision of ANSI/RIA R15.06-2012. The Robotic Industries Association (RIA), which originally published the 2012 version of this standard, is now part of A3.

TOP: The standards include provisions for collaborative robots, functional safety, and cybersecurity.

BOTTOM: The revised standards are now available. A3

Key changes in the revised standard include:

• Clarified functional safety requirements that improve usability and compliance for manufacturers and integrators

• Integrated guidance for collaborative robot applications, consolidating ISO/TS 15066

• New content on end effectors and manual loading/unloading procedures, derived from ISO/TR 20218-1 and ISO/TR 20218-2

• Updated robot classifications, with corresponding safety functions and test methodologies

• Cybersecurity guidance, now included as part of safety planning and implementation

• Refined terminology, including the replacement of “safety-rated monitored stop” with “monitored standstill” for broader technical accuracy

“This standard delivers clearer guidance, smarter classifications, and a roadmap for safety in the era of intelligent automation,” said Carole Franklin, director of standards development for robotics at A3. “It empowers manufacturers and integrators to design and deploy safer systems more confidently while supporting innovation without compromising human well-being.”

The revised edition of the ANSI/A3 R15.06-2025 standard can be purchased globally through A3. Companies and professionals looking to stay compliant with the latest safety requirements can acquire the standard through the A3 Standards online store.

Pricing starts at $655 U.S.

Ann Arbor, Mich.-based A3 is a leading advocate for the benefits of automating. Its members include over 1,400 manufacturers, component suppliers, systems integrators, end users, academic institutions, research groups, and consulting firms.

The Robot Report Staff

Google DeepMind recently introduced two models that it claimed “unlock agentic experiences with advanced thinking” as a step toward artificial general intelligence, or AGI, for robots. Its new models are:

Gemini Robotics 1.5: DeepMind said this is its most capable vision-language-action (VLA) model yet. It can turn visual information and instructions into motor commands for a robot to perform a task. It also thinks before taking action and shows its process, enabling robots to assess and complete complex tasks more transparently. The model also learns across embodiments, accelerating skill learning.

Gemini Robotics-ER 1.5: The company said this is its most capable vision-language model (VLM). It reasons about the physical world, natively calls digital tools, and creates detailed, multi-step plans to complete a mission. DeepMind said it now achieves state-of-the-art performance across spatial understanding benchmarks.

DeepMind is making Gemini Robotics-ER 1.5 available to developers via the Gemini application programming interface (API) in Google AI Studio. Gemini Robotics 1.5 is currently available to select partners.

The company asserted that the releases mark an important milestone toward solving AGI in the physical world. By introducing agentic capabilities, Google said it is moving beyond AI models that react to commands and creating systems that can reason, plan, actively use tools, and generalize.

Most daily tasks require contextual information and multiple steps to complete, making them notoriously challenging for robots today. That’s why DeepMind designed these two models to work together in an agentic framework.

Gemini Robotics-ER 1.5 orchestrates a robot’s activities, like a high-level brain. DeepMind said this model excels at planning and making logical decisions within physical environments. It has state-of-the-art spatial understanding, interacts in natural language, estimates its success and progress, and can natively call tools like Google Search to look for information or use any third-party user-defined functions.

The VLM gives Gemini Robotics 1.5 natural language instructions for each step, and the VLA model uses its vision and language understanding to directly perform the specific actions. Gemini Robotics 1.5 also helps the robot think about its actions to better solve semantically complex tasks, and it can even explain its thinking processes in natural language, making its decisions more transparent.

Both of these models are built on the core Gemini family of models and have been fine-tuned with different datasets to specialize in their respective roles. When combined, they increase the robot’s ability to generalize to longer tasks and more diverse environments, said DeepMind.
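
The division of labor described above, with a planner issuing natural language steps and an action model carrying them out, can be sketched generically. The code below does not call any Gemini API; `run_agent`, `toy_planner`, and `toy_executor` are hypothetical stand-ins, and stopping at the first failed step is an assumption about how such a loop might behave.

```python
from typing import Callable, List


def run_agent(mission: str,
              plan: Callable[[str], List[str]],
              act: Callable[[str], bool]) -> List[str]:
    """Ask the planner for steps, then hand each step to the action model.

    Stops at the first step the action model reports as failed and
    returns the steps that completed.
    """
    completed: List[str] = []
    for instruction in plan(mission):
        if not act(instruction):
            break
        completed.append(instruction)
    return completed


# Stand-ins for the two models; neither calls a real model or service.
def toy_planner(mission: str) -> List[str]:
    return [f"{mission}: step {i}" for i in (1, 2, 3)]


def toy_executor(instruction: str) -> bool:
    return not instruction.endswith("step 3")  # pretend the third step fails


done = run_agent("sort laundry", toy_planner, toy_executor)
```

A fuller orchestrator would presumably re-plan after a failure using the planner's progress estimates, which the article says Gemini Robotics-ER 1.5 provides; that part is left out here.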

Robots can understand environments and think before acting

Gemini Robotics-ER 1.5 is a thinking model optimized for embodied reasoning, said Google DeepMind. The company claimed it “achieves state-of-the-art performance on both academic and internal benchmarks, inspired by real-world use cases from our trusted tester program.”

DeepMind evaluated Gemini Robotics-ER 1.5 on 15 academic benchmarks, including Embodied Reasoning Question Answering (ERQA) and Point-Bench, measuring the model’s performance on pointing, image question answering, and video question answering.

VLA models traditionally translate instructions or linguistic plans directly into a robot’s movement. Gemini Robotics 1.5 goes a step further, allowing a robot to think before taking action, said DeepMind. This means it can generate an internal sequence of reasoning and analysis in natural language to perform tasks that require multiple steps or require a deeper semantic understanding.

“For example, when completing a task like, ‘Sort my laundry by color,’ the robot in the video below thinks at different levels,” wrote DeepMind. “First, it understands that sorting by color means putting the white clothes in the white bin and other colors in the black bin. Then it thinks about steps to take, like picking up the red sweater and putting it in the black bin, and about the detailed motion involved, like moving a sweater closer to pick it up more easily.”

During a multi-level thinking process, the VLA model can decide to turn longer tasks into simpler, shorter segments that the robot can execute successfully. It also helps the model generalize to solve new tasks and be more robust to changes in its environment.

Gemini learns across embodiments

Robots come in all shapes and sizes, and they have different sensing capabilities and different degrees of freedom, making it difficult to transfer motions learned from one robot to another.

DeepMind said Gemini Robotics 1.5 shows a remarkable ability to learn across different embodiments. It can transfer motions learned from one robot to another, without needing to specialize the model to each new embodiment. This accelerates learning new behaviors, helping robots become smarter and more useful.

For example, DeepMind observed that tasks only presented to the ALOHA 2 robot during training also just work on Apptronik’s humanoid robot, Apollo, and the bi-arm Franka robot, and vice versa.

DeepMind said Gemini Robotics 1.5 implements a holistic approach to safety through high-level semantic reasoning, including thinking about safety before acting, ensuring respectful dialogue with humans via alignment with existing Gemini Safety Policies, and triggering low-level safety sub-systems (e.g. for collision avoidance) on-board the robot when needed.

To guide its safe development of Gemini Robotics models, DeepMind is also releasing an upgrade of the ASIMOV benchmark, a comprehensive collection of datasets for evaluating and improving semantic safety, with better tail coverage, improved annotations, new safety question types, and new video modalities. In its safety evaluations on the ASIMOV benchmark, Gemini Robotics-ER 1.5 shows state-of-the-art performance, and its thinking ability significantly contributes to the improved understanding of semantic safety and better adherence to physical safety constraints.

Katie Clasen • Prelude Ventures

Terabase is an example of a U.S. startup competing with China’s energy sector. Terabase Energy Inc.

The U.S. is losing the game. We all want to leave our country more prosperous, secure, and sustainable for future generations, with a thriving domestic manufacturing sector. We’re locked into a constant competition with China in innovation, but while America is still pursuing this future, China’s industry has already been successful.

Just look at China’s electric vehicle (EV) sector: It’s now a massive economic engine for the country’s manufacturing base, spawning giants like CATL and BYD while contributing to significant improvements in air quality across Chinese cities. This success story offers a roadmap that we can and should follow domestically.

However, we’re well behind, and our only path to competitiveness lies through rapidly advancing automation and manufacturing efficiency—with robotics at the core of this strategy.

A large focus of the new administration has been to bring manufacturing stateside, with tariffs and other policies incentivizing domestic production. But this means very little considering the massive manufacturing labor shortage.

According to the Manufacturing Institute, the industry will need 3.8 million additional workers by 2033, but as many as 2.1 million will go unfilled — with an opportunity cost of about $1 trillion a year to the U.S. economy.

While concerns about labor displacement are valid and require proactive solutions, the reality is stark. If we want more industrialization in the U.S., the only path to growth and competitiveness on a global scale is through the uptake of advanced automation and robotics. If we don’t, we’re not even in the race.

The U.S. is already seeing large-scale private investment into next-generation robotics applications, with robotics-related startups securing around $6.1 billion in venture capital investments last year.

Fueled by breakthroughs in computer vision and foundation AI models, what it means to be state-of-the-art is shifting. Robots could soon be able to accomplish more and more complex tasks on their own, adapting to their environments and making decisions without relying on predefined logic or human teleoperation.

This evolution promises efficiency gains, improved productivity through round-the-clock operation, and automation of tasks previously deemed impossible.

However, to meet this potential and translate this momentum into industrial deployment, the country needs a national strategy. Admittedly, we’ve had the opportunity to make meaningful gains domestically with technology that has already been in existence for many years. We now stand at yet another technological inflection point, and to fail to engage with strategic clarity and a unified vision would be missing an opportunity we may not see again.

The potential for economic growth and, I’d argue, accelerated decarbonization as a co-benefit, is enormous. Today’s global average robot density is still relatively low at 162 units per 10,000 manufacturing employees.

The long history of robotics is deeply rooted in the pursuit of efficiency and safety. From the early days of automotive manufacturing, with robots like Unimate shouldering dangerous tasks, to today’s agile mobile robots and sophisticated drones, the trajectory has been clear: Robotics enable us to do more with less.

These advancements laid the groundwork for the resource efficiency that is crucial in our fight to mitigate climate impacts as our economy continues to grow. Robotics has the potential to make various climate technologies more effective and also meet labor demands of the climate industry.

Recent estimates indicate an additional 13 million workers will be required to meet the needs from “green growth” across the world’s biggest economies.

Robotics are already enabling meaningful market growth for decarbonization use cases, often those that fall under the “4 Ds” framework: dull, dirty, dangerous, and dear (expensive) tasks. These are precisely the areas where robots can provide the most significant decarbonization co-benefits.

Certain sectors — such as grid upgrades, manufacturing, and mining — can capture disproportionate benefits by adding robots to the workforce. They aid in avoiding labor shortages and perform operations in extreme conditions unsafe for people.

From automating solar farm construction, EVs, and battery manufacturing to enhancing wildfire prevention and land use management, American startups are already leveraging robotics to tackle some of the most pressing environmental challenges.

The energy and industrial infrastructure sectors are already embracing this potential. Companies like Terabase are scaling robotics for solar installation, increasing labor productivity by 2x and dramatically reducing costs.

In land management, robots from Kodama Robotics and BurnBot are emerging as crucial tools for wildfire prevention and suppression, and for more efficient reforestation efforts.

For aviation, a sector that is notoriously difficult to decarbonize, Pyka is using autonomous aircraft for cargo delivery and crop dusting to reduce CO2 emissions by over 90%.

Even in mature markets like manufacturing, articulated robotic arms and autonomous logistics robots have long been recognized for their role in driving productivity, efficiency gains, and, consequently, emissions reductions.

Assuming a 5% emissions improvement across the whole industrial light manufacturing economy, this could be a reduction of over 30 million tons of CO2 emissions. And that’s just the start.
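As a back-of-the-envelope check, the 5% improvement and the 30-million-ton reduction together imply a baseline of roughly 600 million tons of CO2 for that segment. The short Python sketch below makes the arithmetic explicit. The function names are illustrative, and the baseline is inferred from the two figures above rather than drawn from a separate data source.

```python
def implied_baseline_mt(reduction_mt: float, improvement: float) -> float:
    """Return the baseline emissions (million tons CO2) implied by a
    stated reduction and a fractional improvement (0.05 means 5%)."""
    if not 0 < improvement <= 1:
        raise ValueError("improvement must be a fraction in (0, 1]")
    return reduction_mt / improvement


def emissions_reduction_mt(baseline_mt: float, improvement: float) -> float:
    """Return the reduction (million tons CO2) for a given baseline
    and fractional improvement."""
    if baseline_mt < 0 or not 0 <= improvement <= 1:
        raise ValueError("baseline must be >= 0 and improvement in [0, 1]")
    return baseline_mt * improvement


if __name__ == "__main__":
    # Figures from the article: 5% improvement, over 30 million tons reduced.
    baseline = implied_baseline_mt(30.0, 0.05)
    print(f"Implied baseline: about {baseline:.0f} million tons CO2")
    # Round trip: applying 5% to the inferred baseline recovers the reduction.
    reduction = emissions_reduction_mt(baseline, 0.05)
    print(f"Reduction at 5%: about {reduction:.0f} million tons CO2")
```

Because the article says "over 30 million tons," the inferred baseline is best read as a lower bound.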

Learning from China’s success

China has become the global leader in both industrialization and the emerging climate economy as a result of its robotics and automation advances and widespread adoption. It has dominated market expansion across solar cells, EVs, batteries, and other crucial technologies.

In turn, decarbonization technologies have proven to be massive economic growth drivers in China.

We’re now seeing the flywheel effect. Robotics offers a clear pathway to greater efficiency, reduced emissions, and a more sustainable future. It has long been core to scaling emerging climate technologies, and to make the U.S. a compelling and competitive manufacturing powerhouse, we must embrace automation to even be in the game.

The current technical inflection point makes this opportunity even more exciting, but potential alone is insufficient. We all want more domestic manufacturing and communities that are clean and safe from increasing climate disasters. Our industrialization pursuits and the emerging global climate economy are inextricably tied.

The time to invest, innovate, and integrate robotics into our climate strategy is now, before the opportunity to make a meaningful decarbonization impact disappears. America has the innovation capacity and entrepreneurial drive to lead this transition. We simply need the strategic vision and commitment to make it happen.

Katie Clasen is a principal at Prelude Ventures, where she specializes in industrial decarbonization, advanced manufacturing, energy storage, and minerals. Prelude’s notable energy storage investments include Form Energy and Redoxblox, a novel thermal battery system for industrial use cases.

Before joining Prelude Ventures, Clasen worked in technical and commercial roles across the climate and energy sectors, including at Shell and Sila Nanotechnologies, where she worked on advanced battery technologies.

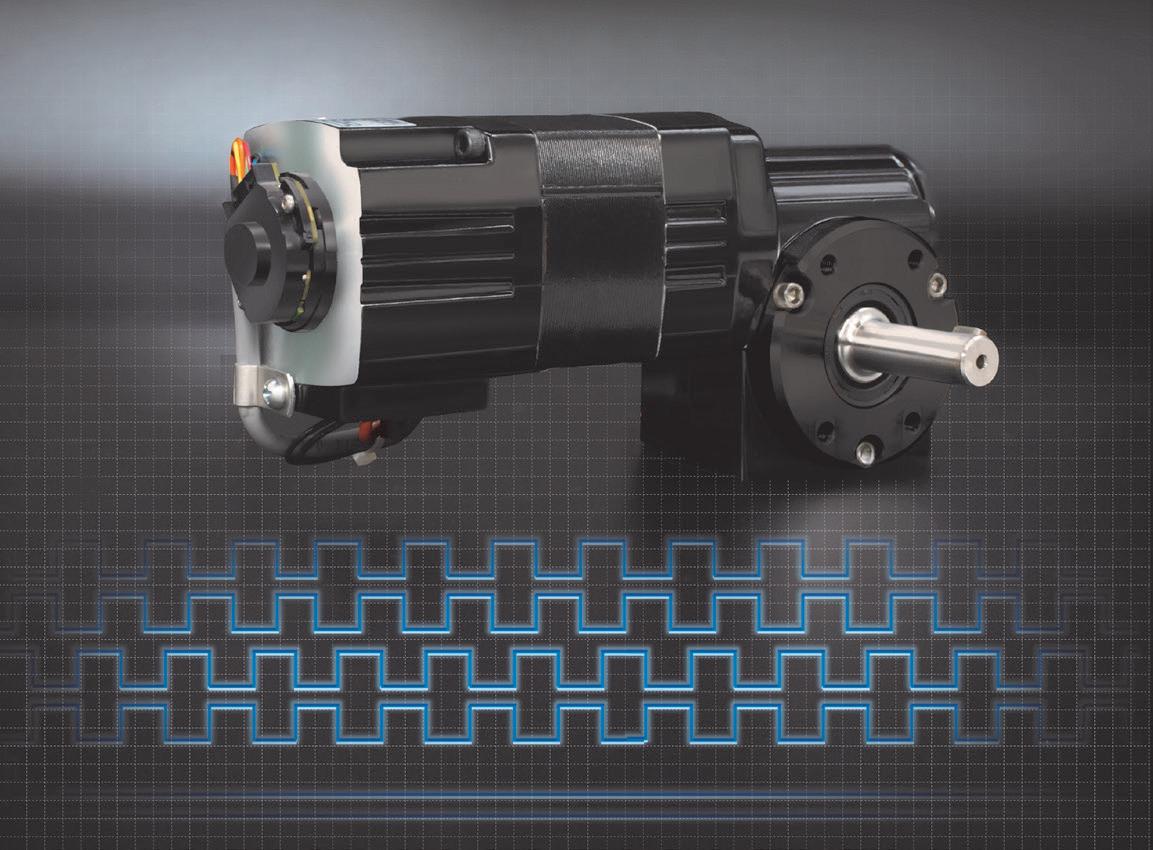

A robot can’t find its way around a warehouse without position feedback on its locomotion gearmotors. Bodine Electric Company makes it easy to find the best way to put an encoder on a gearmotor.

Many of Bodine Electric’s cataloged gearmotors have a motor shaft that extends outside the motor for mounting an encoder. And many more have a means for adding a shaft extension to the end of the motor shaft for mounting an encoder.

But the more common approach for OEMs is to have Bodine Electric make a custom gearmotor for them with the exact encoder they want already installed and tested.

Let Bodine find a way for your robot to find its way around the warehouse.

Bodine Electric Company, 19225 Kapp Drive, Peosta, IA 52068-9547 USA

773-478-3515

www.bodine-electric.com

info@bodine-electric.com

Canon U.S.A., Inc.

With today’s increasing demands for automation and robotics across industries, engineers are challenged to design unique and innovative machines that set them apart from their competitors. Within motion control systems, flexible integration, space savings, and light weight are the key requirements for designing a successful mechanism.

Canon’s new compact, lightweight DC brushless servo motors offer high torque density and are well suited to innovative designs. Our custom capabilities help optimize your next design.

We are committed to providing technological advantages for your success.

Canon U.S.A., Inc. Motion Control Products

3300 North First Street, San Jose, CA 95134

408-468-2320 www.usa.canon.com

DigiKey www.digikey.com

701 Brooks Avenue South, Thief River Falls, MN 56701 USA

sales@digikey.com • 1-800-344-4539

Increase production and reduce worker fatigue with Omron Automation’s TM Collaborative Robots. Repetitive tasks such as pick and place, inspection, machine tending, assembly, and more are easily handled by the six-axis TM cobot. Using graphical programming with the TMFlow software, hand-guided position recording, and a built-in vision system, users can set up simple applications in mere minutes. Omron’s TM Collaborative Robots are ISO 10218-1:2011 and ISO/TS 15066 compliant and include features like rapid changeover using TMVision and Landmark, advanced collaborative control, and external camera support.

Quickly…Or slowly…Or in reverse.

When you pair Bodine Electric Company 3-phase AC motors and gearmotors with a variable frequency drive you get smooth, consistent power across a wide speed range and maintenance-free AC performance. Our products set the industry standard for demanding applications such as packaging machinery, ventilation equipment, conveyors, material handling equipment, and metering pumps. For adjustable speed and superior AC motor performance, three phases are better than one.

Learn all about how to match 3-phase AC motors with adjustable speed drives. Read the Engineer’s Guide to Driving AC Induction Motors with Inverters.