International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056


Nagendrababu N C 1, Pruthvi C N 2, Sharan Kumar B N 3, Shivani S 4, VidyaShree P 5

1 Assistant Professor, Dept. of CSE (AI&ML), SJCIT Chickaballapur, INDIA, nagendrababu.nc@gmail.com
2,3,4,5 Dept. of CSE (AI&ML), SJCIT Chickaballapur, INDIA.

ABSTRACT: The rise in criminal activities has underscored the urgent need for the integration of automated command systems within security agencies. This research presents a deep learning framework designed specifically for the identification of seven distinct categories of weapons. The model is constructed upon the VGGNet architecture and is implemented using Keras, operating on the TensorFlow platform. It has been trained to classify various weapon types, including handguns, revolvers, assault rifles, hunting rifles, grenades, knives, and bazookas. The training process involves the development of layers, execution of operations, retention of training data, assessment of performance metrics, and validation of the model. A curated dataset, encompassing all seven weapon categories, has been employed to enhance the model's learning efficacy. A comparative analysis utilizing this dataset benchmarks the proposed model against established architectures such as VGG-16, ResNet-50, and ResNet-101. The findings reveal that the proposed model achieves a classification accuracy of 98.40%, exceeding the performance of VGG-16 (89.75%), ResNet-50 (93.70%), and ResNet-101 (83.33%). This study underscores the efficacy of the developed deep learning methodology in tackling the complex challenge of weapon classification, offering promising results that could significantly bolster the capabilities of security forces in addressing criminal threats.

Keywords: TensorFlow, Keras, VGGNet, deep learning.

Advancements in science and technology have rendered surveillance cameras essential tools for crime prevention. Security personnel are tasked with the vigilant monitoring and strategic deployment of camera networks across various locations. Traditionally, the analysis of incidents requires security teams to arrive at the crime scene, examine recorded footage, and collect pertinent evidence. This approach is inherently reactive, often resulting in delays in addressing potential threats. Consequently, there is a growing recognition of the importance of proactive surveillance systems capable of detecting threats in real-time, facilitating immediate intervention. Such systems can significantly reduce criminal activities by enabling security personnel to act during incidents rather than after they occur. This research promotes the creation of an intelligent system that leverages advanced software to quickly notify security personnel upon the detection of hazardous objects, thereby improving crime prevention strategies.

Deep learning has gained widespread acclaim for its capacity to enhance security and surveillance operations. This specialized area of machine learning utilizes multiple layers of non-linear processing units to extract and refine features. It emphasizes representation learning by examining various levels of data characteristics, making it particularly effective in image and video processing applications. In the realm of security, deep learning-based models can proficiently analyze surveillance footage and identify potential threats. Feature extraction in image processing entails calculating pixel density metrics and recognizing unique patterns such as edges, textures, and shapes. One of the most prevalent architectures in deep learning for image classification tasks is the Convolutional Neural Network (CNN). CNNs comprise several layers, including convolutional, pooling, activation, dropout, fully connected, and classification layers, all of which play a role in learning hierarchical representations. CNN-based models have emerged as a favored option for object detection and recognition tasks, such as firearm detection in surveillance footage, owing to their remarkable accuracy and efficiency in processing raw images.
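To make the layer description above concrete, the following is a minimal pure-Python sketch of the first three stages of a CNN (convolution, ReLU activation, and max pooling) applied to a toy image. It is illustrative only and is not the paper's model: the 5x5 image, the vertical-edge kernel, and the function names `conv2d`, `relu`, and `max_pool2x2` are assumptions introduced for this example.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding) 2D cross-correlation, the operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Rectified Linear Unit: negative responses are clipped to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; an odd trailing row or column is dropped."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


# Toy 5x5 image (assumed): dark left columns (0), bright right columns (1).
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical-edge kernel (assumed); a trained CNN learns its kernels.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

edge_map = conv2d(image, kernel)           # strong response where dark meets bright
features = max_pool2x2(relu(edge_map))     # pooled, non-negative feature map
```

In a real network many such kernels are learned from data and stacked in depth, and the pooled feature maps are passed through dropout and fully connected layers before the final classification layer.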

In modern society, the prevalence of criminal activities frequently involves the use of portable firearms, necessitating that law enforcement agencies implement sophisticated monitoring systems. Studies have consistently highlighted the critical involvement of handheld weapons in a range of illegal activities, including theft, poaching, violent attacks, and terrorism. A viable approach to reduce such offenses is the introduction of intelligent surveillance systems that can detect threats early, enabling security personnel to respond promptly before situations escalate. Nevertheless, the real-time identification of weapons poses distinct challenges, including occlusions, similarities to benign objects, and complex backgrounds. Occlusion refers to instances where parts of a weapon are hidden by other objects or body parts, complicating

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN:2395-0072 © 2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified


detection efforts. Issues of object similarity arise when common items, such as smartphones, tools, or clothing, closely resemble weapons, resulting in false alarms. Furthermore, environmental variables, including lighting, camera positioning, and motion blur, further hinder accurate weapon detection in practical settings. Addressing these challenges necessitates the development of robust models capable of effectively generalizing across diverse environments and adapting to fluctuating visual conditions.

This study addresses the challenges associated with weapon detection by introducing a novel deep learning model tailored for the accurate identification and classification of seven distinct weapon types: assault rifles, bazookas, grenades, hunting rifles, knives, pistols, and revolvers. The performance of the proposed model is assessed against established architectures such as the Visual Geometry Group network (VGG-16), Residual Network (ResNet-50), and ResNet-101. These benchmark models are recognized for their effectiveness in image classification and object detection, owing to their proficiency in capturing intricate features from input images. The comparative analysis indicates that the proposed model surpasses these existing frameworks, exhibiting enhanced accuracy and reduced loss rates. Its capability to reliably detect and classify weapons in various backgrounds and lighting conditions renders it a significant asset for practical security applications. Furthermore, the incorporation of region proposal networks improves the detection process by focusing on weapon-related areas within images, thus minimizing false detection rates and enhancing classification precision.

The organization of this paper is as follows: Section 2 offers a comprehensive review of pertinent research, examining current methodologies and their shortcomings in weapon detection. Section 3 outlines the methodologies and datasets utilized for training and evaluating the proposed model. Section 4 presents a discussion on classification performance and experimental outcomes, comparing the efficacy of various deep learning models in firearm identification. Section 5 delivers a critical evaluation of the results, emphasizing notable advancements and potential avenues for further improvement. Lastly, Section 6 concludes the study by summarizing essential insights and proposing future research directions, including the possible integration of autonomous surveillance systems equipped with sophisticated AI-driven threat detection capabilities. Future research may concentrate on strengthening model resilience by integrating supplementary data augmentation methods, utilizing synthetic datasets to enhance generalization, and investigating real-time implementation on edge devices to achieve quicker response times.

The development of automated firearm detection systems for surveillance and security applications has gained significant traction in recent years, leading to the exploration of innovative methodologies. Various studies have contributed uniquely to the evolution of weapon detection technology. One key study focused on generating essential training datasets for automated firearm recognition using deep Convolutional Neural Networks (CNNs). It analyzed multiple classification techniques, emphasizing the need to minimize false positives. A comparative evaluation of two classification methods, one utilizing a sliding window approach and the other employing a region proposal technique, revealed that the region-based CNN model demonstrated superior speed and accuracy [15], [22]. Another study investigated the use of a disparity map and candidate region evaluation within surveillance footage. This approach incorporated an economical dual-camera system designed to enhance object detection while reducing false alarms. By integrating brightness-guided preprocessing techniques during model training and validation, the system exhibited improved accuracy in identifying metallic weapons [23], [17].
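The cost of the sliding window approach mentioned above can be illustrated with a short sketch. The code below simply enumerates fixed-size windows over an image; the function name `sliding_windows`, the 640x480 frame size, the 128x128 window, and the stride of 64 are assumptions chosen for illustration and are not taken from the cited studies.

```python
from typing import Iterator, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


def sliding_windows(img_w: int, img_h: int,
                    win_w: int, win_h: int, stride: int) -> Iterator[Box]:
    """Yield every window position that fits fully inside the image."""
    for y in range(0, img_h - win_h + 1, stride):
        for x in range(0, img_w - win_w + 1, stride):
            yield (x, y, win_w, win_h)


# Assumed example: one 640x480 frame, one window size, 50% overlap.
windows = list(sliding_windows(640, 480, 128, 128, 64))
print(len(windows))  # number of crops the classifier would have to score
```

Every window must be cropped and classified, and the count grows further when several window sizes are scanned. A region proposal technique instead supplies a shorter list of candidate boxes, which is consistent with the speed advantage reported for the region-based model.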

A hybrid weapon detection study implemented fuzzy logic to identify hazardous objects, such as firearms and knives, by incorporating additional environmental variables, significantly improving accuracy while reducing false alerts [24]. Similarly, research leveraging CNNs examined the impact of neuron count adjustments in fully connected layers using pre-trained VGG-16 models, providing insights into optimizing classification architectures [12], [13]. Further research specifically targeting firearm identification within surveillance videos analyzed regions where human presence was detected. A detection system utilizing distinct weapon features demonstrated a focused approach to improving recognition efficiency [3], [26]. Another independent study explored security strategies for IoT platforms, assessing multi-dimensional events to determine real-time risk levels [27].

Additional investigations applied convolutional networks to classify firearms and ammunition in images. TensorFlow-based models, including Overfeat, were used for feature extraction and dataset expansion [14]. Another research initiative aimed at automating firearm and blade detection within surveillance networks sought to develop a system that minimizes false alarms while ensuring rapid threat notifications [16], [18]. Techniques such as clustering algorithms and color-based segmentation have also been explored in weapon detection, effectively filtering out non-essential objects from images. Feature detection methods, including Harris corner detection and Fast Retina Keypoint (FREAK)


descriptors, addressed challenges related to partial occlusion, scaling, rotation, and the presence of multiple weapons [29]. A separate study focused on identifying individuals likely to be carrying concealed weapons, emphasizing human-object interactions to detect hidden threats [30].

In summary, prior research primarily concentrated on classifying concealed weapons, such as firearms and

Paper Type of Detection Technique

[18] FirearmDetection

[23] SurveillanceObjectRecognition

knives. However, a critical gap remains in the comprehensive identification and differentiation of multiple weapon categories. This study aims to bridge this gap by developing an extensive classification model capable of detecting a broader range of weapons with highaccuracy.

CNN, Sliding Window, Region Proposal

Image Fusion, Dual-Camera System

[24] ColdSteelWeaponDetection Brightness-GuidedPreprocessing

[25] HybridWeaponIdentification Fuzzy Logic, Environmental Variables

[26] WeaponClassification

Deep CNNs, Pre-trained VGG-16 Model

Features

Focused on reducing false positives, evaluated classificationspeed

Enhanceddetectionaccuracy,reducedfalsealarms

Improvedfeaturerecognitioninsurveillancefeeds

Strengthened detection precision, decreased false alerts

Examined neuron count optimization for improved classification

[5] FirearmDetectioninSurveillance HumanPresenceAnalysis Focused on human-object interaction to enhance recognition

[27] Component-BasedWeapon Identification Feature-BasedClassification

[28] IoTSecurityforWeaponDetection Multi-dimensionalEventAnalysis

[20] Firearm&Ammunition Classification TensorFlow,OverfeatCNN

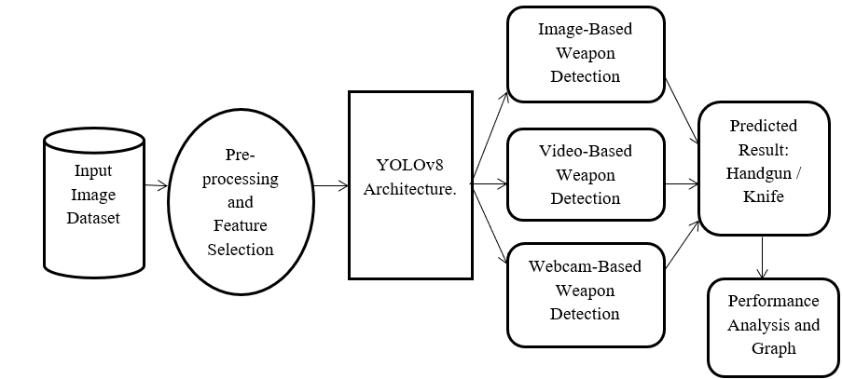

The newly developed system is designed to improve current methodologies by incorporating advanced deep learning models and expanding its operational capabilities. At its core, this system utilizes the YOLOv8 framework, which is recognized for its efficiency and accuracyinreal-timeobjectdetectiontasks.

KeyFeatures:

Multi-Mode Detection: In contrast to traditional methods,thissystem integratesthreeprimary detection strategies:

•ImageProcessing:Staticimagesareevaluatedtodetect potentialthreats.

•Video Analysis: A frame-by-frame review of video footageensuresthoroughsurveillance.

Improved detection efficiency through distinctive weaponcharacteristics

Assessedreal-timesecurityrisksforIoTnetworks

Applied convolutional networks for improved image recognition

•Live Feed Processing: Real-time analysis of streaming videoallowsforpromptidentificationandaction.
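The three detection modes listed above can share a single frame-level routine, as the following sketch suggests. It is an assumption-laden illustration rather than the system's actual code: `Detector` is a hypothetical callable standing in for the YOLOv8 model, the 0.5 confidence threshold is arbitrary, and the "frames" in the demonstration are just pre-computed detection lists so the example runs without any model or video library.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Detection = Tuple[str, float]                  # (label, confidence)
Detector = Callable[[object], List[Detection]]  # hypothetical model wrapper


def detect_stream(frames: Iterable[object], detector: Detector,
                  threshold: float = 0.5) -> Iterator[Tuple[int, List[Detection]]]:
    """Yield (frame_index, detections) for each frame with a confident hit.

    Because frames are consumed lazily, the same loop serves a recorded
    video (finite iterable) and a live feed (open-ended iterable).
    """
    for index, frame in enumerate(frames):
        hits = [d for d in detector(frame) if d[1] >= threshold]
        if hits:
            yield index, hits


def detect_image(image: object, detector: Detector,
                 threshold: float = 0.5) -> List[Detection]:
    """A static image is handled as a one-frame stream."""
    for _, hits in detect_stream([image], detector, threshold):
        return hits
    return []


def fake_detector(frame: object) -> List[Detection]:
    # Stand-in for a real model: each demo "frame" already holds its detections.
    return list(frame)  # type: ignore[arg-type]


video = [[], [("knife", 0.91)], [("pistol", 0.32)]]
alerts = list(detect_stream(video, fake_detector))
still = detect_image([("grenade", 0.80)], fake_detector)
```

In this arrangement only the source of frames changes between modes, which keeps thresholding and alerting logic in one place.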

Advanced Architecture: The adoption of YOLOv8 brings significant enhancements, such as increased processing speeds and a refined structural design. These features enable the effective management of large datasets while ensuring accurate detection.

Comprehensive Data Utilization: The system is trained on a dataset consisting of nearly 4,000 labeled images of specific objects. The variety within this dataset enhances recognition accuracy across different environmental scenarios.

User-Centric Design: The platform is built using the Flask web framework, providing a responsive and engaging interface. Technologies like HTML, CSS, and JavaScript contribute to a smooth user experience, allowing for easy navigation of the system's features.


Performance Metrics: The system achieves an overall detection accuracy of 64%, reflecting enhanced effectiveness in object recognition. By capitalizing on the advantages of YOLOv8, the platform broadens its functionality to support various input types, marking a significant improvement in surveillance and security applications.

Key Advantages:

Immediate Recognition: The deployment of YOLOv8 facilitates real-time object detection, greatly improving the system's ability to adapt to changing environments. This capability is vital for situations that require prompt reactions.

Flexible Input Management: The system accommodates various data types, such as static images, video footage, and live streams. This versatility enables its application across a range of fields, from forensic investigations to real-time monitoring.

Enhanced Processing Speed: The YOLOv8 model is engineered for quick performance, allowing for rapid analysis of images and videos while maintaining high accuracy. This feature is critical for scenarios that necessitate immediate decision-making.

Improved Accuracy: With a recognition rate of 64%, the system delivers reliable identification outcomes. The integration of a comprehensive dataset ensures its effectiveness across diverse visual and environmental contexts.

Resource-Conserving: In contrast to traditional deep learning models, YOLOv8's optimized architecture enables it to perform intricate identification tasks with reduced computational demands. This makes it ideal for use in environments with limited resources.

User-Friendly Interface: The web-based platform, developed using Flask, provides an easy and efficient user experience. The incorporation of contemporary web technologies enhances usability, ensuring smooth interactions for all users.

Extensive Applicability: The system's capability to assess both historical and real-time data streams renders it suitable for various security sectors, such as public space observation, surveillance in restricted areas, and automated alert systems.

Adaptability and Growth: Engineered for flexibility, the system can manage substantial data loads and continuous input streams while maintaining optimal performance. This adaptability positions it effectively for large-scale security infrastructures.


Improved Security Protocols: By enabling precise and prompt object identification, the system enhances security measures, mitigating potential threats and fostering safer surroundings.

Fig 4: System Architecture

Performance Assessment and Model Development

Experiments were carried out to evaluate the effectiveness of various neural architectures by analyzing seven distinct categories of objects. The proposed framework was benchmarked against established deep learning models, including different forms of hierarchical convolutional networks. The dataset was partitioned into training (60%), testing (20%), and validation (20%) subsets, following established protocols. To maintain consistent conditions across the models, training was executed with standardized hyperparameters:

- Activation Function: Rectified Linear Unit (ReLU)

- Batch Size: 32

- Dropout Rate: 0.25

- Optimization Strategy: Adaptive Moment Estimation (Adam)

- Epochs: 30
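The partitioning and hyperparameter settings above can be written down as a small configuration sketch. The dictionary mirrors the listed values; everything else is assumed for illustration, including the key names, the `split_dataset` helper, the fixed shuffle seed, and the 1,000 placeholder sample IDs (the paper does not state how the split was drawn).

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")

# Values taken from the list above; key names are illustrative.
HYPERPARAMS = {
    "activation": "relu",
    "batch_size": 32,
    "dropout_rate": 0.25,
    "optimizer": "adam",
    "epochs": 30,
}


def split_dataset(samples: Sequence[T], train: float = 0.6, test: float = 0.2,
                  seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once, then cut into train / test / validation partitions.

    The validation share is whatever remains after train and test (20% here).
    """
    items = list(samples)
    random.Random(seed).shuffle(items)   # fixed seed keeps the split reproducible
    n_train = round(len(items) * train)
    n_test = round(len(items) * test)
    return (items[:n_train],
            items[n_train:n_train + n_test],
            items[n_train + n_test:])


# Placeholder sample IDs standing in for image paths.
train_set, test_set, val_set = split_dataset(range(1000))
```

Reusing one split and one hyperparameter dictionary for every architecture is what keeps the comparison between models consistent.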

Observations and Comparative Evaluation:

Model A: Demonstrated a steady improvement in learning, achieving a maximum accuracy of 90.12% by the conclusion of the training cycle.

Model B: Exhibited a more rapid learning curve, outpacing previous models and attaining a performance accuracy of 94.25%.

Model C: Achieved a lower success rate of 84.43%, indicating diminished effectiveness relative to Model B.

Proposed Model: Showed remarkable efficiency, attaining an accuracy of 98.32%, significantly exceeding the performance of its peers.


The overall efficacy of neural networks is contingent upon their architecture and parameter settings. Despite featuring a more streamlined design with fewer computational layers, the optimized framework outperformed traditional methods by simplifying complexity while ensuring superior processing efficiency. This resulted in faster training, improved pattern recognition, and reduced error rates, establishing it as a highly effective strategy for achieving accurate results.


The graphs presented depict the training performance of a VGG-16 model across 30 epochs, focusing on accuracy, loss, sensitivity, and specificity for both training and testing datasets.

The Model Accuracy Graph (top-left) reveals a rapid increase in accuracy during the initial epochs, ultimately stabilizing near 1.0 for both training and testing datasets. This trend suggests that the VGG-16 architecture is proficient in learning feature representations, resulting in high classification performance with minimal overfitting, as evidenced by the parallel nature of both curves.

The Model Loss Graph (top-right) illustrates a notable decline in loss during the early epochs, followed by a phase of stabilization. The training set's loss curve remains consistently lower than that of the test set, indicating effective optimization by the model. Nevertheless, minor fluctuations in the test loss curve point to slight challenges in generalization, which may necessitate the application of regularization techniques such as dropout or data augmentation.

The Model Sensitivity Graph (bottom-left) mirrors the accuracy trend, with sensitivity (the model's capacity to accurately identify positive cases) rising swiftly and leveling off near 1.0. This indicates that the VGG-16 model is particularly adept at detecting the target features within the dataset, resulting in robust classification outcomes.

The Model Specificity Graph (bottom-right) shows some variability in the early epochs before reaching a stable state. Specificity, which assesses the model's ability to accurately classify negative cases, experiences initial instability, suggesting that the model was fine-tuning its decision boundaries prior to achieving consistency.
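For reference, sensitivity and specificity as described above can be computed from predicted and true labels by treating one class as positive and all others as negative. The sketch below uses these standard definitions; the helper names and the six made-up labels are assumptions for illustration and do not reproduce the paper's data.

```python
from typing import Hashable, Sequence, Tuple


def one_vs_rest_counts(y_true: Sequence[Hashable], y_pred: Sequence[Hashable],
                       positive: Hashable) -> Tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn) with `positive` as the class of interest."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive and pred == positive:
            tp += 1
        elif truth == positive:
            fn += 1          # a positive case the model missed
        elif pred == positive:
            fp += 1          # a negative case flagged as positive
        else:
            tn += 1
    return tp, fp, tn, fn


def sensitivity(tp: int, fn: int) -> float:
    """Share of actual positives that were identified: tp / (tp + fn)."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def specificity(tn: int, fp: int) -> float:
    """Share of actual negatives that were rejected: tn / (tn + fp)."""
    return tn / (tn + fp) if (tn + fp) else 0.0


# Made-up labels for illustration only.
y_true = ["knife", "knife", "pistol", "grenade", "pistol", "knife"]
y_pred = ["knife", "pistol", "pistol", "grenade", "knife", "knife"]

tp, fp, tn, fn = one_vs_rest_counts(y_true, y_pred, positive="knife")
knife_sensitivity = sensitivity(tp, fn)
knife_specificity = specificity(tn, fp)
```

Tracking both values per epoch, as the graphs do, shows whether a model is gaining accuracy by catching more positives or by rejecting more negatives.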

In summary, the VGG-16 model exhibits remarkable convergence, attaining high levels of accuracy, sensitivity, and specificity while maintaining low loss values. However, the observed fluctuations in test loss and specificity highlight potential areas for enhancement, such as fine-tuning hyperparameters or integrating additional regularization techniques to improve generalization.

Fig 5: The Graphs of VGG-16

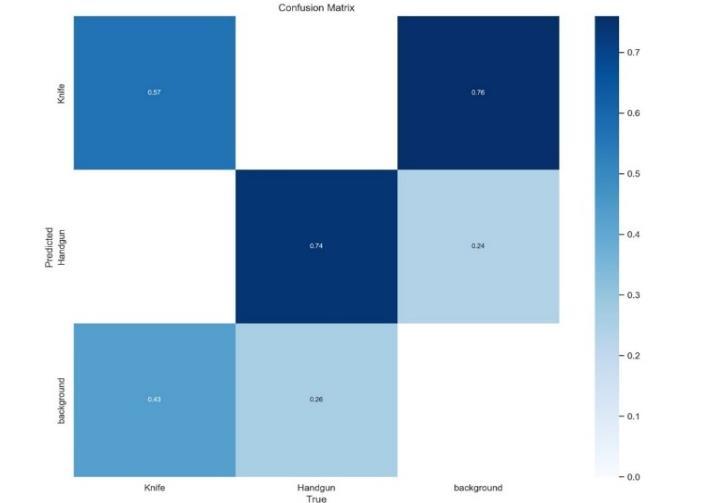

The effectiveness of the optimized framework was evaluated by analyzing its predictive performance through a systematic assessment matrix. It is noteworthy that certain object categories demonstrated the highest levels of precision, with particular long-barrel variants achieving an accuracy of 99.45%. In contrast, compact handheld variants showed a slightly reduced classification rate of 94.62%, while specialized heavy-duty equipment reached an accuracy of 97.72%. The slight decline in classification precision, especially regarding compact models, can be linked to their structural resemblances with similar equipment. Nevertheless, the overall classification success rate of 98.40% underscores the framework’s capability to effectively differentiate between various object types. This illustrates the system’s strength and versatility in a range of identification contexts.

Fig 6: Confusion Matrix


In the realm of weapon detection, the confusion matrix offers a detailed overview of the model's predictions alongside the true labels of the samples.

Precision reflects the ratio of correctly predicted weapons to the total number of samples predicted as weapons.

Recall signifies the ratio of correctly predicted weapons to the total number of actual weapons.

Accuracy assesses the overall correctness of the model's predictions, that is, the share of all samples that were classified correctly.
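These three quantities can be read directly off a multi-class confusion matrix, as the following sketch shows. It assumes rows index the true class and columns the predicted class; the function name `per_class_metrics`, the three labels, and the counts are illustrative assumptions and are not the values from Fig 6.

```python
from typing import Dict, List, Sequence, Tuple


def per_class_metrics(cm: Sequence[Sequence[int]],
                      labels: Sequence[str]) -> Tuple[Dict[str, Dict[str, float]], float]:
    """Compute per-class precision and recall plus overall accuracy.

    cm[i][j] counts samples whose true class is labels[i] and whose
    predicted class is labels[j].
    """
    n = len(labels)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    metrics: Dict[str, Dict[str, float]] = {}
    for i, label in enumerate(labels):
        tp = cm[i][i]
        predicted = sum(cm[r][i] for r in range(n))  # column sum: predicted as this class
        actual = sum(cm[i])                          # row sum: truly this class
        metrics[label] = {
            "precision": tp / predicted if predicted else 0.0,
            "recall": tp / actual if actual else 0.0,
        }
    accuracy = correct / total if total else 0.0
    return metrics, accuracy


# Illustrative counts only (50 samples per class).
labels = ["knife", "pistol", "revolver"]
cm: List[List[int]] = [
    [48, 1, 1],   # true knife
    [2, 45, 3],   # true pistol
    [0, 4, 46],   # true revolver
]

metrics, accuracy = per_class_metrics(cm, labels)
```

Off-diagonal cells show which classes are confused with each other; in this made-up example most errors fall between pistols and revolvers, the kind of structural resemblance the text attributes to compact handheld variants.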

In response to the changing landscape of security threats, the capability to autonomously identify and categorize particular objects within surveillance footage has become increasingly vital. Accurate identification is instrumental in foreseeing potential dangers and facilitating prompt action by appropriate authorities. Small and portable items of interest are often linked to a range of illicit activities, such as unauthorized acquisitions, illegal wildlife tracking, and confrontational incidents. The detection of these objects in surveillance imagery enables proactive measures and improves situational awareness.

This research presents an enhanced analytical model aimed at classifying seven distinct types of objects, utilizing sophisticated feature-extraction techniques. A dedicated dataset was developed for both training and assessment. A comparative performance evaluation was carried out against established classification models, including VGG-16 (which achieved 89.75%), ResNet-50 (which recorded 93.70%), and ResNet-101 (which yielded 83.33%). The optimized model surpassed existing frameworks, attaining a classification accuracy of 98.40%. Additional evaluations focused on instances where individuals were observed carrying specific items, employing region-based proposals to enrich contextual training scenarios.

The system exhibited a robust ability to accurately identify pertinent objects within a variety of backgrounds. This framework serves as a significant asset for tackling security-related issues and improving response efficiency across multiple sectors. The results of the study possess wide-ranging applicability, thereby amplifying its importance and potential influence. Future advancements may concentrate on the integration of automated analytical units that feature real-time detection and classification functionalities. Such autonomous systems could deliver instant notifications, process data in real-time through surveillance feeds, and further enhance classification precision. Moreover, subsequent research might investigate the recognition of visually modified items, thereby improving overall object detection capabilities.

[1] Raturi, G., Rani, P., Madan, S., & Dosanjh, S. (2019). ADoCW: An automated system for identifying concealed objects. ICIIP 2019, 181-186.

[2] Bhagyalakshmi, P., Indhumathi, P., & Bhavadharini, L. R. (2019). Real-time video monitoring for automated classification of objects. IJTSRD, 3(3).

[3] Lim, J., Al Jobayer, M. I., Baskaran, V. M., Lim, J. M., Wong, K., & See, J. (2019). Deep learning-based analysis for surveillance imagery. APSIPA ASC 2019, 1998-2002.

[4] Yuan, J., & Guo, C. (2018). Advanced neural network methodology for object categorization. ICIST 2018, 159-164.

[5] Ilgin, F. Y. (2020). Cognitive systems and energy-based analysis in automated monitoring. Bulletin of the Polish Academy of Sciences: Technical Sciences, 68(4), 829-834.

[6] Navalgund, U. V., & Priyadharshini, K. (2018). An approach utilizing deep learning for predictive analytics. ICCSDET 2018, 1-6.

[7] Chandan, G., Jain, A., & Jain, H. (2018). Object detection and tracking with real-time analysis. ICIRCA 2018, 1305-1308.

[8] Deng, L., & Yu, D. (2014). Deep learning techniques and their real-world applications. Foundations and Trends in Signal Processing, 7(3–4), 197-387.

[9] Bengio, Y. (2009). Exploration of structured learning models for AI. Foundations and Trends in Machine Learning, 2(1), 1-127.

[10] LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444.

[11] Song, H. A., & Lee, S. Y. (2013). NMF is used to create a hierarchical representation. In Neural Information Processing: 20th International Conference, ICONIP 2013, Daegu, Korea, November 3-7, 2013. Proceedings, Part I 20 (pp. 466-473). Springer Berlin Heidelberg.


[12] Masood, S., Ahsan, U., Munawwar, F., Rizvi, D. R., & Ahmed, M. (2020). Image scene recognition using a convolutional neural network. Procedia Computer Science, 167, 1005-1012.

[13] Masood, S., Ahsan, U., Munawwar, F., Rizvi, D. R., & Ahmed, M. (2020). Video scene recognition using a convolutional neural network. Procedia Computer Science, 167, 1005-1012.

[14] Sai, B. K., & Sasikala, T. (2019, November). The task involves utilizing the Tensorflow object identification API to find and quantify things in a picture. In 2019 International Conference on Smart Systems and Inventive Technology (ICSSIT) (pp. 542-546). IEEE.

[15] Verma, G. K., & Dhillon, A. (2017, November). A portable firearm detection system utilizing the faster region-based convolutional neural network (Faster RCNN) deep learning algorithm. In Proceedings of the 7th international conference on computer and communication technology (pp. 84-88).

[16] Warsi, A., Abdullah, M., Husen, M. N., & Yahya, M. (2020, January). Review of algorithms for automatic detection of handguns and knives. In 2020 14th International Conference on Ubiquitous Information Management and Communication (IMCOM) (pp. 1-9). IEEE.

[17] Olmos, R., Tabik, S., & Herrera, F. (2018). Utilizing deep learning to recognize automatic handguns in videos and trigger an alarm. Neurocomputing, 275, 66-72.

[18] Asnani, S., Ahmed, A., & Manjotho, A. A. (2014). A bank security system is developed utilizing a method called Weapon Detection using Histogram of Oriented Gradients (HOG) Features. Asian Journal of Engineering, Sciences & Technology, 4(1).

[19] Lai, J., & Maples, S. (2017). Creating a classifier that can detect guns in real-time. Course: CS231n, Stanford University.

[20] Simonyan, K., & Zisserman, A. (2015). Deep convolutional networks for large-scale image recognition with significant depth. In: Proceedings of International Conference on Learning Representations.