International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN:2395-0072

Sonal Chaudhari¹, Aaryaman Ahirao², Jivhesh Chaudhari³, Vinay Kumkar⁴, Aditya Kelaskar⁵

¹Assistant Professor, Dept. of Computer Engineering, Datta Meghe College of Engineering, Navi Mumbai, India

²,³,⁴,⁵Dept. of Computer Engineering, Datta Meghe College of Engineering, Navi Mumbai, India

Abstract - The project focuses on the development of an Android-based application for disease detection using real-time image capture and image uploads. The app employs a machine learning model that utilizes advanced technologies such as deep learning, vision transformers, Swin Transformer technology, image segmentation, an encoder-decoder unit, and a softmax probabilistic classifier.

Key Words: Artificial Intelligence, Deep Learning, Convolutional Neural Network.

1. INTRODUCTION

Radiant Dermat is a skin diagnosis tool that uses machine learning (ML) and computer vision to provide accurate and personalized skin assessments. The platform aims to make skin healthcare more accessible and efficient: using image analysis and deep learning algorithms, it identifies skin lesions and provides customized recommendations to users. The project targets a high level of accuracy and reliability for end users, with the goal of making expert-level dermatological evaluations accessible to anyone, anywhere.

The application of AI within dermatology is experiencing a period of rapid growth, extending across a broad spectrum of concerns from common skin conditions like acne and eczema to the more critical areas of skin aging and skin cancer detection [1]. This technological advancement addresses a crucial public health issue by enhancing diagnostic accuracy, efficiency, and accessibility [2]. AI is transforming dermatology by automating many aspects of diagnostic processes into digital systems, thereby reducing manual administrative tasks and allowing healthcare professionals to focus on more critical medical decisions [2]. This shift is particularly evident in early skin cancer detection, where AI-based systems leverage medical data and analytics to improve diagnostic processes and simplify medical services. Furthermore, AI serves as a valuable tool that augments the capabilities of dermatologists, particularly in the early detection of melanoma, which can significantly improve patient survival rates [2]. The capabilities of AI in this domain include providing diagnostic support, facilitating medical interventions, and aiding in the development of proactive health strategies. Many modern hospitals are increasingly incorporating AI technologies to reduce operational costs and enhance diagnostic precision [2]. These systems can rapidly analyze large volumes of medical data, identifying patterns and anomalies that may elude human detection, thus streamlining workflows and ensuring more accurate diagnoses.

The development of, and interest in, smartphone applications for skin lesion diagnosis and triage are on the rise [1]. The widespread use of smartphones has made it feasible to develop AI models that can run directly on patients' devices, offering a convenient and private means for initial skin assessments [3]. This on-device processing of AI models addresses data privacy concerns by ensuring that sensitive medical data remains on the user's device, rather than being transmitted to external servers for analysis [3]. However, while some mobile skin lesion analysis applications demonstrate promising accuracy in controlled settings, many face limitations in terms of sensitivity and specificity when evaluated in real-world scenarios [4]. Clinical validation of these apps remains a significant challenge, with studies revealing variable and often low accuracy, recommending caution against their sole use for diagnostic purposes [5]. For instance, a 2020 systematic review of several melanoma detection apps found poor study design and a high risk of bias, with many apps failing to accurately identify melanoma cases [5]. Conversely, some applications, like Dermalyser, have shown high diagnostic accuracy in prospective clinical trials, indicating the potential of AI in this domain when rigorously validated [6]. The performance of AI-powered skin lesion detection apps is also significantly influenced by the quality of the input images. Standardized image capture and high-quality images are crucial for ensuring the reliability of AI analysis, as variations in lighting, focus, and angle can affect the accuracy of the results [1].

Various deep learning architectures, particularly Convolutional Neural Networks (CNNs), are fundamental to the development of AI-powered skin lesion classification systems [7]. These networks excel at learning hierarchical representations from image data, making them highly effective for tasks like identifying and classifying skin lesions [8, 9]. Transfer learning, a technique that involves using pre-trained models on large datasets (like ImageNet) and fine-tuning them for specific tasks with smaller datasets, has proven valuable in improving the accuracy of skin lesion classifiers, especially given the limited size of many medical image datasets [10]. A common challenge in training deep learning models for skin lesion classification is data imbalance, where the number of images for different types of lesions can vary significantly. To address this, data augmentation techniques, such as image rotation, flipping, and zooming, are often employed to artificially increase the size and diversity of the training data, thereby improving the robustness and generalization of the models [8]. Furthermore, there is a growing recognition of the importance of explainable AI (XAI) in dermatology [11]. XAI methods aim to provide transparency into the decision-making processes of AI models, allowing clinicians to understand and validate the AI's diagnoses, which is crucial for building trust and facilitating the integration of AI into clinical practice [12].

The U-Net architecture has become a cornerstone in the field of medical image segmentation [13], widely utilized for its effectiveness in pixel-level classification of lesions and other anatomical structures. Its design features an encoder path that progressively extracts features from the input image while reducing its spatial dimensions, and a decoder path that reconstructs the segmented image at the original resolution. Crucially, U-Net incorporates skip connections that directly link corresponding layers in the encoder and decoder, enabling the decoder to access fine-grained details captured by the encoder, which is essential for precise localization of abnormalities in medical images [13]. Vision Transformers, including architectures like the Swin Transformer, represent a significant advancement in image processing by effectively capturing global contextual information, a capability that traditional CNNs often lack due to their focus on local features [14, 15]. The Swin Transformer employs a mechanism called shifted windows, which allows for efficient processing of high-resolution images by confining the attention computation to within local windows while also enabling cross-window connections to model global relationships [15]. This ability to understand the broader context of an image, coupled with the detailed local processing of CNNs, makes Vision Transformers highly effective for both image classification and segmentation tasks in medical imaging.

Recognizing the complementary strengths of U-Net for precise localization and Vision Transformers for global context understanding, there is a growing trend in medical image analysis to combine these architectures into hybrid models [16]. These hybrid approaches aim to leverage the benefits of both types of models to achieve improved performance in medical image segmentation and classification. Many such models have demonstrated state-of-the-art results, achieving high segmentation accuracy, often measured by metrics like the Dice score and Intersection over Union (IoU), as well as superior classification accuracy [17, 18]. Radiant Dermat's architecture adopts a specific hybrid approach where the feature maps extracted by the U-Net encoder are directly fed into the Swin Transformer, bypassing the decoder phase of U-Net. This design presents a novel strategy that could potentially offer computational efficiency by omitting the U-Net decoder while still capitalizing on the robust feature extraction capabilities of both U-Net and Swin Transformer. The effectiveness of this particular architectural choice would need to be evaluated against the performance and efficiency of other hybrid models reported in the literature, such as those that integrate Transformers at different stages of the U-Net or use them in parallel pathways.

Radiant Dermat distinguishes itself through several key features and potential innovations. The core diagnostic capability is driven by a novel hybrid model architecture that integrates U-Net and a Swin Transformer for skin lesion detection and classification. This combination aims to achieve high accuracy by leveraging the segmentation prowess of U-Net and the global context understanding of Swin. A notable feature is the weekly updating of new diseases on the server, complete with images and descriptions, which serves to continuously improve the user's knowledge about various skin conditions. The integration of Gemini 1.5 Pro provides users with an advanced chatbot capable of answering health-related inquiries and potentially offering personalized information. Furthermore, Radiant Dermat includes a comprehensive user profile feature that allows users to upload their past medical history. This capability to integrate user medical history could potentially enhance the personalization and accuracy of skin assessments, although it necessitates stringent privacy and security measures. The application also offers standard functionalities such as photo upload/capture of skin lesions, result display with detailed information about the diagnosed condition and the ability to store this information in a history log, and a feature to view the user's test history. The technical architecture, utilizing Jetpack Compose for the frontend and Spring Boot for the backend, along with Firebase for authentication, provides a solid foundation for a scalable and maintainable application. The direct feeding of U-Net encoder features into the Swin Transformer, skipping the U-Net decoder, could represent a potential innovation in terms of computational efficiency or model performance, warranting further investigation and comparison with existing hybrid models.

The dataset utilized in this study is the HAM10000 ("Human Against Machine with 10000 training images") dataset. This publicly available dataset comprises a collection of 10,015 dermatoscopic images of pigmented skin lesions. These images represent seven distinct diagnostic categories of skin diseases, making it a suitable benchmark for multi-class skin lesion classification tasks. The seven classes included in the dataset are:

1. Actinic keratoses and intraepithelial carcinoma / Bowen's disease (akiec)

2. Basal cell carcinoma (bcc)

3. Benign keratosis-like lesions (solar lentigines / seborrheic keratoses and lichen-planus like keratosis) (bkl)

4. Dermatofibroma (df)

5. Melanoma (mel)

6. Nevus (nv)

7. Vascular lesions (angiomas, angiokeratomas, pyogenic granulomas and hemorrhage) (vasc)

The original images in the HAM10000 dataset have a native resolution of approximately 600 x 450 pixels with three color channels (RGB). The preprocessing pipeline aimed to enhance the quality and standardize the input images before feeding them to the UNetSwinClassifier model. Initially, Digital Hair Removal (DHR) was performed to mitigate the obscuring effects of hair in dermatoscopic images. This involved applying the Black Hat transformation, a morphological operation, to highlight the hair structures, followed by an inpainting technique to seamlessly fill in the hair regions, allowing the model to focus on the underlying skin lesion. Subsequently, a Median Filter was employed to reduce noise present in the images while preserving essential textural details and edges, thereby improving the signal-to-noise ratio. Finally, to ensure uniformity and compatibility with the model's input requirements, all preprocessed images were resized from their original dimensions of approximately 600x450 pixels to a fixed size of 224x224 pixels using bilinear interpolation, which helps to maintain image integrity during the scaling process.
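The hair-detection step of DHR can be illustrated on a toy grayscale grid. The sketch below is a pure-Python illustration and not the authors' pipeline: the helper names, the 3x3 structuring element, and the threshold of 100 are all assumptions made for the example. It computes the Black Hat transform (morphological closing minus the original image), which responds strongly to thin dark structures such as hairs on brighter skin; the resulting mask marks the pixels an inpainting step would then fill.

```python
def sliding_extreme(img, k, pick):
    """Apply a k x k sliding-window min or max (pick); windows are clipped at the borders."""
    h, w = len(img), len(img[0])
    r = k // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            ]
            out[y][x] = pick(window)
    return out


def black_hat(img, k=3):
    """Black Hat = closing(img) - img, where closing is dilation (max) then erosion (min)."""
    closed = sliding_extreme(sliding_extreme(img, k, max), k, min)
    return [[c - p for c, p in zip(c_row, p_row)] for c_row, p_row in zip(closed, img)]


def hair_mask(img, k=3, threshold=100):
    """Mark pixels whose Black Hat response exceeds the (assumed) threshold."""
    return [[1 if v > threshold else 0 for v in row] for row in black_hat(img, k)]


# Toy 5x5 patch: bright skin (200) crossed by a one-pixel-wide dark hair (50).
patch = [[200, 200, 50, 200, 200] for _ in range(5)]
response = black_hat(patch)
mask = hair_mask(patch)
```

On this patch the closing fills the dark column, so the Black Hat response is non-zero only along the hair. In practice an image library would normally be used for the morphology and inpainting; the structuring element must be wider than the hairs for the closing to remove them.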

Fig -1: Results of Digital Hair Removal (DHR) applied on an image from the dataset using Black-Hat transformation and inpainting.

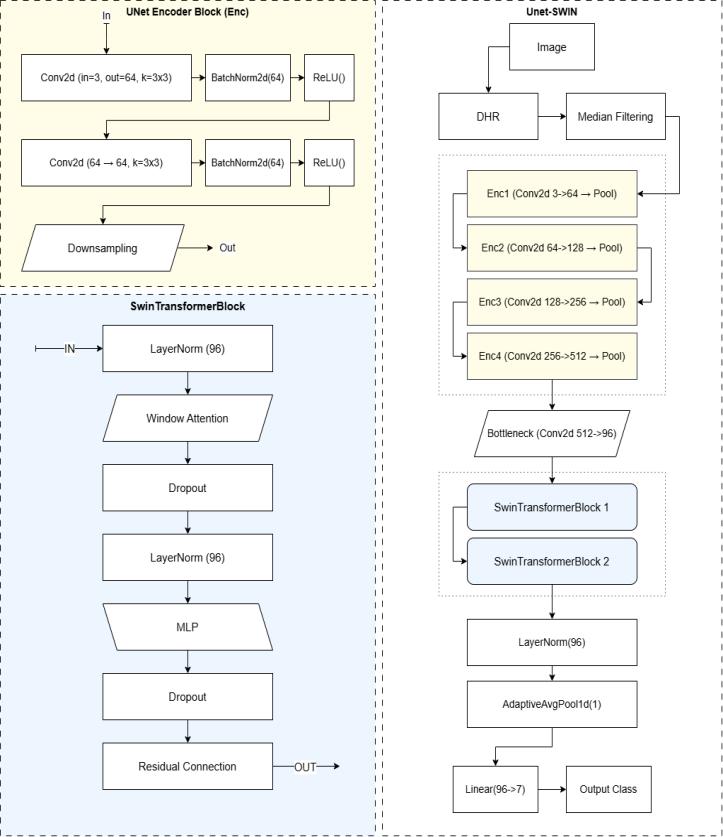

This section details the architecture of the proposed deep learning model for skin disease classification. The model, named UNetSwinClassifier, leverages the strengths of both convolutional neural networks (CNNs) for local feature extraction and Transformer networks for capturing global dependencies. The architecture comprises four main components: a U-Net style encoder for hierarchical feature extraction, a bottleneck convolutional layer for channel reduction, a Swin Transformer module for learning long-range relationships, and a final classification head.

Fig -2: Model breakdown

U-Net Style Encoder - The initial stage of our architecture employs a U-Net inspired encoder to extract hierarchical features from the input skin lesion images. The encoder consists of four sequential UNetEncoderBlock layers. Each UNetEncoderBlock is designed to perform feature extraction and downsampling.

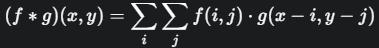

Double Convolutional Layer - Each UNetEncoderBlock begins with a double convolutional layer. This module comprises two consecutive convolutional layers, each followed by Batch Normalization and a Rectified Linear Unit (ReLU) activation function. The convolutional operation can be mathematically represented as:

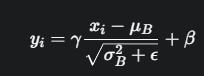

where f is the input feature map and g is the convolutional kernel. In our case, we utilize 2D convolutional layers with a kernel size of 3x3 and a stride of 1, maintaining the spatial dimensions through padding.

Batch Normalization (BN) is applied after each convolutional layer to stabilize learning by normalizing the activations of the previous layer. For a batch of activations $B = \{x_1, \ldots, x_m\}$, BN computes the mean $\mu_B$ and variance $\sigma_B^2$ of the batch, and the normalized output $y_i$ is then given by:

$$ y_i = \gamma \, \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}} + \beta $$

where $\gamma$ and $\beta$ are learnable scale and shift parameters, and $\epsilon$ is a small constant for numerical stability. The ReLU activation function, applied element-wise, introduces non-linearity.
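As a small numerical illustration of the normalization and activation just described, the following pure-Python sketch applies Batch Normalization and ReLU to a single channel of four activations. The function names, the default values of gamma, beta, and eps, and the sample batch are assumptions chosen for the example; a real layer would learn gamma and beta and track running statistics for inference.

```python
import math


def batch_norm(xs, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a batch of scalar activations, then scale by gamma and shift by beta."""
    m = len(xs)
    mean = sum(xs) / m
    var = sum((x - mean) ** 2 for x in xs) / m  # biased batch variance
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in xs]


def relu(xs):
    """Element-wise max(0, x)."""
    return [max(0.0, x) for x in xs]


activations = [1.0, 2.0, 3.0, 4.0]      # made-up batch for one channel
normalized = batch_norm(activations)    # zero mean, approximately unit variance
rectified = relu(normalized)            # negative values are clipped to 0
```

With gamma = 1 and beta = 0 the normalized values are symmetric around zero, so ReLU zeroes the two below-average activations and passes the other two through.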

Max pooling layer - Following the double convolutional layer in each UNetEncoderBlock, a Max Pooling layer is used for spatial downsampling. Specifically, we employ a 2x2 Max Pooling operation with a stride of 2, which reduces the height and width of the feature maps by a factor of 2. The Max Pooling operation selects the maximum value within each 2x2 window. The input image of size 224x224 undergoes this encoding process, resulting in feature maps of decreasing spatial dimensions and increasing channel counts (64, 128, 256, and 512 channels after each of the four encoder blocks). The final output of the encoder has a spatial dimension of 14x14.
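The shape arithmetic of the encoder can be checked with a few lines of Python. This is a sketch under stated assumptions: a padding of 1 is assumed for the 3x3 convolutions (the text only says that padding preserves the spatial size), and the function names are hypothetical.

```python
def encoder_shapes(size=224, channels=(64, 128, 256, 512), kernel=3, padding=1, pool=2):
    """Trace (channels, height, width) after each encoder block."""
    shapes = []
    for out_channels in channels:
        # 3x3 convolution, stride 1: size + 2*padding - kernel + 1 (unchanged for padding=1).
        # The second convolution of the double-conv block preserves the size in the same way.
        size = size + 2 * padding - kernel + 1
        # 2x2 max pooling, stride 2: floor((size - pool) / pool) + 1, i.e. half the size.
        size = (size - pool) // pool + 1
        shapes.append((out_channels, size, size))
    return shapes


def max_pool_2x2(grid):
    """Keep the maximum of each non-overlapping 2x2 window."""
    return [
        [max(grid[y][x], grid[y][x + 1], grid[y + 1][x], grid[y + 1][x + 1])
         for x in range(0, len(grid[0]) - 1, 2)]
        for y in range(0, len(grid) - 1, 2)
    ]


shapes = encoder_shapes()
pooled = max_pool_2x2([[1, 2, 3, 4],
                       [5, 6, 7, 8],
                       [9, 10, 11, 12],
                       [13, 14, 15, 16]])
```

Four halvings take 224 to 112, 56, 28, and finally 14, which matches the 14x14 encoder output stated above.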

Bottleneck Convolutional Layer - After the U-Net style encoder, a bottleneck convolutional layer is introduced. This layer consists of a single 2D convolutional layer with a kernel size of 1x1 and a stride of 1. The purpose of this layer is to reduce the number of feature channels from 512 (the output of the final encoder block) to a lower dimension of 96. This reduction helps in managing the computational complexity of the subsequent Swin Transformer module. The 1x1 convolution performs a linear combination of the input channels at each spatial location, so that each of the 96 output channels is a weighted sum of the 512 input channels at that position.
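A minimal sketch of a 1x1 convolution as a per-pixel linear combination is given below, on a toy input with 3 channels reduced to 2 rather than 512 reduced to 96. The function name, the weight values, and the presence of a bias term are assumptions for illustration only.

```python
def conv1x1(feature_map, weights, bias):
    """feature_map[c][y][x], weights[o][c], bias[o] -> out[o][y][x]."""
    c_in = len(feature_map)
    h, w = len(feature_map[0]), len(feature_map[0][0])
    return [
        [
            [bias[o] + sum(weights[o][c] * feature_map[c][y][x] for c in range(c_in))
             for x in range(w)]
            for y in range(h)
        ]
        for o in range(len(weights))
    ]


# Toy input: 3 channels on a 2x2 map.
x = [[[1.0, 2.0], [3.0, 4.0]],
     [[10.0, 20.0], [30.0, 40.0]],
     [[100.0, 200.0], [300.0, 400.0]]]
w = [[1.0, 0.0, 0.0],   # output channel 0 copies input channel 0
     [0.5, 0.5, 0.0]]   # output channel 1 averages input channels 0 and 1
b = [0.0, 1.0]
y = conv1x1(x, w, b)

# Parameter count for the 512 -> 96 case, assuming one bias per output channel.
bottleneck_params = 512 * 96 + 96
```

The spatial size is untouched: only the channel axis is mixed, which is why the layer is a cheap way to shrink the feature dimension before attention.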

Swin Transformer module - To capture global contextual information crucial for accurate skin disease classification, we integrate a Swin Transformer module. The Swin Transformer operates on the feature maps produced by the bottleneck layer, which have a spatial resolution of 14x14 and 96 feature channels. This module consists of two sequential SwinTransformerBlock layers.

Swin Transformer block - Each SwinTransformerBlock implements a shifted window-based multi-head self-attention mechanism. The block comprises several sublayers:

1. Layer Normalization (LN): Applied before the attention mechanism and the MLP to stabilize training.

2. Window-based Multi-Head Self-Attention (W-MSA) / Shifted Window-based Multi-Head Self-Attention (SW-MSA): The core of the Swin Transformer block is the attention mechanism. The input feature map is divided into non-overlapping windows of size 7x7. Within each window, self-attention is computed. For subsequent blocks, a shifted window partitioning scheme is employed to enable cross-window connections, enhancing the ability to model global relationships.

3. DropPath: A form of stochastic depth that randomly drops entire residual connections during training to improve robustness.

4. MLP: A Multi-Layer Perceptron with two linear layers and a GELU (Gaussian Error Linear Unit) activation function in between. The first linear layer expands the feature dimension by a ratio of 4 (as defined by swin_mlp_ratio), and the second linear layer projects it back to the original dimension. Dropout is applied after the GELU activation.

The Swin Transformer module processes the input feature map of size 14x14, maintaining the spatial resolution while refining the feature representations through the attention mechanism. The output of the Swin Transformer module has a shape of (Batch Size, 14*14, 96).
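The effect of regular versus shifted window partitioning on a 14x14 token grid can be sketched as follows. The function names are hypothetical, and the shift of 7 // 2 = 3 positions follows the convention of the Swin Transformer paper [15]; the shift size used in UNetSwinClassifier is not stated here, so it is an assumption. The attention computation itself, and the masking that Swin applies to windows that wrap around the border, are omitted.

```python
def window_partition(grid, window):
    """Split an H x W grid into non-overlapping window x window tiles (row-major order)."""
    h, w = len(grid), len(grid[0])
    tiles = []
    for top in range(0, h, window):
        for left in range(0, w, window):
            tiles.append([grid[y][x]
                          for y in range(top, top + window)
                          for x in range(left, left + window)])
    return tiles


def cyclic_shift(grid, shift):
    """Roll the grid up and left by `shift` positions, wrapping around the edges."""
    h, w = len(grid), len(grid[0])
    return [[grid[(y + shift) % h][(x + shift) % w] for x in range(w)] for y in range(h)]


# Label each of the 14x14 token positions with its (row, col) index.
tokens = [[(y, x) for x in range(14)] for y in range(14)]
regular = window_partition(tokens, 7)                         # W-MSA layout
shifted = window_partition(cyclic_shift(tokens, 7 // 2), 7)   # SW-MSA layout
```

A 14x14 map yields four 7x7 windows of 49 tokens each. Tokens (6, 6) and (7, 7) sit in different regular windows and so never attend to each other in a W-MSA block, but they share a window after the shift, which is how information crosses window boundaries.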

Final classification layers - The final stage of the architecture is responsible for mapping the learned features to the classification output. This stage consists of the following layers:

1. Layer Normalization: Another Layer Normalization layer is applied to the output of the Swin Transformer module.

2. Adaptive Average Pooling: An Adaptive Average Pooling layer with an output size of 1 is used to reduce the spatial dimensions of the feature map. The input tensor of shape (Batch Size, 14*14, 96) is reshaped to (Batch Size, 96, 14*14) before being passed to this layer, resulting in an output of shape (Batch Size, 96, 1). This effectively performs global average pooling across the spatial dimensions.

3. Linear Layer (Classification Head): A final Linear layer with an input size of 96 and an output size of 7 (corresponding to the number of skin disease classes) is used to perform the classification. This layer outputs the logits for each class.
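The pooling and linear steps of the head can be traced for a single sample in plain Python. This is only a shape-level sketch: the token values, the zero weights, and the bias values are dummy data chosen so the result is easy to verify, and the function names are hypothetical.

```python
def global_average_pool(tokens):
    """tokens: N vectors of length C -> one vector of length C (mean over the N positions)."""
    n = len(tokens)
    return [sum(t[c] for t in tokens) / n for c in range(len(tokens[0]))]


def linear(vec, weights, bias):
    """weights[k][c], bias[k] -> one logit per class k."""
    return [b + sum(w_c * v for w_c, v in zip(row, vec)) for row, b in zip(weights, bias)]


NUM_TOKENS, CHANNELS, NUM_CLASSES = 14 * 14, 96, 7

# Dummy Swin output for one sample: every token carries the vector [0, 1, ..., 95].
tokens = [[float(c) for c in range(CHANNELS)] for _ in range(NUM_TOKENS)]
pooled = global_average_pool(tokens)                         # (196, 96) -> (96,)

weights = [[0.0] * CHANNELS for _ in range(NUM_CLASSES)]     # dummy weights
bias = [float(k) for k in range(NUM_CLASSES)]                # dummy biases
logits = linear(pooled, weights, bias)                       # (96,) -> (7,)
```

Averaging over the 196 positions removes the spatial axis entirely, so the classifier sees one 96-dimensional descriptor per image regardless of where in the image the lesion appears.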

The overall forward pass of the UNetSwinClassifier takes an input image of size (Batch Size, 3, 224, 224) and produces an output tensor of shape (Batch Size, 7) representing the classification logits for the seven different skin disease classes. These logits are then typically passed through a Softmax function to obtain class probabilities for prediction. This detailed description provides a comprehensive overview of the proposed UNetSwinClassifier architecture, outlining the function and mathematical formulations of its key components. This methodology aims to effectively leverage both local and global feature extraction for accurate skin disease classification.
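Converting the seven logits into class probabilities can be sketched as below. The logit values are made up for illustration, and the ordering of the class abbreviations is an assumption (it follows the order in which the dataset classes are listed, which need not match the model's label encoding).

```python
import math

CLASSES = ["akiec", "bcc", "bkl", "df", "mel", "nv", "vasc"]  # assumed label order


def softmax(logits):
    """Exponentiate and normalize; subtracting the maximum avoids overflow."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [0.2, -1.0, 0.5, -0.3, 2.1, 1.4, -2.0]  # made-up model output for one image
probs = softmax(logits)
predicted = CLASSES[probs.index(max(probs))]
```

Because softmax is monotonic, the predicted class is the one with the largest logit; the probabilities are mainly useful for reporting confidence to the user.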

Accuracy: Percentage of correctly detected diseases.

Latency: Time taken from image capture/upload to result display.

Precision and Recall: Used to evaluate the model's ability to detect the correct disease without false positives or negatives.

Scalability: Ability to handle multiple users and real-time requests without a significant drop in performance.

Table -1: Performance Metrics
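The accuracy, precision, and recall metrics listed above can be computed from predicted and true labels as in the following sketch. The label lists are invented for illustration and are not results from this study; the function names are hypothetical.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall(y_true, y_pred, label):
    """Per-class precision TP/(TP+FP) and recall TP/(TP+FN)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Invented labels for six images (not results from the paper).
y_true = ["mel", "nv", "nv", "bcc", "mel", "nv"]
y_pred = ["mel", "nv", "mel", "bcc", "nv", "nv"]

acc = accuracy(y_true, y_pred)
mel_precision, mel_recall = precision_recall(y_true, y_pred, "mel")
```

On an imbalanced dataset, per-class precision and recall are more informative than overall accuracy, since a model can score well on accuracy while missing most cases of a rare class such as melanoma.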

1. Integration of a Disease Progression Tracking System: Future work could focus on developing a system to track the progression of skin diseases over time. This would involve incorporating longitudinal data analysis capabilities, potentially allowing users to monitor changes in their condition based on sequential image analysis and other relevant information.

2. Enhancing Model Accuracy and Robustness: Further research will aim to improve the classification accuracy and robustness of the proposed model. This could involve exploring advanced architectures, incorporating attention mechanisms more deeply, leveraging larger and more diverse datasets, and investigating techniques for handling class imbalance and improving generalization to unseen data.

[1] Gabriela Lladó Grove, Gorm Reedtz, Brian Vangsgaard, Hassan Eskandarani, Merete Haedersdal, Flemming Andersen, and Peter Bjerring. 2024. “Artificial Intelligence Smartphone Application for Detection of Simulated Skin Changes: An In Vivo Pilot Study.” Skin Research and Technology 30(10): e70056. DOI: 10.1111/srt.70056.

[2] Kavita Behara, Ernest Bhero, and John Terhile Agee. 2024. “AI in Dermatology: A Comprehensive Review into Skin Cancer Detection.” PeerJ Computer Science 10: e2530. DOI: 10.7717/peerj-cs.2530.

[3] Iren Valova, Peter Dinh, and Natacha Gueorguieva. 2023. “Mobile Application for Skin Cancer Classification Using Deep Learning.” Journal of Machine Intelligence and Data Science (JMIDS) 4: 15–26. DOI: 10.11159/jmids.2023.003.

[4] Sangers, T., Reeder, S., van der Vet, S., Jhingoer, S., Mooyaart, A., Siegel, D. M., Nijsten, T., & Wakkee, M. (2022). Validation of a Market-Approved Artificial Intelligence Mobile Health App for Skin Cancer Screening: A Prospective Multicenter Diagnostic Accuracy Study. Dermatology, 238(4), 649–656.

[5] Sun, M. D., Kentley, J., Mehta, P., Dusza, S., Halpern, A., & Rotemberg, V. (n.d.). Accuracy of commercially available smartphone applications for the detection of melanoma. Br J Dermatol., 186(4), 744–746.

[6] Panagiotis Papachristou, My Söderholm, Jon Pallon, Marina Taloyan, Sam Polesie, John Paoli, Chris D Anderson, and Magnus Falk. 2024. “Evaluation of an artificial intelligence-based decision support for the detection of cutaneous melanoma in primary care: a prospective real-life clinical trial.” British Journal of Dermatology 191(1). DOI: 10.1093/bjd/ljae021.

[7] Abdulrahman Takiddin, Jens Schneider, Yin Yang, Alaa Abd-Alrazaq, and Mowafa Househ. 2021. “Artificial Intelligence for Skin Cancer Detection: Scoping Review.” Journal of Medical Internet Research 23(11): e22934. DOI: 10.2196/22934.

[8] Yinhao Wu, Bin Chen, An Zeng, Dan Pan, Ruixuan Wang, and Shen Zhao. 2022. “Skin Cancer Classification With Deep Learning: A Systematic Review.” Frontiers in Oncology 12: 893972. DOI: 10.3389/fonc.2022.893972.

[9] Mohamed A. Kassem, Khalid M. Hosny, Robertas Damasevicius, and Mohamed Meselhy Eltoukhy. 2021. “Machine Learning and Deep Learning Methods for Skin Lesion Classification and Diagnosis: A Systematic Review.” Diagnostics 11(8): 1390. DOI: 10.3390/diagnostics11081390.

[10] Md Sirajul Islam and Sanjeev Panta. 2024. “Skin Cancer Images Classification using Transfer Learning Techniques.” arXiv:2406.12954v1.

[11] Muhammad Bilal Jan, Muhammad Rashid, Raja Vavekanand, and Vijay Singh. 2025. “Integrating Explainable AI for Skin Lesion Classifications: A Systematic Literature Review.” Studies in Medical and Health Sciences 2(1): 1–14. DOI: 10.48185/smhs.v2i1.1422.

[12] K. Srilatha and N. Satheesh Kumar. 2024. “Explainable AI Framework for Skin Cancer Classification, and Melanoma Segmentation.” ARPN Journal of Engineering and Applied Sciences 19(15): 1819–6608.

[13] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. “U-Net: Convolutional Networks for Biomedical Image Segmentation.” In Medical Image Computing and Computer-Assisted Intervention (MICCAI), edited by Nassir Navab, Joerg Hornegger, William M. Wells, and Alejandro F. Frangi, 234–41. Cham: Springer International Publishing. arXiv:1505.04597v1.

[14] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, and Neil Houlsby. 2021. “An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale.” In International Conference on Learning Representations. arXiv:2010.11929v2.

[15] Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. 2021. “Swin Transformer: Hierarchical Vision Transformer using Shifted Windows.” arXiv:2103.14030v2.

[16] Pengsong Jiang, Wufeng Liu, Feihu Wang, and Renjie Wei. 2025. “Hybrid U-Net Model with Visual Transformers for Enhanced Multi-Organ Medical Image Segmentation.” Information 16(2): 111. DOI: 10.3390/info16020111.

[17] Ovais Iqbal Shah, Danish Raza Rizvi, and Aqib Nazir Mir. MAPUNetR: A Hybrid Vision Transformer and U-Net Architecture for Efficient and Interpretable Medical Image Segmentation. Department of Computer Engineering, Jamia Millia Islamia (A Central University), New Delhi.

[18] Li, X., Zhu, W., Dong, X., Dumitrascu, O. M., & Wang, Y. (2024). EVIT-UNET: U-NET LIKE EFFICIENT VISION TRANSFORMER FOR MEDICAL IMAGE SEGMENTATION ON MOBILE AND EDGE DEVICES (arXiv:2410.15036v1).