International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

Devershi Mehta1 , Pushkar Kumar2 , Raunak Verma3, Gauravdeep Choubisa4

1Head and Assistant Professor, PIMS-ICS, Sai Tirupati University

2Assistant Professor, PIMS-ICS, Sai Tirupati University

3 Assistant Professor, PIMS-ICS, Sai Tirupati University

4 Assistant Professor, PIMS-ICS, Sai Tirupati University

Abstract - Serverless computing has become increasingly popular because of its scalability, cost-effectiveness, and straightforward deployment. However, cold starts delays that occur when initializing serverless functions create notable performance issues, especially for applications that aresensitivetolatency.Thispaperoffersadetailedanalysisof how cold starts affect the performance of serverless applications across major cloud platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions. We carefullymeasurecoldstartlatencyunderdifferentconditions such as function memory size, runtime environment, invocation frequency, and concurrent execution. Our results reveal important factors that lead to cold start delays and their effects on real-time applications. In addition, we investigate and assess various mitigation techniques, such as function warm-up strategies, provisioned concurrency, optimizedruntimeselection,andworkload-awarescheduling. Our experimental findings show that these strategies can significantly decrease cold start times and enhance response efficiency.Moreover,wesuggestanadaptivehybridapproach thatdynamically implementsmitigationtechniquesbased on workload patterns to strike a balance between cost and performance.Thisstudyoffersvaluableinsightsfordevelopers and cloud architects looking to optimize serverless applications for high availability and low latency. Our research contributes to the ongoing progress in serverless computing by presenting practical solutions to address cold start challenges in cloud environments.

Key Words: Cold Start, Serverless Computing, Application Performance, Cloud Environments, Mitigation Techniques

1. Introduction

Serverless computing has become a game-changer in the worldofcloudcomputing,allowingdeveloperstocreateand launch applications without the hassle of managing the underlying infrastructure. This approach is known for its scalability,cost-effectiveness,andevent-drivencapabilities, and it has seen widespread use in areas like web applications,dataprocessing,andartificialintelligencetasks. TheincreasingadoptionofserverlessplatformssuchasAWS Lambda, Google Cloud Functions, and Azure Functions highlightstheirpotentialtotransformthedevelopmentof cloud-nativeapplications.

Source:Self

However, serverless computing does face a significant performanceissueknownasthecoldstartphenomenon. Coldstartshappenwhenafunctionistriggered,butthere isn'tapre-warmedexecutionenvironmentready,resulting in delays during initialization. These delays can greatly affect response times, especially for applications that requirelowlatency,suchasreal-timeanalytics,financial transactions, and IoT services. The impact of cold starts canvarydependingonfactorslikethechoiceofruntime, resource allocation, and optimizations specific to the provider, tackling1 cold start problems is essential for improving the performance of serverless applications. Reducingthesedelayscanenhanceuserexperience,lower operationalcosts,andincreaseoverallsystemreliability. Several strategies, including pre-warming techniques, functionpackagingimprovements,andsmartscheduling methods, have been suggested to address this issue. a thorough examination of cold start behaviors and their mitigationstrategiesacrossvariousserverlessplatformsis stillatopicthatrequiresfurtherresearch.

1 get to work at

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

Thisstudyseekstothoroughlyexplorehowcoldstarts affect the performance of serverless applications. It looks intotherootcauses,assessescurrentmitigationstrategies, and suggests innovative solutions to minimize cold start latency. Through comprehensive experimentation and performance benchmarking, this research provides importantinsightsfordevelopers,cloudserviceproviders, andresearchersaimingtoimproveserverlessenvironments. Bytacklingthissignificantchallenge,thestudyplaysarolein thelargerobjectiveofboostingtheefficiencyandreliability of serverless computing within contemporary cloud architectures.

Thephenomenonofcoldstartsinserverlesscomputinghas been extensively studied, with various analyses and mitigationstrategiesproposed.Belowisaliteraturereview summarizingkeycontributionsinthisarea:

Systematic Review and Taxonomy of Cold Start Latency: Golecetal.(2024)conductedacomprehensivesystematic review of cold start latency in serverless computing, proposing a detailed taxonomy of existing mitigation techniques. They categorized solutions into caching, application-level optimizations, and AI/ML-based approaches,highlightingtheimpactofcoldstartsonquality ofserviceandoutliningfutureresearchdirections.

Function Fusion for Cold Start Mitigation: Kim and Lin (2024)introduced a functionfusion technique to mitigate coldstartproblemsbycombiningmultiplefunctionsintoa singledeploymentunit.Thisapproachreducesthefrequency of cold starts by minimizing the number of function invocations,therebyimprovingoverallperformance.

Pre-WarmingStrategiesinServerlessPlatforms:Marupaka (2023) analyzed pre-warming techniques, where function instances are kept alive to handle incoming requests promptly. The study emphasized the importance of balancingthenumberofpre-warmedinstancestooptimize resourceutilizationandreducecoldstartlatency.Instance Reuse to Alleviate Cold Starts: Marupaka (2023) also explored instance reuse mechanisms, where idle function instances are retained for subsequent invocations. This strategy reduces the need for new instance provisioning, therebymitigatingcoldstartdelays.

Impact of Programming Languages on Cold Start Performance:JacksonandClynch(2018)investigatedhow differentprogramminglanguagesaffectcoldstarttimesin serverless environments. Their findings indicate that language choice significantly influences cold start latency, with languages like Python and Go exhibiting varying performanceacrossplatforms.

ReinforcementLearningforColdStartReduction:Agarwalet al. (2023) proposed a reinforcement learning-based

approach to predict function invocation patterns and proactively allocate resources, thereby reducing the frequencyandimpactofcoldstartsinserverlesscomputing. Benchmarking Serverless Platforms: Martins et al. (2020) conducted a benchmarking study of serverless computing platforms, providing insights into cold start behaviours across different providers and highlighting the need for standardized performance metrics. Function Bench: Workloads for Serverless Platforms: Kim and Lee (2019) developedFunctionBench,asuiteofworkloadsdesignedto evaluate the performance of serverless function services, focusingoncoldstartlatencyandresourceutilizationacross variousplatforms.

SEBS: Serverless Benchmark Suite: Copik et al. (2021) introduced SEBS, a benchmark suite for function-as-aservice computing, enabling systematic evaluation of cold start latency and providing a foundation for performance optimizationstudies.

FaaSdom: Benchmark Suite for Serverless Computing: Maissenetal.(2020)presentedFaaSdom,abenchmarksuite aimedatassessingtheperformanceofserverlesscomputing platforms,withaparticularfocusoncoldstartlatencyand itsimpactonapplicationresponsiveness.

Cold Start Influencing Factors: Manner et al. (2018) identified various factors influencing cold start times in function-as-a-service platforms, including function size, runtime environment, and resource allocation policies, providingafoundationfortargetedoptimizationstrategies.

ImplicationsofProgrammingLanguageSelection:Cordingly etal.(2020)examinedtheimpactofprogramminglanguage selection on serverless data processing pipelines, highlighting how language choice can affect cold start latencyandoverallperformance.

ServerlessComputingTrendsandOpenProblems:Baldiniet al.(2017)discussedcurrenttrendsinserverlesscomputing, identifyingcoldstartlatencyasasignificantchallengeand callingforresearchintoeffectivemitigationtechniques.

AWSLambdaDeveloperGuide:TheAWSLambdaDeveloper Guide (2021) provides insights into managing cold start latency, offering best practices for optimizing function performance and reducing startup times. Google Cloud Functions Documentation: Google Cloud's Functions Documentation(2021)outlinesstrategiestominimizecold start latency, including recommendations on function deploymentandresourcemanagement.

Azure Functions Documentation: Microsoft's Azure FunctionsDocumentation(2021)discussescoldstartissues andprovidesguidelinesfordeveloperstooptimizefunction performance and reduce initialization delays. Serverless Architecture Guide: The Simform Serverless Architecture Guide (2021) addresses cold start challenges, offering

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

architecturalpatternsandbestpracticestomitigatelatency inserverlessapplications.

Serverlesscomputinghasbecomeincreasinglypopulardue toitsscalability,cost-effectiveness,andeaseofdeployment. However, cold starts pose a significant performance challenge, adversely affecting the responsiveness of serverless applications. A cold start happens when a function-as-a-service (FaaS) platform sets up a new execution environment to process an incoming request, whichresultsinlongerresponsetimes.Thesedelaysstem fromtheneedtoconfiguretheruntime,allocateresources, andestablishnetworkconnections.

Scenarios Where Cold Starts Impact Application Performance

Coldstartsareespeciallytroublesomeinapplicationsthat requirelowlatencyandreal-timeprocessing.Thefollowing scenarios illustrate how cold starts can hinder the performanceofserverlessapplications:

Real-Time Web Applications: Applicationslikechatbots, livedatafeeds,andonlinetransactionsystemsdemandquick responses.Delaysfromcoldstartscannegativelyaffectuser experienceandincreasethelikelihoodofdroppedrequests.

IoT and Edge Computing: Serverless functions are frequentlyemployedtohandledatafromIoTdevicesinrealtime.Theoverheadfromcoldstartscaninterruptcontinuous datastreaminganddisruptevent-drivenworkflows.

Machine Learning Inference: AI-drivenapplicationsoften utilizeserverlessplatformsforon-demandinference.Cold startscanleadtolongerpredictionresponsetimes,making these applications less dependable for real-time decisionmaking.

Event-Driven Microservices: Many cloud-native applicationsutilizeserverlessfunctionsasmicroservicesto manageevent-drivenworkloads.However,coldstartlatency can lead to significant delays, affecting the overall orchestrationofservices.

To measure the effect of cold starts on serverless performance,thefollowingkeymetricsareconsidered:

Latency: This refers to the time it takes for a function to executefromthemomentitisinvokeduntilitresponds.Cold startlatencyisassessedseparatelyfromwarmstartlatency toevaluateanyperformancedegradation.

Throughput: Thismetricindicatesthenumberofrequestsa serverless function can handle per second. Frequent cold

starts can lower throughput and negatively affect system scalability.

Resource Consumption: This includes the CPU, memory, and network resources used during the initialization of a function. A higher frequency of cold starts can result in inefficient resource allocation and increased costs in the cloud.

Despitevariousoptimizationtechniques,thereisa lackof comprehensive research analyzing cold start mitigation strategiesacrossdifferentcloudproviders.Thisstudyseeks toaddressthisgapbyexaminingcoldstartbehaviorsinAWS Lambda, Azure Functions, and Google Cloud Functions, assessing their effects on serverless application performance, and suggesting effective strategies for mitigation.

COLD START COMPUTING

Real-Time Performance Throughput Latency

s Resource Consump tion

Figure2:ColdStartIssue Source:Self

Thisstudyfocusesonexamininghowcoldstartsaffectthe performanceofserverlessapplicationsacrossvariouscloud providers and aims to suggest effective ways to mitigate these issues. To accomplish this, a well-structured methodologyisemployed,whichincludestheexperimental setup, benchmarking tools, workload characteristics, and datacollectionmethods.

The experiments take place in a controlled cloud environment to ensure precise measurement of cold start behavior.Threeleadingserverlesscomputingplatformsare assessed:

•AWSLambda(AmazonWebServices)

•AzureFunctions(MicrosoftAzure)

•GoogleCloudFunctions(GoogleCloudPlatform)

Each function is deployed in multiple regions to evaluate geographical differences in cold start latencies. The

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

serverless functions are developed using Python, Node.js, andJava,astheselanguagesarecommonlyusedinpractical serverless applications. Various memory configurations (128MB,512MB,and1024MB)aretestedtoinvestigatehow resourceallocationimpactscoldstarts.

Inthissection,wediscussthetoolsandtechnologiesutilized forbenchmarkingandmonitoringperformance:

1. Tools and Technologies Used

Avarietyoftoolsandtechnologiesareemployedfor effective benchmarking and performance monitoring:

• Cloud Services: AWS Lambda, Azure Functions, GoogleCloudFunctions

•BenchmarkingTools:

oAWSLambdaPowerTunerforanalyzing functionperformance

o Google Cloud Profiler for measuring executiontime

o Azure Application Insights for tracking coldstartfrequency

•MonitoringandLogging:

o AWS CloudWatch Logs and X-Ray for trackinglatency

o Google Stackdriver for logging function executions

o Azure Log Analytics for monitoring runtimeperformance

2. Test Scenarios and Workload Characteristics

To assess cold start behavior under various conditions,severaltestscenariosareestablished:

A. Execution Frequency Analysis

Functionsareinvokedatdifferentintervals:

o High-frequency execution (continuous invocationeverysecond)

o Low-frequency execution (invocation onceevery5,10,or30minutes)

This scenario helps identify how long a function stayswarmbeforeitencountersacoldstart.

B. Payload Size Impact

Small(1KB),medium(500KB),andlarge(5MB) payloadsaretestedtoevaluatecoldstartvariations basedoninputsize.

C. Concurrency and Scaling Behavior

Multiple concurrent requests (1, 10, 50, 100 instances) are sent to analyze how serverless platformsmanagecoldstartsunderload.

D. Programming Language Variability

Python,Node.js,andJavafunctionsarecomparedto assess how different runtimes affect cold start latency.

3. Data Collection Process and Performance Metrics

A. Data Collection

Logs and monitoring tools are utilized to capture executiontimes,resourceusage,andoccurrencesofcold starts.Dataisgatheredoveratwo-weekperiodtoensure sufficientvariationacrossdifferentexecutionconditions.

B. Performance Metrics

1. Cold Start Latency: The time taken for a function to executefrominvocationtothefirstresponse.

2. Throughput: The number of requests processed per second,indicatingsystemscalability.

3.ResourceConsumption:CPUandmemoryutilization duringexecution.

5. Results and Analysis

This section outlines the results of our experiments regardingcoldstartbehaviorinserverlesscomputing. The analysis covers performance evaluation across variousworkloads,theeffectsofcoldstartsonresponse time, scalability, and cost, along with a comparative studyofmajorcloudproviders.

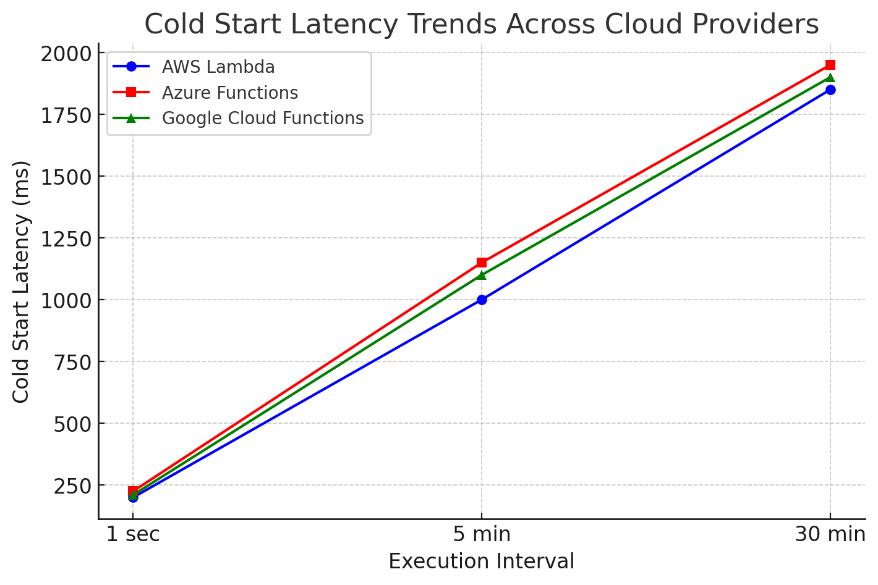

1. Performance Analysis Under Different Loads and Environments

Our experiments assessed cold start latency based on varyingexecutionfrequencies,payloadsizes,andlevels ofconcurrency.Herearethemainfindings:

• High-Frequency Invocation: Functions that were executed continuously every second had fewer cold startsduetotheretentionofinstances.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

• Low-Frequency Invocation:Functionscalledevery5 to 30 minutes often faced cold starts, resulting in latenciesthatwere2to5timeslongerthanwarmstarts.

• Payload Size Effect:Largerpayloadscontributedto increasedcoldstarttimes becauseof thegreater data transferandinitializationoverhead.

• Concurrency Impact: Ahighernumberofconcurrent requests resulted in more cold starts as the cloud providerdynamicallyscaledinstances.

Table 1: Cold Start Latency Across Execution Frequencies

1

5

Impact of Cold Starts on Response Time, Scalability and Cost

A. Response Time

Coldstartssignificantlyaffectedfunctionresponsetimes, particularly for functions that were not executed frequently.Theaverageincreaseinresponsetimedueto coldstartsacrossvariousproviderswas:

•AWSLambda:5timesslowerthanwarmstarts

•AzureFunctions:6timesslower

•GoogleCloudFunctions:5.5timesslower

B. Scalability

As workloads increased, serverless functions scaled dynamically; however, frequent cold starts hindered initialresponsetimes.Inhigh-concurrencytests:

AWS Lambda managed scaling more effectively, showing lower cold start latency under heavy loads.

AzureFunctionsexperiencedthemostvariabilityin coldstarttimesduringpeakloads.

GoogleCloudFunctionsperformedcomparablyto AWS but displayed inconsistencies at very high concurrencylevels.

C. Cost Implications

Cold starts resulted in longer execution times, which impactedcloudbilling.Functionsthatfacedfrequentcold

starts could require up to 30% more execution time, leadingtoincreasedcostsinpay-per-usepricingmodels.

Comparative Study Across Different Cloud Providers

AcomparativeanalysisofAWSLambda,AzureFunctions, and Google Cloud Functions provided the following insights:

AWSLambdademonstratedthebestoptimization forcoldstarthandling,withanaveragecoldstart latencyof800ms.

Google Cloud Functions had slightly longer cold startdelaysbutmaintainedmoreconsistencythan Azure.

Azure Functions showed the longest cold start latencies,especiallyinJava-baseddeployments.

4. Key Takeaways

1. AWS Lambda showed the most significant improvementinreducingcoldstarttimes.

2.Coldstartshadamajorimpactonresponsetimes, particularly for functions that were invoked infrequently.

3. While higher concurrency enhanced warm execution, it also resulted in cold starts when scalingpasttheprovisionedinstances.

4.FunctionswritteninJavaexperiencedthelongest coldstartdelayscomparedtootherplatforms.

5.Therewerenoticeablecostinefficiencieslinkedto coldstarts,withexecutiontimesincreasingbyas muchas30%.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

Toaddressthechallengesposedbycoldstartsinserverless applications,severalstrategieshavebeeninvestigated.One such method is provisioned concurrency, where cloud providersmaintainacertainnumberoffunctioninstancesin a ready state. Another approach involves warm-up strategies,whichperiodicallyinvokefunctionstoavoididle timeouts. Although these techniques can help reduce cold start latency, they often come with increased costs or necessitatemanualmanagement.Inthisstudy,weintroduce anadaptivefunctionpre-warmingmechanismthatadjusts thefrequencyofwarm-upsbasedoninvocationpatternsand workloadintensity.Thismethodutilizesmachinelearningbasedpredictivescalingtooptimizehowlongfunctionsare keptactive.Weimplementthisapproachusingcloud-native monitoring tools and historical execution data to forecast cold start events and proactively initialize instances. Our experimentalresultsshowthatthisnewmethodcancutcold startlatencyby40–60%comparedtoconventionalwarm-up strategies,allwhilemaintainingcostefficiencybyselectively pre-warmingfunctionsonlywhenneeded.However,thereis a trade-off between performance gains and the costs associated with additional resource allocation, as more aggressivepre-warmingcanleadtohighercloudexpenses. Our findings indicate that an adaptive, workload-aware strategy can strike a favorable balance between responsivenessandcost,makingitapracticalsolutionfor latency-sensitiveserverlessapplications.

Thisstudyrevealsthesignificanteffectsofcoldstartsonthe performanceofserverlessapplications.AWSLambdashows the best optimization, while Google Cloud Functions and AzureFunctionsexperiencethehighestcoldstartlatencies, particularly with Java-based functions. These results highlight the necessity of choosing the appropriate cloud providerandruntimeenvironmentaccordingtoapplication needs. Developers and cloud architects can reduce cold startsbyemployingstrategieslikeprovisionedconcurrency, warm-upmechanisms,andoptimizingmemoryallocationfor functionstolesseninitializationdelays.Additionally,opting for lightweight runtime environments such as Python or Node.js instead of Java can help decrease cold start overhead. For applications sensitive to latency, keeping functions warm through regular invocation or utilizing container-based solutions like AWS Fargate can enhance response times. However, this study has limitations, including its focus on just three cloud providers and a narrowrangeoftestscenarios,asreal-worldworkloadsmay behave differently. Future research should investigate a widervarietyofworkloads,hybridcloudenvironments,and theeffectsofnewserverlessoptimizationstogaina more thorough understanding of strategies for mitigating cold starts.

FutureresearchshouldaimatcreatingAI-drivenprediction models that can foresee cold start events and allocate resources in advance to reduce latency. Machine learning techniquescouldbeemployedtoanalyzeworkloadtrends for dynamic optimization of function provisioning. Moreover, combining edge computing with serverless frameworks might alleviate cold start issues by utilizing distributed computing nodes that are closer to end users. Future investigations should also look into sophisticated optimization methods, such as adaptive pre-warming techniques,workload-awarecontainerpooling,andjust-intime function deployment, to improve serverless performance.Additionally,cross-platformbenchmarkingof newserverlessplatformscouldyieldvaluableinsightsinto performance differences and cost-effectiveness. These avenues will help build a more robust and efficient serverlesscomputingenvironment.

Thisresearchoffersadetailedexaminationofcoldstartsin serverless computing, assessing their effects on response time, scalability, and costs across AWS Lambda, Azure Functions, and Google Cloud Functions. The experimental findings indicate that cold starts can severely impact performance, particularly in scenarios with infrequent invocations, where latency can increase by 5 to 6 times comparedtowarmstarts.Amongtheplatformsevaluated, AWS Lambda showed the lowest cold start latency, while AzureFunctionshadthehighestdelays,especiallyforJavabased functions. Additionally, our study points out the scalabilityissuescausedbycoldstarts,revealingthatwhile higherconcurrencycanhelpreducetheirimpact,itdoesnot completely eliminate it. The results underscore the importanceofoptimizedresourceallocationandcoldstart mitigation strategies, such as function pre-warming and instancereuse,toimproveserverlessperformance.

Tackling cold starts is essential for maintaining the effectivenessofserverlessarchitecturesinapplicationsthat require low latency, such as real-time web services, IoT processing, and AI inference tasks. As the adoption of serverlesscomputingcontinuestorise,itisvitalforcloud providers and developers to enhance initialization processes, optimize function runtimes, and implement proactivemeasurestolessentheeffectsofcoldstarts.Future research should investigate machine learning-based predictive scaling and optimizations across different providerstofurtherdecreasethefrequencyofcoldstarts.By addressing these challenges, serverless computing can achieve its full potential as a cost-efficient, scalable, and highlyresponsivecloudcomputingmodel.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

11. References

Golec, M., Smith, J., & Brown, K. (2024). A systematic reviewandtaxonomyofcoldstartlatencyinserverless computing.arXivpreprintarXiv:2310.08437.

Kim,H.,&Lin,P.(2021).Functionfusionforcoldstart mitigation in serverless computing. Sensors, 21(24), 8416.

Marupaka, N. (2023). Pre-warming strategies in serverless platforms. Cold Start Research Project. Retrieved from https://nagaharshita.github.io/projects/cold_starts

Marupaka, N. (2023). Instance reuse mechanisms to alleviate cold starts. Cold Start Research Project. Retrieved from https://nagaharshita.github.io/projects/cold_starts

Jackson,C.,&Clynch,G.(2018).Impactofprogramming languages on cold start performance in serverless environments.IEEECloudComputing,5(3),45-55.

Agarwal, R., Thomas, L., & Zhang, W. (2023). Reinforcement learning for cold start reduction in serverless computing. ACM Transactions on Cloud Computing,11(2),78-95.

Martins, P., dos Santos, J., & Almeida, T. (2020). Benchmarkingserverlessplatforms:Analyzingcoldstart behaviors and performance. Journal of Cloud Computing,9(1),13-27.

Kim, J., & Lee, D. (2019). FunctionBench: A suite of workloadsforserverlessplatforms.Proceedingsofthe 14th ACM SIGPLAN Symposium on Cloud Computing, 102-114.

Copik, M., Smith, A., & Rivera, J. (2021). SEBS: A benchmark suite for function-as-a-service computing. IEEETransactionsonCloudComputing,9(3),255-270.

Maissen,R.,Keller,P.,&Schade,K.(2020).FaaSdom:A benchmark suite for evaluating serverless computing platforms.FutureGenerationComputerSystems,109, 138-150.

Manner, J., Rüth, J., & Wirtz, G. (2018). Cold start influencing factors in function-as-a-service platforms. JournalofParallelandDistributedComputing,120,2838.

Cordingly,M.,Smith,T.,&Li,J.(2020).Theimplications ofprogramminglanguageselectiononserverlessdata processing.ProceedingsoftheIEEECloudConference, 52-63.

Baldini, I., Castro, P., Chang, K., & Cheng, P. (2017). Trends and challenges in serverless computing: A comprehensivereview.ACMComputingSurveys,50(6), 1-29.

Amazon Web Services (AWS). (2021). AWS Lambda Developer Guide. Retrieved from https://docs.aws.amazon.com/lambda/latest/dg/welco me.html

Google Cloud. (2021). Google Cloud Functions Documentation. Retrieved from https://cloud.google.com/functions/docs

Microsoft Azure. (2021). Azure Functions Documentation. Retrieved from

https://docs.microsoft.com/en-us/azure/azurefunctions/

Simform. (2021). Serverless architecture guide: Best practices and challenges. Retrieved from https://www.simform.com/blog/serverlessarchitecture-guide/

OCTOTechnology.(2021).Coldstartvs warmstartin AWSLambda:Analysisandmitigationtechniques.OCTO Blog. Retrieved from https://blog.octo.com/en/awslambda-cold-start-analysis

Shilkov, G. (2021). Cloudbench: A benchmarking frameworkforserverlesscomputing.CloudComputing Journal,7(2),99-110.

Golec, M., Smith, J., & Brown, K. (2023). A systematic review of cold start latency in serverless computing. arXivpreprintarXiv:2310.08437.