International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 02 | Feb 2025 www.irjet.net p-ISSN: 2395-0072

Sahil Vartak1 , Yashyashsvi Singh2 , Roshaun Dsouza3, Saakshi Bagal4 , Varsha Shrivastava5

1UG Student, Department of Computer Engineering, St. Francis Institute of Technology

2UG Student, Department of Computer Engineering, St. Francis Institute of Technology

3UG Student, Department of Computer Engineering, St. Francis Institute of Technology

4UG Student, Department of Computer Engineering, St. Francis Institute of Technology

5Asst. Professor, Department of Computer Engineering, St. Francis Institute of Technology

Abstract - In a world where communication is the lifeblood of connection, those with speech and hearing impairments often find themselves trapped in a profound silence, yearning to be seen and heard. Each day, they navigate a landscape filled with misunderstandings and isolation, their thoughts and emotions swirling within, desperately seeking a bridge to the outside world. The weight of unexpressed feelings and unfulfilled desires creates a heart-wrenching loneliness, as they watch others engage in the very interactions that remain just out of reach. This longing for connection is not merely a desire but a deep-seated need, leaving them to grapple with the emotional toll of being unseen in a world that moves on without them. Their struggle is a poignant reminder of the essential human desire for understanding and the heartbreaking reality of living in silence, where voices go unheard, and connections fade into the shadows. Our project aims to address this critical need by providing an intuitive sign language recognition tool that simplifies the task of interpreting diverse gestures. Through advanced technology, real-time gesture recognition, and a user-friendly interface, our project empowers individuals with hearing or speech impairments to engage more effectively with their surroundings, promoting inclusivity and bridging communication gaps in an increasingly connected world.

Key Words: Sign Language Recognition, Communication Accessibility, Hearing Impairment, Speech Impairment, YOLOv8 etc.

1. INTRODUCTION

Sign languages are intricate and distinct linguistic systems that serve as vital modes of communication for Deaf communities worldwide. These languages transcend mere gestures; they are rich, fully developed languages that encapsulate the cultural, social, and historical contexts of their users. Each sign language reflects the unique experiences and identities of its community, shaped by factors such as geography, history, and social interactions. For instance, American Sign Language (ASL) has evolved within the unique cultural landscape of the United States, influenced by the historical experiences of the Deaf community, including the establishment of schools for the Deaf and the integration of various regional signs.

Similarly, British Sign Language (BSL) embodies the cultural nuances and idiomatic expressions prevalent in the United Kingdom, illustrating how language can serve as a mirror of national identity and social values.

In India, Indian Sign Language (ISL) plays a crucial role in connecting the Deaf community, drawing from the country’s diverse linguistic and cultural heritage. ISL is characterized by its own grammatical structures and vocabulary, which are distinct from both ASL and BSL, highlighting the complexity and richness of sign languages. Each of these sign languages not only facilitates communication but also fosters a sense of belonging and identity among their users. This paper will explore the unique characteristics of ASL, BSL, and ISL, examining their grammatical frameworks, regional variations, and the dynamic nature of their evolution. By doing so, it will underscore the importance of sign languages as essential forms of communication that empower Deaf individuals and enrich the cultural tapestry of their respective communities.

Sign language serves as a primary mode of communication for the deaf and mute communities, yet there exists a substantial communication gap between sign language users and the general population due to the lack of accessible and efficient translation systems. Existing methods for automatic sign language recognition (SLR) suffer from significant challenges related to accuracy, real-time processing, and adaptability to diverse signing styles. The complexity of hand gestures, variations in finger positioning, motion speed, and background noise makes it difficult for conventional machine learning and deep learning models to achieve robust recognition in uncontrolled environments. Additionally, occlusions, lighting variations, and differences in signing styles among individuals further complicate gesture interpretation, leading to inconsistent performance across different datasets and real-world applications.

Traditional approaches, including Support Vector Machines (SVM), K-Nearest Neighbors (KNN), and Decision Trees, rely on handcrafted features, which limit their ability to generalize across complex and dynamic gestures. While CNN-based models such as ResNet and MobileNet have improved feature extraction, they demand extensive computational resources and suffer from latency issues, making them impractical for real-time sign language recognition. Similarly, Recurrent Neural Networks (RNNs), including LSTMs and GRUs, attempt to model temporal dependencies in sign sequences but face challenges in handling variable-length input, high training time, and performance degradation over long sequences. Two-stage object detection frameworks like Faster R-CNN provide high accuracy but at the cost of increased computational overhead, making them unsuitable for real-time inference, particularly on edge devices and mobile platforms.

To address these challenges, the development of a fast, efficient, and highly accurate Sign Language Recognition (SLR) system is crucial for bridging the communication gap faced by the deaf and mute communities. This research focuses on overcoming these limitations by utilizing YOLOv8, an advanced object detection model designed for optimized real-time performance. Unlike conventional CNN and RNN-based models, YOLOv8 employs an anchor-free detection approach, a lightweight neural architecture, and enhanced feature extraction capabilities. These innovations enable it to effectively handle dynamic, continuous, and complex sign gestures with minimal delay.

The model's ability to process high-frame-rate video streams ensures smooth real-time translation, while its robust spatial awareness allows accurate detection even under conditions of occlusion and environmental variability. By developing a scalable and deployable SLR system powered by YOLOv8, this research seeks to provide accessible communication tools for the Indian deaf and mute communities, foster social inclusion, and improve access to essential services through real-time gesture-to-speech translation.

A two-stream neural network architecture processes both spatial and temporal data for gesture recognition. The spatial stream identifies hand shapes, while the temporal stream captures motion patterns, distinguishing similar gestures. The Accumulative Video Motion (AVM) technique enhances recognition by analyzing motion across frames, achieving high accuracy in real-time applications [1].

A deep learning framework automates sign language recognition, translation, and video generation. It extracts spatial and temporal features from raw video input, converts recognized signs into text or speech, and animates a virtual human for gesture replication. This end-to-end approach improves real-time communication and educational accessibility [2].

For Indian Sign Language (ISL), techniques like GANs, transfer learning, and ensemble methods improve recognition. GANs generate synthetic images to expand datasets, while models like ResNet-50 and VGG-19 enhance feature extraction. Ensemble methods reduce bias, achieving 92.5% accuracy, advancing ISL recognition for real-time applications [3].

In Turkish Sign Language, YOLOv4-CSP enables real-time static sign classification with minimal preprocessing. Using 1,500 labeled images and data augmentation, it achieves an F1 score of 98.55% and mAP of 99.49%. Its hybrid architecture, inspired by Vision Transformers, optimizes computational efficiency while maintaining robust detection [4].

Sign language recognition systems convert hand gestures into text using segmentation and feature extraction. Built with Python, OpenCV, and TensorFlow, they require quality cameras and 8 GB RAM. Future improvements include facial expression recognition and speech conversion, further bridging communication gaps for the deaf community [5].

A real-time Marathi Sign Language detection system uses MediaPipe for hand tracking and LSTMs for sequence processing, achieving 97.50% accuracy on 37,500 frames of 15 words. This research provides a vital communication tool for Marathi-speaking regions, with future improvements integrating GRU models for enhanced accuracy [6].

Deep learning-driven sign language recognition integrates CNNs, LSTMs, and HMMs to capture spatial and temporal gesture features. Using the PHOENIX dataset for German Sign Language, Transformer-based models achieve 96.6% accuracy. Innovations like attention mechanisms further improve performance, though challenges like user independence remain [7].

AI-powered sign language recognition uses LSTM networks to translate gestures into speech, achieving 85% accuracy. Developed with Python, OpenCV, and TensorFlow, these systems support real-time communication, enhancing accessibility for the deaf and hard-of-hearing communities [8].

A novel video-based sign language recognition approach combines ResNet for spatial features and LSTMs for temporal analysis. Applied to Argentine Sign Language (LSA64), it achieves 85.26% accuracy, improving communication accessibility and enabling real-time translation [9].

A British Sign Language (BSL) recognition system using Histogram of Oriented Gradients (HOG) features with an SVM classifier achieves over 99% accuracy with 170 ms processing time. Tested on 13,066 samples, it offers an efficient, real-time, non-invasive solution for BSL recognition [10].

A smart glove system integrates silk-fibroin-based strain sensors with 1D-CNNs, achieving over 99% accuracy. With 10 sensor nodes in a 3D-printed glove, it enables real-time visual feedback, enhancing accessibility in healthcare, sports, and communication for the hearing-impaired [11].

A vision-based sign language recognition system leverages AI and computer vision using cameras, Kinect, and Leap Motion controllers to process spatial and temporal gestures. Enhanced by preprocessing techniques like histogram equalization, it enables real-time sign translation and accessibility [12].

A deep learning-based ISL recognition system uses a CNN on a custom dataset, achieving 99% accuracy. Preprocessing with HSV color space conversion and data augmentation ensures precise segmentation, making it a scalable solution for real-time ISL translation in education and communication [13].

Table -1: Survey of recent papers

| Methodology | Dataset Used | Performance / Accuracy |
| --- | --- | --- |
| Two-stream CNN-LSTM with AVM [1] | Public gesture datasets | High accuracy in dynamic gestures |
| Deep Learning with CNN, LSTM, and Video Generation [2] | Custom datasets | Superior efficiency over traditional methods |
| GANs (DCGAN, SRGAN), Transfer Learning (ResNet-50, VGG-19), and Ensemble Methods [3] | Indian Sign Language (ISL) | 92.5% recognition accuracy |
| YOLOv4-CSP with Vision Transformer-inspired hybrid model [4] | Turkish Sign Language (TSL) (1,500 labeled images) | F1 score: 98.55%, mAP: 99.49% |
| CNN + Feature Extraction with OpenCV [5] | Multiple sign language datasets | 85% accuracy |
| MediaPipe for hand tracking + LSTM sequence processing [6] | Marathi Sign Language (37,500 frames) | 97.5% accuracy |
| CNN-LSTM-HMM Hybrid with Transformers [7] | PHOENIX Dataset (German SL) | 96.6% accuracy |
| AI-driven SL recognition using LSTM [8] | Custom dataset | 85% accuracy |
| ResNet + LSTM [9] | Argentine Sign Language (LSA64) | 85.26% accuracy, F1: 84.98%, Precision: 87.77% |
| HOG Features + SVM Classifier [10] | British Sign Language (BSL) (13,066 samples) | 99% accuracy, 170 ms latency |
| Smart Glove with 1D-CNN + Silk-Fibroin Strain Sensors [11] | Custom gesture dataset | 99%+ accuracy |
| AI + Computer Vision (Kinect, Leap Motion) [12] | Various SL datasets | High precision |
| CNN with HSV Color Space & Data Augmentation [13] | Indian Sign Language (ISL) | 99% accuracy |

Table-1 summarizes studies in sign language recognition, covering methodologies, datasets, performance metrics, and key contributions. It highlights approaches like CNNs, LSTMs, GAN-based augmentation, transfer learning, and wearable devices, showcasing advancements in accuracy, efficiency, and real-time recognition for improved accessibility and communication.

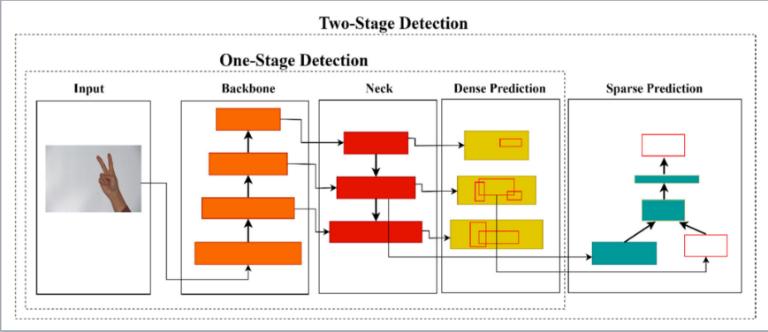

Object detection algorithms are generally classified into two approaches: one-stage and two-stage methods, as illustrated in Fig. 1. Two-stage models, such as Faster R-CNN, incorporate a Region Proposal Network (RPN) to extract potential object regions from an image before classifying them. In contrast, single-stage object detection methods process the entire image in a single step, directly predicting bounding boxes and object categories.

While two-stage algorithms excel in accuracy and localization sensitivity, they are not optimized for real-time detection due to their computational complexity. To address this, the YOLO series introduced a regression-based object detection approach in 2016, achieving real-time performance at 45 frames per second. Despite its speed, YOLO faces challenges in detecting and accurately localizing small objects. The model applies a confidence threshold and uses non-maximum suppression to eliminate overlapping bounding boxes, ensuring the most relevant detection while minimizing redundancy.
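The confidence thresholding and non-maximum suppression step described above can be sketched in a few lines of Python. This is a minimal illustration and not the implementation used in this work: the function names `iou` and `nms` and the default thresholds of 0.25 and 0.45 are assumptions chosen for the example.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        conf_thresh: float = 0.25, iou_thresh: float = 0.45) -> List[int]:
    """Return indices of boxes kept after thresholding and greedy NMS.

    The threshold defaults are illustrative assumptions, not values from the paper.
    """
    # 1. Drop low-confidence detections.
    candidates = [i for i, s in enumerate(scores) if s >= conf_thresh]
    # 2. Visit the remaining boxes from most to least confident.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in candidates:
        # 3. Keep a box only if it does not overlap too much with a kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60), (0, 0, 5, 5)]
scores = [0.9, 0.8, 0.7, 0.1]
print(nms(boxes, scores))
```

In this toy input the second box overlaps the first heavily and is suppressed, and the fourth box falls below the confidence threshold, so only the first and third boxes survive.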

Fig -1: General architecture of object detection algorithm, referred from [4]

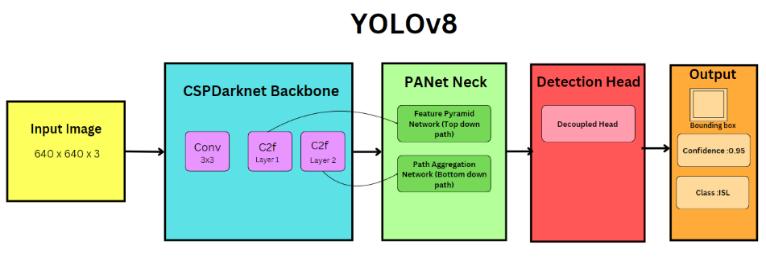

Our proposed solution leverages YOLOv8’s advanced architecture with significant enhancements specifically optimized for Indian Sign Language recognition. YOLOv8 is a state-of-the-art object detection model that was released in 2023. It is a significant improvement over previous versions of YOLO in terms of both accuracy and speed. The system backbone utilizes YOLOv8’s CSP-Darknet structure with optimized feature extraction through convolution channels and cross-stage connections. This is complemented by a modified neck implementing Path Aggregation Network (PANet) with additional skip connections for improved feature map aggregation. The detection head employs multi-scale prediction with anchor-free detection, enabling precise gesture localization with reduced computational overhead.

The technical implementation incorporates several key improvements to the base YOLOv8 architecture, including comprehensive dataset preparation through the Roboflow library. We processed and used an annotated dataset containing 500 ISL gesture images using the Roboflow dataset library. The preprocessing pipeline implements automated features for image standardization, including resolution normalization to 640x640 pixels, orientation correction, and lighting normalization. This robust dataset preparation forms the foundation of our model’s high accuracy in gesture recognition.
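To make the idea of multi-scale, anchor-free prediction concrete, the sketch below decodes one grid-cell prediction into a pixel-space box. It follows the general anchor-free formulation, in which each cell predicts distances from its centre to the four box sides; the paper does not describe this step, so the function name `decode_anchor_free` and the sample values are hypothetical. The strides 8, 16, and 32 are the usual YOLOv8 detection scales.

```python
from typing import Dict, Tuple

IMG_SIZE = 640            # input resolution used in this work
STRIDES = (8, 16, 32)     # usual YOLOv8 detection scales

# Each scale divides the image into a grid of IMG_SIZE // stride cells per side.
grid_sizes: Dict[int, int] = {s: IMG_SIZE // s for s in STRIDES}


def decode_anchor_free(col: int, row: int,
                       distances: Tuple[float, float, float, float],
                       stride: int) -> Tuple[float, float, float, float]:
    """Turn (left, top, right, bottom) distances, given in grid units and
    measured from the cell centre, into an (x1, y1, x2, y2) box in pixels."""
    cx, cy = col + 0.5, row + 0.5          # cell centre in grid units
    left, top, right, bottom = distances
    return ((cx - left) * stride, (cy - top) * stride,
            (cx + right) * stride, (cy + bottom) * stride)


print(grid_sizes)
print(decode_anchor_free(2, 3, (1.0, 1.5, 2.0, 0.5), stride=8))
```

Because no predefined anchor shapes are involved, the head needs only four distance outputs per cell, which is one reason anchor-free heads tend to be lighter than anchor-based ones.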

Theprimaryobjectiveofthisprojectistoaccuratelydetect sign language gestures using the YOLOv8 architecture. A customdatasetcomprisingXclassesofsignswithatotalof N images was utilized, ensuring robustness by capturing

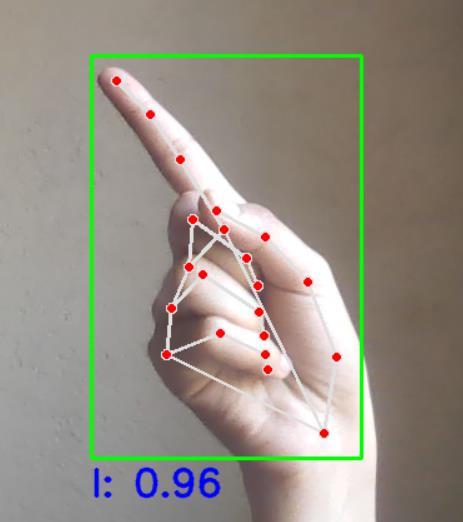

imagesundervaryinglightingconditionsandbackgrounds. YOLOv8 was used for gesture detection, while MediaPipe was employed during real-time inference to draw handtracking key points, enhancing visualization and tracking accuracy.

Before training, images were resized to 640 × 640 pixels to match YOLOv8’s input requirements. Data augmentation techniques such as random flipping, rotation, scaling, and brightness adjustments were applied to improve generalization. The dataset was split into 80% training, 10% validation, and 10% testing.
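The 80/10/10 split and one of the augmentations mentioned above can be sketched as follows. This is an illustration under stated assumptions, not the Roboflow pipeline used in this work: the helper names `split_dataset` and `hflip_label`, the fixed seed, and the file names are hypothetical, and the label layout assumed is the normalized YOLO format `(class, x_center, y_center, width, height)`.

```python
import random
from typing import List, Sequence, Tuple

Label = Tuple[int, float, float, float, float]  # (class, xc, yc, w, h), normalized


def split_dataset(items: Sequence[str], train: float = 0.8, val: float = 0.1,
                  seed: int = 0) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle reproducibly and split into train / validation / test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])   # whatever remains is the test set


def hflip_label(label: Label) -> Label:
    """Adjust a normalized YOLO label for a horizontally flipped image:
    only the x centre is mirrored, the box size is unchanged."""
    cls, xc, yc, w, h = label
    return (cls, 1.0 - xc, yc, w, h)


files = [f"img_{i:03d}.jpg" for i in range(500)]   # hypothetical file names
train_set, val_set, test_set = split_dataset(files)
print(len(train_set), len(val_set), len(test_set))
print(hflip_label((3, 0.25, 0.5, 0.2, 0.4)))
```

Shuffling with a fixed seed keeps the split reproducible between runs, which makes reported validation and test figures easier to compare.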

For model configuration, the YOLOv8-small variant was chosen for its balance between accuracy and inference speed. The default architecture was used with minor modifications, including X additional layers to enhance feature extraction from hand gestures. The model was trained for Y epochs with a learning rate of 1×10⁻⁴ using the Adam optimizer.
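A training run with these settings might be configured as in the sketch below, assuming the Ultralytics Python package is used. The `TrainConfig` class, the `data.yaml` path, and the `train` wrapper are hypothetical names introduced for illustration. The number of epochs is left as a required argument because the text does not state it, and the additional layers mentioned above are not reproduced here.

```python
from dataclasses import asdict, dataclass


@dataclass
class TrainConfig:
    data: str                  # hypothetical path to the dataset YAML file
    epochs: int                # not specified in the text, so it must be supplied
    imgsz: int = 640           # input resolution stated in the text
    lr0: float = 1e-4          # initial learning rate stated in the text
    optimizer: str = "Adam"    # optimizer stated in the text


def train(cfg: TrainConfig, weights: str = "yolov8s.pt"):
    """Start training with the stock YOLOv8-small weights (assumed starting point)."""
    # Imported lazily so the configuration can be inspected without the package.
    from ultralytics import YOLO
    model = YOLO(weights)
    return model.train(**asdict(cfg))


cfg = TrainConfig(data="data.yaml", epochs=1)  # placeholder values for illustration
print(asdict(cfg))
```

Keeping the hyperparameters in one dataclass makes it easy to log exactly which settings produced a given set of weights.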

The model’s performance was evaluated using standard YOLOv8 metrics, including precision, recall, F1-score, and mean Average Precision (mAP). Qualitative results, illustrated in Fig-3 and Fig-4, demonstrate the model’s robustness in detecting hand gestures across complex backgrounds and varying lighting conditions.
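For reference, the metrics named above can be computed from detection counts as in the following sketch. The function names and the sample counts are hypothetical, and the average precision shown uses simple all-point integration of the precision-recall curve; evaluation toolkits may interpolate differently, so the numbers are illustrative only. mAP is the mean of this per-class value over all gesture classes.

```python
from typing import Sequence, Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from true positive, false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


def average_precision(is_tp: Sequence[bool], n_gt: int) -> float:
    """Area under the precision-recall curve for one class.

    `is_tp` flags each detection as correct or not, with detections
    already sorted by descending confidence; `n_gt` is the number of
    ground-truth boxes for the class.
    """
    if n_gt == 0 or not is_tp:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for flag in is_tp:
        if flag:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing from left to right (the usual PR envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


print(precision_recall_f1(tp=8, fp=2, fn=2))
print(average_precision([True, False, True], n_gt=2))
```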

In conclusion, the development of a real-time sign language recognition system represents a significant step forward in bridging communication gaps for the deaf and mute communities. By leveraging advanced machine learning technologies, this system offers a reliable, accessible solution for seamless communication between individuals with hearing or speech impairments and the broader society. With high accuracy in gesture recognition and the ability to operate without specialized equipment, it enhances social and professional interactions while promoting inclusivity. Furthermore, the system’s educational features contribute to the learning of sign language, making it a versatile tool across various sectors, including education, healthcare, and public services. As this technology evolves, it holds the potential to further refine communication methods, ultimately fostering a more inclusive world for all individuals, regardless of their abilities.

[1] H. Luqman, “An Efficient Two-Stream Network for Isolated Sign Language Recognition Using Accumulative Video Motion,” in IEEE Access, vol. 10, pp. 93785-93798, 2022, doi: 10.1109/ACCESS.2022.3204110.

[2] B. Natarajan et al., “Development of an End-to-End Deep Learning Framework for Sign Language Recognition, Translation, and Video Generation,” in IEEE Access, vol. 10, pp. 104358-104374, 2022, doi: 10.1109/ACCESS.2022.3210543.

[3] Shirude, Ruchita & Khurana, Soumya & , R.Sreemathy & Turuk, Mousami & Jagdale, Jayashree & Chidrewar, Saurabh. (2024). Indian Sign Language Recognition System using GAN and Ensemble based approach. 10.21203/rs.3.rs-4303833/v1.

[4] Melek Alaftekin, Ishak Pacal, and Kenan Cicek, “Real-time sign language recognition based on YOLO algorithm,” Neural Computing and Applications, Feb. 2024, doi: https://doi.org/10.1007/s00521-024-09503-6.

[5] Ravindra Bula, Dipalee Golekar, Rutuja Hole, Sidheshwar Katare, S.R. Bhujbal, “SIGN LANGUAGE RECOGNITION BASED ON COMPUTER VISION”, International Journal of Creative Research Thoughts (IJCRT), ISSN:2320-2882, Volume.10, Issue 5, pp.g374-g388, May 2022, Available at: http://www.ijcrt.org/papers/IJCRT2205740.pdf

[6] S. Giri and A. Patil, “Marathi Sign Language Recognition using MediaPipe and Deep Learning Algorithm,” Research Square (Research Square), Apr. 2024, doi: https://doi.org/10.21203/rs.3.rs-4210048/v1.

[7] Y. Zhang and X. Jiang, “Recent Advances on Deep Learning for Sign Language Recognition,” Computer Modeling in Engineering & Sciences, vol. 139, no. 3, pp. 2399–2450, 2024, doi: https://doi.org/10.32604/cmes.2023.045731.

[8] Dakshesh Gandhe, Pranay Mokar, Aniruddha Ramane, and R. M. Chopade, “Sign Language Recognition for Real-time Communication,” vol. 12, 2024, doi: https://doi.org/10.22214/ijraset.2024.61514.

[9] J. Huang and Varin Chouvatut, “Video-Based Sign Language Recognition via ResNet and LSTM Network,” Journal of Imaging, vol. 10, no. 6, pp. 149–149, Jun. 2024, doi: https://doi.org/10.3390/jimaging10060149.

[10] M. Quinn and J.I. Olszewska, “British sign language recognition in the wild based on multi-class SVM,” in 2019 Federated Conference on Computer Science and Information Systems (FedCSIS), IEEE, 2019, pp. 81-86.

[11] W. Lu et al., “Artificial Intelligence–Enabled Gesture-Language-Recognition Feedback System Using Strain-Sensor-Arrays-Based Smart Glove,” Advanced Intelligent Systems, vol. 5, no. 8, Apr. 2023, doi: https://doi.org/10.1002/aisy.202200453.

[12] I. A. Adeyanju, O. O. Bello, and M. A. Adegboye, “Machine learning methods for sign language recognition: A critical review and analysis,” Intelligent Systems with Applications, vol. 12, p. 200056, Nov. 2021, doi: https://doi.org/10.1016/j.iswa.2021.200056.

[13] H. K. Vashisth, T. Tarafder, R. Aziz, M. Arora, and Alpana, “Hand Gesture Recognition in Indian Sign Language Using Deep Learning,” Engineering Proceedings, vol. 59, no. 1, p. 96, 2023, doi: https://doi.org/10.3390/engproc2023059096.