International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056 p-ISSN:2395-0072

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net

Sanskar Khaire, Swarup Mane, Sandeep Milake, Dr. Anant Bagade

Department of Information Technology, SCTR’s Pune Institute of Computer Technology, Pune, Maharashtra, India

Abstract - With the rapid growth of social media, the spread of fake news has become a pressing issue, leading to misinformation and public distrust. This project focuses on developing an efficient system to detect fake news on social media using a combination of DistilBERT and Support Vector Machine (SVM) models. DistilBERT, a pre-trained transformer model, is employed for its ability to understand the contextual meaning of text, capturing relationships between words and phrases, even in complex sentences. The processed text features extracted by DistilBERT are used as input for the SVM model, which acts as a classifier to distinguish between fake and real news. The system is trained on a dataset containing labeled fake and real news articles to learn patterns that characterize misinformation. By combining the language understanding capabilities of DistilBERT with the robust classification power of SVM, the model achieves high accuracy in identifying fake news. The final model offers a practical solution to combat the widespread issue of misinformation on social media platforms, contributing to a more reliable and trustworthy digital information ecosystem.

Key Words: Fake News Detection, DistilBERT, Support Vector Machine (SVM), Natural Language Processing (NLP), Transformer Models, Hybrid Model, Logistic Regression, Misinformation Detection, Deep Learning for NLP.

1. INTRODUCTION

In recent years, the rapid proliferation of social media platforms has fundamentally transformed how information is disseminated, accessed, and consumed. The unparalleled speed and reach of these platforms have democratized information sharing, enabling individuals from all walks of life to express their views and contribute to the global discourse. However, this very democratization has brought about a significant and troubling downside: the unchecked spread of fake news. Fake news, characterized as intentionally false or misleading information presented in the guise of legitimate news, has become a pervasive issue. Its primary motivations often include influencing public opinion, sowing discord, or generating profit through sensationalism and clickbait.

The consequences of fake news are far-reaching, with the potential to disrupt societal harmony, influence political outcomes, and compromise public health. For instance, misinformation during elections can manipulate voter behavior, while the dissemination of false information during health crises, such as the COVID-19 pandemic, can hinder effective public health responses. The sheer volume and velocity of fake news propagation pose challenges to traditional methods of verification, which are not only labor-intensive but also incapable of scaling to meet the demands of the digital age. These limitations have necessitated the development of automated, technology-driven solutions to address the growing menace of fake news.

This research paper focuses on leveraging state-of-the-art advancements in natural language processing (NLP) and machine learning to tackle the problem of fake news detection. Specifically, it explores the combined use of DistilBERT and Support Vector Machine (SVM) as an approach to identifying and categorizing fake news. DistilBERT, a lightweight and efficient transformer-based model, has emerged as a powerful tool for text analysis due to its ability to capture nuanced semantic and syntactic relationships within language. Unlike traditional NLP models, DistilBERT excels in understanding contextual word meanings, enabling it to identify subtle differences between credible and deceptive content. Its reduced computational complexity, compared to its predecessor BERT, makes it well suited to real-time applications.

The SVM classifier, on the other hand, is a robust machine learning algorithm renowned for its effectiveness in binary classification tasks. By analyzing patterns and relationships within a labeled dataset, SVM can accurately distinguish between real and fake news. Integrating DistilBERT's contextual understanding with SVM's classification capabilities creates a synergistic model that significantly enhances the accuracy and efficiency of fake news detection systems.

The proposed system is designed to undergo rigorous training using a diverse dataset comprising labeled articles from various domains, including politics, health, and entertainment. This diversity ensures the model's adaptability to different contexts and types of misinformation. A key aspect of this research involves curating and preprocessing the dataset to eliminate biases and ensure representativeness, which are critical for the model's generalizability. Additionally, the performance of the DistilBERT-SVM approach will be benchmarked against existing methodologies to evaluate its effectiveness and reliability.

Beyond algorithmic development, this project also emphasizes user accessibility and practical implementation. A user-friendly interface will be developed to allow individuals, organizations, and policymakers to perform real-time analysis of news content. This interface will provide actionable insights, such as credibility scores and justifications for classification, empowering users to make informed decisions about the reliability of information.

Key objectives of this research include:

Constructing a comprehensive and high-quality dataset of labeled fake and real news articles to facilitate effective model training and evaluation.

Developing a robust and scalable fake news detection system that integrates DistilBERT and SVM for enhanced performance.

Comparing the proposed approach with traditional and state-of-the-art methods to establish its efficacy.

Creating a seamless and intuitive platform for end users to utilize the model in real-world scenarios.

In conclusion, the increasing prevalence of fake news underscores the urgent need for innovative and effective detection mechanisms. As social media continues to dominate the information landscape, safeguarding the integrity of shared content is crucial for fostering an informed and responsible society. By combining the advanced capabilities of DistilBERT and SVM, this research aims to contribute a meaningful solution to the pressing challenge of fake news detection, thereby supporting efforts to preserve the credibility and trustworthiness of digital information ecosystems.

The literature survey delves into various methodologies for detecting fake news, incorporating state-of-the-art transformer-based models, hybrid approaches, and classical machine learning techniques. Transformer architectures, such as DistilBERT, have demonstrated notable efficiency in misinformation detection due to their reduced computational overhead and high accuracy, particularly when integrated with support vector machines (SVM) or logistic regression classifiers. Studies have highlighted that DistilBERT-SVM hybrid models achieve robust classification performance, offering a balanced trade-off between resource utilization and accuracy, which is critical for applications in resource-constrained environments [2, 5]. Moreover, comparative analyses of deep learning architectures, including BERT, DistilBERT, and LSTM, reveal that DistilBERT outperforms traditional models in terms of computational efficiency without significant compromises in accuracy, particularly on domain-specific datasets such as COVID-19-related fake news [3, 4].

Hybrid frameworks have emerged as highly effective solutions for real-time applications. For instance, models combining DistilBERT with SVM or RoBERTa have been shown to provide superior accuracy and speed compared to standalone larger models [5, 8]. These models leverage the scalability of transformer architectures while maintaining computational efficiency, thus making them suitable for deployment in real-world scenarios. Structured datasets, such as LIAR and FakeNewsNet, have been pivotal in training robust models, enabling a deeper understanding of the linguistic and contextual features of fake news. Furthermore, the inclusion of social media datasets allows researchers to explore the nuanced characteristics of misinformation propagated on platforms like Twitter and Facebook [7, 9].

Innovative methodologies, such as the use of BiLSTM and attention mechanisms, have proven effective in detecting fake news within specific contexts, such as Indian politics or health misinformation. These approaches leverage critical keyword detection and contextual embeddings to enhance performance. Generative Adversarial Networks (GANs) are another notable advancement; they are utilized to generate adversarial samples that improve model robustness by simulating challenging misinformation cases [12]. However, despite their efficacy, GANs and attention-based mechanisms require significant computational resources, highlighting the necessity for optimization in practical scenarios [12].

A key limitation in existing research lies in the generalizability of models across diverse datasets and domains. For instance, while many models excel on curated datasets, their performance often diminishes when applied to multilingual or ambiguous news content. Studies suggest that incorporating larger, more diverse datasets, along with cross-domain testing, could significantly improve generalizability [4, 9, 12]. Furthermore, integrating external knowledge sources, such as databases from trusted news outlets, and employing adaptive learning mechanisms can enhance real-time detection accuracy [8, 9].

Recent research also emphasizes the importance of domain-specific advancements. For example, non-English datasets remain underexplored, despite the growing prevalence of fake news in languages other than English. Techniques such as multilingual embeddings and domain adaptation are promising directions for addressing this gap [8, 10]. Additionally, achieving an optimal balance between recall and precision is crucial, especially in scenarios where false positives and false negatives have varying degrees of impact [8, 10].

Finally, while progress has been made in leveraging transformer-based architectures, challenges remain in optimizing their deployment for real-time applications. Future research must address the trade-offs between accuracy, speed, and resource efficiency, ensuring that models can meet the demands of dynamic and evolving misinformation landscapes. The integration of real-world data, continual learning systems, and enhanced interpretability frameworks is essential for advancing the field of fake news detection.

The proposed methodology centers on developing an efficient, robust, and scalable fake news detection system tailored specifically for social media platforms. By integrating the DistilBERT model with a Support Vector Machine (SVM) classifier, this system aims to achieve high accuracy while maintaining low computational costs, making it well suited to real-time detection in dynamic environments. This section elaborates on the step-by-step process involved in designing and implementing this system.

3.1 Data Collection and Dataset Preparation

The foundation of the methodology begins with assembling a comprehensive and diverse dataset sourced from reliable repositories and platforms. These datasets often consist of labeled news articles categorized as fake or real. To ensure robustness, the dataset is curated to include content from a variety of domains such as politics, health, entertainment, and finance, reflecting the broad spectrum of topics discussed on social media. Additionally, data augmentation techniques, such as paraphrasing, synonym replacement, and back-translation, are applied to increase the dataset size and diversity, mitigating the risk of overfitting during training.

3.2 Preprocessing

The preprocessing phase is critical to ensure the input data is clean, uniform, and ready for further analysis. This stage involves multiple sub-steps:

Text Cleaning: Unnecessary characters, punctuation, HTML tags, URLs, emojis, and special symbols are removed to eliminate noise and improve data quality.

Case Normalization: All text is converted to lowercase to ensure uniformity and avoid discrepancies caused by case sensitivity.

Stopword Removal: Commonly used words (e.g., "and," "the," "is") that do not contribute to the semantic meaning are removed to enhance the model’s focus on relevant features.

Stemming and Lemmatization: Techniques are employed to reduce words to their root forms, ensuring consistency and reducing the dimensionality of the data.
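
The cleaning, case normalization, and stopword removal steps above can be sketched with the Python standard library alone. This is a minimal illustration, not the authors' pipeline: the function name `clean_text`, the regular expressions, and the tiny stopword set are assumptions made for the example, and stemming or lemmatization (which would normally rely on an NLP library) is left out.

```python
import html
import re

# Tiny illustrative stopword set (an assumption); real systems use fuller lists.
STOPWORDS = {"and", "the", "is", "a", "an", "of", "to", "in"}


def clean_text(text):
    """Return lowercase word tokens with markup, URLs, and stopwords removed."""
    text = html.unescape(text)                          # decode entities such as &amp;
    text = re.sub(r"<[^>]+>", " ", text)                # strip HTML tags
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)  # strip URLs
    text = text.lower()                                 # case normalization
    text = re.sub(r"[^a-z0-9\s]", " ", text)            # drop punctuation, emojis, symbols
    return [word for word in text.split() if word not in STOPWORDS]


print(clean_text("<p>BREAKING: The cure is HERE!!! https://example.com/x</p>"))
```

The order matters in this sketch: tags and URLs are removed before punctuation is stripped, otherwise a URL would be broken into stray word fragments.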

3.3 Tokenization

Tokenization is a crucial step in natural language processing (NLP) that involves breaking down text into smaller units or tokens. This methodology leverages advanced sub-word tokenization techniques, such as WordPiece or Byte-Pair Encoding (BPE), to handle rare words and out-of-vocabulary terms effectively. These techniques segment words into smaller components, enabling the model to process and understand nuanced language patterns, including slang, acronyms, and misspellings frequently found on social media.
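
To make the sub-word idea concrete, the sketch below applies the greedy longest-match-first rule that WordPiece-style tokenizers use at inference time. The toy vocabulary, the `##` continuation prefix handling, and the function name `wordpiece_tokenize` are illustrative assumptions; a real DistilBERT tokenizer ships with a learned vocabulary of tens of thousands of pieces.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    """Split one word into the longest vocabulary pieces, left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # mark pieces that continue a word
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits: the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabulary for illustration only.
TOY_VOCAB = {"fake", "##news", "un", "##believ", "##able"}

print(wordpiece_tokenize("unbelievable", TOY_VOCAB))
print(wordpiece_tokenize("fakenews", TOY_VOCAB))
```

Because unseen words decompose into known pieces, a misspelling or hashtag-style compound still yields usable tokens instead of a single unknown symbol.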

3.4 Vectorization with DistilBERT

Once tokenized, the text data is transformed into numerical embeddings using DistilBERT, a lightweight and efficient variant of the Bidirectional Encoder Representations from Transformers (BERT) model. DistilBERT’s architecture retains 97% of BERT’s language understanding capabilities while being 40% smaller and about 60% faster, making it well suited to resource-constrained environments.

Key features of the DistilBERT architecture include:

Knowledge Distillation: The model is trained to mimic the performance of a larger BERT model by transferring knowledge through a teacher-student framework.

Layer Reduction: By reducing the number of layers in the transformer architecture (6 instead of the 12 in BERT-base), DistilBERT achieves faster inference times with only a small loss in accuracy.

Dynamic Masking: Masked positions are re-sampled during training rather than fixed once, which introduces variations in the masked language modeling task and allows the model to learn diverse contexts.

The resulting embeddings from DistilBERT are high-dimensional, context-aware representations that capture semantic relationships and syntactic structures within the text.
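
A transformer produces one vector per token, whereas a classifier such as an SVM expects one fixed-length vector per article. The text does not say how the per-token vectors are reduced, so the sketch below shows one common option, masked mean pooling, purely as an assumption. The function name `mean_pool` and the two-dimensional toy vectors are hypothetical; real DistilBERT token vectors are much larger.

```python
def mean_pool(token_vectors, attention_mask):
    """Average the vectors of real tokens (mask == 1), ignoring padding."""
    dim = len(token_vectors[0])
    total = [0.0] * dim
    count = 0
    for vector, keep in zip(token_vectors, attention_mask):
        if keep:
            count += 1
            for i, value in enumerate(vector):
                total[i] += value
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [value / count for value in total]


# Three token vectors; the last one is padding and must not affect the result.
tokens = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]]
mask = [1, 1, 0]
print(mean_pool(tokens, mask))  # [2.0, 3.0]
```

Using the vector of the first ([CLS]) token instead is another frequently used choice; which works better is an empirical question for a given dataset.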

3.5 Classification with SVM

The embeddings generated by DistilBERT are passed to a Support Vector Machine (SVM) classifier for binary classification into fake or real news. SVM is a powerful supervised learning algorithm that works by finding an optimal hyperplane that maximizes the margin between different classes. The key components of this step include:

Kernel Functions: The SVM model employs kernel functions such as the radial basis function (RBF) and polynomial kernels to handle both linear and non-linear data. These functions enable the model to map input features into higher-dimensional spaces for better separability.

Regularization Techniques: To prevent overfitting and improve the generalization of the model, regularization techniques such as L2 regularization are incorporated. This ensures the model performs well on unseen data.

Hyperparameter Optimization: Grid search or randomized search techniques are used to fine-tune hyperparameters such as the regularization parameter (C) and kernel coefficients, maximizing model performance.
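
For reference, the roles of the regularization parameter C and the kernel coefficients can be read off the standard soft-margin SVM formulation. The notation below (embedding x_i with label y_i in {-1, +1}, weight vector w, bias b, slack variables ξ_i, feature map φ, kernel coefficient γ, offset r, degree d) is introduced here for illustration and does not appear in the original text.

```latex
\[
\min_{w,\,b,\,\xi}\ \frac{1}{2}\lVert w\rVert^{2} + C\sum_{i=1}^{n}\xi_i
\quad\text{subject to}\quad
y_i\bigl(w^{\top}\phi(x_i)+b\bigr)\ \ge\ 1-\xi_i,\qquad \xi_i\ge 0
\]
\[
K_{\mathrm{RBF}}(x,x') = \exp\!\bigl(-\gamma\,\lVert x-x'\rVert^{2}\bigr),
\qquad
K_{\mathrm{poly}}(x,x') = \bigl(\gamma\,x^{\top}x' + r\bigr)^{d}
\]
```

The first term of the objective is the L2 regularizer. A larger C penalizes margin violations more heavily (less regularization), while γ controls how quickly the RBF similarity decays with distance, which is why both are natural targets for grid or randomized search.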

3.6 Model Evaluation and Validation

To assess the effectiveness of the proposed system, the model is rigorously evaluated using metrics such as accuracy, precision, recall, and F1 score. The dataset is split into training, validation, and testing subsets, ensuring unbiased performance measurement. Cross-validation techniques, such as k-fold cross-validation, are employed to validate the robustness of the model across different data partitions.
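
The four metrics named above follow directly from the confusion-matrix counts. The sketch below computes them for a binary task, treating "fake" as the positive class; that choice, the function name `binary_metrics`, and the toy labels are assumptions made for the example.

```python
def binary_metrics(y_true, y_pred, positive="fake"):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


y_true = ["fake", "fake", "fake", "real", "real", "real", "real", "real"]
y_pred = ["fake", "fake", "real", "fake", "real", "real", "real", "real"]
print(binary_metrics(y_true, y_pred))
```

In this toy case accuracy is 0.75 while precision, recall, and F1 are each 2/3, which illustrates why accuracy alone can look better than the per-class behavior when real news outnumbers fake news.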

One of the unique aspects of this methodology is its adaptability to real-time social media content. This is achieved by periodically updating the dataset with new examples from live feeds, retraining the model to account for emerging trends, and ensuring relevance to contemporary content.

The system is designed to handle multilingual datasets by leveraging multilingual pre-trained models or fine-tuning DistilBERT for specific languages. Noise in the data, such as typos, slang, and abbreviations commonly found in social media, is addressed through customized preprocessing pipelines and advanced embedding techniques.

The proposed methodology provides a strong foundation for future enhancements, such as:

Integration of External Trusted Data Sources: Incorporating external datasets from verified sources to improve the reliability of predictions.

Incorporating User Feedback: Adding mechanisms for users to report misclassified content, enabling continuous improvement of the model.

Support for Multimodal Inputs: Extending the system to analyze images, videos, and metadata alongside text to improve detection accuracy.

Scalability: Adapting the system for deployment on cloud-based infrastructures to handle large-scale data efficiently.

By combining the lightweight and context-aware capabilities of DistilBERT with the robust classification power of SVM, the proposed methodology offers a scalable, efficient, and accurate solution for detecting fake news on social media platforms.

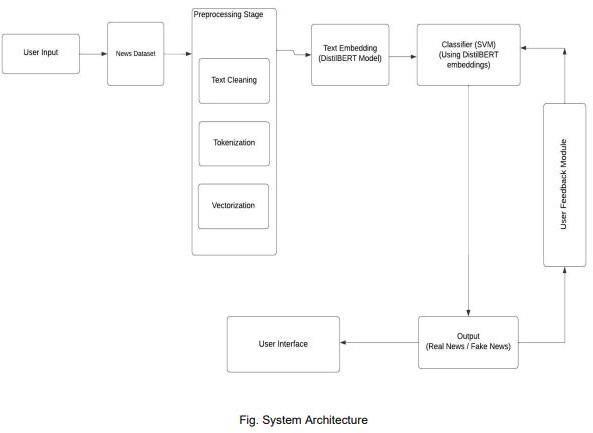

The proposed system architecture for fake news detection integrates advanced natural language processing (NLP) techniques and machine learning methods to classify news articles as real or fake with high accuracy. The architecture is designed to handle the complexities of language, including contextual nuances, and leverages user feedback for continuous improvement. Below is a detailed explanation of the various stages involved in the system architecture:

4.1 User Input and Submission

The system begins with the user submitting a piece of text, a news article, or even an entire document for classification. The input mechanism is designed to be flexible, allowing users to enter data directly through a text box or upload documents in multiple formats such as PDF or Word. The system also supports URL-based submissions where the content of the webpage is extracted automatically. This adaptability ensures the system can cater to a wide range of users, from researchers to everyday social media users, seeking to verify the authenticity of news content. A secure interface ensures the privacy and confidentiality of the submitted information, while background validation checks confirm that the input is valid and relevant for analysis.

Preprocessing is a critical stage in the architecture as it ensures that the input data is clean, consistent, and ready for analysis. The system begins by removing extraneous characters such as HTML tags, special symbols, and numbers that do not contribute to semantic meaning. It further eliminates common stopwords like "is," "the," and "and" to reduce noise in the data. Afterward, the text is converted to lowercase to ensure uniformity, which helps avoid case-related discrepancies during analysis. Tokenization then divides the text into smaller, meaningful components, such as words or subwords, which are easier to process computationally. Additional steps like lemmatization reduce words to their base or dictionary forms, ensuring consistency and minimizing redundancy. Together, these processes produce a standardized and structured dataset that is primed for feature extraction.

4.3 Feature Extraction and Vectorization

Once the text has been preprocessed, the system transitions to feature extraction, where it transforms the cleaned text into a numerical format that machine learning models can interpret. The initial step involves vectorizing the text using techniques such as Term Frequency-Inverse Document Frequency (TF-IDF), which highlights the importance of specific terms relative to the corpus. This is followed by embedding generation using DistilBERT, a lightweight and efficient transformer model. DistilBERT processes the text to produce dense, fixed-length vector representations that capture not only the semantic meaning but also the contextual relationships between words. These embeddings are both computationally efficient and rich in information, providing a robust foundation for classification tasks while ensuring faster processing times compared to larger transformer models.
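
As a small worked example of the TF-IDF step, the sketch below weights each term by its relative frequency in a document times the logarithm of the inverse document frequency. This is the plain textbook variant, chosen here as an assumption; libraries often apply smoothing or normalization on top of it. The function name `tf_idf` and the two toy documents are illustrative.

```python
import math
from collections import Counter


def tf_idf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(docs)
    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


docs = [["fake", "news", "spreads", "fast"], ["real", "news", "report"]]
for row in tf_idf(docs):
    print(row)
```

The term "news" occurs in every document, so its weight is zero, while terms unique to one document receive positive weight. Unlike the contextual embeddings that follow, these weights ignore word order entirely.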

4.4 Classification with SVM

The embeddings generated by DistilBERT are passed to the Support Vector Machine (SVM) classifier, which is tasked with the binary classification of news as real or fake. SVM operates by identifying optimal hyperplanes that separate different classes within the high-dimensional embedding space. During the training phase, SVM learns from labeled datasets, adjusting its boundaries to maximize classification accuracy. Once trained, the classifier can determine the position of new inputs within the embedding space and assign them to the appropriate class. The integration of SVM ensures that the system is not only accurate but also capable of handling complex and high-dimensional data efficiently. Its reliance on relatively fewer parameters makes it computationally less expensive while maintaining high precision in decision-making.
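
The training and prediction behavior described above can be illustrated with a deliberately small linear SVM, fitted by subgradient descent on the L2-regularized hinge loss. This is a teaching sketch under stated assumptions, not the classifier used in the paper: the two-dimensional points stand in for DistilBERT embeddings, the learning rate and epoch count are arbitrary, and the function names are hypothetical. A production system would more likely use an established SVM library.

```python
def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=100):
    """Fit w, b by subgradient descent on 0.5*||w||^2 + C * sum(hinge losses).

    X: list of feature vectors; y: list of labels in {-1, +1}.
    """
    n = len(X)
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            score = sum(wj * xj for wj, xj in zip(w, xi)) + b
            if yi * score < 1:
                # Inside the margin or misclassified: the hinge term is active.
                w = [wj - lr * (wj / n - C * yi * xj) for wj, xj in zip(w, xi)]
                b += lr * C * yi
            else:
                # Only the L2 regularizer contributes to the update.
                w = [wj - lr * (wj / n) for wj in w]
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x lies."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Toy 2-D "embeddings": +1 = real, -1 = fake (labels chosen for illustration).
X = [[2.0, 2.0], [3.0, 3.0], [2.5, 3.0], [-2.0, -2.0], [-3.0, -1.0], [-1.0, -3.0]]
y = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(X, y)
print([predict(w, b, x) for x in X])
```

Only points on or inside the margin trigger the data-dependent update, which mirrors the idea that the decision boundary is determined by the support vectors rather than by every training example.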

4.5 Feedback and Retraining

A standout feature of the proposed architecture is its feedback and retraining module, which ensures continuous improvement in system performance. When users encounter misclassifications, they have the option to flag these errors, thereby contributing to a growing dataset of edge cases and exceptions. These flagged examples are added to the existing training dataset, enriching it with real-world variations and patterns. The system periodically retrains the SVM classifier using this augmented dataset, allowing it to refine its decision boundaries and improve its adaptability to emerging trends in fake news. Importantly, while the DistilBERT embeddings remain fixed for efficiency, the iterative retraining process focuses solely on the SVM model, ensuring that updates are computationally feasible and targeted.
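
One way to picture the feedback loop is a small buffer that stores the already-computed embedding of each flagged item together with the corrected label, and signals when enough new examples have accumulated to justify retraining the classifier. The class name `FeedbackBuffer`, the threshold of three, and the method names are hypothetical choices for this sketch; the text does not specify how often retraining is triggered.

```python
class FeedbackBuffer:
    """Collects user-flagged examples and signals when retraining is due."""

    def __init__(self, retrain_threshold=3):
        self.embeddings = []   # stored embeddings, so the encoder is not re-run
        self.labels = []       # corrected labels supplied by users
        self.pending = 0       # flags received since the last retraining
        self.retrain_threshold = retrain_threshold

    def flag(self, embedding, corrected_label):
        """Record one correction; return True when retraining should run."""
        self.embeddings.append(embedding)
        self.labels.append(corrected_label)
        self.pending += 1
        return self.pending >= self.retrain_threshold

    def training_data(self):
        """Return all collected examples and reset the pending counter."""
        self.pending = 0
        return list(self.embeddings), list(self.labels)


buffer = FeedbackBuffer(retrain_threshold=2)
print(buffer.flag([0.1, 0.9], "fake"))   # False: one flag is not enough yet
print(buffer.flag([0.8, 0.2], "real"))   # True: threshold reached
X_new, y_new = buffer.training_data()
print(len(X_new), y_new)
```

Because only embeddings and labels are kept, the retraining step touches the SVM alone, which matches the design in which the DistilBERT encoder stays fixed.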

After processing the input through all previous stages, the system delivers the final output, which is a classification result labeled as either "real" or "fake." To enhance transparency and user trust, the system also provides additional details such as confidence scores and explanations for the classification. These insights help users understand the rationale behind the decision and foster informed decision-making. For instance, the system may highlight key phrases or patterns that influenced the classification, giving users deeper insights into the analysis. The output is designed to be intuitive and accessible, catering to both technical and non-technical audiences alike.

Performance optimization is a cornerstone of the system's design, ensuring that it remains scalable, efficient, and reliable under varying workloads. To achieve this, the system employs batch processing techniques that allow it to handle multiple inputs simultaneously, thereby reducing latency and improving throughput. Additionally, the architecture leverages cloud-based infrastructure to distribute computational tasks across multiple nodes, ensuring seamless operation even during peak usage. Caching mechanisms are employed to store frequently used models and embeddings, further reducing redundant computations. These strategies collectively ensure that the system remains responsive and capable of handling large datasets or high user traffic without compromising accuracy or efficiency.
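
Batching and caching can both be shown in a few lines. In the sketch below, `batched` groups inputs into fixed-size chunks and `embed` is a stand-in for the expensive encoder call, wrapped in `functools.lru_cache` so that a repeated text is not recomputed. The placeholder embedding (text length and word count) and the call counter are assumptions that exist only to make the caching effect visible.

```python
from functools import lru_cache


def batched(items, batch_size):
    """Yield consecutive chunks of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


ENCODER_CALLS = {"count": 0}  # counts how often the "expensive" path runs


@lru_cache(maxsize=10_000)
def embed(text):
    """Placeholder for an encoder call; returns a hashable tuple."""
    ENCODER_CALLS["count"] += 1
    return (float(len(text)), float(len(text.split())))


posts = ["shocking cure found", "markets close higher", "shocking cure found"]
for batch in batched(posts, batch_size=2):
    print([embed(text) for text in batch])
print("encoder calls:", ENCODER_CALLS["count"])
```

The third post repeats the first, so the encoder path runs only twice. On social media, where the same story is reshared many times, this kind of cache can remove a large share of redundant computation.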

4.8 Advantages of the Proposed System

The proposed system offers several advantages that make it a robust solution for fake news detection. By incorporating DistilBERT, it captures the contextual nuances of language, enabling it to distinguish subtle differences between real and fake news. The use of SVM ensures precise classification while maintaining computational efficiency. The integration of a feedback mechanism allows the system to adapt dynamically, addressing new patterns of misinformation as they emerge. Additionally, the architecture’s transparency, evidenced by features like confidence scores and explanatory insights, fosters trust among users and promotes its adoption in various domains. These advantages position the system as a versatile and effective tool in combating the growing challenge of fake news.

4.9 Challenges and Mitigation

Despite its strengths, the system is not without challenges. One of the primary issues is the imbalance in labeled datasets, where real news significantly outnumbers fake news. To mitigate this, techniques such as oversampling, undersampling, and synthetic data generation are employed to balance the dataset. Another challenge is the ever-evolving nature of fake news, which necessitates periodic updates to the training dataset and retraining of the classifier. Computational resource constraints posed by transformer models like DistilBERT are addressed using hardware accelerators such as GPUs or TPUs. By proactively addressing these challenges, the system ensures its robustness and reliability over time.
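
Of the balancing techniques mentioned, random oversampling is the simplest to show: examples from the smaller class are duplicated at random until every class has as many examples as the largest one. The function name `random_oversample`, the fixed seed, and the toy data are assumptions for this sketch. It should be applied to the training split only, so that duplicated items do not leak into validation or test data.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate minority-class samples until all classes are equally sized."""
    rng = random.Random(seed)
    by_label = {}
    for sample, label in zip(samples, labels):
        by_label.setdefault(label, []).append(sample)

    target = max(len(group) for group in by_label.values())
    out_samples, out_labels = [], []
    for label, group in by_label.items():
        extra = [rng.choice(group) for _ in range(target - len(group))]
        for sample in group + extra:
            out_samples.append(sample)
            out_labels.append(label)
    return out_samples, out_labels


texts = ["r1", "r2", "r3", "r4", "f1", "f2"]
labels = ["real", "real", "real", "real", "fake", "fake"]
balanced_texts, balanced_labels = random_oversample(texts, labels)
print(Counter(balanced_labels))
```

Undersampling is the mirror image (dropping majority-class examples), and synthetic generation creates new minority examples instead of exact copies.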

Looking ahead, numerous enhancements can be undertaken to elevate the performance, scalability, and applicability of the fake news detection system. These advancements are not only aimed at improving technical aspects but also at addressing societal challenges posed by misinformation in an increasingly digitalized world.

5.1 Dataset Expansion and Diversity

A critical step in enhancing the robustness of the system involves expanding the dataset to encompass a broader range of languages, dialects, and cultural nuances. The existing datasets, while effective within certain parameters, are often limited in scope, leading to potential biases in predictions. Incorporating multilingual datasets with diverse cultural contexts would enable the system to adapt seamlessly to a globalized digital ecosystem. This would make the model particularly relevant in regions with diverse linguistic landscapes, such as India, Africa, and Europe, where misinformation often exploits local contexts and language subtleties. Additionally, efforts to include data from underrepresented regions and niche online platforms could bridge existing gaps, ensuring the system’s applicability across varied demographics.

5.2 Real-Time Monitoring and Alert System

The integration of real-time monitoring features stands as a pivotal enhancement. By employing advanced streaming data technologies, the system can be transformed into a proactive tool that identifies and flags potential misinformation as it emerges. Real-time monitoring would empower users to receive immediate alerts about potentially fake news articles, videos, or posts, thereby fostering a culture of proactive engagement against misinformation. For instance, social media platforms could embed the system to scan posts and comments instantaneously, notifying administrators and users about dubious content before it gains traction. Coupled with machine learning models optimized for speed, this feature could significantly reduce the spread of misinformation, especially during critical events like elections, pandemics, or natural disasters.

5.3 Leveraging Ensemble Learning Techniques

Exploring ensemble learning techniques is another promising avenue for improving detection accuracy. By combining multiple models, such as decision trees, neural networks, and support vector machines, ensemble methods leverage the strengths of each to deliver more reliable predictions. Techniques like bagging, boosting, and stacking can help mitigate individual model weaknesses, resulting in higher precision and recall rates. For instance, integrating models fine-tuned for specific languages or content types with general-purpose algorithms could create a more versatile system capable of handling diverse scenarios. The adoption of ensemble methods not only enhances the detection capabilities but can also improve resilience to adversarial attacks, making the system more robust against deliberate attempts to spread misinformation.
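
The simplest way to combine several classifiers is hard majority voting, sketched below. It is shown here as a baseline illustration and is not one of the bagging, boosting, or stacking procedures themselves. The function name `majority_vote` and the three hypothetical model outputs are assumptions, and an odd number of models is used so that ties cannot occur in a two-class setting.

```python
from collections import Counter


def majority_vote(predictions):
    """predictions: one list of labels per model, all of equal length.

    Returns the most common label for each item across the models.
    """
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions)]


# Hypothetical outputs of three different classifiers on four articles.
svm_out = ["fake", "real", "fake", "real"]
tree_out = ["fake", "fake", "real", "real"]
net_out = ["real", "real", "fake", "real"]

print(majority_vote([svm_out, tree_out, net_out]))
```

Each model is wrong on a different article in this toy case, yet the combined vote matches the pattern two of the three agree on for every item, which is the intuition behind ensembles mitigating individual model weaknesses.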

Expanding the system’s functionality to include automated fact-checking would significantly enhance its utility. This feature would allow the system to not only detect fake news but also provide users with validated information sources as alternatives. By cross-referencing detected content with verified databases, news portals, or authoritative publications, the system can offer users a deeper understanding of the context. For instance, users encountering dubious news articles could receive suggestions for credible sources that either confirm or refute the claims. This capability would be particularly valuable for journalists, educators, and policymakers who rely on accurate information for decision-making. Additionally, integration with open-source fact-checking APIs could streamline the development process and ensure access to a wide array of reliable data.

An essential aspect of building trust in AI systems is ensuring explainability and transparency. Future iterations of the fake news detection system could incorporate user-friendly dashboards that provide insights into the model’s decision-making process. For example, the system could highlight specific words, phrases, or patterns that influenced its classification of a news article as fake or genuine. This transparency would not only build user confidence but also facilitate constructive feedback for improving the model. Furthermore, employing techniques like SHAP (SHapley Additive exPlanations) values could help demystify complex neural network predictions, making the system more accessible to non-technical stakeholders.

The system’s impact could be amplified through seamless integration with popular social media platforms such as Facebook, Twitter, and Instagram. APIs and plugins could be developed to enable real-time scanning of user-generated content, providing instant feedback on the credibility of posts. Partnerships with these platforms would ensure that misinformation is curtailed at its source, reducing its ability to spread virally. Additionally, the system could be incorporated into messaging apps like WhatsApp and Telegram to analyze forwarded messages, a common vector for misinformation in many countries. Such integrations would position the system as a ubiquitous tool for combating fake news in everyday digital interactions.

To further enhance the system’s efficacy, incorporating behavioral and sentiment analysis could provide deeper insights into the spread of misinformation. By analyzing user behavior patterns, such as sharing frequency, audience reach, and engagement metrics, the system could identify high-risk content likely to go viral. Sentiment analysis could also help detect emotionally charged language often used in fake news to manipulate public opinion. Combining these features with existing detection capabilities would create a comprehensive solution capable of addressing the multifaceted nature of misinformation campaigns.

As the system matures, its application could be broadened to address challenges beyond traditional fake news detection. For instance, the same technology could be adapted for use in combating deepfake content, identifying fraudulent advertisements, or detecting biased reporting in news articles. Collaboration with organizations in fields such as cybersecurity, healthcare, and education could open new avenues for the system’s application. Additionally, the development of lightweight versions of the system optimized for deployment on low-resource devices could ensure accessibility in regions with limited technological infrastructure.

5.9 Contribution to Public Awareness and Education

Finally, the system’s role in enhancing public awareness and digital literacy cannot be overstated. By partnering with educational institutions, NGOs, and government agencies, the project could be used to develop workshops, campaigns, and training modules aimed at fostering critical thinking and media literacy. Interactive tools, such as quizzes and simulations, could help users learn to identify fake news independently, reducing their reliance on automated systems. This holistic approach would contribute to a more informed and resilient society, capable of navigating the complexities of the digital age.

By embracing these advancements, the fake news detection system has the potential to transform into a comprehensive, globally impactful tool. From technical improvements such as ensemble learning and real-time monitoring to broader societal applications like fact-checking and public education, the system’s future is rich with possibilities. These enhancements will not only strengthen the fight against misinformation but also contribute to building a more truthful and transparent digital ecosystem, fostering trust and integrity in online interactions. Furthermore, the system's efficiency is essential in handling the volume of data generated across social media platforms. Given the speed at which information spreads, real-time detection of fake news is critical. This framework, with its ability to process large datasets efficiently, can act as a real-time news filtering tool, helping users to receive accurate and timely information.

This project illustrates the capabilities of machine learning technologies, particularly the DistilBERT model and the Support Vector Machine (SVM) classifier, in detecting fake news on social media platforms. By leveraging natural language processing (NLP) techniques, the proposed system demonstrates its ability to analyze text data and distinguish between genuine news articles and misinformation with high accuracy. The integration of these models allows for scalable and efficient processing of large datasets, which is crucial in today's fast-paced digital landscape where the spread of fake news poses significant societal challenges. This system not only aids users in navigating the vast information available online but also serves as a valuable tool for organizations seeking to combat misinformation. The adaptability of the framework enables its deployment across various social media platforms, enhancing overall information reliability and contributing to a more informed public.

Moreover, the approach taken in this project addresses the growing concern of fake news, which has become a major issue in both political and social spheres. With the vast amount of content generated daily on social media platforms, it has become increasingly difficult for individuals to distinguish accurate from misleading information. By utilizing the DistilBERT model, the system is able to analyze textual content with attention to contextual relationships and linguistic nuances, which makes it better suited to nuanced language than traditional rule-based systems. The SVM classifier further strengthens the system by providing a robust classification mechanism that remains effective even in cases of imbalanced datasets or ambiguous data.
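The two-stage design described above (a feature extractor followed by an SVM classifier) can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in this project: the bag-of-words `embed` function is a hypothetical stand-in for DistilBERT sentence embeddings, the linear SVM is trained from scratch by subgradient descent on the L2-regularized hinge loss rather than with a library solver, and the four training texts and all function names are invented for the example.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def make_bow_embedder(corpus: Sequence[str]) -> Callable[[str], Vector]:
    """Stand-in feature extractor (NOT DistilBERT): word counts over the
    vocabulary seen in `corpus`. Words outside that vocabulary are ignored."""
    words = sorted({w for text in corpus for w in text.lower().split()})
    vocab = {w: i for i, w in enumerate(words)}

    def embed(text: str) -> Vector:
        vec = [0.0] * len(vocab)
        for word in text.lower().split():
            if word in vocab:
                vec[vocab[word]] += 1.0
        return vec

    return embed


def score(w: Vector, b: float, x: Vector) -> float:
    """Signed distance-like score of x under the linear model (w, b)."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b


def train_linear_svm(X: Sequence[Vector], y: Sequence[int], lr: float = 0.1,
                     lam: float = 0.001, epochs: int = 200) -> Tuple[Vector, float]:
    """Subgradient descent on the L2-regularized hinge loss.
    Labels must be +1 or -1; the bias term is left unregularized."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            violated = yi * score(w, b, xi) < 1.0      # inside the margin?
            w = [wj * (1.0 - lr * lam) for wj in w]     # L2 shrinkage step
            if violated:                                # hinge-loss step
                w = [wj + lr * yi * xj for wj, xj in zip(w, xi)]
                b += lr * yi
    return w, b


def predict(w: Vector, b: float, x: Vector) -> int:
    """Return +1 (fake) or -1 (real) for feature vector x."""
    return 1 if score(w, b, x) >= 0.0 else -1


# Hypothetical toy data: +1 = fake, -1 = real.
texts = [
    "miracle cure doctors hate",
    "shocking secret they hide",
    "council approves annual budget",
    "university publishes study results",
]
labels = [1, 1, -1, -1]

embed = make_bow_embedder(texts)                 # stage 1: text -> features
w, b = train_linear_svm([embed(t) for t in texts], labels)  # stage 2: SVM
```

Because the classifier only sees feature vectors, the stand-in `embed` could in principle be replaced by a function that returns DistilBERT embeddings without changing the training or prediction code; that separation between feature extraction and classification is the point the sketch is meant to show.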

The effectiveness of this system extends beyond just detecting fake news; it can be adapted for use in a variety of domains including healthcare, finance, and political campaigns, where misinformation can have dire consequences. This multi-domain applicability ensures that the technology has far-reaching implications and can be utilized in multiple industries to safeguard public trust and reduce the adverse impacts of fake news. In addition, this approach can be continuously improved with the introduction of more sophisticated algorithms and larger training datasets, ensuring that the system stays up to date with evolving trends in misinformation.

In conclusion, this project presents a robust and scalable solution to the growing problem of fake news on social media platforms.

[1] "A Comparative Study of Hybrid Models in Health Misinformation Text Classification" - Mkululi Sikosana et al. University of Cape Town, South Africa (3-9-2021).

[2] "Evaluating Deep Learning Approaches for Covid19 Fake News Detection" - Patwa, P., et al. Indian Institute of Technology, Delhi (4-4-2020).

[3] "Fighting an Infodemic: Covid-19 Fake News Dataset" - Pennington, J., et al. Stanford University, USA (2021).

[4] "DistilBERT: A Distilled Version of BERT" - Sanh, V., et al. Hugging Face, Paris (3-5-2019).

[5] "Fake News Detection on Social Media: A Data Mining Perspective" - Shu, K., et al. Arizona State University, USA (2020).

[6] "Beyond News Contents: The Role of Social Context for Fake News Detection" - Shu, K., et al. Arizona State University, USA (2021).

[7] "LIAR, LIAR Pants on Fire: A New Benchmark Dataset for Fake News Detection" - Wang, W. Y. University of California, Santa Barbara (2017).

[8] "Transformers: State-of-the-art Natural Language Processing" - Wolf, T., et al. Hugging Face, Paris (3-2-2020).

[9] "Detection of Bangla Fake News using MNB and SVM Classifier" - Md Gulzar Hussain, Md Rashidul Hasan, Mahmuda Rahman, Joy Protim, and Saki. University of Dhaka, Bangladesh (2022).

[10] "DistilBERT and RoBERTa Models for Identification of Fake News" - Martina Toshevska, Georgina Mirceva. Saints Cyril and Methodius University, North Macedonia (2021).

[11] "BiLSTM with Attention for Indian Fake News Detection" - Pandey, K., Patel, S. Indian Institute of Technology, Kanpur (2022).

[12] "Transfer Learning with GANs for Fake News Detection and Generation" - Miller, A. Stanford University, USA (16-3-2021).