Real-time Distress Signal Recognition: Acoustic Monitoring for Crime Control

1 Sirisha R, 2 Dhanalakshmi S, 3 Chetna L Shapur, 4 Prof. Swathi Shrikanth Achanur

1,2,3 UG Student, Dept. of CSE-AIML, AMC Engineering College, KARNATAKA, INDIA. 4 Assistant Professor, Dept. of CSE-AIML, AMC Engineering College, KARNATAKA, INDIA.

ABSTRACT - This research focuses on developing a "Real-Time Acoustic Monitoring System for Crime Prevention," leveraging machine learning to detect human screams and assess risk levels. Using Mel Frequency Cepstral Coefficients (MFCCs) for feature extraction and Support Vector Machines (SVM) and Multilayer Perceptrons (MLP) for classification, the system generates high-risk or medium-risk alert messages based on surrounding conditions. Integrating Kivy for the user interface and AWS SNS for SMS notifications ensures accessibility and timely communication with law enforcement.

Key Words: Acoustic monitoring, Human scream detection, MFCCs, SVM, MLP, Machine learning, Real-time alerts, Crime prevention, Kivy, AWS SNS

1. INTRODUCTION

In modern urban landscapes, where noise pollution is abundant, identifying and responding to distress sounds such as human screams is both challenging and critical. Traditional surveillance systems often rely on visual inputs, which may fail in low-visibility environments or locations without proper camera coverage. Additionally, the sheer volume of data generated by such systems makes manual monitoring impractical. This creates a pressing need for intelligent, real-time systems capable of independently detecting and interpreting audio signals indicative of distress.

This research focuses on developing an audio-based crime prevention system designed to detect human screams in real-time using machine learning models. By combining advanced sound processing techniques with risk assessment algorithms, the system ensures timely and accurate responses to potential emergencies. The integration of user-friendly interfaces and automated alert mechanisms adds further value, making this solution a promising approach to enhancing public safety.

This research aims to close the divide between traditional visual surveillance systems and advanced audio-based crime detection, offering a robust and efficient solution for enhancing public safety in real time. The integration of intelligent algorithms and real-time alert mechanisms establishes this system as a pioneering approach to crime prevention and emergency response.

2. EXISTING SYSTEM

Currently, most crime prevention systems rely on visual surveillance, such as CCTV cameras, which provide video footage for monitoring and analysis. While effective in certain scenarios, these systems have significant limitations. Visual surveillance fails in poor lighting conditions, blind spots, or areas where camera installation is impractical. Moreover, such systems often require human intervention to monitor footage, making them less effective in real-time emergency scenarios.

Audio-based detection systems, on the other hand, are still in their infancy. Although some systems can detect generic sounds, they often struggle to differentiate between distress sounds like screams and background noise, leading to high false-positive rates. Furthermore, existing solutions rarely incorporate sophisticated machine learning algorithms for accurate classification, limiting their ability to adapt to dynamic environments.

A strong need exists for a reliable system that not only detects screams with high precision but also evaluates the urgency of the situation and ensures timely communication with law enforcement agencies. This gap in existing systems forms the foundation for this research.

3. PROBLEM STATEMENT

The inability of existing surveillance systems to detect and respond to audio-based distress signals, such as human screams, in real-time has left a critical gap in public safety measures. Visual systems, while effective in certain conditions, fail in low-visibility scenarios or unmonitored areas. Furthermore, traditional audio detection systems lack the precision to distinguish distress sounds from background noise, resulting in delayed or missed responses.

This research aims to address these limitations by developing an intelligent, real-time acoustic monitoring system capable of detecting human screams, assessing the level of risk, and generating timely alerts. The proposed system should integrate machine learning techniques to improve accuracy, minimize false positives, and offer adaptable solutions for various environments.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net p-ISSN:2395-0072

4. ARCHITECTURE

The architecture of the proposed system is designed to provide efficient sound detection, classification, and alert generation. It consists of three main components:

1. Data Acquisition and Pre-processing: Audio signals are gathered from the environment and processed using the Librosa library to extract MFCC features. These features capture the unique characteristics of the sounds, enabling accurate classification.

2. Machine Learning Models: The system uses two classifiers: SVM for primary scream detection and MLP for secondary validation and refinement. This layered approach enhances the system's overall accuracy.

3. Alert Mechanism: Depending on the classification results, the system evaluates the level of risk. High-risk alerts are triggered when both classifiers detect a scream, while medium-risk alerts are triggered if only one classifier detects a scream. Alerts are sent as SMS messages using AWS SNS to the nearest law enforcement agencies.
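The pre-processing component can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the paper specifies only that Librosa extracts MFCCs and that features are stored in a CSV file. The number of coefficients (13), the sample rate (22,050 Hz), the averaging over frames, and the names `extract_mfcc_vector` and `write_feature_rows` are all assumptions made for the example.

```python
import csv
from typing import Iterable, List, Sequence, Tuple

N_MFCC = 13          # assumed number of coefficients; the paper does not state it
SAMPLE_RATE = 22050  # assumed resampling rate (Librosa's default)


def extract_mfcc_vector(path: str) -> List[float]:
    """Load one audio clip and summarise its MFCCs as a fixed-length vector."""
    # Imported lazily so the CSV helper below works without Librosa installed.
    import librosa

    signal, sr = librosa.load(path, sr=SAMPLE_RATE, mono=True)
    mfcc = librosa.feature.mfcc(y=signal, sr=sr, n_mfcc=N_MFCC)  # shape: (N_MFCC, frames)
    # Averaging over frames is one simple way to get a fixed-length vector (assumption).
    return [float(value) for value in mfcc.mean(axis=1)]


def write_feature_rows(rows: Iterable[Tuple[Sequence[float], int]], out) -> int:
    """Write (features, label) pairs as CSV rows; label 1 = scream, 0 = other sound."""
    writer = csv.writer(out)
    writer.writerow([f"mfcc_{i}" for i in range(N_MFCC)] + ["label"])
    count = 0
    for features, label in rows:
        if len(features) != N_MFCC:
            raise ValueError(f"expected {N_MFCC} features, got {len(features)}")
        writer.writerow(list(features) + [label])
        count += 1
    return count
```

In use, each clip in the dataset would be passed through `extract_mfcc_vector` and the resulting vectors written with `write_feature_rows` to an open text file.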

4.1.1 DESIGN

The system design emphasizes simplicity, accuracy, and real-time functionality.

User Interface: Developed using the Kivy framework, the UI provides a clear and interactive dashboard for monitoring sound classifications and alert statuses.

Classifier Integration: SVM and MLP models work together to ensure accurate scream detection by cross-validating results from different algorithms.

Decision Logic: A decision-making algorithm assesses the risk level and initiates suitable actions based on the identified audio patterns.

Explanation of Design

Feature Extraction: MFCCs are extracted from the collected audio signals, capturing the spectral and temporal properties of the sounds. These features are saved in a structured format for model training.

Model Training: The Support Vector Machine (SVM) model is trained on binary classes (screams and non-screams), while the MLP model is trained for multi-class classification to further refine detection accuracy. Both models are saved using TensorFlow for future use.

Alert Logic: The system analyses the outputs of both models to assess the risk level. High-risk alerts are triggered if both models detect a scream, while medium-risk alerts are triggered if only one model detects it.
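The alert logic described above reduces to a small decision rule over the two classifier outputs. The sketch below is illustrative only: the `RiskLevel` enum, the `assess_risk` function, and the explicit `NONE` level for the case where neither model detects a scream are assumptions introduced for the example.

```python
from enum import Enum


class RiskLevel(Enum):
    NONE = "none"      # assumed label for the no-alert case
    MEDIUM = "medium"
    HIGH = "high"


def assess_risk(svm_detects_scream: bool, mlp_detects_scream: bool) -> RiskLevel:
    """Map the two classifier votes to a risk level."""
    votes = int(svm_detects_scream) + int(mlp_detects_scream)
    if votes == 2:
        return RiskLevel.HIGH    # both models agree on a scream
    if votes == 1:
        return RiskLevel.MEDIUM  # only one model detects a scream
    return RiskLevel.NONE        # no alert is raised
```

Treating the two models as independent votes keeps the rule symmetric: a medium-risk alert is raised regardless of which model produced the single detection.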

BLOCK DIAGRAM

Fig-1: Block diagram of Real-time Distress Signal Recognition: Acoustic Monitoring for Crime Control

4.1.6 WORKING

The system operates by capturing real-time audio signals through a microphone and processing them to detect distress sounds, such as human screams. The steps involved in the working process are as follows:

1. Audio Capture: The system continuously listens to environmental sounds through an integrated microphone.

2. Feature Extraction: The Librosa library is used for extracting Mel Frequency Cepstral Coefficients (MFCCs), which serve as the features for input to machine learning models.

3. Model Prediction:

o The Support Vector Classifier (SVC) model classifies sounds as screams or non-screams.

o The Multilayer Perceptron (MLP) model further analyses the audio signals for better accuracy.

4. Risk Assessment: Driven by the predictions from both models, the system determines whether the situation is high-risk or medium-risk.

o High Risk: Both models detect a scream.

o Medium Risk: Only one model detects a scream.

5. Alert Generation: An appropriate SMS alert is generated and sent to the nearest police station using AWS SNS, enabling timely intervention.

6. User Interaction: The system's status and classifications are displayed on the Kivy-based user interface for easy monitoring.
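Continuous listening implies that the incoming audio stream is cut into fixed-length segments before feature extraction. The paper does not describe how this is done; the sketch below shows one common approach, overlapping sliding windows, with microphone capture omitted. The function name `sliding_windows` and the `window` and `hop` parameters are assumptions.

```python
from collections import deque
from typing import Iterable, Iterator, List


def sliding_windows(samples: Iterable[float], window: int, hop: int) -> Iterator[List[float]]:
    """Yield fixed-length windows from a sample stream, advancing `hop` samples each time."""
    if window <= 0 or hop <= 0:
        raise ValueError("window and hop must be positive")
    buffer = deque(maxlen=window)  # keeps only the most recent `window` samples
    since_last = hop               # so the first full window is emitted immediately
    for sample in samples:
        buffer.append(sample)
        since_last += 1
        if len(buffer) == window and since_last >= hop:
            yield list(buffer)
            since_last = 0
```

For example, at an assumed sample rate of 22,050 Hz, `window=22050` and `hop=11025` would give one-second segments with 50% overlap, each of which would then go through MFCC extraction and both classifiers.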

5. IMPLEMENTATION

UI Development: The user interface is implemented using the Kivy framework, ensuring cross-platform compatibility and ease of interaction.

Dataset Preparation: The dataset includes 2000 positive samples of human screams and 3000 negative samples of other sounds, collected from various online sources.

Feature Extraction: MFCCs are extracted from the audio dataset using the Librosa library and stored in a CSV file for training purposes.

Model Training:

The SVM model is trained to classify sounds as screams or non-screams.

The MLP model is trained to refine detection and improve classification accuracy.

System Integration: The trained models are incorporated into the application using TensorFlow, enabling real-time prediction.

Alert Mechanism: AWS SNS is configured to send SMS alerts to the nearest authorities based on the detected risk level.
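The SMS step can be sketched with the AWS SDK for Python (boto3), whose SNS client exposes a `publish` call that accepts a `PhoneNumber` and a `Message`. The paper does not show its SNS configuration, so the message wording, the function names `build_alert_message` and `send_sms_alert`, and the placeholder phone number are assumptions.

```python
from typing import Any, Dict


def build_alert_message(risk_level: str) -> str:
    """Compose a short SMS body; the wording is illustrative only."""
    if risk_level not in ("high", "medium"):
        raise ValueError("risk_level must be 'high' or 'medium'")
    return f"{risk_level.upper()} RISK ALERT: possible human scream detected."


def send_sms_alert(sns_client: Any, phone_number: str, risk_level: str) -> Dict[str, Any]:
    """Publish the alert as a direct SMS through an SNS client.

    `sns_client` is expected to behave like `boto3.client("sns")`;
    `phone_number` must be in E.164 format, e.g. "+15555550100" (placeholder).
    """
    message = build_alert_message(risk_level)
    return sns_client.publish(PhoneNumber=phone_number, Message=message)
```

In deployment, `sns_client` would be created with `boto3.client("sns")`, which requires AWS credentials and a region to be configured. Passing the client in as an argument also allows the function to be exercised with a stub during testing.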

6. RESULT

The proposed system was tested on a diverse set of audio samples to assess its accuracy and reliability.

1. Model Performance:

The SVM model achieved a classification accuracy of approximately 92%, effectively distinguishing screams from non-screams.

The MLP model enhanced overall accuracy to 95% by refining predictions.

2. Risk Assessment: The system successfully categorized scenarios into high-risk and medium-risk situations, with minimal false positives.

3. Real-Time Alert: The SMS alert mechanism demonstrated rapid communication, with messages delivered within seconds of detection.

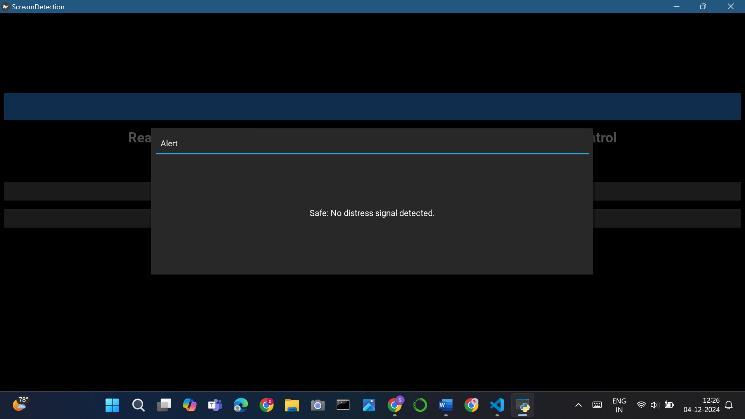

4. User Experience: The Kivy-based UI provided a seamless and interactive experience, ensuring ease of operation.
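Accuracy and false-positive figures of the kind reported under Model Performance and Risk Assessment are typically derived from the counts of correct and incorrect predictions on a held-out test set. The paper does not give its evaluation procedure, so the sketch below only shows the standard definitions; the function name `binary_metrics` is an assumption, and no values from the paper are reproduced.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute accuracy and false-positive rate for labels 1 = scream, 0 = non-scream."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # screams correctly detected
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # non-screams correctly rejected
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    negatives = tn + fp
    return {
        "accuracy": (tp + tn) / len(pairs),
        "false_positive_rate": fp / negatives if negatives else 0.0,
    }
```

Because the dataset holds more negative than positive samples, reporting the false-positive rate alongside accuracy gives a clearer picture of how often non-scream sounds would trigger an alert.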

Fig -6.2: Selecting negative audio clip

Fig -6.1: User Interface

Fig -2: Working Interface

7. CONCLUSIONS

This research demonstrates the potential of leveraging audio-based systems for real-time crime prevention. By integrating advanced sound processing techniques, machine learning models, and automated alert mechanisms, the proposed system effectively addresses the limitations of traditional surveillance methods. The system provides accurate detection of human screams, reliable risk assessment, and timely communication with law enforcement agencies, making it a valuable tool for improving public safety. The user-friendly interface and scalable architecture further contribute to its practical implementation in diverse environments.

8. FUTURE WORK

Noise Filtering: Implementing advanced noise cancellation techniques to improve performance in highly noisy environments.

Multilingual Support: Expanding the system to detect distress sounds across different languages and cultural contexts.

Expanded Dataset: Including more diverse audio samples to improve the robustness and generalizability of the machine learning models.

Edge Deployment: Deploying the system on edge devices for better scalability and reduced latency in real-time operations.

Integration with IoT: Incorporating Internet of Things (IoT) devices to create a distributed network of acoustic sensors for broader coverage.

Advanced Alerts: Adding location-based alerts and integrating with emergency services for automated dispatch in critical situations.

Fig -6.3: Detecting High Risk Alert

Fig -6.4: Alert SMS

BIOGRAPHIES

Chetna L Shapur

6th SEM, Dept. of CSE-AIML, AMC Engineering College

Sirisha R

6th SEM, Dept. of CSE-AIML, AMC Engineering College

Dhanalakshmi S

6th SEM, Dept. of CSE-AIML, AMC Engineering College

Swathi Shrikanth Achanur, Assistant Professor, Dept. of CSE-AIML, AMC Engineering College