International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 02 | Feb 2025 www.irjet.net p-ISSN: 2395-0072


Divya Rai1, Arifa Khan2

1 Master of Technology, Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India
2 Assistant Professor, Department of Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

Abstract - Object detection in sequential data such as video frames or time-series images poses specific challenges because consecutive frames are interdependent over time. Convolutional Neural Networks (CNNs) extract spatial features very effectively, but they struggle to process the temporal information needed to detect objects reliably in sequential data. We introduce a hybrid system that combines spatial feature extraction through CNNs with the temporal modeling capabilities of Long Short-Term Memory (LSTM) networks. The combined model joins CNN spatial detection with LSTM temporal modeling to capture scene information across consecutive video frames.

Our evaluation on standard benchmark datasets shows higher object detection precision than standalone CNN approaches for both spatial pattern processing and time-series image analysis. The experimental findings indicate that the CNN-LSTM architecture delivers better results for spatial detection as well as temporal object identification. The approach offers a flexible object detection system that benefits autonomous vehicles, surveillance, and medical imaging, all of which need precise analysis of sequential data. This work contributes to the growing body of research on hybrid deep learning models by presenting an effective way to improve object detection in sequential data.

Key Words: Object Detection, Deep Learning, Convolutional Neural Networks (CNNs), Long Short-Term Memory (LSTM), Sequential Data Analysis

1.INTRODUCTION

1.1.Background and Motivation

Object detection is an essential operation in many computer vision applications, including surveillance systems, autonomous vehicles, and human-computer interaction. In surveillance, efficient monitoring and threat identification rely heavily on accurate object detection. Autonomous vehicles must detect pedestrians, other vehicles, and obstacles in order to navigate safely. In human-computer interaction, object detection supports gesture recognition, augmented reality features, and interactive digital interfaces.

Although traditional object detection techniques have improved notably, they have difficulty processing sequential data. Existing detection algorithms usually operate on spatial data rather than temporal video data. As a result, they are not sufficiently accurate in dynamic environments, because they fail to consider sequential patterns and contextual information. Traditional models run into particular difficulty with fast-moving objects and with objects that become hidden from view.

1.2.Research Problem

Object detection today relies mainly on spatial analysis through Convolutional Neural Networks to extract visual features. Static image detection methods succeed through spatial analysis, yet they cannot recognize the temporal relations that exist in sequential data such as video streams and time-series images. The rich contextual information in sequences of frames remains beyond the reach of these models, which results in insufficient performance on tasks that need temporal analysis. Sequential object detection therefore needs a solution that combines spatial analysis with temporal integration to improve detection robustness and accuracy.

1.3.Objectives

The primary objectives of this research are:

To develop an advanced object detection model that merges deep learning methods with Long Short-Term Memory (LSTM) networks.

To improve object detection accuracy by applying spatio-temporal analysis to sequential data applications.

To evaluate the proposed model on standard benchmark datasets and compare its performance with existing state-of-the-art methods.

1.4.Research Contributions

This research makes the following key contributions:

1.4.1.Integration of Convolutional Neural Networks (CNNs) with LSTMs

CNNs compute spatial image features, while LSTMs encode dependencies across the sequence, so that the model can interpret changing environmental situations.

1.4.2.Incorporation of Attention Mechanisms

The model includes built-in attention mechanisms that help identify critical sequences and salient temporal patterns, thereby improving the model's precision in challenging situations.

1.4.3.Comparison with State-of-the-Art Methods

The experiments show that the proposed framework outperforms existing approaches in accuracy, robustness, and computational speed for sequential data inference.

2.LITERATURE REVIEW

2.1. Overview of Object Detection

Object detection has been an active field for decades and has seen numerous breakthroughs during its development. Earlier methods detected objects using manually constructed feature sets, based on edge detection with Canny edge detectors and corner detection with Harris corner detectors, combined with classifiers such as SVMs or Haar cascades. These conventional techniques were successful enough on certain tasks, but they showed considerable weaknesses when dealing with flexible objects and with objects positioned in complicated environments.

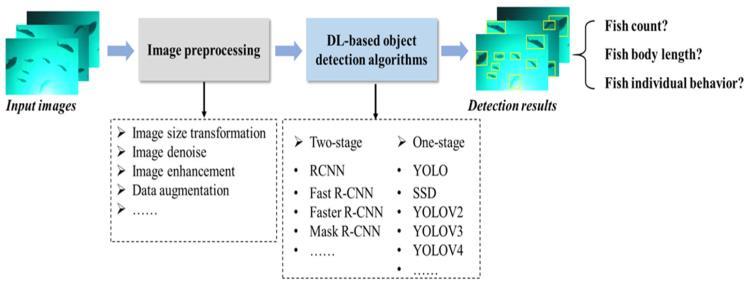

Deep Learning (DL) has been beneficial here, as Convolutional Neural Networks (CNNs) automatically extract object-specific features. Modern methods use deep learning to extract multiple levels of features directly from the input data, which yields robust results. Owing to their speed and accuracy, YOLO, Faster R-CNN (Region-Based Convolutional Neural Networks), and SSD (Single Shot MultiBox Detector) now lead the object detection domain. This represents a fundamental transformation in the field, as these frameworks benefit from the strong multi-class, multi-scale object detection abilities of DL.

Deep Learning is now the main technology for advanced object detection. The ability of CNNs to extract spatial characteristics enables the identification of patterns such as shapes, textures, and edges. Precise object detection and classification build on this image-based spatial information.

Prominent object detection frameworks illustrate the strengths of CNNs:

YOLO (You Only Look Once): YOLO is a one-stage detector that divides the image into a grid to provide fast, real-time detection results.

Faster R-CNN: This framework is a two-stage process in which a Region Proposal Network (RPN) proposes object regions, and a second stage classifies them and refines the bounding boxes. The framework is quite accurate, but it requires considerable computation power.

SSD (Single Shot MultiBox Detector): This method combines the single-shot speed of YOLO with precision closer to that of Faster R-CNN, handling objects at different spatial scales at real-time speeds.

These frameworks prove useful in static image analysis applications. However, their design neglects the temporal relationships that appear in dynamic scenarios.

Long Short-Term Memory (LSTM) networks are a special kind of Recurrent Neural Network (RNN) designed specifically to handle sequential data. The memory cells and gating mechanisms in LSTMs mitigate the vanishing-gradient problem experienced by standard RNNs, allowing them to detect long-term patterns.
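To make the memory cell and gating mechanism concrete, the following is a minimal sketch of one step of a scalar LSTM cell in plain Python. It uses the standard LSTM update equations; the function names, the `params` layout, and the scalar (one-dimensional) setting are assumptions chosen for illustration and are not taken from the paper's implementation.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell.

    params maps each gate name to a tuple (w_x, w_h, b); this layout
    is hypothetical and only serves the illustration.
    """
    def pre_activation(gate):
        w_x, w_h, b = params[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("forget"))       # how much old memory to keep
    i = sigmoid(pre_activation("input"))        # how much new content to write
    o = sigmoid(pre_activation("output"))       # how much memory to expose
    g = math.tanh(pre_activation("candidate"))  # proposed new content

    c = f * c_prev + i * g   # memory cell update
    h = o * math.tanh(c)     # hidden state passed to the next step
    return h, c


def run_sequence(xs, params):
    """Feed a sequence through the cell, starting from zero state."""
    h, c = 0.0, 0.0
    states = []
    for x in xs:
        h, c = lstm_step(x, h, c, params)
        states.append((h, c))
    return states
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state is carried forward almost unchanged, which is the property that lets gradients survive over many time steps.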

In this context, LSTMs are used to analyze temporal sequences in video data for object detection. By processing frames in sequence, LSTMs identify patterns of movement that conventional static detection systems commonly overlook. Previous research has investigated the ability of LSTMs to detect temporal patterns in action sequence recognition, future state prediction, and object tracking across frames.

Existing research presents predominantly standalone LSTM designs or elementary CNN+LSTM configurations, overlooking the potential of more advanced hybrid CNN-LSTM implementations for comprehensive spatial-temporal analysis.

While significant progress has been made in object detection and sequential data analysis, several gaps remain:

2.4.1.Limited Exploration of CNN-LSTM Integration

Existing research has rarely explored in depth how advanced CNN architectures can be combined with LSTM networks to integrate spatial and temporal information effectively. Such an approach would provide a broadly applicable method for detecting objects across many real-world scenarios.

2.4.2.Challenges in Real-Time Performance and Accuracy

Combining CNNs and LSTMs increases processing complexity, which makes it difficult to achieve real-time performance while maintaining accuracy. This trade-off between computation and accuracy must be resolved to support practical systems such as autonomous vehicles and surveillance.

2.4.3.Underutilization of Attention Mechanisms

While the effect of attention mechanisms in natural language processing and computer vision is well established, their viability in sequential object detection deserves further exploration. Integrating attention mechanisms to pinpoint temporal patterns of interest can enhance the detection accuracy of the model.

To address these research gaps, this work attempts to develop a novel CNN-LSTM architecture with attention mechanisms to further improve efficiency and performance in sequential object detection systems.

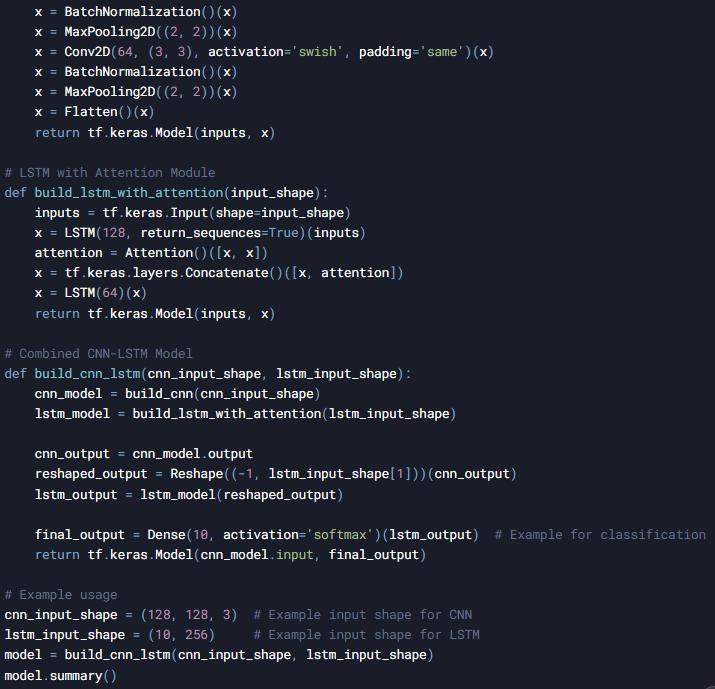

3.1.Proposed Framework

This work proposes a detection framework based on Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks that learns both spatial and temporal dependencies. The CNN component extracts spatial features from each image, and the LSTM network then analyzes the sequence of these features to recognize temporal dependencies.

3.1.1.Detailed

Within the CNN module, convolutional layers with batch normalization and max-pooling layers perform spatial feature extraction, followed by refinement steps. Established network designs such as ResNet and MobileNet serve as the backbone.

The LSTM module uses stacked LSTM layers to determine the temporal relationships between sequential elements such as video frames.

An attention mechanism is added to the model so that it can focus on crucial parts of the sequence and on particular temporal patterns.

3.1.2.Swish Activation Function

During training, the Swish activation function, f(x) = x · sigmoid(x), improves gradient flow. Because Swish is smooth and non-monotonic, it allows better representation learning than standard monotonic activation functions such as ReLU.
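The short sketch below evaluates Swish and its derivative in plain Python and places ReLU next to it for comparison. The function names are illustrative choices, not identifiers from the paper's code.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def swish(x: float) -> float:
    """Swish activation: f(x) = x * sigmoid(x)."""
    return x * sigmoid(x)


def swish_grad(x: float) -> float:
    """Derivative of Swish: sigmoid(x) + x * sigmoid(x) * (1 - sigmoid(x))."""
    s = sigmoid(x)
    return s + x * s * (1.0 - s)


def relu(x: float) -> float:
    """ReLU activation, shown only for comparison."""
    return max(0.0, x)


if __name__ == "__main__":
    for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
        print(f"x={x:+.1f}  swish={swish(x):+.4f}  relu={relu(x):+.4f}")
```

The non-monotonic behaviour shows up for negative inputs: `swish(-5.0)` is closer to zero than `swish(-1.0)`, so the function dips and then rises again, while ReLU is flat at zero over the whole negative range and passes no gradient there.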

3.1.3.Sequence

The attention mechanism assigns weights to the time steps of a sequence so that the model can better discern critical temporal stages. By weighting the most relevant frames more heavily, the model achieves better detection accuracy in situations where objects are hidden or move quickly.
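One common way to obtain such per-frame weights is softmax attention over the time steps. The sketch below assumes dot-product scoring against a query vector; the paper does not specify its scoring function, so `temporal_attention` and its `query` parameter are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw scores to weights that sum to 1 (shifted for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(frame_features, query):
    """Weight per-frame feature vectors by their relevance to a query.

    frame_features: one feature vector (list of floats) per time step.
    query: vector of the same length; dot-product scoring is an assumption.
    Returns the attention weights and the weighted sum of the features.
    """
    scores = [sum(f * q for f, q in zip(feat, query)) for feat in frame_features]
    weights = softmax(scores)
    dim = len(query)
    context = [
        sum(w * feat[d] for w, feat in zip(weights, frame_features))
        for d in range(dim)
    ]
    return weights, context
```

Frames whose features align with the query receive larger weights, so they dominate the context vector that is passed on to the detection stage.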


3.2.Dataset

3.2.1.Benchmark Datasets

The proposed framework is evaluated on widely used datasets, such as:

COCO (Common Objects in Context): This dataset provides a large number of annotated real-world images for object detection and segmentation.

ImageNet VID: This dataset features annotated video sequences suitable for sequence analysis.

Custom Datasets: Customized datasets allow the model to be tested in scenarios specific to the research context.

The preprocessing operations consist of resizing images, normalizing pixel values, and converting labels to the required format.

Data augmentation methods, including random cropping, rotation, flipping, and brightness adjustment, improve model generalization and dataset diversity.
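As a small illustration of two of these steps, the sketch below normalizes 8-bit pixel values and applies a horizontal flip that also updates the bounding boxes. Images are represented as nested lists and boxes as `(x_min, y_min, x_max, y_max)` tuples in pixel-edge coordinates; both representations are assumptions made for this example, and the random choice of whether to flip is left out for clarity.

```python
def normalize_pixels(image):
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return [[p / 255.0 for p in row] for row in image]


def horizontal_flip(image, boxes):
    """Mirror an image left to right and adjust its bounding boxes.

    image: list of rows, each a list of pixel values.
    boxes: list of (x_min, y_min, x_max, y_max) with x in [0, width].
    """
    width = len(image[0])
    flipped = [list(reversed(row)) for row in image]
    flipped_boxes = [
        (width - x_max, y_min, width - x_min, y_max)
        for (x_min, y_min, x_max, y_max) in boxes
    ]
    return flipped, flipped_boxes
```

Any geometric augmentation has to transform the labels together with the pixels; otherwise the boxes no longer match the objects they annotate.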

3.3.1.Pipeline

Frames enter the framework through the CNN module for spatial feature extraction. The features generated by the CNN are passed frame by frame through the LSTM module to extract temporal relationships. The attention mechanism then refines the outputs before they reach the final detection layer.
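The order of operations can be sketched as a function that chains four pluggable stages. The sketch only illustrates the data flow: the stand-in stages below (mean intensity instead of a CNN, a moving average instead of an LSTM, uniform averaging instead of learned attention, a threshold instead of a detection layer) are deliberately trivial placeholders and are not the models used in this work.

```python
def run_pipeline(frames, cnn, recurrent_step, attention, detection_head,
                 initial_state=0.0):
    """Chain the four stages: spatial features, temporal model, attention, head."""
    # 1. Spatial feature extraction, one frame at a time.
    features = [cnn(frame) for frame in frames]

    # 2. Temporal modelling: carry a state from frame to frame.
    state = initial_state
    hidden_states = []
    for feature in features:
        state = recurrent_step(feature, state)
        hidden_states.append(state)

    # 3. Attention combines the per-frame states into one summary.
    context = attention(hidden_states)

    # 4. The detection head produces the final output.
    return detection_head(context)


# Trivial stand-ins, for illustration only.
def mean_intensity(frame):
    """Placeholder for the CNN: average pixel value of a frame."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)


def moving_average_step(feature, state, alpha=0.5):
    """Placeholder for the LSTM: exponential moving average of features."""
    return alpha * feature + (1.0 - alpha) * state


def uniform_attention(hidden_states):
    """Placeholder for attention: equal weight for every time step."""
    return sum(hidden_states) / len(hidden_states)


def threshold_head(context, threshold=50.0):
    """Placeholder for the detection layer: a simple threshold decision."""
    return context >= threshold
```

In a real implementation each placeholder would be replaced by a trained module, but the calling order in `run_pipeline` would stay the same.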

3.3.2.Loss Functions

A combination of loss functions is used:

Classification Loss (e.g., Cross-Entropy Loss): For accurate object classification.

Localization Loss (e.g., Smooth L1 Loss): For precise bounding box regression.
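The two loss terms can be written out for a single detection as follows. This is a minimal sketch using the standard definitions of cross-entropy and Smooth L1; summing the two terms with a `box_weight` factor is an assumption, since the paper does not state how the losses are balanced.

```python
import math


def cross_entropy(logits, target_index):
    """Cross-entropy of softmax(logits) against one target class."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target_index]


def smooth_l1(pred, target, beta=1.0):
    """Smooth L1 loss averaged over coordinates.

    Quadratic for errors below beta, linear above it, which limits
    the influence of large outlier errors. beta must be positive.
    """
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total / len(pred)


def detection_loss(logits, target_index, pred_box, target_box, box_weight=1.0):
    """Classification loss plus weighted localization loss (weighting assumed)."""
    return (cross_entropy(logits, target_index)
            + box_weight * smooth_l1(pred_box, target_box))
```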

3.3.3.Optimization

Optimizers such as Adam or SGD are used to minimize the loss function.

Learning rate schedulers adjust the learning rate dynamically to improve convergence.
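As an illustration of how a scheduler interacts with the optimizer, the sketch below applies a step-decay schedule to plain gradient descent on a one-dimensional quadratic. Step decay is only one possible scheduler and is an assumption here; the paper does not name the schedule it uses, and the quadratic objective is a toy stand-in for the detection loss.

```python
def step_decay(base_lr, epoch, step_size=10, gamma=0.5):
    """Multiply the learning rate by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


def minimize_quadratic(target=3.0, base_lr=0.1, epochs=50):
    """Gradient descent on f(w) = (w - target)^2 with a decaying rate."""
    w = 0.0
    for epoch in range(epochs):
        lr = step_decay(base_lr, epoch)
        grad = 2.0 * (w - target)  # derivative of (w - target)^2
        w -= lr * grad
    return w
```

Larger steps early on move the parameter quickly towards the minimum, and the smaller steps later reduce the risk of overshooting.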

3.3.4.Hyperparameter Tuning

A combination of grid search and Bayesian optimization identifies the best settings for the learning rate, the batch size, and the number of LSTM layers.
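The grid search part can be sketched with the standard library alone. In the example below, `toy_score` is a hypothetical placeholder for training the model with a given configuration and returning its validation score; the candidate values in the usage note are likewise illustrative. Bayesian optimization is not shown.

```python
from itertools import product


def grid_search(search_space, score_fn):
    """Evaluate every combination in search_space and keep the best one.

    search_space: dict mapping a hyperparameter name to candidate values.
    score_fn: callable taking a config dict and returning a score
              (higher is better).
    """
    names = list(search_space)
    best_config, best_score = None, float("-inf")
    for values in product(*(search_space[name] for name in names)):
        config = dict(zip(names, values))
        score = score_fn(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_score(config):
    """Hypothetical stand-in for a validation score; peaks at one config."""
    return (-abs(config["learning_rate"] - 1e-3) * 100
            - abs(config["lstm_layers"] - 2) * 0.1
            - abs(config["batch_size"] - 16) * 0.001)
```

A call such as `grid_search({"learning_rate": [1e-2, 1e-3, 1e-4], "batch_size": [8, 16, 32], "lstm_layers": [1, 2, 3]}, toy_score)` evaluates all 27 combinations. The cost grows multiplicatively with each added hyperparameter, which is one reason to pair grid search with a more sample-efficient method.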


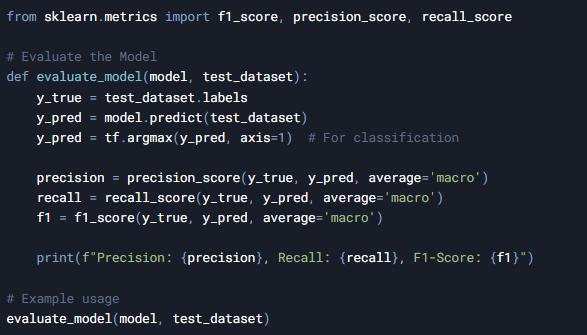

The following metrics are used to evaluate the performance of the proposed framework:

Mean Average Precision (mAP): Measures detection accuracy as the average precision across all object categories at given Intersection over Union (IoU) thresholds.

F1-Score: Combines precision and recall to summarize the model's overall effectiveness.

Inference Time: Measures the model's computational efficiency as the processing time needed for a single frame or a sequence.

Temporal Consistency: Evaluates the model's ability to detect objects steadily across consecutive video frames.
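Several of these metrics build on the same primitives, sketched below in plain Python: IoU between two boxes, the F1-score from detection counts, and one possible temporal consistency measure. The paper does not define temporal consistency formally, so the definition used here (the fraction of consecutive frame pairs in which the object is detected in both frames with sufficient overlap) is an assumption, as are the function names. A full mAP computation is not shown.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def temporal_consistency(track, iou_threshold=0.5):
    """Fraction of consecutive frame pairs with a stable detection.

    track: one box per frame for a single object, or None when the
    object was not detected in that frame. This definition is an
    assumption made for illustration.
    """
    pairs = list(zip(track, track[1:]))
    if not pairs:
        raise ValueError("track must contain at least two frames")
    stable = sum(
        1 for prev, cur in pairs
        if prev is not None and cur is not None
        and iou(prev, cur) >= iou_threshold
    )
    return stable / len(pairs)
```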

3.5.Experimental Setup

3.5.1.Hardware Configuration

To achieve efficient training and inference, high-performance GPUs such as the NVIDIA A100 or RTX 3090 are used. Processing tasks also need fast CPUs and sufficient memory.

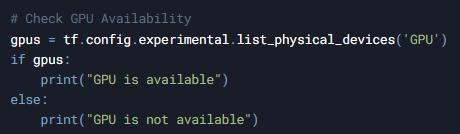

3.5.2.Software Configuration

Libraries such as OpenCV and NumPy support data preprocessing and augmentation tasks. Pretrained models for transfer learning are taken from the PyTorch Model Zoo and TensorFlow Hub. Together, these components support the proposed robust and efficient object detection system for sequential data, which combines selection techniques and multiple activation functions with strong architectural constructs.

Figure-7: Software Configuration.

4.DISCUSSION

4.1.Interpretation

The proposed model combines a CNN and an LSTM, which provides better results than standard methods, in particular in sequential scenarios such as video object identification. The framework integrates CNNs to extract spatial features and LSTMs to model temporal dependencies in order to improve both the precision and the stability of the results.

4.1.1.Why

Spatial Feature Extraction: The CNN component identifies objects within individual frames by analyzing edges, shapes, and textures. The CNN design maintains successful detection across complex visual conditions.

Temporal Dependency Modeling: The LSTM component processes sequences to identify how objects change over time. This makes the model suitable for scenarios with occluded or rapidly moving objects and moving backgrounds.

Attention Mechanism: The attention mechanism filters the frame sequence, which improves model performance by reducing detection noise without harming detection consistency.

4.1.2.Role

For video object detection, it is important that LSTMs can capture long-range dependencies in sequential information. Because the LSTMs keep track of past frames, the model can effectively predict trajectories and handle temporal variation. For example, the LSTM can maintain the position of moving cars across a video sequence even when these vehicles are hidden behind occluding objects. With this model, detection accuracy improves significantly compared to CNN-based systems working alone.


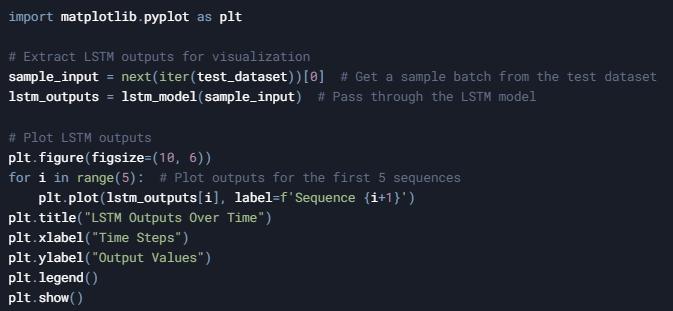

Code to Visualize LSTM Outputs.

This work proposes a hybrid CNN-LSTM framework that combines spatial feature extraction with temporal dependency modeling for object detection in sequential data. The proposed model employs CNNs to detect objects within single frames and LSTMs to model temporal relationships between frames, outperforming either method alone. Key contributions include incorporating an attention mechanism to highlight important frames and showing improved performance on benchmark datasets. The hybrid approach works particularly well in dynamic settings such as video analysis, where understanding object movement and context is crucial. The model holds promise, but computational complexity and dataset limitations remain challenges. Future work may explore transformer-based architectures and lightweight designs to improve efficiency and scalability. This research underlines the need to combine spatial and temporal models when analyzing sequential data, which opens the door to improvements in applications such as autonomous driving, surveillance, and healthcare.
