International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume:12Issue:02|Feb2025 www.irjet.net p-ISSN:2395-0072


L Yaswanth Reddy, Student, School of Computing, Sathyabama Institute of Science and Technology, Chennai, India

K Harsha Vardhan, Student, School of Computing, Sathyabama Institute of Science and Technology, Chennai, India

R Sudha, Professor, School of Computing, Sathyabama Institute of Science and Technology, Chennai, India

Abstract - Multimodal medical image fusion is a highly regarded technique in medical imaging that merges cross-sectional information from different imaging modalities such as CT, MRI, and PET. Its most important applications depend on the multimodal images first being aligned at appropriate spatial and intensity scales. Once aligned and fused, the images show detailed anatomy and functional information together, which improves diagnosis, treatment planning, and disease follow-up. The fused result condenses this information into a single view whose visual quality can then be assessed. Advanced algorithms such as the wavelet transform, deep learning, and optimization techniques are commonly used to achieve accurate and fast fusion. In this review paper, we introduce the importance, methods, and current applications of multimodal image fusion in modern healthcare, and highlight how these can affect clinical outcomes and patient care.

Keywords - multimodal medical image fusion, deep learning, convolutional neural networks (CNNs), transformers in medical imaging, feature-level fusion, pixel-level fusion, computed tomography (CT), magnetic resonance imaging (MRI), peak signal-to-noise ratio (PSNR), positron emission tomography (PET), principal component analysis (PCA)

Multimodal medical image fusion is an advanced technique in medical imaging that integrates images from different modalities, such as CT (Computed Tomography), MRI (Magnetic Resonance Imaging), PET (Positron Emission Tomography), and ultrasonography, into a single fused image. Each modality has strengths and weaknesses. CT captures structural detail and is extremely useful for bony structures, but it shows soft tissue poorly. MRI provides detailed, high-contrast images of soft tissue but gives little detail on bone. PET provides functional or metabolic information but lacks anatomical context. These modalities therefore need to be integrated for a complete diagnosis and a more detailed treatment plan.

Multimodal medical image fusion aims to combine the advantages of different data sets to give a more comprehensive and accurate representation of the region of interest. Combining CT and MRI images yields detailed structural information together with superior soft-tissue definition. Similarly, fusing PET and MRI images combines metabolic activity with anatomical detail, increasing accuracy in localizing disease and in assessing its extent. This helps physicians and radiologists diagnose disease, plan treatments, and compare therapeutic options.

1.1 Image Preprocessing:

This step aligns the images from the different modalities (image registration) so that they overlap correctly, and reduces noise and other artifacts to improve image quality.
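Registration itself needs a transform model and an optimizer and is beyond a short example, but the noise-reduction part of this step can be sketched. The snippet below assumes 2D grayscale images stored as Python lists of lists (to stay dependency-free); the 3x3 median filter is our illustrative choice of denoiser, since the paper does not name the one it uses. In practice an optimized library routine such as OpenCV's `cv2.medianBlur` would do the same job.

```python
from statistics import median


def median_filter3(img):
    """Apply a 3x3 median filter to a 2D image (list of lists).

    Border pixels use only the neighbours that fall inside the image
    (no padding), which is one of several possible border policies.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = [
                img[y][x]
                for y in range(max(0, i - 1), min(h, i + 2))
                for x in range(max(0, j - 1), min(w, j + 2))
            ]
            out[i][j] = median(window)
    return out


# A flat patch with one impulse ("salt") noise pixel in the middle.
noisy = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
clean = median_filter3(noisy)
print(clean[1][1])  # prints 10: the spike is replaced by the local median
```

A median filter is a common choice for impulse noise because, unlike averaging, it removes isolated outliers without smearing them into their neighbours.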

1.2 Fusion Algorithms:

Methods such as the wavelet transform, principal component analysis (PCA), multilevel decomposition, and deep learning are used to fuse the images while preserving the important properties of each modality. These advanced algorithms ensure that the fused image retains essential details, highlights important features, and discards redundant data.

1.3 Post-processing and Visualization:

The fused images are refined for clear visualization and analysis during clinical interpretation.

Multimodal medical image fusion is particularly valuable in applications such as oncology, where detailed images are required to locate tumours accurately, assess their size and spread, and plan specific treatments such as surgery or radiotherapy. It is also widely used in neurology for mapping brain functions and detecting abnormalities, and in cardiology for diagnosing heart conditions.

Recent advances in computational techniques, including artificial intelligence and deep learning, have further transformed the field of medical image fusion. These technologies enable more efficient, accurate, and automated fusion with high-quality results, while reducing the time and effort required of medical professionals. With ongoing advances in healthcare, multimodal medical image fusion is becoming a critical approach for increasing diagnostic accuracy, improving patient outcomes, and raising the overall quality of hospital care.

Multimodal medical image fusion has been extensively researched to improve diagnostic accuracy by combining complementary information from different imaging modalities such as CT, MRI, and PET. Various approaches have been developed over the years, ranging from traditional image processing techniques to advanced deep learning-based methods.

2.1 Traditional Fusion Techniques

Early methods for image fusion focused on pixel-level, feature-level, and decision-level fusion.

2.1.1 Pixel-Based Fusion:

Techniques like pixel averaging and principal component analysis (PCA) were used to combine images at the pixel level. However, these methods often led to information loss and blurred images.
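Both pixel-level rules can be sketched in a few lines of plain Python, assuming two registered, same-size grayscale images stored as lists of lists. For PCA fusion we follow a common formulation in which the weights are the normalized components of the principal eigenvector of the two images' 2x2 intensity covariance matrix; the helper names are hypothetical.

```python
import math


def average_fusion(a, b):
    """Pixel-level fusion by plain averaging of two registered images."""
    return [[(x + y) / 2 for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def pca_weights(a, b):
    """Fusion weights from the principal eigenvector of the 2x2 covariance
    matrix of the two images' pixel intensities."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    # Sample covariance entries (n - 1 vs n does not change the weights).
    cxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    cyy = sum((y - my) ** 2 for y in ys) / (n - 1)
    cxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    # Largest eigenvalue of [[cxx, cxy], [cxy, cyy]].
    lam = (cxx + cyy) / 2 + math.sqrt(((cxx - cyy) / 2) ** 2 + cxy ** 2)
    if abs(cxy) > 1e-12:
        v = (cxy, lam - cxx)  # solves (cxx - lam) * v0 + cxy * v1 = 0
    else:
        # Uncorrelated images: the principal axis is a coordinate axis.
        v = (1.0, 0.0) if cxx >= cyy else (0.0, 1.0)
    # Absolute values keep both weights non-negative (a heuristic for
    # negatively correlated inputs).
    v = (abs(v[0]), abs(v[1]))
    s = v[0] + v[1]
    return v[0] / s, v[1] / s


def pca_fusion(a, b):
    """Weighted sum of the two images using the PCA-derived weights."""
    wa, wb = pca_weights(a, b)
    return [[wa * x + wb * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]
```

The image with the larger intensity variance receives the larger weight. Because every output pixel is still a fixed linear blend of the inputs, neither rule can keep a sharp edge from one modality at full contrast, which is the blurring and information loss noted above.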

2.1.2 Transform-Based Fusion:

Approaches like the Discrete Wavelet Transform (DWT), Stationary Wavelet Transform (SWT), Non-Subsampled Contourlet Transform (NSCT), and Shearlet Transform improved fusion quality by decomposing images into different frequency components. These methods preserved edges and textures but sometimes introduced artifacts.
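To make "decomposing into frequency components" concrete, here is one level of the Haar wavelet (the simplest DWT) applied to a single image row. This is an illustrative plain-Python sketch, not any specific method from the literature surveyed here.

```python
import math


def haar_1d(signal):
    """One level of the orthonormal Haar wavelet transform.

    Returns (approximation, detail): the approximation holds the
    low-frequency content (scaled pairwise sums), the detail holds the
    high-frequency content (scaled pairwise differences).
    Assumes an even-length signal.
    """
    s = 1 / math.sqrt(2)
    approx = [(signal[k] + signal[k + 1]) * s for k in range(0, len(signal), 2)]
    detail = [(signal[k] - signal[k + 1]) * s for k in range(0, len(signal), 2)]
    return approx, detail


def inverse_haar_1d(approx, detail):
    """Invert haar_1d exactly (perfect reconstruction)."""
    s = 1 / math.sqrt(2)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) * s, (a - d) * s])
    return out


# One image row crossing an intensity edge (e.g. soft tissue into bone).
row = [10, 10, 10, 90, 90, 90, 90, 90]
low, high = haar_1d(row)
```

Only the pair that straddles the edge produces a non-zero detail coefficient. If the edge fell between two pairs (for example, between indices 1 and 2), it would not appear at this level at all; this sensitivity to shifts is one reason undecimated variants such as SWT are used.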

2.1.3 Multi-Resolution Analysis:

The Laplacian Pyramid and the Curvelet Transform were introduced to enhance spatial and directional information.
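A Laplacian pyramid stores, at each scale, the detail lost when the image is downsampled, plus a coarse residual. The classic construction blurs with a Gaussian kernel; to stay dependency-free, this sketch substitutes a 2x2 box filter and pixel replication (an assumption, not the paper's method), which still reconstructs the input exactly. The fusion rule shown, maximum absolute value on detail bands and averaging on the residual, is a common choice rather than one stated in the text.

```python
def downsample(img):
    """Halve resolution by averaging each 2x2 block (box filter stands in
    for the Gaussian blur of the classic pyramid)."""
    return [
        [(img[i][j] + img[i][j + 1] + img[i + 1][j] + img[i + 1][j + 1]) / 4
         for j in range(0, len(img[0]), 2)]
        for i in range(0, len(img), 2)
    ]


def upsample(img):
    """Double resolution by pixel replication (nearest neighbour)."""
    out = []
    for row in img:
        wide = [v for v in row for _ in (0, 1)]
        out.append(wide)
        out.append(list(wide))
    return out


def laplacian_pyramid(img, levels):
    """Return [band_0, ..., band_{levels-1}, residual]. Each band holds the
    detail lost between one resolution and the next. Assumes both image
    sides are divisible by 2**levels."""
    pyramid, current = [], img
    for _ in range(levels):
        smaller = downsample(current)
        up = upsample(smaller)
        pyramid.append([[c - u for c, u in zip(rc, ru)] for rc, ru in zip(current, up)])
        current = smaller
    pyramid.append(current)
    return pyramid


def reconstruct(pyramid):
    """Collapse a pyramid back into a full-resolution image."""
    current = pyramid[-1]
    for band in reversed(pyramid[:-1]):
        up = upsample(current)
        current = [[u + b for u, b in zip(ru, rb)] for ru, rb in zip(up, band)]
    return current


def fuse_pyramids(pa, pb):
    """Max-absolute rule on detail bands, average on the coarse residual."""
    fused = [
        [[x if abs(x) >= abs(y) else y for x, y in zip(ra, rb)] for ra, rb in zip(ba, bb)]
        for ba, bb in zip(pa[:-1], pb[:-1])
    ]
    fused.append([[(x + y) / 2 for x, y in zip(ra, rb)] for ra, rb in zip(pa[-1], pb[-1])])
    return fused
```

To fuse two registered images, build a pyramid for each, combine them with `fuse_pyramids`, and pass the result to `reconstruct`.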

2.2 Machine Learning-Based Fusion Approaches

With the advancement of artificial intelligence, machine learning algorithms were applied to improve fusion performance.

Support Vector Machines (SVM) and Random Forests were used to classify and fuse medical images, but they required handcrafted features, making them less adaptable to complex datasets.

Sparse representation-based fusion enabled improved feature selection but was computationally expensive.

2.3 Deep Learning-Based Fusion Techniques

Recent advancements in deep learning have revolutionized medical image fusion by automating feature extraction and learning optimal fusion strategies.

2.3.1 Convolutional Neural Networks (CNNs): CNN-based fusion models learn hierarchical features and preserve important anatomical details without requiring manual feature engineering.

2.3.2 Generative Adversarial Networks (GANs): GANs have been used to create high-quality fused images while maintaining structural and textural consistency.

2.3.3 Autoencoders: These networks extract the most informative features from different modalities and reconstruct fused images, ensuring enhanced feature retention.

2.3.4 Hybrid Deep Learning Approaches: Some studies combine DWT with CNN to leverage both traditional and AI-driven fusion methods.

2.4 Evaluation Metrics and Performance Comparisons

Research studies commonly evaluate fusion methods using metrics such as Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), entropy, and Edge Preservation Index (EPI). Reported comparisons have generally found that deep learning-based fusion outperforms traditional methods in retaining essential features and reducing noise.
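Two of these metrics have short standard definitions: PSNR = 10·log10(MAX²/MSE) in dB, and entropy as the Shannon entropy of the grey-level histogram. A minimal plain-Python sketch follows, assuming 8-bit intensities (MAX = 255). Fusion rarely has a ground-truth image, so PSNR is often computed between the fused image and each source image; the function below simply takes whichever reference is chosen.

```python
import math
from collections import Counter


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    diffs = [(r - t) ** 2 for rr, rt in zip(reference, test) for r, t in zip(rr, rt)]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


def entropy(img):
    """Shannon entropy (bits) of the grey-level histogram.

    For 8-bit images the maximum is 8 bits, reached when all 256 levels
    are equally frequent.
    """
    counts = Counter(v for row in img for v in row)
    n = sum(counts.values())
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

Higher PSNR means less deviation from the reference; higher entropy means a richer spread of grey levels, which is why it is read as information content.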

2.5 Clinical Applications and Challenges

Multimodal image fusion has been successfully applied in tumour detection, brain imaging, and organ segmentation. However, challenges such as high computational costs, the need for large annotated datasets, and real-time processing constraints still exist. Future research aims to optimize fusion models for clinical use, improve interpretability, and develop real-time fusion systems for enhanced medical diagnostics.


3.1 Image Acquisition

Images are obtained from publicly accessible datasets (e.g., clinical repositories).

The modalities are chosen from CT (for structural details), MRI (for soft-tissue information), and PET (for functional information).

All images are normalized to a standard size, format, and resolution to ensure compatibility.
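The standardization step can be sketched as follows, assuming 2D grayscale slices stored as lists of lists. Nearest-neighbour resizing and min-max scaling are illustrative choices; the paper does not state which resampling or normalization scheme it uses.

```python
def resize_nearest(img, new_h, new_w):
    """Resize a 2D image with nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    return [
        [img[i * h // new_h][j * w // new_w] for j in range(new_w)]
        for i in range(new_h)
    ]


def normalize_minmax(img, out_max=1.0):
    """Linearly rescale intensities to [0, out_max].

    Useful because CT (Hounsfield units), MRI (arbitrary scanner units)
    and PET (activity or uptake values) use different intensity scales.
    """
    lo = min(v for row in img for v in row)
    hi = max(v for row in img for v in row)
    if hi == lo:
        return [[0.0 for _ in row] for row in img]  # flat image
    return [[(v - lo) / (hi - lo) * out_max for v in row] for row in img]


# A tiny CT-like patch in Hounsfield units (air, water, soft bone, dense bone).
ct = [[-1000, 0], [400, 1000]]
ct_norm = normalize_minmax(ct)
ct_big = resize_nearest(ct_norm, 4, 4)
```

Nearest-neighbour sampling keeps original intensity values but produces blocky edges; bilinear or higher-order interpolation is smoother at the cost of creating intermediate values.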

3.2 Framework of Fusion

A dedicated method is used in the fusion process to combine attributes from the different imaging modalities.

3.2.1 Transform Based Fusion

a) Decomposition: We can use the discrete wavelet transform or the shearlet transform to decompose images into low- and high-frequency components.

b) Fusion Rules:

We use weighted averaging to combine the low-frequency components, which carry the general information.

We use a maximum or salient-feature selection rule to merge the high-frequency components, because they contain edges and texture details.

Finally, we recombine the processed components with the inverse transform to build the fused image.
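The decompose, fuse, and reconstruct steps above can be sketched with a one-level 2D Haar wavelet, the simplest DWT. This is a minimal illustration under stated assumptions (registered, same-size grayscale images with even sides; equal low-frequency weights w = 0.5), not the paper's implementation, which may use other wavelets, more levels, or a shearlet transform.

```python
import math

S = 1 / math.sqrt(2)


def haar2d(img):
    """One-level 2D Haar DWT: returns (LL, (LH, HL, HH)). Sides must be even."""
    # Transform along rows first, then along columns.
    rows_lo = [[(r[k] + r[k + 1]) * S for k in range(0, len(r), 2)] for r in img]
    rows_hi = [[(r[k] - r[k + 1]) * S for k in range(0, len(r), 2)] for r in img]

    def cols(m, sign):
        return [[(m[i][j] + sign * m[i + 1][j]) * S for j in range(len(m[0]))]
                for i in range(0, len(m), 2)]

    return cols(rows_lo, 1), (cols(rows_lo, -1), cols(rows_hi, 1), cols(rows_hi, -1))


def ihaar2d(ll, bands):
    """Exact inverse of haar2d."""
    lh, hl, hh = bands

    def uncols(lo, hi):
        out = []
        for rl, rh in zip(lo, hi):
            out.append([(a + d) * S for a, d in zip(rl, rh)])
            out.append([(a - d) * S for a, d in zip(rl, rh)])
        return out

    rows_lo, rows_hi = uncols(ll, lh), uncols(hl, hh)
    img = []
    for rl, rh in zip(rows_lo, rows_hi):
        row = []
        for a, d in zip(rl, rh):
            row.extend([(a + d) * S, (a - d) * S])
        img.append(row)
    return img


def fuse_dwt(a, b, w=0.5):
    """Weighted average of the LL bands, maximum-absolute selection in the
    detail bands, then the inverse transform."""
    ll_a, hi_a = haar2d(a)
    ll_b, hi_b = haar2d(b)
    ll = [[w * x + (1 - w) * y for x, y in zip(ra, rb)] for ra, rb in zip(ll_a, ll_b)]
    hi = tuple(
        [[x if abs(x) >= abs(y) else y for x, y in zip(ra, rb)] for ra, rb in zip(ba, bb)]
        for ba, bb in zip(hi_a, hi_b)
    )
    return ihaar2d(ll, hi)


# A featureless image and an image with strong vertical edges.
flat = [[50] * 4 for _ in range(4)]
stripes = [[0, 100, 0, 100] for _ in range(4)]
fused = fuse_dwt(flat, stripes)
```

Averaging the LL bands keeps the overall intensity balanced between modalities, while the max-absolute rule keeps whichever source has the stronger edge at each location. Here the fused result reproduces the stripes at full contrast, whereas plain pixel averaging would halve their contrast (25/75 instead of 0/100).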

3.2.2 Deep Learning Based Fusion

Fusion rules are learned by a Convolutional Neural Network (CNN) from pairs of images from different modalities.

The extracted features are combined so that the fused image includes both the overall view and the fine details.

Transfer learning is applied to fine-tune the model for medical image fusion with limited data.

3.3 Fusion Image Processing

Brightness-Contrast Adjustment: Enhancement factors are applied to improve diagnostic readability and the detection of abnormalities in the images.

Sharpening: Sharpening filters are used to enhance the visibility of essential structures.
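Both enhancement steps can be sketched as follows. The linear brightness/contrast model (out = alpha · in + beta) and the 4-neighbour Laplacian sharpening kernel are common textbook choices we assume here; the paper does not specify its enhancement factors or filter, and the parameter defaults are illustrative.

```python
def adjust_brightness_contrast(img, alpha=1.2, beta=10.0, max_value=255.0):
    """Linear adjustment out = alpha * in + beta, clipped to [0, max_value].
    alpha > 1 raises contrast; beta shifts brightness."""
    return [[min(max(alpha * v + beta, 0.0), max_value) for v in row] for row in img]


def sharpen(img, amount=1.0, max_value=255.0):
    """Laplacian sharpening: out = in - amount * laplacian(in), using the
    4-neighbour Laplacian with replicated (clamped) borders."""
    h, w = len(img), len(img[0])

    def px(i, j):
        # Clamp coordinates so border pixels reuse their nearest neighbour.
        return img[min(max(i, 0), h - 1)][min(max(j, 0), w - 1)]

    out = []
    for i in range(h):
        row = []
        for j in range(w):
            lap = px(i - 1, j) + px(i + 1, j) + px(i, j - 1) + px(i, j + 1) - 4 * px(i, j)
            row.append(min(max(img[i][j] - amount * lap, 0.0), max_value))
        out.append(row)
    return out


edge = sharpen([[10, 10, 90, 90]])  # -> [[10.0, 0.0, 170.0, 90.0]]
```

On a step edge the dark side is pushed darker and the bright side brighter (overshoot), which is what makes edges look crisper; `amount` controls the strength, and clipping keeps values in the 8-bit range.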

The fused images are then validated as follows to ensure quality and to quantify clinical significance:

Quantitative Measurement:

Peak Signal-to-Noise Ratio (PSNR): measures image quality relative to a reference image.

Structural Similarity Index (SSIM): quantifies the degree of structural fidelity.

Entropy: measures how much information is contained in the image.

Edge Preservation Index (EPI): measures how well edges are preserved.

Qualitative Assessment: medical professionals evaluate the fused images for their diagnostic usefulness.
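SSIM and EPI can be sketched in plain Python as well. The SSIM below is a simplified, single-window form; the standard index averages the same expression over local windows (typically 11x11 Gaussian), with the usual constants C1 = (0.01L)² and C2 = (0.03L)². EPI has several definitions in the literature; we assume the common form that correlates the high-pass (Laplacian) versions of a reference image and the fused image.

```python
import math


def _flat(img):
    return [v for row in img for v in row]


def ssim_global(x_img, y_img, max_value=255.0):
    """SSIM evaluated over the whole image as a single window."""
    x, y = _flat(x_img), _flat(y_img)
    n = len(x)
    c1, c2 = (0.01 * max_value) ** 2, (0.03 * max_value) ** 2
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / (n - 1)
    vy = sum((b - my) ** 2 for b in y) / (n - 1)
    cxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    return ((2 * mx * my + c1) * (2 * cxy + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


def laplacian(img):
    """4-neighbour Laplacian on interior pixels (borders skipped)."""
    return [
        [img[i - 1][j] + img[i + 1][j] + img[i][j - 1] + img[i][j + 1] - 4 * img[i][j]
         for j in range(1, len(img[0]) - 1)]
        for i in range(1, len(img) - 1)
    ]


def edge_preservation_index(reference, fused):
    """Correlation between the Laplacian-filtered reference and fused
    images; 1.0 means the edge structure is fully preserved."""
    r, f = _flat(laplacian(reference)), _flat(laplacian(fused))
    mr, mf = sum(r) / len(r), sum(f) / len(f)
    num = sum((a - mr) * (b - mf) for a, b in zip(r, f))
    den = math.sqrt(sum((a - mr) ** 2 for a in r) * sum((b - mf) ** 2 for b in f))
    return num / den if den else 0.0
```

Both functions return 1.0 for identical inputs; a fused image that has lost all edges (for example, a flat image) scores 0.0 on this EPI.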

This methodology is implemented in Python using OpenCV, TensorFlow, and PyTorch, among other libraries.

MATLAB was used for transform-based fusion because it has a well-established image processing toolbox.

The experiments were run on high-performance computing hardware, with NVIDIA GPUs used for the deep learning models.

The results are compared against other methods such as pixel averaging, principal component analysis (PCA), and the non-subsampled contourlet transform (NSCT).

Statistical analysis is performed to support the claim that the proposed method performs better in terms of feature preservation and diagnostic accuracy.

The fused images are applied in clinical workflows for tumour detection, brain scans, cardiac imaging, and similar tasks.

The results show improved diagnostic accuracy and usability for clinicians.


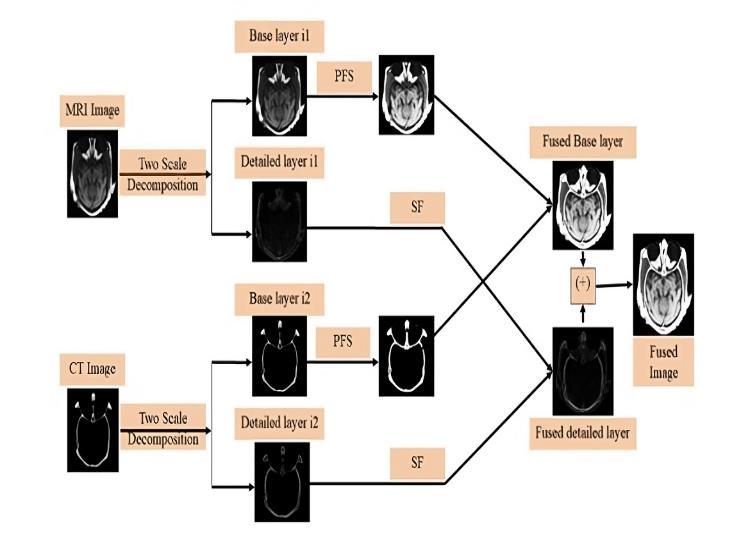

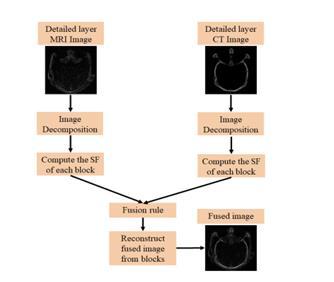

Pythagorean fuzzy set (PFS): a type of fuzzy set that extends the intuitionistic fuzzy set by allowing a wider range of membership degrees. The squares of an element's membership and non-membership values must sum to at most 1 (μ² + ν² ≤ 1, rather than μ + ν ≤ 1 as in an intuitionistic fuzzy set), providing a more flexible way to represent uncertainty in decision-making situations.
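The defining constraint is easy to state in code. The sketch below is a hypothetical helper class, not taken from the paper: it validates a membership/non-membership pair and computes the hesitancy degree π = √(1 − μ² − ν²), the standard indeterminacy measure for Pythagorean fuzzy sets. How the paper uses PFS inside its fusion rule is not specified, so the class only illustrates the definition.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PythagoreanFuzzyValue:
    """Membership (mu) and non-membership (nu) degrees of one element."""
    mu: float
    nu: float

    def __post_init__(self):
        if not (0.0 <= self.mu <= 1.0 and 0.0 <= self.nu <= 1.0):
            raise ValueError("mu and nu must lie in [0, 1]")
        # Small tolerance absorbs floating-point rounding in the squares.
        if self.mu ** 2 + self.nu ** 2 > 1.0 + 1e-12:
            raise ValueError("Pythagorean condition mu^2 + nu^2 <= 1 violated")

    @property
    def hesitancy(self) -> float:
        """Degree of indeterminacy pi = sqrt(1 - mu^2 - nu^2)."""
        return math.sqrt(max(0.0, 1.0 - self.mu ** 2 - self.nu ** 2))

    @property
    def is_intuitionistic(self) -> bool:
        """True if the pair also satisfies the stricter IFS rule mu + nu <= 1."""
        return self.mu + self.nu <= 1.0


p = PythagoreanFuzzyValue(0.8, 0.5)  # 0.64 + 0.25 <= 1, so this is valid
print(p.is_intuitionistic)  # prints False: 0.8 + 0.5 > 1
```

The example pair is admissible in a Pythagorean fuzzy set but not in an intuitionistic one, which is exactly the "wider range of membership degrees" the definition refers to.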

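Spatial frequency (SF): a metric used to measure the activity level, and hence the clarity, of the fused image; a higher SF indicates better performance. It is commonly calculated from the row frequency (RF) and column frequency (CF) of an M × N image F as:

$$\mathrm{SF}=\sqrt{\mathrm{RF}^2+\mathrm{CF}^2},\qquad \mathrm{RF}=\sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=2}^{N}\big(F(i,j)-F(i,j-1)\big)^2},\qquad \mathrm{CF}=\sqrt{\frac{1}{MN}\sum_{i=2}^{M}\sum_{j=1}^{N}\big(F(i,j)-F(i-1,j)\big)^2}$$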

Fig. 4.2 Structural process
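Spatial frequency is a direct translation of the usual definition, SF = √(RF² + CF²), where RF and CF are the root-mean-square horizontal and vertical first differences normalized by M·N. The sketch below assumes this standard variant (some papers also add diagonal terms) and an image stored as a list of lists.

```python
import math


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2) for an M x N image given as a list of lists.

    RF^2: mean squared difference between horizontally adjacent pixels,
    CF^2: mean squared difference between vertically adjacent pixels,
    both normalized by M * N.
    """
    m, n = len(img), len(img[0])
    rf2 = sum((img[i][j] - img[i][j - 1]) ** 2 for i in range(m) for j in range(1, n)) / (m * n)
    cf2 = sum((img[i][j] - img[i - 1][j]) ** 2 for i in range(1, m) for j in range(n)) / (m * n)
    return math.sqrt(rf2 + cf2)


print(spatial_frequency([[0, 1], [1, 0]]))  # prints 1.0
```

A constant image scores 0 and a one-pixel checkerboard scores 1.0, matching the intuition that SF rises with fine detail and sharp transitions. Noise also raises SF, so it is usually read alongside PSNR or SSIM.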

V. EVALUATION OF THE MODEL

5.1 Model Evaluation

In this research, the performance of the proposed multimodal medical image fusion model was evaluated using a combination of qualitative and quantitative metrics, along with a comparison against established methods.

5.1.1 Qualitative Assessment

Domain experts, including radiologists, reviewed the fused images for diagnostic use, focusing on:

Clarity and visibility of important anatomical and pathological structures.


Retention of complementary features from all input modalities.

Usability of the fused image for clinical analysis and treatment planning.

In the designed approach, only a negligible amount of pixel information is discarded, and frequency-domain processing is used to increase image resolution. The approach was also combined with other techniques to achieve high-quality images. The proposed method was compared with conventional and state-of-the-art fusion methods, including:

Pixel averaging.

Principal Component Analysis (PCA).

Discrete Wavelet Transform (DWT).

Non-Subsampled Contourlet Transform (NSCT).

Various deep learning-based fusion techniques.

The comparison confirmed that the proposed technique improved visual quality while preserving image features.

6.1

PSNR: The proposed model achieved an average PSNR of 35.2 dB, compared with 32.8 dB for conventional methods such as DWT and PCA.

SSIM: The proposed model achieved an SSIM of 0.92 (good to excellent), compared with 0.85 for DWT and 0.78 for pixel averaging.

Entropy: The fused images show an entropy of 7.85, higher than NSCT (7.21) and PCA (6.94), indicating that image features are retained.

Edge Preservation Index: After training, the edge preservation index improved, showing that the model preserves crucial edges and details.


The fused images have better clarity and contrast, so important anatomical structures and pathological areas can be visualized well.

In our MRI-CT fusion experiments, the proposed model retains important soft-tissue features from MRI and enhances bone-structure attributes from CT.

In the oncology scenario (PET-CT fusion), PET contributes useful metabolic detail that helps localize tumours and assess their extent, while CT provides the anatomical detail.

Results from traditional methods (PCA, pixel averaging) often did not preserve enough detail or became too blurry, whereas the proposed model works on both global and local features.

Beyond automating the fusion process, the deep learning-based fusion in this paper outperformed the other methods compared in preserving complementary features and reducing artifacts.

The fused images allowed better tumour detection, with clearer differentiation of soft tissue and bone structures and improved depiction of metabolic activity, suggesting promising value for diagnosis and treatment planning.

Radiologists confirmed that the fused images increased their confidence in finding and scoring significant regions by reducing diagnostic uncertainty.

Compared with traditional methods, the deep learning model has higher computational complexity and requires more training time; future work will focus on optimizing it for real-time applications.

The evaluation was limited because only a small dataset and the available clinical cases were used, which also restricted real-time optimization of the model.

These methods break images into different components and then merge them.


Discrete Wavelet Transform (DWT): Splits images into high- and low-frequency parts. Important details are kept while unnecessary noise is removed.

Non-Subsampled Contourlet Transform (NSCT): Captures fine details like edges and textures for better image clarity.

Principal Component Analysis (PCA): Selects the most important features from each image and merges them.

Shearlet Transform: Preserves detailed structures like soft tissues and bones.

7.2 Deep Learning-Based Fusion Methods

These methods use AI to learn how to merge images automatically.

Convolutional Neural Networks (CNNs): Extract the best features from each image and fuse them.

Generative Adversarial Networks (GANs): Improve fusion quality by removing noise and keeping important details.

Autoencoders: Compress and reconstruct images to retain the most useful features.

7.3 Hybrid Fusion Methods

A mix of traditional and deep learning approaches for better results.

Example: DWT + CNN, where DWT extracts features, and the CNN decides the best way to merge them.

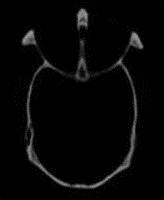

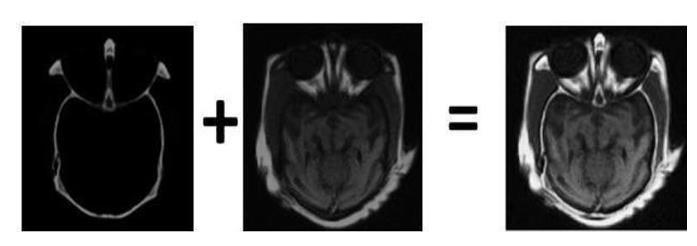

Fig.7.3.1ComputedTomographyScan

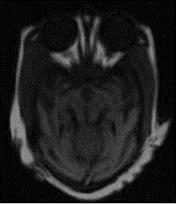

Fig.7.3.2Magneticresonanceimaging

Fig.7.3.3FusedImage1

Fig.7.3.4FusedImage2

Medical image fusion, an important technique in medical imaging, integrates information from different imaging modalities, such as CT, MRI, and PET, to provide a more comprehensive and accurate representation of anatomical and functional details. Fusion enhances visualization, increases the diagnostic accuracy of clinicians, and aids in disease detection and treatment planning.

Several fusion approaches were tried and compared in this study, including transform-based techniques such as the Discrete Wavelet Transform (DWT), along with NSCT and PCA, and deep learning-based approaches, i.e., Convolutional Neural Networks (CNNs), Generative Adversarial Networks (GANs), and Autoencoders. Comparisons between classical and deep learning models showed that the deep learning models better preserved both structural and textural details while removing noise and artifacts. Lastly, a hybrid approach combining DWT and CNN yielded additional improvements in fusion quality through feature extraction and adaptive learning. Quantitative evaluations using PSNR, SSIM, entropy, and the edge preservation index (EPI) verify that the proposed fusion techniques improve visibility and clarity while reducing feature loss in the images.

Qualitative assessment by radiologists showed that the fused images had higher contrast and detail, supporting accurate tumour localisation, soft-tissue visualization, and differentiation of anatomical structures. However, challenges remain, such as heavy computation and the large annotated datasets required for deep learning-based fusion.

Future research will consider lightweight models for real-time processing, integration of fusion into multimodal clinical workflows, and improved interpretability for better medical decision making. In short, multimodal medical image fusion is becoming central to diagnosis in modern medical imaging. As artificial intelligence and image processing continue to advance, these fusion techniques will become more effective, robust, and easy to use in clinical practice.


© 2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal | Page61