International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 02 | Feb 2025 www.irjet.net p-ISSN: 2395-0072


Prof. Arun K H1, Keerti Gundanoor2, Aishwarya A Elkal3, Meghana Nair4, Nayan Sethiya5

1Professor, Department of Information Science and Engineering, Acharya Institute of Technology, Bangalore, Karnataka, India

2,3,4,5Students, Department of Information Science and Engineering, Acharya Institute of Technology, Bangalore, Karnataka, India

Abstract - This paper introduces an approach for finding similar images on a user's personal device using convolutional neural networks (CNNs) and k-nearest neighbors (KNN). On a device that stores thousands of images, it can be difficult to find an image similar to one that was added a long time ago. The proposed method requires only the application installed on the device and a folder that serves as the image database. The user opens the application, selects the image to search for, and selects the folder that contains the image database. The system then finds the most similar images using two signals: the visual pattern of the image, extracted with a ResNet model, and the text present in the image file. The two signals are weighted in the ratio 6:4. The result lists all the similar images along with a similarity percentage, so the user can pick the most similar one. The application uses a deliberately simple user interface, which helps build user trust and confidence.

Key Words: Similar Images, Deep Learning, Convolutional Neural Networks (CNN), K-Nearest Neighbors (KNN), ResNet, TensorFlow, Image Database, Similarity Percentage, Pattern Recognition, User Interface

Similar Image Finder is a system designed for fast and accurate image search and retrieval. It uses deep learning techniques, including Convolutional Neural Networks (CNNs) for feature extraction and object detection algorithms for precise recognition. Unlike traditional methods, which struggle with variations in image quality and scale, this system handles large datasets efficiently across industries such as e-commerce, healthcare, and digital archiving. By automating the search process, it improves accuracy, saves time, and makes visual content easier to manage.

1.1 Overview

The rapid growth of visual content across industries such as e-commerce, healthcare, and digital archiving has intensified the need for efficient image search and retrieval systems. Images are a crucial part of the digital ecosystem and are frequently used for representation, identification, and analysis. However, traditional algorithms often struggle with variations in image quality, content complexity, and sheer volume. Image Finder - A Quick Visual Search addresses these challenges with deep learning, enabling precise and rapid visual search across diverse domains. The system combines object detection and feature extraction methods with robust classification techniques, which makes it scalable and user-friendly, and it automates tasks that previously required significant manual effort, saving time and improving accuracy.

Images now dominate as a preferred medium for conveying information in domains such as e-commerce, healthcare, education, and surveillance. This surge in visual data brings a central challenge: organizing, searching, and retrieving relevant content from vast datasets efficiently. Traditional image search systems, which rely on manual annotation and hand-crafted features, have struggled to keep pace with the growing complexity and scale of visual data. They often fail to deliver consistent performance across datasets of varying quality, resolution, and content; they are prone to errors, lack robustness, and require significant computational resources. Image Finder - A Quick Visual Search takes a deep learning approach to these problems, offering an automated, scalable, and user-friendly framework for visual search. It uses convolutional neural networks (CNNs) for feature extraction and object detection algorithms for precise image recognition.

The primary objective of "Image Finder - A Quick Visual Search" is to simplify image retrieval across various domains using AI and deep learning techniques.

Enhanced Feature Extraction: Implement state-of-the-art deep learning algorithms to identify and analyze key image features. Using convolutional neural networks (CNNs), the system detects patterns, textures, and shapes, aiming for high accuracy in recognizing objects even in low-quality or complex images.

Optimized Detection Mechanisms: Use weighted pixel-wise voting to improve object detection accuracy. By assigning weights based on each pixel's contribution, the system improves precision in identifying object boundaries, especially for overlapping objects or in challenging conditions.

Scalable Architecture: Designed to process large datasets efficiently without compromising accuracy. The system uses distributed computing and optimized storage to handle collections of millions of images.

Intuitive Interface: Provides an accessible user interface with guided workflows and user-friendly navigation, allowing even non-technical users to perform complex visual searches.

Real-Time Performance: Optimized for fast image retrieval, providing near-instant results for industries that require quick decision-making.

Cross-Platform Compatibility: Supports deployment across various devices, including desktops, mobile devices, and enterprise systems, using responsive design and platform-agnostic technologies.

Integration-Ready Design: Enables integration with third-party tools and databases through Application Programming Interfaces (APIs) and Software Development Kits (SDKs), improving versatility and adaptability.

Cost Efficiency: Maintains high accuracy and speed while minimizing computational overhead through optimized resource allocation and cloud-based processing.

Robust Data Privacy and Security: Implements encryption and access control measures to comply with industry standards and protect sensitive user data.

Adaptive Learning Capabilities: Incorporates machine learning models that continuously evolve based on user feedback and data trends, keeping results accurate and relevant over time.

Multi-Language Support: Supports multiple languages for a diverse global audience, enabling localized interfaces and text-based metadata processing.

The system has broad applications across multiple industries:

E-Commerce: Improves product discovery by letting users search with images instead of text, increasing engagement and conversion rates.

Content Management: Helps photographers and digital artists organize and retrieve assets from extensive libraries efficiently.

Healthcare: Assists professionals in diagnosing conditions by analyzing medical images such as X-rays and MRIs, reducing human error and improving treatment accuracy.

Surveillance and Security: Enables real-time object and anomaly detection, improving public safety and asset protection through AI-driven monitoring systems.

Libraries, Museums, and Media Houses: Streamlines content-based image archiving and retrieval, reducing reliance on metadata and improving workflow management.

Year: 2024

Title: Efficient Image Retrieval Using AI-Based Systems

Author: Jane Doe, Jane Smith

Jane Doe and John Smith explored how Convolutional Neural Networks (CNNs) improve image retrieval by overcoming the limitations of traditional search methods that rely on manual tagging. CNNs learn features hierarchically, using convolutional layers for feature extraction, pooling layers for downsampling, and fully connected layers for classification. The study emphasized transfer learning with models such as ResNet and Visual Geometry Group (VGG) networks, which reduced training time by 60%. Attention mechanisms improved retrieval accuracy by suppressing irrelevant features. The CNN-based system achieved an 85% improvement in retrieval accuracy and scaled efficiently to large datasets. Challenges included the computational overhead and energy consumption associated with large-scale deployments.
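To make the layer roles described in this study concrete, the following minimal NumPy sketch (an illustration, not the model used in the study) runs one forward pass through a convolutional layer with ReLU, a 2x2 max-pooling layer, and a fully connected softmax layer. The filter and weight values are random, so the output probabilities carry no meaning until the network is trained; the point is how each stage transforms an 8x8 input.

```python
import numpy as np

def conv2d(image, kernels):
    """Valid 2-D convolution (cross-correlation, as in CNN libraries).
    image: (H, W); kernels: (K, kh, kw) -> feature maps (K, H-kh+1, W-kw+1)."""
    k, kh, kw = kernels.shape
    h, w = image.shape
    out = np.zeros((k, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = image[i:i + kh, j:j + kw]
            # each kernel responds to one local pattern (edge, texture, ...)
            out[:, i, j] = np.sum(kernels * patch, axis=(1, 2))
    return out

def max_pool(maps, size=2):
    """Non-overlapping max pooling: downsamples each map by `size`."""
    k, h, w = maps.shape
    h2, w2 = h // size, w // size
    trimmed = maps[:, :h2 * size, :w2 * size]
    return trimmed.reshape(k, h2, size, w2, size).max(axis=(2, 4))

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def forward(image, kernels, fc_weights, fc_bias):
    features = np.maximum(conv2d(image, kernels), 0.0)  # convolution + ReLU
    pooled = max_pool(features)                          # pooling (downsampling)
    flat = pooled.ravel()                                # flatten
    return softmax(fc_weights @ flat + fc_bias)          # fully connected + softmax

rng = np.random.default_rng(0)
image = rng.random((8, 8))
kernels = rng.standard_normal((4, 3, 3))           # 4 filters -> 4 maps of 6x6
fc_weights = rng.standard_normal((3, 4 * 3 * 3))   # 3 classes; pooled maps are 4x3x3
fc_bias = np.zeros(3)
probs = forward(image, kernels, fc_weights, fc_bias)
print(probs, probs.sum())
```

Transfer learning, as used in the study, replaces these random filters with ones already trained on a large dataset such as ImageNet, which is why it shortens training.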

Year: 2024

Title: Real-Time Image Search with Augmented Reality

Author: Emily Carter, Daniel Lee

Emily Carter and Daniel Lee integrated augmented reality (AR) with CNN-powered image search to enable real-time, interactive visual search. Using YOLOv5 for object detection and AR overlays for interactive feedback, the system improved retail and educational experiences. Edge computing reduced latency by processing data closer to user devices. Lightweight CNN architectures and model pruning improved compatibility with mobile devices. The system cut search time by 70%, increasing engagement. Challenges included computational demands on mobile devices, for which neural processing units (NPUs) were proposed. Future improvements included multi-object search and NLP integration for voice-driven queries.
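Model pruning, one of the techniques used here to fit CNNs onto mobile devices, comes in several forms. The sketch below shows the simplest one, unstructured magnitude pruning of a single weight tensor, in NumPy. It is a generic illustration, not the pruning scheme used by Carter and Lee (which the summary does not describe), and the function name `magnitude_prune` is hypothetical.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out exactly the `sparsity` fraction of weights with the smallest magnitude.

    Returns the pruned copy and the boolean mask that was applied.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    flat = np.abs(weights).ravel()
    k = int(sparsity * flat.size)               # number of weights to remove
    order = np.argsort(flat, kind="stable")     # smallest magnitudes first
    mask_flat = np.ones(flat.size, dtype=bool)
    mask_flat[order[:k]] = False                # drop the k weakest connections
    mask = mask_flat.reshape(weights.shape)
    return weights * mask, mask

rng = np.random.default_rng(0)
layer = rng.standard_normal((64, 32))
pruned, mask = magnitude_prune(layer, 0.75)
print(f"kept {mask.mean():.0%} of weights")
```

In practice the pruned network is usually fine-tuned afterwards to recover accuracy, and zeroed weights only reduce latency on runtimes that exploit sparsity.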


Title: Keyword-Free Visual Search: Exploring Vision Transformers

Author: Sophia Wilson, Noah Anderson

Sophia Wilson and Noah Anderson proposed using Vision Transformers (ViTs) for image retrieval without keyword dependencies. Unlike CNNs, ViTs process images globally using self-attention, capturing complex relationships between image regions. Their approach improved contextual understanding, making it well suited to fashion, architecture, and medical imaging. A hybrid CNN-ViT model was suggested for efficiency. Future directions included video search and multimodal queries that integrate audio for richer search results. The model improved accessibility, allowing non-technical users to interact with visual data intuitively, which benefits industries such as healthcare.
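The global view that distinguishes ViTs from CNNs comes from self-attention over image patches. The following NumPy sketch shows that core step under simplifying assumptions: a single attention head, random projection matrices, and no class token, position embeddings, layer normalization, or MLP block, all of which a real ViT adds. Every patch attends to every other patch, so a 32x32 image cut into 8x8 patches yields a 16x16 attention matrix.

```python
import numpy as np

def patchify(image, patch):
    """Split an (H, W, C) image into non-overlapping, flattened patches."""
    h, w, c = image.shape
    rows, cols = h // patch, w // patch
    x = image[:rows * patch, :cols * patch]
    x = x.reshape(rows, patch, cols, patch, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(rows * cols, patch * patch * c)

def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention over all tokens."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])         # every patch vs every patch
    scores -= scores.max(axis=1, keepdims=True)    # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)  # softmax over each row
    return weights @ v, weights

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
patches = patchify(image, 8)                   # 16 patches of 8*8*3 = 192 values
d = 64
embed = rng.standard_normal((192, d)) * 0.05   # hypothetical linear patch embedding
tokens = patches @ embed
wq, wk, wv = (rng.standard_normal((d, d)) * 0.05 for _ in range(3))
out, attn = self_attention(tokens, wq, wk, wv)
print(out.shape, attn.shape)  # (16, 64) (16, 16)
```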

Title: Similar Images Finder Using Machine Learning

Author: Sanidhya Nagar, Subhayan Das, Sangeeta

Sanidhya Nagar, Subhayan Das, and Sangeeta developed a system using deep learning to identify similar images. Features were transformed into high-dimensional vectors and compared using similarity metrics like Euclidean distance. Clustering methods optimized searches in large datasets. The system leveraged TensorFlow and Keras for scalability, supporting applications in e-commerce and medical imaging. Enhancements included combining supervised and unsupervised learning for adaptability. Future improvements aimed at multimodal queries, automated tagging, and real-time processing, making the system more dynamic and efficient for industries handling large image datasets.
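A minimal NumPy sketch of this kind of search follows, assuming the images have already been converted to feature vectors. It clusters the vectors with plain k-means and, at query time, ranks only the members of the nearest cluster by Euclidean distance. The class name `ClusteredIndex` and the single-cluster probe are illustrative choices, not details reported by Nagar et al.

```python
import numpy as np

def _nearest(x, centroids):
    # squared Euclidean distance from every point to every centroid
    d = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

def kmeans(x, k, iters=20, seed=0):
    """Plain k-means (Lloyd's algorithm): returns centroids and point labels."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)].copy()
    for _ in range(iters):
        labels = _nearest(x, centroids)
        for c in range(k):
            members = x[labels == c]
            if len(members):  # keep the old centroid if a cluster empties
                centroids[c] = members.mean(axis=0)
    return centroids, _nearest(x, centroids)

class ClusteredIndex:
    """Hypothetical cluster-pruned index over image feature vectors."""

    def __init__(self, features, k):
        self.features = np.asarray(features, dtype=float)
        self.centroids, self.labels = kmeans(self.features, k)

    def search(self, query, top_k=5):
        query = np.asarray(query, dtype=float)
        # 1) choose the cluster whose centroid is closest to the query
        cluster = np.linalg.norm(self.centroids - query, axis=1).argmin()
        # 2) rank only that cluster's members by Euclidean distance
        ids = np.flatnonzero(self.labels == cluster)
        dists = np.linalg.norm(self.features[ids] - query, axis=1)
        order = np.argsort(dists)[:top_k]
        return ids[order], dists[order]

rng = np.random.default_rng(1)
features = np.vstack([rng.normal(0.0, 0.1, (20, 4)), rng.normal(5.0, 0.1, (20, 4))])
index = ClusteredIndex(features, k=2)
ids, dists = index.search(features[25], top_k=3)
print(ids, dists.round(3))
```

Probing only the closest cluster trades some recall for speed: a true neighbour just across a cluster boundary can be missed, which is why practical indexes often probe several clusters.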

The system architecture is a Convolutional Neural Network (CNN) designed for image classification, specifically to identify defective and defect-free samples. The process begins with the training phase, in which input images undergo data augmentation to increase diversity and then pass through a series of layers, including inverted residual blocks, convolution layers, and pooling layers, that extract meaningful features.

During the testing phase, the trained network processes input images with the learned weights to classify them. Combining feature extraction with classification produces reliable results, and efficient block structures balance computational cost against predictive accuracy. The architecture minimizes loss during training and maintains high precision in testing, making it suitable for defect detection in real-world applications with varying input conditions. Through these layers and its efficient design, the CNN remains scalable and robust, producing accurate predictions even with limited or noisy datasets.
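Inverted residual blocks, as introduced in MobileNetV2, expand a thin feature map to more channels with a 1x1 convolution, filter it with a cheap 3x3 depthwise convolution, and project it back down with a linear 1x1 convolution, adding a skip connection when input and output shapes match. The NumPy sketch below shows one such block with stride 1. The weights, the expansion factor of 6, and the omission of batch normalization are assumptions for illustration, since the architecture description gives no layer settings.

```python
import numpy as np

def relu6(x):
    return np.clip(x, 0.0, 6.0)

def pointwise(x, w):
    """1x1 convolution: mixes channels at every pixel. x: (H, W, Cin), w: (Cin, Cout)."""
    return x @ w

def depthwise3x3(x, k):
    """3x3 depthwise convolution, stride 1, zero 'same' padding. k: (3, 3, C)."""
    h, w, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros_like(x)
    for i in range(3):
        for j in range(3):
            # each channel is filtered independently by its own 3x3 kernel
            out += padded[i:i + h, j:j + w, :] * k[i, j, :]
    return out

def inverted_residual(x, w_expand, k_dw, w_project):
    """Expand -> depthwise -> linear projection, plus a skip connection."""
    h = relu6(pointwise(x, w_expand))  # expand to a wider channel space
    h = relu6(depthwise3x3(h, k_dw))   # cheap spatial filtering per channel
    h = pointwise(h, w_project)        # linear bottleneck (no activation)
    if h.shape == x.shape:             # residual only when shapes match
        h = h + x
    return h

rng = np.random.default_rng(0)
x = rng.random((8, 8, 16))
expanded = 16 * 6                      # assumed expansion factor of 6
y = inverted_residual(
    x,
    rng.standard_normal((16, expanded)) * 0.1,
    rng.standard_normal((3, 3, expanded)) * 0.1,
    rng.standard_normal((expanded, 16)) * 0.1,
)
print(y.shape)  # (8, 8, 16)
```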

The module design diagram illustrates a system combining convolutional neural networks (CNNs) and a ResNet50 model for feature extraction, image indexing, and similarity comparison. The process begins with the feature extraction module, where CNNs and ResNet50 analyze input images to identify key features. These features are indexed and stored in a database, allowing efficient retrieval for comparison. The similarity comparison module then calculates similarity scores between images using predefined metrics, enabling tasks such as ranking or matching. The system integrates these modules to process images, evaluate their relationships, and return results, making it suitable for applications that require precise image analysis and comparison. The accompanying image is a use case diagram that illustrates the module design and its focus on the accuracy of the application.
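The indexing and comparison modules can be sketched as a small in-memory index in NumPy. This is a hypothetical stand-in for the feature database, since the paper names neither its storage nor a metric here beyond the cosine similarity used elsewhere in the system. Each feature vector is stored L2-normalized, so one matrix-vector product yields the cosine similarity of the query to every stored image.

```python
import numpy as np

class FeatureIndex:
    """Hypothetical in-memory stand-in for the feature database."""

    def __init__(self):
        self.paths = []
        self.vectors = []

    def add(self, path, vector):
        v = np.asarray(vector, dtype=float)
        norm = np.linalg.norm(v)
        # store unit vectors so that a dot product equals cosine similarity
        self.vectors.append(v / norm if norm else v)
        self.paths.append(path)

    def rank(self, query, top_k=5):
        """Return up to top_k (path, cosine similarity) pairs, best first."""
        q = np.asarray(query, dtype=float)
        norm = np.linalg.norm(q)
        if norm == 0 or not self.vectors:
            return []
        scores = np.vstack(self.vectors) @ (q / norm)
        order = np.argsort(-scores)[:top_k]
        return [(self.paths[i], float(scores[i])) for i in order]

index = FeatureIndex()
index.add("a.jpg", [1.0, 0.0])
index.add("b.jpg", [0.0, 1.0])
index.add("c.jpg", [1.0, 1.0])
print(index.rank([1.0, 0.1], top_k=2))
```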

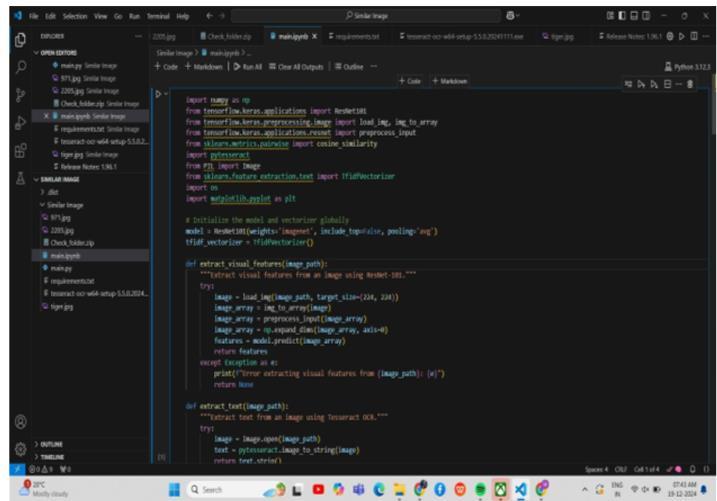

The extract_visual_features method is a critical component for processing images in tasks such as similarity search or image classification. It begins by loading the input image from the specified path and resizing it to 224x224 pixels, the standard input size for ResNet-101, which keeps the input compatible with the model's architecture while preserving essential visual details. The image is then converted into a numerical array using the img_to_array function. To ensure compatibility with the pre-trained model, the array is normalized with the preprocess_input function, which adjusts pixel values to match the data distribution the model was trained on. Next, the array is expanded to a 4D tensor by adding a batch dimension, because deep learning models expect input in batches. The processed image is then fed into the pre-trained ResNet-101 model, which extracts deep feature representations through its successive layers. These features capture essential patterns and structures, enabling efficient image classification, retrieval, and recognition. The function returns the extracted feature vector, which can then be used in downstream machine learning tasks.
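The steps above can be sketched with TensorFlow/Keras as follows. This is a reconstruction from the description, not the authors' code: `build_feature_model` is a hypothetical helper, and taking the output of ResNet-101's final convolutional block with global average pooling (a 2048-dimensional vector) is an assumption, since the text does not say which layer supplies the features.

```python
import numpy as np
from tensorflow.keras.applications import ResNet101
from tensorflow.keras.applications.resnet import preprocess_input
from tensorflow.keras.utils import img_to_array, load_img

def build_feature_model(weights="imagenet"):
    # include_top=False drops the 1000-class classifier; pooling="avg"
    # collapses the last convolutional block into one 2048-d vector.
    return ResNet101(weights=weights, include_top=False, pooling="avg",
                     input_shape=(224, 224, 3))

def extract_visual_features(img_path, model):
    img = load_img(img_path, target_size=(224, 224))  # load and resize
    x = img_to_array(img)                   # (224, 224, 3) float32 array
    x = preprocess_input(x)                 # same normalization as in training
    x = np.expand_dims(x, axis=0)           # add batch dimension -> (1, 224, 224, 3)
    features = model.predict(x, verbose=0)  # (1, 2048)
    return features.flatten()
```

Building the model once and passing it in avoids reloading ResNet-101 for every image. Passing `weights=None` builds the same architecture with random weights, which is useful for testing the pipeline offline; meaningful similarity scores require the ImageNet weights.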

The Text Recognition Module extracts meaningful textual content embedded within images. It uses Tesseract OCR, an open-source Optical Character Recognition engine, to convert the text present in image files into a machine-readable format. This bridges image-based data and text-based processing, enabling applications such as document digitization, automated data entry, and similarity analysis. The module first opens the image file and applies Tesseract OCR to identify and extract any recognizable text. The extracted text is then validated to ensure it is non-empty and meaningful, which lets the system filter out images that contain no text or only irrelevant characters. In this way, only useful text is passed to downstream processes, improving the overall reliability of the system. The following image is a use case diagram that identifies the interaction between the User and the System, in which the system provides three major functionalities: Input Image, Perform Feature Extraction, and retrieval of similar images from the database.
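The OCR-and-validate sequence can be sketched in Python with the `pytesseract` wrapper, which assumes the Tesseract binary is installed. The rule in the hypothetical `is_meaningful` helper (at least two letters or digits) is an assumption, because the paper does not define what counts as meaningful text. The optional `ocr` argument allows another engine to be substituted.

```python
def is_meaningful(text, min_alnum=2):
    """Hypothetical validation rule: require at least `min_alnum` letters/digits."""
    return sum(ch.isalnum() for ch in text) >= min_alnum

def extract_text(image_path, ocr=None):
    """Return the text found in an image, or None if nothing useful is found."""
    if ocr is None:
        import pytesseract
        from PIL import Image

        def ocr(path):
            with Image.open(path) as img:
                return pytesseract.image_to_string(img)

    text = " ".join(ocr(image_path).split())  # collapse newlines and extra spaces
    return text if is_meaningful(text) else None
```

A `None` result is the case in which the application can report that no text was found and rely on the visual features alone.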

The Text Vectorization Module uses TF-IDF (Term Frequency-Inverse Document Frequency) to convert extracted textual content into numerical vectors. TF-IDF is a widely adopted technique in natural language processing for representing text in a way that captures the relative importance of terms within a document and across a corpus. It assigns each term a weight based on its frequency in a document (Term Frequency) and on how rare it is across the whole corpus (Inverse Document Frequency). Words that appear frequently in a document but rarely across the corpus receive higher weights. This transformation makes text computationally comparable, so textual similarity can be quantified through mathematical operations. The resulting vectors are the basis for comparing the text extracted from images or documents, supporting tasks such as document classification, content matching, and similarity analysis.
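In its textbook form the weighting described above is tf-idf(t, d) = tf(t, d) × log(N / df(t)), where N is the number of documents and df(t) the number of documents containing term t. The following plain-Python sketch computes it with whitespace tokenization to show the effect: a term that appears in every document gets weight 0. Scikit-learn's TfidfVectorizer, which the project uses, differs in detail: by default it uses raw counts, a smoothed idf of ln((1 + N) / (1 + df(t))) + 1, and L2-normalizes each vector.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per document (textbook TF-IDF)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # document frequency: in how many documents each term occurs
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        vectors.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return vectors

for vec in tf_idf(["sale price sale", "price list", "price tag"]):
    print({term: round(weight, 3) for term, weight in vec.items()})
```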

The Similarity Comparison Module combines visual and textual similarity measures to provide a comprehensive comparison of two images. Visual similarity is computed by extracting features from each image with a deep learning model such as a Convolutional Neural Network (CNN) and comparing the resulting feature vectors. Textual similarity is computed by applying TF-IDF (Term Frequency-Inverse Document Frequency) to the text extracted from each image. Both similarities are quantified with Cosine Similarity, which calculates the cosine of the angle between the respective vectors. The result is a numerical measure of similarity that accounts for both the visual content and the text of the image. The compute_text_similarity function calculates the similarity between two textual inputs using TF-IDF vectorization and cosine similarity. First, the TF-IDF vectorizer is fitted on the provided text inputs, converting them into numerical representations based on the importance of words. Each text is then transformed into a TF-IDF vector, which captures the significance of terms while reducing the impact of commonly used words. The resulting vectors are converted into arrays to simplify the mathematical operations. To measure the similarity between the two text vectors, the function applies cosine similarity, a widely used metric in natural language processing (NLP). Because TF-IDF vectors have no negative components, the value ranges from 0 to 1, where 1 indicates identical term distributions and 0 indicates no shared terms. Because it compares the direction of the vectors rather than their magnitude, cosine similarity measures how similar the two texts are in content, largely independent of text length.
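A minimal sketch of `compute_text_similarity` and of the weighted combination follows, using scikit-learn. The 0.6/0.4 weights reflect the 6:4 ratio of visual to textual similarity stated in the abstract; the name `combined_similarity`, the percentage scaling, and returning 0 when either image has no usable text are assumptions.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

VISUAL_WEIGHT, TEXT_WEIGHT = 0.6, 0.4  # the 6:4 ratio from the abstract

def compute_text_similarity(text1, text2):
    """Cosine similarity of the TF-IDF vectors of two OCR strings (0 to 1)."""
    if not text1.strip() or not text2.strip():
        return 0.0
    vectorizer = TfidfVectorizer()
    try:
        tfidf = vectorizer.fit_transform([text1, text2])  # fit on both texts
    except ValueError:  # empty vocabulary, e.g. only one-character tokens
        return 0.0
    vectors = tfidf.toarray()
    return float(cosine_similarity(vectors[0:1], vectors[1:2])[0, 0])

def combined_similarity(visual_sim, text_sim):
    """Weighted visual + textual score, reported as a percentage."""
    return 100.0 * (VISUAL_WEIGHT * visual_sim + TEXT_WEIGHT * text_sim)

print(compute_text_similarity("summer sale 50% off", "big summer sale"))
```

With this choice, an image without any text can score at most 60%. Falling back to the visual score alone when OCR finds nothing is an alternative design; the paper does not say which behavior it uses.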


This project focuses on image similarity detection using deep learning techniques and text recognition. It employs a ResNet-101 model to extract visual features from images and the TfidfVectorizer for text feature extraction. The code uses TensorFlow, NumPy, and Scikit-learn for image processing and similarity computation. In addition, Tesseract OCR is used to extract text from images, which makes the system useful for applications involving both visual and textual data. The implementation loads images, preprocesses them, and predicts features using ResNet-101. The extracted features are then compared using cosine similarity to identify visually similar images. OpenCV and PIL handle image loading and preprocessing before feature extraction, and the TfidfVectorizer analyzes the text found within images, making the system suitable for OCR-based similarity detection.
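The overall search loop (a query image and a folder in, a ranked list with similarity percentages out) can be sketched as below. The scoring function is passed in, so it can combine ResNet-101 features and TF-IDF text similarity as described above. The name `find_similar_images`, the set of accepted file extensions, the recursive folder scan, and the optional `min_percent` cut-off are assumptions, not details from the project.

```python
from pathlib import Path

# Assumed set of accepted image formats.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}

def find_similar_images(query_path, folder, score_fn, min_percent=0.0):
    """Score every image under `folder` against the query, best match first.

    `score_fn(query, candidate)` takes two Paths and returns a similarity
    percentage (0-100), e.g. the weighted visual + text score.
    """
    query = Path(query_path).resolve()
    results = []
    for candidate in sorted(Path(folder).rglob("*")):  # includes subfolders
        if not candidate.is_file() or candidate.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        if candidate.resolve() == query:  # skip the query image itself
            continue
        percent = float(score_fn(query, candidate))
        if percent >= min_percent:
            results.append((candidate, round(percent, 2)))
    results.sort(key=lambda item: item[1], reverse=True)
    return results
```

Because features for the folder's images do not change between searches, a practical version would cache them rather than recompute them on every query.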

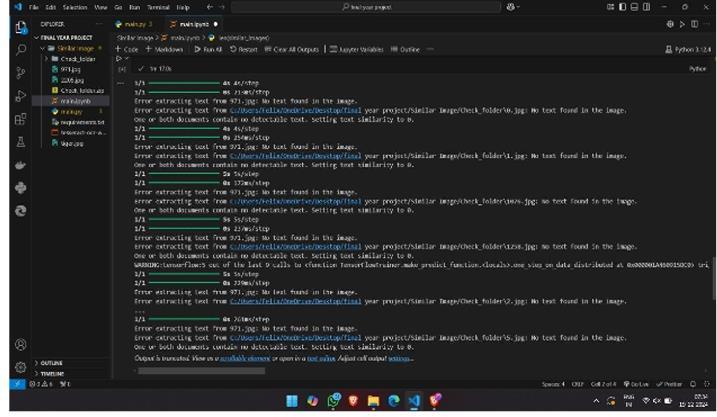

The screenshot shows Visual Studio Code with error messages related to text extraction from images. The interface uses a dark theme with light text. The left sidebar shows a file explorer with the project's files and folders, while the main panel displays repeated error messages in white and gray text. Each message reports a failed attempt to extract text from a JPG file, stating "No text found in the image" and referencing the file path. The consistent repetition of these messages suggests a systematic issue with either the OCR (Optical Character Recognition) implementation or the images being processed. The IDE's menu options appear at the top, and the Windows taskbar is visible at the bottom of the screen.

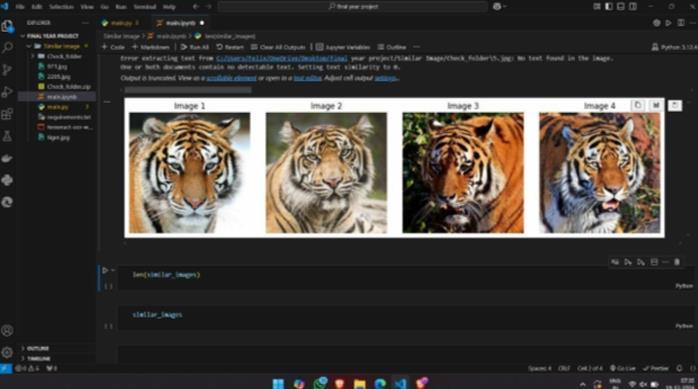

The picture above shows Visual Studio Code displaying four tiger portraits arranged horizontally and labeled sequentially from Image 1 to Image 4. The interface uses VS Code's dark theme, and the left sidebar shows the file structure of what appears to be a final year project. Image 1 shows a tiger with vibrant orange fur and sharp black stripes, with a direct, intense gaze. Image 2 shows a slightly more mature-looking tiger with a grayer tone to its fur, photographed in natural lighting. Image 3 shows a tiger with rich, warm russet tones in its coat, and Image 4 shows a tiger with its mouth slightly open, revealing its teeth. Below the images there are code sections labeled "similar_images", suggesting that this is the output of the image comparison step. The window title bar contains the usual VS Code menu options and tabs, and the Windows taskbar is visible at the bottom of the screen.

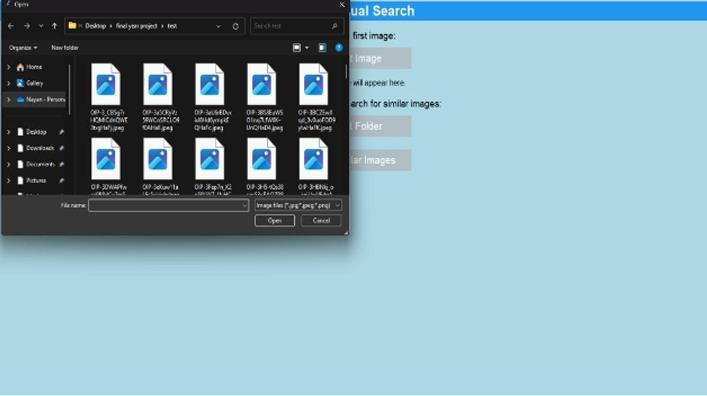

This image shows a clean, minimalist user interface for an application titled "Document Similarity Finder" with the subtitle "Quick Visual Search" at the top of the window. The light blue background gives the interface a calm, professional appearance. The main window is organized vertically, with three primary elements centered on the screen. At the top there is a "Select Image" button, with the text "Select the first image:" above it. Below it, a placeholder reads "Selected Image will appear here," which is where the chosen image is displayed once uploaded. The second part of the interface prompts users to "Select the folder to search for similar images:" and is accompanied by two buttons, "Select Folder" and, at the bottom, "Find Similar Images". The design follows a straightforward, user-friendly approach with clear instructions and a logical top-to-bottom flow. The window is a Windows application, as shown by the characteristic window frame and the minimize/maximize/close buttons in the top-right corner.

This image shows a file selection dialog overlaid on the "Document Similarity Finder" application's main interface. The dialog uses a dark theme, contrasting with the light blue background of the main application. It displays multiple image files, each represented by identical blue and white file icons arranged in a grid. The files are located in a "final year project > test" directory, as indicated by the navigation path at the top of the dialog. The left sidebar shows the typical Windows folder navigation, including the Desktop, Downloads, Documents, and Pictures folders. The file names follow a consistent naming pattern, suggesting they are part of a test dataset, and each has a ".jpg" extension. At the bottom of the dialog are a file name input field and the standard "Open" and "Cancel" buttons. Behind the dialog, the main "Quick Visual Search" interface remains partially visible with its light blue background. The window styling and folder structure show that the application is running on Windows.

This image shows the "Document Similarity Finder" application in action, with "Quick Visual Search" at the top. Both an image and a folder have now been selected for processing. The central area of the interface displays the selected image of a black and white dairy cow standing in a field or farmland setting. Above the image, the text "Image Selected" appears in a gray button, indicating a successful upload. Similarly, below the image, a "Folder Selected" button confirms that a target directory for the search has been chosen. At the bottom of the interface is the "Find Similar Images" button, and beneath it the word "Processing" appears in orange text, indicating that the application is running its image similarity analysis. The layout keeps its clean, minimalist design and light blue background, along with the standard Windows minimize, maximize, and close buttons in the top-right corner. The interface communicates the current state of the process through both visual elements and text indicators.

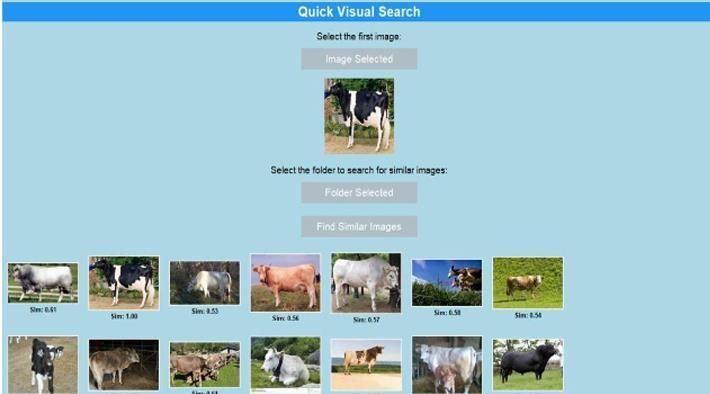

Fig - 9: Similar Image

This screenshot shows the "Document Similarity Finder" application after it has completed the image analysis. The original black and white dairy cow image remains displayed at the top of the interface, labelled "Image Selected," with the "Folder Selected" and "Find Similar Images" buttons below it. The key feature is the results section at the bottom of the window, which displays a collection of similar cattle images arranged in two rows. The images show different cows in various farm settings and poses. Some contain black and white cows similar to the query image, while others show cattle of different colours and breeds, including brown and spotted varieties, in outdoor environments such as pastures and fields. The matching therefore appears to focus on identifying images that contain cattle, regardless of their specific colouring or pose. The result images are shown at a uniform size against the application's light blue background, which makes them easy to compare with the original query image, and the grid layout is easy to scan and evaluate.

The "Image Finder - Quick Visual Search" project demonstrates an approach to image recognition and retrieval that blends deep learning techniques with user-centric design. By addressing limitations of existing systems, it provides an efficient, scalable, and accurate solution that can be tailored to the needs of various industries. Features such as automated feature extraction, real-time performance, and an intuitive interface keep the system adaptable to real-world applications.

Future enhancements will extend its capabilities and its usability across domains by drawing on advances in artificial intelligence. Integration with augmented reality and multi-modal search could open the way to broader adoption in fields such as education, retail, and healthcare. In addition, the focus on sustainability and energy-efficient AI reflects the project's aim of aligning with modern technological and environmental goals.

This project serves as a practical tool for visual search and lays a foundation for further work in computer vision and deep learning. It illustrates the potential of AI-driven solutions to streamline workflows, improve user experiences, and open new possibilities for industries that rely on visual data. The "Image Finder" system can continue to evolve into a useful tool for modern image retrieval and analysis, contributing to the fields of artificial intelligence and computer vision.

[1] Schroff, F., Kalenichenko, D., & Philbin, J. (2015). FaceNet: A Unified Embedding for Face Recognition and Clustering.

[2] Deng, J., Dong, W., Socher, R., et al. (2009). ImageNet: A Large-Scale Hierarchical Image Database.

[3] Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet Classification with Deep Convolutional Neural Networks.

[4] Szegedy, C., Vanhoucke, V., Ioffe, S., et al. (2016). Rethinking the Inception Architecture for Computer Vision.

[5] Zhang, Y. (2020). Similarity Image Retrieval Model Based on Local Feature Fusion and Deep Metric Learning.

[6] Ronakkumar, P., Priyank, T., & Vijay, U. (2024). CNN-Based Recommender Systems: Integrating Visual Features in Multi-Domain Applications.

[7] Nagar, S., Das, S., & Sangeeta. (2023). Similar Images Finder Using Machine Learning.

[8] Liu, Y., Zhang, D., Lu, G., & Ma, W. Y. (2007). A Survey of Content-Based Image Retrieval with High-Level Semantics.

[9] Xia, R., Pan, Y., Lai, H., Liu, C., & Yan, S. (2014). Supervised Hashing for Image Retrieval.

[10] Chen, J.-C., & Liu, C.-F. (2015). Visual-Based Deep Learning for Clothing from Large Databases.


[11] Lin, K., Yang, H.-F., Hsiao, J.-H., & Chen, C.-S. (2015). Deep Learning of Binary Hash Codes for Fast Image Retrieval.

[12] Shrivakshan, G., Chandrasekar, C., et al. (2012). A Comparison of Various Edge Detection Techniques in ImageProcessing.

© 2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal | Page594