International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 02 | Feb 2025 www.irjet.net p-ISSN: 2395-0072


Savan C. Becker1, Michael E. Johnson, PhD2

1 Department of Engineering, Capitol Technology University, Laurel, MD, USA

2 Adjunct Professor, Department of Engineering, Capitol Technology University, Laurel, MD, USA

Abstract - Extended reality (XR) concepts and technology have been used by the National Aeronautics and Space Administration (NASA) since the very early days of the U.S. space program, primarily evidenced by the agency’s extensive application of XR-enabled telerobotic techniques while conducting remote exploration and space operations [1, 2, 3]. NASA has also leveraged virtual reality (VR), augmented reality (AR), and mixed reality (MR) when facilitating human spaceflight training, seeking ways to ensure astronaut physical and mental health, and designing human-machine interfaces; the agency has further experimented with telepresence applications, which integrate multiple XR disciplines [1, 2, 4]. Here we present a survey of historic and recent XR-related efforts in the U.S. space program and also highlight forecasted uses of such technologies in future long-duration (i.e., cislunar and interplanetary) space missions, such as Artemis [5, 6, 7, 8, 9, 10]. Note that this article focuses on human and human-robotic spaceflight operations and does not cover the ubiquitous examples of XR being used throughout NASA for additional science, engineering, analytic, and educational applications [11, 12, 13, 14]. Throughout this review it should be evident that as XR concepts, techniques, and subdisciplines have emerged and evolved, increasingly advanced integrated use cases of the technologies have also manifested.

Key Words: Technology, Extended Reality, Virtual Reality, Augmented Reality, Robots, Telerobotics, Telepresence, Telemedicine, Telehealth, Human-Machine Interfaces, NASA, Space, Spaceflight, Spacecraft, ISS, Artemis, Gateway, Moon, Mars, Cislunar, Space Exploration, Mars Rovers, Science Fiction, Gaming, Video Games, Cyberspace, Digital Twins, Virtual Worlds, Synthetic Environments, Immersive Worlds

Extended reality (XR) is an umbrella term encompassing concepts, technologies, and techniques that enable human-human and/or human-machine interactions within virtual environments (VE) and combined environments featuring real and computer-generated components [3]. The XR domain includes the subdisciplines of virtual reality (VR), augmented reality (AR), and mixed reality (MR). Extended reality also covers mediated remote experiences – such as telepresence and telerobotics – that can be enhanced by VR/AR/MR-associated technologies. This is particularly the case when such implementations include immersive elements that enhance a user’s sensation of “presence” within synthetic, augmented, or mixed-reality renditions of distant environments. Many of the concepts underlying and driving the development and evolution of XR methods and technologies have their roots in science fiction [3]. Fittingly, throughout its history the National Aeronautics and Space Administration (NASA) has investigated, developed, and implemented XR techniques to accomplish its space exploration mission [1, 2, 4, 15, 16, 17, 18].

We begin our review of XR technology in the U.S. space program by highlighting notable historic experimental and operational applications of telerobotics, VR/AR/MR, and telepresence in NASA-led human and human-robotic space initiatives. Afterward, we discuss potential uses of XR technology in future space exploration programs, focusing on applications for long-duration cislunar and interplanetary spaceflight endeavors such as Artemis [5, 6, 7, 8, 9, 10].

Telerobotic operations involve the remote control of robots to perform actions in distant and/or dangerous environments [3, 19, 20]. Telerobots can be operated via various control schemes, some of which are designed to help compensate for distance-related communications delay (i.e., latency), or to assist humans by handling complex processes – thereby freeing the controller to focus on aspects of tasks that require more skill, innovation, or attention. These control methods include [3, 19, 20]:

Full Autonomy: The robot can operate on its own to accomplish tasks, without operator intervention.

Supervisory Control/Partial Autonomy: An operator is responsible for defining the higher-level goals for the telerobot based on its current situation. The robot can handle some complex processes autonomously but performs its functions under the overall control of a person using a command-based structure. Useful under conditions with substantial latency.


Shared Control: The robot has a higher degree of autonomy to dynamically assist the operator in completing tasks.

Direct or Manual Control: The operator has full control over the system all the time.

Fig – 1: Surveyor 3 and first lunar dig (left, center). Project Viking Mars lander replica (right). [24, 30]

Telerobotic systems often rely on XR methods and technologies to provide users with intuitive interfaces featuring more “human-like” sensations and/or realistic recreations of distant or dangerous environments during machine-enabled remote operations [19, 21, 22, 23].

Significantly, the first instance of near real-time supervisory control of a telerobot on an extraterrestrial surface was accomplished by NASA in April of 1967, when the Surveyor 3 lander’s mechanical arm was directed to probe the Moon’s regolith by Earth-based operators [1, 24]. The honor of teleoperating the first “trench dig” on another world went to JPL engineer Floyd Robertson (see Fig – 1) [25]. Robertson and other operators, guided by the spacecraft’s television camera, used the arm’s Soil Mechanics Surface Samplers (SMSS) to scoop, manipulate, and ascertain the physical characteristics of surface materials; this was repeated during the Surveyor 7 mission in 1968. In practice, controlling the arm was a step-by-step process, requiring visual verification of results before a new command was issued. Nevertheless, Oran Nicks – then head of lunar flight systems – described this ability “to manipulate the surface the way a person might with his hand” as adding another dimension to exploring [25]. As a result, geologists were able to confirm the presence of highly consolidated material on the lunar surface, paving the way for human explorers during Apollo [1, 25].

Incidentally, as the U.S. proceeded with additional robotic precursor missions and then on to the crewed Apollo program, the Soviet Union landed and operated the first rover vehicle beyond Earth on 17 November 1970 [26, 27, 28]. During the historic Lunokhod 1 mission, a “five-man team” of Russian operators directly controlled the rover in real-time, successfully driving it across the surface with a top speed of about 100 meters per hour, despite a communications delay of about 5 s round-trip time. The 10 km of lunar surface explored during the lifespan of this first rover was greatly surpassed by Lunokhod 2 in 1973, which covered 37 km using the same direct, real-time driving method. Science operations and surface imaging using the rovers’ cameras were not conducted while they were moving, but only when stationary. Unfortunately, the time delay ultimately contributed to the malfunctioning of Lunokhod 2, when dust thrown up by an inadvertent descent into a crater interfered with its thermal control systems [26, 28]. Nevertheless, the Lunokhod program predicted the utility and feasibility of direct, Earth-to-Moon teleoperations of robotic vehicles [26]. More recently, China applied VR and teleoperation techniques to control lunar rovers during the Chang’e-3 and Chang’e-4 missions to the Moon’s near- and far side, respectively, with some facets of these operations reportedly conducted in real-time [29].

Development and application of telerobotic planetary exploration techniques in the U.S. space program continued throughout the remainder of the 20th and into the 21st century, epitomized by multiple Mars surface missions mounted by NASA. Beginning with surface sampling conducted by the Viking telerobotic arms, technology and procedures became more advanced with each spacecraft the agency sent to the “Red Planet” (see Fig – 1). Supervisory control remained the primary means of controlling surface robots to compensate for the considerable communications delay to Mars (at times in excess of 40 min) [2, 20, 30, 31]. However, increasing degrees of independence were introduced beginning with the Mars Pathfinder mission launched in 1996, which deployed Sojourner, the first semi-autonomous planetary rover. Later on, the two Mars Exploration Rovers (MER) – Spirit and Opportunity – journeyed over 7 km and 45 km, respectively, using autonomous and supervisory control methods [31]. Of interest, based on subjective reporting, D. Chiappe and J. Vervaeke [32] suggested that MER operators experienced sensations of extended-presence and core-presence, as defined by G. Riva and J. A. Waterworth [33].
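As a rough check on the delay figures quoted above, the round-trip signal time can be estimated from distance and the speed of light. The sketch below is illustrative only: the function name and the distance values (a mean Earth–Moon distance of about 384,400 km, and Earth–Mars distances of roughly 54.6 million km at closest approach and 401 million km at greatest separation) are assumptions introduced here rather than values taken from the cited sources, and the calculation ignores processing, relay, and equipment overhead.

```python
# Speed of light in vacuum, km/s (exact by SI definition).
SPEED_OF_LIGHT_KM_S = 299_792.458

# Approximate distances in km. These are assumed, illustrative values
# and are not taken from the article or its references.
EARTH_MOON_MEAN_KM = 384_400
EARTH_MARS_MIN_KM = 54.6e6
EARTH_MARS_MAX_KM = 401e6


def round_trip_light_time_s(distance_km: float) -> float:
    """Return the two-way signal travel time in seconds for a given distance."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_km / SPEED_OF_LIGHT_KM_S


if __name__ == "__main__":
    moon_s = round_trip_light_time_s(EARTH_MOON_MEAN_KM)
    mars_near_min = round_trip_light_time_s(EARTH_MARS_MIN_KM) / 60.0
    mars_far_min = round_trip_light_time_s(EARTH_MARS_MAX_KM) / 60.0
    print(f"Moon round trip: {moon_s:.2f} s")
    print(f"Mars round trip: {mars_near_min:.1f} to {mars_far_min:.1f} min")
```

Under these assumed distances, pure light time accounts for roughly 2.6 s of a lunar round trip, so the approximately 5 s delay reported for Lunokhod 1 presumably also reflects equipment and processing time. The Mars round trip exceeds 40 min only near maximum Earth–Mars separation.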

By 2024, using a combination of supervisory control and autonomous driving, the Mars Perseverance rover – launched in 2020 – had traversed and explored over 30 km of the Martian surface, demonstrating advanced navigation and obstacle avoidance skills [31, 34, 35, 36]. Moreover, after performing the historic first powered, controlled flight on another planet on 19 April 2021, the 1.8 kg Ingenuity Mars Helicopter that accompanied Perseverance completed 72 exploration flights through the skies of Mars over nearly 3 years. The helicopter flew autonomously, based on plans that were remotely generated and transmitted by NASA Jet Propulsion Laboratory (JPL) operators. Although it suffered a crash in January 2024, Ingenuity greatly surpassed its five-flight mission objective, gathering important imagery and data while demonstrating advanced independent flight control and successful operator-assisted navigation on another planet [31, 34].

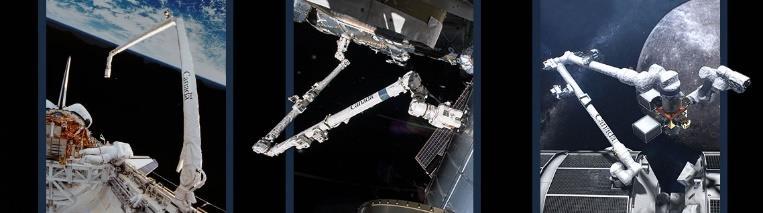

Remote manipulation was also utilized throughout the U.S. Space Shuttle (i.e., Space Transportation System, STS) program. This is highlighted by the integration of the Canadian Space Agency (CSA)-supplied Canadarm robotic arm aboard 90 shuttle spacecraft flights in 30 years (1981–2011) [1, 37, 38]. Also known as the Shuttle Remote Manipulator System (SRMS), the Canadarm and its various end effectors, manipulation assemblies, and sensor systems were instrumental in performing duties such as spacecraft deployment, repair, and maintenance; spaceflight photography and videography; surveys and manipulation of the shuttle cargo bay and its payloads; and astronaut extravehicular activity (EVA) assistance. The Canadarm could be operated in various ways, including visual and camera-aided manual teleoperation by astronauts via the SRMS Control System within the space shuttle cabin, or preprogrammed automatic control [1, 37].

The success and legacy of the Canadarm were carried into Canadarm2, also called the Space Station Remote Manipulator System (SSRMS), which has been actively deployed aboard the International Space Station (ISS) since 2001 (see Fig – 3) [1, 37]. Canadarm2, like its predecessor, can be controlled by astronauts aboard the ISS using the station’s Robotics Workstation, operated in automatic/preprogrammed mode, or teleoperated by ground controllers [1, 37]. Augmented by the Mobile Base System (MBS) moveable work platform/storage location, and the highly advanced Dextre space robot, the combined SSRMS suite can perform similar duties as the original Canadarm as well as very sophisticated ISS maintenance and operations functions [1, 38].

In the early to mid-2000s, NASA delivered several small, free-flying telerobots called Synchronized Position Hold, Engage, Reorient, Experimental Satellites (SPHERES) to ISS [39]. These battery-powered, 18-sided polyhedral robots, each about 21 cm in diameter with a mass of about 4.1 kg, were inspired by the autonomous, free-floating “seeker droid” that Luke Skywalker practices his lightsaber skill against in the science fiction film Star Wars (1977). Each SPHERES robot is propelled by compressed CO2 gas and can roam about ISS assisting astronauts and conducting experiments sponsored by U.S. and international partner space organizations, corporations, or academic researchers [39, 40]. The SPHERES can be remotely controlled with WiFi or smartphone (i.e., “SmartSPHERES”), are gyroscopically stabilized, and are equipped with cameras and multiple sensors. Additional instruments can be attached to the SPHERES as specified by the intended experiments, such as 3D imagers for autonomous control, object inspection, and scene mapping techniques [39, 40]. In 2018, NASA commissioned the Astrobee project, replacing the SPHERES with three more advanced and highly customizable free-flying robots; each cubic robot is 32 cm on a side and equipped with miniature telerobotic arms (see Fig – 4) [40, 41, 42, 43, 44].

Virtual reality (VR) is a subset of XR that – via a combination of technologies – can immerse humans within realistic, three-dimensional (3D), artificially generated worlds or virtual environments (VE) [3, 45, 46]. Augmented reality (AR), like virtual reality, is a subdomain of XR that enhances a participant’s perspective by dynamically overlaying digital elements onto their field-of-view (FOV) while allowing them to maintain a sense of presence and interoperability with the actual world [47]. In this way, AR “augments” their perception of the real world without replacing it. Mixed reality (MR) is similar to augmented reality – however, MR takes the overlay of digital data a step further by allowing a user to interact with both virtual objects and actual objects residing in the “real world” [48].

NASA’s investigations into the uses of VR technology in human and robotic spaceflight training and operations have been ongoing since at least the 1980s [1, 2]. Crewed spaceflight is risky, complicated, and expensive, and the agency discovered that VR could give astronauts realistic training to ensure safety, lower overall costs, and increase the success rates of human spaceflight operations. Similarly, the agency applied VR techniques to prepare for and conduct robotic planetary exploration programs – especially for landers, rovers, and aircraft sent to Mars – and evaluate data returned from these missions.

Interestingly, NASA itself developed the first low-cost, experimental wide field-of-view (WFOV) virtual reality head-mounted display (HMD) in the mid- to late 1980s (see Fig – 5) [2, 49, 50, 51, 52]. Known as the Virtual Visual Environment Display (VIVED, pronounced "vivid"), the device used basic, low-resolution liquid crystal displays (LCD) made for handheld televisions. The system was invented by Michael McGreevy of NASA’s Ames Research Center (ARC) who – as a visionary in the field of early XR – worked at the agency for years “…applying the ideas of visualization and inhabitable spaces to enable the planetary explorers to be where they could not physically go” [53].

In pursuing VIVED, McGreevy and his colleagues aimed to develop a multisensory VE workstation for use in spaceflight teleoperations and telepresence activities [2]. In fact, one of the primary goals of the team was to create a telepresence system that would enable a user to immersively teleoperate robots on other planets [54]. To this end, following VIVED they developed the Virtual Environment Workstation (VIEW), which integrated the EyePhone HMD and DataGloves for input, both developed by Jaron Lanier’s company VPL [2, 3]. The VIEW team made advancements in head-coupled displays for gaze tracking, and the 3D audio technology required for immersive multisensory experiences.

In 1993, NASA used VR technology extensively to prepare astronauts and ground crews for the STS-61 Space Shuttle flight, which was the highly important first mission to service the faulty Hubble Space Telescope (HST) [2, 55]. Instruction using virtual environments supplemented more costly and time-consuming EVA training utilizing physical resources such as the Weightless Environment Training Facility (WETF) at Johnson Space Center (JSC). The VE methods were later assessed to be a contributing factor to the success of the HST repair mission, and elevated interest in using this type of technology in future NASA human spaceflight operations, as pursued by JSC’s Virtual Reality Lab (VRL). This was shown in continued use of VR/VE techniques throughout the 1990s and 2000s to conduct training for STS and ISS telerobotic operations (i.e., for Canadarm and Canadarm2), astronaut EVAs, and rescue operations using the Simplified Aid for EVA Rescue (SAFER) unit – a relatively small spacesuit propulsion module for emergency returns to a spacecraft or space station [2, 56, 57].

Johnson Space Center’s VRL became the birthplace of NASA’s VR-equipped Dynamic Onboard Ubiquitous Graphics (DOUG) system, which was used first on the Shuttle then integrated into ISS systems in the 2010s. As an extension of the VE training devised in the 1990s, DOUG enables virtual reality-immersed EVA crew members on the ground and aboard the station to practice on-orbit EVA procedures, engage with multiple robotic arm operators, and train on EVA scenarios [57, 58]. In 2016, the VRL also deployed the Microsoft HoloLens aboard ISS during Operation Sidekick – a program designed to make it easier and more efficient for ground controllers and subject matter experts to “holographically” assist astronauts with spaceflight, maintenance, and payload operations on the station via the HMD’s AR/MR capabilities [15, 59].

Since then, a variety of other HMDs and VR/AR devices were used on orbit by NASA and its international partner space agencies during telerobotics testing, multiple scientific investigations, and medical/health experiments [15]. Between 2020 and 2022, for example, an Immersive Exercise Experiment aboard ISS integrated a stationary bicycle with an HMD to help determine if VR could enhance astronaut exercise aboard the station [17, 60]. The system played prerecorded panoramic videos of scenic Earth landscapes and urban environments while astronauts cycled, adjusting the playback speed to match their pedaling. The goal was to provide some additional variation and motivation for astronauts while they performed the two hours of daily exercise required aboard the station to mitigate detrimental effects of prolonged spaceflight (i.e., decreased muscle and bone mass). Additionally, a specially designed Vive Focus 3 HMD was sent to ISS in 2023 to support the study of VR for preventative mental health care; the VR headset was engineered for microgravity use via a collaborative effort between HTC Vive, Nord-Space Apps, and XR Health, and represented the first such use of a device [61].

Fig – 6: HoloLens aboard ISS (left); Immersive Exercise Experiment ground testing (right). [15, 60]


The impact of VR on NASA’s telerobotic space exploration initiatives has also been substantial. Continuing from their work with VIVED and VIEW, McGreevy and associates built the Virtual Planetary Exploration (VPE) system [2, 50]. This system integrated digital terrain modeling techniques and imagery overlays to investigate the utility of using synthetically generated environments to study data from Mars probes such as Viking. Virtual reality technology, including HMDs, enhanced the sense of presence while performing exploration of realistic renditions of the Martian surface derived from the scientific information acquired by the landers. Effectively, this combination emulated physical, in situ exploration of extraterrestrial environments and enabled a type of virtual field research.

McGreevy’s efforts with the VPE were inspirational in developing the VR-enhanced tools used by NASA to perform mission planning, remote control, and evaluation of the data returned by interplanetary lander/rover missions from the 1990s to present day [62]. These include the Rover Control Workstation (RCW) developed for the Mars Pathfinder program and the follow-on Rover Sequencing and Visualization Program (RSVP) that has been used by operators of all subsequent Mars missions. RSVP includes the Rover Sequence Editor (RoSE) for GUI commanding, and the HyperDrive application for 3D/stereo image and terrain modeling of the VE used to inform, guide, and simulate operations of surface rovers and aircraft [62].

Some of the concepts devised by McGreevy and his colleagues were also incorporated into the NASA ARC-developed Visual Environment for Remote Virtual Exploration (VERVE) rover operation system (see Fig – 7) [63, 64, 65, 66]. With VERVE, controllers are provided with real-time, interactive high-fidelity 3D views of a rover's state, task sequence status, and position on a terrain map. In 2013, in an impressive test of space-to-surface robotic teleoperation, astronauts aboard ISS used a laptop equipped with VERVE and the Surface Telerobotics Workbench to remotely control a K10 rover in a simulated “Roverscape” constructed at NASA ARC [66, 67, 68, 69, 70]. Three distinct tests were performed, with three different astronauts operating the rover for a total of about 10.5 hr; the tests featured intermittent line-of-sight (LOS) communications, latencies between 500 ms and 750 ms, and a combination of direct and supervisory control [69].

Among other tasks, the astronauts participating in the 2013 surface telerobotics tests from ISS conducted a virtual “site survey” and successfully deployed a simulated antenna in the “Roverscape” [69]. While not fully immersive (i.e., in the sense of incorporating XR HMDs, haptics, etc.), this terrestrial analog demonstrated the value of advanced desktop VR techniques to enhance orbital control of a planetary surface vehicle. As highlighted by Terry Fong – NASA Ames Chief Roboticist – the test achieved a number of milestones, including the first [68]:

Real-time teleoperation of a planetary rover from ISS.

Real-time supervisory control of a rover from ISS.

Interactive astronaut control of a planetary rover in a terrestrial analog using a high-fidelity 3D model.

Use of NASA data networks to connect an astronaut’s laptop computer to a remote, surface-based robot.

Overall, this initial experiment exemplified the potential impact of using realistic “virtual world” models to facilitate dynamic human-robotic planetary exploration, and the value of fusing XR techniques (i.e., VR and telerobotics) toward the development of more advanced space telepresence applications [68, 71, 72, 73, 74, 75, 76].

Telepresence typically integrates XR concepts and associated technologies – i.e., VR/AR/MR and telerobotics – to enable a user to interact with physically remote or otherwise inaccessible environments [3]. Ideally, this interaction is intuitive and immersive, with the operator feeling as if they are physically present in the distant location; this usually requires significant sensory input and feedback [3, 77]. Ultimately, telepresence seeks to enhance a human user’s sense of “being” when introduced into a replicated distant environment, particularly when conducting remote controlled, computer-mediated operations [3, 78, 79, 80].

Telepresence has many potential applications in space exploration, and NASA has investigated its use across a spectrum of robotic and human spaceflight endeavors, most frequently associated with teleoperation of systems performing planetary exploration or spacecraft operations and maintenance [1, 2, 4]. A review of some of the agency’s noteworthy telepresence experiments illustrates the interdisciplinary nature of the field. It further highlights how XR concepts and technologies can evolve from each other and be combined to address challenges associated with remote exploration.


As noted in our discussion on the associated XR subdiscipline of VR, Michael McGreevy and his team envisioned telepresence applications when developing their pioneering VIVED, VIEW, and VPE systems, including immersive operation of robotic systems on Mars [2, 50, 53, 54]. These ideas likely helped vector NASA to study telepresence enhancements to its robotic exploration and spacecraft servicing initiatives [81, 82].

Throughout the 1980s and 1990s, NASA sponsored the testing of telepresence-operated rovers on Earth that examined the possible benefits of such control for interplanetary probes. Carol Stoker of NASA ARC was an early principal investigator in this field [83]. In 2020, while with NASA’s Planetary Systems Branch, Stoker recalled one of the earliest of these surrogate experiments, which took place in the 1980s:

“My first venture in this telepresence area occurred in the late 1980s before we had any access to surface rovers. I worked with a team of roboticists and we got an opportunity to do a field experiment in Antarctica in ice covered underwater environments. We did robotic exploration with underwater vehicles. We purchased a small tethered robotic underwater vehicle and we outfitted it with stereo cameras mounted on a pan and tilt platform and a communication system that would allow the operator on the surface to wear sensors on their head and move the stereo cameras on the rover underwater with their head. The operator wore goggles that put the camera display directly in front of their eyes. This way the operator was telepresent.” [84]

NASA and international partner space agencies continued to conduct telepresence tests of this kind into the 21st century – often using underwater, desert, volcanic, and arctic locations on Earth as analog planetary environments [73]. These experiments probed the utility of XR-enhanced control interfaces and telepresence for space exploration, which has remained an area of interest for NASA and many independent researchers [66, 67, 73, 76, 85, 86]. As an example, in November 2019 European Space Agency (ESA) astronaut Luca Parmitano conducted a similar but – in some ways – more advanced experiment than the earlier ISS-based telerobotic operation of the K10 robot with VERVE [87, 88]. From ISS, Parmitano controlled an Earth-based rover in a simulated physical lunar environment constructed in a hangar in Germany. For the first time, the rover was equipped with an advanced 6-degree of freedom (6DOF) gripper with dexterity and mobility equivalent to a human hand (see Fig – 8). Additionally, Parmitano used a haptic, force-feedback controller and real-time video to operate the rover, pick up rock samples, and manipulate them similar to how he might if on the surface with his own hands. Moreover, this was done with the input and advice of a team of expert Earth-based geologists.

Parmitano’s successful demonstration of space-to-Earth, haptic-enabled telerobotic specimen collection – all under supervision by Earth-based scientists – highlighted the potential value of collaborative space exploration telepresence [87, 88]. In the words of Aaron Pereira of the German Aerospace Center (DLR) – a NASA/ISS partner:

"This is the first time that an astronaut in space managed to control a robotic system on the ground in such an immersive, intuitive manner… our control interface incorporates force feedback so that the astronaut can experience just what the rover feels, even down to the weight and cohesion of the rocks it touches. What this does is help compensate for any limitations of bandwidth, poor lighting or signal delay to give a real sense of immersion – meaning the astronaut feels as though they are there at the scene." [87]

Thomas Krueger, head of ESA's Human Robot Interaction Laboratory, also added:

"Robots can be given limited autonomy in known, structured environments, but for systems carrying out exploratory tasks such as sample collection in unknown, unstructured environments some kind of ‘human-in-the-loop’ oversight becomes essential. But direct control has not been feasible due to the inherent problem of signal delay – with transmission times constrained by the speed of light… so we have been working towards the concept of humans staying safely and comfortably in orbit around the Moon, Mars or other planetary bodies, but being close enough for direct oversight of rovers on the surface – combining the human strengths of flexibility and improvisation with a robust, dexterous robot on the spot to carry out their commands precisely." [87]


Fig – 9: Conceptual low-latency telepresence control of surface robotics from orbiting spacecraft. [26, 89]

Along with the 2013 K10 rover tests, the 2019 experiment conducted from ISS showed how similar systems might be able to facilitate immersive, collaborative, near real-time exploration of extraterrestrial planetary environments from orbiting spacecraft or surface installations [66, 68, 87]. This approach can increase the opportunities for non-astronaut (i.e., Earth-based) scientists, payload, and mission specialists to participate and contribute more dynamically, immersively, and collaboratively during space operations and in situ exploration. Furthermore, it can help to mitigate the expense of frequently deploying astronauts into space or onto a planetary surface for EVAs, concurrently reducing the environmental risks and hazards they might experience when doing so (e.g., radiation exposure) (see Fig – 9) [26, 89, 90, 91].

Nevertheless, it is worth mentioning that expert NASA roboticist Terry Fong [72] indicated that – when it comes to telerobotic operation – “…telepresence (immersive real-time presence) is not a panacea… manual control is imprecise and highly coupled to human performance (skills, experience, training).” Fong [72] suggested that minimizing risk is often more important than ease of operation. Consequently, methods such as supervisory control will still frequently be relied upon in future XR-based human-robot space exploration, even if telepresence operation (i.e., direct, immersive control) of a robotic system is possible.

Along these lines, consideration of the communications delays/latencies associated with a particular teleoperation use case is important in determining whether telepresence (i.e., immersive human-human mediated interaction, or human-machine control) is possible and – if so – how it can be implemented effectively. After decades of practical and experimental applications in the space program, researchers and operators have come to classify teleoperation/telepresence operations into three categories according to relative communication delay/latency [26, 69, 70, 90, 91, 92]. Broadly speaking, these are:

Low-Latency Teleoperations/Telepresence (LLT): Operationswithdelaysof less thanone second. Inthe most exceptional cases LLT can be characterized by near real-time or real-timeteleroboticcontrol and/or human-machine or human-to-human telepresence experiences. High bandwidth, continuous communications are typically required to support LLT,particularlyifhigh-definitionvideoorotherdata intensive media are transferred. In telerobotics, achieving and implementing LLT is most useful for circumstances and tasks which are enhanced by or require having a “human-in-the loop” (HIL), such as interactive exploration, complex spacecraft servicing, ortelesurgery. Inthesecases,high-degreeoffreedom manipulators, force-feedback controls, and haptic interfaces can facilitate more dexterous and immersive human controls. Examples include NASA andESAtelerobotic/telepresencecontrolofterrestrial roversfromEarth-orbit(i.e.,viaISS).

Moderate-Latency Teleoperations/Telepresence (MLT): Operationswithdelaysontheorderof several seconds. Under MLT conditions there can be occasional direct/interactive human telerobotic operation, but increased delay may require some supervisor control, step-by-step commanding, or degree of autonomy on the part of the machine. Complex tasks like human directed scouting, surveying, and servicing can still be accomplished, with moderate requirements for communications bandwidthandavailability. ExamplesincludetheU.S. Surveyor robotic lunar surface samplers, and the SovietUnion’s Lunokhod Moonrovers.

High-Latency Teleoperations/Telepresence (HLT): Operations with delays on the order of more than several seconds. In these cases, methods such as supervisory control, command-based control, and some degree of machine autonomy become more important. There is no live interaction under HLT, and tasks are usually more simplified and scripted than with LLT or MLT. Further, HLT can often be executed with lower bandwidth requirements and intermittent communications. Examples include U.S. interplanetary surface landers, rovers, and aircraft operated on Mars (e.g., Sojourner, Curiosity, Spirit, Ingenuity).
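The three categories above can be sketched as a simple classifier. This is an illustrative sketch only: the source gives "less than one second" for LLT but no exact boundary between MLT and HLT, so the 10 s cutoff (`MLT_MAX_S`) is an assumption, as are the function and class names. The light-time helper gives only a physical lower bound on delay; real links add relay, processing, and routing time.

```python
from enum import Enum

SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum


class LatencyClass(Enum):
    LLT = "low-latency"
    MLT = "moderate-latency"
    HLT = "high-latency"


LLT_MAX_S = 1.0   # from the text: LLT means delays under one second
MLT_MAX_S = 10.0  # ASSUMPTION: "several seconds" is taken to mean up to 10 s


def classify_latency(delay_s: float) -> LatencyClass:
    """Map a communications delay (seconds) to one of the three classes."""
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    if delay_s < LLT_MAX_S:
        return LatencyClass.LLT
    if delay_s <= MLT_MAX_S:
        return LatencyClass.MLT
    return LatencyClass.HLT


def round_trip_light_time_s(distance_km: float) -> float:
    """Lower bound on round-trip delay over a straight-line distance."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_km / SPEED_OF_LIGHT_KM_S
```

Under these assumptions, the mean Earth–Moon distance of about 384,400 km gives a round-trip light time of roughly 2.56 s, which falls in the MLT class and is consistent with the Lunokhod example, while Earth–Mars distances of tens of millions of kilometers fall well into HLT.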

These descriptions help us frame and conceptualize the temporal domains associated with XR-enabled space operations, particularly for possible future long duration human-robotic exploration programs (i.e., cislunar and interplanetary), which may involve a combination of LLT, MLT and HLT. In near-future human spaceflight endeavors, however, it is likely that NASA will focus on LLT, which the agency gained additional experience with during one of its most ambitious and visible telepresence-related programs to date – Robonaut.

Robonaut – which stands for Robotic Astronaut – was initiated in the 1990s and centered on the development of an anthropomorphic humanoid robot designed specifically for space operations (see Fig – 10) [2, 54, 93]. Conceived at Johnson Space Center (JSC), the effort was precipitated by NASA’s conclusion that maintenance of ISS would require 75 percent more EVA time than had been anticipated [94]. As a possible mitigation strategy, technicians at JSC first invented the Dexterous Anthropomorphic Robotic Testbed (DART) to determine if human-like telerobotic systems could perform some tasks that typically necessitated astronaut spacewalks.

Fig – 10: Evolution of Robonaut. From left: DART, Robonaut 1, Robonaut 2, R5 “Valkyrie”. [41, 95, 99]

As a precursor to Robonaut, DART was roughly anthropomorphic in that it had a head with two eyes for stereoscopic vision, two arms, and two hands. Significantly, DART was operated via a telepresence interface, including an HMD and hand controllers, which gave the human user a more seamless and intuitive connection with the machine; they could see the environment through DART’s eyes and intuitively use its hands to manipulate objects [95]. However, DART was limited in terms of dexterity and was too large and unwieldy to be considered flight-ready [94]. Consequently, the system was evolved into the more humanoid Robonaut, designed from the start to be an “EVA-equivalent” robot capable of working with the human-rated spaceflight hardware used by astronauts to perform spacecraft servicing [2, 94, 96].

Robonaut was intended to work alongside its biological counterparts and also perform missions alone, in environments and circumstances that were either too dangerous or not efficient for astronaut duty. Notably, it can operate under manual control, intermittent control, or function completely autonomously for some tasks – consistent with canonical concepts of telerobotic operation [3, 54, 97]. Since its inception, Robonaut has been upgraded physically and in terms of capability – evolving into Robonaut 2, which was operated on ISS by astronauts on the station or remotely by controllers on the ground [98]. Robonaut and Robonaut 2 have been utilized to perform experiments and actual operations both aboard ISS and during EVAs, demonstrating sophisticated telerobotic and telepresence techniques [93, 97, 98]. Development continues, and the program has spawned other human-assisted robotic devices and experimental anthropomorphic robot projects, such as the ruggedized R5 “Valkyrie” humanoid robot, suggesting possible future applications on planetary surfaces [98].

Some NASA officials, researchers, and medical professionals have envisioned using evolved versions of Robonaut or similar telerobotic systems to facilitate human controlled or autonomous clinical duties during future human spaceflight operations [100, 101, 102, 103, 104]. One application, for example, would be robot assisted surgery (RAS) – or telesurgery – which has been conducted on Earth for many years, at times telerobotically over great distances [105, 106, 107, 108]. This desire to address medical issues using telerobotics and telepresence techniques reflects one of NASA’s first human spaceflight concerns – the health and safety of astronauts – which, arguably, may have been an important catalyst for worldwide telemedicine as we know it today.

While not intrinsically an XR discipline, or necessarily dependent on XR technologies, telemedicine can integrate facets of telerobotics and telepresence to perform remote health-related functions. This is particularly true, for example, when enhancing a medical practitioner’s ability to seem “present” with geographically separated patients, or when providing realistic sensory input to a surgeon performing remote procedures via a teleoperated surgical robot [109, 110, 111, 112]. Contextually, the investigation of this field at NASA (also known as telehealth) likely predates telepresence and other XR applications. NASA’s initial forays into telemedicine go back to the earliest days of human spaceflight when, in the 1960s, it sought ways of monitoring the health of orbiting astronauts and remotely administering medical care when required [113].

The idea of “healing at a distance” was not a new concept in the 1960s. Historically, telehealth has origins in proposals by some 19th-century doctors, who suggested that the newly invented telephone and telegraph could help reduce frequent patient visits or draw on the expertise of distant physicians [114]. And – as with other technical advancements – prescient predictions of technologically-enabled remote medical procedures appeared in early 20th-century science fiction writings, including those by authors E.M. Forster and Hugo Gernsback [115].

However, initial pursuits by NASA during its burgeoning astronaut program are often cited as the first practical, foundational steps toward the development of “telemedicine” – a term coined by Dr. Thomas Bird in 1970 [115, 116]. This field would evolve to encompass many subdisciplines, such as teleradiology, telesurgery, telepathology, and telepharmacology, and become widespread following the maturation of the commercial internet in the 21st century [114, 116, 117, 118]. In addition, some of the major research objectives for telepresence in the space program are derived from this early and continuing need to remotely care for astronauts.

Fig – 11: NASA STARPAHC telemedicine project. [113]

NASA began monitoring the physiological and metabolic activity of astronauts during the Mercury project, relying heavily on telemetry (i.e., space-to-Earth data links) for transmission of biometric data. Recognizing the importance of observing, understanding, and circumventing the effects of unique spaceflight conditions (e.g., microgravity) on its crews, the agency established the Integrated Medical and Behavioral Laboratories and Measurement Systems (IMBLMS) program in 1964 [113]. The IMBLMS was viewed as instrumental to Apollo and subsequent long-duration human spaceflight initiatives. However, health monitoring systems developed under the project were never actually tested during the first Moon program [113, 114, 115, 116].

Nevertheless, the IMBLMS did get a boost in the early 1970s when NASA redirected the technologies toward an Earth-based analog known as Space Technology Applied to Rural Papago Advanced Health Care (STARPAHC). STARPAHC took advantage of strong White House support and successfully demonstrated telemedicine applications in service to rural and indigenous Americans (see Fig – 11). It became the genesis of several follow-on programs and terrestrial NASA testbeds that were key in furthering telemedicine for human spaceflight and also seeding the transition of space technology to public health and humanitarian welfare efforts [113, 119, 120]. Throughout this time, NASA continued to use remote methods to monitor the health of astronauts during all human spaceflight programs, including Gemini, Apollo, the Space Shuttle, and ISS missions.

The enduring practice of collecting and analyzing medical telemetry from astronauts has been instrumental in improving knowledge of the effects of spaceflight conditions on human physiology and facilitating remote and post-mission health care for spacefarers [119, 121, 122, 123]. In general, terrestrial supervision of healthcare for astronauts is essential because – at least to this point in the history of human spaceflight – it has not been common practice to include a dedicated doctor as a member of the relatively small crews placed into orbit or sent to the Moon. In fact, the ISS astronaut designated as the Crew Medical Officer is typically an individual without a professional clinical background who has been provided with 60 hours of supplemental medical training [104].

Fig – 12: NASA surgeon Schmid advises ISS crew (right). [128, 129]

While this might be sufficient for missions in low-Earth orbit (LEO), where the risk of long-term disease/health issues or serious injury requiring immediate on-site surgery might be manageable, it will almost certainly not suffice for more distant, lengthier missions [124]. Consequently, the idea of using telepresence to provide enhanced remote medical guidance and possibly administer medical treatment has been of interest to NASA for decades [113, 125, 126, 127]. As XR concepts have evolved and associated technologies have improved, the agency has been able to experiment with novel ways of compensating for the relative inexperience of the Crew Medical Officer (CMO), sometimes leveraging telepresence to deliver terrestrial medical subject matter expertise to astronauts.

In 2021, the agency demonstrated a vision of this when experimenting with a technique called holoportation, during which real-time 3D renditions of ground-based medical personnel were transmitted to ISS. Using the mixed reality capabilities of the Microsoft Kinect camera – a device originally designed for video games – NASA flight surgeon Dr. Josef Schmid and his Earth-based colleagues “holoported” to the station to provide medical consultation and interact with astronauts equipped with Microsoft HoloLens AR/MR HMDs. The team showed the potential of immersive, interactive spaceflight telemedical consultation, and also conducted the “first holoportation handshake from Earth in space” [17, 128]. For science fiction fans, this might conjure mental images of the Emergency Medical Holographic (EMH) program – a virtual, holographic, artificially intelligent medical officer designed to provide contingency clinical support to the crew of Star Trek: Voyager (see Fig – 12) [129].

Fig – 13: spaceMIRA telesurgery robot controlled from Earth (right), and aboard ISS (left). [102, 133]

Going beyond holoportation, NASA conducted a groundbreaking initial test of Earth-to-space telesurgery capabilities in 2024 using the Space Miniaturized In Vivo Robotic Assistant (spaceMIRA) surgical telerobot – a spaceflight adapted version of the MIRA Surgical System, manufactured by Virtual Incision Corporation [101, 112, 124, 130]. Of note, MIRA was the first miniaturized robotic-assisted surgery (miniRAS) system developed, and – as of mid-2024 – had been used to perform approximately 30 soft-tissue operations on Earth, specializing in procedures in the intra-abdominal space (e.g., bowel/colon resectioning) [101, 102, 131]. The system is very compact, and requires fewer setup, teardown, and clean-up procedures than larger “mainframe” RAS systems. Precision remote manipulation is facilitated by hand and foot controls, with visualization provided by a specialized console [111, 112, 132, 133].

Adapted for space travel, the compact spaceMIRA is about 76 cm long – 7.6 cm shorter than its terrestrial counterpart – and weighs about 0.9 kg [130, 133]. The system was launched to ISS in January 2024 to test telesurgery procedures – particularly the effects of microgravity and latency on such operations [101, 102].

During the demonstration, six surgeons controlled the spaceMIRA, precisely using the instrument’s two miniature “hands” and cutters to manipulate and dissect a series of rubber bands under tension, which simulated the consistency of human tissue (see Fig – 13) [102, 130]. All operations were deemed successful, with each of the surgeons contending with a round-trip latency of about 0.85 s, roughly the time it took for the commands and video signals to travel to and from ISS, orbiting approximately 400 km overhead [130, 133].

Dr. Michael Jobst, an accomplished colorectal surgeon who was one of the first doctors to use MIRA during medical trials, participated in the space-based RAS demonstrations; as of 2024 he had conducted 15 operations on human patients using MIRA [130]. Dr. Jobst indicated that with spaceMIRA “…you have to wait a little bit for the movement to happen; it’s definitely slower movements than you're used to in the operating room” [124]. To help compensate for the delay, ground controllers and technicians experimented with different scaling factors on the terrestrial control console used by the doctors, so that movements performed by surgeons on Earth would be translated into smaller motions executed by spaceMIRA [133]. The lessons learned from this experiment will be carried forward into future telesurgery tests, as NASA works to understand how to effect remote medical procedures on missions at greater distances and of longer duration, such as during Artemis and beyond [7, 130, 133].
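The motion-scaling idea described above can be sketched in a few lines. This is a hypothetical illustration, not the spaceMIRA console's actual control law: the source does not report the scaling factors used, so the function names, the example scale, and the "blind travel" estimate (how far the instrument moves before the operator sees any feedback) are all assumptions.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def scale_motion(hand_delta_mm: Vec3, scale: float) -> Vec3:
    """Translate a surgeon's hand displacement into a smaller instrument
    displacement. `scale` is a hypothetical console setting in (0, 1]."""
    if not 0.0 < scale <= 1.0:
        raise ValueError("scale must be in (0, 1]")
    return tuple(scale * d for d in hand_delta_mm)


def blind_travel_mm(hand_speed_mm_s: float, scale: float,
                    round_trip_s: float) -> float:
    """Distance the instrument covers during one round-trip delay, i.e.
    before the operator can see the effect of a command (assumes the
    hand moves at constant speed)."""
    if hand_speed_mm_s < 0 or round_trip_s < 0:
        raise ValueError("speed and delay must be non-negative")
    if not 0.0 < scale <= 1.0:
        raise ValueError("scale must be in (0, 1]")
    return hand_speed_mm_s * scale * round_trip_s
```

Under these assumptions, a smaller scale factor shrinks the distance the instrument travels during the roughly 0.85 s round trip reported above, which is one plausible reading of why scaling helps an operator cope with delay.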

NASA-sponsored research and planning documents suggest that additional experimental and operational XR-enabled activities will be conducted during future U.S.-led space initiatives, including exploration of the cislunar domain under the Artemis program [7, 17, 18, 23, 26]. These efforts will leverage the agency’s past achievements, benefit from ongoing academic research in the field, and take advantage of accelerating advancements in commercial XR-associated technologies. Below we briefly highlight several areas in which XR may play a role in supporting future human and human-machine long duration space operations, including those in cislunar space.

Future astronauts will certainly continue to leverage XR technologies for training and familiarization with spaceflight hardware and procedures. Crews are already utilizing HTC Vive headsets to orient themselves with Gateway via immersion within VR renditions of the future lunar space station (see Fig – 14) [134, 135, 136]. Furthermore, Boeing – one of NASA’s “Commercial Crew” spaceflight partners – has modeled the cockpit of its Starliner spacecraft using Unreal Engine, software typically used for game world development [137, 138]. The resulting “human-eye resolution” VR simulation enables highly realistic training on Starliner, which is intended to take personnel to the ISS and possibly to the Moon during the Artemis program.

Digital twins (DT) are synthetic, virtual analytic models and environments that exchange data with the actual physical systems they replicate [3, 139, 140, 141]. This type of technology may be used to improve synthetic training versions of surrogate space habitats, develop data-enriched interactive mission simulations, and devise collaborative AR/MR-enhanced procedures for astronauts, flight controllers, and scientific investigators [142, 143, 144]. A. Mazumder et al. [141] described how “Experimentable Digital Twins” (EDT) incorporated into virtual test beds (VTB) and DT-enhanced, haptic telerobotic manufacturing, maintenance, and operations methods have been studied for space applications. These approaches would allow for bidirectional information flow between computer replicas and actual spaceflight hardware [141, 145].

Fig – 14: Gateway familiarization via HTC Vive HMD. [135]

Augmented reality technology will also be utilized to support operations and maintenance during cislunar and interplanetary human spaceflight missions. NASA has expressed specific interest in outfitting next-generation Moon-bound spacesuits with AR features, primarily to enhance the performance of astronauts conducting EVAs around spacecraft and on planetary surfaces [146, 147, 148, 149]. The agency hopes to streamline task performance by integrating AR capabilities directly into spacesuit helmets, infusing astronauts’ views of the “real world” with digital data including technical information, real-time instructions, equipment and system status, and navigational guidance. This may improve the execution of both collaborative and independent duties. An AR interface could, for example, facilitate more immersive and effective assistance from local and Earth-based colleagues and subject matter experts (e.g., with visual cueing), or allow increased autonomy afforded by immediate and intuitive access to information contained in networked databases and reference systems.

To help realize this, an industry technology request for information (RFI) was distributed by NASA’s Center Innovation Fund between 2019 and 2021, soliciting an AR system with the ability “…to comfortably display information to the suited crew member via a minimally intrusive see-through display” [146, 150]. As envisioned, the system will be integrated directly into a helmet visor, in lieu of a head-worn device that might introduce equipment integration problems. NASA’s codename for this program is the Joint Augmented Reality Visual Informatics System (JARVIS). Notably, the acronym for this project is a nod to the AR/MR-enabled, natural-language artificial intelligence computer system that is integrated into Tony Stark’s powered “Iron Man” armor, residence, and “Avengers Tower” in the Marvel Comics and Cinematic Universe franchises [151]. Along with the JARVIS request, NASA released an associated AR-interface software design challenge specifically targeted at colleges and universities called the NASA Spacesuit User Interface Technologies for Students (NASA SUITS); the test week for this program was slated for mid-2025 [150].

In the realm of telerobotics, 50 years of successful joint space operations between NASA and the Canadian Space Agency (CSA) using the CSA’s Canadarm (Space Shuttle) and Canadarm2 (ISS) will continue into the Artemis program. The agency plans to permanently deploy Canadarm3 aboard the Gateway lunar space station [37, 38]. As its predecessor did aboard ISS, Canadarm3 will be used to move Gateway modules, inspect and repair station elements, assist with visiting spacecraft rendezvous and docking procedures, and facilitate astronaut EVAs and science activities. Similar to Canadarm2, the system will be designed for both autonomous and remote operations (i.e., controlled from Earth or Gateway) [37, 38].

While concrete plans have not been established, NASA officials have further indicated that intravehicular teleoperated and semi-autonomous robots – such as the Robonaut series and Astrobee – are important additions to space station crews [41]. Consequently, it is likely that the agency will deploy similar systems aboard Gateway to assist with and/or conduct maintenance and science operations. Further, since NASA was experimenting with more robust and survivable evolutions of Robonaut (e.g., the R5 “Valkyrie”), it is possible that some similar systems will be adapted to function in and around lunar and planetary surface facilities [99].

Relatedly, NASA may use a mixture of low-, moderate-, and high-latency teleoperations (LLT, MLT, HLT) to remotely control robots aboard and around cislunar and interplanetary spacecraft in the performance of maintenance and science-related functions [4, 26]. A combination of these techniques will likely also be used to operate robots on the surface of the Moon (and/or Mars) to assist with landing site selection and preparation/assessment of outpost locations [26]. Once long-term facilities and equipment are in place, teleoperated robots will probably be leveraged to operate and maintain them, particularly utilizing orbiting spacecraft (e.g., Gateway) as control platforms [26, 90].

In addition to utilizing teleoperated robots to help operate and maintain infrastructure, NASA and its partners will almost certainly investigate and implement LLT-based techniques for surface exploration during Artemis and beyond. Recall that the feasibility of XR-enabled LLT for such a purpose was demonstrated by both NASA and ESA in the early to mid-2010s when astronauts controlled terrestrial rovers from ISS [66, 69, 75, 87]. Given the reduced communications delay from lunar orbit to the Moon’s surface (i.e., relative to MLT/HLT from Earth), astronauts aboard Gateway – for example – will likely exercise similar methods to remotely operate robotic instrumented vehicles and explore the world below them. Doing so will enable them to guide and perform tasks and scientific experiments that benefit from a “human-in-the-loop”, but with enhanced system responsiveness and reduced risk. Types of operations may include [26, 69, 90, 91, 152]:

Fig – 15: Conceptual view from LLT workstation. [26]

Crew-Assisted Telerobotic Sample Return: Selection and acquisition of surface samples for return to Gateway and/or Earth for scientific analysis.

Specialized/Remote-Site Science Operations: Interactive in situ human-machine exploration of regions of the Moon that are particularly difficult (or expensive) for humans to access alone; and/or, which require human judgement, intuition, and/or expertise, but preclude frequent or robust human physical presence. For example, extended exploration of the lunar farside from Gateway via cislunar communications relays, or from crewed vehicles operating near the L2 Lagrange point.

Lunar Resource Exploitation: Investigation, assessment, and/or extraction of lunar resources in challenging or dangerous locations, such as the potentially volatile/resource-rich permanently shadowed areas of the Moon.

Minimization of delay is highly desired for dynamic exploration, which – intuitively – suggests that line-of-sight communications from lunar orbit should provide the most direct, low-latency path to the surface. That being said, leveraging LLT to perform operations from lunar orbit (or Mars orbit) – for instance – does not preclude also doing so from outposts or habitats located on the surface itself, if the proper communications architectures are available (e.g., surface antennas and satellite relays) (see Fig – 15) [26].

Similarly, XR-enabled LLT operations conducted by astronauts deployed at and around the Moon may be integrated with MLT/HLT operations executed by Earth-based investigators, provided the proper architecture exists. Despite the potential for increased latency introduced by the multiple “links” and users in the communications chain, fusing these approaches may provide opportunities for interactive/immersive human-to-human and human-machine exploration involving both local and terrestrial participants. A variety of control modes – e.g., real-time/direct, interactive/supervisory, and pre-planned/command-based – may be employed to mesh these different domains together [10, 69, 153, 154, 155].

In terms of actual human in situ investigation, the AR enhancements to NASA spacesuits mentioned earlier will probably also aid future surface explorers in coping with unfamiliar extraterrestrial environments. The austere, airless environment of the Moon – for example – challenged the perception of Apollo crew members in the late 1960s and early 1970s, presenting them with unusual lighting conditions that complicated judgement of size and distance of surface features [156]. K. Rahill [156] tested and confirmed these sensory effects on astronauts – dubbed “lunar psychophysics” – using virtual reality modeling and simulation techniques facilitated by the Unity 3D VR game engine [157]. It is possible that future surface exploration spacesuits will be equipped with AR/MR capabilities that will help astronauts compensate for issues arising from unique geographic and atmospheric phenomena – allowing them to more accurately, safely, and efficiently navigate and explore the landscape during EVAs.

Collectively, VR and AR/MR capabilities (i.e., deployed on the computers of principal investigators and integrated into NASA spacesuits), together with telepresence/telerobotics techniques, may be combined to enhance future collaborative interplanetary exploration. One very exceptional and promising example of this is the Aerospace Corporation’s Project Phantom, which utilizes VR and AR technologies to dynamically construct a virtual, interactive analog of a remote planetary landscape that can be mutually investigated by both terrestrial researchers and Moon- or Mars-based astronauts (see Fig – 16) [158]. The VR construct provides a means by which scientists and engineers – called “Phantoms” – can explore and interact with a 3D, reality-based, immersive “digital ecosystem” that has been populated with real-world data via the input of AR-equipped in situ astronauts – called “Explorers”. Using their AR-augmented suits, field Explorers can also access notes and annotations placed into the immersive environment by Phantom researchers to guide and inform investigations – thereby enriching the shared exploration system. Developers envision that the system will leverage GPS-like location services, allowing Explorers and Phantoms to inject waypoints, geotag significant findings, and jointly chart courses across a digital planetary landscape that is sustained by current information.

The Aerospace Corporation notes that the system differs from many current “digital twin” applications in that Phantom can truly pass data between the virtual and actual worlds bi-directionally, whereas the latter usually focus on updating the synthetic model with real-world information [3, 158]. In other words, when the virtual world is updated by Phantom scientists, deployed Explorers will see those updates overlaid onto their AR-enhanced visualizations. Conversely, when Explorers submit information (e.g., images, science data, remote sensing information) from sensors they carry or deploy, the virtual world is populated with their findings for reference by Phantoms and other Explorers. Presumably, data would also be injected by autonomous and teleoperated robotic vehicles. A Project Phantom prototype was tested in a surrogate Martian environment on Earth in 2022, incorporating a simulated Mars-equivalent time delay/latency between when Explorers and drones collected data and when the information was available within the system [158]. In the words of Engineering Specialist Trevor Jahn of the Aerospace Corporation:

“Project Phantom connects the real and virtual worlds together, so we can bring the scientific world into the operational space for astronauts on the Moon or Mars although turnaround time for communication is a factor, it’s arguably an improved form of bi-directional communication between astronauts or users on location and whoever is accessing the virtual model. Its potential is undeniable.” [158]
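The bi-directional, delayed exchange described for Project Phantom can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not the Aerospace Corporation's design: the class and method names, the use of a single fixed one-way delay, and the plain-string payloads are all hypothetical.

```python
import heapq
from dataclasses import dataclass, field
from typing import List


@dataclass(order=True)
class Message:
    deliver_at_s: float                 # only this field is used for ordering
    sender: str = field(compare=False)  # "explorer" or "phantom"
    payload: str = field(compare=False)


class SharedWorld:
    """Toy shared model: both sides write into it, and each update only
    becomes visible to the other side after a fixed one-way link delay."""

    def __init__(self, one_way_delay_s: float) -> None:
        self.delay_s = one_way_delay_s
        self._in_flight: List[Message] = []        # min-heap by delivery time
        self.visible_to_phantoms: List[str] = []   # Explorer findings received
        self.visible_to_explorers: List[str] = []  # Phantom annotations received

    def submit(self, now_s: float, sender: str, payload: str) -> None:
        if sender not in ("explorer", "phantom"):
            raise ValueError("sender must be 'explorer' or 'phantom'")
        heapq.heappush(self._in_flight,
                       Message(now_s + self.delay_s, sender, payload))

    def advance(self, now_s: float) -> None:
        """Deliver every message whose delivery time has been reached."""
        while self._in_flight and self._in_flight[0].deliver_at_s <= now_s:
            msg = heapq.heappop(self._in_flight)
            if msg.sender == "explorer":
                self.visible_to_phantoms.append(msg.payload)
            else:
                self.visible_to_explorers.append(msg.payload)
```

In this sketch an Explorer's finding and a Phantom's annotation travel in opposite directions through the same delayed channel, which is the property the text contrasts with one-way digital twin updates. A real system would also need conflict handling, persistence, and variable delays, none of which are modeled here.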

Similarly, Don Haddad – Planetary Software Engineer at NASA ARC and research affiliate at MIT’s Space Exploration Initiative – has conducted extensive research further highlighting the potential benefits of using digital twin and XR technologies to facilitate planetary exploration [159]. As an expert on coupling the real, virtual, and imaginary worlds, Haddad [160] described how an immersive, sensor-fed digital twin of a planetary environment could be developed, allowing researchers to explore an information-rich landscape mirroring the actual world.

Haddad’s work in this area evolved from a previous project constructing a digital twin of a portion of actual terrestrial wetlands in Plymouth, Massachusetts [161]. That virtual counterpart, called Doppelmarsh, was populated with information derived from a real-time network of instruments deployed in the physical area it replicated. This produced an “avatar landscape” fabricated from live video, weather and temperature information, and even sensory data that reflected the activity of animals in the area. Among other capabilities, the system allowed a roaming user to toggle between a texturized, realistic perspective and a data-based visualization of the landscape with a capability known as a “virtual lens”, or SensorVision [161].

Fig – 17:

The space exploration analog proposed and prototyped by Haddad [160] was called Doppelbots, within which a user could drive and operate a virtual, robotic vehicle (i.e., a Doppelbot) that mimicked the performance of an actual rover through a digital twin landscape. Significantly, like Doppelmarsh, the Doppelbots system was developed using the very popular Unity game engine and is accessible via a video game-like interface, incorporating many interface elements, navigation tools, and other control and visual features that would be very familiar to gamers [157]. According to Haddad [160], “…these game-inspired elements enrich the user’s interaction and enhance their navigation experience within the simulated environment of Doppelbot.” In fact, in designing Doppelbots, Haddad [160] drew substantial inspiration from real-time strategy (RTS) video games such as Command and Conquer – particularly in how the “player” can use a computer mouse to navigate their robot with a “bird’s-eye” view of the landscape, setting waypoints and chaining commands together. The user interface also provides the operator with a mini-map – a common feature of video games – and allows toggling between automatic and manual control of the Doppelbot rover.

Similar to the bi-directional information flow that the Aerospace Corporation [158] demonstrated with Project Phantom, Haddad [160] showed synchronization between the virtual Doppelbot rover and its real-world counterpart. In doing so, Haddad and colleagues illustrated how the synthetic rendition of the robot could be operated in the Doppelbots space while linked with the actual rover performing the same actions in the MIT Media Lab test facility. Moreover, to address the anticipated latencies encountered when performing actions during real-world space operations (e.g., on the Moon or Mars), Haddad [160] envisioned using a novel visualization construct that would allow users to see reflections of the last-known and predicted locations of actual deployed rovers along with the desired position of the user’s Doppelbot, all within the digital twin construct (see Fig – 17). This immersive, “4D” depiction of past and possible present locations of surface vehicles provides a very intuitive and responsive space for remote operators dealing with “in-game” lag, so to speak. According to Haddad:

“The integration of the simulated and actual rover in a single environment provides a comprehensive understanding of the rover’s status, actions, including simulations of time lag associated with communication. This visualization concept aims to help operators maintain situational awareness and ensure effective and timely control over space operations and monitoring.” [160]
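The notion of showing a rover's last-known and predicted locations under communication lag can be sketched with simple dead reckoning. The source does not say how Doppelbots computes predicted positions, so the constant-velocity model below is a stand-in technique, and the `Telemetry` fields and function name are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Telemetry:
    """Last state report received from the rover (hypothetical fields)."""
    t_s: float     # time at which the rover measured this state
    x_m: float     # position east, meters
    y_m: float     # position north, meters
    vx_m_s: float  # velocity east, meters per second
    vy_m_s: float  # velocity north, meters per second


def predict_position(last: Telemetry, now_s: float) -> Tuple[float, float]:
    """Estimate where the rover is now, assuming it kept the velocity it
    reported in its last telemetry (constant-velocity dead reckoning)."""
    age_s = now_s - last.t_s
    if age_s < 0:
        raise ValueError("telemetry cannot be newer than the current time")
    return (last.x_m + last.vx_m_s * age_s,
            last.y_m + last.vy_m_s * age_s)
```

An operator display could then draw three markers: the last-known position (`last.x_m`, `last.y_m`), the predicted position returned here, and the commanded position of the virtual rover. The prediction degrades as telemetry ages or the rover changes course, so it is best read as a hint rather than ground truth.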

Given these efforts, it is not difficult to see how DT concepts might be integrated with other XR-enabled space operations – such as exploration telepresence (LLT, MLT, and HLT), telerobotics, and AR/MR-enhanced human surface expeditions – to deliver highly enriched, data-driven, collaborative human-machine exploration experiences during future cislunar and interplanetary endeavors.

Space is an extreme environment, and decades of research have revealed that astronauts confront unique physiological challenges that can make living and working there uncomfortable at best and – in the worst cases – life threatening or fatal. In addition to the immediate hazards posed by things such as ionizing space radiation and potential exposure to vacuum, astronauts face lingering biological effects resulting from extended time in closed-loop, microgravity environments [122, 162, 163, 164]. These can include:

Space motion sickness

Loss of muscle and bone mass

Orthostatic intolerance

Spatial coordination issues

Eye/vision problems

Dietary complications

Respiratory issues

Increased exposure to microbial contaminants and infection

Beyond the bodily impacts, spaceflight operations also require consideration of the mental health implications of isolation, confinement, and small group dynamics under tremendously stressful conditions [122, 162, 163, 164, 165]. Extreme distance from family, friends, and operational support personnel – and the need to function optimally in life-threatening situations for protracted periods of time – exacerbate the psychosociological stressors associated with human spaceflight (e.g., depression, stress, loneliness, boredom). Stays aboard orbital space stations and missions to extraterrestrial planetary bodies of interest can vary from days to years, and all of these potentially debilitating issues – physical and mental – are compounded during extended duration missions. Further complicating these problems is the typical absence of substantial specialized medical expertise resident on the space crews [103, 104].

In our review of XR in the U.S. space program we highlighted a few of the numerous experiments that researchers have conducted in space to evaluate the potential merits of XR in addressing the medical needs of astronauts [15, 17, 61, 102, 130]. These investigations and many more like them will undoubtedly continue during Artemis, with some use cases seeing operational implementation. Here, based on a review of available literature, we outline several of the most likely ways that XR-associated technologies will be pursued to ensure the physical and mental health of astronauts voyaging to the Moon and beyond.

NASA has already experimented with VR HMDs on ISS as a means of improving the mental health of astronauts [61]. Other clinical tests indicated that XR (i.e., VR/AR/MR) can be used to help reduce stress by allowing people to temporarily immerse themselves in synthetic, realistic renditions of environments that are comforting or calming to them [17, 165, 166, 167]. Some experiments specifically directed at spaceflight crews indicated that including simulated smells along with the usual visual and auditory facets of VR simulations enhances the sense of presence in such environments [165, 167, 168]. This can improve relaxation effects when astronauts are allowed to virtually “escape” for a time from the relatively small, industrial volumes of their spacecraft. It is possible that NASA will continue to seek ways to apply VR in this capacity, enabling astronauts to occasionally experience more expansive natural or fanciful virtual worlds. Doing so may help them cope with mental health issues that can arise due to long duration confinement, isolation, and distance from Earth. Likewise, using VR/AR to learn new skills (e.g., languages) or play games can be a healthy distraction, help lessen boredom and depression, and improve morale [17, 122, 165].

The vast distances, extended mission times, and high operational tempos associated with spaceflight can result in prolonged periods of time out of contact with family and friends, which can be demoralizing for astronauts. Extended reality devices and methods can assist in enriching communication with loved ones. In a fairly straightforward example, NASA demonstrated that the holoportation technique used to “beam” virtual renditions of physicians to ISS in 2021 could also be used to facilitate immersive family conferences for astronauts, allowing them to feel more present with their loved ones [17].

Moreover, XR may be of particular help when latency or mission parameters preclude dynamic/real-time or frequent contact during long duration space missions. Some proposed communications solutions include rendering virtual avatars of Earth-bound family members that can be used to interact with crew members via VR/AR/MR interfaces [165, 167, 169]. Digital versions of family members might deliver recorded messages to astronauts, for example, or be ported into shared, virtual environments (VE) to facilitate actual or simulated conversations driven by artificial intelligence (AI) [165].

D. L. Hansen et al. [169] took these concepts further and considered methods that deliberately tried to account for the increased latencies associated with long-distance space travel. They developed an approach called the Designing for Extended Latency (DELAY) Framework, coming up with several XR-like methods to compensate for communications lag in an effort to maintain and enhance feelings of social connectedness, presence, and immersion between astronauts and their distant families. These included [169]:

A mobile app to facilitate recording and sharing of common experiences (e.g., eating meals, exercising) between crew members, friends, and family, delivered at appropriate relative times during their days; and ‘turn-based’ activities that produced shared, integrated videos.

A concept that delivered pictures to digital “smart frames” or played messages from each other when certain actions were detected (e.g., walking into a room) under an Internet of Things (IoT) type system.

A video game that allowed crew members and families to meet, play alongside, and battle against AI avatars of one another, with play styles updated using machine learning (ML).

Similarly, P. Wu et al. [168] conceptualized a system called A Network of Social Interactions for Bilateral Life Enhancement (ANSIBLE). ANSIBLE created shared, group indoor and outdoor virtual environments that astronauts, family, and friends could use for asynchronous interaction via avatars and virtual agents (VA) (i.e., AI-driven avatars) representing each other. In many ways this construct is similar to virtual worlds used in immersive video games and online virtual social communities that have existed since the mid-1990s, including Worlds Chat, Alpha World, and the thriving Second Life VE (see Fig – 18) [170, 171]. Purely AI agents (i.e., “embodied” agents) were also available in ANSIBLE as helpers, consultants, and conversation partners, akin to non-player characters (NPCs) or bots in video games [172, 173, 174]. P. Wu et al. [168] envisioned that users and entities could even exchange personal care packages with each other within ANSIBLE. Note that the term ANSIBLE is a tribute to a device from science fiction that enables instantaneous (i.e., “faster than light”) communications between any two points in space [175]. The sci-fi “ansible” was invented by author Ursula K. Le Guin, who wrote acclaimed books such as The Left Hand of Darkness (1969), and reused by other renowned sci-fi writers, including Orson Scott Card, author of the famed novel Ender’s Game (1985). While not instantaneous, it is possible that systems based on similar concepts to ANSIBLE or DELAY could be used to help alleviate stress associated with the time and distance separating future astronauts and their loved ones.

In what some call “exergaming”, the integration of XR devices such as stereoscopic HMDs with exercise equipment can increase motivation, enjoyment, and performance – particularly when sensations of immersion and presence are enhanced [176, 177]. One study by S. Grosprêtre et al. [178] also indicated that XR-enabled video games combined with exercise promoted cognitive enhancement. To those ends, it is possible that NASA will install systems like the VR-enhanced stationary bicycle, tested aboard ISS during the Immersive Exercise Experiment, on Gateway and other future stations, spacecraft, and lunar/planetary surface outposts supporting semi-permanent human occupation [17, 60]. Given space, mass, and power constraints, the agency may consider adapting the concept to other types of exercise equipment, such as a specialized rowing machine [17].

Extended reality technology can offer significant benefits to delivering routine and critical healthcare to astronauts, complementing and improving the support provided by on-site personnel who may only have peripheral clinical responsibilities, such as the Crew Medical Officer (CMO). Many of the implementations of XR in medical support for long duration human spaceflight operations (e.g., Artemis and beyond) will probably be directed at increasing the autonomy of the crew, ideally enabling them to address some health concerns independent of immediate support from Earth. Once again invoking parallels to science fiction, it is possible that a full-fledged EMH-type solution might be available someday in the far future, as depicted on Star Trek: Voyager [129]. Until then, extended reality methods supporting remote healthcare for cislunar and interplanetary astronauts may include:

AR-Guided Medical Procedures: Using AR displays and voice guidance to visually and audibly navigate astronauts through medical procedures. This may be done by using XR systems that are connected to local virtual libraries and knowledge bases (possibly AI- and/or ML-enhanced), or via XR-enabled cues/instructions from terrestrial doctors. Facets of these capabilities were explored under Operation Sidekick, with remote guidance delivered via the Microsoft HoloLens. Additionally, a Small Business Innovation Research/Small Business Tech Transfer effort solicited by NASA in 2021 called the Intelligent Medical Crew Assistant (IMCA) project resulted in prototype procedures and devices, and a detailed requirements capture (see Fig – 19). [15, 17, 18, 59, 122, 150, 179]

AR-Facilitated Medical Inventory Assistance: Augmented reality methods might be employed to help space crews without significant medical training inventory medicine and other health-related supplies on future spacecraft and outposts. Two NASA experiments – MedChecker (AR-enabled) and the Autonomous Medical Officer Support Software (AMOS) (non-AR) – suggest that taking advantage of an AR HMD’s camera, with appropriate software, could allow astronauts to take stock of medical supplies more efficiently, without ground assistance. [17, 180]

Holoportation: Transmission of medical expertise to remote space facilities using AR/MR technology may continue, as demonstrated by ISS astronauts and Dr. Josef Schmid (NASA flight surgeon) during the 2021 holoportation experiment. Bidirectional, immersive XR conferencing may help spatially separated medical personnel evaluate astronauts’ health issues and provide more effective guidance to address their needs. Further, if also enhanced with AI/ML and virtual agent techniques, this approach might be adaptable to periods/circumstances with higher communications delay. [17, 18, 165]

Telesurgery: The spaceMIRA experiment conducted in 2024 aboard ISS was a significant step toward illustrating the potential applications of telesurgery in the space program. However, additional research, development, and experimentation are required to develop spaceflight-worthy systems and protocols capable of routinely enabling terrestrial doctors to conduct remote operations for astronauts. This is especially true for cases of high latency. Focus areas include instrument tracking, haptics, scene visualization/recognition, patient monitoring and diagnostics, administration of anesthesia, physician voice recognition/cueing, RAS training/experience, AI/ML and AR/MR integrations, and expansion of telesurgery use cases (i.e., beyond soft tissue abdominal applications). Initially, telesurgery applications may focus on assisting the CMO rather than replacing them. Ultimately, in the absence of an onsite, highly trained surgeon, the deployment of a robot capable of confidently performing autonomous medical procedures would be desired. [101, 102, 104, 181]

As with other XR-enabled space applications, one of the main issues to overcome in space telemedicine is communications delay/latency. However, it is worth noting that future cislunar and interplanetary human spaceflight endeavors will likely include the establishment of space stations (e.g., Gateway) and surface outposts. Consequently, in years to come, it is possible that time-dominant XR-enabled telemedicine scenarios (e.g., telesurgery) could end up being addressed by doctors based at these locations rather than on Earth, thereby reducing latency for “in-system” healthcare. This would be similar to teleoperating robots from orbiting spacecraft via LLT techniques in the performance of surface exploration of the Moon (or Mars).
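The scale of the latency problem, and the benefit of basing clinicians “in-system”, can be illustrated with simple light-time arithmetic. The Python sketch below computes only the minimum physical signal delay (distance divided by the speed of light); real links add relay, processing, and scheduling overhead. The Earth–Moon and Earth–Mars distances are approximate published values, while the 70,000 km station-to-surface range is purely an illustrative assumption and is not a figure from the sources cited here.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # defined value of c, in km/s

# Approximate distances in km. The last entry is a hypothetical
# station-to-surface range chosen only for illustration.
DISTANCES_KM = {
    "Earth to Moon (mean)": 384_400,
    "Earth to Mars (near closest approach)": 54_600_000,
    "Earth to Mars (near farthest separation)": 401_000_000,
    "Orbiting station to lunar surface (assumed)": 70_000,
}


def one_way_delay_s(distance_km: float) -> float:
    """Minimum one-way signal delay in seconds over the given distance."""
    return distance_km / SPEED_OF_LIGHT_KM_S


def round_trip_delay_s(distance_km: float) -> float:
    """Minimum command-and-response delay in seconds (two one-way legs)."""
    return 2.0 * one_way_delay_s(distance_km)


for label, km in DISTANCES_KM.items():
    print(f"{label}: one-way {one_way_delay_s(km):.2f} s, "
          f"round trip {round_trip_delay_s(km):.2f} s")
```

Under these assumptions an Earth–Moon round trip costs a little over 2.5 seconds, an Earth–Mars round trip ranges from roughly 6 to 45 minutes, and a station-to-surface link stays well under one second, which is why locally based doctors are attractive for time-critical procedures.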

Science fiction-inspired visions of virtual worlds, intuitive human-machine interaction, and remote “presence” fueled many developments in extended reality technology and techniques [3]. Unsurprisingly, XR has been investigated and applied across a spectrum of use cases throughout NASA’s history, including human-machine exploration, personnel training, spaceflight operations and maintenance, and ensuring the health and safety of astronauts [1, 2, 4, 15, 16, 17, 18]. Notably, as this review highlights, aspects of XR (e.g., VR, AR, MR, telerobotics, telepresence) have in many cases evolved synergistically with space exploration requirements, occasionally being directly catalyzed by them (e.g., telemedicine) [50, 52, 69, 75, 76, 113]. Space exploration initiatives also offer fertile ground for advanced integration of XR subdisciplines to address complex scenarios involving human-machine, human-human, and human-environment interaction; this becomes even richer and more interesting when associated fields such as digital twin and video game technology are factored in [156, 158, 160, 161, 165, 168, 169].

Finally, the importance of XR as an enabler for long duration spaceflight is evident, with significant applications in future cislunar and interplanetary exploration, as promised by programs such as Artemis – elements of which have been in development since 2010 [7, 26, 71, 86]. The incremental build-up of capabilities on and around the Moon during Artemis has been deemed by NASA as “essential to preparing for human exploration of Mars”, and it is likely that XR applications developed to explore our “nearest neighbor” will be ported to the Red Planet [7, 26]. Overall, it is clear that such technology can help our minds and bodies voyage to other worlds – literally and virtually – in highly immersive, intuitive, and collaborative ways. We hope this review stimulates interest and serves as a starting point for continued investigations, particularly those focused on using XR to make the thrill of space exploration more accessible for all of us.