International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 02 | Feb 2025 www.irjet.net p-ISSN: 2395-0072

Savan C. Becker1 , Michael E. Johnson, PhD2

1 Department of Engineering, Capitol Technology University, Laurel, MD, USA

2 Adjunct Professor, Department of Engineering, Capitol Technology University, Laurel, MD, USA

Abstract - Extended reality (XR) is by no means a new field, with conceptual and cultural references permeating science fiction (sci-fi) since the early 20th century, and technical origins going back to the 1950s and the 1960s [1, 2]. However, in recent years, advances in computing, visualization, consumer electronics, and communications technologies have made XR more accessible to the public. Since the mid-2010s, games and applications on cell phones, for instance, have seamlessly and ubiquitously started to integrate XR technology into everyday life [3, 4, 5]. This review article provides a source of comprehensive background information that can be used as a foundation for additional research and investigation of XR and its past, present, and future applications. To that end, we discuss technologies and concepts associated with several XR-associated fields, including virtual reality (VR), augmented reality (AR), mixed reality (MR), digital twins, telepresence, and telerobotics. We further include some historical context to enrich appreciation for the origins of these disciplines, the relationships between them, and their impact on society.

Key Words: Technology, Extended Reality, Virtual Reality, Augmented Reality, Robots, Telerobotics, Telepresence, Human-Machine Interfaces, Space Exploration, Science Fiction, Gaming, Video Games, Cyberspace, Digital Twins, Virtual Worlds, Synthetic Environments, Immersive Worlds

Tobeginour review,we notethat“extended reality”(XR) is an umbrella term that, as concisely stated by O’Donnell [4], covers “…all real-and-virtual combined environments and human-machine interactions generated by computer technology.” Thisincludes virtualreality(VR)augmented reality (AR), mixed reality (MR), and – by extension –many forms of telepresence and telerobotic applications [3, 6, 7]. Interestingly, the term “Extended Reality” and usage of its abbreviation in this context were actually coined in 1991 by Steve Mann and Charles Wyckoff, decades before penetrating mainstream awareness [8]. Wyckoff – a photochemical expert and forerunner of high dynamicrange(HDR)photographyinthemid-20th century – invented “XR” or “eXtended Response” film in 1962; XR filmenabledthecaptureofextremelyfastphenomena(i.e., nuclear explosions) with unprecedented detail by using photosensitive emulsion layers with different sensitivities or “speeds” [9, 10, 11]. Mann, with a background in physics and engineering, was a doctoral student at the

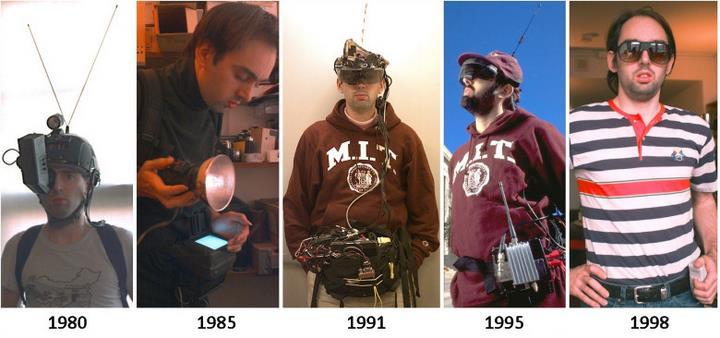

Massachusetts Institute of Technology (MIT) studying media arts and sciences when he collaborated with Wyckofftoconceptualizeandbuild“XRvision”devicesfor early wearable computers [8, 11, 12]. Tangentially, the word “cyborg” was first used by Manfred Clynes and NathanKlineintheirarticle Cyborgs and Space todescribe a human being augmented with technological "attachments" to facilitate space exploration [13, 14, 15]. Mann–whooftenusedtheterm“cyborg”self-referentially – is widely considered the “Father of the Wearable Computer”, inventing the first wearable AR computers, smartwatch,anddigitaleyeglasses–precursorstotoday’s well-known VR/AR head mounted displays (HMD) and otherwearabletechnology (SeeFig–1)[16,17,18,19].

Fig – 1: Steve Mann’s early wearable XR devices. [16]

Virtual reality (VR) is a subset of XR which – in its most widespread, recognizable form – is made possible by a combination of technologies that provides human interfaces into realistic, immersive three-dimensional (3D), simulated/artificially generated worlds or virtual environments (VE) [2, 3, 20, 21, 22]. An immersive VR system introduces users into a 360-degree synthetic reality designed to envelop them with simulated visual and (generally) auditory input that is distinct from the real world. Ideally, this alternate reality completely takes over a person’s senses, changes as they move through it via tracking of a person’s head, body, and extremities, and is indistinguishable from “real life” [23, 24]. The artificial environments simulated with VR can range from “mundane” recreations of familiar venues, to challenging, seldom-seen environments (e.g., deep sea, space, planetary landscapes), and entirely fictitious worlds derived from science fiction and fantasy.

Not surprisingly, as with many technological innovations, VR has very strong roots in science fiction. Historically, many consider the origins of the concept to be in the 1935 science fiction story “Pygmalion’s Spectacles” by Stanley Weinbaum, wherein the main character is able to experience an artificial sensorium of a fictional “holographic” world via the use of a pair of goggles [22, 25]. The first documented use of the term “virtual reality” is said to have been in the 1982 novel The Judas Mandala by Damien Broderick [26, 27].

The concept of VR was popularized by media in the late 20th century, following the promulgation of “cyberspace” – a term invented by sci-fi writer William Gibson in 1984 in his seminal novel, Neuromancer [28]. In Neuromancer, Gibson described cyberspace as “a consensual hallucination experienced daily by billions of legitimate operators in every nation”; users accessed this “matrix” neurologically, via brainwave-reading electrodes connected to sleek, sophisticated consoles and decks. Neal Stephenson further developed this idea in his classic book Snow Crash [29], in which hacker and pizza delivery driver “Hiro Protagonist” navigated a complex future-scape dominated by commercial franchises and sovereign corporate enclaves. The world of Snow Crash is rife with information piracy and viruses that plague the avatars (i.e., virtual bodies) of users who frequently venture into the novel’s vast virtual landscape for entertainment and commerce. This expansive digital domain called the “metaverse” is accessed using sophisticated computer terminals with advanced, laser-based optics and graphical rendering capabilities. Notably, the word “metaverse” was invented by Stephenson and co-opted years later by Facebook/Meta to describe that company’s XR ecosystem [30]. Arguably, via Neuromancer’s “cyberspace” and Snow Crash’s “metaverse”, Gibson and Stephenson precipitated the massively popular “cyberpunk” sci-fi subgenre and social culture, established a widely accepted view of our technological future, and inspired the commercial drive toward it.

Similar concepts in motion pictures such as Tron (1982), The Lawnmower Man (1992), Strange Days (1995), and The Matrix (1999) also contributed to public awareness and fascination with VR technology during the late 20th century. In one of the most widely recognized adaptations of a virtual reality construct, the television series Star Trek: The Next Generation (1987-1994) featured a fully immersive, multi-user “Holodeck”. Films such as Tron: Legacy (2010) and Ready Player One (2018) continued to promote visions of VR and immersive synthetic worlds to the public into the 2000s, and the mass adoption of the internet broadened familiarity and appreciation for the subject [20, 23, 27, 31, 32, 33].

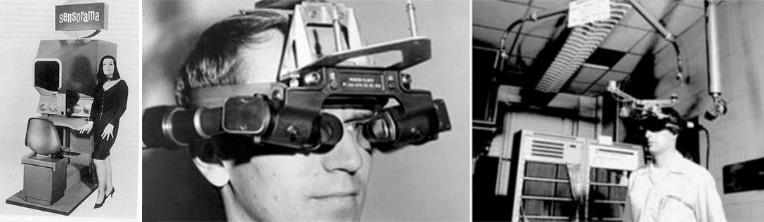

In terms of practical implementation, Morton Heilig, a cinematographer and documentary director, is often credited with the first investigations of the VR concept, beginning with his invention of the “Sensorama Machine” in 1962 (See Fig – 2) [20, 21, 34].

Fig – 2: Heilig’s “Sensorama” (Left) [34]. Sutherland’s “Sword of Damocles” (Center, Right) [25].

Heilig patented this device ten years later, marketing an unsuccessful, arcade-like machine that promised to take “the user into another world” [35]. By combining a 3D motion picture with stereo sound, smell, motion and tactile feedback (i.e., vibrations), the Sensorama provided users with the illusion of activities such as riding a motorcycle, flying in a helicopter, and going on a date [2, 22, 34]. Heilig also sketched out preliminary designs for a head mounted display (HMD) – a device designed to immerse the user in a simulated environment; this would become a crucial element of some future VR systems.

Despite Heilig’s initial forays, many consider Ivan Sutherland’s “Ultimate Display,” an HMD conceptualized in 1965, to be the first blueprint for a true VR system – one that the inventor claimed would replicate reality so well that the user would not be able to differentiate it from the real world (See Fig – 2) [22, 25]. This is likely because the Ultimate Display concept incorporated key ideas that are widely considered to be fundamental qualities of the VR experience [2, 21, 36, 37]:

Immersion: Using stereoscopic viewing, head tracking, and similar technologies to create the sensation of being within a world created and maintained by a computer in real-time. This included augmented 3D sound and tactile (i.e., touch) feedback and the ability to interact with objects.

Navigation: Giving the user the ability to roam about the artificial world.

Sutherland was of the mindset that forthcoming advances in computer technology would enable complete user immersion within artificial constructs [22, 37]. Significantly, with his student Bob Sproull, he subsequently created the “Sword of Damocles”, the first VR/AR HMD – which integrated computers into the fabrication of simulated environments and head-tracking to enable the navigation of his virtual worlds [25, 34, 35, 38].

Just as William Gibson is considered to be the “father of cyberspace and cyberpunk,” Jaron Lanier – a leading computer scientist and pioneer in multimedia art – has been called the “father of virtual reality” [39, 40]. After working at Atari, Lanier co-founded Virtual Programming Languages (VPL) Research in 1985 with Thomas Zimmerman. VPL was one of the first companies to develop and market commercial and industrial VR systems including the DataGlove wearable hand interface and the EyePhone HMD (see Fig - 3) [22, 39]. While there are earlier uses of the phrase in science fiction, Lanier is credited with coining the term “virtual reality” in 1987 as a consolidated reference for immersive simulated environments [20, 22, 27]. There were three key points to the definition attached to his term [41, 42]:

The virtual environment is a computer-generated 3D scene, providing a high degree of realism.

The virtual world is interactive, sensing and responding to the user’s input, and reflecting the consequences via a display or HMD.

The user is enveloped in this virtual environment.

Often-cited texts such as Virtual Reality by Rheingold [21] and Silicon Mirage: The Art and Science of Virtual Reality by Aukstakalnis and Blatner [41] provided comprehensive treatments of the history of the developing field of VR, its enabling science and technology, and applications in commercial industry, academia, government, and entertainment; Becker [43] also gave a brief historical review of VR and its applications in the space program until the early 1990s. Captured in these works are descriptions of key enabling technologies and techniques associated with VR systems such as head/body tracking, stereoscopic video, immersive sound, gesture recognition, and physical feedback. Also described are implementations of VR that offered varying degrees of immersion without the use of an HMD, including the Cave Automatic Virtual Environment (CAVE™), pioneered in the early 1990s at the University of Illinois at Chicago. By projecting stereoscopic images on the walls, floors, and ceilings of a room-sized cube, a CAVE™ system generated an immersive illusion of being in a virtual world; a head/gaze-tracking system could continuously change the views so that the user was always presented with a stereoscopic perspective. In many ways, the CAVE can be considered an early version of the “Holodeck” as envisioned in Star Trek and may someday evolve into a fully realized adaptation of its sci-fi counterpart.

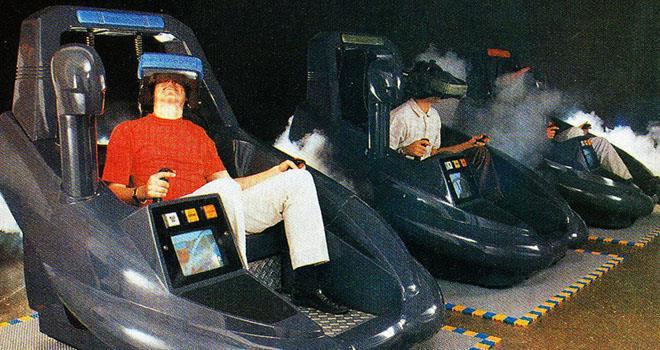

Fig - 4: Early commercial Virtuality pods. [25]

One of the first widespread instances of virtual reality for public entertainment was developed by pioneering VR tech company Virtuality in 1991 [25, 44]. Founded by Dr. Jonathan D. Waldern, who conducted research in virtual reality during his doctoral program, the company developed and distributed specialized VR interfaces and games to arcades and “Virtuality Centers” across the United States [45]. In addition to specialized handheld controllers and joysticks, Virtuality equipment included the “industry standard” 1000CS and 1000SD HMD helmets; 1000CS, 2000SU, and 2000SD standing platforms with motion tracking; and the seated 1000SD rig that enabled piloting of vehicles (see Fig – 4).

The 1000CS (Amiga 3000-based) and 2000SU/SD (Intel 486-based) platforms enabled the first ever multiplayer networked game for immersive VR, Dactyl Nightmare [46, 47]. The game took place on an eerie, multi-level map with tiered platforms, on which players used “grenade launcher” weapons to battle each other and a circling, attacking pterodactyl enemy. Other Virtuality games, distributed by Arcadian Virtual Reality, L.L.C., included robot shoot-‘em-ups, fantasy adventures, space battles, and air and robot combat simulators (facilitated by the sit-down 1000SD). The company’s streamlined gear was important in establishing the iconic, pop-cultural look of virtual reality tech, and familiarizing the public with VR environments. Many New Yorkers (including one of the authors), for example, had their first VR experience within a multiplayer version of Dactyl Nightmare, installed in 1991 at Manhattan’s South Street Seaport “Virtuality Center” [48]. While Virtuality is no longer in business, ownership of its technology (and Arcadian software) has transferred to ViRtuosity Tech, Inc., which continued to market industrial and entertainment VR systems as of 2024 [49].

Since the introduction of VR into mainstream culture in the 1990s, the philosophical and practical definitions of what constitutes “virtual reality” became somewhat open to interpretation. Other terms and phrases – including “cyberspace,” “synthetic environments,” and “artificial reality” – became used synonymously with VR in industry and media, contributing to ambiguity over what is and isn’t considered a VR system. As an example, in Castronova’s book Synthetic Worlds: The Business and Culture of Online Games – a relatively early, comprehensive look at the emerging online gaming experience – the author coins the now pervasive term synthetic world while describing VR technology:

“So far I have been referring to the technology in question as a ‘practical virtual reality’ tool, a way to make decently immersive virtual reality spaces practically available to just about anyone on demand. For the most part, I will refer to these places as synthetic worlds: crafted places inside computers that are designed to accommodate large numbers of people… places created by video game designers. Chances are, if you are under 35 years old you know exactly what that means, because you have been playing in synthetic worlds since you were a kid and you know that they have moved online.” [50]

This description includes concepts that are core to online/networked console and PC-based games – particularly those set in expansive, interactive multiplayer artificial environments, game worlds, or virtual worlds (see Fig – 5). Similarly, since the 1990s, many desktop or “look-through” systems – as characterized by video games and industrial implementations such as commercial flight simulators – have sometimes been considered forms of VR at its lowest level, since they can provide sophisticated interaction with a simulated environment [21, 43, 51]. This is especially true when advanced monitors/displays, graphics cards, and input devices are used.

More recently, a robust article by Cipresso et al. [36] captured several definitions of VR gleaned from literature on its developmental history that echo three common facets: immersion, interaction, and the perception of being present in an environment. Achieving these depends greatly on the types of technologies used to deliver the experience to the user. Consequently, “VR” can range from non-immersive (i.e., traditional desktop screens/monitors), to semi-immersive (i.e., stereographic 3D monitors), and more fully immersive implementations that take advantage of high-definition HMDs, multidimensional sound/audio, spatial/movement tracking, and robust physical feedback for the user, including haptics.

Haptic feedback is a technology that uses the sense of touch to communicate physical sensations from actual or simulated real-life interactions to a user, such as pressure, vibrations, textures, and other “touch-related” sensations [7, 52, 53]. This is often done with the use of haptic-capable interface devices (e.g., helmets, controllers, gloves, body suits) equipped with actuators and transducers designed to interpret and relay physical feedback. Haptics have become another cornerstone technology associated with XR games/applications and are used to maintain the illusion that users are immersed in a physical environment (simulated or remote) [7, 54, 55].

Fig – 5: Game worlds from multiplayer, networked video games (e.g., Bungie’s Destiny) share VR principles. [51]

A review of literature quickly reveals substantial overlap and often interchangeable usage of several terms and concepts within the field of haptics, including force feedback, kinesthetic feedback, tactile feedback, and cutaneous feedback. However, parsing the multiple interpretations leads to two general categories of haptic feedback [52, 53, 56, 57, 58, 59, 60]:

Kinesthetic (or Force) Feedback: Applies to interaction forces between a user and a virtual (or remote) object or environmental elements. Generally, this type of feedback relates to sensations received via the limbs (e.g., arms, hands, legs, feet, head, etc.) of the user, and is also responsible for spatial and temporal awareness of the movement and position of those extremities. Kinesthetic feedback can enable a user to perceive the size, weight, and density of an object through physical sensation, and can be implemented passively (i.e., by impeding the user’s movement) and/or actively (i.e., by actually moving the user).

Tactile (or Cutaneous) Feedback: Information passed through sensors in the skin from simulated (or remote) objects and environmental stimuli, with particular reference to the spatial and temporal pressure, contact, or traction (e.g., pulling, pushing, or grip) sensations. This type of feedback can be responsible for replication of texture, shape (including edges), temperature, and vibrations.

In academic literature, cutaneous feedback is often nuanced as the form of tactile feedback that deals particularly with the sense of touch – i.e., sensation directly associated with feeling against the skin [58, 60, 61]. Furthermore, thermal feedback (e.g., temperature) and electromagnetic feedback (e.g., using magnetic fields to simulate touch) have also been isolated as subcategories of physical feedback [53]. However, in common usage (particularly for consumer products) all of these disciplines are typically lumped under the umbrella term of “haptics”. Overall, the inclusion of any/all of these physical feedback mechanisms can significantly enhance the level of immersion for a user and lead to an increased degree of presence – an important concept to consider in the field of VR/XR.

As VR was gaining academic and cultural traction in the early 1990s, Thomas Sheridan [62] – pioneering roboticist and professor in MIT’s Department of Mechanical Engineering – considered its connections to the concept of presence. Sheridan [62] described presence as a sensation that is difficult to describe, being “subjective… and not so amenable to objective physiological definition and measurement.” However, he suggested that there could be some crude measurements of presence in relation to VR, particularly in light of emerging technologies such as “…high-density video, high-resolution and fast computer graphics, head-coupled displays, instrumented ‘data gloves’ and ‘body suits’, and high-bandwidth, multi-degree-of-freedom force-feedback and cutaneous stimulation devices.”

Sheridan [62] went on to posit three principal determinants of the sense of presence in a virtual world:

1. The extent of sensory information: The density of transmitted “bits” of information to appropriate sensors available to the user.

2. Control of relation/orientation of sensors to environment: For example, the ability of the observer to modify their point-of-view to enable visual parallax or an expanded field-of-view, change their head position to alter the audio response of stereo/binaural hearing, and perform haptic (i.e., physical feedback-driven) searches of their surroundings.

3. Ability to modify the physical environment: The amount of control and interaction available to actually change objects in the environment or their relation to one another.

Since then, researchers have devised various models to frame the concept of presence in synthetic environments. Tangential to Sheridan, Michael McGreevy – a pioneer of VR implementation at NASA – was developing the first inexpensive, wide field-of-view (WFOV), tracked HMD (see Fig – 6) [25, 34, 63]. McGreevy alluded to the significance of grounding a participant in a virtual world, indicating that:

“…connecting the user’s space and the virtual display space is essential for creating a sense of presence in a virtual reality. Several strategies can be used to support this linkage by building a model of the user into the simulation system. Typically, however, the observer is hardly modeled at all, other than as a pinhole camera, the simplest model of the eye. Still, even the minimal pinhole camera model of the user can be used as a spatial link between the user and the depicted scene.” [63]

Fig – 6: NASA: first low-cost WFOV tracked HMD. [25, 34]
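The “pinhole camera” model of the user that McGreevy mentions can be illustrated with a short sketch. This is not taken from McGreevy's work; it is a minimal, generic perspective projection, and the function names, the unit focal length, and the translation-only eye pose are assumptions made purely for illustration.

```python
def world_to_camera(point, eye):
    """Express a world-space point relative to the viewer's eye.

    Assumption: the eye only translates (no head rotation), which keeps
    the sketch short; a real tracker would also apply an orientation.
    """
    return tuple(p - e for p, e in zip(point, eye))


def project_pinhole(point, focal_length=1.0):
    """Project a camera-space 3D point onto the image plane (pinhole model)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    # Similar triangles: image coordinates shrink with distance z.
    return (focal_length * x / z, focal_length * y / z)


# Hypothetical scene: one object corner, viewed from two tracked eye positions.
corner = (1.0, 2.5, 4.0)
eye = (0.0, 1.5, 0.0)
shifted_eye = (0.5, 1.5, 0.0)  # the user moves their head 0.5 units to the right

u, v = project_pinhole(world_to_camera(corner, eye))
u_shifted, v_shifted = project_pinhole(world_to_camera(corner, shifted_eye))
```

Re-projecting the scene from each tracked eye position is what produces the shift in the image as the head moves, which is the sense in which even this minimal model acts as a spatial link between the user and the depicted scene.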

McGreevy’s thoughts recognize the technological limitations of the time – i.e., the basic “pinhole camera” perspective characteristic of many early VR systems. However, his suggestion that building a “model of the user” is a valuable strategy in helping to link participants to artificial worlds echoes throughout future XR efforts that seek to improve the sense of connection, interaction, and presence within a synthetic space via the inclusion of avatars. In fact, nearly 30 years later, a study published by then-NASA Human Research Program Senior Scientist Katherine Rahill and colleague Marc Sebrechts suggested that the similarity of an avatar to the person it represents can impact performance and subjective experience in computer games [64, 65]. Moreover, the authors put forth that enabling the individual to have a role (i.e., agency) in constructing and customizing their avatar can also enhance performance and a sense of presence, noting:

1. The similarity/dissimilarity of an avatar's appearance to players' characteristics influenced performance, presence, and perceptions of performance and control.

2. Avatar construction source (i.e., player-constructed vs. externally constructed) influenced performance, presence, and perceptions of performance.

3. The sense of presence evoked by player-construction and player-similarity showed limited correlation with game performance.

Lombard and Ditton [66] described presence as being related to the “…illusion that a mediated experience is not mediated.” Drawing from then-available literature and research on computer-mediated (i.e., generated and/or delivered) experiences, their work highlighted several facets of presence and its relation to realism, including:

Presence as Realism: Defined by the degree to which accurate representations of actual objects, events and people can be produced in a mediated environment.

Presence as Transportation: The degree to which a mediated environment can transport a user to another place; objects or people from another place can be transported to a user; or, multiple users can be transported to a shared space.

Presence as Immersion: Which factors in the psychological component of feeling “absorbed, engaged, or engrossed” in a synthetic environment.

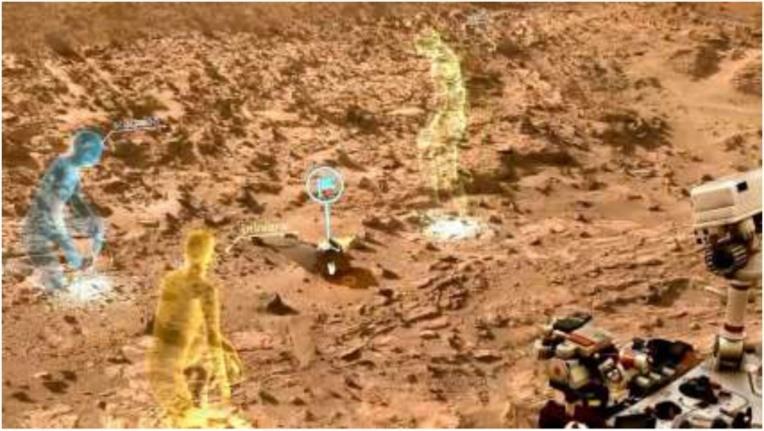

Fig – 7: VR mission planning for NASA MSL/MER. [68]

Later on, Riva and Waterworth [67] postulated “Three Layers of Presence” for a synthetic environment, wherein the sensation is maximized when all three layers are integrated around the same object or situation. Notably, this framework was used to study the experience of presence by NASA’s Mars Science Lab (MSL)/Mars Exploration Rover (MER) telerobotic mission controllers, who also used VR for mission planning (see Fig – 7) [68]. The three layers were characterized as:

Proto-Presence: Relates how perception and action are correlated when we intuitively and unconsciously interact with objects. In particular this involves low-level forms of guidance and monitoring of our body parts called “micro-movements” or “motor intentions” (M-intentions) that occur without “thinking” about them. High levels of Proto-Presence result when our unconscious movements accurately correspond to consequences. It is an evolutionary consequence of the “proto-self” needing to model the external world based on our intuitive motions. The M-intentions associated with Proto-Presence operate over a timescale of 10-300 ms.

Core-Presence: Relates to higher level actions representing conscious forms of guidance and monitoring directed at specific objects. These are “present-directed intentions” (P-intentions) that initiate based on a particular situation, or in conscious response to a specific object. They are content/contextually anchored in the present/near-term (i.e., 300 ms to 3 s) in a “do this in response to that” kind of way.

Extended-Presence: A result of successful attainment of long-term goals based on planned activities and imagined future situations. Extended-Presence can manifest as a result of exclusively mental activities – in which the participant considers their possible actions, the significance of those actions, and probable consequences. These “distal-intentions” (D-intentions) take place over “narrative” timescales – i.e., greater than 3 s. Extended-Presence can be maximized when future goals are attainable and consistent with the participant’s identity within the world or story they reside in.
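The timescales attached to the three layers can be summarized in a small lookup. The sketch below is only an illustration of the ranges quoted above (10-300 ms, 300 ms to 3 s, and greater than 3 s); the function name, the treatment of the exact boundary values, and the `None` result for durations under 10 ms are assumptions rather than part of Riva and Waterworth's framework.

```python
def presence_layer(duration_s):
    """Map the duration of an intention (in seconds) to a presence layer.

    Ranges follow the text: M-intentions 10-300 ms, P-intentions
    300 ms to 3 s, D-intentions greater than 3 s. How the boundary
    values are assigned is an assumption made for this sketch.
    """
    if duration_s < 0.010:
        return None  # below the quoted range; not covered by the framework
    if duration_s < 0.3:
        return "proto-presence"     # unconscious motor intentions
    if duration_s <= 3.0:
        return "core-presence"      # present-directed intentions
    return "extended-presence"      # distal, narrative-scale intentions


# Hypothetical examples: a reflexive grasp correction, a deliberate
# button press, and a planned multi-step task.
layers = [presence_layer(t) for t in (0.05, 1.0, 10.0)]
```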

Interestingly, Riva and Waterworth (2014) suggested that “cybersickness” might be a result of conflict between their first two layers of presence in VR:

“In an awake, healthy animal in the physical world, proto-presence and core-presence will rarely if ever be in conflict. This is an aspect of presence in the physical world that is very hard to duplicate with interactive media such as VR. In fact, in VR there is always some degree of conflict between these two layers and, when it is severe or the participant is particularly sensitive, so-called ‘cybersickness’ (essentially a form of motion sickness) is a common result.” [67]

Several years later Cipresso et al. highlighted the significance of presence as a philosophical and practical concept in virtual world creation, indicating that a user’s experienceinVRcouldbecharacterized:

“…by measuring presence, realism, and reality’s levels. Presence is a complex psychological feeling of ‘being there’ in VR that involves the sensation and perception of physical presence, as well as the possibility to interact and react as if the user was in the real world. Similarly, the realism’s level corresponds to the degree of expectation that the user has about the stimuli and experience. If the presented stimuli are similar to reality, VR user’s expectations will be congruent with reality expectation, enhancing VR experience. In the same way, higher is the degree of reality in interaction with the virtual stimuli, higher would be the level of realism of the user’s behaviors.” [36]

This synopsis reflects the focus of many other studies in the 2000s that explored the importance of presence and realism in VR [69, 70, 71, 72]. Van Gisbergen et al. [72] suggested that realism could refer to the act of reproducing something that is “known and familiar to the observer” from the “real, non-mediated world”; or something that is perceived as real in the absence of any a priori knowledge of that object. Research conducted by Van Gisbergen [71] suggests that presence and realism in VR are commonly held to be enhanced by a combination of four enabling technologies:

Sensory: Technologies used to access content.

Interaction: Technologies used to explore, navigate through, and participate in virtual environments; can range from simple keyboards to advanced headsets, goggles, hand-held controllers, and gloves.

Control: Technologies that enable users to regulate real-time interaction with the virtual environment, providing the latitude to determine where to look, what to look at, how long to look at it, etc. This includes technologies that enable manipulation and alteration of the VE, and those that allow sharing of virtual content and social interaction in the virtual world.

Location: Technologies used to automatically locate and track the user in the physical and virtual world, including GPS, inertial measurement devices, optical and wireless sensors, and tracking of eye and body movements. This incorporates technologies that are frequently implemented in portable smart devices (e.g., cell phones) and wearable devices.

These concepts of presence and realism and their impact on the user experience in VR are becoming more important to understand as industrial, commercial, and consumer technology becomes more capable of generating realistic virtual worlds. Establishing a strong sense of presence in XR environments is likely to grow in importance as technology becomes more advanced and the experiential expectations of users increase commensurately.

At present, virtual reality usually requires a relatively high degree of computer processing power and often requires a “VR-ready” gaming computer to render more sophisticated simulations and run more intensive applications [24]. While alternative implementations exist (e.g., CAVE systems), HMDs (e.g., “goggles” or a “headset”) are the most ubiquitous sensory interfaces used in consumer-level VR systems, since these devices can provide immersive experiences by placing the user in an artificial world that is as separated from the “real” environment as possible. This is reflected in the many commercial VR systems that emerged in the 2010s and 2020s produced by companies such as Oculus, Meta, HTC, Microsoft, Google, and Apple [25]. The many varieties of HMDs available can be connected to a PC, console, or smart device (wired or wireless), and can sometimes also function as standalone devices [4].

Current HMDs typically use high-resolution displays enabled by light-emitting diode (LED) or organic LED (OLED) technology [73]. Spatially tracked via external sensors, cameras, and/or inertial measurement units (IMUs), HMDs are usually paired with hand-held controllers that are similarly monitored. Advanced systems feature integrated hand tracking, body mapping, and even facial expression recognition capabilities for the rendering of avatars that represent the user in the synthetic environment. Noteworthy commercially available HMD-based VR systems in the 2024-2025 timeframe included (see Fig – 8) [23, 25, 33, 74, 75, 76, 77, 78]:

Meta Quest 2, 3, and Pro

HTC VIVE XR Elite, Flow, Pro and Focus

Sony PlayStation VR2

Apple Vision Pro

HP Reverb G2

Fig – 8: Clockwise from Top Left: Meta Quest Pro, HTC VIVE Pro, Apple Vision Pro, Meta Quest 3. [75, 76, 77, 78]

The spectrum of commercial VR gear marketed for gaming and industrial applications continues to evolve in terms of technical sophistication, applications, and integration with each manufacturer’s ecosystem of hardware and software. With advances in technology, HMDs have become smaller, lighter, and more capable over the years. Also – while full immersion is usually the goal – VR headsets are often equipped with cameras that scan their environments for tracking, safety, and “look-through” functionality to merge synthetic and real-world elements [73]. These advancements, and the proliferation of highly sophisticated mobile devices including smartphones, smartwatches, and wearable “smart glasses”, enable many commercial “VR” systems to offer additional capabilities across the XR continuum – including augmented reality (AR) and mixed reality (MR) functionality [24, 73, 76].

Augmented reality (AR), like virtual reality, is a subset of XR and the two disciplines share many technologies and dependencies. However, whereas VR attempts to construct and envelop users within a fully immersive synthetic environment, an AR system is based on the operator also maintaining a sense of presence and interoperability with the actual world. Augmented reality systems incorporate mechanisms and displays that combine real and simulated objects, allowing the user to distinguish these objects. Azuma et al. [79] indicated that an AR system should:

Combine real and virtual objects in a real environment

Register real and virtual objects with each other

Run interactively in real-time.

Essentially, with AR, physical reality is “augmented” by dynamically overlaying digital elements onto the participant’s view/perception of the real world, thereby enhancing the experience of the environment without replacing it; AR systems also routinely integrate haptic feedback [4, 21, 76].
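The registration criterion listed by Azuma et al. can be illustrated with a minimal sketch. To draw a virtual label on top of a real object, an AR system must map the tracked 3D position of that object into display pixel coordinates. The example below assumes an idealized pinhole camera with no lens distortion; the function name, focal length, and image size are hypothetical illustration values and are not taken from the cited works.

```python
from typing import Optional, Tuple


def project_to_screen(
    point_cam: Tuple[float, float, float],
    focal_px: float = 800.0,  # assumed focal length, in pixels
    width: int = 1280,        # assumed image width, in pixels
    height: int = 720,        # assumed image height, in pixels
) -> Optional[Tuple[float, float]]:
    """Project a 3D point given in camera coordinates to pixel coordinates.

    Convention assumed here: +x right, +y down, +z forward (metres).
    Returns None when the point is behind the camera or off-screen,
    in which case no overlay should be drawn.
    """
    x, y, z = point_cam
    if z <= 0:
        return None  # behind the camera
    u = focal_px * x / z + width / 2
    v = focal_px * y / z + height / 2
    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None  # outside the visible image


# A tracked real-world object 2 m ahead, 0.5 m right, 0.25 m above centre.
anchor = (0.5, -0.25, 2.0)
pixel = project_to_screen(anchor)  # (840.0, 260.0)
```

Re-running this projection every frame with updated tracking data is what keeps a virtual overlay attached to its real-world counterpart as the user moves; practical systems add lens calibration and pose estimation on top of this basic step.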

The term “augmented reality” was coined by physicist and researcher Tom Caudell in 1990 while working at Boeing, when he and co-author David Mizell described the design and implementation of “…a heads-up, see-through, head-mounted display… combined with head position sensing and a real-world registration system” for use in the aerospace industry [5, 81, 82]. Very early head-up displays (HUDs) such as those used in fighter aircraft were precursors to AR technology in that they displayed critical information within the line-of-sight of a pilot or system operator (i.e., on a transparent visor or cockpit window/screen). However, they often did not have the computing power and tracking/registration capabilities to seamlessly integrate virtual data with the user’s field-of-view (FOV) of the environment and associate information with entities within that FOV [83, 84]. The implementation envisioned by Caudell and Mizell would, for example, enable data to be superimposed directly onto a real-world object for a technician during the aircraft manufacturing process by tracking and registering what they were looking at. Since then, many contemporary HUDs in aircraft/spacecraft cockpits have been enhanced to include AR capabilities [83, 85]. Similarly, operators of remotely piloted vehicles (RPVs) and drones can use AR-capable headsets to steer their craft beyond visual range with the aid of real-time video, providing a first-person view (FPV) overlaid with flight data and navigational information [86]. This kind of capability is widely available in commercial and recreational drones and RPVs as well, allowing hobbyists to fly vehicles using AR-enhanced smartphones equipped with on-screen virtual controls and/or integrated with handheld controllers [87, 88].

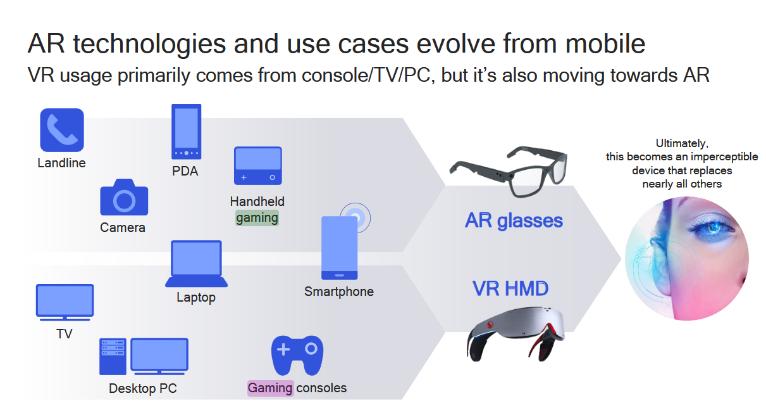

Since Caudell and Mizell conceived their industrial application for augmented reality, several developments have made AR one of the most widespread and popular versions of XR, including: advancements and miniaturization in computing and sensor technology (e.g., cameras, proximity sensors, accelerometers, gyroscopes); relatively low-cost integration of these components into personal smart devices (e.g., cellphones); and the advent of high-performance mobile/wearable displays and “smart glass” [5, 89, 90].

In fact, because so many people own smartphones, augmented reality technology has become the most accessible type of extended reality. AR apps use phone cameras to capture imagery and video of the real world – often dynamically – and overlay virtual objects onto the scene so that users can see them on the screen in a composite view [3, 4]. One of the best examples is the immensely popular AR game Pokémon GO, released in 2016 by Niantic [3, 73, 91, 92]. Pokémon GO enables players to search for, locate, and capture Pokémon (i.e., “pocket monsters”) scattered throughout the “real world” using an AR-enhanced mobile device interface and GPS locational data.

Fig – 9: Meta’s Orion AR glasses perspective. [80]

Specially designed glasses and headsets can provide even more sophisticated AR experiences by projecting digital data right in front of the user’s eyes and adding virtual objects into their perception of the real world [4]. In April 2013, Google introduced one of the first commercially available smart glasses called Google Glass, with the goal of putting smartphone functionality, including internet access, into a wearable set of slim glasses [93, 94]. Soon thereafter, in 2016, Microsoft released its HoloLens augmented reality goggles amidst much less hype than Google Glass, which had met with marginal success and had been recast as an “Enterprise Edition” targeted at the business and industrial sectors [94, 95]. Notably, the HoloLens had its technical roots in Microsoft’s Kinect device – a 2010 add-on for the Xbox gaming console that enabled tracking of players and interpretation of gestures into commands for immersive gaming experiences [95, 96]; while the Kinect was discontinued in 2017, it still finds use by developers in the Windows environment [97]. Google Glass was officially discontinued in 2023, but at the time of this writing, Microsoft’s more advanced and well-received HoloLens 2 is still being sold, offering advanced AR capabilities focused on technical and business applications [98, 99].

As cited earlier, several recent commercial XR headsets aimed at everyday users provide AR functionality for gaming and personal applications, including the Meta Quest 3 and Pro [76], Apple Vision Pro [78, ], and Snap’s AR Spectacles [100]. Additionally, in 2024 Meta revealed the prototype for its Orion AR Glasses – a much more capable evolution of the Ray-Ban Meta Smart Glasses marketed the year before (see Fig – 9) [101, 102, 103, 104]. Meta [104] characterized the Orion as the “most advanced pair of AR glasses ever made”, and a “feat of miniaturization” that “… bridges the physical and virtual worlds, putting people at the center so they can be more present, connected and empowered in the world.” Notable features, which highlight many desired characteristics of consumer AR devices, include [76, 102, 104, 105]:

Lightweight, “stylish”, and designed for indoor and outdoor use.

Multi-user video, audio, and text communication.

Collaborative, dynamic interaction with 2D and 3D digital content blended into the physical world.

Shared digital experiences with multiple users, who can be local or located around the world.

Micro LED projectors, enabling a holographic, high-clarity wide field-of-view (WFOV) display (70 deg), with transparent lenses that enable users to see each other’s faces.

Wireless multitasking (i.e., multiple simultaneous applications), enhanced by a high-performance pocket-sized “compute puck”.

Advanced input control – including voice, eye tracking, hand tracking, and a new electromyography (EMG) wristband that enables subtle input in low-light, free of external sensors.

Haptic feedback.

Seamless, “multimodal”, contextual AI (i.e., Meta AI) that enables sensing, interpretation, and understanding of the world surrounding the user.

While the initial version of Orion was not intended for retail release, it was described by Meta as a “Purposeful Product Prototype” and was provided to a limited number of Meta employees in 2024 for testing and evaluation [76, 104]. This would allow their development team to “learn, iterate and build” towards the consumer AR glasses product line expected 2-3 years later – focusing on tasks like fine-tuning the AR display quality, optimization of components to further reduce the form factor, and scaled manufacturing to make the glasses more affordable [102, 104]. Orion was hailed as possibly “game-changing” and “pretty far-out” by noted critics and reviewers specializing in XR technology, making the release of the consumer versions eagerly awaited [102, 105].

In perhaps the most advanced attempt at streamlining AR/MR technology to date, companies such as Sony and Mojo Vision have planned and prototyped “smart contact lenses” intended to enable users to experience XR environments and seamlessly record images and videos of the real world [106, 107, 108, 109]. As the historical trend for XR technology indicates, these lenses arguably represent the logical next step in the evolution of canonical HMDs beyond smart glasses – largely driven by miniaturization, advances in communications and display technology, human factors, and widespread consumer use cases (e.g., gaming and work/personal productivity) (see Fig – 10) [110, 111]. Consequently, contact lens-like overlays may become the leading XR interface of the future – at least until Gibson-inspired neural systems and/or Star Trek-like Holodecks emerge.

Augmented reality (AR) and mixed reality (MR) are so closely related that they can be very hard to distinguish, and the terms are often used interchangeably [3]. Both of these subsets of XR blend digital and real-world elements. However, a main distinction could be that while AR overlays digital data onto a real-world perspective – and can register those overlays with physical objects – it does not necessarily require interaction with those physical objects. As stated by Meta:

“AR overlays don’t interact with the real world, though you may be able to interact with them depending on the software in use. MR is another matter. It overlays the real world with digital elements and the physical and digital objects co-exist and react to each other in real-time.” [112]

Both AR and MR are enabled by VR technologies, and this shared dependency can help illuminate the subtle distinction. According to Greenwald:

“…mixed reality is when primarily VR headsets incorporate AR aspects into their use. All major VR headsets currently available have outward-facing cameras that can scan your surroundings and provide a view of what's around you. Virtual reality becomes mixed reality when those surroundings factor into what you're doing in the headset. It can be as simple as setting borders around you, so you don't trip over anything in the real world, or as complicated as taking detailed measurements of furniture and building the virtual environment to reflect those physical objects.” [73]

In MR, digital and real-world objects by definition interact with each other, leading to a kind of “hybrid reality” that usually requires more processing power than AR or VR [90]. Moreover, MR can facilitate face-to-face communication and collaboration between people in virtual spaces [4]. To support anticipated demand for MR applications, Microsoft announced support for commercial headsets based on its “Windows Mixed Reality” software platform – an extension to the Windows 10 operating system – in 2016 [113, 114]. Numerous headsets based on Windows Mixed Reality were produced, including those by manufacturers such as HP, Dell, Lenovo, Samsung and Acer [114, 115, 116].

However, possibly due to poor marketing and controller design issues, in 2023 Microsoft decided to discontinue support for Windows Mixed Reality headsets beginning in 2027 [113, 117]. Nevertheless, it vowed to maintain production and support of the HoloLens 2, which is the basis for the U.S. military’s Integrated Visual Augmentation System (IVAS), a system that provides enhanced situational awareness to soldiers on the battlefield [118, 119]. Further, Microsoft has recently partnered with Meta to produce limited-run Quest VR/MR headsets for use with the Xbox, bring Microsoft applications to Meta Quest devices, deploy Microsoft Teams communication capability to Quest headsets, and integrate Xbox Cloud Gaming with the Meta Quest XR application store [120, 121, 122]. Many of these integrations will presumably extend to the forthcoming retail version of Meta’s Orion AR glasses as well [104].

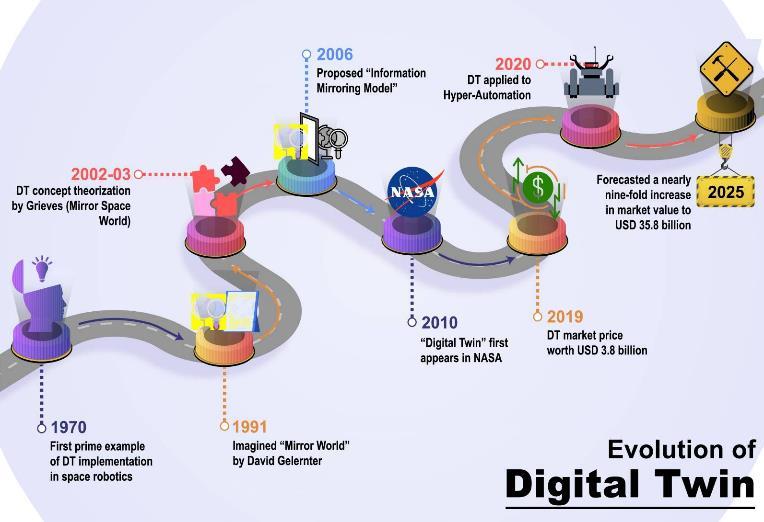

In reflecting on how VR and AR/MR can facilitate modeling of the physical world and enhance human interaction with it, it is important to mention a recently re-emerging concept known as the digital twin (DT) [123, 124]. According to Purdy et al. [125], a digital twin is “an item, person, or system that can be updated continuously with data from its physical counterpart.” The term was initially coined by Jonathan Vickers and Michael Grieves while they were working at NASA in 2002 (see Fig – 11) [126]. However, seminal concepts related to the notion of the digital twin were first put forth by computer scientist David Gelernter in his 1991 book Mirror Worlds: Or: The Day Software Puts the Universe in a Shoebox... How It Will Happen and What It Will Mean [127, 128]. In his text, Gelernter (1991) stated that “Mirror Worlds”:

“…are software models of some chunk of reality, some piece of the real world going on outside your window. Oceans of information pour endlessly into the model (through a vast maze of software pipes and hoses): so much information that the model can mimic the reality's every move, moment-by-moment…” [127]

It is not difficult to see how these ideas may have influenced Grieves, who had been studying replicative simulation methods at NASA as ways to improve product lifecycle management (PLM) for manufacturing. At first referring to the concepts as “mirrored spaces model,” “information mirroring models” and “virtual doppelgangers”, Grieves ultimately adopted the “digital twin” moniker while working with Vickers, who was trying to capture and apply similar concepts (see Fig – 12) [129, 130, 131, 132]. In 2023, Vickers was still serving as a principal technologist at NASA and Grieves was the chief scientist for NASA’s Digital Twin Institute [131, 132].

the digital twin concept. [126]

Going further back, the first methods resembling digital twin techniques were referred to as “living models” by NASA in the 1960s and applied to the historic Apollo 13 mission to train astronauts and mission controllers via then-high-fidelity simulators [133]. This approach contributed to the successful return of the crew to Earth following the explosion of an oxygen tank on their spacecraft while en route to the Moon in 1970 [126, 133].

Interest in digital twin technology continued at the agency, as exemplified in a study by Glaessgen et al., who promoted the application of the techniques toward the design and development of future NASA and U.S. Air Force aerospace vehicles. They described the digital twin as:

“…an integrated multiphysics, multiscale, probabilistic simulation of an as-built vehicle or system that uses the best available physical models, sensor updates, fleet history, etc., to mirror the life of its corresponding flying twin. The Digital Twin is ultra-realistic and may consider one or more important and interdependent vehicle systems, including airframe, propulsion and energy storage, life support, avionics, thermal protection, etc.” [134]

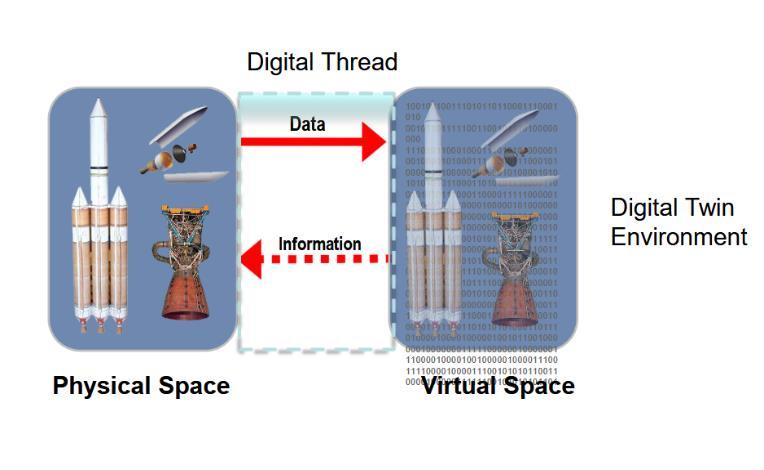

Since then, several researchers have indicated that in order for a model to be accurately described as a digital twin, it should be characterized by bi-directional rather than one-way information flow between the physical system and the simulation/replica; otherwise, the “clone” would just be considered a digital shadow (DS) [126, 135]. As suggested by Bergs et al.:

“…the Digital Shadow can be seen as a preliminary stage of a Digital Twin. The DS sets the basis for the DT contributing measurement data or meta-data attributed to a specific object with spatial or temporal reference, but does not yet describe the physical object itself with its properties… On the other hand, an asset's DT is a virtual representation of its physical state. State changes are described by models and supported by data.” [136]

In support of this interpretation, NASA Senior Technologist for Intelligent Flight Systems B. Dannette Allen provided several additional definitions of the DT while discussing the history and application of these methods at the 2021 Digital Twin Summit (held virtually). Notably, as attributed to IBM [137]:

“A Digital Twin is a virtual representation of an object or system that spans its lifecycle, is updated from real-time data, and uses simulation, machine learning, and reasoning to help decision-making.” [133]
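The distinction between a digital shadow (one-way data flow) and a digital twin (bi-directional flow) can be made concrete with a small sketch. The example below is only an illustration under stated assumptions: the pump asset, its temperature limit, and all class and attribute names are hypothetical and do not come from the cited definitions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PhysicalPump:
    """Hypothetical physical asset with one sensor value and one setpoint."""
    temperature_c: float = 60.0
    speed_rpm: float = 3000.0


@dataclass
class DigitalShadow:
    """One-way flow (physical -> digital): records data, never commands the asset."""
    history: List[float] = field(default_factory=list)

    def ingest(self, asset: PhysicalPump) -> None:
        self.history.append(asset.temperature_c)


@dataclass
class DigitalTwin(DigitalShadow):
    """Bi-directional flow: also feeds decisions back to the physical asset."""
    max_temperature_c: float = 80.0  # assumed safety limit

    def sync(self, asset: PhysicalPump) -> None:
        self.ingest(asset)  # physical -> digital
        if asset.temperature_c > self.max_temperature_c:
            asset.speed_rpm *= 0.5  # digital -> physical


pump = PhysicalPump(temperature_c=90.0)

shadow = DigitalShadow()
shadow.ingest(pump)      # the shadow observes; the pump is left unchanged
speed_after_shadow = pump.speed_rpm

twin = DigitalTwin()
twin.sync(pump)          # the twin observes and then slows the overheating pump
speed_after_twin = pump.speed_rpm
```

In this sketch the shadow and the twin ingest identical data; the only difference is the return path from model to asset, which is the property several of the cited authors treat as the defining feature of a digital twin.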

While the digital twin is a distinct concept, there is considerable discussion in industry about how the convergence of DT- and XR-related technologies will be a powerful combination [123, 124, 138]. Basing VR and AR/MR environments on dynamically updating streams of real-world information, for example, can enable researchers to immerse themselves in evolving simulations based on current data. As the disciplines evolve and continue to overlap, it is likely that DT techniques will have an impact on the design and implementation of immersive synthetic worlds for academic, industrial, and commercial purposes, and also be used to enrich implementations of remote user experiences via telepresence.

The term telepresence was coined in 1980 by Marvin Minsky, a noted American scientist in the field of artificial intelligence (AI). Minsky used the term as a reference to teleoperation systems and applications for remote object manipulation, augmented by enhanced sensory feedback for the user that could assist them in completing complex physical tasks at a distance [139, 140, 141]. Generally speaking, the central goal of telepresence is to enhance a human user’s sense of “being” when introduced into a synthetic/replicated environment or involved in remote controlled, computer-mediated operations. As such, telepresence can be considered a subset of extended reality, as it is enabled and facilitated by XR technologies. However, telepresence is frequently referenced with more experiential, phenomenological, or philosophical terms – particularly when more immersive forms are pursued. Very often, telepresence is connected to the discipline of telerobotics and implemented to enhance human teleoperation of remote systems.

As the term implies, telepresence is the conceptual and literal extension of the sensation of presence, as discussed earlier in relation to VR. Drawing from Minsky’s original description, Sheridan [62] explained telepresence as “…feeling like you are actually ‘there’ at the remote site of operation” and a “…sense of being physically present with virtual objects at a remote teleoperator site.” Basically, both VR and telepresence seek to create a sense of “being in another world.” However, whereas a basic requirement for pure VR is that the user is placed into a synthetic environment, telepresence usually exposes the user to a rendition of an actual distant or otherwise inaccessible environment and enables interaction with physical objects within that remote space. To facilitate the illusion of presence, it is typically useful to replicate the distant environment for the user “locally” with XR technology. In this sense, telepresence is probably more closely related to MR, and specifically “MR at a distance”.

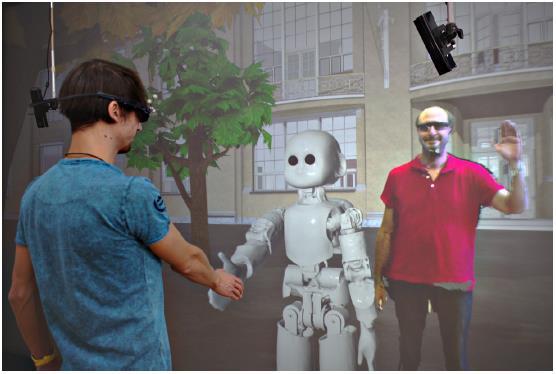

Fig – 13: Developmental telepresence demonstrated by Matthes et al. using VR Neurorobotics Labs. [144]

Contemporary telepresence implementations run the gamut from consumer-level high-definition (HD) interactive video teleconferencing equipment to experimental, fully immersive 3D systems. Some advanced telepresence systems can surround multiple, geographically separated people with a highly realistic virtual rendition of a shared environment populated with actual physical objects, permitting the users to experience, move, and interact as if they were in proximity to each other; this includes the provision of a “virtual body” (i.e., avatar) within the mediated environment (see Fig – 13) [139, 142, 143, 144, 145].

Telepresence interfaces are frequently pursued for systems that benefit from enhanced degrees of realism, immersion, and/or responsiveness, such as high-fidelity remote surgical applications and operation of robots for remote exploration [145, 146, 147]. Haptic interface devices are often integrated into such telepresence systems, employing force-feedback and sensations that provide tactile stimulation to replicate the manipulation of real objects. Significantly, given that telepresence is a computer- and technology-mediated experience, a user’s capabilities need not be constrained by human limitations.

A telepresence-enabled system can be designed to replicate what a human would naturally experience in a remote environment or expand on that experience with additional capabilities such as advanced/remote sensing, enhanced mobility, and mechanically aided means to interact with remote physical objects. Exploring this idea leads us into the connected discipline of telerobotics.

Largely as a result of science fiction literature and cinema, the term “robot” typically conjures images of anthropomorphic, artificial, autonomous beings that can think for themselves, interact with humans, and affect their environments. Film-inspired examples span the spectrum from “Robby the Robot” in the 1956 film Forbidden Planet [148, 149] and android/droid “C-3PO” of the Star Wars franchise [150], to actor Sean Young’s portrayal of “Rachael” – the alluring and lifelike “replicant” in director Ridley Scott’s 1982 cinematic masterpiece, Blade Runner (see Fig – 14) [151, 152, 153]. Widespread use of robotic technology in industry, manufacturing, and domestic consumer products invokes more prosaic visions of mechanical automata as workforce helpers that perform repetitive or labor-intensive tasks, such as vacuuming carpets or building automobiles.

Fig – 14: Robots, androids, and “replicants” from sci-fi; Robby the Robot, C-3PO, and Rachael. [139, 141, 144]

© 2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008

Thomas Sheridan [154] defined a robot as a “reprogrammable, multifunctional manipulator designed to move material parts, tools, or specialized devices through variable programmed motions for the performance of a variety of tasks”. This might seem straightforward, but a precise academic definition of a robot can be challenging to attain, with degrees of independence, environmental interaction, and sophistication of behavior usually at the heart of the question. Nevertheless, a survey of opinions held by leading roboticists yields some commonly held characteristics of a robot, including [140, 154, 155, 156, 157, 158]:

Autonomy: Robots generally have some level of independence, with a fully autonomous robot requiring no human input or external control to accomplish intended or pre-programmed tasks. For very sophisticated tasks, full autonomy likely entails that the machine incorporates a degree of artificial intelligence. Some robots can move and act completely on their own; others require some form of remote control or human supervision – as can be the case with telerobotics, discussed below.

Sensing: Robots are typically equipped with hardware and/or software sensors that take measurements, respond to events, and send signals to computer controllers and/or motors and actuators to understand and effect changes in their environment.

Computing: Robots are controlled by computers or carry out computations to perform decision-making, often employing repeated sensing-computing-acting cycles called “feedback loops”. The information processing algorithms and feedback/control methods used by some robotic computers can be sophisticated enough to manifest human-like behavior and artificial intelligence.

Actuating/Manipulating: Robots usually perform actions in the real world, or actions that have effects and consequences on real-world systems (i.e., computer systems and networks). Some robots are physically mobile, and some can handle objects using actuators – either on their own initiative or under some degree of human control.
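The sensing-computing-acting cycle described in the characteristics above can be sketched as a simple feedback loop. The example below uses proportional control on a one-dimensional position as a stand-in; the function name, gain, and ideal noise-free sensor are assumptions made for illustration, and real robots rely on far richer sensing and control methods.

```python
def run_feedback_loop(
    position: float,
    target: float,
    gain: float = 0.5,  # assumed proportional gain (0 < gain < 1)
    steps: int = 20,    # number of sense-compute-act cycles to run
) -> float:
    """Drive a 1-D position toward a target with repeated feedback cycles."""
    for _ in range(steps):
        measured = position        # sense (ideal, noise-free sensor assumed)
        error = target - measured  # compute: how far from the goal?
        command = gain * error     # compute: proportional correction
        position += command        # act: the actuator applies the correction
    return position


final_position = run_feedback_loop(position=0.0, target=10.0)
```

With a gain of 0.5 each cycle halves the remaining error, so the position converges toward the target without the loop needing any model of the environment beyond the latest measurement.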

Furthermore, in the definition for robot contained in its Standard Ontologies for Robotics and Automation [159], the Institute of Electrical and Electronics Engineers (IEEE) acknowledges the idea that robots can work with pure “software agents” (i.e., “bots”), and can act collaboratively in concert with other robots and people toward common objectives:

“Robot: An agentive device in a broad sense, purposed to act in the physical world in order to accomplish one or more tasks. In some cases, the actions of a robot might be subordinated to actions of other agents, such as software agents (bots) or humans. A robot is composed of suitable mechanical and electronic parts. Robots might form social groups, where they interact to achieve a common goal. A robot (or a group of robots) can form robotic systems together with special environments geared to facilitate their work. See also: automated robot; fully autonomous robot; remote-controlled robot; robot group; robotic system; semi-autonomous robot; teleoperated robot.” [159]

Overall, robots as machines composed of software and hardware can range from very simple to extremely complex mechanisms designed to perform single tasks or fill multiple, versatile roles. In the case of telerobotics, humans can leverage the advantages robots provide by remotely operating them to conduct operations in distant or dangerous environments [160, 161]. Telerobotic systems can assist human operators by handling complex processes required to achieve results, freeing the controller to focus on aspects of tasks that require more skill, innovation, or attention. As succinctly stated by renowned roboticist and VR/XR expert Dr. Goldberg:

“…a telerobot is a robot that accepts instructions from a distance, generally from a trained human operator. The human operator thus performs live actions in a distant environment and through sensors can gauge the consequences.” [140]

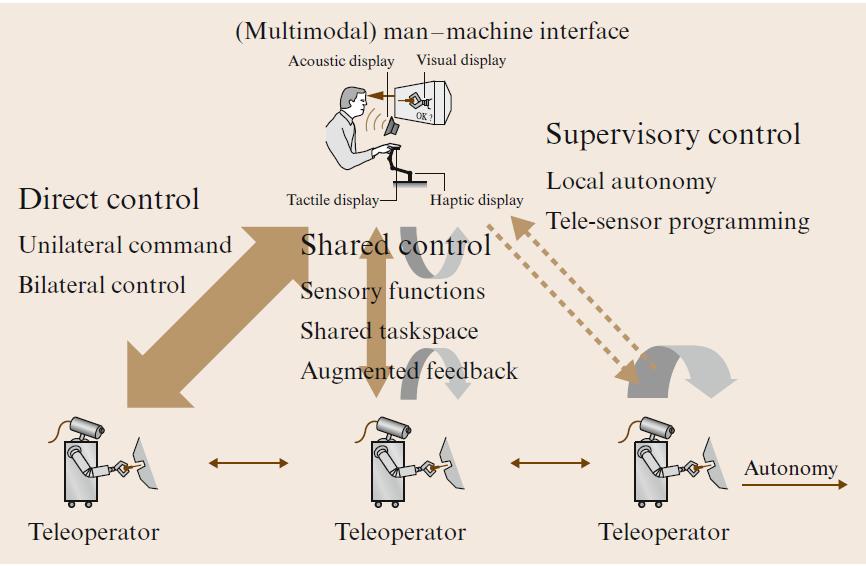

Sheridan [154] indicated that there are three classic control modes for telerobotic operations:

1. Continuous manual control under the direction of a (human) operator.

2. Intermittent manual control.

3. Fully autonomous operation, for extended periods of time or continuously.

Fig – 15: Niemeyer et al. illustration of common telerobotic control models. [162]

Consistent with those concepts, system designers and engineers have devised various levels of autonomy and control schemes for telerobotic systems to address different scenarios and tasks (see Fig – 15). With partial autonomy or supervisory control, for example, an operator is responsible for defining the overall higher-level goals for the telerobot based on its current situation [160, 162]. The controller will assess the situation, set objectives, transmit commands to the telerobot, and assess the outcome; prior to doing this, the operator may program and simulate the command session in a synthetic environment. Although this type of machine can handle some complex processes autonomously, it is still designed to perform its functions under the overall control of a person.

Supervisory control can be carried out in near real-time. However, it is particularly useful in situations when delay – or latency – is appreciable, but not substantial enough to preclude the operator from monitoring results and responding to situations to effect changes within a timeframe that does not necessarily jeopardize mission accomplishment. This gives the operator more creative control over the situation. A computer in a telerobotic arm on an interplanetary probe, for example, can independently “close” the required control loops in response to tasks generated by the human controller. But the arm is still ultimately being steered toward a target by an operator, who is being fed information from the telerobot’s sensors.
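A brief sketch can illustrate why supervisory control suits high-latency links. The operator sends only a high-level goal, the telerobot closes the control loop locally, and the minimum delay before the operator can confirm the outcome is bounded by signal travel time. The class and function names, the proportional controller, and the use of the average Earth-Moon distance (about 384,400 km) are assumptions chosen for illustration; they are not drawn from the cited works.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s


def round_trip_delay_s(distance_km: float) -> float:
    """Minimum command-and-confirm delay from signal travel time alone."""
    return 2.0 * distance_km / SPEED_OF_LIGHT_KM_S


class Telerobot:
    """Hypothetical remote arm with a single joint that closes its own loop."""

    def __init__(self, joint_angle_deg: float = 0.0) -> None:
        self.joint_angle_deg = joint_angle_deg

    def execute_goal(
        self,
        goal_deg: float,
        tolerance_deg: float = 0.1,  # assumed acceptable final error
        gain: float = 0.5,           # assumed proportional gain
        max_steps: int = 100,
    ) -> int:
        """Move toward the goal using local feedback; return cycles used."""
        steps = 0
        while abs(goal_deg - self.joint_angle_deg) > tolerance_deg and steps < max_steps:
            self.joint_angle_deg += gain * (goal_deg - self.joint_angle_deg)
            steps += 1
        return steps


# Operator side: one high-level command, then wait for the confirmation.
arm = Telerobot()
cycles_used = arm.execute_goal(goal_deg=45.0)  # many local cycles, no operator input
delay_s = round_trip_delay_s(384_400.0)        # roughly 2.6 s for an Earth-Moon link
```

Under direct manual control, every one of those local correction cycles would instead have to cross the link and incur the full round-trip delay, which is why delegating loop closure to the remote computer becomes attractive as distance grows.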

With shared control, there is a higher degree of autonomy present in the robot to dynamically assist the operator in completing tasks [160, 162]. The user can also rely on these higher levels of autonomy to execute something called traded control, which allows them to monitor or oversee a robot’s independent actions for short periods of time and still take over completely when required. This leads to the notion of direct or manual control, wherein the operator has full control over the system all the time. The human user can control the telerobot in real-time/near real-time (depending on the latency) or opt to do so only when the machine faces tasks or obstacles it does not “know” how to deal with. In such “master-slave” systems, an operator will typically use some form of controller to physically direct the telerobot’s actions (e.g., joystick, mouse, keyboard, game controller) [160, 162]. Haptic elements can be integrated into telerobotic human-machine interfaces (HMI) to enable bilateral control in shared or direct control systems; this “bidirectional control” uses force feedback and tactile interfaces to facilitate more intuitive operation of the telerobot [160, 162].

While telerobotics is not necessarily a subset of the extended reality domain, it is still very closely related to it. Dr. Goldberg indicated that:

“Virtual reality presents a simulacrum, a synthetic construction. With telerobotics, what is being experienced is distal rather than simulacral. While VR admits to its illusory nature, telerobotics claims to correspond to a remote physical reality.” [163]

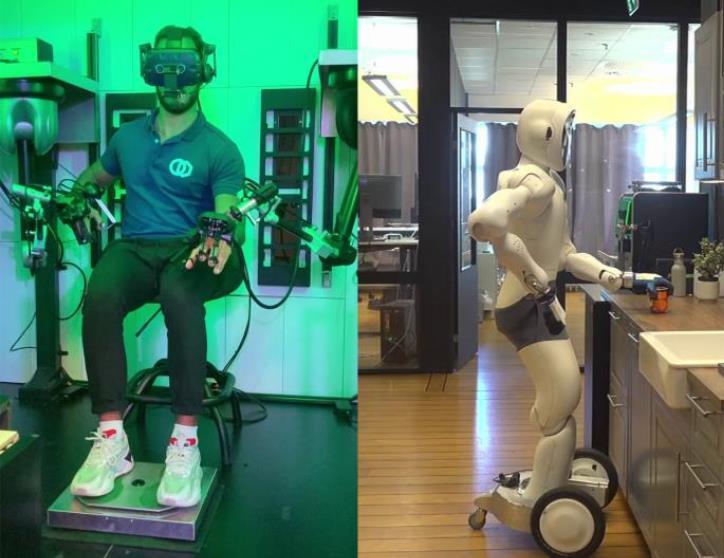

Consequently, designers of telerobotic systems often leverage a spectrum of XR concepts and technologies to achieve more believable local recreations of inaccessible (or dangerous) environments, emulate a human’s persona in a distant location, and/or provide a user with more complete information or “human” sensation during machine-enabled remote operations [160, 162, 164, 165]. Virtual reality can be used to simulate environments in which telerobotic operations are performed, and AR/MR interfaces can be used to enhance and inform an operator via overlaid/interactive interfaces that blend data and actual perspectives of the “real world”. Fully immersive telepresence-enabled telerobotic systems require HMIs advanced enough to completely replicate all of the sensations and qualities that could be (safely) experienced by the operator – including sight, sound, taste, smell, and haptic feedback (touch, pressure, force) [56, 166, 167, 168]. Such systems also require intuitive controls capable of translating typical human physical actions to the telerobot; and, in cases where there could be interaction with humans or other telerobots at the distant end, sophisticated sensors might be needed to accurately translate and relay human social cues/behavior [160, 162, 169].

Fig – 16: J. Van Erp et al. demonstrate immersive telepresence telerobotics. [169]

Immersive telepresence is often discussed as the ultimate control system for telerobotics (see Fig – 16) [169, 170, 171, 172, 173]. In their chapter on “Telerobotics” in the Springer Handbook of Robotics, Niemeyer et al. highlight this by saying that telepresence:

“…promises to the user not only the ability to manipulate the remote environment, but also to perceive the environment as if encountered directly. The human operator is provided with enough feedback and sensations to feel present in the remote site. This combines the haptic modality with other modalities serving the human senses of vision, hearing or even smell and taste. The master–slave system becomes the medium through which the user interacts with the remote environment and ideally they are fooled into forgetting about the medium itself. If this is achieved, we say that the master–slave system is transparent.” [162]

Therefore, removing the “illusory nature” of XR to the greatest extent feasible, and providing the most natural, intuitive human interaction with remote environments (and other entities) are goals of many telepresence and telerobotic systems. In pursuit of these objectives, researchers and developers often explore the concepts of transparency and embodiment, which are closely related to the previously mentioned XR principles of presence and realism.

The term “transparent telepresence” was coined by designer and engineer Gordon Mair in the late 1990s while he was on staff as a senior lecturer and research advisor at the University of Strathclyde in Glasgow, Scotland. Mair defined transparent telepresence as “the experience of being present interactively at a live real-world location remote from one’s own immediate physical location” [174], and established the Transparent Telepresence Research Group (TTRG) at the University with the mission:

“To create, through research and development, the first telepresence system that allows a user to experience the sensation of being fully present at a remote site. This implies that the interface between the user and the surrogate presence will be completely transparent.” [175]

The TTRG was organized under Strathclyde’s Design Manufacturing & Engineering Management department, and – as related by Mair [174] – was composed of full-time researchers and graduate students; TTRG staff also oversaw projects for undergraduate and postgraduate students. Government and industry provided funding totaling more than £2M, and projects were worked jointly with the Departments of Computer Science, Architecture, Civil Engineering, Psychology, and Mechanical Engineering.

Mair and the TTRG [175] ardently promoted and investigated the characteristic of transparency in telepresence, “…in which the technological mediation is transparent to the user of a telepresence system.” According to their paradigm, an awareness of the HMI diminishes the sensation of presence, and full transparency would be achieved when the user was not aware they were being connected to a remote environment with intervening hardware or software. The group proposed that complete “presence” in the mediated world would be possible if the proper technology could be developed to make the interface as imperceptible as possible, allowing for complete immersion [175].

In the course of their research, experimentation, and prototyping, the TTRG explored and identified key system elements and enabling technologies required to support transparency in telepresence. These included [174, 175, 176]:

Advanced “Remote Site” visual, aural, haptic, olfactory, and vestibular sensors, possibly based on anthropomorphic platforms (i.e., sensor platforms with human characteristics).

Ergonomic “Home Site” equipment, interfaces, and controls for the user/operator. Minimally, Home Site equipment should feature stereoscopic visual displays (i.e., HMDs, panoramic screens); high-fidelity, spatially immersive audio displays (i.e., speakers and headphones); olfactory displays for smell replication; haptic displays that provide touch and kinesthetic feedback; and vestibular feedback mechanisms to feed the body’s proprioceptive system and impart a sense of motion.

Image compression, decompression, and stereo co-encoding algorithms that can provide high resolution and fast frame rates.

Optimized communications bandwidth and protocols to achieve predictable throughput and latencies.
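The interplay between the last two elements (compression and communications bandwidth) can be illustrated with a rough back-of-the-envelope calculation. The following Python sketch is illustrative only and is not drawn from the TTRG's work: the function names, the default 24 bits per pixel, the compression ratio, and the 70% link headroom are all assumptions chosen for the example.

```python
def stereo_stream_mbps(width, height, fps, bits_per_pixel=24,
                       compression_ratio=50.0, eyes=2):
    """Estimate the bitrate (in Mbit/s) of a compressed stereoscopic video stream.

    All defaults are hypothetical illustration values, not measured figures.
    """
    # Raw (uncompressed) bits per second across both eye views.
    raw_bits_per_second = width * height * bits_per_pixel * fps * eyes
    # Apply an assumed average compression ratio, then convert to Mbit/s.
    return raw_bits_per_second / compression_ratio / 1e6


def fits_link(required_mbps, link_mbps, headroom=0.7):
    """Return True if the stream fits within an assumed usable fraction of the link."""
    return required_mbps <= link_mbps * headroom


if __name__ == "__main__":
    # Hypothetical case: two 1920x1080 views at 60 frames per second, 100:1 compression.
    needed = stereo_stream_mbps(1920, 1080, 60, compression_ratio=100.0)
    print(f"Estimated stream bitrate: {needed:.2f} Mbit/s")
    print("Fits a 100 Mbit/s link:", fits_link(needed, 100.0))
    print("Fits a 50 Mbit/s link:", fits_link(needed, 50.0))
```

Under these assumed values, higher resolution or frame rate raises the required bitrate linearly, which is why compression efficiency and predictable link throughput appear together in the list above.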

The TTRG garnered a decent amount of niche attention, with features in numerous newspaper articles and television appearances including an appearance on the popular British science show Tomorrow's World, which focuses on developments and future trends in technology [174, 177]. In 2001, Mair established a spin-out company to take advantage of some of the technology the TTRG had created for low bandwidth telepresence and teleoperation over mobile phone networks [174]. Although he dissolved the company in 2006 for “a variety of reasons” and retired from Strathclyde in 2015, Mair continued to work and author books in the engineering and manufacturing industries. Notably, Mair declared his firm belief that science fiction informed many of the developments in telepresence and presaged its future – citing works such as Neuromancer [28] and Snow Crash [29] as critical inspirations for the drive toward immersive synthetic environments and full transparency [178]. Along these extrapolated lines of fiction-inspired development, Mair and the TTRG mentioned direct brain interface and control as an example of a method that might eventually achieve full transparency [176].

The notion of transparency in telepresence, particularly when considered within the realm of telerobotics, led to the conceptualization of embodiment. Embodiment refers to theoretical and practical ways of understanding and mimicking the sensation of using one’s own “real-world” body when associated with the representation of an avatar in a synthetic domain, or projection of a person into a remote physical environment [179, 172]. In short, as stated by J. Van Erp et al. [173], “…embodiment may be considered as ultimate transparency” in a mediated experience.

According to a theoretical construct offered by A. Haans and W. IJsselsteijn [180], embodiment depends on replicating a user’s:

Morphology: The physical characteristics of the body.

Body Schema: The “dynamic distributed network of procedures” that “combines the individual parts of our morphology into a coherent functional unity” and guides interaction with the environment.

Body Image: “Consciousness” that the body and any synthetic extensions of it (e.g., virtual or robotic) are actually owned by an individual.

Similarly, A. Toet et al. [172] suggested that achieving embodiment goes beyond the “technical” solutions that foster transparency in a mediated experience and involves “…recruiting brain mechanisms of telepresence (i.e., the perceived relation between oneself and the environment) and body ownership”. They posited three key components of sensory experience required to achieve embodiment as:

Sense of Self-location (“Self-Location”): The feeling of location in space; facilitating a sensation of collocation between a virtual and real body.

Sense of Ownership (“Ownership”): The feeling that non-bodily objects are part of one’s own body; fostering the “realism” of a virtual body via temporal correlation of visual and tactile stimulation.

Sense of Agency (“Agency”): The feeling of being the “author” of an observed action; promoted by efficient and effective motor control.

Fig – 17: J. Van Bruggen et al. demonstrating the I-Botics Avatar System, ANA Avatar XPRIZE entry. [169]

These ideas were further developed and expanded upon by Prof. Jan Van Erp and fellow researchers at the University of Twente, The Netherlands, who highlighted the importance of multisensory cues in attaining the “illusion” of embodiment, including haptics, touch, temperature, smell, and airflow [169].

Achieving full transparency and embodiment in telepresence and telerobotics is not necessarily solely dependent on replication of a user’s physical body and capabilities in/through a mediated environment. J. Van Erp et al. [169] also suggested the importance of transporting/replicating both the functional self and the social self through the mediated environment. The functional self is related to “…spatial presence, task performance, and interacting” with objects in a virtual or remote environment; the social self pertains to attaining social presence via the ability to interact with and “feel connected to” people in remote environments. According to the researchers, embodiment depends on “…synchronicity in multisensory information, a first-person visual perspective, human-like visual appearance and anatomy of the telepresence robot” and “…the important bidirectional social cues it needs, including eye contact, facial expression, posture, gestures, and social touch.” They summarized additional sensory and social cues required to evoke embodiment and social presence in telepresence/teleoperation as follows [169]:

Sensory Cues (Embodiment): Realistic point of view; posture; visual-motor-proprioception synchrony; connection between body parts (i.e., anatomically correct); visual-tactile synchrony; visual appearance.

Social Cues (Social Presence): Realistic eye contact; facial expression; non-verbal sounds; eye gaze and blinks; gestures, movement, orientation, and posture; touch; proximity and personal space.

J. Van Erp and his colleagues incorporated these ideas into the design and development of a fully integrated telerobotic avatar teleoperation system, convinced that “…the feeling that you are the telepresence robot implies that there is no longer a mediating device”; and that “…ultimate transparency may lead to lower cognitive workload and faster learning” [169]. The system featured a universal control pod that could be utilized by an operator to control multiple types of telerobots, including a humanoid version called “Eve”, and a more functionally oriented four-legged animal-like system called “ANYmal C” [169, 173, 181]. Of note, this system – dubbed the I-Botics Avatar System – placed 5th in the international ANA Avatar XPRIZE competition, which awarded $5M to the team that could “create a robotic avatar system that could transport human presence to a remote location in real time” (see Fig – 17) [181, 182]. NimbRo, an immersive and intuitive robotic telepresence system sponsored by the University of Bonn that incorporated many similar concepts and architectural considerations, claimed first prize [183, 184, 185].

With roots in science fiction, applications in industry and exploration, and strong connections to pop culture and the modern consumer electronics/entertainment market, extended reality (XR) concepts and technology have permeated society for many years. In this article we have reviewed and cross-referenced sources that provide foundational information relating to the historical, conceptual, and technical development of XR and associated subdisciplines, including virtual reality (VR), augmented reality (AR), mixed reality (MR), digital twins, telepresence, and telerobotics. These inter-related domains often use similar technology to facilitate human-machine and human-human interaction in synthetic or replicated environments – often remotely or at a distance. And, in many ways, they have evolved from one another almost organically, responding quite rapidly to advancements in enabling technologies and emerging use cases. However, perhaps the most fascinating consequence of the pursuit of XR in its various forms is how it has iteratively challenged us to consider how and why we experience the world around us – real or rendered. We hope this review stimulates interest and serves as a starting point for continued investigations by XR researchers and enthusiasts alike.

The authors would like to thank their families and Capitol Technology University for supporting this research.

[1] M. Colagrossi, “The history of VR: How virtual reality sprang forth from science fiction,” Big Think, Apr. 19, 2022. Available: https://bigthink.com/technology-innovation/thehistory-of-vr-how-virtual-reality-sprang-forthfrom-science-fiction/ (Accessed Jan. 10, 2024).

[2] Lumen and Forge, “The history of virtual reality,” Lumen and Forge, Nov. 02, 2023. Available: https://lumenandforge.com/the-history-of-virtualreality/ (Accessed Jan. 10, 2024).

[3] T. Gerencer, “What Is Extended Reality (XR) and How Is it Changing the Future?,” HP® Tech Takes, Apr. 20, 2021. Available: https://www.hp.com/usen/shop/tech-takes/what-is-xr-changing-world (Accessed Jan. 10, 2024).

[4] M. O’Donnell, “Extended Reality: An overview of augmented, virtual, & mixed reality,” Launch, 2023. Available: https://www.launchteaminc.com/blog/extendedreality-an-overview-of-augmented-virtual-mixedreality (Accessed Jan. 10, 2024).

[5] B. Poetker, “A Brief History of Augmented Reality (+ Future Trends & Impact),” G2.com, Aug. 22, 2019. Available: https://www.g2.com/articles/history-ofaugmented-reality (Accessed Aug. 02, 2023).

[6] W. R. Sherman and A. B. Craig, Understanding Virtual Reality, 2nd ed. Morgan Kaufmann, 2018. doi:10.1016/C2013-0-18583-2

[7] J. Jerome and J. Greenberg, “Augmented Reality + Virtual Reality: Privacy & Autonomy Considerations in Emerging, Immersive Digital Worlds,” Future of Privacy Forum, Apr. 2021. Available: https://fpf.org/blog/fpf-report-outlinesopportunities-to-mitigate-the-privacy-risks-of-arvr-technologies/ (Accessed Jan. 10, 2024).

[8] Mann and Wyckoff, “Extended Reality,” Massachusetts Institute of Technology, vol. 4, no. 405, 1991, [Online]. Available: http://wearcam.org/xr.htm

[9] LIFE, “Tools to Reach Beyond the Boundaries of Man’s Vision: Slow-fast film catches both the brightest and the faintest stages of a nuclear blast,” LIFE, vol. 61, no. 26, pp. 112–113, Dec. 23, 1966.

[10] S. Mann, “The Wyckoff principle,” WearCam.org, 2002. Available: http://wearcam.org/wyckoff/index.html (Accessed Jan. 16, 2024).

[11] A. Prabha, “Could XR be the next big thing?,” TechHQ, Jan. 08, 2019. Available: https://techhq.com/2019/01/could-xr-be-thenext-big-thing/ (Accessed Jan. 16, 2024).

[12] K. O’Neill, “HDR pioneer looks to future of wearable tech,” alum.mit.edu, Oct. 04, 2023. Available: https://alum.mit.edu/slice/hdr-pioneer-looksfuture-wearable-tech (Accessed Jan. 16, 2024).

[13] N. Kline and M. Clynes, “Cyborgs and Space,” Astronautics, Sep. 1960, Accessed: Sep. 11, 2024. [Online]. Available: https://web.mit.edu/digitalapollo/Documents/Chapter1/cyborgs.pdf

[14] A. C. Madrigal, “The Man Who First Said 'Cyborg,' 50 Years Later,” The Atlantic, Jul. 28, 2011. [Online]. Available: https://www.theatlantic.com/technology/archive/2010/09/the-man-who-first-said-cyborg-50years-later/63821/

[15] C. L. Smith, “50 years of Cyborgs,” The Guardian, Oct. 02, 2010. [Online]. Available: https://www.theguardian.com/theobserver/2010/oct/03/50-years-cyborgs

[16] J. Atwood, “Steve Mann, Cyborg,” Coding Horror, Jul. 12, 2007. Available: https://blog.codinghorror.com/steve-manncyborg/

[17] P. Clarke, “ISSCC: ‘Dick Tracy’ watch watchers disagree,” EE|Times, EE Times, Feb. 09, 2000. Accessed: Jan. 16, 2024. [Online]. Available: https://www.eetimes.com/ISSCC Dick-Tracywatch-watchers-disagree/