International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 02 | Feb 2025 www.irjet.net p-ISSN: 2395-0072


Harshada V. Talnikar1, Snehalata B. Shirude2

1 Asst. Prof. Institute of Industrial and Computer Management and Research, Nigdi, Pune, Maharashtra, India

2 Asst. Prof. School of Computer Sciences, K. B. C. North Maharashtra University Jalgaon, Maharashtra, India

Abstract – With numerous and heterogeneously structured data available, identifying individuals with expert knowledge on a specific topic is a challenge. This paper provides an analysis and review of different Information Retrieval (IR) models, and one of the models is elaborated with a focus on its experimental results and effectiveness. The literature study shows that when a user searches documents to find an expert in a particular domain, earlier IR models often produce a ranked list of documents ordered by relevance. Modern IR algorithms are designed to achieve high mean average precision and better recall rates, but these models face challenges in balancing precision, recall, and computational efficiency. We conducted experiments on the publication details of different experts within a single research domain. We analyzed the expert finding models BM25, TF-IDF, and Latent Dirichlet Allocation, and observed that in domain-specific expert retrieval the BM25 ranking function outperforms the other ranking methods when the corresponding precision and recall metrics are considered.

Key Words: Expert finding, information retrieval, relevance, precision, recall, expert finding models, BM25, ranking functions.

There is currently growing interest in the expert finding task, which involves finding a list of people with recent knowledge or expertise in a required specific domain. Research has focused on developing and implementing methods for identifying experts within specific academic disciplines. Searching for experts in a specific academic field has many applications, such as research consultation, forming reviewer panels [1], organizing expert talks, and reviewing papers as part of a peer review process [2, 3].

This paper aims to explore the expert finding task by implementing models in a recent research field related to the academic domain. Our approach to finding experts in academia relies heavily on acquiring a robust dataset of publications that can serve as a basis for assessing expertise. Google Scholar is a valuable resource for retrieving the data needed to determine expertise, since it treats a researcher's publications as representative of their expertise [3]. Each paper record in Google Scholar typically includes only the title, which can be used to calculate the relevance of papers to specific queries. Therefore, we use it as a supplementary data source [4].

We propose three models for expert finding, considering Google Scholar as a data source:

Weighted language model: This model determines an expert through the relevance of the documents associated with the candidate. It assigns a prior probability to each related document, which improves performance compared with the basic expert finding models, termed language models.

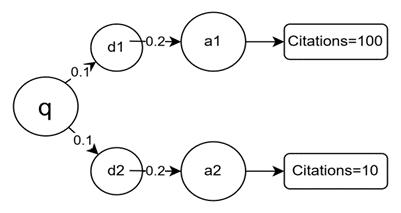

The language model evaluates the relation between a query and relevant documents to quantify an expert's expertise. For instance, take a query q with an equal relevance probability of 0.1 for two documents, d1 and d2, written by authors a1 and a2 respectively, each with an association probability of 0.2. Assume that d1 has 100 citations, while d2 has only 10. In the basic language model, a1 and a2 are considered equally likely experts for topic q. However, considering citation counts reveals that d1 is more impactful than d2, making it more intuitive to consider a1 the stronger candidate.
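The tie in this example can be checked with a small calculation. This is a minimal sketch that assumes the basic model scores a candidate as p(q|a) = Σ_d p(q|d)·p(d|a), in the spirit of the document-centric model of Balog et al. [5]; the dictionary names and the `basic_lm_score` function are hypothetical.

```python
# Toy values from the example: p(q|d) = 0.1 for both documents,
# and each author is associated with one document with p(d|a) = 0.2.
relevance = {"d1": 0.1, "d2": 0.1}                     # p(q | d)
association = {"a1": {"d1": 0.2}, "a2": {"d2": 0.2}}   # p(d | a)


def basic_lm_score(author: str) -> float:
    """Document-centric score: sum over d of p(q|d) * p(d|a)."""
    return sum(relevance[d] * p_da for d, p_da in association[author].items())


for author in ("a1", "a2"):
    print(author, round(basic_lm_score(author), 4))
```

Both candidates receive 0.02, so the basic model cannot separate them: citation information plays no role in the score.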

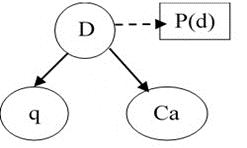

To overcome this limitation, a novel weighted model is used. It integrates the prior probability p(d), representing the likelihood that a document is published by an expert. The prior probability depends on the document's importance and takes into account factors such as citation counts.

Let D denote the set of related documents and Ca an expert candidate [5, 6]. By combining both a document's relevance to a query and its significance, the author's expertise can be determined.
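Continuing the earlier example (p(q|d) = 0.1, p(d|a) = 0.2, 100 versus 10 citations), the weighted model multiplies each document's contribution by its prior p(d). The paper does not give the exact form of the prior, so this sketch assumes, purely for illustration, that p(d) is each document's share of the total citations; all names are hypothetical.

```python
relevance = {"d1": 0.1, "d2": 0.1}                     # p(q | d)
association = {"a1": {"d1": 0.2}, "a2": {"d2": 0.2}}   # p(d | a)
citations = {"d1": 100, "d2": 10}

# Assumed prior: p(d) proportional to citation count (illustrative only).
total_citations = sum(citations.values())
prior = {d: c / total_citations for d, c in citations.items()}   # p(d)


def weighted_score(author: str) -> float:
    """Weighted score: sum over d of p(q|d) * p(d|a) * p(d)."""
    return sum(relevance[d] * p_da * prior[d]
               for d, p_da in association[author].items())


ranking = sorted(association, key=weighted_score, reverse=True)
print(ranking)
```

With this prior, a1 scores ten times higher than a2, matching the intuition that the highly cited d1 is stronger evidence of expertise.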

In topic-based models, an expert candidate is represented as a weighted mixture of all relevant topics. Such a structure evaluates the significance of related documents and improves results compared with the language model. This approach associates each expert candidate with multiple topics and enables ranking based on the weighted combination of those topics.
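The weighted topic combination can be sketched as follows. Each candidate is a mixture of topic weights and the query is a distribution over the same topics; scoring by Σ_k p(k|q)·p(k|a) is one simple choice of combination, assumed here for illustration. The topic labels and weights are hypothetical.

```python
# Hypothetical topic mixtures; each candidate's weights sum to 1.
candidates = {
    "a1": {"ir": 0.7, "nlp": 0.2, "db": 0.1},
    "a2": {"ir": 0.1, "nlp": 0.3, "db": 0.6},
}
query_topics = {"ir": 0.8, "nlp": 0.2}   # p(topic | q), hypothetical


def topic_score(mixture, query):
    """Sum over topics of p(topic | q) * p(topic | candidate)."""
    return sum(weight * mixture.get(topic, 0.0) for topic, weight in query.items())


ranked = sorted(candidates,
                key=lambda a: topic_score(candidates[a], query_topics),
                reverse=True)
print(ranked)
```

Candidate a1 ranks first because most of its weight lies on the topic that dominates the query.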

The hybrid model integrates the advantages of the weighted language model and the topic-based model. Here the system is evaluated using the input dataset and relevance judgments to assess how well the experts support the query topics. The hybrid model is observed to be more effective than the other models.
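The paper does not specify how the hybrid model combines its two components. One common option, assumed here, is to min-max normalize each score list and interpolate them with a weight λ (`lam`). The helper names and scores below are hypothetical placeholders, not results from this study.

```python
def min_max(scores):
    """Rescale a {candidate: score} dict to [0, 1]; constant scores map to 0."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {k: 0.0 for k in scores}
    return {k: (v - lo) / (hi - lo) for k, v in scores.items()}


def hybrid(weighted, topic, lam=0.5):
    """Interpolate normalized weighted-LM and topic-model scores with weight lam."""
    w, t = min_max(weighted), min_max(topic)
    return {a: lam * w[a] + (1 - lam) * t[a] for a in weighted}


# Hypothetical component scores for three candidates.
weighted = {"a1": 0.018, "a2": 0.002, "a3": 0.010}
topic = {"a1": 0.40, "a2": 0.60, "a3": 0.20}

combined = hybrid(weighted, topic, lam=0.5)
ranking = sorted(combined, key=combined.get, reverse=True)
print(ranking)
```

Setting `lam` to 1 or 0 recovers the ranking of the weighted language model or of the topic-based model, respectively.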

The literature survey focuses on query-dependent and query-independent strategies for expert finding. In these methods, the expert finding model searches expert-related documents and then uses that information to calculate how much of an expert the candidate is [7]. The query-independent approach [8] constructs a “virtual document”, or profile, for each candidate [9] from all documents associated with them, and determines their ranking score relative to a user query based on this profile. In contrast, the query-dependent approach [10] begins by ranking the documents in the dataset against the query and then identifies possible experts from the subset of retrieved documents [11].

We studied the strengths and weaknesses of each approach [7]. Query-independent profiles are often much smaller than the original retrieved data, making them more convenient for data storage and management. However, this approach does not allow the individual contribution of each document to the profile to be evaluated, which can limit its effectiveness. In contrast, the query-dependent approach allows advanced text modelling techniques to be used to rank specific documents, offering a more accurate assessment.
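The two strategies differ mainly in when documents are aggregated. The sketch below uses a toy term-count relevance function (`match`, hypothetical and far simpler than a real retrieval model) to contrast a query-independent profile score with a query-dependent score computed over the top-n retrieved documents. The corpus and author ids are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: document id -> (author, text).
docs = {
    "d1": ("a1", "bm25 ranking for expert retrieval"),
    "d2": ("a1", "database indexing"),
    "d3": ("a2", "expert finding with language models"),
}


def match(query, text):
    """Toy relevance: how many times the query terms occur in the text."""
    counts = Counter(text.split())
    return sum(counts[t] for t in query.split())


def profile_scores(query):
    """Query-independent: one 'virtual document' per candidate, scored as a whole.

    For brevity the profiles are rebuilt on each call; in practice they are built once, offline.
    """
    profiles = defaultdict(list)
    for author, text in docs.values():
        profiles[author].append(text)
    return {a: match(query, " ".join(texts)) for a, texts in profiles.items()}


def document_scores(query, top_n=2):
    """Query-dependent: rank documents first, then sum the top-n scores per author."""
    ranked = sorted(docs, key=lambda d: match(query, docs[d][1]), reverse=True)[:top_n]
    scores = defaultdict(int)
    for d in ranked:
        author, text = docs[d]
        scores[author] += match(query, text)   # each document's contribution is visible here
    return dict(scores)


print(profile_scores("expert retrieval"))
print(document_scores("expert retrieval", top_n=1))
```

In the document-based variant each retrieved document's contribution can be inspected before aggregation, whereas the profile variant only sees the merged text.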

The expert finding models studied comprise the following primary components:

Candidate: A researcher or person eligible as an expert.

Document: The diverse sources of expertise-related data.

Topic: The specific domain or area of expertise [4].

The expert finding task typically involves five key aspects:

a. Selection of Expertise Evidence (Data Sources): This step involves identifying and extracting documents relevant to expertise, which serve as the base for discovering experts. Relevant data about individuals must be gathered to determine their expertise in the specific domain.

b. Expert Depiction: The major goal of any expert finding system is to search inferred data that locates expertise and helps users evaluate and select the most relevant experts. This requires identifying which information will help users who are unfamiliar with the experts to make decisions. Expertise is generally assessed through documented evidence, relationships, and contextual factors, such as human interactions.

c. Model Development: Model building covers pre-processing, ranking, and retrieval.

i. Pre-processing and ranking: As in document retrieval systems, this involves creating indexes. However, expertise retrieval introduces additional challenges, such as identifying expert identifiers (e.g., names, emails) within documents and integrating data from diverse sources. Pre-processing techniques, such as stopword removal, are adapted from traditional information retrieval (IR) systems [1]. A major challenge is handling ambiguities, such as different names referring to the same individual or identical names belonging to different individuals. Techniques like disambiguation and Named Entity Recognition are critical [12]. For example, some systems use rule-based matching methods to identify experts through exact name matches, initials with last names, or email addresses [13].

ii. Modelling and Retrieval: This phase constructs models that associate candidate experts with user queries in order to rank the candidates by the strength of that association [1]. Common approaches include probabilistic models, network graph-based models, voting systems, and other hybrid techniques [4].


d. Evaluation of Models: An expert finding model is evaluated on its accuracy in retrieving relevant experts in response to user queries. Test datasets are used to evaluate its efficiency [14].

e. Interaction Design: Presenting expert search results to users is a challenging task. A list containing only expert names is not sufficient to evaluate a candidate's expertise [15]; users need snippets that they can review quickly. It is therefore essential that result pages contain additional details such as numbered lists of related documents, publications or projects, conferences, journals, affiliations, contact information, photos, and links. To enrich results, links to personal homepages are identified using supervised learning methods [16-18].
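The stopword removal and rule-based identifier matching described under pre-processing can be sketched as below. The stopword list is a small illustrative subset and `same_person` is a hypothetical helper; as the text warns, such rules can still merge different people who share initials and a surname.

```python
import re

# Small illustrative stopword list (a real system would use a fuller one).
STOPWORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to", "with"}


def preprocess(text):
    """Lower-case, split on non-alphanumeric characters, and drop stopwords."""
    return [tok for tok in re.findall(r"[a-z0-9]+", text.lower()) if tok not in STOPWORDS]


def name_parts(name):
    """Normalize a personal name into lower-case parts, treating '.' as a separator."""
    return name.lower().replace(".", " ").split()


def same_person(name_a, name_b, email_a=None, email_b=None):
    """Rule-based identity check: email match, exact name match, or initials + last name."""
    if email_a and email_b and email_a.lower() == email_b.lower():
        return True
    a, b = name_parts(name_a), name_parts(name_b)
    if a == b:
        return True
    # Same surname and same sequence of first-name initials, e.g. "J. A. Smith" vs "John A. Smith".
    return a[-1] == b[-1] and [p[0] for p in a[:-1]] == [p[0] for p in b[:-1]]


print(preprocess("Finding the Experts in Information Retrieval"))
print(same_person("J. A. Smith", "John A. Smith"))   # True
print(same_person("John Smith", "Jane Smith"))       # True: the rule conflates them
```

The last call shows why disambiguation and Named Entity Recognition are needed on top of simple matching rules.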


2.1. Generative Probabilistic Model:

The generative probabilistic model evaluates the association between a query and an expert by estimating the probability that candidate e is an expert on query (topic) q, represented as P(e|q).

The following two methods are used to estimate this probability:

i. Candidate generation model: This method directly models the probability of generating candidate e given the query domain q.

ii. Topic generation model: This method relies on Bayes' theorem, expressed as:

$$P(e \mid q) = \frac{P(q \mid e)\,P(e)}{P(q)} \propto P(q \mid e)\,P(e) \qquad (1)$$
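Because P(q) is the same for every candidate, dropping it does not change the ranking. A minimal sketch with hypothetical likelihoods P(q|e) and priors P(e), working in log space to avoid underflow; the function names are illustrative only.

```python
import math

likelihood = {"e1": 0.02, "e2": 0.05, "e3": 0.01}   # P(q | e), hypothetical
prior = {"e1": 0.5, "e2": 0.1, "e3": 0.4}           # P(e), hypothetical


def rank_candidates(likelihood, prior):
    """Rank by log P(q|e) + log P(e); the shared term log P(q) is omitted."""
    score = {e: math.log(likelihood[e]) + math.log(prior[e]) for e in likelihood}
    return sorted(score, key=score.get, reverse=True)


def full_posterior(likelihood, prior):
    """Normalized P(e|q), dividing by P(q) = sum over e of P(q|e) * P(e)."""
    p_q = sum(likelihood[e] * prior[e] for e in likelihood)
    return {e: likelihood[e] * prior[e] / p_q for e in likelihood}


post = full_posterior(likelihood, prior)
print(rank_candidates(likelihood, prior))
print(sorted(post, key=post.get, reverse=True))   # same order
```

Note that e2 has the highest likelihood but a low prior, so the prior changes its position in the ranking.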

As part of our study, the following content-based generative probabilistic models [20], which have been used to rank researchers' profiles, were explored:

1. Okapi BM25 (OKAPI): Here, researchers' profiles are represented as collections of terms. Two profiles are compared, where one is treated as the query and the other as an individual document.

2. Kullback-Leibler Divergence (KLD): In this model, a researcher's profile is considered a probability distribution, generally a multinomial distribution over term frequencies. KLD measures the divergence between these distributions, and profiles are ranked accordingly, with a lower divergence indicating greater similarity.

3. Latent Dirichlet Allocation (LDA): Here, a researcher's profile is represented as a mixture of topics rather than a single distribution. This method is useful when researchers have diverse interests.

4. Trace-Based Similarity (REL): This model uses density matrices, which represent vector subspaces that capture complex relationships between terms.
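The KLD ranking can be sketched as follows. Each profile is turned into a multinomial over a shared vocabulary; additive smoothing with a small constant `mu` is an assumption added here so that no probability is zero, and the profile texts are hypothetical. Profiles are ranked by increasing divergence from the query profile.

```python
import math
from collections import Counter


def term_distribution(text, vocab, mu=0.01):
    """Multinomial over a shared vocabulary with additive smoothing mu (an assumption)."""
    counts = Counter(text.lower().split())
    total = sum(counts[t] for t in vocab) + mu * len(vocab)
    return {t: (counts[t] + mu) / total for t in vocab}


def kl_divergence(p, q):
    """KL(p || q) = sum over t of p(t) * log(p(t) / q(t)); zero only when p == q."""
    return sum(p[t] * math.log(p[t] / q[t]) for t in p)


profiles = {   # hypothetical researcher profiles
    "r1": "expert finding bm25 ranking",
    "r2": "expert retrieval ranking models",
    "r3": "image segmentation networks",
}
query_profile = "expert ranking bm25"

vocab = set(query_profile.split()).union(*(text.split() for text in profiles.values()))
p_query = term_distribution(query_profile, vocab)
ranking = sorted(profiles,
                 key=lambda r: kl_divergence(p_query, term_distribution(profiles[r], vocab)))
print(ranking)   # lowest divergence (most similar) first
```

KL divergence is asymmetric, so the direction KL(query || profile) used here is itself a design choice.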

Various research papers have applied these techniques to tasks such as finding similar experts within a university [21], recommending beneficial collaborators [22], and forming research groups from heterogeneous documents.

For the implementation, we studied the document-based model Okapi BM25 in depth. Okapi BM25 is a popular ranking function in Information Retrieval (IR), known for its accuracy, especially when dealing with short documents [23]. It is based on a probabilistic retrieval model.

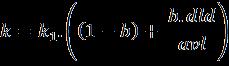

The BM25 formula considers several factors:

Term Frequency: Denoted as TF, it represents how many times a term appears in a document or query.

Inverse Document Frequency: Denoted as IDF, it represents how rare a term is across the entire document set.

Document Length: The length of the input document, which is used to normalize term frequency.

In eq. (1), P(e) is the prior probability that candidate e is an expert, and P(q) is the probability of query q. Because P(q) is constant for a given query, it can be ignored. Thus, the probability that candidate e is an expert for query q is proportional to the query likelihood P(q|e), weighted by the prior belief in the candidate's expertise [19].

Query-dependent, document-based methods first retrieve documents relevant to a given query and then rank candidates by combining each document's relevance with the candidate's association with those documents.

Query Term Weight: It signifies the importance of a term in the query.

$$w_t = \log\frac{N + 1}{n_t + 1}, \quad t \in q \qquad (2)$$

Here, q is the user query, N is the total number of documents retrieved, and $n_t$ is the number of documents that contain term t.


$tf_{t,d}$ represents the number of occurrences of term t in document d, referred to as the term frequency, and $qtf_t$ denotes the number of occurrences of term t in query q.

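$$\mathrm{BM25}(d, q) = \sum_{t \in q} \log\frac{N + 1}{n_t + 1} \cdot \frac{(k_1 + 1)\, tf_{t,d}}{k_1\left((1 - b) + b\,\frac{dl_d}{avl}\right) + tf_{t,d}} \cdot \frac{(k_3 + 1)\, qtf_t}{k_3 + qtf_t} \qquad (3)$$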

Here, $dl_d$ is the number of terms in document d, $avl$ is the average document length, and $b$, $k_1$, and $k_3$ are tuning parameters, set to b = 0.75, k1 = 1.2, and k3 = 1000; they can be adjusted to fine-tune the ranking.

By considering these factors, BM25 effectively grades documents by their relevance to an input query q, making it a valuable tool in search engines and other information retrieval applications.

The pseudocode for the BM25 implementation:

Begin

Step 1: Tokenize the query and documents into individual terms. // Tokenization

Step 2: // Calculate Term Frequency (TF)

For each document d in the corpus:

For each query term t:

Compute TF = count of occurrences of term t in document d.

Step 3: // Find Inverse Document Frequency (IDF)

For each query term t:

Calculate IDF = log((total number of documents + 1) / (number of documents containing term t + 1)).

Step 4: // Adjust TF based on document length

Compute average document length = total length of all retrieved documents / total number of retrieved documents.

For each document d in the corpus:

For each query term t:

Adjusted TF = TF / (1 - b + b * (document length / average document length)) // 'b' is the length-normalization parameter.

Step 5: // Compute BM25 score

For each document d in the corpus:

Initialize BM25 score = 0.

For each query term t:

Saturated TF = Adjusted TF * (k1 + 1) / (Adjusted TF + k1) // 'k1' controls term-frequency saturation.

Compute weighted score = Saturated TF * IDF.

Add the weighted score to the BM25 score.

Return the BM25 scores for all documents.

End
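A runnable Python sketch of the BM25 pseudocode above. It assumes lower-case whitespace tokenization, uses the smoothed IDF from Step 3, and applies the standard k1 saturation Adjusted TF·(k1 + 1)/(Adjusted TF + k1) to the length-adjusted TF, which equals the usual BM25 term-frequency factor. The k3 query-term factor is omitted (repeated query terms are simply summed), so this is a simplified sketch rather than the authors' exact implementation; `bm25_scores` and the toy corpus are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    """Step 1: lower-case whitespace tokenization (a simplifying assumption)."""
    return text.lower().split()


def bm25_scores(query, documents, k1=1.2, b=0.75):
    """Return one BM25 score per document, following Steps 1-5 of the pseudocode."""
    docs = [tokenize(d) for d in documents]
    query_terms = tokenize(query)
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs             # Step 4: average length

    # Step 3: smoothed IDF, log((N + 1) / (n_t + 1)).
    idf = {t: math.log((n_docs + 1) / (sum(t in d for d in docs) + 1))
           for t in set(query_terms)}

    scores = []
    for d in docs:
        tf = Counter(d)                                      # Step 2: term frequencies
        norm = 1 - b + b * len(d) / avg_len                  # Step 4: length normalization
        score = 0.0
        for t in query_terms:                                # Step 5: accumulate
            adjusted = tf[t] / norm
            saturated = adjusted * (k1 + 1) / (adjusted + k1)
            score += saturated * idf[t]
        scores.append(score)
    return scores


corpus = [
    "expert finding with bm25",
    "bm25 bm25 ranking function",
    "deep learning for images",
]
print([round(s, 4) for s in bm25_scores("expert bm25", corpus)])
```

The first document scores highest because it contains the rarer term "expert"; a term that occurs in every document receives an IDF of log(1) = 0 under this smoothing.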

BM25 is an effective model because it balances term frequency and document length. It also supports parameter tuning: the parameters k1 and b are adjusted to fine-tune the algorithm for particular datasets. The model treats documents as a bag of words, irrespective of word order.
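The roles of k1 and b can be seen directly in the BM25 term-frequency factor. This sketch (the helper `tf_component` is hypothetical) shows that the factor saturates as tf grows, that longer-than-average documents are penalized when b > 0, and that b = 0 switches length normalization off.

```python
def tf_component(tf, doc_len, avg_len, k1=1.2, b=0.75):
    """BM25 term-frequency factor: tf*(k1+1) / (tf + k1*(1 - b + b*doc_len/avg_len))."""
    return tf * (k1 + 1) / (tf + k1 * (1 - b + b * doc_len / avg_len))


# Saturation: each extra occurrence adds less than the previous one.
for tf in (1, 2, 5, 10):
    print(tf, round(tf_component(tf, doc_len=100, avg_len=100), 3))

# Length normalization: a long document gets a smaller factor for the same tf ...
print(tf_component(3, 200, 100) < tf_component(3, 50, 100))
# ... unless b = 0, in which case document length is ignored.
print(tf_component(3, 200, 100, b=0.0) == tf_component(3, 50, 100, b=0.0))
```

Because the factor is bounded above by k1 + 1, a smaller k1 makes repeated occurrences of a term matter less.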

The experiments are conducted using a dataset retrieved from Google Scholar, which is chosen as the primary resource for obtaining the publication data of experts. A large number of records were collected from Google Scholar, as outlined in Table 1. Given the diverse and heterogeneous origins of the data, it was prone to missing values, noise, and inconsistencies. Data quality strongly affects the outcome of data mining; hence, the raw data was pre-processed to improve its quality. This critical step involves cleaning and transforming the data into a proper format for subsequent mining processes. Pre-processing helps to reduce the raw data size, establish correlations between features, normalize the data, eliminate outliers, and retain only relevant features by eliminating less important ones. Key techniques applied during this phase include data cleaning, integration, transformation, and reduction [24].
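The cleaning step can be sketched on a few hypothetical publication records. The field names (`author`, `title`, `citations`) and the rule of treating unparseable citation counts as 0 are assumptions for illustration; the paper does not list its exact cleaning rules.

```python
raw_records = [
    {"author": "A. Rao ", "title": "BM25 for Expert Search", "citations": "12"},
    {"author": "A. Rao", "title": "BM25 for expert search", "citations": "12"},  # duplicate
    {"author": "B. Iyer", "title": None, "citations": "5"},                      # missing title
    {"author": "C. Das", "title": "Topic Models", "citations": "n/a"},           # noisy count
]


def clean(records):
    """Drop records without a title, normalize text fields, coerce counts, de-duplicate."""
    seen, cleaned = set(), []
    for rec in records:
        if not rec.get("title"):
            continue                                         # missing value: drop the record
        author = " ".join(rec["author"].split())             # collapse stray whitespace
        title = " ".join(rec["title"].lower().split())       # case-fold for comparison
        try:
            citations = int(rec["citations"])
        except (TypeError, ValueError):
            citations = 0                                    # assumption: noisy count -> 0
        key = (author, title)
        if key in seen:
            continue                                         # duplicate after normalization
        seen.add(key)
        cleaned.append({"author": author, "title": title, "citations": citations})
    return cleaned


print(clean(raw_records))
```

Normalizing before de-duplicating is what lets the two "A. Rao" records collapse into one.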

Table -1: Summary of retrieved dataset

| Description | Value |
|---|---|
| Instances after data pre-processing | 1806 |
| Resultant attributes after feature selection | 3 |

Following the data cleaning process, approximately 10% of the records were removed. The clean data was then scaled and normalized using Standard Scaling, Maximum Absolute Scaling, and Median-Quantile Scaling. Subsequently, feature selection and extraction were carried out, resulting in three highly correlated features for the next stages of the expert finding task. These steps produced clean, scaled data, which contributed to accurate and precise results.
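The three scaling methods can be sketched with the standard library. "Median-Quantile Scaling" is interpreted here, as an assumption, as robust scaling (subtract the median, divide by the interquartile range); the citation counts are hypothetical. In practice, scikit-learn's StandardScaler, MaxAbsScaler, and RobustScaler provide similar column-wise transforms, although their quantile interpolation may differ slightly from `statistics.quantiles`.

```python
import statistics


def standard_scale(xs):
    """Zero mean, unit (population) standard deviation."""
    mu, sd = statistics.mean(xs), statistics.pstdev(xs)
    return [(x - mu) / sd for x in xs]


def max_abs_scale(xs):
    """Divide by the largest absolute value, so results lie in [-1, 1]."""
    m = max(abs(x) for x in xs)
    return [x / m for x in xs]


def median_quantile_scale(xs):
    """Assumed robust scaling: centre on the median, divide by the IQR (Q3 - Q1)."""
    q1, _, q3 = statistics.quantiles(xs, n=4)
    med = statistics.median(xs)
    return [(x - med) / (q3 - q1) for x in xs]


citations = [2, 5, 9, 14, 120]   # hypothetical, with one outlier
for scale in (standard_scale, max_abs_scale, median_quantile_scale):
    print(scale.__name__, [round(v, 3) for v in scale(citations)])
```

The median-quantile variant keeps the median at 0 and uses a spread that is less influenced by the outlier 120 than the mean and standard deviation are.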


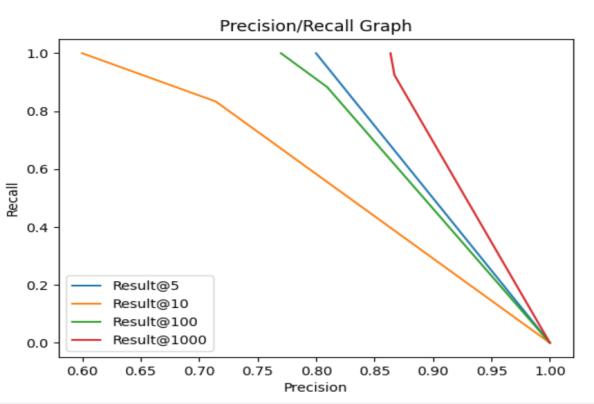

To find experts using the pre-processed data, the Okapi BM25 model has been implemented and analysed. To assess its performance, the number of records processed in each execution is varied. The BM25 implementation is assessed using the standard metrics precision and recall, which are commonly used to gauge the accuracy of ranking and document retrieval. Precision is a measure of correctness [25]: it evaluates how effectively the model excludes irrelevant documents. Recall is a measure of completeness: it assesses the model's capability to retrieve all relevant documents that meet the user's needs. Pertinent documents in the corpus are ordered by their relevance scores [26]. The performance of the model is evaluated at different cutoffs, P@5, P@10, P@100, and P@1000, as shown in Table 2.
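Precision@k and recall@k can be computed from a ranked list and a set of relevance judgments. The document identifiers and judgments below are hypothetical; the example illustrates the trade-off in which precision falls and recall rises as k grows. Dividing by k even when fewer than k items are retrieved is a convention assumed here.

```python
def precision_at_k(ranked, relevant, k):
    """Fraction of the top-k retrieved items that are relevant."""
    hits = sum(1 for doc in ranked[:k] if doc in relevant)
    return hits / k


def recall_at_k(ranked, relevant, k):
    """Fraction of all relevant items that appear in the top k."""
    hits = sum(1 for doc in ranked[:k] if doc in relevant)
    return hits / len(relevant)


ranked = ["d3", "d1", "d7", "d4", "d9", "d2", "d8", "d6", "d5", "d10"]   # hypothetical
relevant = {"d3", "d1", "d4", "d6"}                                      # hypothetical

for k in (2, 5, 10):
    print(k, precision_at_k(ranked, relevant, k), recall_at_k(ranked, relevant, k))
```

For this toy ranking the (P@k, R@k) pairs are (1.0, 0.5), (0.6, 0.75), and (0.4, 1.0) for k = 2, 5, and 10.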

Table -2: Precision@5, Precision@10, Precision@100, and Precision@1000 results using the BM25 algorithm on the Google Scholar retrieved dataset.

4. CONCLUSION

In this paper, the BM25 algorithm is analysed and experimentally studied. The ranking and comparison measures are assessed for varying numbers of input records. In our experiment using the Google Scholar dataset, we found that increasing the retrieval threshold affects the model's performance: precision tends to drop as more results are retrieved, but recall improves. The choice of threshold depends on whether precision or recall is more critical for the specific task. High recall is preferred when missing relevant results is unacceptable. This paper highlights various approaches to finding experts in a particular domain and the implementation of the Okapi BM25 model. It offers an in-depth analysis of how present-day expert finding models function, highlights their strengths, points out their weaknesses, and helps to identify gaps.

REFERENCES

[1] Talnikar HV, Shirude SB. An Architecture to Develop an Automated Expert Finding System for Academic Events. In: Proceedings of the International Conference on Paradigms of Computing, Communication and Data Sciences: PCCDS 2022; 2023 Feb 24 (pp. 297-306). Singapore: Springer Nature Singapore.

[2] D. M. Mimno and A. McCallum. Expertise modeling for matching papers with reviewers. In KDD, pages 500–509, 2007.

[3] Bharti PK, Ghosal T, Agarwal M, Ekbal A. PEERRec: An AI-based approach to automatically generate recommendations and predict decisions in peer review. International Journal on Digital Libraries. 2024 Mar;25(1):55-72.

[4] Google Scholar. URL: http://scholar.google.com/

[5] K. Balog, L. Azzopardi, and M. de Rijke. Formal models for expert finding in enterprise corpora. In SIGIR, pages 43–50, 2006.

[6] I. Soboroff, A. de Vries, and N. Craswell. Overview of the TREC-2006 enterprise track. In Proceedings of TREC 2006.

[7] D. Petkova and W. B. Croft. Hierarchical language models for expert finding in enterprise corpora. In ICTAI, pages 599–608, 2006.

[8] K. Balog and M. de Rijke. Determining expert profiles (with an application to expert finding). In IJCAI, pages 2657–2662, 2007.

[9] C. Macdonald and I. Ounis. Voting for candidates: adapting data fusion techniques for an expert search task. In CIKM, pages 387–396, 2006.

[10] H. Fang and C. Zhai. Probabilistic models for expert finding. In ECIR, pages 418–430, 2007.

[11] Balog, K.; Fang, Y.; De Rijke, M.; Serdyukov, P.; Si, L. Expertise Retrieval. Found. Trends® Inf. Retr. 2012, 6, 127–256.

[12] Petkova, D.; Croft, W.B. Proximity-Based Document Representation for Named Entity Retrieval. In Proceedings of the 16th ACM Conference on Conference on Information and Knowledge Management, Lisbon, Portugal, 6–10 November 2007; pp. 731–740.

[13] Balog, K.; Azzopardi, L.; De Rijke, M. Formal Models for Expert Finding in Enterprise Corpora. In Proceedings of the 29th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Seattle, WA, USA, 6–11 August 2006; pp. 43–50.

[14] Shirude, S.B., Kolhe, S.R. Classification of Library Resources in Recommender System Using Machine Learning Techniques. In: Mandal, J., Sinha, D. (eds) Social Transformation – Digital Way. CSI 2018. Communications in Computer and Information Science, vol 836. Springer, Singapore.

[15] Lin, S.; Hong, W.; Wang, D.; Li, T. A Survey on Expert Finding Techniques. J. Intell. Inf. Syst. 2017, 49, 1–25.

[16] Fang, Y.; Si, L.; Mathur, A.P. Discriminative Graphical Models for Faculty Homepage Discovery. Inf. Retr. 2010, 13, 618–635.

[17] Das, S.; Mitra, P.; Giles, C. Learning To Rank Homepages for Researcher Name Queries. In the International Workshop on Entity-Oriented Search; ACM, EOS, SIGIR 2011 Workshop: Beijing, China, 2011; pp. 53–58.

[18] Tang, J.; Zhang, J.; Yao, L.; Li, J.; Zhang, L.; Su, Z. ArnetMiner: Extraction and Mining of Academic Social Networks. In Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Las Vegas, NV, USA, 24–27 August 2008; pp. 990–998.

[19] Fang, H.; Zhai, C. Probabilistic Models for Expert Finding. In Proceedings of European Conference on Information Retrieval; Springer: Berlin/Heidelberg, Germany, 2007; pp. 418–430.

[20] Gollapalli, S.D.; Mitra, P.; Giles, C.L. Similar Researcher Search in Academic Environments. In Proceedings of the 12th ACM/IEEE-CS Joint Conference on Digital Libraries, Washington, DC, USA, 10–14 June 2012; pp. 167–170.

[21] Hofmann, K.; Balog, K.; Bogers, T.; De Rijke, M. Contextual Factors for Finding Similar Experts. J. Assoc. Inf. Sci. Technol. 2010, 61, 994–1014.

[22] Kong, X.; Jiang, H.; Wang, W.; Bekele, T.M.; Xu, Z.; Wang, M. Exploring Dynamic Research Interest and Academic Influence for Scientific Collaborator Recommendation. Scientometrics 2017, 113, 369–385.

[23] Li, C.-T.; Shan, M.-K.; Lin, S.-D. Context-Based People Search in Labeled Social Networks. In Proceedings of the 20th ACM International Conference on Information and Knowledge Management, Glasgow, Scotland, UK, 24–28 October 2011; pp. 1607–1612.

[24] Tamilselvi, R., B. Sivasakthi and R. Kavitha. An efficient preprocessing and postprocessing techniques in data mining. Intl. J. Res. Comput. Appl. Rob. 3:80-85, 2015.

[25] de Campos LM, Fernández-Luna JM, Huete JF, Ribadas-Pena FJ, Bolaños N. Information retrieval and machine learning methods for academic expert finding. Algorithms. 2024 Jan 23;17(2):51.

[26] Shirude SB, Kolhe SR. Measuring similarity between user profile and library book. In 2014 International Conference on Information Systems and Computer Networks (ISCON) 2014 Mar 1 (pp. 50-54). IEEE.

© 2025, IRJET | Impact Factor value: 8.315 | ISO