International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net p-ISSN: 2395-0072

Supriya Parashuramsingh Rajput1

*1 Assistant Professor, Dept. of Electronics and Communication Engineering, MMEC Belagavi, Karnataka, India

Abstract - Traffic sign recognition plays a pivotal role in the development of autonomous vehicles and advanced driver-assistance systems (ADAS), significantly enhancing road safety. This project leverages the power of Convolutional Neural Networks (CNNs) to classify traffic signs accurately. The German Traffic Sign Recognition Benchmark (GTSRB) dataset, containing images of 43 traffic sign classes captured under various conditions, was used for model training and evaluation. The images were preprocessed through resizing and normalization, and the labels were one-hot encoded, ensuring compatibility with the CNN architecture. To improve model robustness, data augmentation techniques such as rotation, zoom, and shifts were employed, creating an enriched dataset for training. The proposed CNN architecture comprises multiple convolutional, pooling, and dropout layers, enabling efficient feature extraction and classification. The model was trained using the Adam optimizer and evaluated on a separate test set, achieving high accuracy and demonstrating its effectiveness in real-world scenarios. Results showed that data augmentation significantly enhanced generalization, and the use of dropout layers reduced overfitting. The project concludes with the successful deployment of a traffic sign recognition system capable of identifying traffic signs with high precision, paving the way for integration into real-time traffic monitoring and ADAS. This achievement marks a significant step towards safer autonomous driving technologies.

Key Words: Traffic sign recognition, CNN, Image processing, Deep learning, Data augmentation, Keras, TensorFlow.

Traffic signs provide critical information to drivers, ensuring the safe and efficient flow of traffic. In the era of autonomous vehicles and intelligent transportation systems, the automatic recognition of traffic signs has become indispensable. Advanced driver-assistance systems (ADAS) rely on accurate and timely detection of traffic signs to guide decision-making processes and alert drivers to critical road conditions. However, traditional approaches to traffic sign recognition, which often depend on handcrafted features and classical machine learning techniques, struggle to achieve the accuracy and robustness required for real-world applications.

The advent of deep learning, particularly Convolutional Neural Networks (CNNs), has revolutionized image recognition tasks, including traffic sign classification. CNNs automatically learn hierarchical feature representations from raw images, eliminating the need for manual feature engineering. This project aims to harness the capabilities of CNNs to develop a reliable traffic sign recognition system using the German Traffic Sign Recognition Benchmark (GTSRB) dataset. The dataset comprises 43 distinct traffic sign classes, providing a comprehensive benchmark for evaluating the effectiveness of machine learning models in this domain.

The proposed system begins with pre-processing steps, including resizing images to a fixed pixel size, normalizing pixel values to a range of [0, 1], and encoding labels using one-hot representation. To enhance the model's robustness and generalization capabilities, data augmentation techniques such as random rotation, translation, and zooming were applied during training. The CNN architecture was designed to extract spatial and hierarchical features from images, employing convolutional layers for feature extraction, pooling layers for down-sampling, and dropout layers for regularization.

The training process involved optimizing the model using the Adam optimizer and monitoring its performance on a separate validation set. The model was evaluated on a hold-out test set to assess its generalization to unseen data. Performance metrics such as accuracy, loss, and a confusion matrix were used to analyse the model's effectiveness. The results demonstrated that the proposed CNN-based system could classify traffic signs with high precision, achieving performance suitable for deployment in real-world scenarios.

This project highlights the potential of deep learning to address complex challenges in intelligent transportation systems. By accurately recognizing traffic signs, the developed system contributes to safer roadways and lays the groundwork for future advancements in autonomous driving technology. Furthermore, the integration of data augmentation and dropout techniques underscores the importance of designing robust models capable of handling diverse and challenging environments.

Paper 1: Stallkamp, J., Schlipsing, M., Salmen, J., & Igel, C. "The German Traffic Sign Recognition Benchmark: A multi-class classification competition." Neural Networks 32 (2012): 323-332.

This paper introduces the GTSRB dataset and provides an extensive benchmark for multi-class traffic sign classification.

The study highlights the challenges of recognizing traffic signs in varying conditions such as lighting, occlusion, and distortion. It evaluates multiple machine learning models, setting a foundation for the development of advanced traffic sign recognition systems. However, the study lacks exploration of modern deep learning techniques, which have since demonstrated superior performance.

Paper 2: Sermanet, P., & LeCun, Y. "Traffic sign recognition with multi-scale convolutional networks." Neural Networks 48 (2013): 58-69.

This study proposes a multi-scale convolutional network for traffic sign classification, achieving state-of-the-art accuracy at the time. The authors emphasize the importance of hierarchical feature extraction and demonstrate the effectiveness of CNNs in handling diverse traffic sign datasets. While the model performs well, its computational cost limits real-time applicability on embedded systems.

Paper 3: Ciregan, D., Meier, U., & Schmidhuber, J. "Multi-column deep neural networks for image classification." IEEE Conference on Computer Vision and Pattern Recognition (2012): 3642-3649.

The authors present a multi-column deep neural network (MCDNN) architecture designed for image classification tasks, including traffic sign recognition. By combining predictions from multiple CNNs, the model achieves exceptional accuracy. However, the reliance on ensemble methods increases computational requirements, posing challenges for deployment in resource-constrained environments.

Paper 4: Houben, S., Stallkamp, J., Salmen, J., Schlipsing, M., & Igel, C. "Detection of traffic signs in real-world images: The German Traffic Sign Detection Benchmark." International Joint Conference on Neural Networks (2013): 1-8.

This paper focuses on traffic sign detection, a precursor to recognition. The authors evaluate various detection algorithms on the German Traffic Sign Detection Benchmark (GTSDB) dataset, emphasizing the importance of accurate localization. While the study lays the groundwork for detection, it does not address end-to-end recognition systems integrating detection and classification.

Paper 5: Simonyan, K., & Zisserman, A. "Very deep convolutional networks for large-scale image recognition." arXiv preprint arXiv:1409.1556 (2014).

This seminal work introduces the VGG network, a deep CNN architecture that has influenced numerous image classification tasks, including traffic sign recognition. The study demonstrates the impact of increasing network depth on accuracy. Although highly effective, the model's high memory and computation requirements limit its deployment in real-time applications.

The block diagram for the traffic sign recognition system illustrates the process from data acquisition to machine learning, encompassing stages such as resizing, normalization, label encoding, data augmentation, and the CNN.

Data Acquisition → Resizing → Normalization → Label Encoding → Data Augmentation → CNN

Fig.1 Block diagram of proposed methodology.

The German Traffic Sign Recognition Benchmark (GTSRB) dataset was utilized for this project. It comprises over 50,000 images spanning 43 traffic sign classes, with significant variability in lighting, angles, and background conditions. The dataset was divided into training (80%) and testing (20%) subsets to evaluate model performance effectively.
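The 80/20 division described above can be illustrated with a minimal sketch. The helper name `train_test_split`, the fixed seed, and the placeholder sample list are assumptions made for illustration; the paper does not state how the split was actually drawn (for example, whether it was stratified by class).

```python
import random


def train_test_split(samples, test_fraction=0.2, seed=42):
    """Shuffle the samples and return (train, test) lists.

    The seed and the purely random (non-stratified) split are assumptions.
    """
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical stand-in for (image_path, class_id) pairs from the dataset.
samples = [(f"img_{i}.png", i % 43) for i in range(1000)]
train, test = train_test_split(samples)
print(len(train), len(test))
```

With a class-imbalanced dataset, a stratified split that preserves per-class proportions may be preferable to the plain shuffle shown here.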

3.2

To prepare the dataset for training, several preprocessing steps were performed:

Resizing: All images were resized to a fixed pixel size to standardize input dimensions.

Normalization: Pixel values were scaled to the range [0, 1] by dividing by 255.0 to improve convergence during training.

Label Encoding: Traffic sign labels were converted to one-hot encoded vectors, ensuring compatibility with the categorical cross-entropy loss function used during training.
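The normalization and label-encoding steps can be sketched in plain Python as follows. The function names and the tiny 2×2 RGB image are hypothetical; in practice these operations would typically be applied to whole arrays with a numerical library rather than nested lists.

```python
NUM_CLASSES = 43  # number of GTSRB traffic sign classes


def normalize(image):
    """Scale 8-bit pixel values to [0, 1] by dividing by 255.0."""
    return [[[channel / 255.0 for channel in pixel] for pixel in row]
            for row in image]


def one_hot(label, num_classes=NUM_CLASSES):
    """Return a one-hot vector with a 1.0 at the index of the class label."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} is outside [0, {num_classes})")
    vector = [0.0] * num_classes
    vector[label] = 1.0
    return vector


# Hypothetical 2x2 RGB image, used only to demonstrate the scaling.
tiny_image = [[[0, 128, 255], [255, 255, 255]],
              [[64, 64, 64], [0, 0, 0]]]
scaled = normalize(tiny_image)
encoded = one_hot(14)
print(scaled[0][0], encoded.index(1.0))
```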

Data augmentation techniques were applied to the training dataset to enhance model generalization and reduce overfitting. Transformations included:

Rotation: Random rotations up to 10 degrees.

Shifts: Horizontal and vertical translations up to 10% of the image dimensions.

Zoom: Random zooming by up to 10%.

These augmentations created diverse variations of training samples, helping the model learn robust features.
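As a rough illustration of the augmentation ranges listed above, the sketch below samples one random set of transformation parameters and applies a whole-pixel translation to a small grid. It assumes the ranges are symmetric (for example, rotations between -10 and +10 degrees); the function names and the zero-fill padding are illustrative choices, not details taken from the paper.

```python
import random

MAX_ROTATION_DEG = 10.0    # "up to 10 degrees" (assumed symmetric)
MAX_SHIFT_FRACTION = 0.10  # "up to 10% of the image dimensions"
MAX_ZOOM_FRACTION = 0.10   # "up to 10%"


def sample_augmentation(width, height, rng):
    """Draw one random set of augmentation parameters within the stated ranges."""
    return {
        "rotation_deg": rng.uniform(-MAX_ROTATION_DEG, MAX_ROTATION_DEG),
        "shift_x_px": rng.uniform(-MAX_SHIFT_FRACTION, MAX_SHIFT_FRACTION) * width,
        "shift_y_px": rng.uniform(-MAX_SHIFT_FRACTION, MAX_SHIFT_FRACTION) * height,
        "zoom": rng.uniform(1.0 - MAX_ZOOM_FRACTION, 1.0 + MAX_ZOOM_FRACTION),
    }


def shift_image(image, dx, dy, fill=0):
    """Translate a 2-D grid by whole pixels, padding exposed cells with `fill`."""
    height, width = len(image), len(image[0])
    shifted = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            nx, ny = x + dx, y + dy
            if 0 <= nx < width and 0 <= ny < height:
                shifted[ny][nx] = image[y][x]
    return shifted


rng = random.Random(0)
params = sample_augmentation(width=32, height=32, rng=rng)
moved = shift_image([[1, 2], [3, 4]], dx=1, dy=0)
print(params, moved)
```

Because a fresh parameter set is drawn for every training sample, the model rarely sees exactly the same image twice across epochs.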

A Convolutional Neural Network (CNN) was designed with the following layers:

Convolutional Layers: Extracted spatial features using banks of 32 and 64 filters, activated by ReLU functions.

Max-Pooling Layers: Reduced spatial dimensions while retaining important features.

Dropout Layers: Prevented overfitting by randomly deactivating 25% to 50% of neurons during training.

Fully Connected Layers: Combined features into high-level representations, with a final softmax layer for classification into 43 categories.
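To make the role of the final softmax layer concrete, the following sketch converts 43 raw output scores into class probabilities and computes the categorical cross-entropy against a one-hot target. The logits used here are made-up values, and the helper names are assumptions; a deep learning framework would normally provide these operations.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1 (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot_target, probabilities, eps=1e-12):
    """Loss for one sample: negative log of the probability of the true class."""
    return -sum(t * math.log(max(p, eps))
                for t, p in zip(one_hot_target, probabilities))


def predict_class(probabilities):
    """Return the index of the most probable class."""
    return max(range(len(probabilities)), key=lambda i: probabilities[i])


# Hypothetical logits for the 43 classes, with class 14 scored highest.
logits = [0.0] * 43
logits[14] = 5.0
probs = softmax(logits)
target = [0.0] * 43
target[14] = 1.0
loss = categorical_cross_entropy(target, probs)
print(predict_class(probs), round(loss, 4))
```

An untrained network that outputs equal scores for all classes would incur a loss of ln(43) per sample, which gives a useful reference point when reading loss curves.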

The model was trained using the Adam optimizer, which adapts the learning rate during training for faster convergence. The following parameters were used:

Batch Size: 32

Epochs: 15

Loss Function: Categorical cross-entropy
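The way Adam adapts its step size can be sketched on a one-dimensional toy problem. The code below follows the standard Adam update rule with its usual default decay rates; the objective f(x) = x², the learning rate of 0.1, and the step count are illustrative assumptions and are not the settings used to train the network.

```python
import math


def adam_minimize(grad_fn, x0, lr=0.001, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=100):
    """Minimize a scalar function with Adam, given its gradient function."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy objective f(x) = x^2 with gradient 2x, starting from x = 1.0.
x_after_one_step = adam_minimize(lambda x: 2.0 * x, x0=1.0, lr=0.1, steps=1)
x_final = adam_minimize(lambda x: 2.0 * x, x0=1.0, lr=0.1, steps=200)
print(x_after_one_step, x_final)
```

Dividing by the root of the squared-gradient average is what makes the effective step size per parameter adaptive: on the first step the update magnitude is approximately the learning rate itself, regardless of the gradient's scale.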

Validation performance was monitored to prevent overfitting, and early stopping was employed when the validation loss plateaued.
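A simple patience-based rule is one common way to implement early stopping on a plateauing validation loss. The sketch below assumes a patience of 3 epochs and uses made-up loss values; the paper does not report the patience or threshold that was actually used.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the 1-based epoch at which training would stop.

    Training stops once the validation loss has failed to improve on the
    best value seen so far for `patience` consecutive epochs. Returns None
    if that never happens.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return None


# Hypothetical validation losses that plateau after the fourth epoch.
plateau = [0.34, 0.20, 0.10, 0.05, 0.05, 0.06, 0.05, 0.05]
stop_epoch = early_stopping_epoch(plateau, patience=3)
print(stop_epoch)
```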

The trained model was evaluated on the hold-out test set, and performance metrics such as accuracy, precision, recall, and a confusion matrix were calculated. These metrics provided insights into the model's ability to generalize to unseen data.
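The relationship between the confusion matrix and the derived metrics can be shown with a small sketch. The three-class labels below are invented for illustration (the actual system has 43 classes), and the function names are assumptions.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Build a matrix where entry [t][p] counts samples of class t predicted as p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def accuracy(matrix):
    """Fraction of all samples that lie on the diagonal."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


def precision_recall(matrix, cls):
    """Per-class precision (column-wise) and recall (row-wise)."""
    true_positives = matrix[cls][cls]
    predicted = sum(row[cls] for row in matrix)
    actual = sum(matrix[cls])
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


# Hypothetical labels for a 3-class toy problem.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(cm, accuracy(cm), precision_recall(cm, 1))
```

Per-class precision and recall are especially informative for traffic signs, since overall accuracy can hide poor performance on rare classes.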

A graphical user interface (GUI) was developed using the Tkinter library to classify traffic signs based on images provided by the user. A pre-trained Keras model was loaded to predict traffic sign classes, with a dictionary mapping numerical predictions to descriptive labels. The GUI allows users to upload an image, which is preprocessed to match the model's input size before being classified. The prediction result, along with the uploaded image, is displayed for the user.
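The dictionary lookup that turns a numerical prediction into a descriptive label can be sketched as below. Only a small illustrative subset of the 43 classes is shown; the class IDs follow the commonly used GTSRB numbering, but the exact label strings and the function name are assumptions rather than the ones used in the GUI.

```python
# Illustrative subset only; the full mapping would cover all 43 classes.
# Label strings are assumed, not taken from the paper.
CLASS_NAMES = {
    0: "Speed limit (20km/h)",
    1: "Speed limit (30km/h)",
    13: "Yield",
    14: "Stop",
    17: "No entry",
}


def describe_prediction(probabilities, class_names=CLASS_NAMES):
    """Map a vector of class probabilities to (class_id, descriptive label)."""
    class_id = max(range(len(probabilities)), key=lambda i: probabilities[i])
    label = class_names.get(class_id, f"Unknown class {class_id}")
    return class_id, label


# Hypothetical model output in which class 14 is the most probable.
fake_output = [0.01] * 43
fake_output[14] = 0.58
result = describe_prediction(fake_output)
print(result)
```

Falling back to a generic label for unmapped class IDs keeps the interface from failing if the dictionary is incomplete.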

Fig.2(a) GUI to select and upload an image from the dataset in order to recognise the traffic sign and understand its meaning.

Fig.2(b) GUI to classify the traffic sign image (after clicking on classify image, the GUI provides the meaning of the specific sign).

Fig.2(c) GUI gives the meaning of the traffic sign that the user uploads.

Table No.1 Efficiency of the model

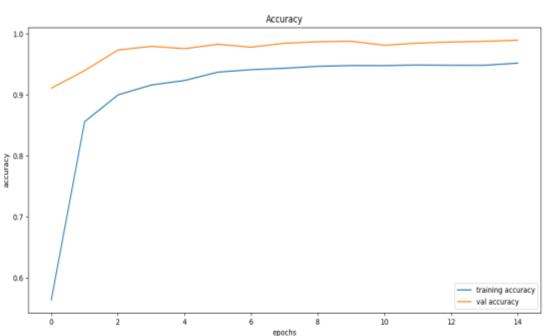

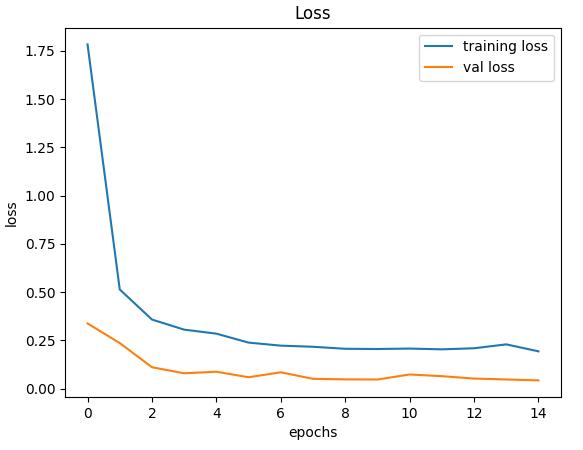

Table No.1 highlights the training and validation performance of the model across 15 epochs, showcasing its progression in accuracy and efficiency. The training loss starts at 1.7833 during the first epoch and consistently decreases to 0.1927 by the fifteenth epoch, reflecting a steady improvement in the model's ability to minimize error during training. Simultaneously, the training accuracy improves significantly, rising from an initial 56.40% to 95.18% in the final epoch, indicating that the model learns to predict the training dataset correctly with increasing precision.

On the validation side, the validation loss begins at 0.3372 in epoch 1 and rapidly declines to 0.0466 by epoch 10, before stabilizing near this value in subsequent epochs. This trend demonstrates that the model not only performs well on the training dataset but also generalizes effectively to unseen data. The validation accuracy mirrors this pattern, starting at an already high 91.09% in the first epoch and reaching a peak of 98.94% in the fifteenth epoch. Such high validation accuracy indicates that the model is highly efficient in recognizing patterns and making predictions for the validation dataset, showcasing its robustness and reliability.

The steady improvement in training and validation accuracy, combined with the decreasing losses, highlights the model's overall efficiency. Validation accuracy exceeds training accuracy throughout, which is expected here because dropout and data augmentation are active only during training and make the training task harder than the validation task. The absence of a validation shortfall suggests that the model is not overfitting but rather achieves a good balance between learning the training data and generalizing to new data. Furthermore, the model's performance stabilizes in the later epochs (10–15), signifying convergence.

In summary, the model demonstrates high accuracy and efficiency throughout the training process. It achieves a validation accuracy of 98.94% by the final epoch, showcasing its ability to make precise and reliable predictions. The consistently low validation loss and high validation accuracy confirm that the model is not only well-optimized but also capable of handling real-world data effectively, making it a strong choice for the given task. This robust performance underscores the effectiveness of the training methodology and the potential of the model for practical applications.

Fig.3(a) and Fig.3(b) show the graphical representation of training accuracy (95.18%) vs. validation accuracy (98.94%) and training loss (0.1927) vs. validation loss (0.0421), respectively.

The developed traffic sign classification system, featuring a user-friendly Tkinter-based GUI and a pre-trained Keras model, demonstrates high accuracy and reliability. By the final epoch, the model achieves 95.18% training accuracy and 98.94% validation accuracy, with minimal validation loss (0.0421), showcasing effective generalization to unseen data. The GUI allows seamless image upload, preprocessing, and classification, providing clear results to users. The system's robust performance, reflected in steady improvements across epochs and minimal overfitting, makes it highly suitable for practical applications like driver assistance, traffic monitoring, and autonomous vehicles.

The system can be enhanced with real-time video processing for dynamic environments, making it suitable for autonomous vehicles and ADAS. Expanding the dataset to include diverse traffic signs globally and integrating advanced models like transformers or MobileNet can improve accuracy and efficiency. Deployment on edge devices, integration with GPS for real-time guidance, and adding multi-language and accessibility features can further broaden its applicability and user-friendliness.
