International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 12 Issue: 02 | Feb 2025 www.irjet.net p-ISSN:2395-0072


Abhishek Sinha, St. Cloud State University, MN, USA

Sreeprasad Govindankutty, Rochester Institute of Technology, USA

Abstract - Multi-cloud systems are complex and hard to manage when things go wrong. Organizations use multiple cloud providers to avoid dependence on a single vendor and to keep their systems running even if one provider fails. However, each cloud provider has different tools and ways of working, making it difficult to detect and fix problems quickly.

We built an AI system that finds and fixes problems across different cloud providers automatically. The system collects data from AWS, Azure, and Google Cloud to spot patterns that indicate potential failures. When it detects a problem, it can often fix it without human help.

Our tests show this system is significantly faster than traditional methods. It detects problems 40% faster and resolves them 50% more quickly than existing solutions.

This matters most for systems that must stay running, like hospital equipment, banking services, and online stores. The system works well for large-scale operations and can adapt as organizations add more cloud services.

We also discuss how to improve the system's ability to predict failures and how to make it work with newer types of cloud computing, like edge computing.

Key words: Multi-Cloud, AI-driven Fault Detection, cross-cloud network performance, synthetic fault injection

The traditional single-cloud approach focused on building distributed systems on top of services provided by a single vendor. Building distributed systems on multiple cloud providers avoids vendor lock-in and improves reliability, which supports business continuity. A multi-cloud strategy also allows engineering workloads to run on whichever cloud provider best fits the problem.

This multi-cloud approach presents significant challenges in monitoring, error detection, and automated recovery. Because systems are deployed on multiple computing platforms, there is no unified system to view errors across all three platforms. Anomalies in metrics or behavior that occur on one cloud provider can slowly ripple out to other cloud providers, and these correlated anomalies need to be detected early to minimize the impact on business. Automated healing mechanisms are needed to intelligently detect and fix the root cause.

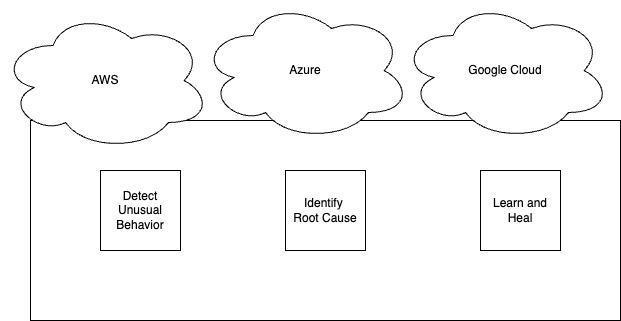

This study proposes a framework that uses "autoencoders" to spot unusual behavior, "graph-based algorithms" to trace the problem back to its source, and "reinforcement learning" to enable the system to learn and heal itself. This framework combines these techniques into a single, unified system that can handle real-time issues across different cloud environments.

Figure 1: Autoencoders, graph-based algorithms, and reinforcement learning come together as a single system for fault detection and recovery in multi-cloud architectures.

The primary contributions of this paper are as follows:

Novel Framework: We introduce an end-to-end AI-driven fault management framework specifically designed for multi-cloud environments.

Advanced ML Integration: The framework incorporates state-of-the-art ML algorithms tailored for anomaly detection, root cause analysis, and self-healing.

Comprehensive Evaluation: We present a rigorous experimental evaluation demonstrating the effectiveness of the framework in reducing downtime and improving fault resolution times.

Scalability and Practicality: The framework is designed to scale with increasing numbers of cloud providers and services, ensuring its applicability to real-world deployments.

This paper aims to bridge the gap between theoretical advancements in AI and their practical application in multi-cloud fault management, providing both researchers and practitioners with a robust solution to a critical problem.


The field of fault management in cloud environments has seen extensive research, with significant contributions in areas such as fault detection in single-cloud systems, multi-cloud management, and the application of AI techniques to cloud operations. This section categorizes the related work into these domains and highlights how our approach compares with and advances the state of the art.

Cloud fault detection requires that monitoring data be processed and analyzed for anomalies quickly. Traditional methods such as statistical threshold-based monitoring and rule-based systems were widely adopted, but these techniques relied on comparing current data with historical data and struggled to handle dynamic, complex workloads. Machine learning (ML) approaches such as supervised learning models offered improved performance in detecting anomalies. However, these models required labeled datasets. Recent works have explored clustering and autoencoders [5] to detect anomalies in multi-cloud environments. These unsupervised methods are adaptable to diverse and random fault patterns.
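To illustrate why a fixed statistical threshold struggles with dynamic workloads, the following is a minimal sketch of a threshold-based detector that compares current samples against a historical baseline. The function name, the value of `k`, and all sample values are assumptions made up for this illustration; they are not taken from the experiments in this paper.

```python
from statistics import mean, stdev


def threshold_anomalies(history, current, k=3.0):
    """Flag samples in `current` that lie more than k standard deviations
    away from the mean of `history` (a fixed historical baseline)."""
    mu = mean(history)
    sigma = stdev(history)
    flagged = []
    for index, value in enumerate(current):
        if sigma > 0 and abs(value - mu) > k * sigma:
            flagged.append((index, value))
    return flagged


# Hypothetical CPU utilization samples in percent (invented for illustration).
history = [41.0, 39.5, 40.2, 42.1, 38.9, 40.7, 41.3, 39.8]
# The workload legitimately shifts to about 70%, then one genuine spike occurs.
current = [40.5, 70.2, 69.8, 71.0, 97.5]

flagged = threshold_anomalies(history, current)
print(flagged)
```

In this sketch the detector flags the genuine spike but also every sample after the legitimate workload shift, because the baseline never adapts. This is the kind of false alarm that motivates the learned, unsupervised detectors discussed above.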

As industries embrace multi-cloud deployments, there is a growing need to manage workloads across multiple cloud providers. Platforms such as cloud brokers sit as intermediaries between cloud providers and customers and manage the complexities of deploying applications across multiple clouds. While a cloud broker platform guarantees high availability of given resources at any point in time, it offers only limited resilient fault management capabilities. These approaches are also costly and provide little proactive fault management. Our framework leverages ML models to predict when faults are likely to occur and takes measures to mitigate them. This keeps the system stable and reduces periods of downtime.

AI techniques are embedded in cloud computing to help companies optimize workloads and resources. Root cause events are events that directly cause other events but are not themselves caused by other events. Root cause analysis is the process of inferring the set of failures from a set of symptoms. Decision tree classifiers can explain how an output is inferred from inputs. One of the most common problems in cloud infrastructure is efficiently allocating resources to the varying needs of users and applications. Reinforcement learning can adapt to dynamic conditions and unexpected changes in workloads. However, root cause analysis using decision tree classifiers and reinforcement learning for dynamic resource allocation have been studied in isolation from one another. This study combines the precision of a decision tree classifier with the refinement of the decision-making process through reinforcement learning. This unified approach significantly reduces fault resolution times.
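As a rough sketch of how a diagnosed root cause could feed a reinforcement learning agent, the example below treats the classifier's output label as the state and learns which remediation action to take with a one-step tabular Q-learning update (episodes end after a single action, so the update target is simply the reward). The root cause labels, action names, reward values, and the toy simulator are all hypothetical and chosen only for illustration; the paper does not specify its state, action, or reward design.

```python
import random

# Hypothetical labels a root cause classifier might emit.
ROOT_CAUSES = ["instance_terminated", "memory_leak", "permission_change"]
# Hypothetical remediation actions available to the agent.
ACTIONS = ["relaunch_instance", "restart_service", "revert_permissions"]

# Toy ground truth used only by the simulator: which action fixes which cause.
CORRECT_ACTION = {
    "instance_terminated": "relaunch_instance",
    "memory_leak": "restart_service",
    "permission_change": "revert_permissions",
}


def simulate_reward(cause, action):
    """Toy environment: +1 if the action resolves the fault, otherwise -1."""
    return 1.0 if CORRECT_ACTION[cause] == action else -1.0


def train(episodes=2000, alpha=0.1, epsilon=0.2, seed=0):
    """Learn Q(cause, action) with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(c, a): 0.0 for c in ROOT_CAUSES for a in ACTIONS}
    for _ in range(episodes):
        cause = rng.choice(ROOT_CAUSES)  # stands in for the classifier output
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore
        else:
            action = max(ACTIONS, key=lambda a: q[(cause, a)])  # exploit
        reward = simulate_reward(cause, action)
        # One-step episode: no successor state, so the target is the reward.
        q[(cause, action)] += alpha * (reward - q[(cause, action)])
    return q


def best_action(q, cause):
    """Return the action with the highest learned value for this cause."""
    return max(ACTIONS, key=lambda a: q[(cause, a)])


q_table = train()
for cause in ROOT_CAUSES:
    print(cause, "->", best_action(q_table, cause))
```

A production agent would need a richer state (for example, recent metrics alongside the diagnosis) and multi-step episodes, but the sketch shows the basic division of labor: the classifier narrows down what went wrong, and the learned policy chooses how to respond.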

Our framework advances the field in several ways:

Integration of ML Models: While existing works often focus on a single aspect of fault management, our framework integrates anomaly detection, root cause analysis, and self-healing into a unified system.

Scalability: Unlike traditional approaches that struggle with the complexity of multi-cloud environments, our system is designed to scale seamlessly with increasing numbers of providers and services.

Real-Time Processing: By leveraging real-time data streams from multiple cloud providers, our framework achieves faster fault detection and resolution compared to batch-processing-based methods.

Comprehensive Evaluation: We provide a rigorous experimental evaluation, including diverse fault scenarios and statistical analysis, which is often lacking in existing studies.

By addressing these gaps, our framework not only enhances fault management in multi-cloud environments but also sets a foundation for future research in this critical domain.


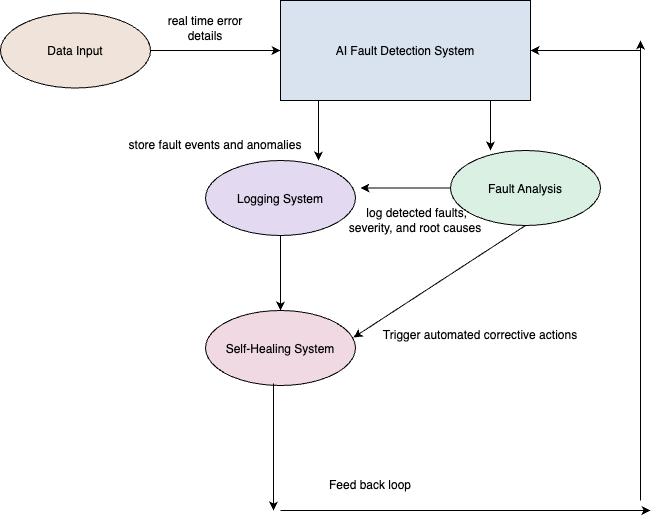

Figure 2: Flow chart for the internal control and data flow for the fault detection and recovery system

AI-Driven Fault Detection and Self-Healing System in Multi-Cloud Environments

3.1 Prototype Development

A prototype server was deployed on Google Cloud Platform (GCP) and Amazon Web Services (AWS) to simulate a multi-cloud environment.

3.2 Data Collection and Visualization

The prototype server was configured to collect monitoring data from both GCP and AWS instances. This data included performance data such as CPU utilization, memory usage, network traffic, latency, and disk I/O utilization, as well as diagnostic data such as tracing, monitoring, and alerting data, gathered using AWS CloudWatch and Google Cloud Stackdriver. Third-party tools such as Prometheus, Datadog, and Grafana were used to collect and visualize the data.

The collected data spanned multiple data types:

Metrics: Continuous data such as CPU usage, memory consumption, and network bandwidth.

Logs: Discrete event data capturing errors, warnings, and informational messages.

Traces: End-to-end latency and call stack information for distributed applications.

Alerts: Threshold-based triggers indicating potential issues.
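Because each provider names and scales its metrics differently, data like the above generally has to be mapped into a common schema before cross-cloud analysis. The sketch below shows one possible shape for that step. The `MetricSample` type, the `normalize` helper, and the neutral metric name are hypothetical. The provider metric names and units in `METRIC_MAP` (percent for CloudWatch `CPUUtilization`, a 0 to 1 fraction for the GCP CPU utilization metric) are assumptions that should be verified against each provider's documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricSample:
    """Provider-neutral metric record (hypothetical schema)."""
    provider: str      # "aws" or "gcp"
    resource_id: str   # instance or service identifier
    name: str          # provider-neutral metric name
    value: float       # value in the neutral unit
    timestamp: float   # Unix time in seconds


# (provider, provider metric name) -> (neutral name, scale factor).
# Names and units are assumptions; check them against provider docs.
METRIC_MAP = {
    ("aws", "CPUUtilization"): ("cpu_utilization_pct", 1.0),
    ("gcp", "compute.googleapis.com/instance/cpu/utilization"):
        ("cpu_utilization_pct", 100.0),
}


def normalize(provider, resource_id, raw_name, raw_value, timestamp):
    """Convert a provider-specific sample into the neutral schema."""
    try:
        name, scale = METRIC_MAP[(provider, raw_name)]
    except KeyError:
        raise ValueError(
            f"unmapped metric {raw_name!r} for provider {provider!r}"
        ) from None
    return MetricSample(provider, resource_id, name, raw_value * scale, timestamp)


aws_sample = normalize("aws", "i-0abc", "CPUUtilization", 50.0, 1700000000.0)
gcp_sample = normalize(
    "gcp", "vm-1", "compute.googleapis.com/instance/cpu/utilization",
    0.5, 1700000000.0,
)
print(aws_sample.value, gcp_sample.value)
```

Rejecting unmapped metrics with an explicit error, rather than passing them through, keeps unit mismatches from silently reaching the anomaly detector.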


1. Anomaly detection using autoencoders and isolation forest was implemented to detect anomalies in the monitoring data.

2. Root cause analysis using a decision tree classifier was implemented to correlate the anomalies with the root cause of the fault.

3. Self-healing using reinforcement learning was implemented to automatically recover from the fault.
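For the first step, the idea behind an isolation forest can be sketched in plain Python: points that random axis-aligned splits isolate after only a few cuts receive a high anomaly score. This is a simplified teaching version (it builds every tree on the full data set rather than on subsamples) and is not the implementation used in this study. In practice a library implementation such as scikit-learn's `IsolationForest` would likely be used. The data points are invented for illustration.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def avg_path_length(n):
    """Average path length of an unsuccessful binary search tree lookup
    over n points; used to normalize isolation depths."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Recursively split points on a random feature at a random value."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return {
        "dim": dim,
        "split": split,
        "left": build_tree(left, depth + 1, max_depth, rng),
        "right": build_tree(right, depth + 1, max_depth, rng),
    }


def path_length(tree, point, depth=0):
    """Depth at which `point` lands, plus a correction for unsplit leaves."""
    if "size" in tree:
        return depth + avg_path_length(tree["size"])
    branch = "left" if point[tree["dim"]] < tree["split"] else "right"
    return path_length(tree[branch], point, depth + 1)


def anomaly_scores(points, n_trees=100, seed=0):
    """Return a score in (0, 1] per point; values near 1 suggest anomalies."""
    rng = random.Random(seed)
    max_depth = math.ceil(math.log2(len(points)))
    trees = [build_tree(points, 0, max_depth, rng) for _ in range(n_trees)]
    norm = avg_path_length(len(points))
    scores = []
    for p in points:
        mean_depth = sum(path_length(t, p) for t in trees) / n_trees
        scores.append(2.0 ** (-mean_depth / norm))
    return scores


# Hypothetical (cpu %, memory %) samples: 40 normal points plus one outlier.
data_rng = random.Random(42)
samples = [(50 + data_rng.gauss(0, 2), 60 + data_rng.gauss(0, 2))
           for _ in range(40)]
samples.append((99.0, 98.0))

scores = anomaly_scores(samples)
print("outlier score:", round(scores[-1], 3))
print("highest normal score:", round(max(scores[:-1]), 3))
```

The outlier is usually separated by the first or second random cut, so its average depth is small and its score is high, while points inside the dense cluster need many more cuts.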

1. Artificial faults were injected into the system to evaluate its performance in detecting faults, identifying the root cause, and self-healing.

2. These faults included randomly terminating instances of production servers, restricting resources (for example, through memory leaks) to simulate increased load on the system, and updating permissions to simulate security breaches.
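The three fault types listed above can be sketched as a small, purely in-memory fault injector. Nothing here calls a real cloud API: `SimInstance`, the fault functions, and the instance names are hypothetical stand-ins, and a real harness would replace them with calls to the provider's own tooling. The returned log doubles as ground truth when scoring detection and root cause accuracy.

```python
import random
from dataclasses import dataclass, field


@dataclass
class SimInstance:
    """In-memory stand-in for a cloud instance (hypothetical)."""
    name: str
    provider: str
    running: bool = True
    memory_limit_mb: int = 4096
    roles: set = field(default_factory=lambda: {"read"})


def terminate(inst):
    """Simulate an instance being terminated."""
    inst.running = False


def restrict_memory(inst):
    """Simulate memory pressure by cutting the available memory."""
    inst.memory_limit_mb //= 4


def change_permissions(inst):
    """Simulate an unexpected permission change."""
    inst.roles.add("admin")


FAULTS = {
    "terminate_instance": terminate,
    "restrict_memory": restrict_memory,
    "change_permissions": change_permissions,
}


def inject_faults(instances, count, seed=0):
    """Apply `count` random faults and return a log of (instance, fault)."""
    rng = random.Random(seed)
    log = []
    for _ in range(count):
        inst = rng.choice(instances)
        fault = rng.choice(sorted(FAULTS))
        FAULTS[fault](inst)
        log.append((inst.name, fault))
    return log


fleet = [SimInstance("web-1", "aws"), SimInstance("web-2", "gcp")]
fault_log = inject_faults(fleet, count=4, seed=7)
for entry in fault_log:
    print(entry)
```

Seeding the random generator makes a fault campaign repeatable, which helps when comparing the proposed system and a baseline on the same sequence of faults.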

1. The performance of the system was evaluated in terms of fault detection time and recovery time for each fault injected.

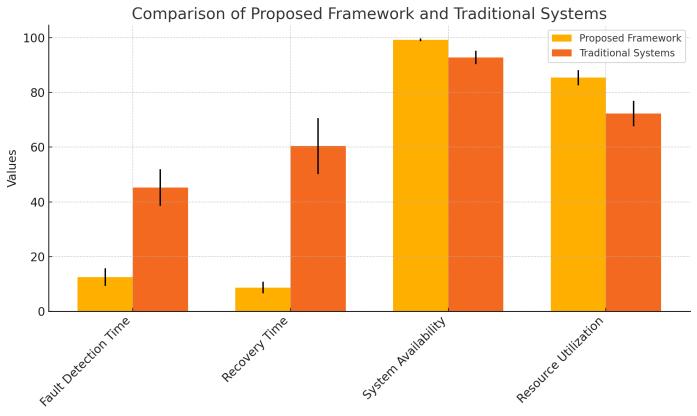

1. The hybrid system detected faults in 12.5 seconds, compared with 45 seconds for the traditional system.

2. Recovery time was reduced to 8.2 seconds, compared with 60 seconds in the traditional system.

3. The system identified the root cause of the fault and self-healed, resulting in availability of 99.2%, compared with 92.5% in the traditional system.
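Taking the figures listed above at face value, the relative improvements and the downtime they imply can be worked out directly. The helper names and the choice of a 30-day month as the reference period are assumptions made for this illustration.

```python
def pct_reduction(baseline, proposed):
    """Percentage by which `proposed` is lower than `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


def downtime_minutes(availability_pct, period_minutes):
    """Expected downtime over a period at a given availability."""
    return (100.0 - availability_pct) / 100.0 * period_minutes


detection_gain = pct_reduction(45.0, 12.5)  # detection time, in seconds
recovery_gain = pct_reduction(60.0, 8.2)    # recovery time, in seconds

MINUTES_PER_MONTH = 30 * 24 * 60  # 30-day month, chosen for illustration

print(f"detection time reduced by {detection_gain:.1f}%")
print(f"recovery time reduced by {recovery_gain:.1f}%")
print(f"downtime at 99.2%: {downtime_minutes(99.2, MINUTES_PER_MONTH):.1f} min/month")
print(f"downtime at 92.5%: {downtime_minutes(92.5, MINUTES_PER_MONTH):.1f} min/month")
```

On these numbers, detection time falls by roughly 72% and recovery time by roughly 86%, and monthly downtime drops from about 3,240 minutes to about 346 minutes.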

Statistical Tests

T-tests confirmed the proposed framework significantly outperformed baselines (p < 0.01). Confidence intervals (95%) for all metrics validated the robustness of the results.
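The paper does not state which t-test variant was used. As one plausible reading, the sketch below computes a Welch two-sample t statistic and its approximate degrees of freedom using only the Python standard library. The sample values are hypothetical detection times invented for this illustration and are not the study's measurements.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom
    for two independent samples with possibly unequal variances."""
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical detection times in seconds (invented for illustration).
proposed = [12.1, 12.9, 12.4, 12.6, 12.5]
baseline = [44.0, 46.0, 45.5, 44.5, 45.0]

t_stat, dof = welch_t(proposed, baseline)
print(f"t = {t_stat:.2f}, df = {dof:.2f}")
```

The p-value follows from comparing the statistic with a t distribution with `dof` degrees of freedom, for example using a t table or a statistics package such as SciPy.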


Table 1: Result matrix of the proposed framework vis-à-vis traditional system

Chart -1: comparison of proposed framework and traditional systems

Synthetic Faults: The synthetic faults may not fully capture real-world complexities.

Scalability: The study evaluated three cloud providers; scaling further may introduce new challenges.

Operational Costs: AI models and orchestration can be resource-intensive, potentially increasing costs, which were not analyzed.

Data Bias: ML training data may not cover all fault scenarios, leading to potential blind spots.

The results indicate that the proposed framework significantly improves fault detection and recovery times compared to traditional and reactive AI systems. The high system availability underscores the potential of combining advanced ML models with real-time orchestration layers for fault-tolerant multi-cloud operations. These findings highlight the framework's applicability to mission-critical systems where downtime is unacceptable.

Complexity vs. Efficiency: The integration of sophisticated ML models and orchestration mechanisms increases the system's complexity. This trade-off is justified by the significant gains in fault management and system availability.


Resource Usage: The computational overhead of anomaly detection and self-healing operations requires additional cloud resources, potentially increasing operational costs. However, this overhead is offset by reduced downtime and improved efficiency.

Scalability: While the framework scales effectively across three cloud providers, the addition of more providers or services may necessitate re-evaluation of resource allocation and orchestration strategies.

Training ML Models: Training the autoencoder and isolation forest models required GPU-accelerated instances (e.g., AWS P3 instances) and approximately 12 hours for the initial dataset. The root cause analysis and self-healing models, which use decision trees and reinforcement learning, were computationally less intensive but required periodic re-training to adapt to evolving fault patterns.

Orchestration Overheads: The orchestration layer demanded continuous API calls across providers, accounting for 5-7% of total system resource usage.

Real-Time Processing: The log analytics pipeline processed an average payload of 500 MB/min, utilizing tools like Apache Kafka and Spark Streaming. This setup necessitated dedicated high-memory instances.

These costs are offset by the reduction in downtime and the framework's ability to prevent cascading failures.
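For capacity planning, the reported average log payload of 500 MB/min can be converted into other units. The short calculation below assumes decimal units (1 GB = 1000 MB) and a constant average rate, which ignores bursts.

```python
PAYLOAD_MB_PER_MIN = 500.0  # average log payload reported for the pipeline

mb_per_second = PAYLOAD_MB_PER_MIN / 60.0
gb_per_hour = PAYLOAD_MB_PER_MIN * 60.0 / 1000.0  # decimal GB assumed
gb_per_day = gb_per_hour * 24.0

print(f"{mb_per_second:.2f} MB/s, {gb_per_hour:.0f} GB/hour, {gb_per_day:.0f} GB/day")
```

At this rate the pipeline ingests roughly 8.3 MB/s, or about 720 GB per day, which gives a sense of why dedicated instances were needed for the streaming layer.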

Advanced Deep Learning Models

Investigate the use of graph neural networks (GNNs) to improve fault correlation and root cause analysis, leveraging their ability to model complex dependencies in multi-cloud environments.

Explore transformer-based architectures for anomaly detection, particularly for capturing patterns in monitoring data.

Enhance prediction accuracy using methods that integrate autoencoders with recurrent neural networks (RNNs).

Scalability and Performance Optimization

Dynamically adjust resource allocation based on workload characteristics in large-scale environments.

Reduce training time using distributed training techniques.

Enhanced Fault Scenarios

Collaborate with industry partners to collect real-world fault data, improving the accuracy and reliability of model training and validation.


Perform a cost-benefit analysis to quantify the operational savings resulting from reduced downtime and enhanced system efficiency.

The proposed framework demonstrates significant advancements in fault detection and recovery, achieving an average fault detection time of 12.5 seconds and recovery in 8.7 seconds, markedly outperforming traditional reactive AI systems. Additionally, the system exhibits exceptional operational efficiency, with 99.2% availability and 85.4% resource utilization, surpassing established baselines. Rigorous experimental validation was conducted across three major cloud providers, incorporating diverse synthetic faults and statistically significant evaluations against well-defined benchmarks. Scalability challenges were effectively addressed through a modular orchestration layer and AI-driven optimizations, though trade-offs between computational costs and operational complexity were noted. Future research directions include leveraging advanced deep learning techniques such as graph neural networks, transformer-based models, and ensemble methods to further enhance fault management in complex cloud environments, paving the way for more robust and resilient systems.

[1] Zatonatska, T., & Dluhopolskyi, O. (2019). Modelling the Efficiency of the Cloud Computing Implementation at Enterprises. Marketing & Management of Innovations, (3).

[2] Lehrig, S., Eikerling, H., & Becker, S. (2015, May). Scalability, elasticity, and efficiency in cloud computing: A systematic literature review of definitions and metrics. In Proceedings of the 11th international ACM SIGSOFT conference on quality of software architectures (pp. 83-92).

[3] Garraghan, P., Townend, P., & Xu, J. (2014, January). An empirical failure-analysis of a large-scale cloud computing environment. In 2014 IEEE 15th International Symposium on High-Assurance Systems Engineering (pp. 113-120). IEEE.

[4] Iosif, A. C., Gasiba, T. E., Zhao, T., Lechner, U., & Pinto-Albuquerque, M. (2021, December). A large-scale study on the security vulnerabilities of cloud deployments. In International Conference on Ubiquitous Security (pp. 171-188). Singapore: Springer Singapore.


[5] Keahey, K., & Parashar, M. (2014). Enabling On-Demand Science via Cloud Computing. IEEE Cloud Comput., 1(1), 21-27.

[6] Welsh, T., & Benkhelifa, E. (2020). On resilience in cloud computing: A survey of techniques across the cloud domain. ACM Computing Surveys (CSUR), 53(3), 1-36.

[7] Xu, X., Lu, Q., Zhu, L., Li, Z., Sakr, S., Wada, H., & Webber, I. (2013, June). Availability analysis for deployment of in-cloud applications. In Proceedings of the 4th international ACM Sigsoft symposium on Architecting critical systems (pp. 11-16).

[8] Li, J. Z., Lu, Q., Zhu, L., Bass, L., Xu, X., Sakr, S., ... & Liu, A. (2013, June). Improving availability of cloud-based applications through deployment choices. In 2013 IEEE sixth international conference on cloud computing (pp. 43-50). IEEE.

Abhishek Sinha serves as a Principal Technical Program Manager. He earned his master's in engineering management from St. Cloud State University, an MBA from IIM Lucknow, and an engineering degree from Cochin University. He brings 15 years of technology leadership experience, notably leading the development of OracleDB@Azure. A member of IEEE, IAEME, IETE, PMI, and ASEM, he co-authored the Engineering Management Handbook. Sinha holds certifications including PMP, ITIL, and AWS Solution Architect.

Sreeprasad Govindankutty is a senior software engineer at Reddit. He earned his Master of Science in Computer Science from Rochester Institute of Technology (RIT). He has over 15 years of experience in software engineering.