International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 02 | Feb 2025 www.irjet.net p-ISSN: 2395-0072


Nwabueze, C. E.¹, Nwokorie, E. C.², Onyemauche, U. C.³, Ayogu, I. I.⁴

¹,²,³,⁴ Department of Computer Science, Federal University of Technology Owerri, Nigeria.

Abstract - This research focuses on creating and assessing an artificial intelligence-driven model designed to detect fire incidents. The model was developed to identify fire occurrences in indoor settings using computer vision methodologies and convolutional neural networks (CNN). The CRISP-DM (Cross-Industry Standard Process for Data Mining) framework was applied throughout the project to guide the development and data analytics process. A dataset comprising 5,030 fire and non-fire images was sourced from Kaggle.com (2023). These three-channel images, with a resolution of 224 × 224 pixels, were processed using Python libraries such as TensorFlow, Keras, and NumPy. The model was trained and tested on a binary classification task to distinguish between fire and non-fire scenarios. Performance evaluation revealed a high accuracy rate of 98.77%. Detailed metrics included precision, recall, and F1-scores for both classes: non-fire instances achieved precision, recall, and F1-scores of 98.70%, 99.12%, and 98.70%, respectively, while fire instances recorded scores of 98.18%, 96.43%, and 97.40%, respectively. The confusion matrix provided further insights, with the fire class test set yielding true positives (TP) of 54, false positives (FP) of 1, false negatives (FN) of 2, and true negatives (TN) of 187. Similarly, the non-fire class test set recorded TP as 270, FP as 3, FN as 2, and TN as 5. These findings demonstrate the model's high efficacy in fire incident detection, while also identifying potential areas for enhancement. By integrating artificial intelligence with computer vision, the proposed model has the potential to significantly improve fire safety measures and enable rapid emergency responses.

Key Words: Fire, Fire Detection, Fire Monitoring, Convolutional Neural Network, Computer Vision

Throughout history, fire incidents have posed significant risks to domestic and industrial properties, buildings, sites, and offices [1]. The consequences of fire outbreaks are often catastrophic, leading to substantial loss of lives and property. Beyond these immediate damages, fire incidents have inflicted financial burdens on nations, costing billions of dollars in repairs and reconstruction efforts [2]. To address these challenges, researchers and fire management agencies have continuously developed methods for fire monitoring, detection, and response, using advanced technologies and tools. According to the Northeast Document Conservation Center (NEDCC), a key strategy for fire protection lies in identifying potential fire emergencies early and promptly alerting building occupants and emergency response teams. This critical function is carried out by fire monitoring and detection systems. Depending on factors such as the expected fire scenario, building type, occupancy, and fire severity, these systems are tailored to provide either general or specialized functionality [3]. Fire monitoring involves observing and managing fire risks in a specific location based on parameters such as weather conditions, the state of flammable materials, and potential ignition sources. This process aids in implementing preventive measures and minimizing damage before a fire occurs. It also incorporates mechanisms to predict factors that may trigger a fire outbreak [5]. Fire detection, on the other hand, focuses on identifying fires in their early stages, allowing for the safe evacuation of occupants. When technology is applied to fire detection, a robust and efficient algorithm becomes essential, as it plays a critical role in the overall firefighting process [6].

This study introduces a system designed for fire monitoring and detection using computer vision and convolutional neural networks (CNN). The aim is to enhance conventional fire detection systems, which typically rely on sensors for detecting smoke, temperature changes, and other fire byproducts. Traditional sensor-based systems, while useful, are often constrained by space and location, limiting their efficiency in certain environments [4]. In this research, a cost-effective computer vision approach is employed, using high-definition (HD) cameras to capture real-time video images within the deployment area, enabling the prediction of potential fire situations. A CNN model was trained on a dataset of indoor and outdoor fire images sourced from Kaggle, chosen for its diverse representation to support generalization. The trained model's performance was evaluated on an independent test set, with accuracy, precision, recall, and F1-score used to validate its effectiveness.
Furthermore, the final prototype of the fire detection system was deployed as a user-friendly web-based platform. This platform, developed using Python, HTML, CSS, and the Flask framework, integrates real-time detection capabilities to provide an efficient and practical solution for fire monitoring and detection.
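The paper does not state how per-frame predictions from the camera stream become alerts on the web platform. One common approach, sketched below purely as an assumption, is to raise an alarm only when several recent frames exceed a probability threshold, so that a single misclassified frame does not trigger a false alarm. The class `FrameAlarm` and its default `threshold`, `window`, and `min_votes` values are hypothetical and are not part of the authors' system.

```python
from collections import deque


class FrameAlarm:
    """Turn per-frame fire probabilities into a debounced alarm signal.

    Hypothetical helper: the threshold, window size and vote count are
    illustrative assumptions, not values reported in this study.
    """

    def __init__(self, threshold: float = 0.5, window: int = 5,
                 min_votes: int = 3) -> None:
        self.threshold = threshold
        self.min_votes = min_votes
        # Only the most recent `window` per-frame decisions are kept.
        self.recent = deque(maxlen=window)

    def update(self, fire_probability: float) -> bool:
        """Record one frame's model output; return True if an alarm is due."""
        self.recent.append(fire_probability >= self.threshold)
        # Alarm when at least `min_votes` recent frames were classified as fire.
        return sum(self.recent) >= self.min_votes


# Example: probabilities a classifier might emit for consecutive frames.
alarm = FrameAlarm()
for p in [0.9, 0.2, 0.8, 0.7]:
    print(alarm.update(p))
```

With the defaults, the fourth frame is the first to raise the alarm, because only then have three of the recent frames crossed the threshold. A larger window or vote count trades detection latency for fewer false alarms.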

To date, there is no universally optimal algorithm for fire detection using computer vision; instead, various studies have explored different ways of modeling the fire detection problem, aiming to improve accuracy and minimize false alarms. Existing research shows that numerous algorithms have been developed and proposed for video-based fire detection systems, each striving to improve detection accuracy. Notable works in this domain include contributions by Zhang et al. [7], Hsu et al. [2], Gong et al. [8], and Muhammad et al. [9]. In a recent study, Avazov et al. [10] sought to replace traditional fire-detection systems with vision-based alternatives to improve fire safety using technologies such as digital cameras, computer vision, artificial intelligence, and deep learning. Their research developed a fire detection system capable of identifying small sparks and triggering an alarm within 8 seconds of a fire outbreak. Zhang et al. [7] introduced DeepFireNet, a real-time fire detection framework that combines fire-specific features with convolutional neural networks (CNNs) to analyze real-time video from monitoring systems. Similarly, Gong et al. [8] proposed a video-based fire detection method using multi-feature fusion of flame characteristics. Their preprocessing approach combined motion and color detection, significantly reducing the computation time needed to identify fire candidate pixels. However, despite its innovation, their algorithm was heavily affected by false positives, limiting its effectiveness in real-world scenarios.
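Gong et al.'s exact rules are not reproduced here. As a rough sketch of the general idea of combining motion and color cues to find fire candidate pixels, the function below uses simple frame differencing and an RGB color rule. The function name `fire_candidate_mask`, both thresholds, and the color rule (red above a threshold and R ≥ G > B) are illustrative assumptions, not the published method.

```python
import numpy as np


def fire_candidate_mask(prev_frame: np.ndarray, frame: np.ndarray,
                        motion_thresh: int = 20, red_thresh: int = 150) -> np.ndarray:
    """Boolean mask of pixels that both changed and look flame-colored.

    Frames are H x W x 3 uint8 arrays in RGB order. The color rule
    (R > red_thresh and R >= G > B) and both thresholds are illustrative
    assumptions, not the exact rules used by Gong et al.
    """
    # Cast to a signed type so the subtraction cannot wrap around.
    prev = prev_frame.astype(np.int16)
    curr = frame.astype(np.int16)

    # Motion cue: mean absolute change across the three channels.
    motion = np.abs(curr - prev).mean(axis=2) > motion_thresh

    # Color cue: bright, red-dominant pixels typical of flames.
    r, g, b = curr[..., 0], curr[..., 1], curr[..., 2]
    color = (r > red_thresh) & (r >= g) & (g > b)

    # Only pixels satisfying both cues are passed on as fire candidates.
    return motion & color
```

Restricting later, more expensive analysis to the pixels in this mask is what reduces computation; the simple color rule is also a plausible source of the false positives noted above, since red or orange objects that move can satisfy it.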

3.1 Dataset Description and Source

The dataset used in this study is central to developing an intelligent fire detection model applicable to both indoor and outdoor settings. Table 1 outlines the dataset details, drawn exclusively from Kaggle's "Fire Images Dataset" [11]. This publicly available dataset, approximately 130 MB in size, consists of images organized into three main folders: training, validation, and testing sets. These folders support the structured development and evaluation of the convolutional neural network (CNN) model. The images are divided into two categories, "Fire" and "Non-Fire," enabling the model to distinguish between scenes containing fire and those without. All images are in JPG format and resized to a consistent resolution of 224 × 224 pixels, a standard input size for image recognition tasks. The dataset contains 5,030 images in total, providing a broad basis for training and validating the model. The dataset is mildly imbalanced: across the three subsets, the Non-Fire class contains 2,630 images and the Fire class 2,400. Such imbalance can make it harder for a model to perform equally well on both classes, so it should be accounted for during training.

Table-1: Dataset Source and Description

| Attribute | Details |
|---|---|
| Name of Dataset | Fire Images Dataset (Indoor and Outdoor) |
| Size of Dataset | 130 MB |
| Link to Dataset | https://www.kaggle.com/datasets/rachadlakis/firedataset-jpg-224 |
| No. of Folders | Three (3): train set, validation set, and test set |
| No. of Classes | Two (2): Fire and Non-Fire |
| Image Type | JPG |
| Total No. of Images | 5,030 |
| Image Normalization | (224, 224) |
| Comment | Imbalanced dataset |
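The paper notes that the imbalance calls for special handling but does not name a technique. One common option, shown here as an assumption rather than the authors' method, is inverse-frequency class weighting, n_samples / (n_classes × n_class), the same formula scikit-learn uses for `class_weight="balanced"`. In Keras, such weights can be passed to `Model.fit` through its `class_weight` argument, keyed by integer class index; the string labels below would need to be mapped to whatever indices the data loader assigns. The helper name `balanced_class_weights` is hypothetical.

```python
def balanced_class_weights(counts: dict[str, int]) -> dict[str, float]:
    """Inverse-frequency weights: n_samples / (n_classes * n_class).

    Rarer classes receive weights above 1 and more common classes
    weights below 1, so each class contributes equally to the loss.
    """
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


# Training-set counts reported for this study.
train_counts = {"Fire": 1931, "Non-Fire": 2083}
weights = balanced_class_weights(train_counts)
for label, w in weights.items():
    print(f"{label}: {w:.3f}")
```

Because the training set is close to balanced, the resulting weights stay near 1 (about 1.04 for Fire and 0.96 for Non-Fire), so this correction would be mild.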

This section outlines the dataset splitting strategy and class distribution. Table 2 details the division of the dataset into three subsets: training, validation, and testing. The dataset follows an approximately 80-10-10 split, providing sufficient data for model training, fine-tuning, and evaluation. Holding out separate validation and test data makes overfitting detectable and provides a sound framework for model development. The training set accounts for 80% of the data, consisting of 1,931 fire images and 2,083 non-fire images, making it relatively balanced for effective learning. Balanced data helps the model differentiate between fire and non-fire scenarios without favoring either class. The validation set, comprising 10% of the total data, includes 225 fire images and 267 non-fire images. It plays a pivotal role in tuning model hyperparameters, such as the learning rate or regularization strength, while guarding against overfitting by evaluating performance on data not used for parameter updates. The test set, another 10% of the dataset, contains 244 fire images and 280 non-fire images. This subset is used only to assess the final model's performance, providing an unbiased estimate of its generalization ability. Because the classes in the test set are close to balanced, accuracy, precision, recall, and F1-score give a reliable picture of performance.

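Table-2: Dataset Split and Classes

| Subset | Fire | Non-Fire | Total |
|---|---|---|---|
| Training (80%) | 1,931 | 2,083 | 4,014 |
| Validation (10%) | 225 | 267 | 492 |
| Test (10%) | 244 | 280 | 524 |
| Total | 2,400 | 2,630 | 5,030 |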
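As a quick arithmetic check of the split described above, the sketch below totals the per-subset counts reported in the text and computes each subset's share of the dataset. The names `split_counts` and `split_summary` are illustrative only.

```python
# Per-subset image counts (Fire, Non-Fire) as reported for this study.
split_counts = {
    "train": (1931, 2083),
    "validation": (225, 267),
    "test": (244, 280),
}


def split_summary(counts: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Return each subset's share of the whole dataset."""
    grand_total = sum(sum(pair) for pair in counts.values())
    return {name: sum(pair) / grand_total for name, pair in counts.items()}


total = sum(sum(pair) for pair in split_counts.values())
print("total images:", total)
for name, share in split_summary(split_counts).items():
    print(f"{name}: {share:.1%}")
```

The counts add up to the stated 5,030 images, and the shares come out at about 79.8%, 9.8%, and 10.4%, consistent with a nominal 80-10-10 split.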

In fire detection tasks, the training and validation data play distinct yet complementary roles. The training data consists of labeled images (fire and non-fire), which the model uses to learn patterns by adjusting its internal parameters, such as neural network weights and biases. This process minimizes prediction errors by iteratively updating the model. The validation data, a separate labeled set, evaluates the model's intermediate performance during training. Unlike the training data, it does not contribute to parameter updates. Instead, it helps detect overfitting, that is, whether the model is capturing meaningful patterns rather than noise. By monitoring validation performance, hyperparameters such as the learning rate or regularization strength are fine-tuned to enhance the model's ability to generalize to unseen data.
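A common way to act on the validation signal described above is early stopping: halt training once validation loss stops improving and keep the weights from the best epoch. Keras offers this as `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)`; the paper does not say whether it was used. The pure-Python sketch below shows only the decision logic, with a hypothetical helper name and hypothetical loss values.

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 2,
                         min_delta: float = 0.0) -> tuple[int, int]:
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training stops once validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs; the weights from
    `best_epoch` would then be restored.
    """
    best_loss = float("inf")
    best_epoch = 0
    waited = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the patience counter.
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    # Ran out of epochs without triggering the stop condition.
    return best_epoch, len(val_losses)


# Hypothetical validation losses, for illustration only.
best, stopped = early_stopping_epoch([0.30, 0.25, 0.23, 0.27, 0.29, 0.31])
print(best, stopped)
```

Here training would stop after epoch 5 and keep the weights from epoch 3, the point of lowest validation loss.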

Fig-1: High-level process flow diagram for fire image detection and classification

The proposed model is illustrated in Figure 1, which shows the high-level process for fire image detection and classification using a Convolutional Neural Network (CNN). The process is designed to identify fire-related features accurately, culminating in a binary classification (fire or non-fire).

1. Image Input: Images are fed into the system as raw data for analysis. These include diverse images captured in indoor and outdoor environments where fire detection is critical.

2. Pre-Processing and Image Enhancement: This crucial step improves image quality, enabling effective analysis by the CNN. Techniques such as noise reduction, normalization, and contrast enhancement emphasize fire-specific features while suppressing irrelevant details.

3. Feature Extraction: The CNN identifies and extracts hierarchical features, such as patterns, shapes, and textures, indicative of fire. This step leverages the CNN's ability to learn complex feature representations for accurate classification.

4. Classification: Extracted features are analyzed and assigned to one of two categories: fire or non-fire. This stage uses the CNN's fully connected layers, which predict the outcome based on learned patterns.

5. Output Result: The system delivers a binary result indicating the presence or absence of fire. In real-world scenarios, this output can trigger immediate responses, such as activating alarms or notifying emergency services.
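The five steps above can be illustrated with a toy forward pass in NumPy. This is a sketch under stated assumptions, not the authors' network: the paper does not publish its layer configuration, so the sketch uses a single hand-set kernel applied to the red channel only, one ReLU and 2 × 2 max-pooling stage, global average pooling, and one sigmoid unit with a hypothetical `weight` and `bias`. A real Keras model learns many kernels over all three channels.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 'valid' cross-correlation, as computed in CNN layers."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow), dtype=np.float64)
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool2x2(x: np.ndarray) -> np.ndarray:
    """2 x 2 max pooling with stride 2 (an odd last row/column is dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


def predict_fire(rgb: np.ndarray, kernel: np.ndarray, weight: float,
                 bias: float, threshold: float = 0.5) -> tuple[float, str]:
    """Toy version of steps 1 to 5 with hand-set (hypothetical) parameters."""
    # Step 2: scale pixels to [0, 1]; keeping only red is a simplification.
    red = rgb[..., 0].astype(np.float64) / 255.0
    # Step 3: convolution + ReLU + pooling produce a feature map.
    features = max_pool2x2(np.maximum(conv2d_valid(red, kernel), 0.0))
    # Step 4: global average pooling feeding a single sigmoid unit.
    score = weight * features.mean() + bias
    prob = 1.0 / (1.0 + np.exp(-score))
    # Step 5: binary decision.
    return float(prob), "Fire" if prob >= threshold else "Non-Fire"
```

With an averaging kernel such as `np.full((3, 3), 1 / 9)`, `weight=10.0` and `bias=-5.0`, a uniformly bright-red 8 × 8 image scores about 0.993 ("Fire") and a black image about 0.007 ("Non-Fire"); in the trained model these parameters are learned rather than chosen by hand.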

4.1

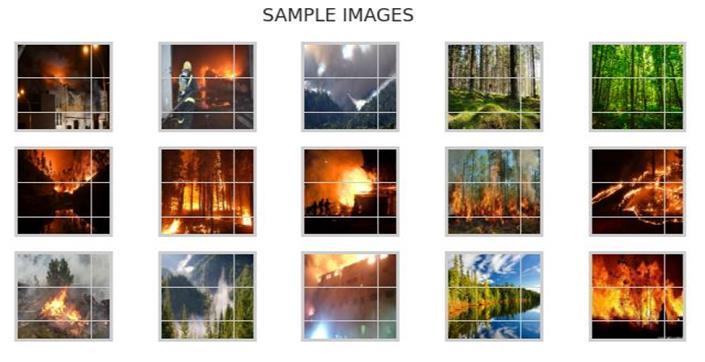

This section illustrates examples from the dataset sourced from Kaggle.com, showing both the original images and their preprocessed counterparts. The raw images undergo preprocessing steps such as resizing, noise reduction, and feature enhancement to prepare the data for model training. These preprocessing steps are intended to improve the model's ability to detect fire accurately. Figure 2 presents a selection of fire and non-fire images from the dataset used in this study. The dataset comprises images of fires occurring in various settings, such as indoor environments and outdoor areas like houses and bushes. By analyzing patterns within these images, the model learns to differentiate between fire and non-fire scenarios. This capability is critical for developing a system that can promptly detect and alert users to indoor fire hazards, contributing to safety. Figure 3 shows the original images alongside their preprocessed versions, illustrating techniques such as masking, segmentation, and sharpening.
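The paper names sharpening and normalization among its preprocessing steps but does not publish the exact filters. A minimal NumPy sketch, assuming the widely used 3 × 3 sharpening kernel and simple division by 255 for normalization, might look like this; `SHARPEN`, `sharpen`, and `normalize` are hypothetical names.

```python
import numpy as np

# A common 3 x 3 sharpening kernel (an assumption; the study does not
# publish its exact filters). Its weights sum to 1, so flat regions are
# unchanged while edges are amplified.
SHARPEN = np.array([[0, -1, 0],
                    [-1, 5, -1],
                    [0, -1, 0]], dtype=np.float64)


def sharpen(image: np.ndarray) -> np.ndarray:
    """Sharpen an H x W x 3 uint8 image channel by channel.

    Borders are handled by replicating edge pixels; results are clipped
    back to the valid 0-255 range.
    """
    img = image.astype(np.float64)
    padded = np.pad(img, ((1, 1), (1, 1), (0, 0)), mode="edge")
    out = np.zeros_like(img)
    h, w = img.shape[:2]
    # Accumulate each kernel tap as a shifted, weighted copy of the image.
    for di in range(3):
        for dj in range(3):
            out += SHARPEN[di, dj] * padded[di:di + h, dj:dj + w]
    return np.clip(out, 0, 255).astype(np.uint8)


def normalize(image: np.ndarray) -> np.ndarray:
    """Scale uint8 pixels to float32 values in [0, 1] for the CNN."""
    return image.astype(np.float32) / 255.0
```

A typical order would be `normalize(sharpen(image))`, since sharpening is defined on the 0 to 255 pixel range and the network expects scaled inputs.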

4.2

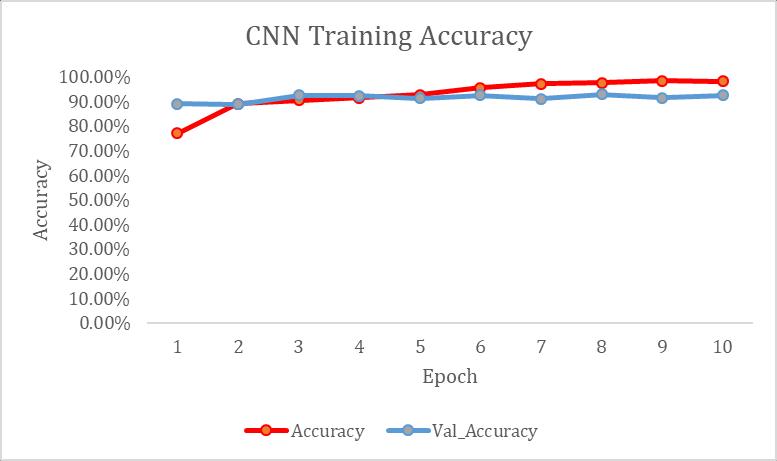

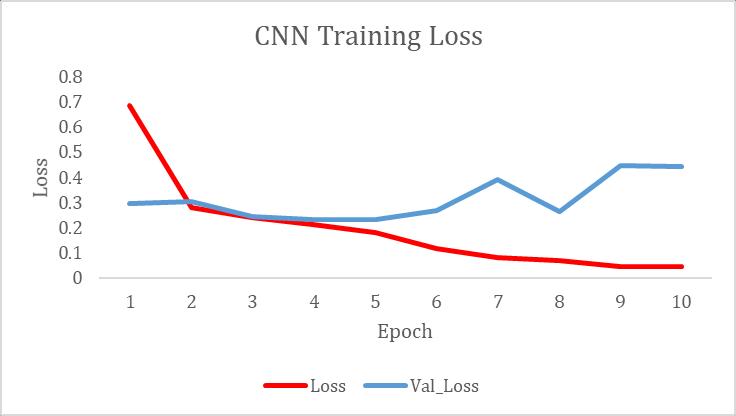

This section outlines the results achieved during the training of the Convolutional Neural Network (CNN) for fire and non-fire detection. It covers the model's learning progress, accuracy, and error minimization, providing insight into its performance and reliability.

Training Summary: Table 3 displays the model's training performance over ten (10) epochs. In the first epoch, the model achieved an accuracy of 77.17% with a loss of 0.6841, while the validation accuracy was 89.02% with a validation loss of 0.2959. Over the course of training, accuracy improved significantly, reaching 98.4% in the ninth epoch with a corresponding loss of 0.0454. Validation accuracy also improved, from 89.02% in the first epoch to 92.48% in the last. However, validation loss fluctuated, ranging from a low of 0.2306 in the fourth epoch to a high of 0.4477 in the ninth epoch. Table 3 shows that the model steadily increased training accuracy while reducing training loss, despite some variability in validation loss.

Table-3: Summary of Training Results (Epochs 1 to 10)

Figure 5 depicts the training and validation loss over time. Training loss started at 0.6841 and decreased to 0.0454 by the ninth epoch. Validation loss started lower, at 0.2959, but fluctuated, reaching 0.3921 in the seventh epoch and ending at 0.4424. The model therefore minimized training error effectively, although the upward drift in validation loss over later epochs suggests some overfitting.

The performance of the trained model was assessed using a separate test set. Metrics such as accuracy, precision, recall, and F1-score were used to evaluate its effectiveness in distinguishing between fire and non-fire scenarios. Additionally, the proposed model was compared with existing studies to highlight its improvements in accuracy and reliability.

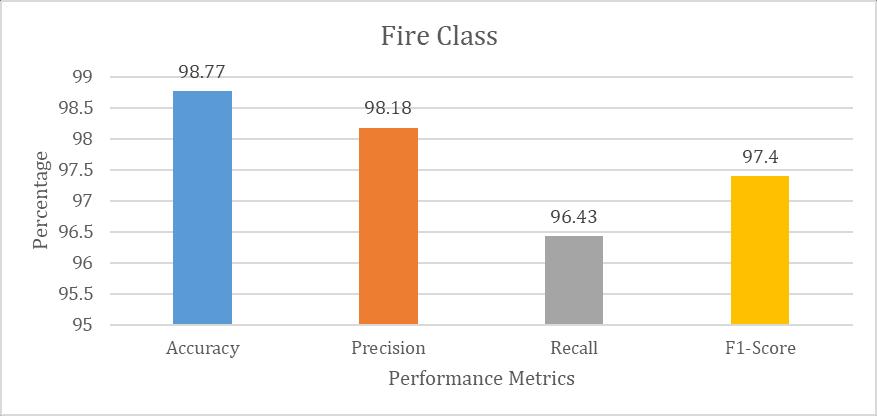

(a). Performance for Fire Class: The model was tested with 244 fire images, and its performance metrics are summarized in Table 4. Accuracy reflects the model's overall correctness, while precision measures its ability to identify fire instances accurately, minimizing false positives. Recall evaluates its capacity to detect all fire cases, and the F1-score offers a balanced assessment of precision and recall.
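The metric definitions above can be written directly in terms of confusion-matrix counts. The sketch below uses a hypothetical helper, `binary_metrics`, and plugs in the fire-class test-set counts reported in this study (TP = 54, FP = 1, FN = 2, TN = 187).

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # Guard against division by zero when a class is never predicted/present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Fire-class test-set counts reported in this study.
fire = binary_metrics(tp=54, fp=1, fn=2, tn=187)
for name, value in fire.items():
    print(f"{name}: {value:.2%}")
```

With these counts, accuracy, precision, and recall come out at 98.77%, 98.18%, and 96.43%, matching the values reported for the fire class; the F1-score computed from the same counts is 97.30%.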

Table-4: Fire Class Performance Summary

Figure 4 illustrates the model's accuracy progression during training. With 1,931 fire images and 2,083 non-fire images for training, and 225 fire images and 267 non-fire images for validation, the model improved steadily. Accuracy began at 77.17% for training and 89.02% for validation in the first epoch, climbing to 98.31% and 92.48%, respectively, by the tenth epoch. This improvement demonstrates the model's ability to recognize fire effectively while maintaining strong performance on unseen validation data.


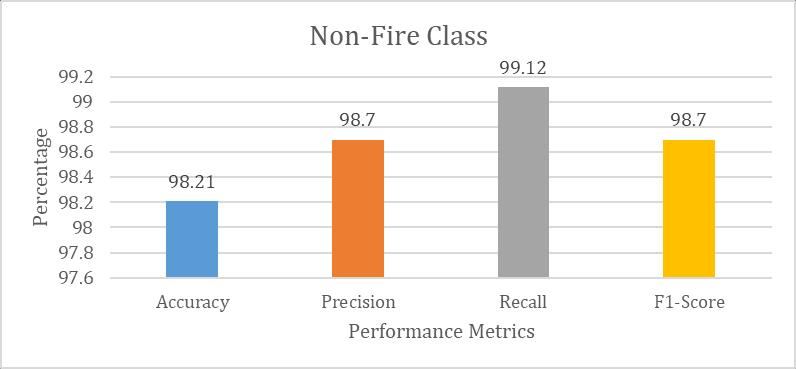

This section evaluates the model's performance on 280 non-fire images using accuracy, precision, recall, and F1-score, summarized in Table 5. Accuracy measures overall correctness, precision evaluates the ratio of true non-fire predictions to total non-fire predictions, recall assesses the model's ability to identify all non-fire cases, and the F1-score balances precision and recall to provide a comprehensive performance metric.

Table-5: Performance Metrics for Non-Fire Class

Performance Metrics (Figure 6): Figure 6 provides a visual representation of the fire-class performance evaluation. The model achieved 98.77% accuracy, highlighting its effectiveness in correctly classifying fire instances. Precision (98.18%) demonstrates reliability in detecting fires with minimal false positives. Recall (96.43%) indicates a strong capability to identify true fire cases, and the F1-score (97.40%) reflects balanced performance. These results indicate that the model is highly reliable for fire detection and a promising tool for real-world applications.

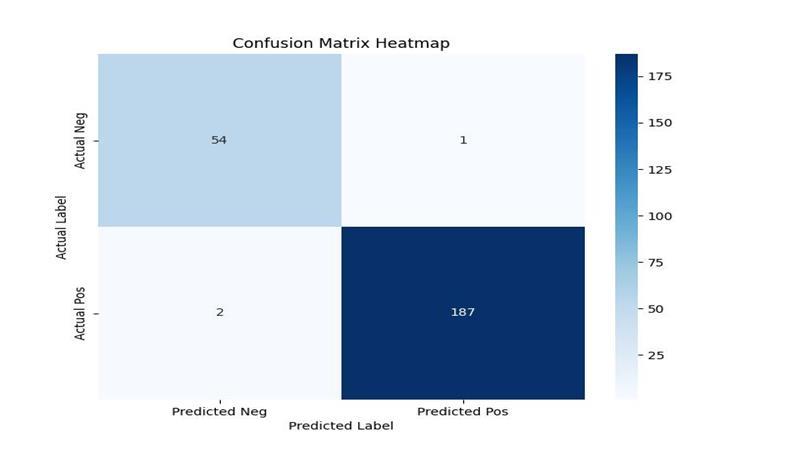

(b). Confusion Matrix Heatmap Result for Test Set (Fire Class)


Figure 7 displays the confusion matrix heatmap for the fire detection model's performance on 244 image instances. Using a CNN-based approach, the model correctly identified 54 true positives and 187 true negatives, showing its reliability in distinguishing between fire and non-fire images. The model produced just 1 false positive and 2 false negatives, reflecting high precision and recall. These results show that the model keeps errors low, underscoring its potential as a dependable tool for fire detection in practical applications.
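The counts in a confusion matrix such as Figure 7 come from pairing each image's true label with the model's prediction. A minimal sketch, assuming labels are encoded as 1 = Fire and 0 = Non-Fire (an assumption; the paper does not state its encoding), is shown below; scikit-learn's `sklearn.metrics.confusion_matrix(y_true, y_pred)` produces the same counts as a 2 × 2 array. The helper `confusion_counts` is hypothetical.

```python
from collections import Counter


def confusion_counts(y_true: list[int], y_pred: list[int],
                     positive: int = 1) -> dict[str, int]:
    """Count TP, FP, FN and TN for one class treated as 'positive'."""
    # Tally every (true label, predicted label) pair.
    pairs = Counter(zip(y_true, y_pred))
    tp = pairs[(positive, positive)]
    fn = sum(n for (t, p), n in pairs.items() if t == positive and p != positive)
    fp = sum(n for (t, p), n in pairs.items() if t != positive and p == positive)
    # Everything else is a correctly rejected negative.
    tn = sum(pairs.values()) - tp - fn - fp
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn}


# Toy labels: 1 = Fire, 0 = Non-Fire (assumed encoding).
y_true = [1, 1, 0, 0, 1]
y_pred = [1, 0, 0, 1, 1]
print(confusion_counts(y_true, y_pred))              # Fire as positive
print(confusion_counts(y_true, y_pred, positive=0))  # Non-Fire as positive
```

Switching `positive` shows why per-class matrices differ: a fire image predicted as non-fire is a false negative for the fire class but a false positive for the non-fire class.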

Figure 8 visually represents the performance metrics for the non-fire detection model. The high accuracy of 98.21% demonstrates its reliability in correctly classifying non-fire instances. Precision (98.70%) highlights the model's ability to reduce false positives, while recall (99.12%) signifies its capability to identify actual non-fire cases with minimal misses. The F1-score (98.70%) reflects a balanced performance between precision and recall. Together, these metrics affirm the model's robustness and reliability in detecting non-fire scenarios.


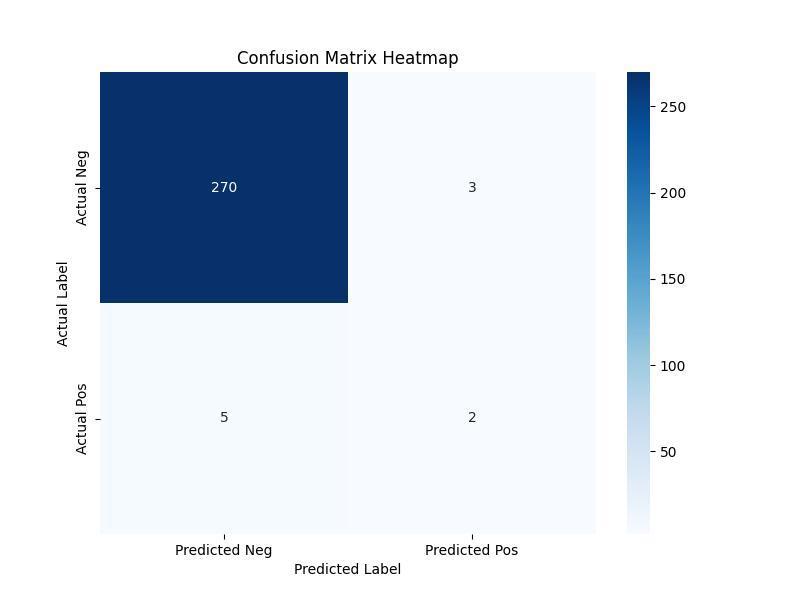

Figure 9 illustrates the confusion matrix for the non-fire class test set, comprising 280 image instances. The CNN model correctly identified 270 true positives and 5 true negatives, demonstrating strong detection capabilities. However, 3 false positives and 2 false negatives were recorded, indicating minor areas for improvement. Despite these errors, the model's overall accuracy in recognizing non-fire images remains high, highlighting its effectiveness as a fire detection tool for diverse applications.

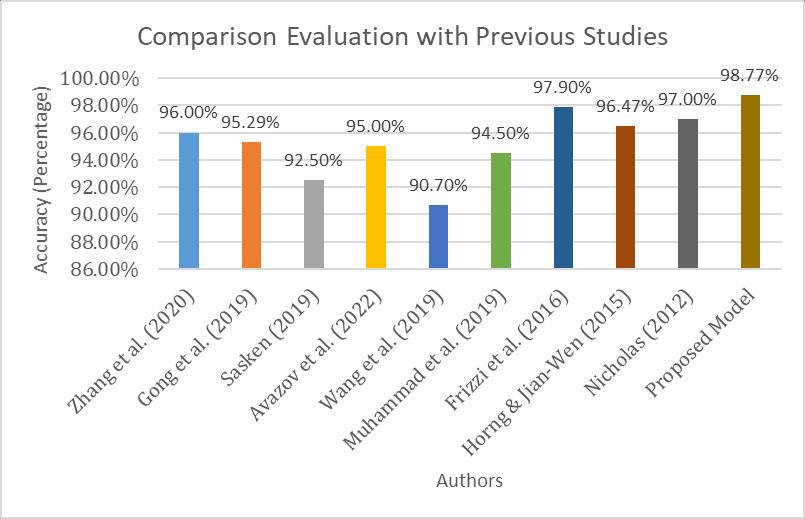

This section compares the proposed model's fire detection accuracy with that of nine previous studies, as depicted in Figure 10.

Fig-10: Comparative Evaluation of the Proposed Model and Previous Studies

Figure 10 highlights the proposed model's accuracy of 98.77%, which exceeds the results reported in previous studies: Zhang et al. (96.00%), Gong et al. (95.29%), Sasken (92.50%), Avazov et al. (95.00%), Wang et al. (90.70%), Muhammad et al. (94.50%), Frizzi et al. (97.90%), Horng & Jian-Wen (96.47%), and Hsu et al. (97.00%) [7, 8, 12, 10, 13, 9, 14, 15, 2]. This result reflects advances in CNN-based fire detection; however, because these studies were evaluated on different datasets and under different conditions, the comparison indicates relative standing rather than a direct benchmark.

The CNN-based fire detection model achieved an overall accuracy of approximately 98% on the test set, correctly classifying fire and non-fire instances about 98% of the time. This result is promising and highlights the model's effectiveness.

5.1. Evaluation Metrics for Fire and Non-Fire Classes

• Precision: For the non-fire class, the precision is 98.70%, indicating that 98.70% of all predicted non-fire cases were correct. For the fire class, the precision is slightly lower at 98.18%.

• Recall: The non-fire class achieved a recall of 99.12%, demonstrating the model's ability to detect almost all actual non-fire cases. The fire class recall, at 96.43%, suggests room for improvement in identifying all actual fire cases.

• F1-Score: The F1-score balances precision and recall, reaching 98.70% for the non-fire class and 97.40% for the fire class, indicating robust overall performance with slightly weaker fire detection.

The confusion matrices in Figures 7 and 9 provide additional insights into the model's predictions:

• True Positives (TP): The model correctly identified 54 fire cases (Figure 7) and 270 non-fire cases (Figure 9), demonstrating its reliability in detecting the presence of fire.

• False Positives (FP): With only 1 false positive in the fire class (and 3 in the non-fire class), the model keeps false alarms to a minimum, which is essential for practical applications.

• False Negatives (FN): Although false negatives are low (2 for each class), further analysis is needed to understand and address the factors contributing to missed detections.

• True Negatives (TN): Correctly identifying non-fire scenarios (187 cases in the fire-class test set) highlights the model's ability to reduce unnecessary alerts.

These findings align with prior studies, such as those by Wu et al. [6], Hsu et al. [2], and Gong et al. [8], which emphasize the importance of minimizing false positives and negatives in fire detection systems. The CNN-based model demonstrates significant potential for fire detection, with high precision, recall, and F1-scores for both fire and non-fire classes. However, further refinements, particularly to improve recall and F1-score for the fire class, could increase the model's reliability. By addressing these areas, the model could become a valuable tool for fire monitoring and safety in real-world environments.

This research advances the field of intelligent fire detection by addressing limitations of prior studies. A key achievement is the development of a model that performs well on a diverse set of indoor and outdoor images while integrating into a web-based application for real-time fire detection. Unlike previous studies, which primarily emphasized improving model accuracy without considering practical implementation, this study takes a comprehensive approach. For example, Zhang et al. [7] achieved notable accuracy in controlled environments but did not extend their work to create a deployable system. In contrast, this research bridges the gap between theoretical development and practical usability by creating a user-friendly interface for real-time fire detection in various indoor settings. This practical focus helps ensure that the findings can be applied effectively, enhancing safety measures in both public and private spaces. The outcomes of this study lay a foundation for future advancements in fire monitoring and detection technologies.

The authors confirm that no conflicts of interest are associated with this research.

[1] Shazali Dauda, M., & Toro, U. S. Arduino Based Fire Detection and Control System. International Journal of Engineering Applied Sciences and Technology, 2020; 04(11): 447–453.

[2] Hsu, T. W., Pare, S., Meena, M. S., Jain, D. K., Li, D. L., Saxena, A., Prasad, M., & Lin, C. T. An early flame detection system based on image block threshold selection using knowledge of local and global feature analysis. Sustainability (Switzerland), 2020; 12(21): 1–22.

[3] NEDCC. An Introduction to Fire Detection, Alarm, and Automatic Fire Sprinklers. Preservation Leaflets, 2017; 1–15.

[4] Moumgiakmas, S. S., Samatas, G. G., & Papakostas, G. A. Computer vision for fire detection on UAVs: From software to hardware. Future Internet, 2021; 13(8).

[5] Ghali, R., Jmal, M., Souidene Mseddi, W., & Attia, R. Recent Advances in Fire Detection and Monitoring Systems: A Review. Smart Innovation, Systems and Technologies, 2020; 146 (January): 332–340.

[6] Wu, H., Wu, D., & Zhao, J. An intelligent fire detection approach through cameras based on computer vision methods. Process Safety and Environmental Protection, 2019; 127 (July): 245–256.

[7] Zhang, B., Sun, L., Song, Y., Shao, W., Guo, Y., & Yuan, F. DeepFireNet: A real-time video fire detection method based on multi-feature fusion. Mathematical Biosciences and Engineering, 2020; 17(6): 7804–7818.

[8] Gong, F., Li, C., Gong, W., Li, X., Yuan, X., Ma, Y., & Song, T. A real-time fire detection method from video with multifeature fusion. Computational Intelligence and Neuroscience, 2019.

[9] Muhammad, K., Ahmad, J., Lv, Z., & Bellavista, P. Efficient Deep CNN-Based Fire Detection and Localization in Video Surveillance Applications. 2019.

[10] Avazov, K., Mukhiddinov, M., Makhmudov, F., & Cho, Y. I. Fire detection method in smart city environments using a deep-learning-based approach. Electronics (Switzerland), 2022; 11(1): 1–17.

[11] Lakis, R. Fire Images Dataset (Indoor and Outdoor). Mendeley Data, 2020; V1.

[12] Sasken. Computer Vision Based Fire Detection. UCSD, 2019; 1–15.

[13] Wang, Y., Dang, L., & Ren, J. Forest fire image recognition based on convolutional neural network. Journal of Algorithms and Computational Technology, 2019; 13: 1–11.

[14] Frizzi, S., Kaabi, R., Bouchouicha, M., Ginoux, J. M., Moreau, E., & Fnaiech, F. Convolutional neural network for video fire and smoke detection. IECON Proceedings (Industrial Electronics Conference), 2016; 877–882. https://doi.org/10.1109/IECON.2016.7793196

[15] Horng, W., & Jian-Wen, P. Image-Based Fire Detection Using Neural Networks. Conference Paper, Department of Computer Science and Information Engineering, Tamkang University, Taipei, Taiwan 25137, ROC, October 2015. https://doi.org/10.2991/jcis.2006.301