Edge AI Made Practical

AI-Projects for the Raspberry Pi with the AI Hat+


Günter Spanner

● This is an Elektor Publication. Elektor is the media brand of Elektor International Media B.V.

PO Box 11, NL-6114-ZG Susteren, The Netherlands

Phone: +31 46 4389444

● All rights reserved. No part of this book may be reproduced in any material form, including photocopying, or storing in any medium by electronic means and whether or not transiently or incidentally to some other use of this publication, without the written permission of the copyright holder except in accordance with the provisions of the Copyright Designs and Patents Act 1988 or under the terms of a licence issued by the Copyright Licencing Agency Ltd., 90 Tottenham Court Road, London, England W1P 9HE. Applications for the copyright holder's permission to reproduce any part of the publication should be addressed to the publishers.

● Declaration

The authors and publisher have used their best efforts in ensuring the correctness of the information contained in this book. They do not assume, or hereby disclaim, any liability to any party for any loss or damage caused by errors or omissions in this book, whether such errors or omissions result from negligence, accident or any other cause.

● British Library Cataloguing in Publication Data

A catalogue record for this book is available from the British Library

● ISBN 978-3-89576-714-2 Print

ISBN 978-3-89576-715-9 eBook

● © Copyright 2026 Elektor International Media www.elektor.com

Editor: Clemens Valens

Prepress Production: D-Vision, Julian van den Berg

Printers: Ipskamp, Enschede, The Netherlands

Elektor is the world's leading source of essential technical information and electronics products for pro engineers, electronics designers, and the companies seeking to engage them. Each day, our international team develops and delivers high-quality content - via a variety of media channels (including magazines, video, digital media, and social media) in several languages - relating to electronics design and DIY electronics. www.elektormagazine.com

Warnings

• The circuits and boards in this book may only be operated with tested, double-insulated safety power supplies. Insulation faults in a simple power supply could lead to life-threatening voltages on non-insulated components.

• High-power LEDs can cause eye damage. Never look directly into an LED!

• The author and publisher accept no liability for any damage resulting from the construction of the described projects.

• Electronic circuits can emit electromagnetic interference. Since the publisher and author have no influence over the user's technical implementation, the user is solely responsible for complying with relevant emission limits.

Program download

The programs from this book can be downloaded from www.elektor.com

If a program is not identical to the one described in the book, the version from the download should be used, as it is the more up-to-date version.

Chapter 1 • Introduction

Embedding artificial intelligence into small devices—often called "edge AI"—is rapidly changing how we interact with everyday gadgets. By placing the "brain" of AI directly inside cameras, phones, wearables, and other compact electronics, we unlock powerful capabilities without ever sending our data off to distant servers.

Edge AI refers to the deployment of artificial intelligence (AI) algorithms locally on devices ("at the edge" of a network) rather than in centralized cloud servers.

Imagine your security camera recognizing a visitor at the door instantly, flagging friends and family while ignoring pets. There's no round-trip delay to the cloud, so you get an immediate alert the moment someone approaches. Picture your smartphone translating speech on the fly without streaming audio to the internet, letting you carry on a private conversation abroad. Think of a wearable health tracker that spots an irregular heartbeat in real time and prompts you to take action immediately, with no need to wait for some central server to crunch the numbers.

These aren't futuristic fantasies anymore; they're practical benefits of running AI directly on the device itself. Processing data locally means decisions happen in milliseconds, boosting responsiveness in life-critical and time-sensitive scenarios. Because raw data never leaves the gadget, your personal videos, voice recordings, and health metrics stay private and secure. And in places where connectivity is spotty or expensive, such as rural farms, construction sites, or even a subway tunnel, edge-AI devices keep working without interruption.

Beyond speed and privacy, edge AI also slashes bandwidth costs by transmitting only concise results (like "face detected" or "alert generated") instead of full data streams. Energy-efficient AI chips designed for the edge can deliver trillions of operations per second on just a few watts, keeping battery-powered devices running longer. Finally, deploying thousands of small, self-reliant units can be more scalable and resilient than relying on a handful of cloud servers; if one device goes offline, the rest carry on unimpeded.

By embedding AI into the very fabric of our gadgets, we're creating a world of instant insights, enhanced privacy, reduced costs, and true offline capability—transforming the way we live, work, and play.

1.1. Why is AI inside small systems relevant?

First, speed must be considered. When sensor data, a photo, or a snippet of audio is sent to a remote server for analysis, a delay is introduced. Even a delay of a few hundred milliseconds can make a significant difference. In situations like obstacle avoidance in a self-driving toy, every millisecond is crucial. By having inference (the decision-making step of the AI model) executed directly on the device, the back-and-forth communication is eliminated. As a result, responses are produced nearly instantly, allowing cameras, drones, or robots to act without hesitation.
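To put the latency argument into numbers, the following sketch estimates how far a moving robot travels, and how many camera frames pass, while it waits for a decision. All constants are assumptions chosen purely for illustration (a 30 fps camera, a speed of 1.5 m/s, 15 ms on-device inference, a 300 ms cloud round trip), not measurements of any particular system.

```python
# Illustrative latency budget; every constant below is an assumed value.
FRAME_RATE_FPS = 30.0      # camera frame rate
SPEED_M_PER_S = 1.5        # speed of a small robot or toy vehicle
LOCAL_LATENCY_S = 0.015    # assumed on-device inference time
CLOUD_LATENCY_S = 0.300    # assumed upload + remote inference + response time


def distance_travelled_m(latency_s: float, speed_m_per_s: float) -> float:
    """Distance covered before the decision arrives."""
    return latency_s * speed_m_per_s


def frames_elapsed(latency_s: float, fps: float) -> int:
    """Number of complete frames captured while waiting for the result."""
    return int(latency_s * fps)


for name, latency in (("on-device", LOCAL_LATENCY_S), ("cloud", CLOUD_LATENCY_S)):
    print(f"{name:>9}: {distance_travelled_m(latency, SPEED_M_PER_S) * 100:5.1f} cm, "
          f"{frames_elapsed(latency, FRAME_RATE_FPS)} frames")
```

Under these assumptions the vehicle moves about 2 cm before an on-device decision is available, but about 45 cm before a cloud response arrives.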

Privacy is also greatly enhanced. When raw data such as voice, facial imagery, or home videos is transmitted over the internet, privacy concerns arise: who might see the data, and where is it stored? With Edge AI, sensitive information is retained on the device. Processing is performed locally by the AI model, and only minimal, anonymized results are sent (a short message of a few bytes instead of a video clip of megabytes or even gigabytes). This leads to fewer privacy complications and helps ensure compliance with regulations such as the GDPR (General Data Protection Regulation) in Europe or the strict rules in healthcare, which mandate rigorous handling of personal data.

Internet usage and cost are critical considerations. In many regions, connectivity is slow or costly. Cellular or satellite connections often incur charges based on data usage. If high-resolution video were continuously streamed to the cloud, significant costs would be incurred. However, when video feeds are filtered locally by edge-AI cameras, only essential snapshots are uploaded (e.g., when an animal is detected or a machine issue arises). This drastically reduces data transmission and keeps expenses low.
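A quick calculation shows the scale of the savings. The sketch compares a continuous video upload with uploading only event-triggered snapshots; the 4 Mbit/s bitrate, the 20 snapshots per day, and the 150 kB snapshot size are assumed values for illustration only.

```python
# Illustrative bandwidth comparison; bitrate, snapshot size and count are assumptions.
SECONDS_PER_DAY = 24 * 60 * 60


def streaming_bytes_per_day(bitrate_bit_per_s: float) -> float:
    """Data volume of a continuous upload at the given bitrate."""
    return bitrate_bit_per_s / 8 * SECONDS_PER_DAY


def snapshot_bytes_per_day(snapshots_per_day: int, bytes_per_snapshot: int) -> int:
    """Data volume when only event-triggered snapshots are uploaded."""
    return snapshots_per_day * bytes_per_snapshot


stream = streaming_bytes_per_day(4_000_000)      # assumed 4 Mbit/s video stream
snapshots = snapshot_bytes_per_day(20, 150_000)  # assumed 20 snapshots of 150 kB each
print(f"Continuous stream: {stream / 1e9:.1f} GB/day")
print(f"Snapshots only:    {snapshots / 1e6:.1f} MB/day")
print(f"Reduction factor:  {stream / snapshots:,.0f}x")
```

With these figures, roughly 43 GB per day shrinks to 3 MB per day, a reduction by a factor of about 14,000.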

Reliability is another benefit. Devices that rely on cloud services function only as well as the network allows. If the Wi-Fi or cellular signal is lost, smart features can cease to operate. With on-device AI, systems continue functioning offline. For example, drones mapping remote forests can keep identifying tree species, and wearable health monitors can continue issuing alerts about abnormal heartbeats even during events like marathons in tunnels. Offline capability ensures trustworthiness in any location.

Battery life and energy efficiency are vital, especially for portable or remote devices. Running complex AI models on general-purpose CPUs or constantly transmitting data via cellular modems can quickly drain the battery. Specialized AI chips such as the Hailo-8L are designed to execute trillions of operations per second for each watt consumed. In practical terms, significant AI processing is performed with minimal power usage, allowing battery-powered cameras or sensors to operate for extended periods: days or weeks rather than hours.
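The step from "hours" to "days" usually comes from combining a low-power accelerator with duty cycling. This idealized sketch assumes a 100 Wh battery, an accelerator drawing 3 W while active and 0.2 W while idle, and activity 10 % of the time, compared with a 30 W GPU that runs continuously. The host computer's own consumption, converter losses, and battery ageing are ignored for simplicity.

```python
# Idealized battery-runtime estimate: ignores host power, converter losses and ageing.
def average_power_w(active_w: float, idle_w: float, duty_cycle: float) -> float:
    """Mean power draw when the device is active for a fraction `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_w + (1.0 - duty_cycle) * idle_w


def runtime_hours(battery_wh: float, load_w: float) -> float:
    """Ideal runtime of a battery with capacity `battery_wh` at a constant load."""
    if load_w <= 0:
        raise ValueError("load must be positive")
    return battery_wh / load_w


BATTERY_WH = 100.0  # assumed battery pack capacity
accelerator = runtime_hours(BATTERY_WH, average_power_w(3.0, 0.2, 0.1))
gpu = runtime_hours(BATTERY_WH, 30.0)
print(f"3 W accelerator, 10 % duty cycle: {accelerator:.0f} h")
print(f"30 W GPU, always on:              {gpu:.1f} h")
```

Under these assumptions the accelerator setup lasts about 208 hours (close to nine days), while the always-on GPU empties the same battery in a little over three hours.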

Scalability and cost-effectiveness are naturally linked. When thousands of smart sensors are deployed across an urban environment for traffic monitoring, air-quality tracking, or public safety, equipping each node with edge-AI capability removes the need for large-scale cloud server infrastructure and the associated computing costs. Processing is carried out independently on each device. This distributed model scales efficiently: devices can be added as needed without escalating cloud expenses.

An unexpected benefit lies in system resilience. Centralized cloud platforms may experience outages, maintenance, or cyberattacks, which can disable smart functionality. However, autonomous devices maintain functionality independently. For instance, home security cameras continue detecting motion even during manufacturer cloud updates or disruptions.

From a development perspective, Edge AI creates opportunities for innovation. AI models can be tailored to work within constraints such as limited memory, low processing power, and low energy availability. Tools like the Hailo Dataflow Compiler assist in converting widely used neural-network formats into optimized code suitable for execution on edge chips. This makes fast iteration possible, allowing for rapid prototyping of novel applications such as plant-monitoring sensors that detect dry soil or gesture-controlled robotic arms.

Of course, challenges exist when deploying AI on small devices. Models must be reduced in size using techniques such as quantization (fewer bits per number) or pruning (removal of rarely used connections). A careful balance must be struck between accuracy, speed, and power usage. Fortunately, the surrounding ecosystem of tools and community support is advancing rapidly, making it easier to integrate sophisticated models into compact systems.
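The two compression techniques can be illustrated on a handful of weights. The sketch below uses generic symmetric per-tensor int8 quantization and simple magnitude pruning; the weight values are hypothetical, and the code does not attempt to reproduce the calibration-based scheme of the Hailo Dataflow Compiler or any other specific toolchain.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, +max|w|] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate floating-point weights from the int8 values."""
    return [v * scale for v in q]


def prune_by_magnitude(weights: List[float], fraction: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.82, -0.05, 0.31, -1.27, 0.002, 0.64]  # hypothetical layer weights
q, scale = quantize_int8(weights)
max_error = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))
print("int8 values:", q, "scale:", scale)
print("max reconstruction error:", max_error)
print("pruned (50 %):", prune_by_magnitude(weights, 0.5))
```

Each weight now needs 8 bits instead of 32, at the cost of a rounding error of at most half a quantization step; pruning trades a further loss of information for sparsity.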

Also, the environmental impact should not be overlooked. Large-scale data centers consume vast amounts of energy and require significant cooling. By distributing inference across numerous efficient devices, the overall carbon footprint of AI applications can be reduced. When processing is performed locally rather than centralized in GPU-based cloud farms, total energy usage often decreases. In the context of global sustainability goals, Edge AI offers a greener alternative.

In conclusion, embedding AI directly into small devices enables advantages in

• speed,

• privacy,

• cost,

• reliability,

• efficiency,

• scalability.

Data is kept local, bandwidth usage is minimized, and functionality is maintained even in remote or challenging environments. Power-efficient AI chips allow devices to perform complex tasks without exhausting batteries, while distributed intelligence increases resilience. As tools and methods improve, further creative applications will emerge—across smart homes, industrial monitoring, health wearables, and autonomous machines. This evolution represents more than a technological trend—it forms the basis for a future filled with secure, sustainable, and responsive intelligent systems.

Finally, edge AI scales easily and affordably. Instead of building one massive data center, organizations can distribute hundreds or thousands of small units, each doing its own local processing. Adding more devices is straightforward and doesn't balloon cloud-compute costs. And because intelligence is decentralized, a problem in one unit, like a hardware fault or a failed software update, doesn't bring the entire system down.

As specialized chips, software tools, and model-optimization techniques continue to improve, we'll see edge AI grow in every corner of our lives. Smart homes will learn our routines, factories will self-correct in real time, doctors will have instant diagnostic support at the patient's bedside, and environmental monitors will protect wildlife and farmland without human intervention. By moving AI to the edge, we gain faster reactions, stronger privacy, lower costs, greater reliability, and better energy efficiency, making our devices not just connected, but truly intelligent.

1.2. The Technical Advantages of the Raspberry Pi AI Hat+

The Raspberry Pi AI Hat+ represents a significant leap forward in deploying artificial intelligence at the edge. The kit combines Hailo's specialized AI processor with development tools, enabling rapid prototyping and efficient execution of deep learning models on embedded devices. The technical benefits of the Raspberry Pi AI Hat+ include its energy efficiency, scalable architecture, and support for industry-standard AI frameworks. The Raspberry Pi AI Hat+ is useful in applications ranging from real-time object detection to intelligent sensor data analysis without relying on cloud-based computing. Some of the main advantages are:

• High Compute Performance: Up to 26 TOPS (tera operations per second; 13 TOPS with the Hailo-8L version, 26 TOPS with the Hailo-8 version), outperforming many CPUs and GPUs found in single-board computers

• Energy Efficiency: Consumes just 2.5–3 W, ideal for battery-powered systems

• Framework Support: Compatible with TensorFlow, PyTorch, ONNX, OpenCV

• Easy GStreamer Integration: Pre-optimized pipelines for video-AI applications
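A TOPS figure only becomes meaningful once it is related to the cost of a model. The back-of-the-envelope sketch below divides usable operations per second by the operations needed per frame, for the 13 TOPS (Hailo-8L) and 26 TOPS (Hailo-8) variants of the AI Hat+. The per-frame cost of 10 GOPs and the 30 % utilization are hypothetical placeholders; model costs are often quoted in GFLOPs or GMACs (one multiply-accumulate usually counts as two operations), and real throughput is further limited by memory, the PCIe link, the camera, and pre- and post-processing on the CPU.

```python
# Back-of-the-envelope throughput bound; per-frame cost and utilization are assumptions.
def max_frames_per_second(tops: float, gops_per_frame: float, utilization: float) -> float:
    """Upper bound: usable operations per second divided by operations per frame."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    usable_ops_per_s = tops * 1e12 * utilization
    return usable_ops_per_s / (gops_per_frame * 1e9)


MODEL_GOPS_PER_FRAME = 10.0  # hypothetical detection model
UTILIZATION = 0.3            # hypothetical fraction of peak performance actually achieved

for tops in (13.0, 26.0):
    fps = max_frames_per_second(tops, MODEL_GOPS_PER_FRAME, UTILIZATION)
    print(f"{tops:.0f} TOPS -> at most ~{fps:.0f} frames/s")
```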

With the AI Hat+, a Raspberry Pi can perform real-time video analytics that would be impossible on its CPU or even its GPU alone. Typical use cases include:

• Fast Object & Face Detection: For surveillance cameras or security systems

• Optical Quality Control: Spotting defects on a production line

• High-Performance Text Recognition (OCR – Optical Character Recognition): Automating document capture or license-plate reading

Because of its GStreamer support, the AI Hat+ can be dropped directly into live camera streams to detect and track objects on the fly.

One of the biggest hurdles for drones and robots is processing sensor data in real time. The Raspberry Pi AI Hat+ makes this possible and includes:

• Navigation & Obstacle Avoidance: Autonomous vehicles and robots can analyze their surroundings faster

• Gesture Control: Recognize hand motions to steer robots or drones

• Object Tracking: Let drones follow moving targets or map terrain

Low power draw means the AI Hat+ is suitable for mobile, battery-driven platforms like robots and drones. In home automation, the AI Hat+ enables:

• Local Voice Control & Recognition: No cloud connection required

• Anomaly Detection: Spot suspicious movements or unusual sounds

• Automatic Lighting & Climate Control: Adjust settings based on detected presence

Edge computing with the AI Hat+ ensures that sensitive data never needs to leave your home network.

Finally, factories and industrial facilities benefit from real-time AI on the (production) line:

• Predictive Maintenance: Analyze sensor data to forecast equipment failures

• Automated Inspection: Detect faults on production assemblies

• License-Plate Recognition: For toll systems or parking-lot management

The AI Hat+'s performance makes it well suited even for demanding, real-time industrial applications.

Why not just use a GPU? GPUs are common AI accelerators but present challenges at the edge:

• Higher Power Draw: GPUs often require 10–100 W, whereas the AI Hat+ uses only 2.5–3 W

• Large Size: Too bulky for compact single-board computers like the Raspberry Pi

• Higher Cost: Devices such as NVIDIA Jetson modules or external GPUs are typically much more expensive than the AI Hat+

Additionally, a dedicated NPU (Neural Processing Unit) such as the Hailo-8L is optimized for AI workloads without the overhead of a general-purpose GPU.

Today, developers can build powerful AI applications on small-form-factor devices that once required large GPUs or cloud servers. If you're exploring modern AI technologies or looking for efficient edge solutions, the Raspberry Pi AI Hat+ is well worth your consideration.

Chapter 2 • Fundamentals of Artificial Intelligence and Machine Learning

Machine Learning (ML) is a branch of artificial intelligence that enables computers to learn patterns from data and make predictions or decisions without being explicitly programmed for each specific task.

In recent years, Artificial Intelligence and Machine Learning have transformed from niche areas of research into central pillars of modern technology. From voice assistants and recommendation systems to medical diagnostics and autonomous vehicles, these technologies are reshaping industries and daily life alike. This chapter provides a foundational understanding of AI and ML—what they are, how they work, and why they matter. We begin by exploring the historical evolution of AI, distinguishing between symbolic reasoning and data-driven learning. We then delve into the core principles of machine learning, including supervised, unsupervised, and reinforcement learning, and introduce key concepts such as models, algorithms, training, and evaluation.

Rather than focusing solely on technical implementation, this chapter emphasizes conceptual clarity, aiming to equip readers with a solid base for further study and practical application. Whether you're a student, developer, or simply curious about intelligent systems, understanding these fundamentals is the first step in engaging with the rapidly advancing world of AI.

2.1. Introduction to AI and ML concepts

AI and ML are two of the most transformative fields in computer science and data technology today. Together, they enable machines to perform tasks that typically require human intelligence—such as recognizing patterns, understanding language, making decisions, and adapting to new information. As these technologies increasingly power applications across healthcare, finance, education, transportation, and beyond, a solid understanding of their foundations has become essential for both students and professionals.

Artificial Intelligence refers to the broader goal of creating systems that can simulate human-like intelligence. It encompasses a range of subfields, including natural language processing, robotics, computer vision, and expert systems. AI systems are designed to perceive their environment, reason through complex problems, learn from experience, and take actions that maximize the chances of achieving a specific objective.

Within AI, Machine Learning has emerged as a key approach. Rather than being explicitly programmed for every task, ML systems learn patterns and relationships from data. Machine learning focuses on developing algorithms that allow computers to learn and make predictions or decisions based on input data. This shift from rule-based logic to data-driven learning has made it possible to build more flexible, scalable, and adaptive systems.

This chapter explores the fundamental principles of AI and ML, including:

• Historical context and evolution of artificial intelligence

• Types of AI: narrow vs. general AI, and symbolic vs. connectionist approaches

• Categories of machine learning: supervised, unsupervised, and reinforcement learning

• Key concepts: datasets, features, models, training, testing, and evaluation metrics

• Algorithms and models: including decision trees, linear regression, neural networks, and clustering techniques

• Challenges and limitations: such as bias, overfitting, interpretability, and data dependency

• Ethical and societal considerations of deploying AI in real-world contexts

By the end of this chapter, readers will be equipped with a conceptual toolkit for understanding how AI and ML systems are built, how they operate, and where they are most effectively applied. This knowledge lays the groundwork for deeper study, hands-on experimentation, and informed discussion around the development and use of intelligent technologies.

2.2. Types of machine learning: supervised, unsupervised, reinforcement learning

Machine learning is typically categorized into three main types: supervised learning, unsupervised learning, and reinforcement learning. Each type is defined by how the algorithm learns from the data and the kind of feedback it receives during training.

Supervised learning is the most common and intuitive form of machine learning. In this approach, the algorithm is trained on a labeled dataset, where each input is associated with a known output. The goal of supervised learning is to learn a mapping from inputs to outputs so that the model can accurately predict the output for new, unseen data. This category is further divided into classification and regression tasks. Classification is used when the output is categorical, for example, identifying whether an email is spam or not. Regression, on the other hand, deals with continuous outputs such as predicting house prices or global temperatures.

Common algorithms used in supervised learning include linear regression, logistic regression, decision trees, support vector machines, and neural networks. While supervised learning can be highly effective, it typically requires large volumes of labeled data, which can be costly and time-consuming to obtain.
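A minimal example of supervised regression is ordinary least squares with a single input feature. The sketch below fits a straight line to a small, hypothetical labeled dataset (living area as input, price as label) using the closed-form solution and then predicts the price of an unseen house. Library implementations such as scikit-learn's LinearRegression handle the multi-feature case.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data: living area in m² (input) and price in k€ (label)
areas = [50.0, 70.0, 90.0, 110.0, 130.0]
prices = [150.0, 200.0, 250.0, 300.0, 350.0]
slope, intercept = fit_line(areas, prices)
print(f"price ≈ {slope:.2f} * area + {intercept:.2f}")
print("prediction for an unseen 100 m² house:", slope * 100 + intercept)
```

The toy data lie exactly on a line, so the fit recovers slope 2.5 and intercept 25; real data would leave residual errors that an evaluation metric such as the mean squared error would quantify.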

In contrast, unsupervised learning deals with unlabeled data. The algorithm is not given explicit instructions on what to predict but is instead tasked with identifying hidden structures or patterns within the data. The most common unsupervised learning techniques are clustering and dimensionality reduction. Clustering algorithms group similar data points together based on their features, making them useful for customer segmentation or anomaly detection.
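Clustering can be sketched with the classic k-means algorithm (Lloyd's algorithm), which alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its members. The points below are hypothetical two-feature customer records; note that no labels are supplied.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]


def squared_distance(a: Point, b: Point) -> float:
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def kmeans(points: List[Point], k: int, iterations: int = 20,
           seed: int = 0) -> Tuple[List[Point], List[int]]:
    """Plain k-means on 2-D points; returns the centroids and a cluster label per point."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    labels: List[int] = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        labels = [min(range(k), key=lambda c: squared_distance(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its members
        updated = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                updated.append((sum(p[0] for p in members) / len(members),
                                sum(p[1] for p in members) / len(members)))
            else:
                updated.append(centroids[c])  # keep the old centroid if a cluster empties
        centroids = updated
    return centroids, labels


# Hypothetical customers: (visits per month, average spend), no labels given
customers = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
centroids, labels = kmeans(customers, k=2)
print("cluster labels:", labels)
print("centroids:", centroids)
```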

Dimensionality reduction techniques like Principal Component Analysis (PCA) help reduce the complexity of the data while preserving essential information, often used for data visualization or preprocessing. Since there are no labels, evaluating the performance of unsupervised models can be challenging, and the outcomes may not always be straightforward to interpret.
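The core idea of PCA fits in a few lines: center the data, compute the covariance matrix, and find its dominant eigenvector, the direction of largest variance. This sketch uses power iteration rather than a full eigendecomposition (library implementations such as scikit-learn's PCA use a singular value decomposition instead) and assumes the all-ones start vector is not orthogonal to the dominant eigenvector. The 2-D samples are hypothetical.

```python
import math
from typing import List, Tuple


def principal_component(data: List[List[float]],
                        iterations: int = 100) -> Tuple[List[float], List[float]]:
    """Return (feature means, first principal component) via power iteration."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    # Sample covariance matrix C = X^T X / (n - 1) of the centered data
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeated multiplication by C converges to its dominant eigenvector
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v


def project(data: List[List[float]], means: List[float],
            component: List[float]) -> List[float]:
    """Reduce each sample to one number: its coordinate along the component."""
    return [sum((row[j] - means[j]) * component[j] for j in range(len(row)))
            for row in data]


# Hypothetical 2-D measurements lying close to the line y = x
samples = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.8], [5.0, 5.1]]
means, pc1 = principal_component(samples)
print("first principal component:", [round(c, 3) for c in pc1])
print("1-D representation:", [round(s, 2) for s in project(samples, means, pc1)])
```

Each two-dimensional sample is reduced to a single coordinate along the diagonal, which preserves almost all of the variance in this example.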

Reinforcement learning is a fundamentally different approach inspired by behavioral psychology. In this paradigm, an agent interacts with an environment, learning to make decisions by receiving feedback in the form of rewards or penalties. The objective is to learn a policy—a strategy for choosing actions—that maximizes cumulative rewards over time. Reinforcement learning is particularly useful in dynamic, complex environments where actions have long-term consequences. It is widely used in applications such as game playing (e.g., AlphaGo), robotics, autonomous vehicles, and adaptive resource management. Some well-known reinforcement learning algorithms include Q-learning, deep Q-networks, and policy gradient methods. Training reinforcement learning models can be computationally intensive, and they often require vast amounts of interaction with the environment to learn effectively. Moreover, challenges such as balancing exploration (trying new actions) and exploitation (choosing the best-known action) make this a more complex learning framework.
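Tabular Q-learning makes the reward-driven update concrete. In this minimal sketch a hypothetical agent lives in a five-cell corridor, starts at the left end, and receives a reward of 1 only when it reaches the right end. After each step it applies the update Q(s, a) ← Q(s, a) + α [r + γ · max Q(s', ·) − Q(s, a)] and balances exploration and exploitation with an ε-greedy rule. The environment and all hyperparameters (α = 0.5, γ = 0.9, ε = 0.2, 500 episodes) are arbitrary choices for illustration.

```python
import random
from typing import List, Tuple

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # action 0 moves left, action 1 moves right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Deterministic environment: walls at both ends, reward 1 on reaching the goal."""
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, max_steps: int = 100, seed: int = 42) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy: explore at random (also when both values tie), else exploit
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward r + gamma * max_a' Q(s', a'); no future value at the goal
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print("greedy policy for cells 0-3:", policy)
```

After training, the greedy policy derived from the Q-table moves right in every cell, even though the agent was never told where the goal is.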

In summary, supervised learning excels in scenarios where labeled data is available and precise predictions are needed; unsupervised learning is suited for discovering patterns in unlabeled data; and reinforcement learning is ideal for learning optimal strategies through interaction and feedback. Understanding the distinctions and appropriate applications of these learning types is fundamental to successfully applying machine learning techniques across various domains.

2.3. Neural networks and deep learning basics

Neural networks are a fundamental concept in modern artificial intelligence, especially in the field of deep learning, which has achieved remarkable success in areas such as image recognition, natural language processing, speech synthesis, and autonomous systems. Inspired by the structure and functioning of the human brain, neural networks are computational models composed of layers of interconnected nodes called neurons or units, which process data to learn patterns and representations.

At the core of a neural network is the artificial neuron, a generalization of the classic perceptron, which mimics a biological neuron's ability to activate in response to stimuli. Each neuron receives inputs (often numerical values), computes a weighted sum of them, adds a bias term, and passes the result through a nonlinear activation function such as the sigmoid, tanh, or ReLU (Rectified Linear Unit). This activation determines whether and how strongly the neuron "fires," contributing to the network's overall decision-making process.
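The computation of a single artificial neuron can be written directly. The inputs, weights, and bias below are hypothetical values; the same weighted sum is passed through three different activation functions.

```python
import math
from typing import Callable, Sequence


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float,
           activation: Callable[[float], float]) -> float:
    """Weighted sum of the inputs plus bias, passed through a nonlinear activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


x = [0.5, -1.0, 2.0]   # hypothetical input features
w = [0.8, 0.2, -0.5]   # hypothetical weights
b = 0.1                # hypothetical bias
for name, act in (("sigmoid", sigmoid), ("tanh", math.tanh), ("ReLU", relu)):
    print(f"{name:>7}: {neuron(x, w, b, act):.4f}")
```

Here the weighted sum is z = 0.4 − 0.2 − 1.0 + 0.1 = −0.7, so the ReLU neuron does not fire at all (output 0), while the sigmoid gives about 0.33 and tanh about −0.60.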

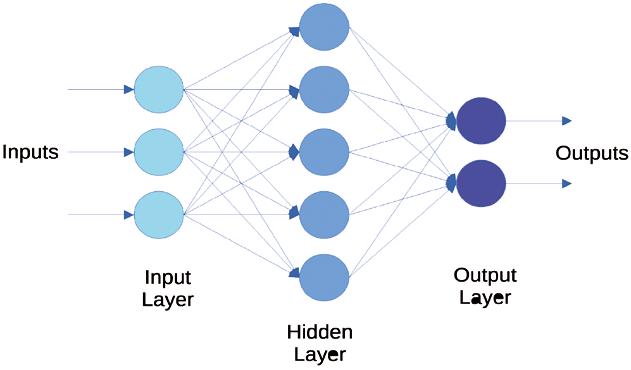

Neural networks are typically organized into layers:

1. Input Layer: Receives the raw input data, such as pixel values of an image or numerical features from a dataset.

2. Hidden Layers: One or more intermediate layers where computations are performed. Each neuron in a hidden layer is connected to neurons in adjacent layers, and these layers learn to extract increasingly abstract representations of the input.

3. Output Layer: Produces the final result of the network, such as a classification label or a numerical prediction.
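A forward pass through these layers is repeated application of a dense layer: every neuron in a layer computes its own weighted sum over the previous layer's outputs. This sketch hand-codes a hypothetical network with three inputs, one hidden layer of two ReLU neurons, and a single linear output neuron; the weights are arbitrary placeholders, not trained values.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def dense(inputs: Vector, weights: Matrix, biases: Vector,
          activation: Callable[[float], float]) -> Vector:
    """One fully connected layer; weights[j] holds the incoming weights of neuron j."""
    return [activation(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]


def relu(z: float) -> float:
    return max(0.0, z)


def identity(z: float) -> float:
    return z


# Hypothetical parameters: 3 inputs -> 2 hidden neurons -> 1 output
W_HIDDEN = [[0.2, -0.4, 0.1], [0.5, 0.3, -0.2]]
B_HIDDEN = [0.0, 0.1]
W_OUT = [[1.0, -1.5]]
B_OUT = [0.2]


def forward(x: Vector) -> Vector:
    hidden = dense(x, W_HIDDEN, B_HIDDEN, relu)    # hidden layer
    return dense(hidden, W_OUT, B_OUT, identity)   # output layer


print(forward([1.0, 2.0, 3.0]))
```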

When a neural network contains many hidden layers—often more than three—it is referred to as a deep neural network, and the learning process is called deep learning. These deep architectures allow models to learn complex hierarchical features, making them powerful for tasks like recognizing faces, translating languages, or generating text.

Training a neural network involves a process called backpropagation, which calculates the gradient of a loss function (a measure of how far the model's prediction is from the actual label) with respect to each weight in the network. Using an optimization algorithm such as stochastic gradient descent (SGD) or Adam, the network updates its weights iteratively to minimize the loss function, thereby improving accuracy.
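For a single sigmoid neuron trained with binary cross-entropy loss, backpropagation collapses to one application of the chain rule: the gradient of the loss with respect to the weighted sum z simplifies to (p − y), where p is the prediction and y the label. The sketch below uses plain stochastic gradient descent to learn the logical OR function; the learning rate and number of epochs are arbitrary choices.

```python
import math
from typing import List, Tuple

# Labeled training set for logical OR: (inputs, label)
DATA: List[Tuple[Tuple[float, float], float]] = [
    ((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: List[float], b: float, x: Tuple[float, float]) -> float:
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def loss(w: List[float], b: float) -> float:
    """Mean binary cross-entropy over the training set."""
    total = 0.0
    for x, y in DATA:
        p = predict(w, b, x)
        total += -math.log(p) if y == 1.0 else -math.log(1.0 - p)
    return total / len(DATA)


def train(epochs: int = 2000, lr: float = 0.5) -> Tuple[List[float], float]:
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in DATA:
            p = predict(w, b, x)        # forward pass
            grad_z = p - y              # dLoss/dz for sigmoid + cross-entropy
            w[0] -= lr * grad_z * x[0]  # chain rule: dz/dw0 = x0
            w[1] -= lr * grad_z * x[1]  # chain rule: dz/dw1 = x1
            b -= lr * grad_z            # dz/db = 1
    return w, b


weights, bias = train()
print(f"loss before: {loss([0.0, 0.0], 0.0):.3f}, after: {loss(weights, bias):.4f}")
print("predictions:", [round(predict(weights, bias, x)) for x, _ in DATA])
```

In deeper networks the same chain rule is applied layer by layer, from the output back to the input, and optimizers such as Adam replace the fixed learning rate with adaptive per-parameter step sizes.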

Several important neural network architectures have emerged to handle different types of data:

• Fully Connected Networks (Dense Networks) are basic architectures where each neuron is connected to every neuron in the previous and next layer. These are suitable for structured data or simple classification problems.

• Convolutional Neural Networks (CNNs) are specifically designed for image data. They use convolutional layers to detect spatial hierarchies in data, such as edges, textures, and shapes.

• Recurrent Neural Networks (RNNs) and their advanced variants like LSTMs (Long Short-Term Memory) and GRUs (Gated Recurrent Units) are tailored for sequential data, such as time series or natural language, as they can maintain internal memory of previous inputs.

• Transformers, a more recent innovation, have revolutionized natural language processing (NLP) by enabling parallel processing of sequence data instead of relying on sequential steps as in RNNs. Introduced in the seminal paper "Attention Is All You Need" (Vaswani et al., 2017), transformers use a mechanism called self-attention, which allows the model to weigh the importance of different words in a sequence relative to each other. This architecture underlies large-scale models such as GPT and other foundation models, and has become a cornerstone of modern deep learning applications in language understanding and generation.
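The self-attention mechanism mentioned above computes softmax(Q·Kᵀ / √d_k)·V, where the queries Q, keys K, and values V are linear projections of the token embeddings. This sketch shows a single attention head without masking, multiple heads, or positional encodings; the three 2-dimensional token embeddings and the projection matrices are hypothetical, hand-picked values, whereas a real transformer learns the projections during training.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix,
                   w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs, attention = [], []
    for q_i in q:
        # How strongly token i attends to every token j in the sequence
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k) for k_j in k]
        weights = softmax(scores)
        attention.append(weights)
        # Output for token i: attention-weighted average of all value vectors
        outputs.append([sum(w * v_j[c] for w, v_j in zip(weights, v))
                        for c in range(len(v[0]))])
    return outputs, attention


# Hypothetical 3-token sequence with 2-dimensional embeddings
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_Q = [[1.0, 0.0], [0.0, 1.0]]
W_K = [[1.0, 0.0], [0.0, 1.0]]
W_V = [[0.5, 0.0], [0.0, 2.0]]
out, attn = self_attention(tokens, W_Q, W_K, W_V)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of the attention matrix sums to 1, and all rows are computed independently of one another, which is what allows the parallel processing that distinguishes transformers from RNNs.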

Another important aspect of deep learning is feature learning. Unlike traditional machine learning models that often rely on hand-crafted features, deep neural networks learn features automatically from raw data. As information passes through successive layers, the network progressively extracts higher-level abstractions—for example, in image processing, the early layers might detect edges and textures, while deeper layers identify shapes, objects, and context.
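The edge detection performed by early convolutional layers can be reproduced by hand. This sketch slides a 3×3 Sobel kernel, a classic hand-designed edge filter, over a tiny synthetic image; a CNN is not given such kernels but learns its filters from data, and trained first layers often end up with similar edge detectors. Like most deep-learning frameworks, the function actually computes a cross-correlation (the kernel is not flipped) with stride 1 and no padding.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D cross-correlation with stride 1 and no padding, as in typical CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


# Synthetic 5x5 grayscale image: dark left part, bright right part (a vertical edge)
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Sobel kernel that responds to horizontal intensity changes, i.e. vertical edges
sobel_x = [[-1.0, 0.0, 1.0],
           [-2.0, 0.0, 2.0],
           [-1.0, 0.0, 1.0]]

for row in conv2d(image, sobel_x):
    print(row)
```

The response is large (4.0) wherever the 3×3 window straddles the edge and zero inside the uniformly bright region, so the output map marks where the edge is.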

Training deep neural networks comes with several challenges. One common issue is overfitting, where the model performs well on training data but poorly on unseen data. To combat this, techniques like dropout (randomly deactivating neurons during training), regularization, and early stopping are commonly used. Additionally, training deep models requires significant computational resources and often large, labeled datasets, which has led to the development of transfer learning. This is a method where a pre-trained model is fine-tuned for a specific task using a smaller dataset.
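Dropout is simple to express in code. The sketch implements "inverted" dropout: during training each activation is zeroed with probability p and the survivors are scaled by 1/(1 − p) so that the expected value stays the same, while at inference time the layer passes values through unchanged. This is the scaling convention used, for example, by PyTorch's nn.Dropout; the activation values are hypothetical.

```python
import random
from typing import List


def dropout(activations: List[float], p: float, training: bool,
            rng: random.Random) -> List[float]:
    """Inverted dropout: drop with probability p and rescale survivors during training."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # identity at inference time
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)
layer_output = [0.4, 1.2, 0.0, 0.7, 2.5, 0.9]  # hypothetical activations
print("training: ", dropout(layer_output, 0.5, training=True, rng=rng))
print("inference:", dropout(layer_output, 0.5, training=False, rng=rng))
```

Because a different random subset of neurons is removed in every training step, the network cannot rely on any single neuron, which tends to reduce overfitting.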

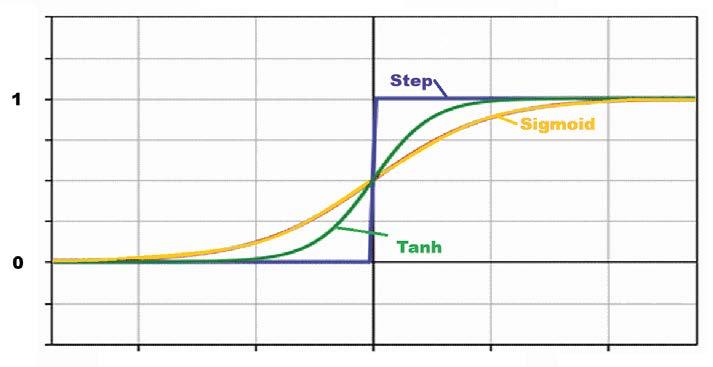

Another critical component in deep learning workflows is the use of activation functions. These functions introduce non-linearity into the network, allowing it to model complex relationships in the data. Common activation functions include:

• ReLU (Rectified Linear Unit): The most widely used in hidden layers due to its simplicity and efficiency.

• Sigmoid: Often used in output layers for binary classification problems.

• Softmax: Converts outputs into probabilities for multi-class classification tasks.

• Step: Produces discrete outputs (e.g., 0 or 1) depending on whether the input crosses a threshold.

• Tanh (Hyperbolic Tangent): Outputs values between –1 and 1, providing zero-centered activations; it was often used in hidden layers before ReLU became popular.

Figure 2.2: Activation Functions
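The activation functions listed above take only a few lines each. In this sketch the sigmoid is split by the sign of its input so that math.exp never overflows, softmax subtracts the maximum before exponentiating (which leaves the result unchanged), and the step function assumes the convention that it outputs 1 once the input reaches the threshold.

```python
import math
from typing import List


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    # Split by sign so math.exp never receives a large positive argument
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def step(z: float, threshold: float = 0.0) -> float:
    # Assumed convention: output 1 when z reaches the threshold
    return 1.0 if z >= threshold else 0.0


def tanh(z: float) -> float:
    return math.tanh(z)


def softmax(zs: List[float]) -> List[float]:
    # Subtracting the maximum avoids overflow without changing the probabilities
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  relu={relu(z):.3f}  sigmoid={sigmoid(z):.3f}  "
          f"step={step(z):.0f}  tanh={tanh(z):.3f}")
print("softmax([1, 2, 3]) =", [round(p, 3) for p in softmax([1.0, 2.0, 3.0])])
```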

In addition to architectures and training techniques, deep learning frameworks such as TensorFlow, PyTorch, and Keras have significantly accelerated development by providing tools for model building, training, and deployment. These libraries abstract many low-level details and offer pre-built modules, making deep learning more accessible to researchers, engineers, and hobbyists alike.

In summary, neural networks form the computational backbone of deep learning. Through the stacking of layers, careful tuning of weights, and the use of powerful training algorithms, these models can learn to solve highly complex tasks across various domains. As data availability and computational power continue to grow, neural networks and deep learning will remain central to the advancement of artificial intelligence and intelligent systems.

2.4. Edge-AI vs. Cloud-AI

As artificial intelligence becomes increasingly integrated into devices, applications, and services, the underlying infrastructure powering AI workloads has become critically important. Traditionally, AI models were developed, trained, and deployed in the cloud, i.e. powerful remote data centers connected via the internet. However, with the explosive growth of connected devices, sensors, and real-time applications, there has been a significant shift toward processing AI tasks at the edge—close to where data is generated. This paradigm is known as Edge AI.

This section provides a comprehensive comparison between Edge AI and Cloud AI, exploring their architectures, use cases, trade-offs, and how they complement each other in modern AI systems. Understanding these paradigms is essential for building efficient, scalable, and responsive intelligent systems, especially in domains like IoT, robotics, autonomous vehicles, and smart cities.

Edge AI refers to the deployment of AI models on edge devices such as smartphones, microcontrollers, cameras, drones, and embedded systems. These devices process data locally, often using specialized hardware such as NPUs (Neural Processing Units), GPUs, FPGAs, or dedicated AI chips.

Key Characteristics

• On-device inference: AI computations occur on or near the device where data is generated.

• Low latency: Real-time or near-real-time decision-making.

• Offline capability: Works without continuous internet access.

• Resource constraints: Limited processing power, memory, and energy.

Examples

• Voice assistants on smartphones

• AI-powered security cameras detecting motion or faces

• Industrial sensors monitoring equipment health in real-time

Cloud AI refers to the use of centralized data centers to train, store, and serve AI models. It relies on high-performance computing infrastructure and massive datasets to develop and deploy complex AI services. Users typically access cloud AI services via APIs or platforms like Google Cloud AI, AWS SageMaker, or Microsoft Azure AI.

Key Characteristics

• Centralized processing: Heavy computation is offloaded to cloud servers.

• Scalability: Easily scales up to support large datasets and complex models.

• Model training and deployment: Ideal for developing and managing large-scale AI solutions.

• Continuous connectivity: Requires a reliable internet connection for inference.

Examples

• Cloud-based natural language processing services

• AI-powered search and recommendation engines

• Remote training and deployment of large deep learning models

The following table summarizes the architectural differences between the two paradigms:

Aspect | Edge AI | Cloud AI
Location of computation | On the device or local gateway | Remote cloud servers
Latency | Low (real-time) | Higher (network-dependent)
Connectivity | Operates offline or with intermittent internet | Requires a consistent internet connection
Power consumption | Optimized for low-power operation | High energy usage in data centers
Data privacy | High, as data remains local | Data sent over networks (privacy concerns)
Model complexity | Limited (due to hardware constraints) | Very high (leverages GPU clusters)

Both paradigms come with specific advantages and limitations:

Edge AI: Advantages

• Real-time performance: Critical for time-sensitive applications like autonomous driving and robotics.

• Reduced bandwidth usage: Less data needs to be transmitted to the cloud.

• Enhanced privacy and security: Sensitive data stays on the device.

• Offline functionality: Ideal for remote or unstable network environments.
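To put the bandwidth argument into numbers, the following sketch compares the uplink data rate needed to stream raw camera frames to the cloud with the rate needed to send only the detection results an edge device produces. The resolution, frame rate, event rate, and message size are illustrative assumptions, not measurements, and the function names are hypothetical.

```python
"""Back-of-the-envelope comparison of uplink data volume:
streaming raw camera frames to the cloud vs. sending only
detection events produced by on-device inference.
All figures are illustrative assumptions, not measurements."""


def raw_stream_bytes_per_s(width: int, height: int, fps: float,
                           bytes_per_pixel: int = 3) -> float:
    """Uncompressed RGB video rate in bytes per second."""
    return width * height * bytes_per_pixel * fps


def event_bytes_per_s(events_per_minute: float, bytes_per_event: int) -> float:
    """Rate when only small detection messages (e.g. JSON) are sent."""
    return events_per_minute * bytes_per_event / 60.0


if __name__ == "__main__":
    # Assumed: 640x480 RGB camera at 30 fps, uncompressed.
    cloud = raw_stream_bytes_per_s(640, 480, 30)
    # Assumed: 12 detection events per minute, about 300 bytes each.
    edge = event_bytes_per_s(12, 300)
    print(f"Cloud (raw frames): {cloud / 1e6:.1f} MB/s")
    print(f"Edge (events only): {edge:.0f} B/s")
    print(f"Reduction factor:   {cloud / edge:,.0f}x")
```

A real deployment would compress the video before uploading it, which narrows the gap considerably. The point of the sketch is only that transmitting results instead of raw data scales with the number of events rather than with the sensor's data rate.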

Edge AI: Limitations

• Limited resources: Constraints in processing power, memory, and storage.

• Model simplification: Requires compression, pruning, or quantization.

• Difficult updates: Firmware and model updates can be complex.
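Quantization is one of the most common of these simplifications. The sketch below illustrates the core idea of symmetric, per-tensor int8 quantization in plain Python: each 32-bit float weight is replaced by an 8-bit integer code plus one shared scale factor. The weight values are made up and the helper names are hypothetical; production toolchains typically also calibrate on sample data and quantize activations, not just weights.

```python
"""Minimal sketch of symmetric per-tensor int8 quantization.
Weights and helper names are illustrative, not from a real toolchain."""


def quantize_int8(weights):
    """Map floats to int8 codes using one symmetric scale for the tensor."""
    max_abs = max(abs(w) for w in weights)
    # The largest magnitude maps to +/-127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from the int8 codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.82, -1.27, 0.003, 0.5, -0.25]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 codes:", codes)
    print(f"scale = {scale:.5f}, max abs error = {max_err:.5f}")
    # Storage drops from 4 bytes (float32) to 1 byte per weight.
    print(f"size: {4 * len(weights)} B -> {len(weights)} B")
```

Because every value is rounded to the nearest code, the reconstruction error per weight is at most half the scale factor, which is why a single outlier weight (which inflates the scale) can noticeably hurt accuracy.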

Cloud AI: Advantages

• Scalable computation: Supports large-scale training and inference.

• Centralized updates: Easier to maintain and improve models.

• Data aggregation: Can leverage massive datasets for improved accuracy.

Cloud AI: Limitations

• Latency and reliability: Dependent on network speed and stability.

• Privacy risks: Sensitive data must be transmitted to external servers.

• Bandwidth costs: High data transmission rates can be expensive.
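The latency trade-off can be estimated with a simple budget: a cloud request pays for the upload and the network round trip before the (fast) server-side inference even starts, whereas on-device inference pays only for local computation. The sketch below compares both paths against the per-frame budget of a 30 fps video stream. All numbers (payload size, uplink speed, round-trip time, inference times) are assumptions chosen for illustration, the function names are hypothetical, and the download of the result is ignored.

```python
"""Rough end-to-end latency estimate for one camera frame.
All parameter values are illustrative assumptions, not benchmarks."""


def edge_latency_ms(inference_ms: float) -> float:
    """On-device path: only local inference time counts."""
    return inference_ms


def cloud_latency_ms(payload_bytes: int, uplink_mbit_s: float,
                     rtt_ms: float, inference_ms: float) -> float:
    """Cloud path: upload time + network round trip + server inference."""
    upload_ms = payload_bytes * 8 / (uplink_mbit_s * 1e6) * 1000
    return upload_ms + rtt_ms + inference_ms


if __name__ == "__main__":
    frame_budget_ms = 1000 / 30  # one frame at 30 fps, about 33.3 ms

    edge = edge_latency_ms(inference_ms=25.0)  # assumed accelerator speed
    cloud = cloud_latency_ms(
        payload_bytes=100_000,  # assumed ~100 kB JPEG per frame
        uplink_mbit_s=10.0,     # assumed uplink bandwidth
        rtt_ms=60.0,            # assumed network round-trip time
        inference_ms=5.0,       # assumed server GPU inference time
    )
    for name, t in (("edge", edge), ("cloud", cloud)):
        verdict = "meets" if t <= frame_budget_ms else "misses"
        print(f"{name:5s}: {t:6.1f} ms ({verdict} the {frame_budget_ms:.1f} ms budget)")
```

With these assumed values, the cloud path is dominated by upload time and round trip rather than by inference. A faster network shrinks that gap but cannot remove the round trip entirely.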

The following overview shows the most important use cases and applications:

Domain | Edge AI Applications | Cloud AI Applications
Healthcare | Wearable health monitors, portable diagnostics | Medical image analysis, population-level insights
Manufacturing | Predictive maintenance, visual inspection | Production optimization, supply chain analytics
Retail | Smart shelves, autonomous checkout | Customer analytics, recommendation engines
Transportation | Autonomous vehicles, traffic signal control | Fleet management, traffic prediction
Agriculture | In-field crop monitoring, pest detection | Weather analysis, large-scale yield prediction
Smart Cities | Surveillance cameras, air quality sensors | City-wide analytics, infrastructure planning

Modern AI systems increasingly adopt a hybrid approach, combining the strengths of both edge and cloud. For example:

• Federated learning: A decentralized learning technique where models are trained locally on edge devices and only model updates (not raw data) are shared with a central server.

• Split computing: The inference task is divided between the edge and the cloud to balance latency and accuracy.

These approaches offer a promising path forward in building scalable, private, and efficient AI systems.
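Federated learning can be illustrated with a toy version of the Federated Averaging (FedAvg) scheme. Each simulated edge device runs a few gradient-descent steps on its own private data for a one-parameter model y = w * x and returns only the updated weight; the server averages these weights in proportion to each device's sample count. The synthetic data, learning rate, and function names are assumptions for illustration; real systems deal with much larger models, unreliable clients, and communication costs.

```python
"""Toy federated averaging for a one-parameter model y = w * x.
Raw samples never leave the simulated devices; only weights are shared.
Data, hyperparameters, and names are illustrative assumptions."""

import random


def local_train(w, data, lr=0.1, epochs=10):
    """Gradient descent on mean squared error, executed on the device."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(updates):
    """Server step: average (weight, n_samples) pairs, weighted by n_samples."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def simulate(rounds=10, seed=0, true_w=3.0):
    rng = random.Random(seed)
    # Three devices holding different amounts of synthetic private data.
    clients = []
    for size in (20, 50, 30):
        xs = [rng.uniform(-1, 1) for _ in range(size)]
        clients.append([(x, true_w * x + rng.gauss(0, 0.1)) for x in xs])

    global_w = 0.0
    for r in range(1, rounds + 1):
        # Every device starts from the current global model ...
        updates = [(local_train(global_w, data), len(data)) for data in clients]
        # ... and the server sees only the returned weights.
        global_w = federated_average(updates)
        print(f"round {r:2d}: global w = {global_w:.3f}")
    return global_w


if __name__ == "__main__":
    simulate()
```

Weighting by sample count keeps a device with little data from pulling the global model as strongly as a device with many samples.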

The distinction between Edge AI and Cloud AI is expected to blur further as:

• Edge hardware becomes more powerful

• AI model optimization techniques improve

• 5G and edge-cloud infrastructure reduce latency and enable seamless handoffs

• Decentralized and privacy-preserving AI frameworks such as federated learning and differential privacy gain traction

Additionally, green AI initiatives are promoting energy-efficient AI models, making edge deployment not only practical but also environmentally responsible.

Edge AI and Cloud AI are not mutually exclusive; they serve complementary roles in the AI ecosystem. Cloud AI provides the heavy lifting needed for training and large-scale deployment, while Edge AI brings speed, privacy, and local intelligence to the device level. Choosing between them—or designing a hybrid solution—depends on application requirements such as latency, data sensitivity, computational demand, and infrastructure availability.
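The selection criteria named above can be condensed into a few rules of thumb. The following sketch is a deliberately simplified decision helper, not an established method: the field names, the assumed 100 ms cloud round trip, and the rules themselves are hypothetical and would need tuning for a real project.

```python
"""Simplified rule-of-thumb helper for choosing where to run inference.
Thresholds and rules are illustrative assumptions only."""

from dataclasses import dataclass


@dataclass
class Requirements:
    latency_budget_ms: float     # maximum acceptable response time
    data_is_sensitive: bool      # e.g. camera images of people, health data
    model_fits_on_device: bool   # after quantization/pruning
    reliable_network: bool       # is stable connectivity available?
    cloud_rtt_ms: float = 100.0  # assumed typical cloud round trip


def suggest_placement(req: Requirements) -> str:
    """Return 'edge', 'hybrid' or 'cloud'."""
    needs_local = (
        req.latency_budget_ms < req.cloud_rtt_ms  # cloud would be too slow
        or req.data_is_sensitive                   # data should stay local
        or not req.reliable_network                # must work offline
    )
    if needs_local:
        # If the full model is too large for the device, fall back to a
        # hybrid design (e.g. a reduced local model or split computing).
        return "edge" if req.model_fits_on_device else "hybrid"
    return "cloud"


if __name__ == "__main__":
    doorbell = Requirements(50, True, True, False)
    analytics = Requirements(5_000, False, False, True)
    print("smart doorbell:", suggest_placement(doorbell))
    print("monthly analytics:", suggest_placement(analytics))
```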

As AI continues to expand into every facet of our lives, understanding these two paradigms is crucial for developers, system architects, and decision-makers aiming to build intelligent, responsive, and responsible AI systems.

Edge AI Made Practical

AI Projects for the Raspberry Pi with the AI HAT+

Edge AI is transforming everyday devices by putting intelligence where it matters most: directly inside the hardware. With on-device inference, a camera can recognize a visitor instantly, a phone can translate speech without streaming audio to the cloud, and a wearable can detect anomalies in real time—fast, private, and reliable even when the network disappears.

This book is your practical guide to building exactly those kinds of systems with the Raspberry Pi AI HAT+ and the Hailo-8L accelerator. You’ll start with clear foundations: core AI and machine-learning concepts, how neural networks work, and what truly distinguishes Edge AI from cloud AI—plus an honest look at ethical considerations and future impacts.

Then it’s straight to hands-on physical computing. Step by step, you’ll set up Raspberry Pi OS, power and cooling, and develop in Python using the Thonny IDE. You’ll learn GPIO basics with lights and servos, mount the AI HAT+ hardware, install and verify the Hailo software stack, and connect the right camera: official modules or USB webcams, even multiple cameras.

From your first pipeline to real projects, you’ll run person detection, pose estimation, segmentation, and depth estimation, then level up with YOLO object detection: smart alerts, guest counters, and custom extensions. You’ll even connect vision to motion by combining gesture recognition with servo-driven mechanisms, including a robotic arm.

With troubleshooting tips, hardware essentials, and a practical Python refresher, this book turns Edge AI from buzzword into buildable reality.

Dr. Günter Spanner has been active in research and development for several major corporations for more than 30 years. Project and technology management in the fields of biosensors and physical measurement technologies form the core of his work. In addition to his role as a lecturer at ETH Zurich and EU|FH, the author has successfully published technical articles and books on a wide range of topics – from electronics to measurement and sensor technology, all the way to gravitational-wave physics. His contributions to the frequency stabilization of lasers found application in the field of direct gravitational-wave detection, which was awarded the Nobel Prize in Physics in 2017.