Building Information Modelling (BIM) technology for Architecture, Engineering and Construction

Twinmotion: a new era

Reshaping the architect-friendly viz tool

Freedom from files: AEC firms seek data ownership

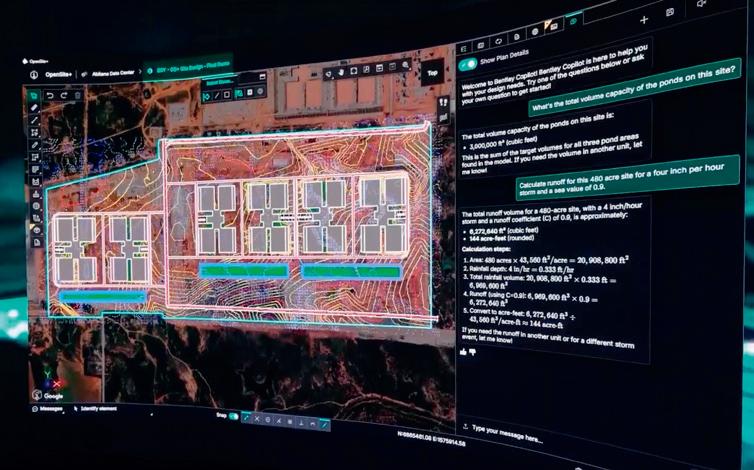

NXT BLD 2026: Visionary event returns 13-14 May

An ecosystem of specialised agents

Bentley frames AI future

editorial

MANAGING EDITOR

GREG CORKE greg@x3dmedia.com

CONSULTING EDITOR

MARTYN DAY martyn@x3dmedia.com

CONSULTING EDITOR

STEPHEN HOLMES stephen@x3dmedia.com

advertising

GROUP MEDIA DIRECTOR

TONY BAKSH tony@x3dmedia.com

ADVERTISING MANAGER

STEVE KING steve@x3dmedia.com

U.S. SALES & MARKETING DIRECTOR

DENISE GREAVES denise@x3dmedia.com

subscriptions

MANAGER

ALAN CLEVELAND alan@x3dmedia.com

accounts

CHARLOTTE TAIBI charlotte@x3dmedia.com

FINANCIAL CONTROLLER

SAMANTHA TODESCATO-RUTLAND sam@chalfen.com

AEC Magazine is available FREE to qualifying individuals. To ensure you receive your regular copy please register online at www.aecmag.com

about

AEC Magazine is published bi-monthly by X3DMedia Ltd 19 Leyden Street London, E1 7LE UK

T. +44 (0)20 3355 7310

F. +44 (0)20 3355 7319

© 2025 X3DMedia Ltd

All rights reserved. Reproduction in whole or part without prior permission from the publisher is prohibited. All trademarks acknowledged. Opinions expressed in articles are those of the author and not of X3DMedia. X3DMedia cannot accept responsibility for errors in articles or advertisements within the magazine.

Register your details to ensure you get a regular copy register.aecmag.com

Aecom acquires AI start-up for $390m, Trimble brings collaboration into SketchUp, KREOD to bring ‘aerospace-grade precision’ to AECO, plus lots more

Agentic AI to be embedded across Trimble’s technology stack and within the workflows of customers and partners

City of Raleigh using AI to gain insight into traffic, Tektome extracts knowledge from past projects, Viktor to simplify coding for automation, plus lots more

We preview some of the promising new AI tools for the “Figma of 2D CAD”

AEC Magazine’s new directory will help you find the AI tools aimed at your particular pain point or specific function within the AEC concept-to-build process

When transitioning away from proprietary files, some AEC firms are seeking out cloud-based databases that promise genuine ownership of data

Make a diary date for 13-14 May 2026, when we will be holding the tenth edition of NXT BLD, incorporating NXT DEV

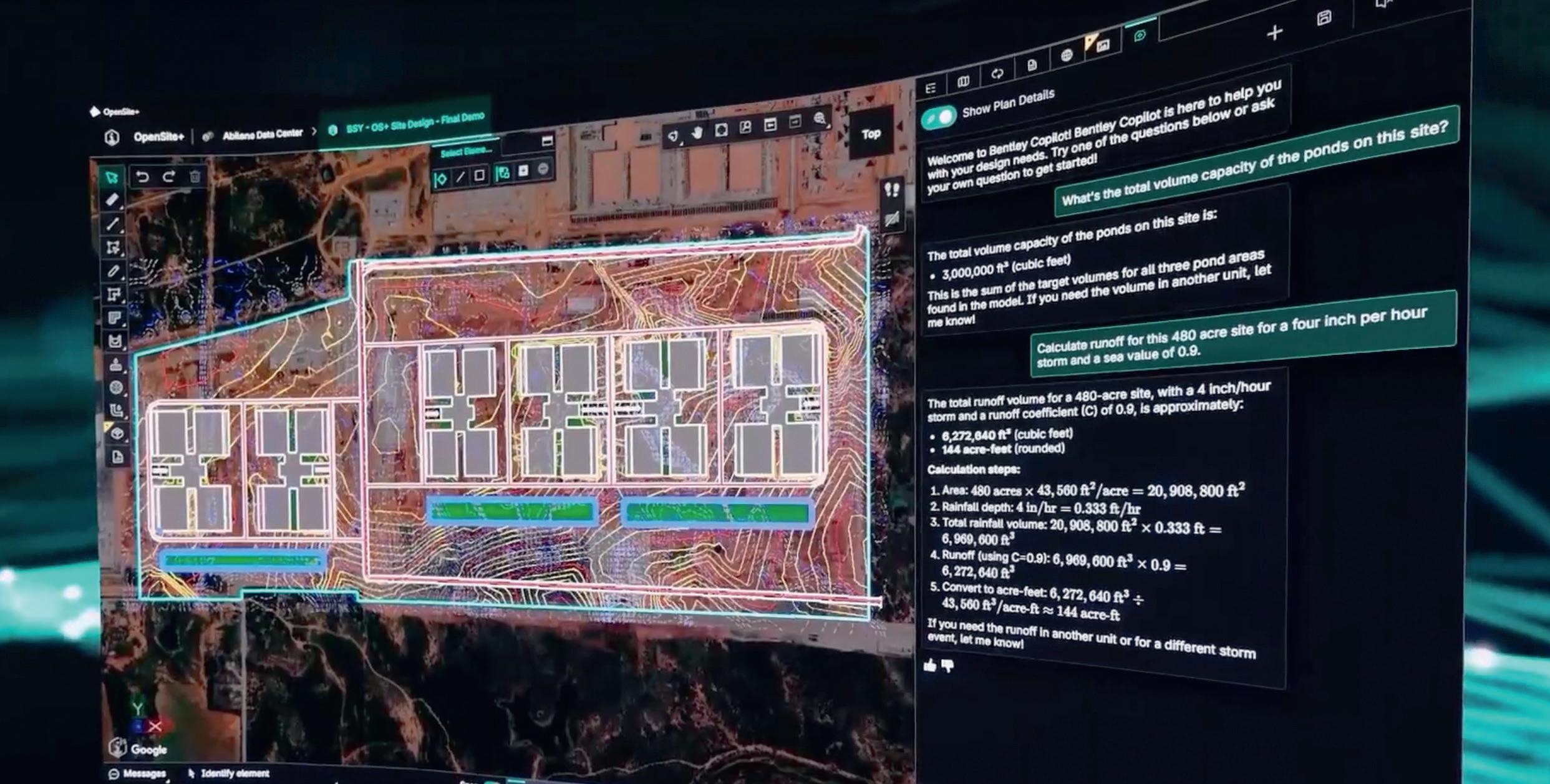

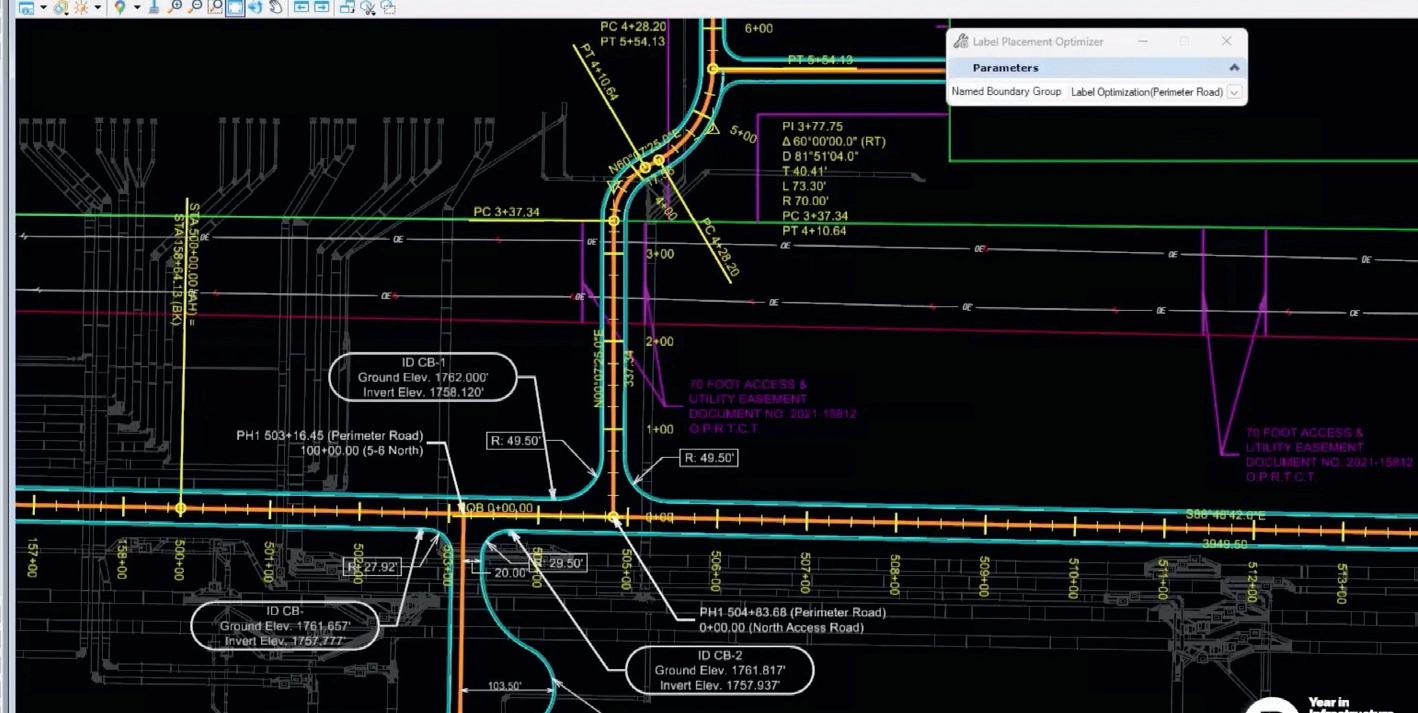

From civil site design to construction planning, Bentley is embedding AI across more of its tools, while giving firms full control over outcomes and data

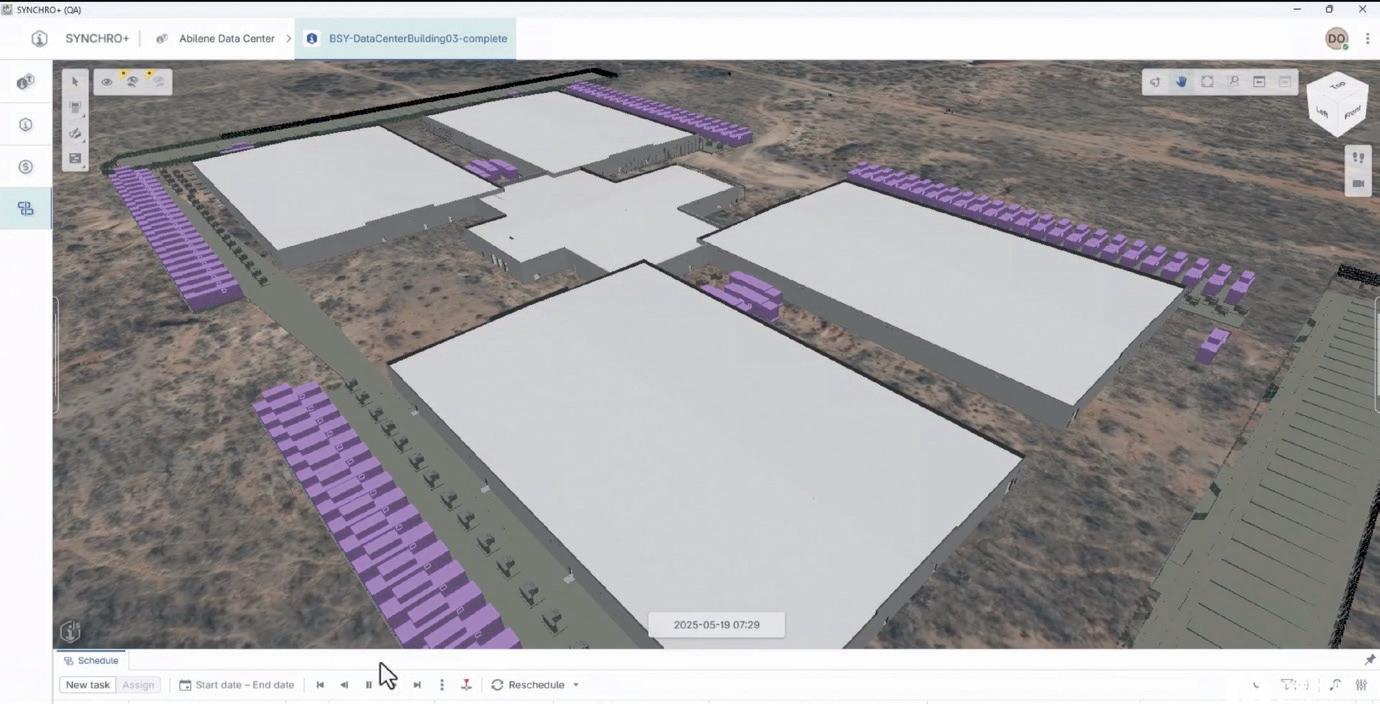

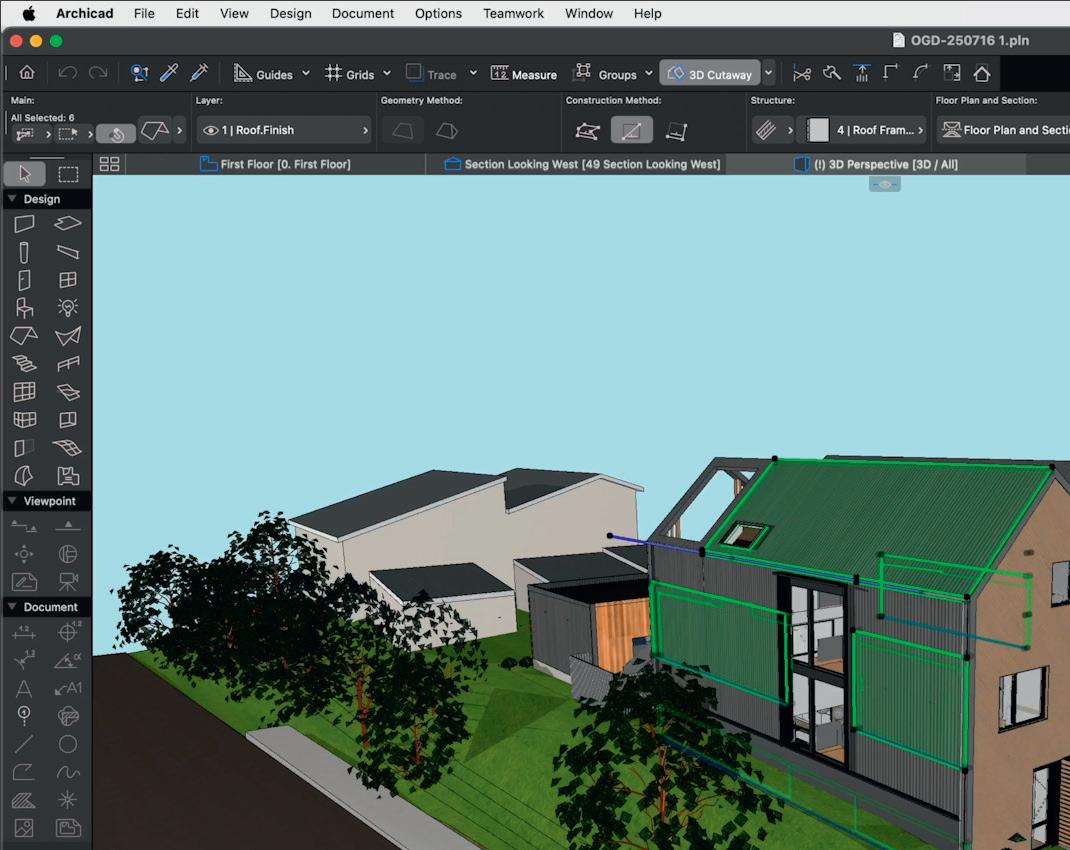

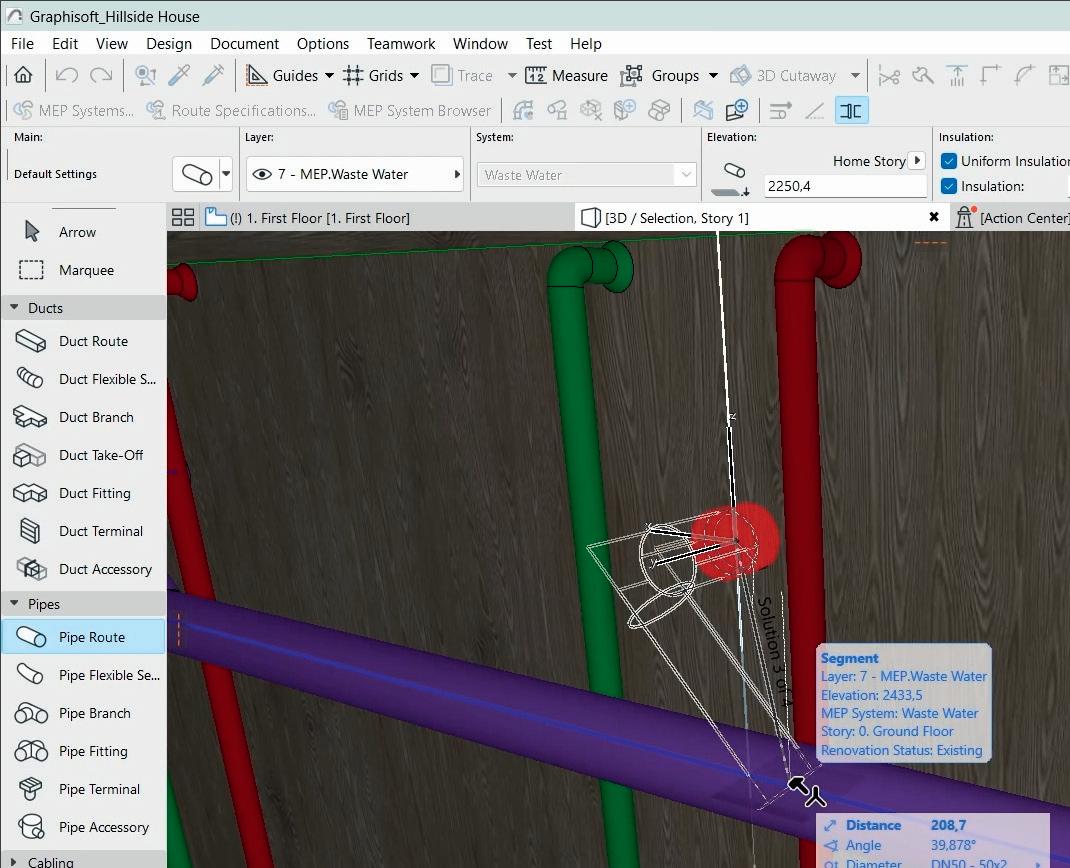

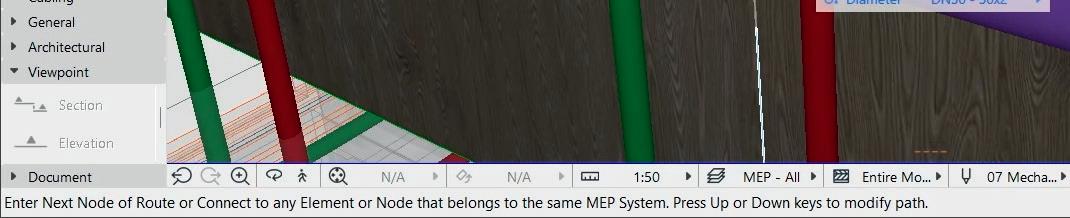

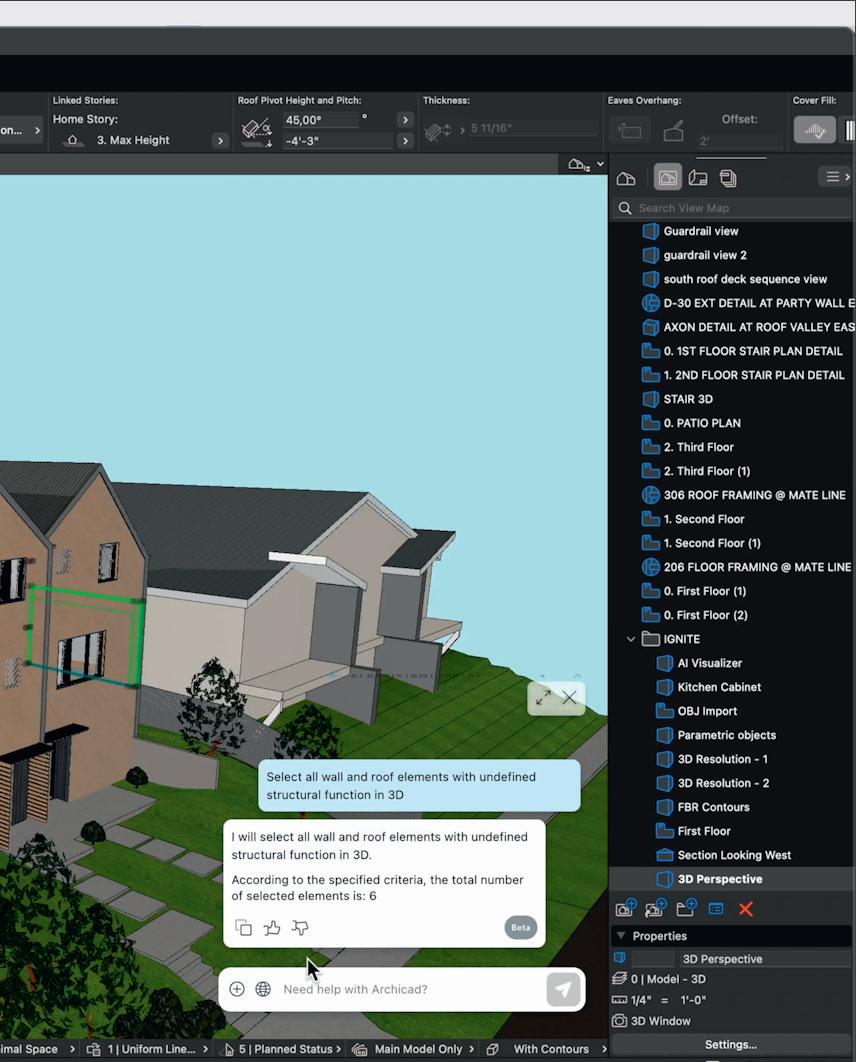

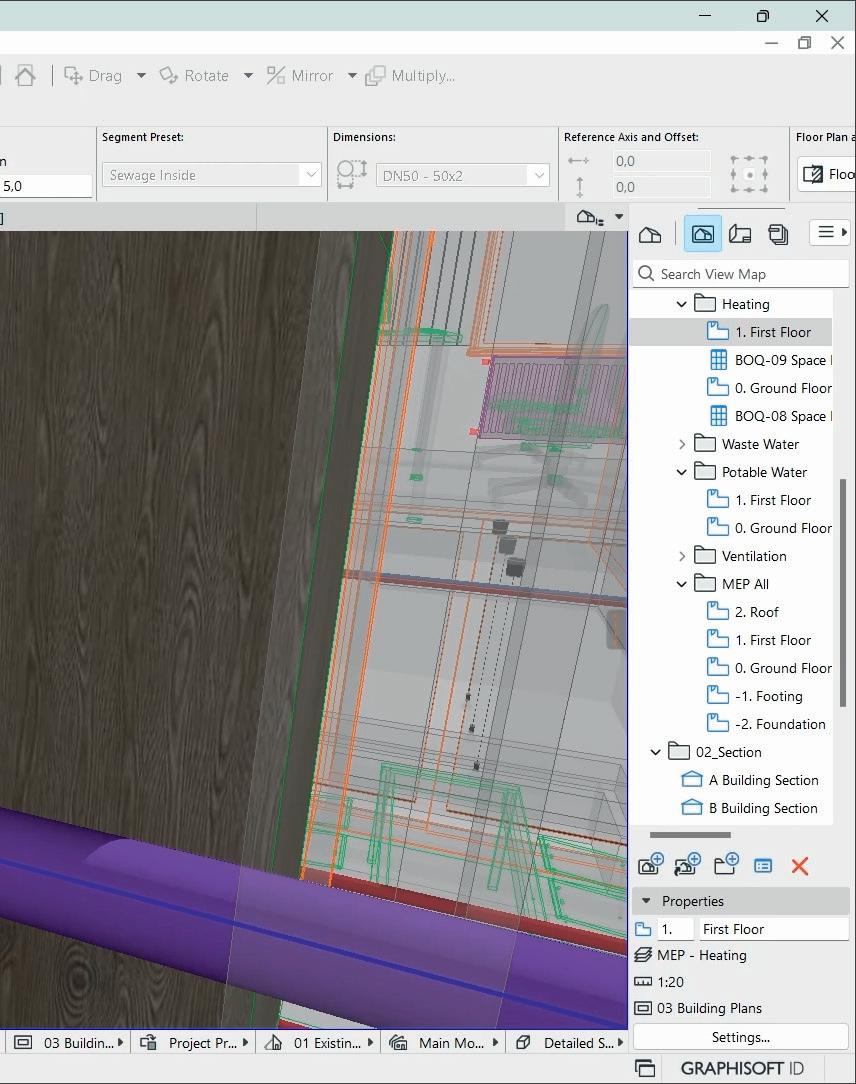

Company executives and customers shared Graphisoft’s latest technology developments and showcased some impressive project work

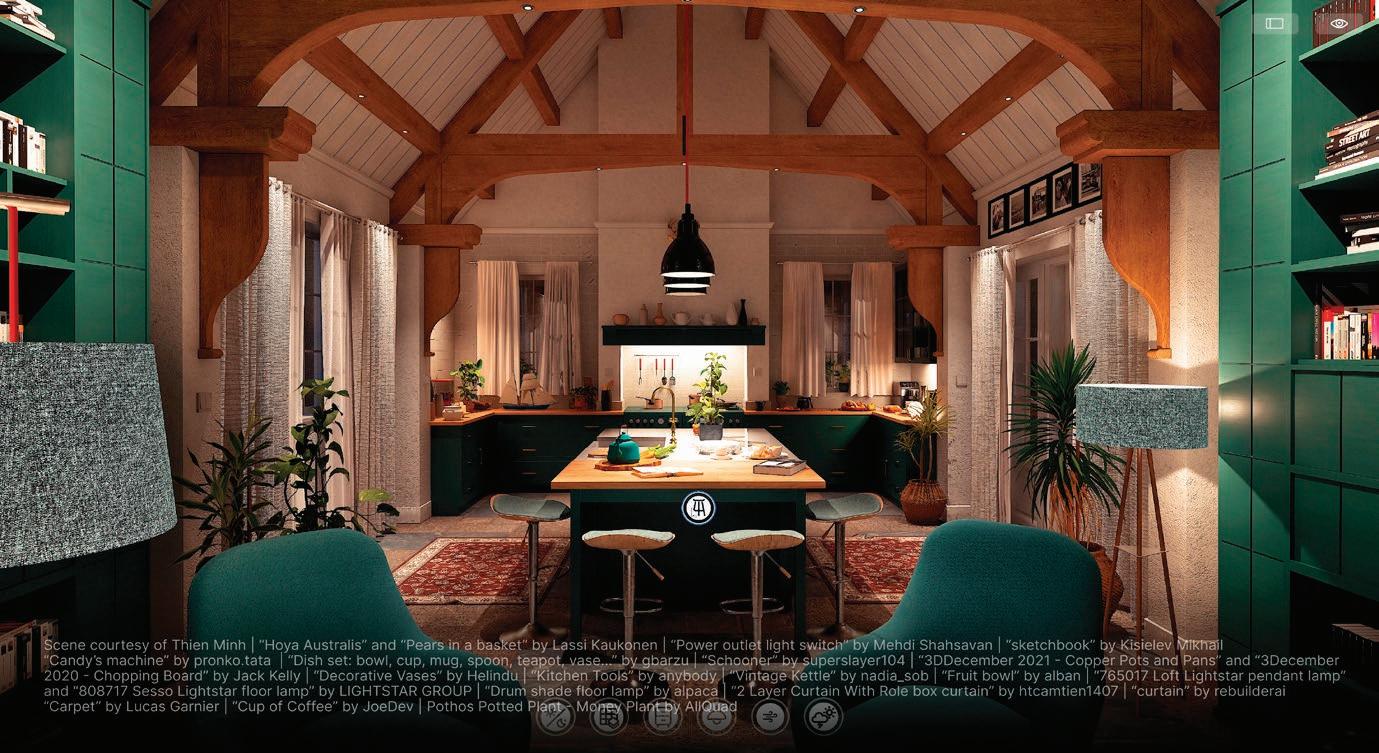

Epic Games is reshaping its arch viz tool, aligning it more closely with Unreal Engine while enhancing geometry, lighting, materials, and interactivity


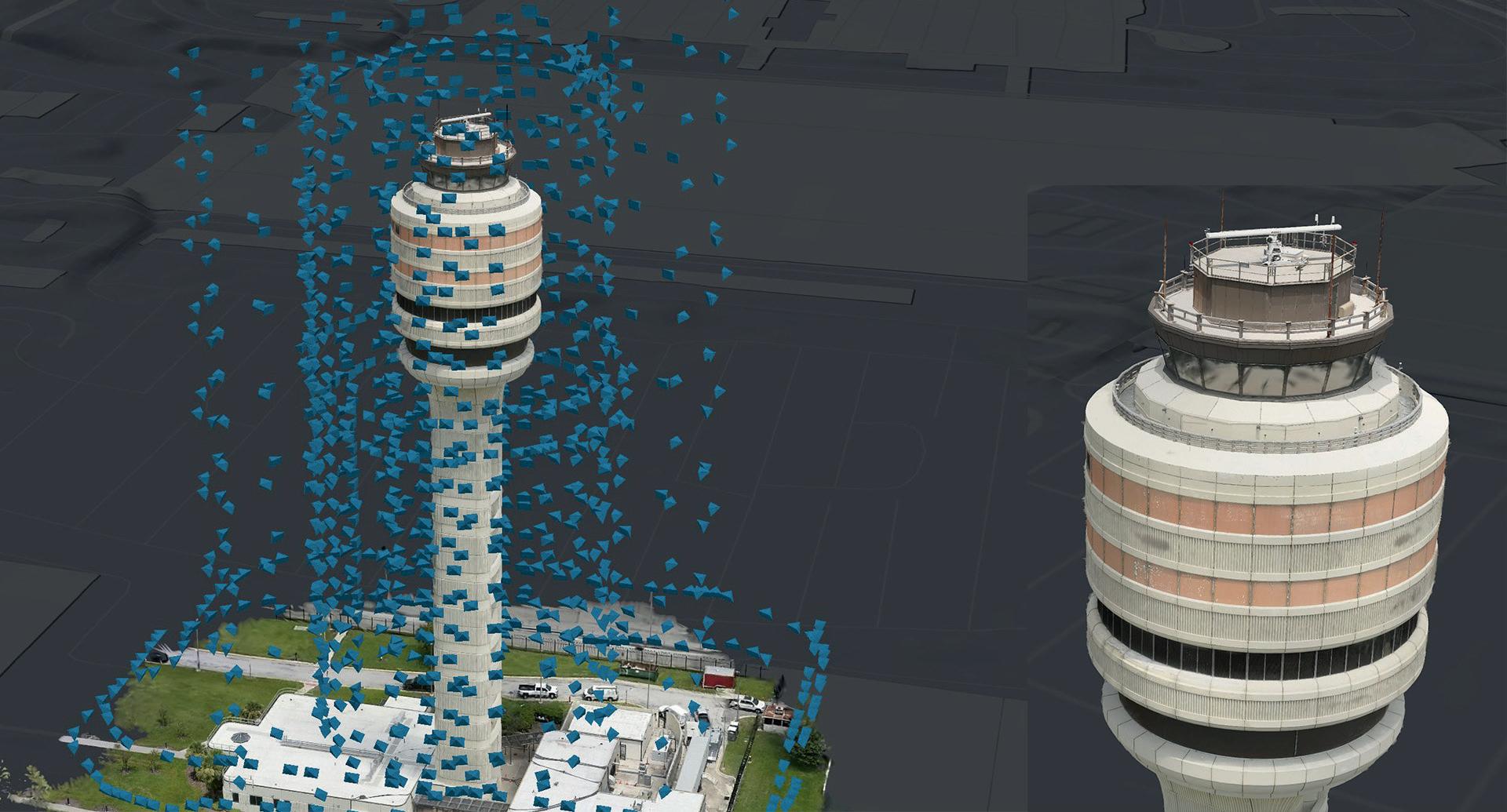

Reality capture and surveying have undergone considerable technological changes in recent years. Now there’s a new kid on the block, but what does it mean for AEC workflows?

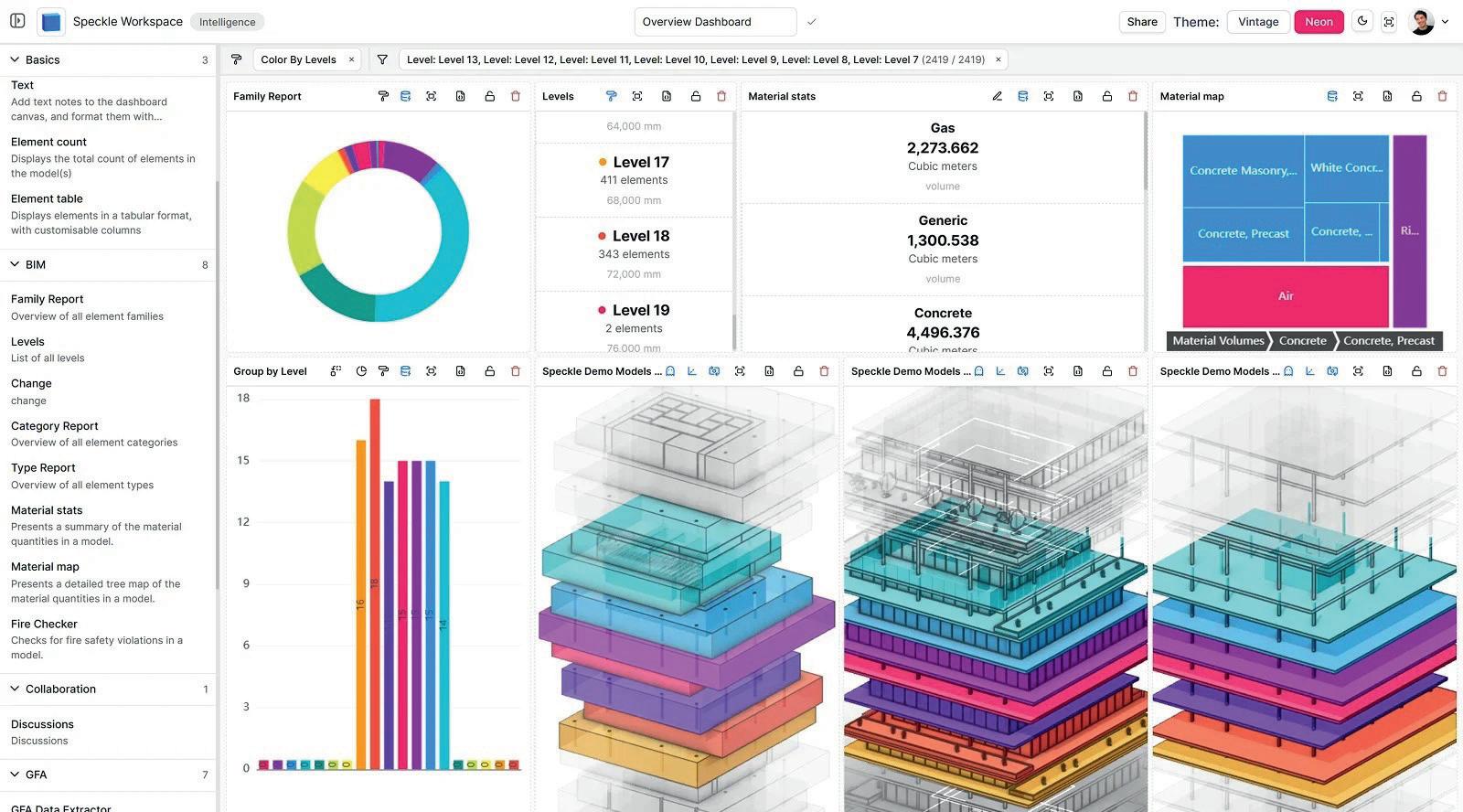

We report from Speckle’s annual meet-up, where the open-source data platform promises AEC users a chance to break free from proprietary lock-ins

Last month’s cover story on the trend in EULA metastasis certainly provoked a wide range of responses. Martyn Day provides an update

Global infrastructure engineering giant Aecom has acquired Norwegian AI startup Consigli, in a deal worth 4 billion NOK (approx US $390m), according to Norwegian business newspaper Dagens Næringsliv (DN). Prior to the acquisition, Aecom and Consigli had worked together on several projects. Consigli founder and CEO Janne Aas-Jakobsen will take up the role of head of AI engineering at Aecom.

The acquisition marks a notable shift in the AEC software landscape, where startups are typically snapped up by established software vendors rather than their customers. It suggests that Aecom views Consigli’s technology — and its engineering talent — as a strategic differentiator.

Consigli brands itself as “The Autonomous Engineer”, an AI agent for space analysis, unit optimisation, automated MEP loadings, level 3 modelling, report generation, plant room optimisation, tender documents de-risking, O&M docs and more. The company claims its technology gives reductions in engineering time of up to 90%.

It is not yet clear if Consigli will remain a commercial entity or will only be for Aecom’s internal use.

For Aecom, the deal addresses a growing battleground: the convergence of construction services and embedded predictive software. With project margins under pressure and clients demanding real-time data-driven insights, owning a targeted AI layer would offer a differentiator.

From Consigli’s perspective, being part of a global infrastructure services business opens access to large-scale projects and the global engineering-delivery pipeline. And, if DN’s reported figure of $390 million is correct, it’s the exit many start-ups in this space can only dream of.

The acquisition has raised eyebrows across the AEC industry. Software vendors weighing potential startup targets may now feel added pressure, and losing one to a customer could spark a frenzied wave of negotiations. Meanwhile, other AECO firms may feel compelled to behave more like tech companies — or risk being pushed into commodity territory. Consigli was approached for comment but did not respond by the time of publication.

■ www.consigli.ai

Autodesk Estimate, a new cloud-based estimating solution that connects 2D and 3D takeoffs to costs, materials, and labour calculations, has launched.

Part of Autodesk Construction Cloud, Autodesk Estimate aims to help contractors produce more accurate estimates and proposals, eliminating the need for manual merges, juggling spreadsheets, and switching between disconnected tools.

■ www.construction.autodesk.com

Allplan’s new 2026 product lineup — developed for architects, engineers, detailers, fabricators, and construction professionals — puts a strong emphasis on automation, collaboration, and sustainability.

To expand its sustainable design capabilities, the new release features an integration with Preoptima Concept, a third party tool for whole-life carbon assessments (WLCAs).

Meanwhile, with GeoPackage DataExchange, urban planners and designers can integrate GIS data. According to Allplan, providing access to accurate site context supports better decisions on zoning, infrastructure, and environmental impact.

Elsewhere, a new AI Assistant is said to improve planning efficiency and decision making by providing guidance on Allplan workflows, AEC standards, and best practices, while offering smart suggestions for tasks, including coding support.

■ www.allplan.com

ERB Rail JV PS, the joint venture leading the construction of the Rail Baltica mainline in Latvia, one of the largest infrastructure projects in Europe, has chosen Vektor.io as its platform for managing and visualising infrastructure data.

ERB will use the platform to bring together 2D plans, BIM models, GIS data, and other reference materials spread across many different formats and systems, directly in the browser, accessible both in the office and on site.

■ www.vektor.io

CivilSense ROI Calculator is a new digital tool designed to help municipalities and utilities make informed decisions by revealing hidden water losses within the supply network and demonstrating the financial benefits of building sustainable, resilient water systems ■ www.oldcastleinfrastructure.com

A new report by PlanRadar suggests inconsistent QA/QC standards are eroding margins, driving rework, and fuelling disputes across construction projects. According to the study, companies rank QA/QC among their top priorities, yet 77% still report inconsistent documentation that varies across projects, sites and trades ■ www.planradar.com

ABB has launched ABB Ability BuildingPro, a platform designed to connect, manage, and optimise building operations. Acting as a ‘central intelligence hub’, it unifies data from building systems to improve performance, reduce energy use, and enhance occupant experience ■ www.abb.com

Arup has designed a Net Zero Carbon in operation (NZCio) Welsh school using performance modelling technology from climate tech firm, IES. The software will help reduce carbon emissions at the Mynydd Isa Campus by over 100 tonnes per year ■ www.iesve.com

Avail has launched new add-ons for AutoCAD and Civil 3D that extend Avail’s content management solution directly into the AutoCAD and Civil 3D environments, helping firms organise, visualise, and reuse their CAD content, including block libraries ■ www.getavail.com/autocad

Schneider Electric has launched EcoStruxure Foresight Operation, an ‘open and scalable, intelligent platform powered by AI’ designed to unify energy, power and building systems in facilities such as data centres, hospitals and pharmaceutical campuses ■ www.se.com

Trimble has built a new suite of collaboration tools directly into the heart of SketchUp for Desktop, alongside improvements to documentation, site context, and viz.

The 3D modelling software now includes private sharing control, in-app commenting, and real-time viewing, allowing designers to collect feedback from clients and stakeholders without leaving the SketchUp environment. Designers can securely share models with stakeholders, controlling who can view and comment.

Feedback is attached directly to 3D geometry, ensuring comments are linked to the right part of the model.

“Great designs are shaped by conversation, iteration and shared insight,” said Sandra Winstead, senior director of product management, architecture and design at Trimble. “Rather than jumping between email threads or third-party tools to hold conversations, collaborate and make design decisions, we’ve built collaboration directly into SketchUp.”

■ www.sketchup.com

HP has enhanced its construction management platform, HP Build Workspace, introducing mobile-enabled scanning and AI-powered vectorisation directly from HP DesignJet multifunction printers.

HP AI Vectorization enables the conversion of raster images into ‘clean, editable’ vector drawings suitable for CAD applications.

The multi-layered AI Vectorisation engine, which is trained on real architectural and construction plans, also includes object recognition so it can identify architectural elements such as doors, windows, text, and dashes.

Currently, the processing is done in the cloud but next year users will also be able to run jobs locally on HP Z Workstations, such as the HP Z2 Mini. Meanwhile, through HP Click Solutions integration, AEC professionals can also ‘seamlessly print’ documents from HP Build Workspace directly to HP DesignJet printers.

HP has also launched a new large format printer, the HP DesignJet T870 (pictured left), which is billed as a compact, versatile 24-inch device that combines high-quality output with sustainable design. ■ www.hp.com

Chaos has released Chaos Vantage 3, a major update to its visualisation platform that allows AEC professionals to explore arch viz scenes in real time complete with real-time ray tracing.

Headline features include support for Gaussian splats, enabling users to place their projects directly into lifelike environments, USD and MaterialX for asset exchange across varied pipelines, and access to the Chaos AI Material Generator, to give AEC users precise control over the look of a scene.

With Vantage 3, AEC users can now make the most of Gaussian splats that are part of their V-Ray Scene files. Gaussian splatting allows the real world to be captured as detailed 3D data, quickly turning photos or scans of objects, streets or entire neighbourhoods into editable 3D scenes. Architects and designers can then place their projects directly into lifelike environments, creating an immediate sense of scale and context.

■ www.chaos.com/vantage

Nemetschek Group – the AECO software developer whose brands include Graphisoft, Vectorworks, Allplan and Bluebeam – and Takenaka, one of Japan’s largest construction companies, have signed a Memorandum of Understanding (MoU) to advance digital transformation and AI-driven solutions in construction.

The MoU initiates a strategic partnership to develop and pilot AI-assisted, cloud-based, and open digital platforms that streamline and enhance collaborative workflows across planning, design, construction, and operation processes.

Key areas outlined within the agreement include a commitment to best practice exchange through regular knowledge-sharing sessions, methodologies, and operational insights.

Nemetschek and Takenaka will also focus on joint AI and digital platform innovation, working together to identify, prioritise, and develop cloud-based digital and AI solutions for the AECO sector.

Secure data sharing and validation form another cornerstone of the agreement, with governance models and technical safeguards established to enable data-driven transformation. Both parties also reaffirmed their commitment to data protection and compliance.

“This partnership with Takenaka, a true leader with deep expertise in the construction industry, is a pivotal step,” said Marc Nezet, chief strategy officer at the Nemetschek Group. “By combining their extensive, practical know-how with our advanced digital and AI capabilities, we are co-creating a more efficient, sustainable, and data-driven future for the entire AEC/O industry.”

■ www.nemetschek.com

OpenSpace has acquired Disperse, the company behind the construction progress tracking technology that powers OpenSpace Progress Tracking, which helps construction teams identify productivity issues earlier in projects.

The system combines 360° jobsite imagery, computer vision, and “expert human verification” to provide an objective, trusted, and detailed view of what has been built, and what hasn’t.

“Disperse was built to give construction teams a trustworthy, objective picture of progress,” said Olli Liukkaala, CEO of Disperse. “By joining OpenSpace, we can deliver that clarity at unprecedented speed and scale, and bring even more value to GCs, owners, and specialty contractors on projects of every size.”

OpenSpace Progress Tracking is part of OpenSpace’s Visual Intelligence Platform.

■ www.openspace.ai

Scia has released Scia Engineer 2026, a major update to its multi-material structural analysis and design software.

Headline enhancements include new tools for designing structures subject to mobile loads, full compliance with 2nd generation Eurocodes, and automated wind load generation in compliance with the latest US design code ASCE 7-22.

Other new features include “accurate and economical” design of structures subjected to significant torsion and new tools to help eliminate vibrations due to human activity.

■ www.scia.net/scia-engineer

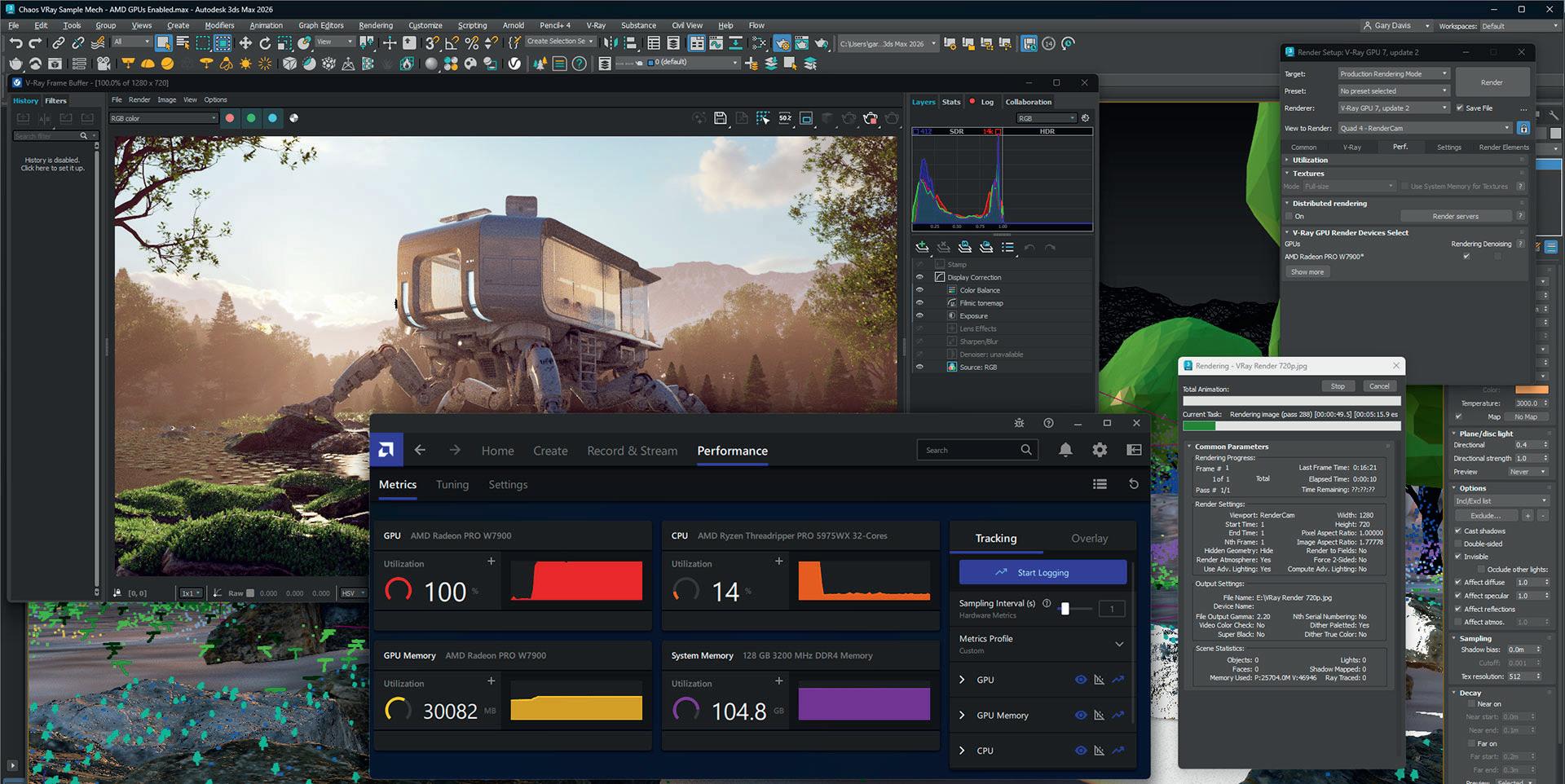

Chaos V-Ray will soon support AMD GPUs, so users of the photorealistic rendering software can choose from a wider range of graphics hardware including the AMD Radeon Pro W7000 series and the AMD Ryzen AI Max Pro processor that has an integrated Radeon GPU.

Until now, V-Ray’s GPU renderer has been limited to Nvidia RTX GPUs via the CUDA platform, while its CPU renderer has long worked with processors from both Intel and AMD.

Chaos plans to roll out the changes publicly in every edition of V-Ray, including those for 3ds Max, SketchUp, Revit, Rhino, Maya, and Blender.

At Autodesk University 2025, both Dell and HP showcased V-Ray GPU running on AMD GPUs – Dell on a desktop workstation with a discrete AMD Radeon Pro W7600 GPU and HP on an HP ZBook Ultra G1a with the new AMD Ryzen AI Max+ 395 processor, where up to 96 GB of the 128 GB unified memory can be allocated as VRAM.

“[With the AMD Ryzen AI Max+ 395] you can load massive scenes without having to worry so much about memory limitations,” said Vladimir Koylazov, head of innovation, Chaos. “We have a massive USD scene that we use for testing, and it was really nice to see it actually being rendered on an AMD [processor]. It wouldn’t be possible on [most] discrete GPUs, because they don’t normally have that much memory.”

■ www.amd.com ■ www.chaos.com

ProjectFiles from Part3 is a new construction drawing and documentation management system for architects designed to help ensure the right drawings are always accessible on site, in real time, to everyone that needs them.

According to the company, unlike other tools that were built for contractors and retrofitted for everyone else, ProjectFiles was designed specifically for architects.

ProjectFiles is a key element of Part3’s broader construction administration platform, and also connects drawings to the day-to-day management of submittals, RFIs, change documents, instructions, and field reports.

Automatic version tracking helps ensure the entire team is working from the most up-to-date drawings and documents. According to Part3, it’s designed to overcome problems such as walking onto site and finding contractors working from outdated drawings, or wasting time hunting through folders trying to find the current structural set before an RFI deadline.

The software also features AI-assisted drawing detection, where files are automatically tagged with the correct drawing numbers, titles, and disciplines.

■ www.part3.io

Jacobs has introduced Flood Platform, a cloud-hosted hub for flood modelling that is designed to support the planning and delivery of critical flood infrastructure programs.

Flood Platform is designed to help firms overcome the challenge of managing and interpreting large volumes of data, especially as flooding and extreme weather events become more frequent and severe.

The subscription-based offering, built on Microsoft Azure technology, standardises how users manage, view and analyse flood-related data, and acts as a central location for data, simulations and collaboration.

■ www.floodplatform.com

Amulet Hotkey has updated its CoreStation HX2000 datacentre remote workstation with a new Intel Core Ultra 9 285H processor option, delivering higher clock speeds and built-in NPU AI acceleration.

The CoreStation HX2000 is built around a 5U rack mounted enclosure that can accommodate up to 12 single-width workstation nodes that can be removed, replaced, or upgraded.

Each node is accessed by a single user over a 1:1 connection and can be configured with a choice of discrete Nvidia RTX MXM pro laptop GPUs.

Amulet Hotkey is also developing a new CoreStation HX3000, which will feature full Intel Core desktop processors and low-profile Nvidia RTX and Intel Arc Pro desktop GPUs.

■ www.amulethotkey.com

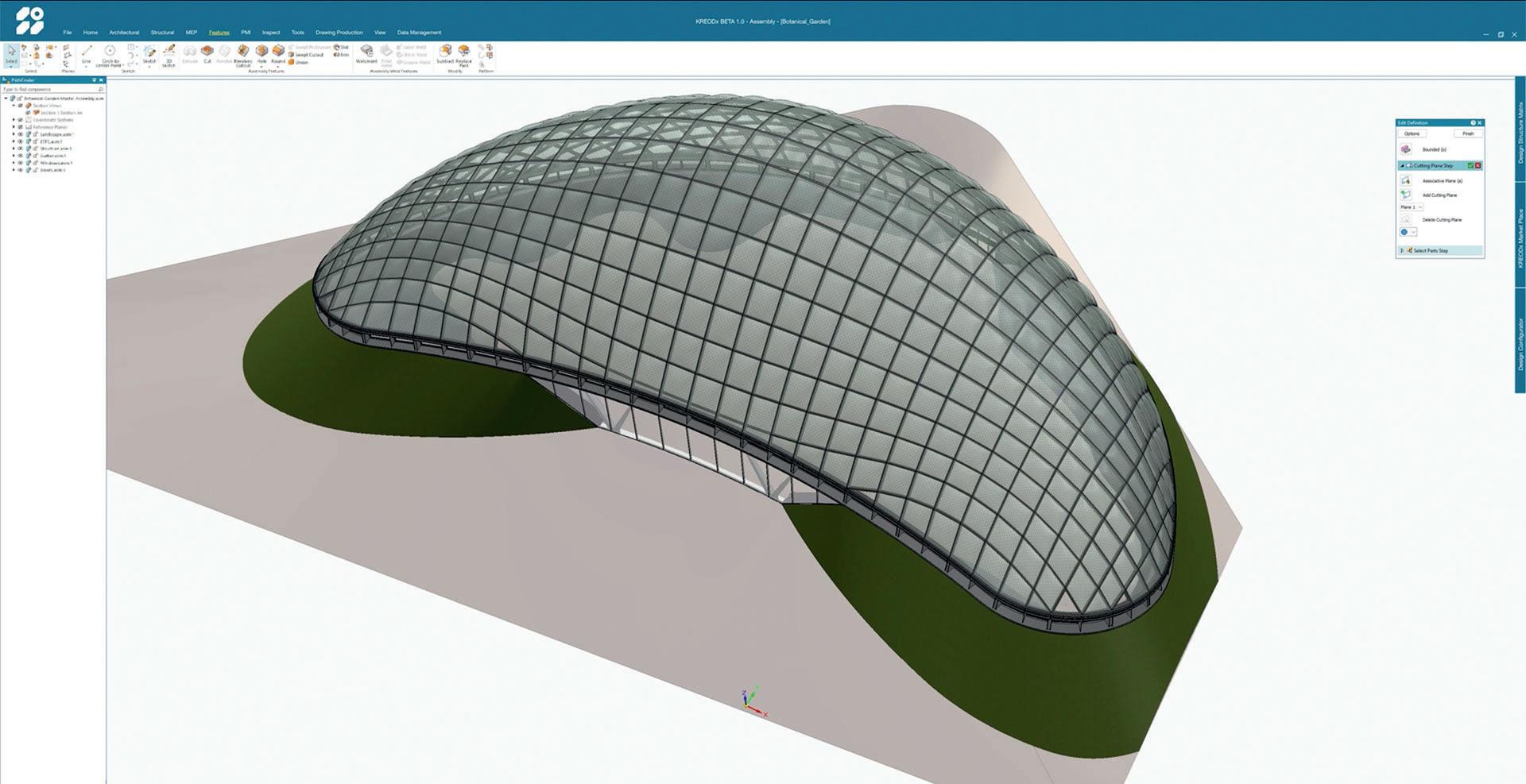

London-based KREOD is planning to bring “aerospace-grade precision” to the built environment with its new KREODx platform, which has launched in beta.

The software harnesses Parasolid from Siemens Digital Industries Software, a geometric modelling kernel that is typically found inside mechanical CAD tools such as Dassault Systèmes Solidworks, Siemens Solid Edge, and Siemens NX.

The software combines Design for Manufacture and Assembly (DfMA) principles with a building-centric approach to Product Lifecycle Management (PLM), a process commonly used in manufacturing to manage a product’s data, design, and development throughout its entire lifecycle.

KREODx is said to be powered by “Intelligent Automation” with parametric design and engineering workflows that “eliminate errors and accelerate delivery”.

The software offers full support for Bill of Materials (BoM) to deliver what the company describes as a single source of truth for costs, materials, and procurement, giving transparency from model to assembly.

According to the company, KREODx is also aligned with the circular economy, extending building lifespans, reducing waste, and enabling re-use and adaptability over time.

■ www.kreodx.com

Sisk, one of Ireland’s largest construction and engineering companies, is using DroneDeploy’s reality capture platform to enable faster inspections, higher data accuracy and real-time visibility across 20+ projects in Ireland and the UK.

Sisk’s geospatial engineering program covers capture with aerial drones and 360 cameras. DroneDeploy is used across flagship developments including Dublin’s Glass Bottle project and the Kex Gill road realignment scheme in Yorkshire, helping teams capture, analyse and share high-resolution site data for progress tracking, design verification and stakeholder communication.

■ www.dronedeploy.com

Cintoo has released Esri Experience Builder Widget for ArcGIS, enabling users to stream and interact with high-res, mesh-based laser scan data directly within the Esri ArcGIS environment. The Esri Experience Builder Widget brings immersive 360-degree panoramic views and virtual inspection capabilities to ArcGIS. According to Cintoo, this is particularly beneficial for indoor and brownfield projects where conventional GIS tools often struggle to handle dense or detailed spatial data.

The integration converts terrestrial and mobile LiDAR data into lightweight 3D meshes. According to Cintoo, this maintains full point cloud fidelity while enabling teams to ‘effortlessly work’ with what were previously large, complex datasets.

Users can analyse scan data, compare it to BIM and CAD models, and manage asset tags without leaving the ArcGIS environment. Use cases include managing a large-scale facility, or overseeing construction progress.

■ www.cintoo.com

Manchester University NHS Foundation Trust (MFT) has gone live with a digital twin of six hospitals as part of its strategy to create a smart estate. Replacing disparate systems and paper-based processes, the digital twin visualises floors, rooms and spaces with associated data and is already being used to understand space optimisation and support the management of RAAC and asbestos. Future plans include adding indoor navigation, patient contact tracing and real-time asset tracking.

Created using Esri UK’s GIS platform, which includes indoor mapping, spatial analysis, navigation and asset tracking, the digital twin went live in October.

■ www.esri.com

Fast-track digital innovation in your organisation with a free Remap Hackathon.

Our Hackathons give your team space to explore ideas, test new workflows, and prototype solutions, all in a single, energising day. We’ve run them with…

And yes… we run them for free!

We help you gather ideas and workflow challenges from your team, then shortlist the top opportunities to focus on during the Hackathon.

A guided innovation sprint with briefing, collaborative hacking and optional CPD, helping your team learn, experiment and prototype new digital solutions.

You gain a practice-wide ideas list plus refined concepts or prototypes that address your most valuable workflow challenges.

Innovation Discovery: Structured idea-gathering to identify opportunities that genuinely matter to your team.

Collaborative Problem-Solving: Guided development sessions where multidisciplinary staff work together to explore and prototype solutions.

Technology Insight Sessions: Optional CPDs during lunch covering topics such as computational design, automation, data workflows, or emerging tools.

Prototyped Solutions: End-of-day presentations to the organisation sharing the outputs, from sketches and workflows to functioning prototypes or solved pain points.

We are a London-based digital transformation and software development consultancy helping built-environment organisations progress their digital ambitions through practical, collaborative innovation events.

As industry giants continue to flesh out their AI strategies, Trimble’s Dimensions conference in Las Vegas was an opportunity for the company to relay its own plans for integrating the technology with its various products and services

Where is the construction software market headed and what role is AI likely to play in it? These are the two questions that Trimble executives were keen to address at the company’s annual Dimensions conference, held in Las Vegas in November 2025.

While many vendors seemingly feel obliged to label every product announcement as ‘AI-driven’, Trimble’s message suggests a more considered strategy, based on embedding agentic AI across its technology stack and within the workflows of customers and partners.

At the heart of this strategy is a new agentic AI platform, designed to provide a standardised foundation for building agents, complete with services for their deployment, security and management.

Trimble positions Trimble Agent Studio as both an internal development layer and an extensible system on which customers and partners can build. The company arguably has little choice but to take this route. After all, construction is a fragmented market, so any AI push that sees the technology embedded directly within individual products would simply carry that fragmentation forwards. In other words, AI needs to be a foundational technology, not a bolt-on.

A platform-first approach

Trimble’s response is its Agent Studio, which abstracts the plumbing to enable multi-agent workflows and cross-tool automation. Trimble Agent Studio is currently in pilot testing at a handful of select customers.

Executives at Trimble say this platform-first approach is necessary because the number of AI use cases emerging in construction is outpacing any vendor’s ability to build bespoke features. The theory is that an agentic layer lets Trimble embed intelligence into onboarding, object generation, data search, field reporting and asset maintenance more consistently, rather than replicating engineering work across SketchUp, Tekla, ProjectSight, Viewpoint and Trimble Connect.

In practice, this is also an attempt to reinforce the company’s wider ‘Connect and Scale’ strategy, an ongoing push to break down internal data silos and move towards a more coherent ecosystem.

The practical expression of this is seen in the AI features funnelling through Trimble Labs, the company’s early-access programme. SketchUp already has AI rendering available, with object generation and an assistant planned for late 2025. Tekla has rolled out user and developer assistants and an AI-based cloud drawing capability. ProjectSight offers help agents, auto-submittals and AI title block extraction in production, with daily report agents in testing. Similar assistants are on the way for Trimble Connect and Unity Maintain.

If the pace of this rollout seems brisk, a long list of phrases such as “expected in Q4 2025” and “available in early 2026” signals that this will be a multi-year transition rather than a ‘big bang’ delivery of a fully realised AI ecosystem.

Perhaps the most grounded Dimensions announcement was that relating to ProjectSight 360 Capture, a new workflow that brings 360-degree imagery directly into Trimble’s project management and collaboration environment.

The idea is simple enough: the user walks a site equipped with a 360-degree camera, an AI algorithm maps the path automatically, and the imagery is stitched back into drawings to form a living record of as-built conditions. The system aligns images, filters out faces for privacy, links images to tasks and workflows, and allows comparisons of progress over time.

This move feels overdue. The market has been moving towards integrated reality capture for years, with various point solutions offering site-walk automation, issue documentation and progress tracking. Trimble’s advantage lies not in the novelty but in its ability to tie the captures directly into broader workflows via ProjectSight and Trimble Connect, and by extension into ERP systems such as Spectrum and Vista. Issue identification and resolution, from BCF topics to change orders, becomes part of a single ecosystem rather than a patchwork of uploads and shared links. This is precisely the type of connective tissue the industry has historically struggled to build. Trimble’s vision makes this look more tangible.

The company also took the opportunity to announce Trimble Financials at the Dimensions event. This targets small contractors who lack the skills, time and staff to manage full ERP (enterprise resource planning) systems. Instead, this new subscription tool handles job costing, AP/AR visibility, cashflow dashboards and proposal generation, backed by an AI assistant capable of answering basic financial questions.

Taken together, the announcements relating to Trimble Agent Studio, ProjectSight 360 Capture and Trimble Financials all point to a company that is knitting together what has previously been a broad and somewhat disjointed portfolio into something more unified, using AI for both connective logic and marketing narrative.

Whether an agentic platform can genuinely standardise workflows across the full range of Trimble products remains to be seen – but Dimensions 2025 delivered a coherent signal of intent that Trimble’s AI will be grounded in delivering real workflows.

■ www.trimble.com

HP Z workstations, accelerated by NVIDIA RTX PRO™ GPUs, are purpose-built to accelerate complex AI and design workflows, from generative design to diffusion modeling.

NavLive has chosen the Nvidia Jetson Orin system-on-module as the core of its latest handheld construction scanner. The scanner integrates five sensors, including three cameras, LiDAR, and an IMU, all streaming data simultaneously to support SLAM and computer vision tasks

■ www.navlive.ai

Corona 14, the latest version of the photorealistic architectural rendering engine for 3ds Max and Cinema 4D, supports AI material generation, AI image enhancement, Gaussian Splats, procedural material generation, and new environmental effects

■ www.chaos.com

Howie, a Vienna-based startup developing AI-driven data-management and analytics tools for the AEC sector, has received a six-figure investment from Dar Ventures. The funding will support Howie’s work on a platform that centralises and analyses project information

■ www.howie.systems

Amsterdam-based startup Struck has raised €2 million in seed funding to further develop its AI platform that gives architects, developers, and municipalities clearer insight into regulations and, in the future, enables automated design-compliance checks

■ www.struck.eu

Construction monitoring software startup Track3D has raised $10m in Series A funding. The company’s platform uses advanced AI models to transform reality data into ‘actionable insights’, including progress tracking, work-in-place validation, and performance analytics tied directly to drawings and quantities

■ www.track3d.ai

For the latest news, features, interviews and opinions relating to Artificial Intelligence (AI) in AEC, check out our new AI hub

■ www.aecmag.com/ai

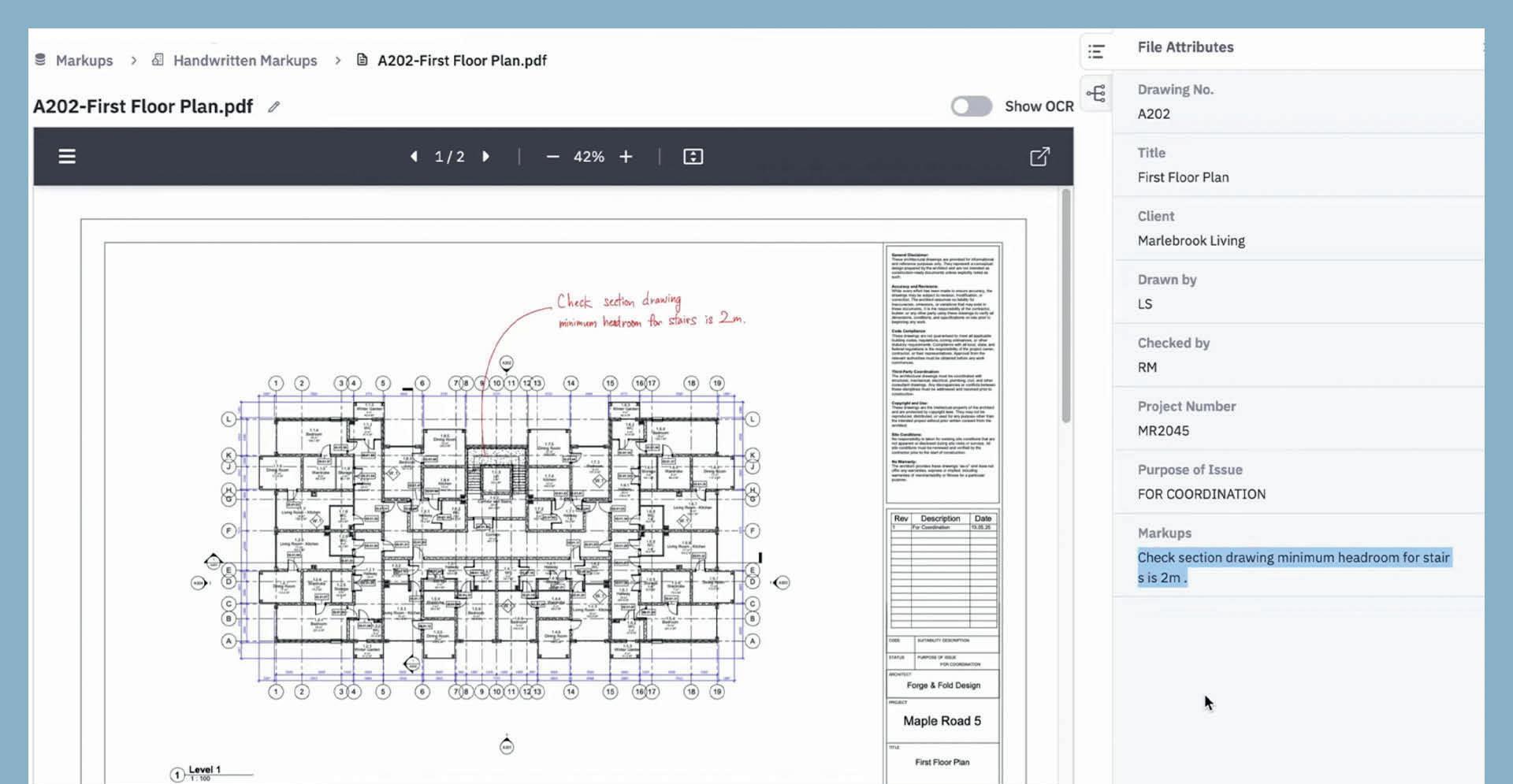

Tektome has unveiled KnowledgeBuilder, an AI-powered platform designed to automatically organise massive volumes of siloed project documents to help AEC teams make smarter decisions while avoiding repeated mistakes.

The software, already piloted by Takenaka Corporation, one of Japan’s largest construction firms, analyses and extracts key content from scattered files, including drawings, reports, photos, and handwritten notes on marked-up PDFs, and consolidates the data into a central, structured, and “instantly searchable” knowledge base.

The platform enables architects and engineers to ask questions in plain language and quickly see how similar issues were handled in the past, eliminating the need to “reinvent the wheel.”

According to the company, even non-IT staff can configure what to pull from drawings, proposals, photos or meeting minutes without coding or complex setup.

KnowledgeBuilder works across PDFs, CAD files (DWG & DXF), scanned images, handwritten markups, and more. BIM file support (RVT & IFC) is coming soon.

■ www.tektome.com

Viktor, a specialist in AI for engineering automation, has launched App Builder, a new tool designed to enable engineers without coding experience to automate tasks and build tools ‘in minutes’.

App Builder is designed to accelerate workflows and improve consistency and quality by eliminating the need to retype data or copy and paste between Excel and design tools, while ensuring transparency, governance, and compliance.

Automation tasks include design checks, selection workflows, and calculations through a ‘user-friendly’ interface that can be shared and reused across projects.

App Builder can also integrate with AEC tools such as Autodesk Construction Cloud (ACC), Plaxis, Rhino, and Revit.

■ www.viktor.ai

A.Engineer is a new agentic AI platform for structural and civil engineers, designed to automate calculations and reporting. The software helps accelerate workflows and improve consistency, and allows engineers to spend less time on manual tasks and more time on creative design, instructions, and quality assurance.

■ www.a.engineer

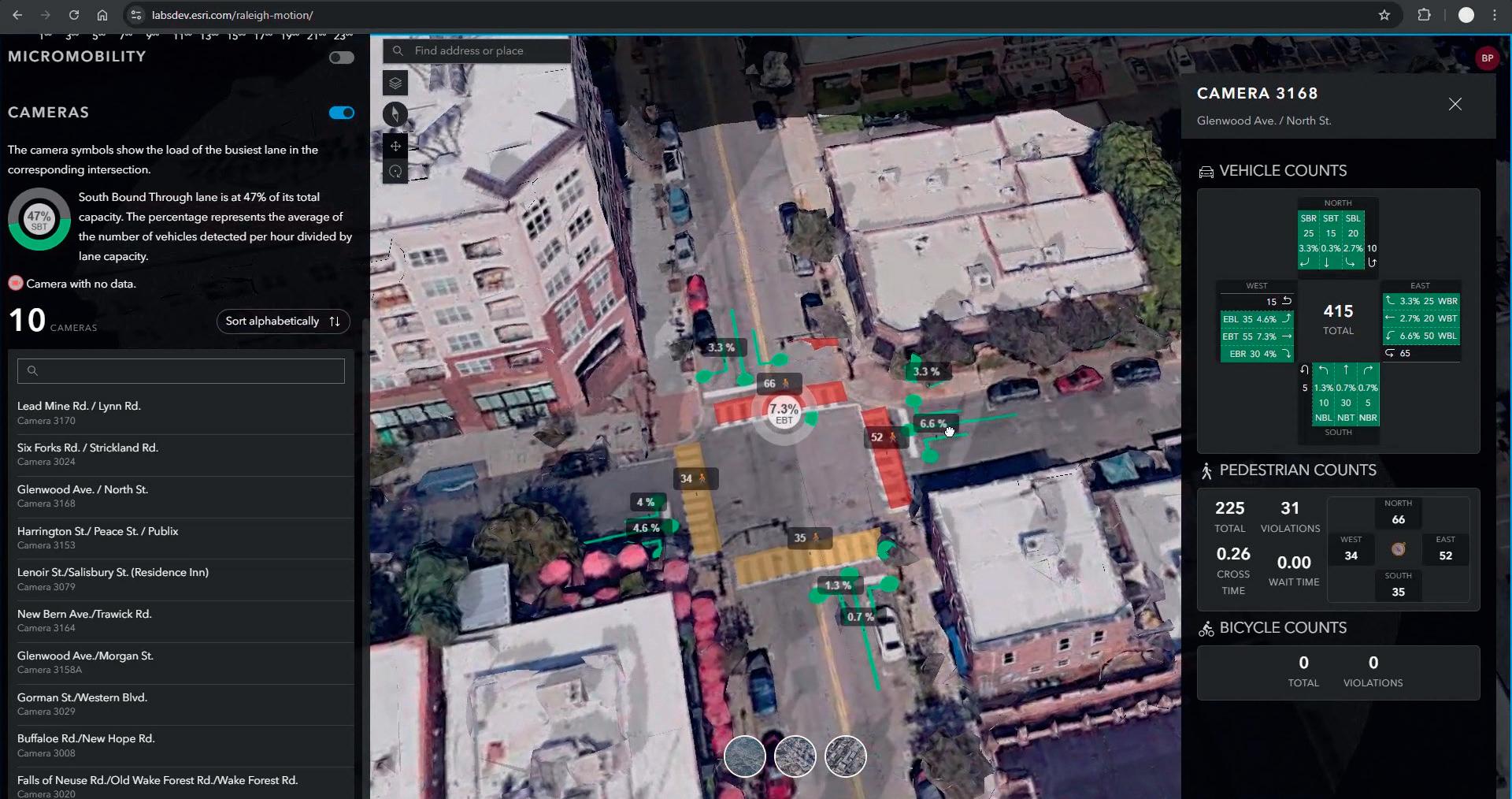

Technology from Esri, Nvidia, and Microsoft is being used in a pilot project for the City of Raleigh to better understand traffic flows and impacts. The “Raleigh In Motion” digital twin project uses AI to analyse massive volumes of data captured by real-time cameras.

The traffic monitoring software allows city officials to monitor current and historic traffic flows through key intersections in the city. Nvidia AI technology processes real-time video from hundreds of cameras around the city to identify vehicles, bikes, and pedestrians.

These feeds are then mapped in Esri ArcGIS where city officials can analyse which intersections are congested based on time of day and day of week. Indicators on the map turn from green to yellow to red as congestion builds up in intersections. The dashboard can even flag current events like stalled vehicles in an intersection that are impacting traffic.

The digital twin can help Raleigh identify dangerous intersections, reduce congestion, provide safer roadways, better respond to incidents and intelligently plan how to remediate problematic junctions.

■ www.esri.com ■ www.nvidia.com

Bluebeam Max is a new subscription plan, due to launch in 2026, that is designed to bring AI features to the PDF-based collaboration and markup tool Revu.

A new Claude integration will bring natural-language AI prompts directly into Revu, allowing users to automate tasks and turn markup data into ‘actionable insights’. AI-Review and AI-Match are new intelligent tools that will help uncover design issues early, detect scope gaps, and compare drawings.

■ www.bluebeam.com

CMiC has launched Nexus, a construction ERP (Enterprise Resource Planning) platform powered by more than 25 AI agents.

Nexus is said to redefine how construction teams interact with data, automate workflows, and make decisions, enabling them to focus on high-value strategic work instead of repetitive processes.

“Our AI-powered features offer users advanced data visualisation capabilities, business intelligence tools, and the ability to leverage natural language to optimise key business functions,” said Gord Rawlins, president & CEO, CMiC.

■ www.cmicglobal.uk

Construction technology company mbue has launched a new platform designed to automatically generate “fully compliant” product data submittals, helping subcontractors reduce weeks of manual work to just minutes.

mbue Submittals uses a proprietary AI model and computer vision to analyse drawings and specifications — including electrical schedules — and automatically extracts the required product information. The platform can generate submittals for light fixtures, switchgear, panels, devices, and other materials, streamlining a traditionally time-consuming and error-prone process.

“Submittals have traditionally been a painful, labour-intensive process that slows projects down,” said Garrett White, VP, Texas at Weifield Group. “With mbue, we’re seeing submittals completed in a fraction of the time, with greater accuracy and confidence. This is a huge step forward for the industry and directly helps us increase the amount of volume each PM can deliver, we can do more with the same team which directly contributes to our company’s revenue growth.”

■ www.mbue.ai/submittals

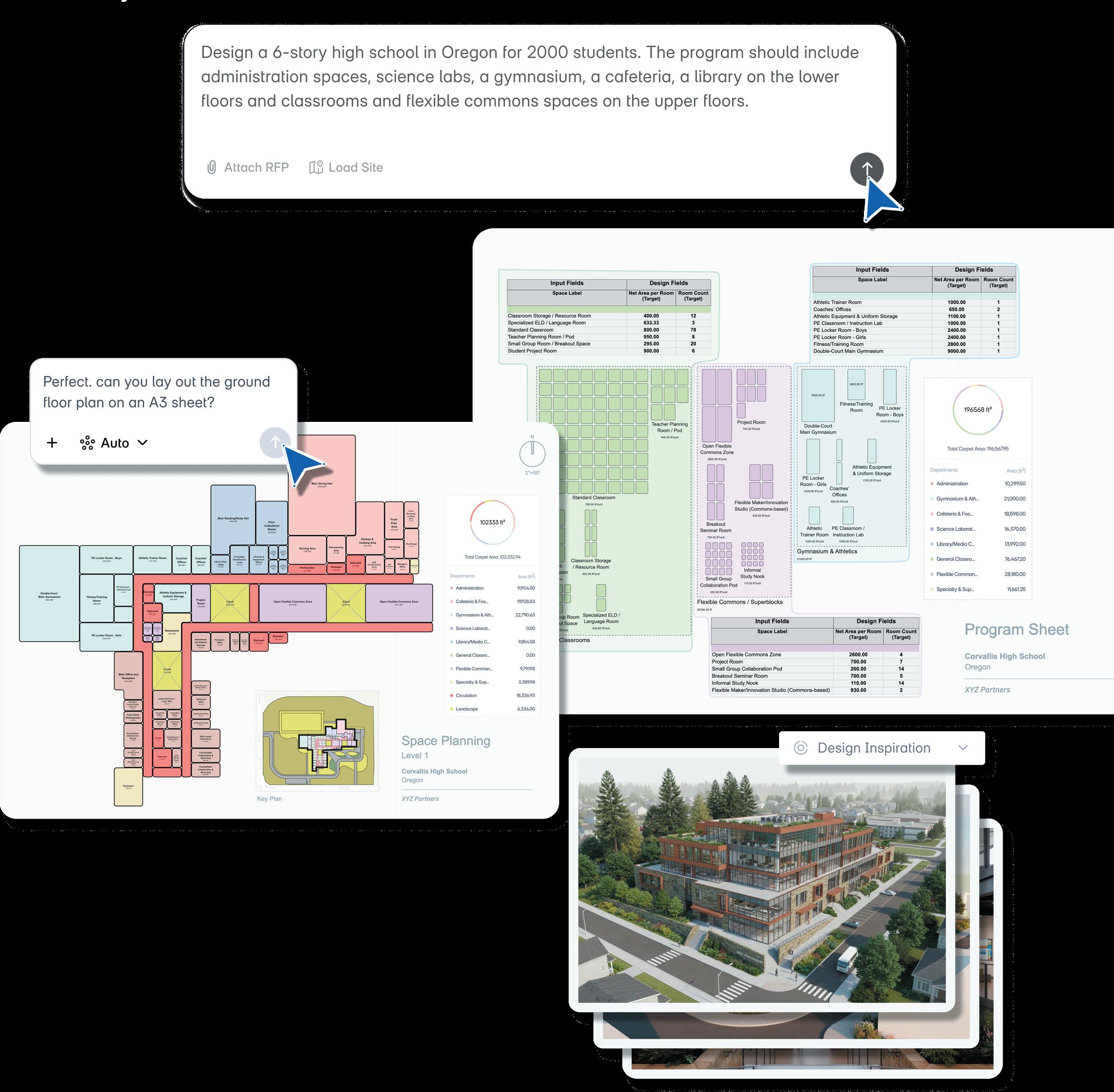

Maket is gearing up for the launch of Maket 2.0, its next-generation AI platform for architects.

Building on its original floorplan generator, new AI agents will focus on technical compliance, performing a range of tasks such as zoning verification, HVAC planning and material calculations.

■ www.maket.ai

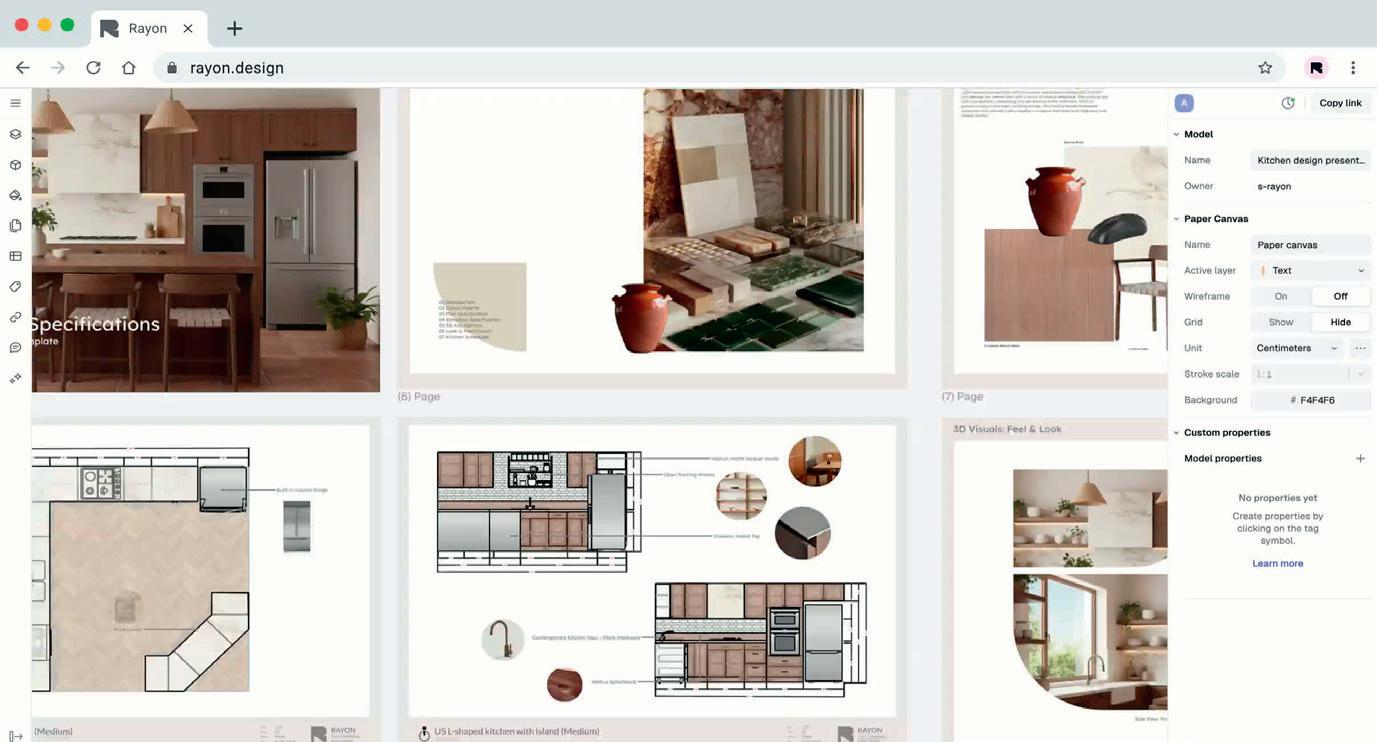

Among the many next-gen CAD tools, Rayon stands out as the only one not targeting Revit. Instead, it’s squaring off against AutoCAD in the world of drawings. Recently, the company has been showing off some forthcoming AI tools that look pretty promising, writes Martyn Day

While other companies promised ‘Figma for BIM’, Rayon is delivering ‘Figma for 2D CAD’. This Paris-based start-up was founded in late 2021 by Bastien Dolla and Stanislas Chaillou and sits in the category of ‘lightweight but capable’ design tools. Rayon’s goal, meanwhile, is to modernise bread-and-butter 2D workflows.

To date, the company has raised almost €6 million in funding. Its €4 million seed round back in 2023 may have been relatively modest by AEC start-up standards, but its product has seen serious development velocity over the intervening period. It is argued by some that for many design professionals, day-to-day architectural and interiors work simply doesn’t require full-fat BIM or the overhead of legacy desktop CAD. This is where Rayon is said to fit.

Rayon’s product is a browser-native drafting and space-planning environment, designed for quick turnarounds and easy sharing, rather than for offering users encyclopaedic feature depth. That said, users can import DWG, DXF or PDF files, sketch out walls and zones, place objects, annotate and publish drawings.

What distinguishes Rayon is its exceptional user interface, its huge library of content and the collaborative layer wrapped around it. Multiple users can work on the same model, libraries and styles are shared across teams, and stakeholders can view or comment without wrestling with installs, versions or licensing. It’s designed as a modern collaborative productivity tool, more agile than the self-contained, file-centric, desktop-based CAD system.

Rayon deliberately positions itself a few weight classes below rivals such as Autodesk’s AutoCAD or AutoCAD LT, but it is steadily sharpening its AEC focus and feature set. In time, it could become a contender.

AutoCAD remains the industry’s Swiss Army knife, customisable, scriptable and tightly wired into enterprise workflows. But the next generation might be tempted by fresher user interfaces. Rayon focuses on the sizeable

At this stage in the hype cycle, there seems to be a new AI app aimed at AEC users every week. AEC Magazine’s new directory will help you find the AI tools aimed at your particular pain point or a specific function within the AEC concept-to-build process

AI is penetrating the AEC sector at a pace that is both energising and slightly disorientating. Despite the marketing bluster, AI in AEC is still in its formative phase, defined less by a single design market and more by a set of overlapping experiments, architectural ideas and emerging business models.

But the level of AI ambition now being directed at the built environment is unmistakably rising. From early-stage design exploration to construction sequencing and claims analysis, AI and the automation it brings is beginning to reshape expectations of what digital tools should deliver. The real problem is

cohort of architects, interior designers and space-planners whose work is largely 2D, who need clean drawings rather than a full modelling environment, and who value fast onboarding and frictionless sharing over specialist depth. Where Rayon is stronger than the incumbents is in its immediacy. The barrier to entry is low, collaboration is native rather than bolted on, and it handles the everyday tasks that small and mid-size studios repeatedly perform. Whether that’s enough to shift entrenched AutoCAD habits is a separate question, but Rayon at least offers an alternative shaped around contemporary workflows, rather than decades-old assumptions about how drawings should be produced and exchanged.

The professional and educational background of Rayon co-founder Stanislas Chaillou leaves little doubt that AI will be an important part of the company’s future technology offering. The former architect and ex-employee of Autodesk previously

finding the right AI tool for the job.

To help bring some clarity, AEC Magazine has launched the AI Spotlight Directory (aidirectory.aecmag.com), a curated and continuously updated view of the expanding AI ecosystem across architecture, engineering, construction and operations.

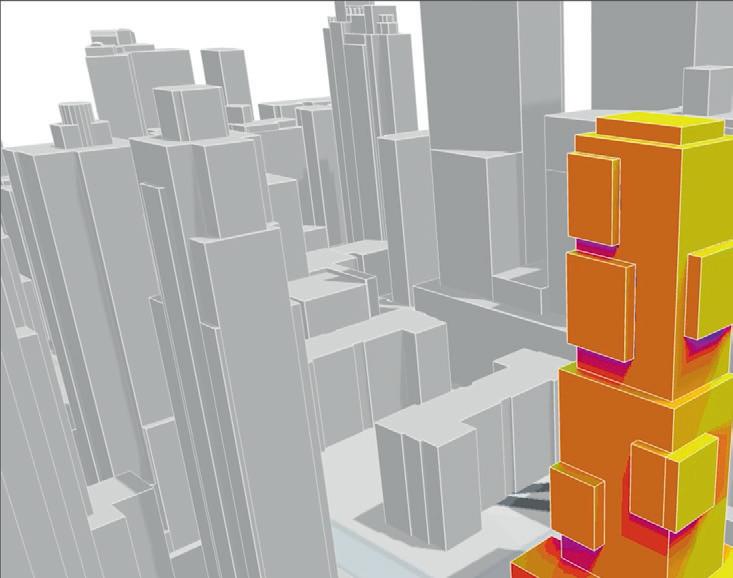

The directory already tracks more than 100 companies, products and features, spanning start-ups experimenting with novel generative approaches, mid-stage firms building targeted automation tools, and major vendors integrating AI into their BIM platforms and cloud environments. What stands out most is the breadth of approaches. Some applications are genuinely generative, offering

geometric options, massing studies or space-planning schemes. Others deploy machine learning for tasks such as classification, prediction, optimisation or schedule risk analysis. Many established tools now include AI-powered features that accelerate documentation or automate repetitive modelling tasks. And as with any early technology wave, there are also prototypes that feel more exploratory, solutions searching for real-world traction or awaiting more mature AI foundations.

This diversity raises an important point. Our task is not to prematurely anoint ‘winners’, but to understand the spectrum of activity, the differing levels of maturity, and the specific

attended Harvard University’s Graduate School of Design and the Swiss Federal Institute of Technology (EPFL), where he focused on the intersection of geometry, generative algorithms and design workflows. His book, Artificial Intelligence and Architecture: From Research to Practice (www.tinyurl.com/AI-arch-book), provides a detailed timeline of AI developments from the technology’s early history to current architectural applications. Along the way, it exposes both the vast potential and the limitations of AI/ML in design.

Rayon’s other co-founder Bastien Dolla, meanwhile, takes an active role in defining how ML features get integrated into design workflows. The company has been signalling on LinkedIn and Instagram that it has some interesting AI features coming down the line and they do indeed look very cool.

Rayon’s AI boost will give it the capability to act as a workflow-embedded companion to architects and space planners, adding rendering and model-view automation together with asset generation. It will also be able to handle natural language input. For example, a user might type in the following description: ‘Open plan office, 10m by 15m, glass partition, 1.2m high.’ The system will then produce a block or arrangement automatically. If robust, this kind of capability could lower the friction of asset reuse and speed up the otherwise tedious process of library searching, sizing and insertion. The software also has a cool AI capability of generating axonometric views automatically from 2D layouts.

Another point of focus is collaborative, multi-user editing, which will be baked into the AI layer. Rayon’s existing multi-

player canvas and library sharing will get a smarter overlay, with the software’s AI panel providing suggestions and auto-snapping design elements. It could perform version-history rewinds in response to semantic changes; for example, ‘You placed a room, convert to zone and annotate.’ This coherence across drafting, annotation and sharing looks amazing. Rayon has also shown off visual-toblock/image-to-object conversion. This involves using an image or 360-degree capture to auto-extract walls, doors or furniture and turn them into native elements. For smaller practices working on refurbishments or adapting existing plans, this kind of feature represents a practical shortcut. It doesn’t compete with full BIM reality-capture workflows, but it rides comfortably in the gap between desktop CAD and immersive scan-to-model technologies.

Rayon’s planned AI features would seem to have much to offer: the reduction of mundane labour, a leaner drawing workflow, some extra magic sprinkles. The strategic bet that the company’s management is making is that if you can make 80% of drawing-related work faster and easier for small and medium-sized practices, there is a potentially huge niche to be conquered and one that the larger vendors tend to ignore.

The real issue here will be how well Rayon executes on its AI plans. How flexible will the text-to-block generation be? How well will it handle edge cases? Will the AI panel remain an optional helper, or will it become a locked-in workflow? All this remains to be seen, but if Rayon gets it right, this start-up may well shift the way that mid-market AEC firms think about CAD.

■ www.rayon.design

workflow niches that each tool targets. As we saw in BIM’s early development, the industry needs clarity if it is to separate genuine capability from hype.

The entries in the directory reveal a market that is anything but uniform. Generative design systems sit alongside automated documentation platforms. AI-enabled construction-site analysis tools share space with model interrogation assistants. New BIM 2.0 vendors are embedding AI deeply into their data models and user experiences, while incumbent BIM suppliers are layering machine learning and natural-language interfaces onto existing product lines. The variety reflects an industry trying to determine where AI delivers genuine advantage, balancing speed, accuracy, quality and consistency, and where it risks becoming an overapplied marketing label.

Each directory listing provides concise but structured insight: what the organisation does, where its AI capability sits within the product, which stage of the workflow it addresses, and how mature the offering currently appears.

The intention is to go beyond branding and allow readers to compare propositions, follow market shifts and track where innovation is clustering. It also highlights broader patterns, such as the rapid emergence of AI in documentation and planning, the growing interest in site-based intelligence, and the significant investment now flowing into data-centric ‘BIM 2.0’ platforms. Above all, we hope you use our AI Spotlight Directory as a living resource. As the market evolves, as firms pivot, and as new ideas emerge from both start-ups and established vendors, we will continue to expand and refine the coverage.

AI may well represent the next major platform shift for the AEC industry and as such deserves a clear, authoritative map for buyers. This directory represents AEC Magazine’s commitment to building that buyer’s guide for the entire community.

■ www.aidirectory.aecmag.com

AEC firms are starting to recognise the value they could extract from their data, if only it weren’t scattered across countless systems, formats and project contributors. The answer for many is a shift away from proprietary files in favour of cloud-based databases that promise genuine ownership of data and better control over it

by Martyn Day

To many in the AEC industry, data lakes and lake houses sound very much like approaches about which only a CIO or software engineer would care. But the truth is far simpler: this is about finally getting control of your own project information, and stopping the madness of exporting, duplicating and re-formatting the same models over and over again.

Think of a BIM file as a shipping container. Everything you need is technically inside it, but you can only open it from one end and moving it around is slow and clumsy. If you need one box from the back, you still have to haul the entire container to take out its contents.

A lake house is the opposite. It behaves like a warehouse in which every object inside a project – every wall, room, door, parameter, schedule item, photo, scan or RFI – sits neatly on a shelf. It is indexed, searchable and instantly accessible. Nothing has to be unwrapped, exported or repackaged. Every tool, whether internal or external, sees the same live information at the same time. This immediately solves three of the most familiar pain points in BIM delivery.

The first is speed: in a file-based world, clashes are found tomorrow, or Friday, or at the coordination meeting. In a lake house, clashes appear while someone is still modelling the duct.

Checking rules, validating properties, or running energy assessments can happen continuously, not in slow cycles defined by exports.

Second comes ownership: right now, firms only ‘own’ their data in theory. In practice, it sits inside proprietary formats and cloud silos, to which access is metered, limited or simply not available. A lake house flips that. The data sits in open formats you can control yourself. Vendors don’t get to decide what you can do with your own project information.

The third issue is AI deployment: every firm wants to apply AI to its project history, but almost no firm can, because the data is so scattered. A lake house finally puts all data in one well-governed venue, so that AI tools can use it. The future will be AI agents working on your project information doing cost prediction, design optimisation, risk profiling, automated documentation – all the things that many professionals in the AEC industry would love to see. This shift isn’t about technology for its own sake. It’s about reducing rework, stopping duplication, ending lock-in, improving quality and giving firms back control of the knowledge they already produce. For an industry built on coordination, clarity and shared understanding, it sounds like a transformation we need.

As companies in the AEC industry digitise, it’s increasingly recognised that their most valuable asset is not to be found in drawings, models, or even the tools used to produce them. Their most valuable asset is the data buried inside every project – data that captures geometry, relationships, parameters, costs, clashes, RFIs, site records and more.

This data represents the accumulation of knowledge that a firm may have spent decades developing and supporting. Yet much of it is scattered across different formats and systems, often locked behind proprietary file structures and metered cloud APIs.

This familiar situation is accompanied by an uncomfortable truth: employees at these firms can open their files, they can view their models, but the firm does not meaningfully own or control the data within them. If employees want to analyse it, run automation on it, or train an AI model using it, they must purchase additional subscriptions from their software provider, or even additional applications. As the industry chases meaningful digital transformation, this dependency has increasingly become a strategic liability.

But there is an alternative. AEC firms can benefit by shifting from files to their own in-house, cloud-based databases, gaining long-overdue control of their data and possibly freeing themselves from proprietary bottlenecks.

The answer may lie in data lakes and data lake houses, which offer an open data architecture where project data can live as governed, queryable, interoperable information, rather than isolated blobs inside Revit RVTs, AutoCAD DWGs or proprietary cloud databases. This is the landscape into which the industry is now moving.

For thirty years, the AEC industry has revolved around files: RVTs, DWGs, IFCs, 3DMs, PDFs, COBie spreadsheets and thousands of others, all acting as containers for design intelligence. In the desktop era, this was entirely logical. Everything had to be saved, packaged, versioned and emailed. Files were the only workable abstraction.

But the reality of modern project delivery is vastly different from that of the 1990s. Today’s projects involve thousands of files, generated by hundreds of tools, frequently being used by people working across multiple firms. The result is a digital environment that is fragmented, brittle and slow, one in which coordination headaches, data duplication and time delays are not bugs in the system but features of the file-based architecture itself. Meanwhile, cloud platforms, APIs and, increasingly, artificial intelligence (AI) are becoming the defining technologies of modern workflows – and these technologies do not want files. They want structured, granular, persistent data that is streamable, queryable, validated and can be used by multiple systems simultaneously.

A monolithic RVT file cannot support real-time analysis, multimodal AI or firm-wide automation. It was never designed to perform that way.

In fact, firms are realising that their true competitive asset isn’t a file at all, but the data inside it, with its representations of objects, parameters, schemas and historical decisions. A file is simply a container that slows everything down, and because most file formats are proprietary, the data ends up trapped inside someone else’s business model.

Few voices have been more consistent, or more technically grounded, on this point than Greg Schleusner, principal and director of design technology at HOK, who also represents the Innovation Design Consortium (IDC). For years, Schleusner has argued that the AEC industry must stop treating BIM as a file-based activity and start treating it as data infrastructure.

At NXT BLD, Schleusner laid out the problem plainly (tinyurl.com/schleusner-NXT). Revit knows everything about an object the moment it is drawn, he told attendees, but that intelligence is locked inside a file until someone performs an export. That could be hours, days or weeks too late. As he put it: “It’s never been an issue getting the metadata out from Revit. It’s always just been the geometry that’s been the slow part.”

Schleusner began his presentation by analysing how the media & entertainment industry has solved similar problems. Pixar’s USD format became the standard for exchanging complex geometric and scene information across tools. Its depth far exceeds IFC, but it has no concept of BIM data. To tackle this issue, Nvidia and Autodesk’s Alliance for OpenUSD aims to extend USD into AEC, but this work is still in progress and is still far from replacing BIM data requirements.

The more radical step in Schleusner’s research is his call to stop thinking about BIM models as files at all. Instead of exporting federated models, or waiting for ‘Friday BIM drops’, he proposes streaming every BIM object – every wall, window, beam, annotation – into an open database the moment it is authored. Each element would have its own identity, lifecycle and change history and would be immediately available for clash detection, rule validation, energy checks or analytics while the model is still being authored. This vision is now driving a broader conversation about how AEC firms should manage their project data, and why data lakes and lake houses are becoming unavoidable.
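To make the idea of per-element identity and change history concrete, here is a minimal sketch in Python. It keeps an append-only log in memory rather than in a real database. The names `ElementStore`, `publish`, `history` and `current`, along with the sample properties, are hypothetical illustrations and are not taken from Schleusner’s work or from any vendor API.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass(frozen=True)
class ElementVersion:
    element_id: str             # stable identity of the wall, door, beam, etc.
    version: int                # increments each time that element changes
    properties: Dict[str, Any]


@dataclass
class ElementStore:
    """Toy in-memory stand-in for an open project database."""
    _log: List[ElementVersion] = field(default_factory=list)

    def publish(self, element_id: str, properties: Dict[str, Any]) -> ElementVersion:
        """Append a new version of an element as soon as it is authored or edited."""
        version = len(self.history(element_id)) + 1
        record = ElementVersion(element_id, version, dict(properties))
        self._log.append(record)
        return record

    def history(self, element_id: str) -> List[ElementVersion]:
        """Full change history of one element, oldest first."""
        return [r for r in self._log if r.element_id == element_id]

    def current(self) -> Dict[str, ElementVersion]:
        """Latest version of every element, as a downstream checker would see it."""
        latest: Dict[str, ElementVersion] = {}
        for record in self._log:
            latest[record.element_id] = record
        return latest


store = ElementStore()
store.publish("wall-001", {"type": "Wall", "fire_rating_min": 60})
store.publish("door-007", {"type": "Door", "host": "wall-001"})
store.publish("wall-001", {"type": "Wall", "fire_rating_min": 90})
```

Because nothing is overwritten, a rule checker can read `store.current()` while authoring continues, and an auditor can replay `store.history("wall-001")` to see how the wall changed.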

To understand why the ‘data lake house’ has become such an important issue in AEC, it’s worth stepping back and looking at how other industries have navigated the data problem. The first major attempt to manage organisational data at scale arrived in the late 1980s with the data warehouse. Warehouses were designed for one job: to pull clean, structured information out of operational databases through a strict ETL (extract, transform and load) pipeline and then serve it up as predefined reports.

The data warehouse did this well, but only within narrow boundaries. Data warehouses were expensive, rigid and completely unprepared for the tidal wave of semistructured and unstructured content that would later define the digital world, including images, logs, documents, telemetry, and later, multimodal information.

By the early 2000s, the so-called Big Data era hit. Organisations in every sector began generating vast amounts of high-velocity, highly varied data that had no obvious schema and traditional warehouses were overwhelmed. Their rigid structure was a poor fit for unpredictable information.

The tech industry response was the data lake, an architectural about-face: instead of structuring data before storage, firms dumped all data – structured, semistructured, unstructured – into a cheap cloud object store such as Amazon’s S3, and only transformed it later as needed. This ELT approach (extract, load, transform) offered enormous flexibility and scale, giving rise to a marketing narrative that data lakes were the future.
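The difference between ETL and ELT comes down to when the transform step runs. The sketch below is a toy comparison in plain Python, with invented sample records and a hypothetical `transform` function; it does not represent any particular warehouse or lake product.

```python
import json
from typing import Optional

raw_records = [
    '{"id": "rfi-12", "status": "open", "days_open": "14"}',
    '{"id": "rfi-13", "status": "closed"}',        # structured, but missing a field
    'site photo 0042.jpg, level 3, gridline C',   # unstructured, not JSON at all
]


def transform(record: str) -> Optional[dict]:
    """Parse a record into the shape a report expects, or return None if it does not fit."""
    try:
        row = json.loads(record)
        return {"id": row["id"], "status": row["status"], "days_open": int(row["days_open"])}
    except (ValueError, KeyError):
        return None


# ETL: transform before storage, so anything that does not fit is never kept.
warehouse = [row for row in map(transform, raw_records) if row is not None]

# ELT: store everything as-is, and transform only when a question is asked.
lake = list(raw_records)
open_rfis = [row for row in map(transform, lake) if row and row["status"] == "open"]
```

The warehouse keeps only the one record that fits the schema, while the lake keeps all three, including the photo reference that a later, different transform might be able to use.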

But early data lakes soon developed severe problems. Without governance or schema control, they became data swamps – vast, murky repositories, in which inconsistent, duplicated and unvalidated data accumulated in an uncontrolled manner. Querying could be painfully slow. Trust in data deteriorated. And critically, data lakes lacked transactional integrity: a system could be reading data as another was rewriting it, resulting in broken or inconsistent results.

In short, data lakes solved storage issues but broke reliability, governance and performance – three qualities that AEC firms need more than most.

The data lake house emerged as the solution to this tension. It combines the low-cost, infinitely scalable storage of a data lake with the structure, reliability and transactional control of a warehouse. It does this by adding a relational-style metadata and indexing layer directly over open-format files stored in cloud object storage.

This hybrid design is the critical step that can turn a loose collection of files into something that behaves like a ‘proper’ database. With this metadata layer in place, a lake house can guarantee ACID (atomicity, consistency, isolation, durability) transactions, meaning that multiple systems can read and write simultaneously. It enforces schema, so project data follows well-defined structures. It maintains full audit trails, so lineage and accountability are preserved. And it allows BI tools, analysis engines and AI models to run directly on the live dataset, rather than duplicating extracts and creating conflicting versions.
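Three of the guarantees described above (schema enforcement, all-or-nothing commits and an audit trail) can be illustrated with a small toy table. This is a simplified in-memory sketch, not a real lake house engine and not full ACID. The class `GovernedTable`, the `SCHEMA` columns and the sample rows are all hypothetical.

```python
import copy
from datetime import datetime, timezone

# Hypothetical firm-wide schema: column name -> required Python type.
SCHEMA = {"element_id": str, "category": str, "cost_code": str, "area_m2": float}


class SchemaError(ValueError):
    pass


class GovernedTable:
    """Toy table that validates rows, commits batches atomically and logs each write."""

    def __init__(self):
        self.rows = []
        self.audit_log = []

    def commit(self, author, batch):
        # Validate the whole batch before touching the table (all-or-nothing).
        for row in batch:
            if set(row) != set(SCHEMA):
                raise SchemaError(f"unexpected columns: {sorted(set(row) ^ set(SCHEMA))}")
            for column, expected in SCHEMA.items():
                if not isinstance(row[column], expected):
                    raise SchemaError(f"{column} must be {expected.__name__}")
        # Swap in a new list in one step, then record who wrote what and when.
        self.rows = self.rows + copy.deepcopy(batch)
        self.audit_log.append((datetime.now(timezone.utc), author, len(batch)))


table = GovernedTable()
table.commit("architect", [
    {"element_id": "W1", "category": "Wall", "cost_code": "21.10", "area_m2": 12.5},
])

rejected = None
try:
    table.commit("engineer", [
        {"element_id": "B7", "category": "Beam", "cost_code": "28.10", "area_m2": 3.2},
        {"element_id": "B8", "category": "Beam", "cost_code": "28.10", "area_m2": "n/a"},
    ])
except SchemaError as err:
    rejected = str(err)
```

The second batch contains one valid and one invalid row, and neither is written: the table still holds only the architect’s wall, and the audit log still has a single entry.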

For AEC, this is not just convenient. It is foundational. AEC project data is inherently multimodal and includes solid models, meshes, drawings, schedules, reports, energy data, specifications, RFIs, documents, photos and point clouds, as well as the metadata that ties them together. A single Revit file can contain thousands of elements with their own parameters, relationships and behaviours. Trying to run AI, automation or cross-disciplinary analysis on this information using file-based workflows is like trying to do real-time navigation with a paper map that’s updated once a week.

In short, the lake house shifts the paradigm. A project dataset sits in one place, in open formats, behaving like a continually updated, queryable database. The file no longer defines the project; the data does.

At the base of most lake houses sits Apache Parquet, a columnar storage format that has become the industry standard. Parquet stores data by column rather than by row. That may sound minor, but it is transformative for analytical workloads. Most queries only need a few columns, so engines can read exactly what they need and skip everything else, reducing I/O dramatically. This is crucial in AEC, where models can contain thousands of parameters, but only a handful are needed for any given check.

Parquet’s openness is equally important. It avoids the proprietary format trap that has hamstrung AEC for decades. Once your project data is in Parquet, any tool in the open ecosystem, from Python and Rust libraries to cloud engines like Databricks, Snowflake or open-source query engines, can read it natively. You no longer need to negotiate with vendors or wait for APIs to mature. The data is yours.

If Parquet provides the necessary storage, then Apache Iceberg provides the intelligence. Iceberg is an open table format originally developed at Netflix to bring reliability, versioning and high performance to massive data lakes. It adds a metadata layer that tracks the state of a table using snapshots. Each snapshot refers to a manifest list, which itself points to a set of manifest files acting as indexes for the underlying Parquet data.

A manifest is effectively a catalogue: it lists which Parquet files belong to a table, how they are partitioned, what columns they contain and how the dataset has changed over time. Rather than scanning thousands of files to answer a query, Iceberg reads the manifests and instantly understands the structure.

This is an elegant solution with significant consequences. It delivers consistent views, because every query targets a specific snapshot, ensuring results remain complete and coherent even as new data is being written. It provides true transactional safety, where any change is either fully committed as a new snapshot or not committed at all. And it supports genuine schema evolution, allowing columns to be added, removed or renamed, without having to rewrite terabytes of Parquet files, an essential capability for long-lived AEC datasets. Together, Parquet and Iceberg deliver the reliable, unified project database that the AEC industry has never had before.

‘‘Moving from siloed, proprietary files to an open, unified, AI-ready lake house is the clearest path to future-proofing an AEC firm’’
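The benefit of storing data by column, as Parquet does, can be shown with plain Python lists. This is a toy model of the layout idea only, not the Parquet file format or any Parquet library. The element parameters and the `under_rated` check are invented for illustration.

```python
# Row-oriented: each element carries all of its parameters together.
rows = [
    {"element_id": "W1", "category": "Wall", "fire_rating_min": 60, "u_value": 0.28, "cost_code": "21.10"},
    {"element_id": "W2", "category": "Wall", "fire_rating_min": 30, "u_value": 0.31, "cost_code": "21.10"},
    {"element_id": "D1", "category": "Door", "fire_rating_min": 30, "u_value": 1.80, "cost_code": "32.20"},
]

# Column-oriented: one list per parameter, so a check can pick only what it needs.
columns = {name: [row[name] for row in rows] for name in rows[0]}


def under_rated(columns, minimum):
    """Fire-rating check that reads two columns and ignores all the others."""
    touched = ("element_id", "fire_rating_min")
    ids, ratings = (columns[name] for name in touched)
    failing = [element_id for element_id, rating in zip(ids, ratings) if rating < minimum]
    return failing, touched


failing, touched = under_rated(columns, minimum=60)
```

Here the check reads two of the five columns. In a real model with thousands of parameters per element, the same access pattern is what lets a query engine skip most of the stored data.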
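The snapshot and manifest idea can be sketched in a few lines. The model below is deliberately simplified: it collapses Iceberg’s manifest list and manifest files into a single tuple of per-file entries, and it is not the Iceberg API. The class names and the `.parquet` file names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Manifest:
    data_file: str     # name of one data file (hypothetical examples below)
    columns: tuple     # columns stored in that file
    row_count: int


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    manifests: tuple   # every data file that makes up the table at this point


class Table:
    def __init__(self):
        self.snapshots = [Snapshot(0, ())]   # snapshot 0 is the empty table

    def append(self, manifest):
        """Commit by publishing a new snapshot; earlier snapshots are never modified."""
        latest = self.snapshots[-1]
        new = Snapshot(latest.snapshot_id + 1, latest.manifests + (manifest,))
        self.snapshots.append(new)
        return new

    def files_for(self, column, snapshot_id=None):
        """Plan a query from metadata alone: which files hold the requested column?"""
        snap = self.snapshots[-1] if snapshot_id is None else self.snapshots[snapshot_id]
        return [m.data_file for m in snap.manifests if column in m.columns]


table = Table()
table.append(Manifest("walls-0001.parquet", ("element_id", "fire_rating_min"), 1200))
table.append(Manifest("doors-0001.parquet", ("element_id", "fire_rating_min", "width_mm"), 340))
```

A query pinned to snapshot 1 sees only the walls file, even after the doors file has been committed. That pinning is what gives readers a consistent view while writers carry on.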

Some years ago, Netflix faced almost exactly the same problem the AEC industry struggles with today: mountains of fragmented, multimodal data, scattered across incompatible systems. Its crisis wasn’t content creation. It was scale, complexity and chaos – the same forces reshaping digital AEC.

Netflix’s media estate spanned petabytes of wildly different content: video, audio, images, subtitles, descriptive text, logs, user-generated tags and, increasingly, AI-generated embeddings. Each data type lived in its own silo. Data scientists spent more time hunting and cleaning data than training models. Collaboration slowed. Infrastructure costs soared. No one had a unified view of the organisation’s own assets.

To solve this, Netflix built a ‘media data lake’, using an open multimodal lake house architecture centred on the Lance format. Lance allowed Netflix to store every data type in one system, with relational-style metadata, versioning and schema evolution layered directly over cloud object storage. Critically, Lance enabled zero-copy data evolution – teams could add new AI features, such as embeddings or captions, without rewriting the underlying petabytes of source video.

The parallel with AEC is obvious. AEC’s data is just as varied and, just as with pre-lake house Netflix, this information is locked inside single-purpose tools. Every application exports its own version of the truth. Every team maintains its own copy. Every AI initiative begins with cleaning up someone else’s chaos.

The Innovation Design Consortium’s pursuit of “single export, multi-use” is effectively the AEC version of Netflix’s journey. By extracting project data into open Parquet tables and managing them via Iceberg, firms gain a single source of truth that supports transactional integrity, schema governance and reliable engineering workflows.

Suddenly, AI pipelines, energy-report parsers, internal search tools and firm-wide assistants become possible – because the data is unified, structured and finally under the firm’s control.

■ www.tinyurl.com/lance-netflix

Once AEC project data sits inside an open lake house – structured, governed and queryable – the benefits begin to accumulate quickly. The most immediate shift is that data finally becomes decoupled from the authoring tools that produced it. Instead of each BIM package jealously guarding its own silo of geometry and metadata, the project information lives in a neutral space where any tool can read it.

This single change unlocks capabilities that, until now, have been aspirational rather than practical. Firms can build their own QA systems that directly interrogate geometric and metadata standards across every project model, regardless of whether the source was Revit, Tekla Structures, Archicad or anything else. There is no export step, no format translation, no broken parameters. The data is just there, in a clean schema, ready to be queried.

With proper schema enforcement, elements, parameters, cost codes and property sets follow firm-wide standards. That finally makes automated reporting reliable, rather than a brittle sequence of half-working scripts – something the industry has talked about for a decade.
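What schema enforcement means in practice can be shown with a small sketch. Everything here is an assumption made for illustration: the field names in `WALL_SCHEMA`, the sample records and the `validate` helper are hypothetical, and a real lake house would enforce the schema in the table format itself rather than in application code.

```python
# Hypothetical firm-wide standard: required field name -> expected Python type.
WALL_SCHEMA = {
    "element_id": str,
    "category": str,
    "fire_rating_minutes": int,
    "cost_code": str,
}


def validate(record, schema):
    """Return a list of problems; an empty list means the record conforms."""
    problems = []
    # Every field in the standard must be present with the expected type.
    for field, expected_type in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    # Fields outside the standard are flagged rather than silently accepted.
    for field in record:
        if field not in schema:
            problems.append(f"unexpected field: {field}")
    return problems


good = {"element_id": "W-001", "category": "Wall",
        "fire_rating_minutes": 60, "cost_code": "21.10"}
bad = {"element_id": "W-002", "category": "Wall",
       "fire_rating_minutes": "60 min", "FireRating": "1hr"}

report = {rec["element_id"]: validate(rec, WALL_SCHEMA) for rec in (good, bad)}
for element_id, problems in report.items():
    print(element_id, problems or "OK")
```

The second record shows the kind of drift that breaks reporting scripts: a rating stored as free text, a missing cost code and an ad hoc parameter name.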

Once a dataset becomes trustworthy, AI and machine learning models become dramatically more effective. Instead of scraping data from a handful of projects, a firm can train predictive systems on its entire project history. Models can forecast costs from early design parameters, identify risky design patterns from past change orders, predict schedule risks or optimise building layouts for energy performance. These capabilities are not theoretical. They are exactly the sort of AI workloads that other industries have been running for years, but which have been hamstrung in AEC due to poor data foundations.

The same architecture also enables genuinely federated collaboration. Instead of exchanging bloated files, firms can give project partners secure, query-level access to precisely the objects or datasets they require, all drawn from a live single source of truth. A clash engine could read from the same table as a cost tool, which could read from the same table as an AI model or an internal search engine. That interoperability is the essence of BIM 2.0: a move away from document exchange and toward continuous data exchange.
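As a rough illustration of several consumers reading one table, the sketch below uses Python's built-in `sqlite3` module as a stand-in for a lake house query engine. The `elements` table, its columns, the sample values and the `structure_partner` view are all hypothetical. A view only narrows what a query returns; actual access control would come from the platform hosting the data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE elements ("
    " element_id TEXT PRIMARY KEY, discipline TEXT, category TEXT,"
    " volume_m3 REAL, unit_cost REAL)"
)
conn.executemany(
    "INSERT INTO elements VALUES (?, ?, ?, ?, ?)",
    [
        ("W-001", "architecture", "Wall", 12.0, 100.0),
        ("B-001", "structure", "Beam", 2.0, 400.0),
        ("D-001", "mep", "Duct", 1.5, 50.0),
    ],
)

# A project partner sees only the rows and columns it needs.
conn.execute(
    "CREATE VIEW structure_partner AS"
    " SELECT element_id, category, volume_m3 FROM elements"
    " WHERE discipline = 'structure'"
)


def total_cost(db):
    """Cost tool: reads quantities and rates from the shared table."""
    return db.execute("SELECT SUM(volume_m3 * unit_cost) FROM elements").fetchone()[0]


def partner_elements(db):
    """Partner query: reads from the restricted view, not the full table."""
    return db.execute(
        "SELECT element_id, category, volume_m3 FROM structure_partner"
    ).fetchall()


print(total_cost(conn))
print(partner_elements(conn))
```

Both functions read the same live data, so neither depends on an exported file that may already be out of date.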

In short, the lake house doesn’t just solve a technical problem. It opens a strategic opportunity: for firms to build their own intellectual property, automation tools and data-driven insights on top of a foundation that is finally theirs.

As the industry accelerates toward automation-heavy workflows, the commercial incentives for large software vendors are beginning to shift in uncomfortable ways. Automation reduces the number of manual, named-user licences — the traditional revenue backbone of the design software business. And history suggests vendors rarely accept declining per-seat income without looking for compensatory levers elsewhere.

In today’s tokenised subscription world, that compensation mechanism may lead to higher token prices, steeper token consumption rates for automated processes, and an overall rebalancing designed to recover revenue lost to more efficient, machine-driven workflows. In effect, the more automation delivers value to practices, the more vendors will seek to recapture that value through metered usage.

This is precisely why the conversation around data lakes and lake house architectures matters so much. Owning your data is no longer a philosophical stance; it is a strategic defence. If automation becomes a toll road, then firms need control of the highway. By centralising and owning their data, and by running automation on their own playing field rather than someone else’s, practices can decouple innovation from vendor metering and protect themselves from being priced out of the very efficiencies automation is meant to provide.

The next challenge is practical: how does the industry transition from thousands of RVTs, DWGs, IFCs and other formats to an environment in which project data lives as granular, structured, queryable information?

For past projects, there is no shortcut. Firms must extract their model archives – geometry, metadata, relationships – and convert them into open formats that the lake house can govern. This is labour-intensive but unavoidable if firms want their historical data to fuel analytics and AI.

But the real transformation begins with live projects. At HOK, Schleusner has no interest in continuing to export files forever. He is designing a future in which BIM data streams from authoring tools as it is created and directly into the lake. Instead of waiting days or weeks for federated models or periodic exports, the goal is a steady flow of BIM objects, with each wall, room, door, system and annotation arriving in the lake house in real time.

This turns the lake house from an archive into a live, evolving representation of the project. Real-time clash detection stops being a dream and becomes standard practice. Rules-based validation can run continuously instead of catching issues once a week. Analytics and AI can operate on the dataset as it changes, not after the fact.
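Continuous validation of this kind amounts to checking each object as it arrives instead of in a weekly batch. The sketch below assumes a hypothetical stream of objects represented as dictionaries and two made-up rules; it does not describe how HOK or any vendor implements this.

```python
def min_door_width(obj):
    """Hypothetical rule: doors must be at least 800 mm wide."""
    if obj["category"] == "Door" and obj["width_mm"] < 800:
        return f"{obj['id']}: door narrower than 800 mm"
    return None


def room_has_name(obj):
    """Hypothetical rule: every room needs a non-empty name."""
    if obj["category"] == "Room" and not obj.get("name"):
        return f"{obj['id']}: room has no name"
    return None


RULES = [min_door_width, room_has_name]


def validate_stream(objects, rules=RULES):
    """Yield an issue as soon as an arriving object breaks a rule."""
    for obj in objects:
        for rule in rules:
            issue = rule(obj)
            if issue is not None:
                yield issue


# Stand-in for objects arriving from an authoring tool, one at a time.
incoming = iter([
    {"id": "D-01", "category": "Door", "width_mm": 900},
    {"id": "D-02", "category": "Door", "width_mm": 700},
    {"id": "R-01", "category": "Room", "name": ""},
])

issues = list(validate_stream(incoming))
print(issues)
```

Because `validate_stream` is a generator, it reports each issue as the offending object is consumed, without waiting for the whole model.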

But there are practical barriers to all this. The first stumbling block for the live streaming of BIM objects – inevitably – is Revit. When Schleusner approached Autodesk to ask whether Revit could stream objects as they are created, as opposed to files being saved, the answer was an unambiguous ‘No’. Revit’s underlying architecture was simply not built for this. The geometry engine and much of the historic core remain predominantly single-threaded, making real-time serialisation and broadcast of object-level changes impractical without significant performance penalties. In other words, the model cannot currently emit deltas as they occur.
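To make the term concrete, a delta is simply the set of elements added, removed or modified between two states of a model. The sketch below derives deltas by comparing two hypothetical snapshots keyed by element ID. It illustrates the concept only and says nothing about how Revit or any vendor tool represents changes internally.

```python
def diff_snapshots(before, after):
    """Compare two {element_id: properties} snapshots and list the changes."""
    deltas = []
    # Sort the IDs so the output order is stable.
    for element_id in sorted(before.keys() | after.keys()):
        old, new = before.get(element_id), after.get(element_id)
        if old is None:
            deltas.append(("added", element_id, new))
        elif new is None:
            deltas.append(("removed", element_id, old))
        elif old != new:
            # Keep only properties that are new or whose value changed;
            # properties deleted from an element are ignored in this sketch.
            changed = {k: v for k, v in new.items() if old.get(k) != v}
            deltas.append(("modified", element_id, changed))
    return deltas


before = {
    "W-001": {"category": "Wall", "height_mm": 3000},
    "D-001": {"category": "Door", "width_mm": 900},
}
after = {
    "W-001": {"category": "Wall", "height_mm": 3200},
    "R-001": {"category": "Room", "name": "Lobby"},
}

deltas = diff_snapshots(before, after)
for delta in deltas:
    print(delta)
```

A payload like this is a few records rather than a whole file, which is why object-level updates are so much lighter than re-exporting the model.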

Yet several vendors have found ways to work around these constraints. Rhino.Inside can interrogate Revit geometry dynamically. Motif claims to capture element-level deltas as they change. Speckle has developed its own incremental update mechanism capable of extracting small, structured updates, rather than monolithic payloads. Christopher Diggins, founder of Ara 3D and a contributor to the BIM Open Schema effort (www.tinyurl.com/d-revit), has also demonstrated experimental object streaming from Revit and recently released a free Parquet exporter for Revit 2025.

Parquet and Iceberg form the backbone of the AEC data lake house. Why?

Apache Parquet has emerged as the AEC industry’s open file format of choice, storing data by column rather than by row – a simple shift that transforms performance. Most analysis only needs data from a handful of columns, so engines can read exactly what they need and skip everything else. When working with AEC data – thousands of BIM parameters, millions of elements – this reduction in I/O is essential.

Apache Iceberg, originally developed at Netflix, brings database-like intelligence to raw Parquet files. It adds a metadata layer that tracks tables using snapshots, each one referring to a set of manifest files. A manifest is essentially an index: a lightweight catalogue listing exactly which Parquet files belong to the table, what they contain, and how the dataset is partitioned.

Instead of scanning thousands of files to understand the table, Iceberg simply reads the manifests. This gives the lake house the qualities AEC desperately needs: consistent views, transactional safety, version control and the ability to evolve schemas without rewriting terabytes of data.

A step in the right direction for Autodesk is its granular data access in Autodesk Construction Cloud (ACC), which generates separate feeds for geometry and metadata – but only after the file is uploaded and processed. This is useful for downstream analysis, but it is not true real-time streaming.

Meanwhile, Graphisoft is in the early stages of developing its own data lake infrastructure to support the many Nemetschek brands and their schemas. It is certainly a trend that is now pervasive among the core AEC software suppliers.

As Schleusner puts it: “We don’t want to do what we currently have to do, which is every design or analysis tool running its own export. That’s just dumb.”

What the industry needs, he argues, is single export with multi-use. Data should be extracted once into an open, authoritative environment from which every tool can read and act. By putting BIM data into a shared platform, every tool, internal or external, can consume that data dynamically, without half the industry re-serialising or rewriting half the model every time they need to run a calculation, test an option, or update a view.

His experiments have ranged from SAT and STL to IFC and mesh formats, but none have provided the fidelity and openness he needs. His preference today is for open BREP – rich, precise, and free from proprietary constraints.

This is where the next piece of the puzzle appears: the Innovation Design Consortium (IDC). This group is emerging as the most significant collective data initiative the AEC sector has seen. It is a public-benefit corporation, comprising many of the largest AEC firms, primarily drawn from the American Institute of Architects (AIA) Large Firm Roundtable. These firms are pooling resources to solve shared data problems that no individual firm could tackle alone.

Schleusner joined the IDC’s executive committee nearly three years ago and brought with him a clear, technically grounded vision: to create a vendor-neutral foundation for project data that enables the streaming, storage and governance of BIM objects in an open, queryable architecture.

He is candid about why the industry hasn’t done this before: “The reason this has not been done or thought of being done today is because there’s no open schema that can actually hold drawing information, solid model representation and mesh representation.”

In his research, the closest fit he has found so far is Bentley Systems’ iModel, a schema and database wrapper that can store BIM geometry, metadata, drawings and meshes while supporting incremental updates. Crucially, iModel is now open source. That gives the IDC something the industry has never had: an adaptable, extensible schema that can act as the wrapper for all the lovely data.

There are caveats, of course. Solid models in iModel use Siemens’ Parasolid kernel, which is still proprietary. Some translation challenges remain. But as a starting point for an industry-wide intermediary layer, it is far further along than anything Autodesk, Trimble or Graphisoft have offered, although Bentley will still need to do some re-engineering.

The IDC is no longer in its prototype phase. It is actively building real tools for its member firms: extraction utilities, schema definitions, lake house integrations, and proof-of-concept pipelines that show Revit, Archicad, Tekla Structures and other tools publishing into a shared, vendor-neutral space.

The goal is not another file format. It is a live representation of BIM objects that can feed clash engines, QA systems, cost tools, search engines and AI pipelines without rewriting half the model each time.

The IDC also plans to support AI directly. “We’re going to start hosting an open-source chat interface that can connect to IDC, provide data and individual firms’ data, keeping them firewalled,” says Schleusner.

Another technology layer, LanceDB, is also being evaluated. This is emerging as one of the most compelling formats for a modern AEC lake house, because it solves a fundamental problem into which the industry is running headlong: multimodal data at scale.

BIM models are only one part of a project’s digital footprint. The real world adds drawings, specifications, RFIs, emails, photos, drone footage, point clouds and, increasingly, AI-generated embeddings. Traditional columnar formats like Parquet handle tabular data well, but struggle when you need to store and version media, vectors and other non-tabular assets in the same unified system. Lance was designed for this exact world. It brings the performance of a high-speed analytics engine, supports zero-copy data evolution, and treats images, video and embeddings as first-class citizens. Netflix adopted it because its data is inherently multimodal – but so is that of AEC firms. A lake house built on Lance can finally treat all project information – geometry, documents and media – as one coherent, queryable dataset.

This is the first genuine attempt to build a shared AEC data infrastructure, one that is not driven by a vendor, but instead by firms who actually produce the work.

Another uncomfortable truth is that even if the industry succeeds in building a lake house for BIM data, model geometry and parameters alone are not enough to power meaningful, holistic AI analysis of project data. A model on its own misses the context that lives in issues, approvals, RFIs, change orders, design intent and all those emails.

Worse, the data that does exist is scattered across owners, architects, engineers, specialists, CDEs and contractors. No single party holds the entire picture.

As Virginia Senf at Speckle explains: “It may be the large general contractors and top-tier multidisciplinary consultants who are best positioned to assemble project datasets, because owners are now demanding outcomes, not drawings.

“AECOM’s recent shift toward consultancy reflects this. But even if you gather everything, historical models are often inconsistent or simply wrong, a lot of legacy BIM data is unsuitable for analytics or AI at all.”

The shift to a data lake house isn’t an IT upgrade. It is a re-platforming of the AEC business model. Firms have spent decades selling hours, yet the real value they have generated – the patterns, insights, decisions and accumulated knowledge encoded in their project data – remains locked inside proprietary files and vendor ecosystems.

A lake house finally gives firms a way to monetise what they actually know. Data stops being a dormant archive and becomes a living asset that can predict outcomes, guide design intelligence, improve bids and reduce risk.