International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 09 | Sep 2022 www.irjet.net p-ISSN: 2395-0072


Abstract - Face masks are widely credited with helping to prevent the spread of COVID-19. Many scientists argue that a mask prevents virus-carrying droplets from reaching other hosts (people) during coughing and sneezing, which helps break the chain of transmission. However, many people do not like to cover their face with a proper face mask, and some do not know how to wear one properly. Checking compliance manually for a large group of people, especially in a crowded place such as a train station, theater, classroom or airport, can be time-consuming and expensive. Human checkers can also be biased and gullible. Therefore, an automated, accurate and reliable system is required for the task. Training such a system adequately requires a large amount of image data. The system should recognize whether a person is not wearing a face mask at all, wearing it improperly, or wearing it properly. In this paper, we use MobileNetv2, a convolutional neural network (CNN) based architecture, to build such a face mask detection/recognition model. The developed model can classify people as wearing a mask, wearing a mask improperly, or not wearing a mask with an accuracy of 97.25 percent.

Key Words: COVID-19; Face-mask; Face recognition; CNN; MobileNetv2

The detrimental effects of COVID-19 have been prevailing for around three years now. It has affected people all around the world and there is no sector left which has not been touched by the impact of this pandemic. The negative impacts are easily seen in the health sector, economic sector, tourism sector, et cetera. This global impact could be reduced significantly if most people were more aware of wearing face masks properly. A surveillance system that can detect the mask-wearing population can be a huge aid in this scenario. Hence, a mask detection system is of huge significance as people can be made cautious about efficiently wearing masks in public areas. The massive impact of the pandemic can be minimized if the system is implemented carefully and effectively.

Despite many guidelines and warnings by WHO and government, many people still do not wear masks and it has contributed a lot to the spread of the virus. Wearing a mask is a must and it should be monitored carefully for preventing the spread of COVID-19 and many other airborne diseases. So, a mask detection system is currently essential.

And it is about time that we care more and be responsible enough so that any new wave of novel coronavirus does not wreak havoc. Vaccination programs are being conducted worldwide and many people have been vaccinated. But the vaccines do not guarantee “No COVID”. So, we need to keep following health guidelines for the prevention of the spread of COVID-19. The work proposed in our paper has real-world application in several crowded places like bus/railway stations, hospitals, offices, educational institutions and so on.

In paper [1], the authors propose a PCA (Principal Component Analysis) facial recognition system. Principal Component Analysis (PCA) is a statistical method under the heading of factor analysis. The goal of PCA is to reduce the large dimensionality of the data to the smaller dimensionality of the feature space required to represent the data economically. For PCA facial recognition, the wide one-dimensional pixel vector constructed from a two-dimensional facial image is projected onto the compact principal components of the feature space. This is called eigenspace projection. The eigenspace is determined by identifying the eigenvectors of the covariance matrix, which is derived from the collection of fingerprint images.
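The projection idea can be illustrated with a minimal pure-Python sketch. It is not the implementation from [1]: the function names are hypothetical, only the single dominant principal direction is found (by power iteration on the covariance matrix), and each sample stands in for a flattened image vector. It also assumes the samples are not all identical, otherwise the normalization would divide by zero.

```python
import math

def first_principal_component(samples, iterations=200):
    """Return (mean, unit direction) of the dominant principal component."""
    n = len(samples)
    dim = len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(dim)]
    centered = [[s[j] - mean[j] for j in range(dim)] for s in samples]
    # Sample covariance matrix of the centered data (dim x dim).
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(dim)]
           for i in range(dim)]
    # Power iteration converges to the eigenvector with the largest eigenvalue.
    v = [1.0] * dim
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return mean, v

def project(sample, mean, direction):
    """Coordinate of one sample along the principal direction."""
    return sum((sample[j] - mean[j]) * direction[j] for j in range(len(mean)))

# Toy data lying on the line y = 2x; real inputs would be flattened images.
toy_samples = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
toy_mean, toy_direction = first_principal_component(toy_samples)
```

In a full eigenface-style system several leading eigenvectors would be kept, and recognition would compare the projected coordinates of a new face with those of known faces.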

In paper [2], the authors describe a new mobile architecture, MobileNetV2, that improves the state-of-the-art performance of mobile models on multiple tasks and benchmarks and across different model sizes. They also describe effective methods for applying these mobile models to object detection in a new framework called SSDLite. The article introduces a new neural network architecture specifically designed for mobile and resource-constrained environments. The network advances the state of the art in mobile-tailored computer vision models by significantly reducing the number of operations and memory required while maintaining the same accuracy.

In paper [3], the methods of usage of AI in combating the problems associated with Covid-19 and likewise epidemics have been discussed. The authors of this paper have described various ways in which we can understand the clinical problems better using AI. They have presented their case on the basis of the fact that there has been a surge in clinically available data together with the increase in the hype about AI. The combination of these two could help the doctors to prescribe medicines better and help us to understand the causative and preventive methods for Covid-19. They have also conducted a survey to demonstrate the usefulness of AI in the medical world with 90% accuracy.

In paper [4], it has been highlighted that the current medical facilities are not adequate to deal with a pandemic-like situation. According to the authors, the solution to this problem could be found in the form of blockchain and artificial intelligence. The authors have discussed how the use of blockchain can be helpful in predicting the early outbreak of the pandemic and recognizing the high-risk zones. Similarly, they have also discussed that the use of artificial intelligence can be taken as an intelligent measure to know the symptoms of the disease. They have gone on to introduce a state-of-the-art system that combines blockchain and AI, and this combined method could be an interesting example of how to deal with the pandemic effectively.

In paper [5], the authors have stated that Analog Devices Inc.’s CrossCore Embedded Studio and HOG SVM were used for detecting a person and the distance from the camera. Detection of the face and of face parts like eyes, nose and mouth is implemented by the Viola-Jones algorithm. The Viola-Jones face detection procedure classifies images based on the value of simple features. There are three kinds of features, namely two-rectangle, three-rectangle and four-rectangle features. The value of a two-rectangle feature is computed by calculating the difference between the sums of the pixels within two rectangular regions. This proposed work may not have been able to detect the person when they are wearing a mask, so to improve this, the accuracy of eye detection can be increased to help recognize the person through their eyes and eye line.
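As an illustration of the two-rectangle feature described above, the following is a minimal sketch using an integral image (summed-area table), which is how Viola-Jones makes rectangle sums cheap. The function names and the left-minus-right sign convention are assumptions made for this example, not details taken from [5].

```python
def integral_image(img):
    """Summed-area table with an extra leading zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def rect_sum(ii, x, y, w, h):
    """Sum of pixels in the rectangle with top-left (x, y), width w, height h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]

def two_rectangle_feature(ii, x, y, w, h):
    """Left rectangle sum minus right rectangle sum of a (2*w) x h window."""
    return rect_sum(ii, x, y, w, h) - rect_sum(ii, x + w, y, w, h)

# Toy 2x4 grayscale patch: dark left half, bright right half.
patch = [[1, 1, 5, 5],
         [1, 1, 5, 5]]
feature_value = two_rectangle_feature(integral_image(patch), 0, 0, 2, 2)
```

Each rectangle sum needs only four table lookups regardless of the rectangle size, which is what makes evaluating many such features per window practical.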

In paper [6], the authors configure YOLOv3 with an object-names file that contains the names of the classes which the model needs to detect. An input image is passed through the YOLOv3 model, and the object detector finds the coordinates of the objects present in the image. To produce the model output, the neighboring cells whose features have a high confidence rate are added to the output. 80% of the data was used for training and the rest for validation. The Fast RCNN object detection architecture can be used with YOLOv3 or the newer YOLOv4 to increase the performance of the face detection system in real-time video surveillance.

In paper [7], the authors have stated that transfer learning has been used, taking the pre-trained MobileNet model so that an existing solution can be applied to a new problem. A global pooling block transforms a multi-dimensional feature map into a 1D vector having 64 characteristics. Finally, a SoftMax layer with 2 neurons takes the 1D vector and performs binary classification.
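The pooling-then-SoftMax head can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the pooling is taken to be global average pooling (the summary above does not say which kind [7] uses), the weights are made-up placeholders, and a toy input with 2 channels is used instead of 64.

```python
import math

def global_average_pool(feature_maps):
    """Reduce each 2-D feature map (one per channel) to its mean value."""
    return [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
            for fmap in feature_maps]

def dense(vector, weights, biases):
    """Fully connected layer: one weight row and one bias per output neuron."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, biases)]

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Two toy 2x2 feature maps stand in for the 64 channels of the real block.
toy_maps = [[[1.0, 3.0], [5.0, 7.0]],
            [[0.0, 0.0], [0.0, 0.0]]]
pooled = global_average_pool(toy_maps)
# Placeholder identity weights; a trained model would learn these values.
probabilities = softmax(dense(pooled, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]))
```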

In paper [8], the authors’ proposed method consists of a cascade classifier and a pre-trained CNN. For each image in the dataset: 1) Visualize the image in two categories: mask and no mask. 2) Convert the RGB image to a grey-scale image and resize it to 100 x 100. 3) Normalize the image and convert it into a 4D array. To build the CNN model, a convolution layer of 200 filters has been added, followed by a second layer of 200 filters. A flatten layer has been added to the network classifier. In the end, a final dense layer with 2 outputs for the 2 categories has been inserted and the model is trained.

In paper [9], YoloV4 has been implemented using two-stage detectors. The first-stage detector consists of: Input - resolution of 1920*1080. Backbone - Darknet53, chosen as the detector method, contains 29 convolutional layers of 3*3, and each layer is sent to the neck detector. Neck - PANet applied as the neck detector method. Dense prediction - the YOLO v3 model is used in this stage to generate the prediction. Second-stage detector: it has a sparse prediction which applies Faster R-CNN. The input to this stage is the 3*3 layers obtained from the neck and the input prediction from the dense prediction.

In paper [10], the steps are: collect data (masked and unmasked data), pre-processing (resizing, converting to array, pre-processing using MobileNetV2, etc.), split data (75:25), build model, testing, and implementation. MobileNetV2, a convolutional neural network architecture, is used. The model has 96.85% accuracy. The data collected from different cities can be used for statistical analysis of people wearing masks, and appropriate action could be taken for preventing the spread of COVID-19.

Previously, researchers generally used heavy and complicated models such as VGG16, which need many backend network parameters and require a high hardware configuration. Hence, they are difficult to run on mobile devices with limited computational power. Also, even though they are heavy and complicated, they provide performance similar to that of other light-weight neural network models. In other papers, where YOLOv4 was used, the accuracy obtained was a mere 94.75%, which is less than that of our proposed augmented MobileNetv2 model. Most of the pre-existing face-mask detection models were based on a single subject and there seemed to be a problem in detecting faces in crowded scenarios. Also, in the majority of the papers, low-resolution and highly compressed images were not commonly used during the training of the models. Hence, their models did not perform well on such highly compressed images.


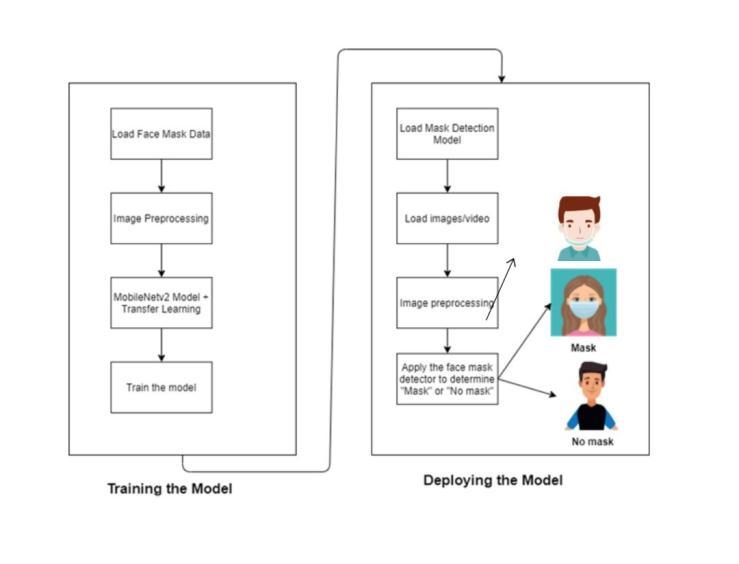

The architecture of this approach can be explained as below.

The above architecture explains the deep learning model that has been created, followed by an image processing pipeline. The steps followed in the architecture can be described as follows:

The development of our face mask recognition model starts with the collection of a Kaggle dataset. The referred dataset contains three different classes (with mask, without a mask and wearing mask incorrectly). Then the trained model can categorize input and detected images into wearing masks correctly, wearing masks incorrectly and not wearing masks.

The dataset is highly imbalanced and uncleaned. For proper fitting, images are augmented in such a way that each class has an equal distribution of images, and noisy images which could be considered outliers are removed.
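One simple way to reason about the balancing step is to compute how many augmented images each class needs in order to match the largest class. The sketch below assumes that target (the paper does not state it), and the class counts are hypothetical placeholders, not the real dataset statistics.

```python
def augmentation_plan(class_counts):
    """Number of augmented images to add per class to match the largest class."""
    target = max(class_counts.values())
    return {label: target - count for label, count in class_counts.items()}

# Hypothetical counts for illustration only.
example_counts = {"with_mask": 3000, "without_mask": 2800, "mask_worn_incorrectly": 500}
plan = augmentation_plan(example_counts)
```

With these placeholder counts, the under-represented "worn incorrectly" class would need the most augmented images, which matches the motivation given above for augmenting before training.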

Sample labelled images (mask worn incorrectly, no mask, mask) taken from the dataset for training and testing are shown below:

The first step here begins with iterating through all the images and storing the label of each image (without mask, with mask and wearing mask incorrectly). The image sizes are non-uniform. We need a standard size so that we can feed the images into our neural network. So, each image is resized to 224 x 224 x 3, which is our standard image size. Then the image is converted to a NumPy array. CV2 is better with BGR channel images, and we are using it in a later phase, so we convert our image into BGR channel order. After the image is preprocessed, it is ready to be fed into our model.

The data was split into two collections: training data (80 percent) and testing data (the remaining 20 percent). Each collection consists of with-mask, without-mask and wearing-mask-incorrectly images.
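A minimal sketch of such an 80/20 split is shown below. It assumes a stratified split, so that every class appears in both collections in the same proportion; the paper does not say how the split was performed, and the function name, seed and labels are illustrative only.

```python
import random
from collections import defaultdict

def stratified_split(labels, test_fraction=0.2, seed=42):
    """Return (train_indices, test_indices), splitting each class separately."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return train, test

# Toy labels: 10 images per class.
toy_labels = ["mask"] * 10 + ["no_mask"] * 10 + ["incorrect"] * 10
train_idx, test_idx = stratified_split(toy_labels)
```

Splitting per class keeps the test set balanced, which is consistent with the equal per-class support reported in the evaluation table.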

Steps:

a. Read the dataset and store the image paths.

b. Iterate through all the images and

i. Store the labels of each image (without mask/with mask).

ii. Convert the image to an array and preprocess the image.

iii. Append the image to data.

c. Convert data and labels to a NumPy array.

d. Transform multiclass labels to binary labels using LabelBinarizer.

e. Split the training and testing data (20% of the data is used in testing and 80% of the data is used in training).

f. Create an instance of the MobileNetV2 model and remove the last layer.

g. Add AveragePooling2D, Flatten, Dense (with ReLU activation), Dropout and Dense (with SoftMax activation) layers.

h. Set the trainable property of the MobileNetV2 base model to false.

i. Declare learning rate (0.001), epochs (20) and batch size (12).

j. Use Adam as optimizer and binary cross-entropy as loss.

k. Fit the model giving the following parameters:

i. Use an image augmentation flow to artificially expand the size of the training dataset (use rotation range, zoom range, width_shift_range, etc. as parameters).

ii. Steps per epoch: calculated as the length of the training data divided by the batch size.

iii. Validation data: test data.

iv. Validation steps: calculated as the length of the testing data divided by the batch size.

v. Epochs

l. Save the model.

m. Test the model with the test split data and create classification reports and plots as needed.
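Steps d, i and k above involve a little bookkeeping that can be sketched without any deep learning library. The one-hot helper below is a stand-in for what a label binarizer produces for three classes, not the library call itself. The image counts are assumptions: the test size is taken from the total support in Table II (1797), and the training size is derived by assuming an exact 80/20 split.

```python
def one_hot(labels):
    """Map string labels to one-hot rows; classes are ordered alphabetically."""
    classes = sorted(set(labels))
    index = {name: i for i, name in enumerate(classes)}
    encoded = [[1 if index[label] == i else 0 for i in range(len(classes))]
               for label in labels]
    return classes, encoded

def steps(num_samples, batch_size):
    """Whole batches per pass over the data (any remainder is dropped)."""
    return num_samples // batch_size

BATCH_SIZE = 12                   # batch size from step i
TEST_IMAGES = 1797                # assumption: total support in Table II
TRAIN_IMAGES = 4 * TEST_IMAGES    # assumption: exact 80/20 split

steps_per_epoch = steps(TRAIN_IMAGES, BATCH_SIZE)
validation_steps = steps(TEST_IMAGES, BATCH_SIZE)
classes, encoded = one_hot(["with_mask", "without_mask", "mask_worn_incorrectly"])
```

Under these assumptions the training loop would run 599 steps per epoch and 149 validation steps.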

a. Read the photo path and weights path for the OpenCV DNN and create a model using the same parameters.

b. Load the saved trained model.

c. Read the image to be tested.

d. Use blobFromImage to preprocess the image and convert it to the format that was used by the DNN while training the model.

e. Detect the faces with the model and save the detections.

f. Iterate over the detections and do the following steps:

i. Find the confidence (confidence of detection of faces).

ii. Store the start and end coordinates (both x and y coordinates).

iii. Convert the image to RGB channel order, then resize it, convert it to an array, and preprocess it.

iv. Predict the probability of with and without mask using the model loaded initially (our trained model).

v. Draw a rectangle surrounding the face and put the text “Mask” in green if the mask probability is greater than the without-mask probability, or the text “Without mask” in red if the without-mask probability is greater than the mask probability.

g. For mask detection on a video stream, start the video stream and repeat steps e and f for each frame.
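Steps f.i and f.ii above can be sketched as a small filtering function. This is an illustration under assumptions: the detector is taken to return a confidence plus box corners normalized to the range 0 to 1, the 0.5 confidence threshold is a placeholder, and the function name is hypothetical.

```python
def faces_from_detections(detections, frame_width, frame_height, min_confidence=0.5):
    """Keep confident detections and convert them to pixel boxes inside the frame.

    detections: iterable of (confidence, x1, y1, x2, y2), corners normalized to 0..1.
    """
    boxes = []
    for confidence, x1, y1, x2, y2 in detections:
        if confidence < min_confidence:
            continue  # weak detection, probably not a face
        # Scale to pixels and clamp so the crop never leaves the frame.
        start_x = max(0, int(x1 * frame_width))
        start_y = max(0, int(y1 * frame_height))
        end_x = min(frame_width - 1, int(x2 * frame_width))
        end_y = min(frame_height - 1, int(y2 * frame_height))
        if end_x > start_x and end_y > start_y:
            boxes.append((start_x, start_y, end_x, end_y))
    return boxes

# Toy detections: one good face, one weak detection, one box spilling off the frame.
toy_detections = [
    (0.9, 0.25, 0.25, 0.75, 0.75),
    (0.2, 0.0, 0.0, 1.0, 1.0),
    (0.8, -0.1, 0.5, 1.2, 1.0),
]
face_boxes = faces_from_detections(toy_detections, 400, 200)
```

Each surviving box would then be cropped from the frame and passed through the preprocessing and prediction steps f.iii and f.iv.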

To implement our model in the real world, live video is captured, frame by frame. This video feed is then fed to our algorithm (model). It can proceed only if a face has been detected. After detecting a face, the required pre-processing tasks are done. Then, the model converts the pre-processed image into array form and does the required further processing tasks using MobileNetv2.

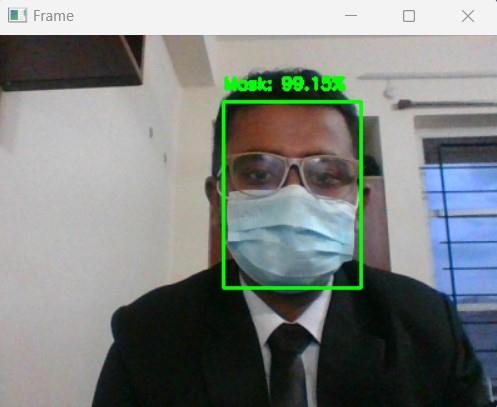

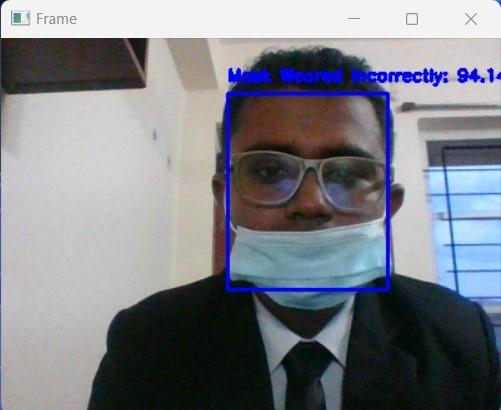

Then, for the given frame, it classifies whether the subject is not wearing a mask, wearing it incorrectly, or wearing it properly. The classification result is shown by putting a colored rectangle around the subject’s face with the probability of that classification in the same color. It uses the following coloring scheme:

a. No mask: Red

b. Mask worn incorrectly: Blue

c. Mask: Green
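The coloring scheme can be expressed as a small lookup, sketched below. The label strings, the function name and the text format are assumptions for illustration; the colors are written in BGR order, which is the channel order OpenCV drawing functions expect.

```python
# Colors in BGR order, following the coloring scheme listed above.
COLORS_BGR = {
    "No mask": (0, 0, 255),                 # red
    "Mask worn incorrectly": (255, 0, 0),   # blue
    "Mask": (0, 255, 0),                    # green
}

def annotate(probabilities):
    """Pick the most probable class and return (display text, BGR color)."""
    label = max(probabilities, key=probabilities.get)
    text = f"{label}: {probabilities[label] * 100:.2f}%"
    return text, COLORS_BGR[label]

# Hypothetical model output for one detected face.
text, color = annotate({"No mask": 0.05, "Mask worn incorrectly": 0.15, "Mask": 0.80})
```

The returned text and color would be passed to the drawing calls that render the rectangle and the label on the frame.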

This process is repeated for each video frame. Sample images classified by the model are shown below:


Table -I: Iterationofcheckingthelossandaccuracy

Epoch Loss Accuracy Val_loss Val_acc

1/20 0.3497 0.8643 0.184 0.9238

2/20 0.2508 0.9056 0.1423 0.9471

3/20 0.2321 0.9120 0.1772 0.9310

4/20 0.2112 0.9247 0.1306 0.9477

5/20 0.1889 0.9283 0.1977 0.9176

6/20 0.1755 0.9324 0.1611 0.9327

7/20 0.1739 0.9336 0.1452 0.9416

8/20 0.1676 0.9385 0.2055 0.9160

9/20 0.1601 0.9397 0.1417 0.9477

10/20 0.1565 0.9446 0.1323 0.9471

11/20 0.1504 0.9454 0.1409 0.9516

12/20 0.1540 0.9438 0.1272 0.9505

13/20 0.1430 0.9445 0.1321 0.9499

14/20 0.1509 0.9478 0.1132 0.9605

15/20 0.1425 0.9468 0.1658 0.9310

16/20 0.1354 0.9489 0.1778 0.9277

17/20 0.1312 0.9546 0.1414 0.9455

18/20 0.1226 0.9576 0.0969 0.9622

19/20 0.1255 0.9548 0.1044 0.9549

Duetoheavycomputationalcostscausedbyclassicaldeep learning and machine learning models, we are pushed to implementtheoptimizationtechniqueforthegivenproblem withrespecttotheavailablehardwareandreliability.

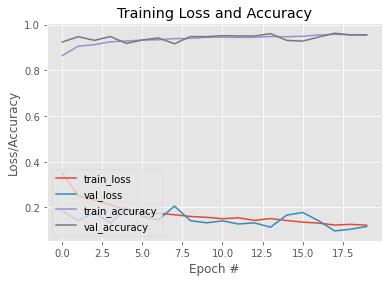

The result for 20 iterations in checking the loss, accuracy, valueloss,valueaccuracywhentrainingthemodelisshown inTableI.

20/20 0.1221 0.9538 0.1162 0.9555

From Table I, we can interpret that the accuracy is increasing at the start of the second epoch, and loss is seen decreasing after it. At the 20th epoch the loss reduced to 0.1221. Similarly, accuracy reached 0.95. The process of training the deep neural network was much faster than expected. The table is plotted in the graph shown in Figure 2 for better understanding.
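The validation column of Table I can also be inspected programmatically, for example to see which epoch generalized best. The sketch below copies the Val_acc values from Table I; the helper name is hypothetical, and choosing a checkpoint this way is a suggestion for illustration, not something the training procedure above is stated to do.

```python
# Val_acc column of Table I, epochs 1 through 20.
VAL_ACC = [0.9238, 0.9471, 0.9310, 0.9477, 0.9176, 0.9327, 0.9416, 0.9160,
           0.9477, 0.9471, 0.9516, 0.9505, 0.9499, 0.9605, 0.9310, 0.9277,
           0.9455, 0.9622, 0.9549, 0.9555]

def best_epoch(values):
    """Return (1-based epoch, value) of the highest entry; first one wins ties."""
    best_index = max(range(len(values)), key=lambda i: values[i])
    return best_index + 1, values[best_index]

epoch, accuracy = best_epoch(VAL_ACC)
```

Here the highest validation accuracy occurs at epoch 18 rather than at the final epoch, which shows that the validation curve fluctuates even while the training loss keeps decreasing.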

Fig. 8. Graph of Training Loss and Accuracy vs Epoch

Table II. Model Evaluation

| Class | Precision | Recall | F1 score | Support |
|---|---|---|---|---|
| With mask | 0.92 | 0.98 | 0.95 | 599 |
| Without mask | 0.97 | 0.96 | 0.97 | 599 |
| Mask worn incorrectly | 0.98 | 0.92 | 0.95 | 599 |
| Accuracy | | | 0.96 | 1797 |
| Macro average | 0.96 | 0.96 | 0.96 | 1797 |
| Weighted average | 0.96 | 0.96 | 0.96 | 1797 |

Our proposed model was able to classify mask-wearing images with faces. The accuracy provided by the model in such a scenario was nearly perfect, as the model accurately detected all the faces that had masks on them, along with the accuracy level, and it also accurately detected the faces that weren't wearing masks. Though the model doesn’t give as clear an indication for people with an incorrectly worn mask, the results at the current level are satisfactory.

We faced some issues like varying angles and lack of clarity of the frame. Constantly moving faces in the video stream input produce several still frames, and this makes it more difficult to detect masks with high accuracy. However, this improves over time, and following the trajectories of several frames of the video helps to reach a better decision.

In certain edge scenarios where people are wearing the mask incorrectly, the model may get confused and give out a classification probability of 40-60%. This is an erratic situation for the model, and thus the model cannot accurately distinguish between "mask worn incorrectly" and "no mask". We aim to address these shortcomings in future editions of the work.

Our paper presents a model using deep learning for face mask detection for the prevention of COVID-19. Our system classifies whether a person is wearing a face mask properly, wearing it incorrectly or not wearing one at all. The model we built has higher accuracy because of heavy training with almost 9,000 labeled facial images. The final product shows a colored box with the accuracy percentage in the upper part, which includes the head of the person. This research work can be crucial for curbing the spread of COVID-19 in society. Also, our model can be integrated into several applications for successful scalability and real-world impact.

[1] Khan, M., Chakraborty, S., Astya, R. and Khepra, S., 2019, October. Face Detection and Recognition Using OpenCV. In 2019 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS) (pp. 116-119). IEEE.

[2] Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. and Chen, L.C., 2018. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4510-4520).

[3] A. A. Hussain, O. Bouachir, F. Al-Turjman and M. Aloqaily, "Notice of Retraction: AI Techniques for COVID-19," in IEEE Access, vol. 8, pp. 128776-128795, 2020, doi: 10.1109/ACCESS.2020.3007939.

[4] Nguyen, D.C., Ding, M., Pathirana, P.N. and Seneviratne, A., 2021. Blockchain and AI-based solutions to combat coronavirus (COVID-19)-like epidemics: A survey. IEEE Access, 9, pp. 95730-95753.

[5] Deore, G., Bodhula, R., Udpikar, V. and More, V., 2016, June. Study of masked face detection approach in video analytics. In 2016 Conference on Advances in Signal Processing (CASP) (pp. 196-200). IEEE.

[6] Bhuiyan, M.R., Khushbu, S.A. and Islam, M.S., 2020, July. A deep learning based assistive system to classify COVID-19 face mask for human safety with YOLOv3. In 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT) (pp. 1-5). IEEE.

[7] Venkateswarlu, I.B., Kakarla, J. and Prakash, S., 2020, December. Face mask detection using mobilenet and global pooling block. In 2020 IEEE 4th Conference on Information & Communication Technology (CICT) (pp. 1-5). IEEE.

[8] Das, A., Ansari, M.W. and Basak, R., 2020, December. Covid-19 Face Mask Detection Using TensorFlow, Keras and OpenCV. In 2020 IEEE 17th India Council International Conference (INDICON) (pp. 1-5). IEEE.

[9] Susanto, S., Putra, F.A., Analia, R. and Suciningtyas, I.K.L.N., 2020, October. The Face Mask Detection For Preventing the Spread of COVID-19 at Politeknik Negeri Batam. In 2020 3rd International Conference on Applied Engineering (ICAE) (pp. 1-5). IEEE.

[10] Sanjaya, S.A. and Rakhmawan, S.A., 2020, October. Face Mask Detection Using MobileNetV2 in The Era of COVID-19 Pandemic. In 2020 International Conference on Data Analytics for Business and Industry: Way Towards a Sustainable Economy (ICDABI) (pp. 1-5). IEEE.

Mr. Rajaram Yadav

UG Student, VIT, Vellore, Tamil Nadu

Mr. Safal Gautam

UG Student, VIT, Vellore, Tamil Nadu

Mr. Rahul Ratna Das

UG Student, VIT, Vellore, Tamil Nadu

Ms. Prarthana Shiwakoti

UG Student, VIT, Vellore, Tamil Nadu

2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal |